A step-by-step guide to training Mask R-CNN on your own dataset on Win10 (starting from labelme annotation)

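If you are not familiar with setting up the Mask R-CNN environment, see my earlier blog post, which explains in detail how to reproduce and run the demo:

https://blog.csdn.net/hesongzefairy/article/details/104702119 (A pitfall-avoidance guide to quickly reproducing Mask_RCNN on Win10)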

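But getting a demo to run is not enough; the real goal is to train and test a model on your own dataset. Most training recipes in blog posts online are much the same, and all of them are adapted from the official balloon sample. They are fairly old by now, though, and some details have changed. So here I will walk through, step by step and starting from data annotation, how to train Mask R-CNN on your own dataset.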

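Before starting, look at the samples folder inside the Mask R-CNN folder. It contains a balloon folder, which is the original training template. To get training running with the fewest changes, create a new folder named cat next to the balloon folder. For the folders created later, try to use the same names I do, so that the paths in the code need almost no changes.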

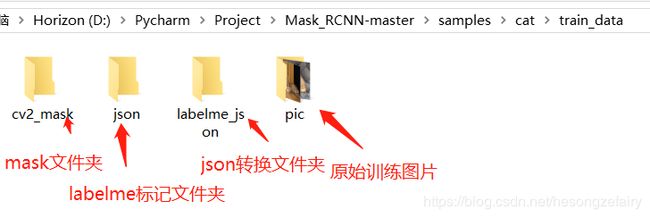

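Before annotating with labelme there is one more preparation step. Inside the cat folder, create a folder named train_data, and inside train_data create four more folders: pic, json, labelme_json and cv2_mask (these are the names the scripts below use). I also put a few training images into the pic folder.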
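The folder layout can also be created with a few lines of Python. This is only a minimal sketch: the four subfolder names are the ones the scripts later in this post read from, while the helper name `make_train_dirs` is made up for illustration.

```python
import os

# Subfolders that the later scripts in this post read from or write to.
SUBFOLDERS = ("pic", "json", "labelme_json", "cv2_mask")


def make_train_dirs(root="train_data"):
    """Create root and its four subfolders; return the subfolder paths."""
    paths = [os.path.join(root, name) for name in SUBFOLDERS]
    for path in paths:
        # exist_ok=True makes the call safe to repeat.
        os.makedirs(path, exist_ok=True)
    return paths
```

Calling `make_train_dirs()` from inside samples\cat would give the layout described above; the training images still have to be copied into pic by hand.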

With the preparation done, we can start annotating. The tool used here is labelme, not labelimg (the one used for YOLO annotation).

1. Annotating data with labelme

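First, install labelme:

conda activate maskrcnn
pip install pyqt5
# Do not install a newer version; the reason is explained later
pip install labelme==3.16.2

The download often fails with an HTTP error at this point. Don't panic; just rerun the pip command, and after a few tries it will go through. If one particular dependency keeps failing to download, install that package on its own with pip install and then install labelme again.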

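Once the installation succeeds, start labelme from the command line (the command is simply labelme).

After it starts, open the folder of training images, click Create Polygons and draw the mask by placing points along the object's outline. When the polygon is finished, click Save and store the JSON file in the json folder created earlier.

When all training images have been annotated, the json folder should contain one JSON file for each image in pic. Next, the JSON files need to be processed.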
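Before moving on, it can be worth confirming that no image was skipped. The sketch below assumes labelme saved each annotation under the same base name as its image (cat1.jpg and cat1.json), which is its default suggestion; the function name `missing_annotations` is hypothetical.

```python
import os


def missing_annotations(pic_dir, json_dir):
    """Return image file names in pic_dir that have no <stem>.json in json_dir."""
    json_stems = {
        os.path.splitext(name)[0]
        for name in os.listdir(json_dir)
        if name.lower().endswith(".json")
    }
    return sorted(
        name for name in os.listdir(pic_dir)
        if os.path.splitext(name)[0] not in json_stems
    )
```

For the layout used in this post, `missing_annotations("train_data/pic", "train_data/json")` should return an empty list once every image has been annotated.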

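This is where the first problem shows up. The conversion method provided by labelme's author, labelme_json_to_dataset <file name>.json, handles only one JSON file at a time, which is very inconvenient for anything larger than a tiny dataset. Resourceful users therefore came up with a batch method, but it only works with older versions of labelme. That is why the installation step insisted on a specific version: with a newer version you get an error saying the draw module of utils cannot be found.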
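If you would rather not modify files inside site-packages, one possible alternative is to call the stock command once per file from a small driver script. This is a sketch under assumptions: it relies on the `-o/--out` option of `labelme_json_to_dataset` (the same option that appears in the argument parser of the replacement script in this post), and the helper names `build_commands` and `convert_all` are made up.

```python
import os
import subprocess


def build_commands(json_dir, out_root):
    """Return one labelme_json_to_dataset command per .json file in json_dir."""
    commands = []
    for name in sorted(os.listdir(json_dir)):
        stem, ext = os.path.splitext(name)
        if ext.lower() != ".json":
            continue
        # Mirror the "<name>_json" folder naming used elsewhere in this post.
        out_dir = os.path.join(out_root, stem + "_json")
        commands.append(
            ["labelme_json_to_dataset", os.path.join(json_dir, name), "-o", out_dir]
        )
    return commands


def convert_all(json_dir, out_root):
    """Run the stock converter once per file; out_root must already exist."""
    for cmd in build_commands(json_dir, out_root):
        subprocess.run(cmd, check=True)
```

With the folders from this post, `convert_all("train_data/json", "train_data/labelme_json")` would be run from the cat folder with the conda environment activated, so that the labelme command is on PATH.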

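To use the batch conversion method, first locate labelme inside your Anaconda installation and find the file json_to_dataset.py.

My path is D:\Anaconda\envs\mask\Lib\site-packages\labelme\cli\json_to_dataset.py

Replace the contents of that file with the following code: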

import argparse

import json

import os

import os.path as osp

import warnings

import PIL.Image

import yaml

from labelme import utils

import base64

def main():

warnings.warn("This script is aimed to demonstrate how to convert the\n"

"JSON file to a single image dataset, and not to handle\n"

"multiple JSON files to generate a real-use dataset.")

parser = argparse.ArgumentParser()

parser.add_argument('json_file')

parser.add_argument('-o', '--out', default=None)

args = parser.parse_args()

json_file = args.json_file

if args.out is None:

out_dir = osp.basename(json_file).replace('.', '_')

out_dir = osp.join(osp.dirname(json_file), out_dir)

else:

out_dir = args.out

if not osp.exists(out_dir):

os.mkdir(out_dir)

count = os.listdir(json_file)

for i in range(0, len(count)):

path = os.path.join(json_file, count[i])

if os.path.isfile(path):

data = json.load(open(path))

if data['imageData']:

imageData = data['imageData']

else:

imagePath = os.path.join(os.path.dirname(path), data['imagePath'])

with open(imagePath, 'rb') as f:

imageData = f.read()

imageData = base64.b64encode(imageData).decode('utf-8')

img = utils.img_b64_to_arr(imageData)

label_name_to_value = {'_background_': 0}

for shape in data['shapes']:

label_name = shape['label']

if label_name in label_name_to_value:

label_value = label_name_to_value[label_name]

else:

label_value = len(label_name_to_value)

label_name_to_value[label_name] = label_value

# label_values must be dense

label_values, label_names = [], []

for ln, lv in sorted(label_name_to_value.items(), key=lambda x: x[1]):

label_values.append(lv)

label_names.append(ln)

assert label_values == list(range(len(label_values)))

lbl = utils.shapes_to_label(img.shape, data['shapes'], label_name_to_value)

captions = ['{}: {}'.format(lv, ln)

for ln, lv in label_name_to_value.items()]

lbl_viz = utils.draw_label(lbl, img, captions)

out_dir = osp.basename(count[i]).replace('.', '_')

out_dir = osp.join(osp.dirname(count[i]), out_dir)

if not osp.exists(out_dir):

os.mkdir(out_dir)

PIL.Image.fromarray(img).save(osp.join(out_dir, 'img.png'))

# PIL.Image.fromarray(lbl).save(osp.join(out_dir, 'label.png'))

utils.lblsave(osp.join(out_dir, 'label.png'), lbl)

PIL.Image.fromarray(lbl_viz).save(osp.join(out_dir, 'label_viz.png'))

with open(osp.join(out_dir, 'label_names.txt'), 'w') as f:

for lbl_name in label_names:

f.write(lbl_name + '\n')

warnings.warn('info.yaml is being replaced by label_names.txt')

info = dict(label_names=label_names)

with open(osp.join(out_dir, 'info.yaml'), 'w') as f:

yaml.safe_dump(info, f, default_flow_style=False)

print('Saved to: %s' % out_dir)

if __name__ == '__main__':

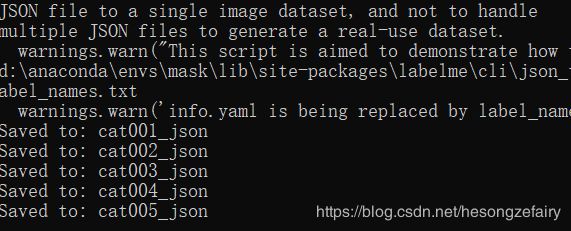

main()替换之后在cd到pic文件夹,命令行执行labelme_json_to_dataset (json文件夹路径),举例如下:

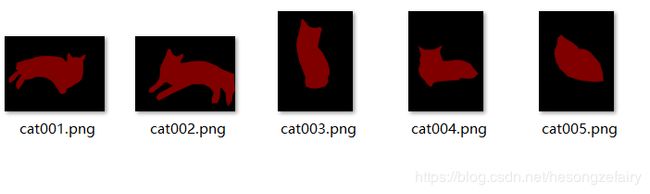

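labelme_json_to_dataset D:\Pycharm\Project\Mask_RCNN-master\samples\cat\train_data\json

When it finishes, each JSON file has produced a corresponding folder containing five files (img.png, label.png, label_viz.png, label_names.txt and info.yaml). label.png is the file we will move into cv2_mask next. One point needs stressing: many older blog posts say that label.png is saved as 16-bit and has to be converted to 8-bit somehow. labelme has since been updated. If your label.png shows a colored mask rather than a solid black image, it was saved as 8-bit and needs no further processing, so carry on with confidence.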
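If you prefer to check the bit depth rather than judge by eye, it can be read directly from the PNG header. The sketch below uses only the standard library and the fixed layout of the PNG IHDR chunk; `png_bit_depth` is a hypothetical helper name, not part of labelme.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_bit_depth(path):
    """Return (bit_depth, color_type) read from a PNG file's IHDR chunk."""
    with open(path, "rb") as f:
        header = f.read(26)
    if len(header) < 26 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError("not a PNG file: %s" % path)
    # IHDR data: width (4 bytes), height (4 bytes), bit depth (1), color type (1), ...
    width, height, bit_depth, color_type = struct.unpack(">IIBB", header[16:26])
    return bit_depth, color_type
```

A bit depth of 16 would indicate the old 16-bit output that the earlier posts describe; color type 3 means a palette (indexed-color) image, which is the kind that displays as a colored mask.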

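Likewise, moving label.png out of each folder into cv2_mask one by one wastes too much time. Here is a script that moves them and renames each image to match its folder name. Run it from inside train_data, since the paths are relative:

import os

path = 'labelme_json'
files = os.listdir(path)
for file in files:
    # Folder names look like "<image name>_json"; drop the "_json" suffix
    new = file[:-5]
    newnames = os.path.join('cv2_mask', new)
    # Refer to label.png by name; the original used jpath[2], which depends
    # on the order in which os.listdir() happens to return the files
    filename = os.path.join(path, file, 'label.png')
    print(filename)
    print(newnames)
    os.rename(filename, newnames + '.png')

After running this script, cv2_mask contains the mask files.

That completes the data annotation!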
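Before training, a quick consistency check can save a failed run. The training script in the next section looks up, for every image in pic, a mask in cv2_mask plus info.yaml and img.png in the matching labelme_json subfolder. The sketch below checks for exactly those files; `check_dataset` is a made-up helper name.

```python
import os


def check_dataset(root="train_data"):
    """Return the files the training script would need but that are missing."""
    missing = []
    for image_name in sorted(os.listdir(os.path.join(root, "pic"))):
        # Same rule the training script uses to derive the base name.
        stem = image_name.split(".")[0]
        json_dir = os.path.join(root, "labelme_json", stem + "_json")
        expected = [
            os.path.join(root, "cv2_mask", stem + ".png"),
            os.path.join(json_dir, "info.yaml"),
            os.path.join(json_dir, "img.png"),
        ]
        missing.extend(p for p in expected if not os.path.isfile(p))
    return missing
```

An empty list from `check_dataset()` (run from the cat folder) means every image has the three files it needs.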

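2. Training

In the cat folder, create a new Python file named train.py and copy in the following code. Afterwards I will go through the places that need to be changed.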

# -*- coding: utf-8 -*-
import os
import sys
import random
import math
import re
import time
import numpy as np
import cv2
import matplotlib
import matplotlib.pyplot as plt
import tensorflow as tf
from mrcnn.config import Config
# import utils
from mrcnn import model as modellib, utils
from mrcnn import visualize
import yaml
from mrcnn.model import log
from PIL import Image

# os.environ["CUDA_VISIBLE_DEVICES"] = "0"

# Root directory of the project
ROOT_DIR = os.getcwd()
# ROOT_DIR = os.path.abspath("../")

# Directory to save logs and trained model
MODEL_DIR = os.path.join(ROOT_DIR, "logs")

iter_num = 0

# Local path to trained weights file
COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")
# Download COCO trained weights from Releases if needed
if not os.path.exists(COCO_MODEL_PATH):
    utils.download_trained_weights(COCO_MODEL_PATH)

class ShapesConfig(Config):
    """Configuration for training on the toy shapes dataset.

    Derives from the base Config class and overrides values specific
    to the toy shapes dataset.
    """
    # Give the configuration a recognizable name
    NAME = "shapes"

    # Train on 1 GPU with 1 image per GPU.
    # Batch size is 1 (GPUs * images/GPU).
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

    # Number of classes (including background)
    NUM_CLASSES = 1 + 6  # background + 6 classes

    # Use small images for faster training. Set the limits of the small side
    # the large side, and that determines the image shape.
    IMAGE_MIN_DIM = 320
    IMAGE_MAX_DIM = 384

    # Anchor scales
    RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)  # anchor side in pixels

    # Reduce training ROIs per image because the images are small and have
    # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
    TRAIN_ROIS_PER_IMAGE = 100

    # Use a small epoch since the data is simple
    STEPS_PER_EPOCH = 10

    # use small validation steps since the epoch is small
    VALIDATION_STEPS = 10


config = ShapesConfig()
config.display()

class DrugDataset(utils.Dataset):
    # Get the number of instances (objects) in the image
    def get_obj_index(self, image):
        n = np.max(image)
        return n

    # Parse the yaml file produced by labelme to get the label of each mask layer
    def from_yaml_get_class(self, image_id):
        info = self.image_info[image_id]
        with open(info['yaml_path']) as f:
            temp = yaml.load(f.read(), Loader=yaml.FullLoader)
            labels = temp['label_names']
            # Drop the first entry, which is the background
            del labels[0]
        return labels

    # Rewritten draw_mask
    def draw_mask(self, num_obj, mask, image, image_id):
        info = self.image_info[image_id]
        for index in range(num_obj):
            for i in range(info['width']):
                for j in range(info['height']):
                    at_pixel = image.getpixel((i, j))
                    # Pixel value k in label.png belongs to mask layer k - 1
                    if at_pixel == index + 1:
                        mask[j, i, index] = 1
        return mask

    # Rewritten load_shapes; it registers your own classes, and more can be added freely.
    # It also stores path, mask_path and yaml_path in self.image_info.
    # Example arguments:
    #   dataset_root_path = "/tongue_dateset/"
    #   img_floder = dataset_root_path + "rgb"
    #   mask_floder = dataset_root_path + "mask"
    def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):
        """Register the first `count` images of imglist.

        count: number of images to register.
        img_floder, mask_floder: folders holding the images and the masks.
        """
        # Add classes; add more lines like these to support more classes
        self.add_class("shapes", 1, "HP")
        self.add_class("shapes", 2, "PJ")
        self.add_class("shapes", 3, "PP")
        self.add_class("shapes", 4, "YJ")
        self.add_class("shapes", 5, "YP")
        self.add_class("shapes", 6, "YPS")

        for i in range(count):
            # Base name of the image, used to locate its mask and yaml file
            filestr = imglist[i].split(".")[0]
            mask_path = mask_floder + "/" + filestr + ".png"
            yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"
            print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
            # Read the image to get its width and height
            cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
            self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],
                           width=cv_img.shape[1], height=cv_img.shape[0],
                           mask_path=mask_path, yaml_path=yaml_path)

    # Rewritten load_mask
    def load_mask(self, image_id):
        """Generate instance masks for the image with the given image ID."""
        global iter_num
        print("image_id", image_id)
        info = self.image_info[image_id]
        count = 1  # number of objects
        img = Image.open(info['mask_path'])
        num_obj = self.get_obj_index(img)
        mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
        mask = self.draw_mask(num_obj, mask, img, image_id)
        occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
        # count stays 1, so this occlusion loop never executes; label.png stores
        # one value per pixel, so the mask layers cannot overlap anyway
        for i in range(count - 2, -1, -1):
            mask[:, :, i] = mask[:, :, i] * occlusion
            occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))

        labels = self.from_yaml_get_class(image_id)
        labels_form = []
        for i in range(len(labels)):
            if labels[i].find("HP") != -1:
                labels_form.append("HP")
            elif labels[i].find("PJ") != -1:
                labels_form.append("PJ")
            elif labels[i].find("PP") != -1:
                labels_form.append("PP")
            elif labels[i].find("YJ") != -1:
                labels_form.append("YJ")
            # "YPS" must be tested before "YP": every label that contains
            # "YPS" also contains "YP", so the original order never reached it
            elif labels[i].find("YPS") != -1:
                labels_form.append("YPS")
            elif labels[i].find("YP") != -1:
                labels_form.append("YP")
        class_ids = np.array([self.class_names.index(s) for s in labels_form])
        return mask, class_ids.astype(np.int32)

def get_ax(rows=1, cols=1, size=8):
    """Return a Matplotlib Axes array to be used in
    all visualizations in the notebook. Provide a
    central point to control graph sizes.

    Change the default size attribute to control the size
    of rendered images
    """
    _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
    return ax


# Basic settings
dataset_root_path = "train_data/"
img_floder = dataset_root_path + "pic"
mask_floder = dataset_root_path + "cv2_mask"
# yaml_floder = dataset_root_path
imglist = os.listdir(img_floder)
count = len(imglist)

# Prepare the train and val datasets
dataset_train = DrugDataset()
dataset_train.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
dataset_train.prepare()
# print("dataset_train-->", dataset_train._image_ids)

# Validation reuses the first 6 training images, so pic must hold at least 6
dataset_val = DrugDataset()
dataset_val.load_shapes(6, img_floder, mask_floder, imglist, dataset_root_path)
dataset_val.prepare()
# print("dataset_val-->", dataset_val._image_ids)

# Load and display random samples
# image_ids = np.random.choice(dataset_train.image_ids, 4)
# for image_id in image_ids:
#     image = dataset_train.load_image(image_id)
#     mask, class_ids = dataset_train.load_mask(image_id)
#     visualize.display_top_masks(image, mask, class_ids, dataset_train.class_names)

# Create model in training mode
model = modellib.MaskRCNN(mode="training", config=config,
                          model_dir=MODEL_DIR)

# Which weights to start with?
init_with = "coco"  # imagenet, coco, or last

if init_with == "imagenet":
    model.load_weights(model.get_imagenet_weights(), by_name=True)
elif init_with == "coco":
    # Load weights trained on MS COCO, but skip layers that
    # are different due to the different number of classes
    # See README for instructions to download the COCO weights
    model.load_weights(COCO_MODEL_PATH, by_name=True,
                       exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",
                                "mrcnn_bbox", "mrcnn_mask"])
elif init_with == "last":
    # Load the last model you trained and continue training.
    # In current versions of the repository find_last() returns the checkpoint
    # path itself; older versions returned a tuple, hence the original
    # model.find_last()[1]. Use whichever matches your copy.
    model.load_weights(model.find_last(), by_name=True)

# Train the head branches
# Passing layers="heads" freezes all layers except the head
# layers. You can also pass a regular expression to select
# which layers to train by name pattern.
model.train(dataset_train, dataset_val,
            learning_rate=config.LEARNING_RATE,
            epochs=20,
            layers='heads')

# Fine tune all layers
# Passing layers="all" trains all layers. You can also
# pass a regular expression to select which layers to
# train by name pattern.
model.train(dataset_train, dataset_val,
            learning_rate=config.LEARNING_RATE / 10,
            epochs=40,
            layers="all")

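Change 1 (line 33 of the original listing, COCO_MODEL_PATH): nothing actually has to be changed here. The point is that pretrained weights must be available in the root directory for loading. If they are missing, the program downloads them automatically; just wait for the download to finish. If the download is interrupted and you simply run the program again, it fails with OSError: Unable to open file (truncated file: eof = 8388608, sblock->base_addr = 0, stored_eof =34643576). That only means the weights file was not downloaded completely and cannot be loaded. Delete the partial mask_rcnn_coco.h5 and run the program again so that it is downloaded afresh.

Change 2 (line 53, NUM_CLASSES): with three classes write 1 + 3, with five classes 1 + 5. The 1 stands for the background and must not change; only adjust the second number.

    NUM_CLASSES = 1 + 1  # background + 1 class (cat)

Change 3 (line 196, dataset_root_path): this is the path to the dataset. If you created the folders as described earlier, nothing needs to change here; otherwise adjust the path to match your own layout.

Change 4 (line 121, the add_class calls in load_shapes): these must match your classes. With five classes you need five lines, one per class name. I have one class, so I changed it to cat.

    self.add_class("shapes", 1, "cat")

Change 5 (line 162, the if/elif chain in load_mask): this also has to match your actual classes. I changed it to the single class cat; for each additional class add one more elif.

    if labels[i].find("cat") != -1:
        labels_form.append("cat")

Those are the places that must be changed, each according to your own dataset. Other parameters such as the number of epochs are not covered here.

Finally, just run train.py.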
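To see which label strings your annotations actually contain before editing NUM_CLASSES and the add_class lines, the labelme JSON files can be scanned directly. This is a minimal sketch that relies only on the shapes and label fields that the conversion script in this post also reads; `collect_labels` is a hypothetical helper name.

```python
import json
import os


def collect_labels(json_dir):
    """Return the sorted list of distinct label names in all labelme .json files."""
    labels = set()
    for name in os.listdir(json_dir):
        if not name.lower().endswith(".json"):
            continue
        with open(os.path.join(json_dir, name)) as f:
            data = json.load(f)
        for shape in data.get("shapes", []):
            labels.add(shape["label"])
    return sorted(labels)
```

Keep in mind that load_mask matches classes by substring. If you numbered your instances (for example cat1 and cat2), several label strings map to one class, so NUM_CLASSES is 1 plus the number of classes, not 1 plus the number of distinct labels.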

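3. Testing

Take the trained .h5 file out of the folder of the most recent training run inside logs and place it directly in the logs folder, which is where predict.py below looks for it (it loads os.path.join(MODEL_DIR, "mask_rcnn_shapes_0040.h5")).

Then create a new Python file named predict.py in the cat folder and copy in the following code. The places that need changing are marked with comments.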
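Locating the newest checkpoint can also be scripted. The sketch below assumes the layout described above, where each training run has its own subfolder under logs containing files named like mask_rcnn_shapes_0040.h5; `latest_weights` is a made-up helper name.

```python
import glob
import os


def latest_weights(logs_dir="logs"):
    """Return the most recently modified .h5 checkpoint under logs_dir, or None."""
    pattern = os.path.join(logs_dir, "*", "mask_rcnn_*.h5")
    checkpoints = glob.glob(pattern)
    if not checkpoints:
        return None
    # Pick the checkpoint with the latest modification time.
    return max(checkpoints, key=os.path.getmtime)
```

The returned path can then be copied with `shutil.copy` to wherever the prediction script expects the weights.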

# -*- coding: utf-8 -*-
import os
import sys
import random
import math
import numpy as np
import skimage.io
import matplotlib
import matplotlib.pyplot as plt
import cv2
import time
from mrcnn.config import Config
from datetime import datetime

# Root directory of the project
ROOT_DIR = os.getcwd()

# Import Mask RCNN
sys.path.append(ROOT_DIR)  # To find local version of the library
from mrcnn import utils
import mrcnn.model as modellib
from mrcnn import visualize

# Import COCO config
# sys.path.append(os.path.join(ROOT_DIR, "samples/coco/"))  # To find local version
# from samples.coco import coco

# Directory to save logs and trained model
MODEL_DIR = os.path.join(ROOT_DIR, "logs")

# Local path to the trained weights file
COCO_MODEL_PATH = os.path.join(MODEL_DIR, "mask_rcnn_shapes_0040.h5")  # change this

# The original listing called utils.download_trained_weights() here, which would
# save the COCO weights under the name of your own model; failing early is safer
if not os.path.exists(COCO_MODEL_PATH):
    raise FileNotFoundError("Trained weights not found: " + COCO_MODEL_PATH)

# Directory of images to run detection on
IMAGE_DIR = os.path.join(ROOT_DIR, "images")

class ShapesConfig(Config):
    """Configuration for training on the toy shapes dataset.

    Derives from the base Config class and overrides values specific
    to the toy shapes dataset.
    """
    # Give the configuration a recognizable name
    NAME = "shapes"

    # Run on 1 GPU with 1 image per GPU.
    # Batch size is 1 (GPUs * images/GPU).
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

    # Number of classes (including background)
    NUM_CLASSES = 1 + 6  # background + 6 classes; change this

    # Use small images for faster training. Set the limits of the small side
    # the large side, and that determines the image shape.
    IMAGE_MIN_DIM = 320
    IMAGE_MAX_DIM = 384

    # Anchor scales
    RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)  # anchor side in pixels

    # Reduce training ROIs per image because the images are small and have
    # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
    TRAIN_ROIS_PER_IMAGE = 100

    # Use a small epoch since the data is simple
    STEPS_PER_EPOCH = 100

    # use small validation steps since the epoch is small
    VALIDATION_STEPS = 50


# import train_tongue
# class InferenceConfig(coco.CocoConfig):
class InferenceConfig(ShapesConfig):
    # Set batch size to 1 since we'll be running inference on
    # one image at a time. Batch size = GPU_COUNT * IMAGES_PER_GPU
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1


config = InferenceConfig()

# Create model object in inference mode.
model = modellib.MaskRCNN(mode="inference", model_dir=MODEL_DIR, config=config)

# Load the weights trained in the previous section
model.load_weights(COCO_MODEL_PATH, by_name=True)

# Class names
# Index of the class in the list is its ID, and ID 0 is the background,
# so 'BG' must stay first (the original listing omitted it, which shifts
# every displayed label by one)
class_names = ['BG', 'HP', 'PJ', 'PP', 'YJ', 'YP', 'YPS']  # change to your classes

# Load the test image
file_names = next(os.walk(IMAGE_DIR))[2]
image = skimage.io.imread("./images/ScrewYJ0090.jpg")  # change to your test image

a = datetime.now()
# Run detection
results = model.detect([image], verbose=1)
b = datetime.now()

# Print the elapsed time, then visualize results
print("time (s)", (b - a).total_seconds())
r = results[0]
visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                            class_names, r['scores'])
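Besides the visualization, it is often handy to print the detections as text. The sketch below takes the r['class_ids'] and r['scores'] values from the result dictionary used above and assumes, as in the repository's own demo, that class ID 0 is the background so that class_names starts with 'BG'. The function name `summarize_detections` is hypothetical.

```python
def summarize_detections(class_ids, scores, class_names):
    """Return one 'name: score' string per detection."""
    lines = []
    for class_id, score in zip(class_ids, scores):
        # class_id indexes class_names directly; index 0 is the background.
        lines.append("%s: %.3f" % (class_names[int(class_id)], float(score)))
    return lines
```

For example, `summarize_detections(r['class_ids'], r['scores'], class_names)` returns a list that can be printed line by line or written to a log file.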