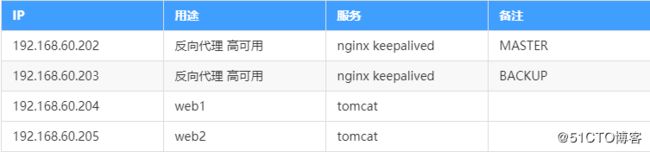

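# 1. Environment

- 192.168.60.202: nginx + keepalived (Master)
- 192.168.60.203: nginx + keepalived (Backup)
- 192.168.60.204, 192.168.60.205: Tomcat (port 8080)
- 192.168.60.220: virtual IP (VIP) managed by keepalived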

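# 2. Install Tomcat

Install Tomcat on 192.168.60.204 and 192.168.60.205. Then edit /usr/local/tomcat/webapps/ROOT/index.jsp so that the page shows the Tomcat host's IP address and the value of the X-NGINX request header. On 192.168.60.204, for example:

```bash
vim /usr/local/tomcat/webapps/ROOT/index.jsp
```

Modify it as follows:

```jsp
${pageContext.servletContext.serverInfo}(192.168.60.204)<%=request.getHeader("X-NGINX")%>
```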

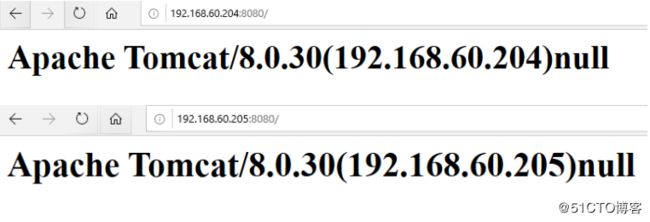

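Start Tomcat on both 204 and 205. In a browser, confirm that http://192.168.60.204:8080 and http://192.168.60.205:8080 both load and that each page shows its own IP address. These requests do not pass through nginx, so they carry no X-NGINX header and the page shows `null`.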
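You can also run the same check from a shell instead of a browser. The following is a minimal bash sketch. It assumes `curl` is installed and that the page contains the marker added to index.jsp above: `(<ip>)` followed by the X-NGINX value, which is `null` for direct requests. `parse_marker` and `check_tomcat` are illustrative names, not part of Tomcat.

```bash
#!/bin/bash
# Print "<ip> <X-NGINX value>" for the first "(<ip>)<value>" marker on stdin.
# Assumes the marker format added to index.jsp: the value is "null" for
# direct requests and "NGINX-1"/"NGINX-2" when the request came through nginx.
parse_marker() {
  grep -oE '\(([0-9]{1,3}\.){3}[0-9]{1,3}\)(NGINX-[0-9]+|null)' \
    | head -n 1 \
    | sed -E 's/^\(([0-9.]+)\)(.*)$/\1 \2/'
}

# Fetch one Tomcat directly on port 8080 and check that it reports its own IP.
check_tomcat() {
  local ip=$1 got
  got=$(curl -s --max-time 5 "http://${ip}:8080/" | parse_marker)
  if [ "${got%% *}" = "$ip" ]; then
    echo "OK   ${ip}: ${got}"
  else
    echo "FAIL ${ip}: ${got:-no marker found}"
    return 1
  fi
}
```

Usage: `for ip in 192.168.60.204 192.168.60.205; do check_tomcat "$ip"; done`. Each line should show the host's own IP followed by `null`.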

# 3. Install nginx

nginx must be installed on both 192.168.60.202 and 192.168.60.203. The steps on 192.168.60.202 are as follows.

Install the dependencies, then download, build, and start nginx:

```bash
yum -y install epel-release lrzsz zip unzip wget tree git dpkg pcre pcre-devel openssl openssl-devel gd-devel zlib-devel gcc

# download and unpack the source under /data
mkdir -p /data && cd /data
wget http://nginx.org/download/nginx-1.18.0.tar.gz
tar -xf nginx-1.18.0.tar.gz
cd nginx-1.18.0/

./configure --prefix=/data/nginx --with-http_ssl_module --with-http_v2_module \
    --with-http_stub_status_module --with-pcre --with-http_gzip_static_module \
    --with-http_dav_module --with-http_addition_module --with-http_sub_module \
    --with-http_flv_module --with-http_mp4_module
make && make install

# start nginx and check that it is running
/data/nginx/sbin/nginx
ps -ef | grep nginx
```
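The `ps -ef | grep nginx` check also prints the grep command itself. The sketch below counts only the nginx master and worker processes in `ps -ef` output. The function name `nginx_proc_summary` is made up for this example.

```bash
#!/bin/bash
# Read `ps -ef` output on stdin and print how many nginx master and worker
# processes it contains. The grep line itself is not counted because it
# contains neither "nginx: master process" nor "nginx: worker process".
nginx_proc_summary() {
  awk '/nginx: master process/ { master++ }
       /nginx: worker process/ { workers++ }
       END { printf "master=%d workers=%d\n", master, workers }'
}
```

Usage: `ps -ef | nginx_proc_summary`. A running instance should report `master=1` and at least one worker. The number of workers follows `worker_processes` in nginx.conf.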

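Start nginx automatically at boot:

```bash
chmod +x /etc/rc.d/rc.local
vim /etc/rc.d/rc.local
```

Add this line at the end of rc.local:

```bash
/data/nginx/sbin/nginx
```

Open http://192.168.60.202 in a browser. If the default "Welcome to nginx!" page appears, nginx is installed correctly.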
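Editing rc.local by hand with vim works fine. If you script the setup instead, the sketch below appends the start command only when it is not already present, so running it again does not add duplicate lines. `ensure_line` is a hypothetical helper name.

```bash
#!/bin/bash
# Append LINE to FILE unless an identical line is already present.
# -x matches whole lines and -F treats LINE as a fixed string, not a regex.
# Assumes FILE (if it exists) already ends with a newline.
ensure_line() {
  local file=$1 line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Usage (as root, after `chmod +x /etc/rc.d/rc.local`): `ensure_line /etc/rc.d/rc.local /data/nginx/sbin/nginx`.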

# 4. Configure the nginx reverse proxy

Configure the reverse proxy in /data/nginx/conf/nginx.conf so that requests are forwarded to the two Tomcats on 204 and 205.

On the Master (202), set `proxy_set_header X-NGINX "NGINX-1"`:

```nginx
# These blocks go inside the http { } block of nginx.conf
upstream tomcat_server {
    server 192.168.60.204:8080 weight=1;
    server 192.168.60.205:8080 weight=1;
}

server {
    listen 80;
    server_name 192.168.60.202;
    charset utf-8;
    # access_log logs/host.access.log main;

    location / {
        root html;
        index index.html index.htm index.jsp;
        proxy_pass http://tomcat_server;
        proxy_set_header X-NGINX "NGINX-1";
    }
}
```

On the Backup (203), set `proxy_set_header X-NGINX "NGINX-2"`:

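```nginx
# These blocks go inside the http { } block of nginx.conf
upstream tomcat_server {
    server 192.168.60.204:8080 weight=1;
    server 192.168.60.205:8080 weight=1;
}

server {
    listen 80;
    server_name 192.168.60.203;
    charset utf-8;
    #access_log logs/host.access.log main;

    location / {
        root html;
        index index.html index.htm index.jsp;
        proxy_pass http://tomcat_server;
        proxy_set_header X-NGINX "NGINX-2";
    }
}
```

Install and configure nginx on the Master (202) as described above. After editing nginx.conf, check the syntax and reload with `/data/nginx/sbin/nginx -t && /data/nginx/sbin/nginx -s reload`. Then open http://192.168.60.202 in a browser.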

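Refresh the page several times. The IP address on the page alternates between 192.168.60.204 and 192.168.60.205, which shows that nginx is load-balancing requests across the two Tomcats.

NGINX-1 also appears on the page, as shown below: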

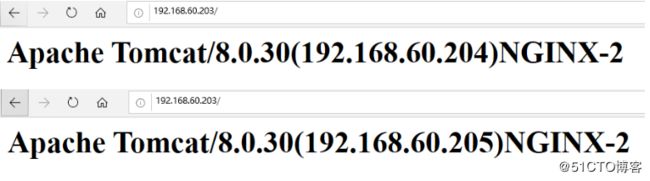
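The two Tomcats alternate because both servers have `weight=1`, so nginx's default round-robin balancer hands out requests in turn. nginx implements weighted round-robin with a "smooth" rule. On each pick, every server's current weight grows by its configured weight. The server with the largest current weight is chosen, and the total of all weights is subtracted from it. The bash sketch below simulates only that selection rule. It ignores failures, `max_fails`, and other upstream parameters. `swrr` is an illustrative name.

```bash
#!/bin/bash
# swrr N NAME:WEIGHT ...  -> print the first N picks of a smooth weighted
# round-robin (selection rule only, no failure handling).
# NAME may itself contain colons (e.g. ip:port); the weight follows the last one.
swrr() {
  local n=$1; shift
  local -a names=() weights=() current=()
  local total=0 spec i k best
  for spec in "$@"; do
    names+=("${spec%:*}")
    weights+=("${spec##*:}")
    current+=(0)
    total=$(( total + ${spec##*:} ))
  done
  for (( k = 0; k < n; k++ )); do
    best=0
    for (( i = 0; i < ${#names[@]}; i++ )); do
      current[i]=$(( current[i] + weights[i] ))
      # strictly greater: on a tie the earlier server wins
      if (( current[i] > current[best] )); then best=$i; fi
    done
    current[best]=$(( current[best] - total ))
    printf '%s\n' "${names[best]}"
  done
}
```

For example, `swrr 4 192.168.60.204:8080:1 192.168.60.205:8080:1` prints 204, 205, 204, 205. With unequal weights such as `swrr 7 a:5 b:1 c:1`, the picks are spread out as `a a b a c a a` rather than `a` five times in a row.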

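Likewise, install and configure nginx on the Backup (203) and open http://192.168.60.203 in a browser.

When you refresh several times, the IP again alternates between 192.168.60.204 and 192.168.60.205, showing that nginx is load-balancing across the two Tomcats. This time the page shows NGINX-2.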
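Instead of refreshing by hand, you can send a batch of requests and count which Tomcat answered and which nginx forwarded each one. A minimal sketch follows. It assumes `curl` and the `(<ip>)<X-NGINX value>` marker from index.jsp. `tally_backends` is a made-up name.

```bash
#!/bin/bash
# Read page bodies on stdin and print "<tomcat-ip> <X-NGINX value> <count>"
# for every distinct marker, sorted by IP.
tally_backends() {
  grep -oE '\(([0-9]{1,3}\.){3}[0-9]{1,3}\)(NGINX-[0-9]+|null)' \
    | sed -E 's/^\(([0-9.]+)\)(.*)$/\1 \2/' \
    | awk '{ count[$0]++ } END { for (m in count) print m, count[m] }' \
    | sort
}
```

Usage: `for i in $(seq 10); do curl -s http://192.168.60.203/; done | tally_backends`. With two equally weighted servers, expect roughly equal counts for 204 and 205, all tagged NGINX-2. Each nginx worker process keeps its own round-robin state, so the split is not guaranteed to be exact.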

# 5. Install keepalived

```bash
yum -y install keepalived
systemctl start keepalived.service
systemctl enable keepalived.service
ps -ef | grep keepalived
```

In the /etc/keepalived directory, add nginx_check.sh (a shell script that checks whether nginx is alive) and keepalived.conf (the keepalived configuration file).

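Create nginx_check.sh (for example with `vim nginx_check.sh`):

```bash
#!/bin/bash
# If no nginx process is running, stop keepalived so that the VIP moves to the Backup
A=$(ps -C nginx --no-header | wc -l)
if [ "$A" -eq 0 ]; then
    systemctl stop keepalived
fi
```

keepalived runs this script directly, so make it executable: `chmod +x /etc/keepalived/nginx_check.sh`.

The Master (202) uses the keepalived.conf below. Back up the original file before editing it: `cp keepalived.conf{,.bak}`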

```
global_defs {
    router_id NodeA
}

vrrp_script chk_nginx {
    # script that checks the nginx process
    script "/etc/keepalived/nginx_check.sh"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    # master server
    state MASTER
    interface eth0
    virtual_router_id 50
    mcast_src_ip 192.168.60.202
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        # virtual IP
        192.168.60.220
    }
}
```

The Backup (203) uses the following keepalived.conf:

```
global_defs {
    router_id NodeB
}

vrrp_script chk_nginx {
    # script that checks the nginx process
    script "/etc/keepalived/nginx_check.sh"
    interval 2
    weight -20
}

vrrp_instance VI_1 {
    # backup server
    state BACKUP
    interface eth0
    virtual_router_id 50
    mcast_src_ip 192.168.60.203
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        # virtual IP
        192.168.60.220
    }
}
```

Notes on the keepalived configuration:

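- `state`: MASTER on the master server, BACKUP on the backup server
- `interface`: the name of the network interface
- `mcast_src_ip`: each server's own real IP address
- `priority`: the master's priority must be higher than the backup's; here the master uses 100 and the backup 99
- `virtual_ipaddress`: the virtual IP (192.168.60.220)
- `authentication`: `auth_pass` must be identical on master and backup, because keepalived uses it to authenticate the VRRP messages they exchange
- `virtual_router_id`: must be identical on master and backup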
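You can check how `weight -20` interacts with `priority` with a little arithmetic. In keepalived, a negative `weight` on a `vrrp_script` lowers the node's priority by that amount while the script fails (exits non-zero). A positive weight raises the priority while the script succeeds. As written, nginx_check.sh stops keepalived instead of exiting non-zero, so in this setup failover happens because the Master's keepalived shuts down. The weight only matters for a check that reports failure through its exit status. The helpers below are hypothetical calculators for that rule, not part of keepalived.

```bash
#!/bin/bash
# effective_priority BASE WEIGHT STATUS
#   BASE: configured priority, WEIGHT: vrrp_script weight,
#   STATUS: "ok" if the check script succeeded, "fail" otherwise.
effective_priority() {
  local base=$1 weight=$2 status=$3
  if { [ "$weight" -lt 0 ] && [ "$status" = fail ]; } ||
     { [ "$weight" -gt 0 ] && [ "$status" = ok ]; }; then
    echo $(( base + weight ))
  else
    echo "$base"
  fi
}

# vip_owner NAME1 PRIO1 NAME2 PRIO2: the higher priority owns the VIP.
# Ties are not modelled; this sketch then simply returns NAME2.
vip_owner() {
  if [ "$2" -gt "$4" ]; then echo "$1"; else echo "$3"; fi
}

# Example: nginx on the Master is down, the Backup is healthy
#   effective_priority 100 -20 fail   -> 80
#   effective_priority 99 -20 ok      -> 99
#   vip_owner Master 80 Backup 99     -> Backup
```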

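When both the Master and the Backup are healthy, only the Master serves requests.

Make sure keepalived and nginx are running on the Master. keepalived was started in step 5 before keepalived.conf was written, so restart it to load the new configuration (`systemctl restart keepalived`). Then check the processes: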

```
[root@master ~]# ps -ef |grep keepalived
root 4798 1 0 14:26 ? 00:00:00 /usr/sbin/keepalived -D
root 4799 4798 0 14:26 ? 00:00:00 /usr/sbin/keepalived -D
root 4800 4798 0 14:26 ? 00:00:11 /usr/sbin/keepalived -D
root 36110 1509 0 17:51 pts/0 00:00:00 grep --color=auto keepalived
[root@master ~]# ps -ef |grep nginx
root 4770 1 0 14:26 ? 00:00:00 nginx: master process nginx
nobody 32491 4770 0 17:28 ? 00:00:00 nginx: worker process
nobody 32492 4770 0 17:28 ? 00:00:00 nginx: worker process
root 36297 1509 0 17:53 pts/0 00:00:00 grep --color=auto nginx
```

Use `ip addr` to check whether the VIP is bound to 202. The output shows that 192.168.60.220 is bound to 202:

```
[root@master ~]# ip addr
1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:5e:b0:c5 brd ff:ff:ff:ff:ff:ff
    inet 192.168.60.202/22 brd 192.168.63.255 scope global noprefixroute eth0
       valid_lft forever preferred_lft forever
    inet 192.168.60.220/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::5221:c926:ed9:826/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
```
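To check for the VIP from a script instead of reading the full `ip addr` output, something like the sketch below works. It assumes the interface is `eth0`, as in keepalived.conf. `has_vip` is a hypothetical helper name.

```bash
#!/bin/bash
# has_vip VIP: succeed if the `ip addr` output on stdin lists VIP as an IPv4
# address. -F matches the text literally, so the dots are not regex
# wildcards, and the trailing "/" keeps 192.168.60.22 from matching .220.
has_vip() {
  grep -qF "inet ${1}/"
}
```

Usage: `ip -4 addr show dev eth0 | has_vip 192.168.60.220 && echo "VIP is on $(hostname)"`.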

Next, start keepalived and nginx on the Backup (203) and make sure both are running. The Backup's IP information shows that the VIP is not yet bound to the Backup (203):

```
[root@slve ~]# ip addr
1: lo:
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0:
    link/ether 00:0c:29:bf:ab:7f brd ff:ff:ff:ff:ff:ff
    inet 192.168.60.203/22 brd 192.168.63.255 scope global noprefixroute eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::6538:4b94:1249:2af8/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
```

# 6. Verifying high availability

When nginx on the Master (202) goes down, the Backup takes over and serves requests.

Next, stop nginx on the Master (202) to simulate a Master failure, and check whether the VIP is still on the Master:

```
[root@master ~]# nginx -s stop
[root@master ~]# ps -ef |grep nginx
root 37172 1509 0 17:59 pts/0 00:00:00 grep --color=auto nginx
[root@master ~]# ip addr
1: lo:
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0:
    link/ether 00:0c:29:5e:b0:c5 brd ff:ff:ff:ff:ff:ff
    inet 192.168.60.202/22 brd 192.168.63.255 scope global noprefixroute eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::5221:c926:ed9:826/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: docker0:
    link/ether 02:42:c8:f0:d5:63 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
[root@master ~]#
```

Check the Backup (203): the VIP is now bound to it:

```
[root@slve ~]# ip addr
1: lo:
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0:
    link/ether 00:0c:29:bf:ab:7f brd ff:ff:ff:ff:ff:ff
    inet 192.168.60.203/22 brd 192.168.63.255 scope global noprefixroute eth0
       valid_lft forever preferred_lft forever
    inet 192.168.60.220/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::6538:4b94:1249:2af8/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
```

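Refresh http://192.168.60.220/ (the VIP) several times in a browser. A request sent directly to 192.168.60.203 always goes through the Backup's nginx, so only the VIP shows which node currently holds the service.

The IP on the page alternates between 204 and 205, and the page shows NGINX-2. This means the Backup (203) is now forwarding web requests: after the Master failed, the Backup took over its service.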
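Refreshing a browser only samples the service now and then. To watch a failover as it happens, you can poll the VIP once per second from a third machine and print which nginx answered. The sketch below assumes `curl` is installed. `nginx_tag` and `watch_vip` are illustrative names, and the loop runs until you press Ctrl+C.

```bash
#!/bin/bash
# Print the X-NGINX value shown in a page body read from stdin
# (NGINX-1 = Master, NGINX-2 = Backup), or nothing if it is absent.
nginx_tag() {
  grep -oE 'NGINX-[0-9]+' | head -n 1
}

# Poll URL (default: the VIP) once per second; stop with Ctrl+C.
watch_vip() {
  local url=${1:-http://192.168.60.220/} tag
  while true; do
    tag=$(curl -s --max-time 2 "$url" | nginx_tag)
    printf '%s %s\n' "$(date +%H:%M:%S)" "${tag:-(no response or no tag)}"
    sleep 1
  done
}
```

Run `watch_vip` while stopping nginx on the Master. The output should switch from NGINX-1 to NGINX-2, possibly with a brief gap while the VIP moves.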

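When the Master recovers, it resumes serving and the Backup stops, going back to waiting for the Master to fail.

Start nginx and then keepalived on the Master (202) again, and check the VIP: the Master has taken it back.

Refresh http://192.168.60.220/ several times in a browser.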

The IP on the page alternates between 204 and 205, and the page shows NGINX-1. This means the Master (202) is forwarding web requests again: once the Master restarts, it resumes serving and the Backup stops and waits for the Master to fail. The commands and output on the Master:

```
[root@master ~]# /data/nginx/sbin/nginx
[root@master ~]# ps -ef |grep nginx
root 37200 1 0 18:05 ? 00:00:00 nginx: master process /data/nginx/sbin/nginx
nobody 37201 37200 0 18:05 ? 00:00:00 nginx: worker process
nobody 37202 37200 0 18:05 ? 00:00:00 nginx: worker process
root 37205 1509 0 18:05 pts/0 00:00:00 grep --color=auto nginx
[root@master ~]# systemctl start keepalived.service
[root@master ~]# ps -ef |grep keepalived
root 37230 1 0 18:05 ? 00:00:00 /usr/sbin/keepalived -D
root 37231 37230 0 18:05 ? 00:00:00 /usr/sbin/keepalived -D
root 37232 37230 0 18:05 ? 00:00:00 /usr/sbin/keepalived -D
root 37307 1509 0 18:06 pts/0 00:00:00 grep --color=auto keepalived
[root@master ~]# ip addr
1: lo:
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0:
    link/ether 00:0c:29:5e:b0:c5 brd ff:ff:ff:ff:ff:ff
    inet 192.168.60.202/22 brd 192.168.63.255 scope global noprefixroute eth0
       valid_lft forever preferred_lft forever
    inet 192.168.60.220/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::5221:c926:ed9:826/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
```

nginx high availability is now in place: when nginx on the Master (202) fails, the Backup (203) continues serving requests.
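nginx_check.sh stops keepalived when nginx dies, so after nginx is back the Master's keepalived has to be started again by hand (the `systemctl start keepalived.service` step above). The sketch below shows an alternative, assuming the rest of keepalived.conf stays unchanged. The script only reports nginx's state through its exit status. With `weight -20`, a failing check lowers the Master's priority from 100 to 80, below the Backup's 99, so the VIP moves to the Backup. Once nginx runs again, the check succeeds and the priority returns to 100. Preemption is on by default (no `nopreempt`), so the Master then takes the VIP back on its own. `proc_alive` is a hypothetical function name.

```bash
#!/bin/bash
# Alternative /etc/keepalived/nginx_check.sh (sketch).
# Instead of stopping keepalived, report nginx health via the exit status:
#   0     -> nginx is running, keepalived keeps priority 100
#   non-0 -> nginx is down, keepalived applies "weight -20" (100 -> 80)

# Succeed if a process with exactly this name (default: nginx) exists.
proc_alive() {
  pgrep -x "${1:-nginx}" > /dev/null
}

# The script's exit status is the status of this last command.
proc_alive nginx
```

As with the original script, make it executable with `chmod +x /etc/keepalived/nginx_check.sh`.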