Live Streaming: Implementing RTMP Push on Android (Part 2)

Before reading this chapter, you should be familiar with how H.264 encoding works (see the article "H.264 Encoding Principles").

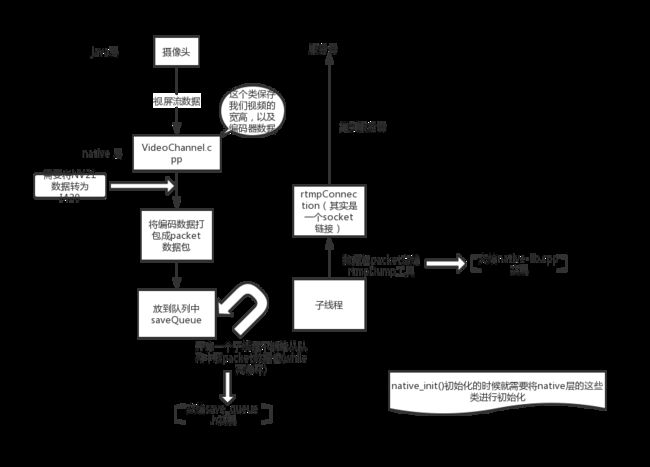

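The previous part showed how to integrate the libraries needed for pushing, rtmpdump (librtmp) and x264, into the project. This part implements the video push itself. The push pipeline is shown in the flowchart above.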

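As the flowchart shows, the NV21 frames captured by the camera go to VideoChannel.cpp. There they are converted to I420 and encoded into H.264 with x264. The resulting NAL units are wrapped into RTMP packets following the RTMP/FLV rules, and the packets are put on a queue. A dedicated thread keeps taking packets off the queue and sends them to the server.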
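The NV21 to I420 step above only rearranges bytes. Both formats store a full-resolution Y plane followed by quarter-resolution chroma. NV21 interleaves the chroma as V,U pairs, whereas I420 stores a separate U plane and then a separate V plane. Below is a minimal standalone sketch of that conversion. The function name `nv21ToI420` and the `std::vector` interface are assumptions made for the example. The project's own `encodeData()` writes straight into x264's picture planes instead.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: converts one NV21 frame (Y plane, then interleaved V,U pairs)
// into I420 (Y plane, then the U plane, then the V plane). Both use 4:2:0 subsampling,
// so each frame holds width * height * 3 / 2 bytes.
std::vector<uint8_t> nv21ToI420(const std::vector<uint8_t> &nv21, int width, int height) {
    assert(width % 2 == 0 && height % 2 == 0);
    const int ySize = width * height;
    const int uvSize = ySize / 4;  // size of one chroma plane
    assert(static_cast<int>(nv21.size()) == ySize * 3 / 2);

    std::vector<uint8_t> i420(nv21.size());
    // The luma plane is identical in both layouts
    std::memcpy(i420.data(), nv21.data(), ySize);
    uint8_t *u = i420.data() + ySize;           // I420 U plane
    uint8_t *v = i420.data() + ySize + uvSize;  // I420 V plane
    const uint8_t *vu = nv21.data() + ySize;    // NV21 interleaved chroma
    for (int i = 0; i < uvSize; ++i) {
        v[i] = vu[2 * i];      // even offsets hold V
        u[i] = vu[2 * i + 1];  // odd offsets hold U
    }
    return i420;
}
```

For a 2x2 frame `{1, 2, 3, 4, 9, 8}` (V = 9, U = 8), the function returns `{1, 2, 3, 4, 8, 9}`.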

I. Java Layer Implementation

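1. Create the CameraHelper.java class. It opens and configures the camera, switches between the front and back cameras, rotates the preview data and delivers each captured frame. The code is as follows: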

```java
package com.example.live.meida;

import android.app.Activity;
import android.graphics.ImageFormat;
import android.hardware.Camera;
import android.util.Log;
import android.view.Surface;
import android.view.SurfaceHolder;

import java.util.Iterator;
import java.util.List;

public class CameraHelper implements SurfaceHolder.Callback, Camera.PreviewCallback {

    private static final String TAG = "CameraHelper";
    private Activity mActivity;
    private int mHeight;
    private int mWidth;
    private int mCameraId;
    private Camera mCamera;
    // Buffer the camera fills with each NV21 preview frame
    private byte[] buffer;
    private SurfaceHolder mSurfaceHolder;
    private Camera.PreviewCallback mPreviewCallback;
    private int mRotation;
    private OnChangedSizeListener mOnChangedSizeListener;
    // Frame handed on to the encoder (rotated when the device is in portrait)
    byte[] bytes;

    public CameraHelper(Activity activity, int cameraId, int width, int height) {
        mActivity = activity;
        mCameraId = cameraId;
        mWidth = width;
        mHeight = height;
    }

    /**
     * Switch between the back and front cameras.
     */
    public void switchCamera() {
        if (mCameraId == Camera.CameraInfo.CAMERA_FACING_BACK) {
            mCameraId = Camera.CameraInfo.CAMERA_FACING_FRONT;
        } else {
            mCameraId = Camera.CameraInfo.CAMERA_FACING_BACK;
        }
        stopPreview();
        startPreview();
    }

    private void stopPreview() {
        if (mCamera != null) {
            // Remove the preview-data callback
            mCamera.setPreviewCallback(null);
            // Stop the preview
            mCamera.stopPreview();
            // Release the camera
            mCamera.release();
            mCamera = null;
        }
    }

    private void startPreview() {
        try {
            // Open the camera
            mCamera = Camera.open(mCameraId);
            // Configure the camera
            Camera.Parameters parameters = mCamera.getParameters();
            // Preview data format: NV21
            parameters.setPreviewFormat(ImageFormat.NV21);
            // Preview width and height
            setPreviewSize(parameters);
            // Angle of the image sensor relative to the display
            setPreviewOrientation(parameters);
            mCamera.setParameters(parameters);
            // One NV21 frame: Y (w * h) + interleaved VU (w * h / 2)
            buffer = new byte[mWidth * mHeight * 3 / 2];
            bytes = new byte[buffer.length];
            // Buffer the camera writes preview frames into
            mCamera.addCallbackBuffer(buffer);
            mCamera.setPreviewCallbackWithBuffer(this);
            // Surface that shows the preview
            mCamera.setPreviewDisplay(mSurfaceHolder);
            mCamera.startPreview();
        } catch (Exception ex) {
            ex.printStackTrace();
        }
    }

    // Rotates only the on-screen preview. The frames delivered to onPreviewFrame()
    // (the ones that get encoded) stay in the sensor's landscape orientation.
    private void setPreviewOrientation(Camera.Parameters parameters) {
        Camera.CameraInfo info = new Camera.CameraInfo();
        Camera.getCameraInfo(mCameraId, info);
        mRotation = mActivity.getWindowManager().getDefaultDisplay().getRotation();
        int degrees = 0;
        switch (mRotation) {
            case Surface.ROTATION_0:
                degrees = 0;
                // Portrait: the frames are rotated, so width and height swap for the encoder
                mOnChangedSizeListener.onChanged(mHeight, mWidth);
                break;
            case Surface.ROTATION_90: // landscape, top of the device on the left (home button on the right)
                degrees = 90;
                mOnChangedSizeListener.onChanged(mWidth, mHeight);
                break;
            case Surface.ROTATION_270: // landscape, top of the device on the right
                degrees = 270;
                mOnChangedSizeListener.onChanged(mWidth, mHeight);
                break;
        }
        int result;
        if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {
            result = (info.orientation + degrees) % 360;
            result = (360 - result) % 360; // compensate the mirror
        } else { // back-facing
            result = (info.orientation - degrees + 360) % 360;
        }
        // Apply the display angle
        mCamera.setDisplayOrientation(result);
    }

    private void setPreviewSize(Camera.Parameters parameters) {
        // Preview sizes supported by the camera
        List<Camera.Size> supportedPreviewSizes = parameters.getSupportedPreviewSizes();
        Camera.Size size = supportedPreviewSizes.get(0);
        Log.d(TAG, "Supported " + size.width + "x" + size.height);
        // Pick the supported size whose area is closest to the requested one,
        // e.g. with 10x10, 20x20 and 30x30 supported, a request of 12x12 gets 10x10
        int m = Math.abs(size.height * size.width - mWidth * mHeight);
        supportedPreviewSizes.remove(0);
        Iterator<Camera.Size> iterator = supportedPreviewSizes.iterator();
        while (iterator.hasNext()) {
            Camera.Size next = iterator.next();
            Log.d(TAG, "Supported " + next.width + "x" + next.height);
            int n = Math.abs(next.height * next.width - mWidth * mHeight);
            if (n < m) {
                m = n;
                size = next;
            }
        }
        mWidth = size.width;
        mHeight = size.height;
        parameters.setPreviewSize(mWidth, mHeight);
        Log.d(TAG, "Preview size set to width:" + size.width + " height:" + size.height);
    }

    public void setPreviewDisplay(SurfaceHolder surfaceHolder) {
        mSurfaceHolder = surfaceHolder;
        mSurfaceHolder.addCallback(this);
    }

    public void setPreviewCallback(Camera.PreviewCallback previewCallback) {
        mPreviewCallback = previewCallback;
    }

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        // Release the camera
        stopPreview();
        // Open it again with the new surface
        startPreview();
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        stopPreview();
    }

    @Override
    public void onPreviewFrame(byte[] data, Camera camera) {
        switch (mRotation) {
            case Surface.ROTATION_0:
                // Portrait: rotate the landscape sensor frame into bytes
                rotation90(data);
                break;
            case Surface.ROTATION_90:  // landscape, top of the device on the left (home button on the right)
            case Surface.ROTATION_270: // landscape, top of the device on the right (image may still be upside down)
            default:
                // Landscape: pass the frame on unrotated
                System.arraycopy(data, 0, bytes, 0, data.length);
                break;
        }
        mPreviewCallback.onPreviewFrame(bytes, camera);
        // Give the buffer back to the camera for the next frame
        camera.addCallbackBuffer(buffer);
    }

    private void rotation90(byte[] data) {
        int index = 0;
        int ySize = mWidth * mHeight;
        // Height of the interleaved VU plane
        int uvHeight = mHeight / 2;
        if (mCameraId == Camera.CameraInfo.CAMERA_FACING_BACK) {
            // Back camera: rotate 90 degrees clockwise
            // Y: each source column, read bottom to top, becomes one output row
            for (int i = 0; i < mWidth; i++) {
                for (int j = mHeight - 1; j >= 0; j--) {
                    bytes[index++] = data[mWidth * j + i];
                }
            }
            // VU: move one V,U pair at a time
            for (int i = 0; i < mWidth; i += 2) {
                for (int j = uvHeight - 1; j >= 0; j--) {
                    // v
                    bytes[index++] = data[ySize + mWidth * j + i];
                    // u
                    bytes[index++] = data[ySize + mWidth * j + i + 1];
                }
            }
        } else {
            // Front camera: rotate 90 degrees counterclockwise
            for (int i = 0; i < mWidth; i++) {
                int nPos = mWidth - 1;
                for (int j = 0; j < mHeight; j++) {
                    bytes[index++] = data[nPos - i];
                    nPos += mWidth;
                }
            }
            // v, u
            for (int i = 0; i < mWidth; i += 2) {
                int nPos = ySize + mWidth - 1;
                for (int j = 0; j < uvHeight; j++) {
                    bytes[index++] = data[nPos - i - 1];
                    bytes[index++] = data[nPos - i];
                    nPos += mWidth;
                }
            }
        }
    }

    public void setOnChangedSizeListener(OnChangedSizeListener listener) {
        mOnChangedSizeListener = listener;
    }

    public void release() {
        mSurfaceHolder.removeCallback(this);
        stopPreview();
    }

    public interface OnChangedSizeListener {
        void onChanged(int w, int h);
    }
}
```
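The back-camera branch of `rotation90()` can be checked on tiny frames. Below it is sketched in C++, the language of the native layer, so that it can be run standalone. The function name `rotateNv21Clockwise` is hypothetical. The loops mirror the Java code: the Y plane is read column by column from bottom to top, and the VU plane is walked the same way one V,U pair at a time. The result is still NV21 but with width and height swapped.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: rotates an NV21 frame 90 degrees clockwise, like the
// back-camera branch of CameraHelper.rotation90(). The output is NV21 with
// width and height swapped (the new width is the old height).
std::vector<uint8_t> rotateNv21Clockwise(const std::vector<uint8_t> &src, int width, int height) {
    assert(width % 2 == 0 && height % 2 == 0);
    const int ySize = width * height;
    std::vector<uint8_t> dst(src.size());
    int index = 0;
    // Luma: each source column, read bottom to top, becomes one destination row
    for (int x = 0; x < width; ++x) {
        for (int y = height - 1; y >= 0; --y) {
            dst[index++] = src[y * width + x];
        }
    }
    // Chroma: same walk over the half-height VU plane, keeping each V,U pair together
    const int uvHeight = height / 2;
    for (int x = 0; x < width; x += 2) {
        for (int y = uvHeight - 1; y >= 0; --y) {
            dst[index++] = src[ySize + y * width + x];      // V
            dst[index++] = src[ySize + y * width + x + 1];  // U
        }
    }
    return dst;
}
```

Consider a 2x4 frame with luma rows (0,1), (2,3), (4,5), (6,7) and chroma rows (V=10,U=20), (V=11,U=21). It becomes a 4x2 frame with luma rows (6,4,2,0) and (7,5,3,1), and the chroma pairs come out in the order (11,21), (10,20).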

2. Create the VideoChannel.java class. It creates the CameraHelper and starts the live stream. The code is as follows:

```java
package com.example.live.meida;

import android.app.Activity;
import android.hardware.Camera;
import android.util.Log;
import android.view.SurfaceHolder;

import com.example.live.LivePusher;

public class VideoChannel implements Camera.PreviewCallback, CameraHelper.OnChangedSizeListener {

    private static final String TAG = "tuch";
    private CameraHelper cameraHelper;
    private int mBitrate;
    private int mFps;
    private boolean isLiving;
    LivePusher livePusher;

    public VideoChannel(LivePusher livePusher, Activity activity, int width, int height, int bitrate, int fps, int cameraId) {
        mBitrate = bitrate;
        mFps = fps;
        this.livePusher = livePusher;
        cameraHelper = new CameraHelper(activity, cameraId, width, height);
        cameraHelper.setPreviewCallback(this);
        cameraHelper.setOnChangedSizeListener(this);
    }

    // data: one NV21 frame from CameraHelper
    @Override
    public void onPreviewFrame(byte[] data, Camera camera) {
        Log.i(TAG, "onPreviewFrame: ");
        // Only push frames once the live stream has been started
        if (isLiving) {
            // Push the frame
            livePusher.native_pushVideo(data);
        }
    }

    // The frame size changed (orientation or camera switch): reconfigure the native encoder
    @Override
    public void onChanged(int w, int h) {
        livePusher.native_setVideoEncInfo(w, h, mFps, mBitrate);
    }

    public void switchCamera() {
        cameraHelper.switchCamera();
    }

    public void setPreviewDisplay(SurfaceHolder surfaceHolder) {
        cameraHelper.setPreviewDisplay(surfaceHolder);
    }

    public void startLive() {
        isLiving = true;
    }
}
```

3. Create the LivePusher.java class. It initializes the native layer and passes the camera data down to it. The code is as follows:

```java
package com.example.live;

import android.app.Activity;
import android.view.SurfaceHolder;

import com.example.live.meida.AudioChannel;
import com.example.live.meida.VideoChannel;

public class LivePusher {

    private AudioChannel audioChannel;
    private VideoChannel videoChannel;

    static {
        System.loadLibrary("native-lib");
    }

    public LivePusher(Activity activity, int width, int height, int bitrate,
                      int fps, int cameraId) {
        native_init();
        videoChannel = new VideoChannel(this, activity, width, height, bitrate, fps, cameraId);
        audioChannel = new AudioChannel(this);
    }

    public void setPreviewDisplay(SurfaceHolder surfaceHolder) {
        videoChannel.setPreviewDisplay(surfaceHolder);
    }

    public void switchCamera() {
        videoChannel.switchCamera();
    }

    public void startLive(String path) {
        native_start(path);
        videoChannel.startLive();
    }

    public native void native_init();

    public native void native_setVideoEncInfo(int width, int height, int fps, int bitrate);

    public native void native_start(String path);

    public native void native_pushVideo(byte[] data);
}
```
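The native functions in native-lib.cpp have names such as `Java_com_example_live_LivePusher_native_1init`. The odd `_1` is produced by the JNI naming rule for short native method names. The symbol is `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_`, then the method name. A literal `_` in either name is escaped as `_1`. The sketch below covers only that subset of the rule. The helper names are hypothetical, and the other JNI escapes (`_0xxxx` for non-ASCII, `_2`, `_3`) are deliberately left out.

```cpp
#include <cassert>
#include <string>

// Escapes one name for a JNI short native symbol: '_' -> "_1" and the package
// separators '.' or '/' -> '_'. Only ASCII letters, digits, '_', '.' and '/' are
// handled; the remaining JNI escapes are omitted in this sketch.
std::string jniEscape(const std::string &name) {
    std::string out;
    for (char c : name) {
        if (c == '_') {
            out += "_1";
        } else if (c == '.' || c == '/') {
            out += '_';
        } else {
            out += c;
        }
    }
    return out;
}

// Builds the C symbol the VM looks up for a (non-overloaded) native method.
std::string jniSymbol(const std::string &className, const std::string &methodName) {
    assert(!className.empty() && !methodName.empty());
    return "Java_" + jniEscape(className) + "_" + jniEscape(methodName);
}
```

This is why renaming the Java method or moving LivePusher to another package requires renaming the C++ function as well.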

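4. The activity layout (its code is not reproduced here) needs a SurfaceView for the camera preview and a button whose onClick handler is startLive.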

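5. In the activity, create the LivePusher and set the preview surface. Tapping the button then calls the start-live method, which passes the RTMP URL to the native layer. The code is as follows:

```java
// Inside MainActivity
private LivePusher livePusher;
private SurfaceView surfaceView;

@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    // setContentView(...) and the surfaceView lookup are omitted here
    // Requested preview size 800x480, bitrate 800_000 bps (800 kbps), 10 fps, back camera
    livePusher = new LivePusher(this, 800, 480, 800_000, 10, Camera.CameraInfo.CAMERA_FACING_BACK);
    // Surface used for the camera preview
    livePusher.setPreviewDisplay(surfaceView.getHolder());
}

// Replace the address with your own server's
public void startLive(View view) {
    livePusher.startLive("rtmp://192.168.14.135/myapp");
}
```

This completes the Java layer. To summarize:

In onCreate the LivePusher is created. It passes the width, height, bitrate and frame rate to VideoChannel. VideoChannel holds a CameraHelper and hands the size on to it so that it can initialize the camera. VideoChannel implements CameraHelper's callback interfaces (Camera.PreviewCallback and OnChangedSizeListener). Whenever the screen orientation changes or a new preview frame arrives, CameraHelper calls back into VideoChannel, which then calls the corresponding native method.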
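The angle that setPreviewOrientation() passes to `Camera.setDisplayOrientation()` depends on three things: the sensor's mounting angle (`CameraInfo.orientation`), the current display rotation, and whether the camera faces the user. The arithmetic is the same as in the sample in the Android `Camera.setDisplayOrientation()` documentation. It is sketched below as a plain C++ function so that the cases can be checked. The function name is hypothetical. The sensor angles in the test (90 for the back camera, 270 for the front one) are typical values but depend on the device.

```cpp
#include <cassert>

// Same arithmetic as CameraHelper.setPreviewOrientation(): returns the angle to
// pass to Camera.setDisplayOrientation() for the given sensor mounting angle,
// display rotation (0, 90, 180 or 270 degrees) and camera facing.
int displayOrientation(int sensorOrientation, int displayDegrees, bool frontFacing) {
    assert(displayDegrees % 90 == 0);
    if (frontFacing) {
        int result = (sensorOrientation + displayDegrees) % 360;
        return (360 - result) % 360;  // compensate for the front camera's mirroring
    }
    return (sensorOrientation - displayDegrees + 360) % 360;
}
```

With a back sensor mounted at 90 degrees, portrait (rotation 0) needs a 90 degree preview rotation, while landscape with rotation 90 needs none. The display rotation affects only the on-screen preview, which is why the portrait frames sent to the encoder are rotated separately in rotation90().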

II. Native Layer Implementation

1. Create the native VideoChannel class. It stores the video parameters passed from the Java layer, the x264 encoder and one input picture.

(1) VideoChannel.h:

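```cpp
//
// Created by qon 2019/5/31.
//

#ifndef LIVE_VIDEOCHANNEL_H
#define LIVE_VIDEOCHANNEL_H

#include <stdint.h>  // x264.h requires stdint.h to be included first
extern "C" {
#include <x264.h>
}
#include "librtmp/rtmp.h"

class VideoChannel {
    typedef void (*VideoCallBack)(RTMPPacket *packet);

public:
    void setVideoEncInfo(int width, int height, int fps, int bitrate);

    void encodeData(int8_t *data);

    void setVideoCallBack(VideoCallBack callBack);

private:
    int mWidth;
    int mHeight;
    int mFps;
    int mBitrate;
    int ySize;
    int uvSize;
    // Encoder
    x264_t *videoCodec = 0;
    // One input picture
    x264_picture_t *pic_in = 0;
    // Callback that hands finished packets to native-lib.cpp
    VideoCallBack videoCallBack = 0;

    void sendSpsPps(uint8_t *sps, uint8_t *pps, int sps_len, int pps_len);

    void sendFrame(int type, uint8_t *payload, int i_payload);
};

#endif //LIVE_VIDEOCHANNEL_H
```

(2) VideoChannel.cpp:

```cpp
//
// Created by 秦彬 on 2019/5/31.
//

#include <cstring>
#include "VideoChannel.h"
#include "librtmp/rtmp.h"
#include "macro.h"

void VideoChannel::setVideoEncInfo(int width, int height, int fps, int bitrate) {
    mWidth = width;
    mHeight = height;
    mFps = fps;
    mBitrate = bitrate;
    ySize = width * height;
    uvSize = ySize / 4;

    // Called again on every orientation change or camera switch: free the previous encoder first
    if (videoCodec) {
        x264_encoder_close(videoCodec);
        videoCodec = 0;
    }
    if (pic_in) {
        x264_picture_clean(pic_in);
        delete pic_in;
        pic_in = 0;
    }

    // Encoder parameters: fastest preset, tuned for zero latency
    x264_param_t param;
    x264_param_default_preset(&param, "ultrafast", "zerolatency");
    // Level 3.2 (bounds the decoding complexity)
    param.i_level_idc = 32;
    // Input format: x264 takes I420, so the NV21 frames are converted in encodeData()
    param.i_csp = X264_CSP_I420;
    param.i_width = width;
    param.i_height = height;
    // No B-frames, so every frame can be decoded as soon as it arrives
    param.i_bframe = 0;
    // Rate control: X264_RC_CQP (constant quantizer), X264_RC_CRF (constant rate factor,
    // roughly constant quality), X264_RC_ABR (average bitrate)
    param.rc.i_rc_method = X264_RC_ABR;
    // Target bitrate in kbps (the Java layer passes bits per second)
    param.rc.i_vbv_max_bitrate = bitrate / 1000 * 1.2;
    // Peak bitrate above: 1.2x the target
    param.rc.i_bitrate = bitrate / 1000;
    // Required when i_vbv_max_bitrate is set: VBV buffer size in kbit
    param.rc.i_vbv_buffer_size = bitrate / 1000;
    // Frame rate = i_fps_num / i_fps_den
    param.i_fps_num = fps;
    param.i_fps_den = 1;
    // Time base = 1 / fps, one tick per frame (used for timestamps and A/V sync)
    param.i_timebase_den = param.i_fps_num;
    param.i_timebase_num = param.i_fps_den;
    // Use the frame rate, not timestamps, to compute the distance between frames
    param.b_vfr_input = 0;
    // Maximum keyframe interval: one keyframe every 2 s
    param.i_keyint_max = fps * 2;
    // Repeat SPS/PPS in front of every keyframe (I-frame)
    param.b_repeat_headers = 1;
    // Number of encoding threads
    param.i_threads = 1;
    x264_param_apply_profile(&param, "baseline");
    // Open the encoder
    videoCodec = x264_encoder_open(&param);
    // Input picture
    pic_in = new x264_picture_t;
    x264_picture_alloc(pic_in, X264_CSP_I420, width, height);
}

// Convert an NV21 frame to I420, encode it and send the resulting NAL units
void VideoChannel::encodeData(int8_t *data) {
    // NV21 -> I420 into pic_in: img.plane[0] holds Y, plane[1] U, plane[2] V
    // Y is identical in both formats
    memcpy(pic_in->img.plane[0], data, ySize);
    // NV21 stores chroma as interleaved V,U pairs after Y: split them into two planes
    for (int i = 0; i < uvSize; ++i) {
        *(pic_in->img.plane[1] + i) = *(data + ySize + i * 2 + 1); // odd bytes 1,3,5,7...: U
        *(pic_in->img.plane[2] + i) = *(data + ySize + i * 2);     // even bytes 0,2,4,6...: V
    }
    // The I420 picture cannot be sent as is: x264 encodes it into NAL units (slices)
    x264_nal_t *pp_nal;
    // Number of NAL units produced for this frame
    int pi_nal;
    // Output picture information filled in by x264 (not used further here)
    x264_picture_t pic_out;
    x264_encoder_encode(videoCodec, &pp_nal, &pi_nal, pic_in, &pic_out);
    int sps_len = 0;
    int pps_len = 0;
    uint8_t sps[100];
    uint8_t pps[100];
    // Before every keyframe, x264 emits an SPS and a PPS NAL unit carrying the encoder
    // configuration. They are sent together as one packet; all other NAL units are sent as frames.
    for (int i = 0; i < pi_nal; ++i) {
        if (pp_nal[i].i_type == NAL_SPS) {
            // Skip the 4-byte start code 00 00 00 01
            sps_len = pp_nal[i].i_payload - 4;
            memcpy(sps, pp_nal[i].p_payload + 4, sps_len);
        } else if (pp_nal[i].i_type == NAL_PPS) {
            pps_len = pp_nal[i].i_payload - 4;
            memcpy(pps, pp_nal[i].p_payload + 4, pps_len);
            // The PPS follows the SPS, so both are available now
            sendSpsPps(sps, pps, sps_len, pps_len);
        } else {
            // Keyframe and non-keyframe slices
            sendFrame(pp_nal[i].i_type, pp_nal[i].p_payload, pp_nal[i].i_payload);
        }
    }
}

// Send SPS/PPS first, as the AVC sequence header (AVCDecoderConfigurationRecord)
void VideoChannel::sendSpsPps(uint8_t *sps, uint8_t *pps, int sps_len, int pps_len) {
    // 5-byte video tag header + 6 record bytes + 2-byte SPS length + 1 + 2-byte PPS length
    int packetLen = 16 + sps_len + pps_len;
    RTMPPacket *headerPacket = new RTMPPacket;
    RTMPPacket_Alloc(headerPacket, packetLen);
    int i = 0;
    // Frame type 1 (keyframe) + codec ID 7 (AVC)
    headerPacket->m_body[i++] = 0x17;
    // AVCPacketType 0: sequence header
    headerPacket->m_body[i++] = 0x00;
    // Composition time: 3 bytes, 0
    headerPacket->m_body[i++] = 0x00;
    headerPacket->m_body[i++] = 0x00;
    headerPacket->m_body[i++] = 0x00;
    // configurationVersion
    headerPacket->m_body[i++] = 0x01;
    // SPS
    headerPacket->m_body[i++] = sps[1]; // profile
    headerPacket->m_body[i++] = sps[2]; // profile compatibility
    headerPacket->m_body[i++] = sps[3]; // level
    headerPacket->m_body[i++] = 0xff;   // reserved bits + NALU length size - 1 (4-byte lengths)
    headerPacket->m_body[i++] = 0xe1;   // reserved bits + number of SPS (1)
    headerPacket->m_body[i++] = (sps_len >> 8) & 0xff; // SPS length, 2 bytes
    headerPacket->m_body[i++] = sps_len & 0xff;
    memcpy(&headerPacket->m_body[i], sps, sps_len);    // SPS data
    i += sps_len;
    // PPS
    headerPacket->m_body[i++] = 0x01; // number of PPS
    headerPacket->m_body[i++] = (pps_len >> 8) & 0xff;
    headerPacket->m_body[i++] = (pps_len) & 0xff;
    memcpy(&headerPacket->m_body[i], pps, pps_len);
    // Video message
    headerPacket->m_packetType = RTMP_PACKET_TYPE_VIDEO;
    headerPacket->m_nBodySize = packetLen;
    // Any chunk channel will do (preferably one not used inside rtmp.c)
    headerPacket->m_nChannel = 10;
    // SPS/PPS carry no timestamp
    headerPacket->m_nTimeStamp = 0;
    // Relative, not absolute, timestamp
    headerPacket->m_hasAbsTimestamp = 0;
    headerPacket->m_headerType = RTMP_PACKET_SIZE_MEDIUM;
    // Hand the packet to the callback, which queues it
    if (videoCallBack) {
        videoCallBack(headerPacket);
    }
}

void VideoChannel::setVideoCallBack(VideoChannel::VideoCallBack callBack) {
    videoCallBack = callBack;
}

// Then send the keyframe slices and the non-keyframes
void VideoChannel::sendFrame(int type, uint8_t *payload, int i_payload) {
    // Strip the start code: 00 00 00 01 (4 bytes) or 00 00 01 (3 bytes)
    if (payload[2] == 0x00) {
        i_payload -= 4;
        payload += 4;
    } else {
        i_payload -= 3;
        payload += 3;
    }
    // Body layout (FLV video tag): frame/codec byte, AVCPacketType,
    // 3-byte composition time, 4-byte NALU length, NALU
    int bodySize = 9 + i_payload;
    RTMPPacket *packet = new RTMPPacket;
    RTMPPacket_Alloc(packet, bodySize);
    // Inter frame + AVC
    packet->m_body[0] = 0x27;
    if (type == NAL_SLICE_IDR) {
        // Keyframe + AVC
        packet->m_body[0] = 0x17;
        LOGE("keyframe");
    }
    // AVCPacketType 1: NALU
    packet->m_body[1] = 0x01;
    // Composition time
    packet->m_body[2] = 0x00;
    packet->m_body[3] = 0x00;
    packet->m_body[4] = 0x00;
    // NALU length: 4 bytes, big-endian
    packet->m_body[5] = (i_payload >> 24) & 0xff;
    packet->m_body[6] = (i_payload >> 16) & 0xff;
    packet->m_body[7] = (i_payload >> 8) & 0xff;
    packet->m_body[8] = (i_payload) & 0xff;
    // Encoded picture data
    memcpy(&packet->m_body[9], payload, i_payload);
    packet->m_hasAbsTimestamp = 0;
    packet->m_nBodySize = bodySize;
    packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;
    packet->m_nChannel = 0x10;
    packet->m_headerType = RTMP_PACKET_SIZE_LARGE;
    if (videoCallBack) {
        videoCallBack(packet);
    }
}
```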
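The rate-control fields in setVideoEncInfo() use different units from the values the Java layer passes in. The bitrate arrives in bits per second, while x264 expects kbps, and the keyframe interval is a number of frames. The sketch below collects that arithmetic in one place so it can be checked. `RateControl` and `deriveRateControl` are hypothetical names, and the 1.2 peak factor and the 2-second keyframe interval are taken from the code above.

```cpp
#include <cassert>

// Hypothetical holder for the numbers setVideoEncInfo() derives.
struct RateControl {
    int bitrateKbps;    // param.rc.i_bitrate
    int vbvMaxKbps;     // param.rc.i_vbv_max_bitrate
    int vbvBufferKbit;  // param.rc.i_vbv_buffer_size
    int keyintMax;      // param.i_keyint_max, in frames
};

// bitrateBps: bits per second, as passed from Java; fps: frames per second.
RateControl deriveRateControl(int bitrateBps, int fps) {
    assert(bitrateBps > 0 && fps > 0);
    RateControl rc;
    rc.bitrateKbps = bitrateBps / 1000;
    rc.vbvMaxKbps = static_cast<int>(bitrateBps / 1000 * 1.2);  // peak = 1.2x target
    rc.vbvBufferKbit = bitrateBps / 1000;
    rc.keyintMax = fps * 2;  // one keyframe every 2 seconds
    return rc;
}
```

With the values used in onCreate (800_000 bps, 10 fps), this gives an 800 kbps target, a 960 kbps peak, an 800 kbit VBV buffer and a keyframe at most every 20 frames.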

2. native_init creates the VideoChannel object, and native_setVideoEncInfo stores the camera parameters in it and configures the encoder. native-lib.cpp:

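```cpp
#include <jni.h>
#include <string>
#include <cstring>
#include <pthread.h>
#include "VideoChannel.h"
#include "librtmp/rtmp.h"
#include "macro.h"
#include "safe_queue.h"

VideoChannel *videoChannel = 0;
// 1 while the push thread is running (prevents starting it twice)
int inStart = 0;
pthread_t pthread;
// Time the connection was established; packet timestamps are relative to it
uint32_t startTime;
// 1 once connected to the server and ready to push
int readyPush = 0;
// Packets waiting to be sent
SafeQueue<RTMPPacket *> safeQueue;

// RTMPPacket *&: a reference to the caller's pointer.
// With a plain RTMPPacket * parameter the function gets a copy of the pointer: it can change
// what the pointer points to, but not the caller's pointer. With *& it can do both.
void releasePacket(RTMPPacket *&pPacket) {
    if (pPacket) {
        RTMPPacket_Free(pPacket);
        // Free the memory the pointer points to
        delete pPacket;
        // Reset the caller's pointer itself so it does not dangle
        pPacket = 0;
    }
}

// Called back from VideoChannel with every finished packet
void callBack(RTMPPacket *packet) {
    if (packet) {
        // Timestamp relative to the start of the stream
        packet->m_nTimeStamp = RTMP_GetTime() - startTime;
        // Add it to the queue
        safeQueue.put(packet);
    }
}

// Thread function; the argument is the URL passed in when the thread was created
void *start(void *args) {
    char *url = static_cast<char *>(args);
    RTMP *rtmp = 0;
    do {
        rtmp = RTMP_Alloc();
        if (!rtmp) {
            LOGE("RTMP_Alloc failed");
            break;
        }
        RTMP_Init(rtmp);
        int ret = RTMP_SetupURL(rtmp, url);
        if (!ret) {
            LOGE("Failed to set URL: %s", url);
            break;
        }
        // Connection timeout in seconds
        rtmp->Link.timeout = 5;
        // Publish (write) instead of play
        RTMP_EnableWrite(rtmp);
        ret = RTMP_Connect(rtmp, 0);
        if (!ret) {
            LOGE("Failed to connect to server: %s", url);
            break;
        }
        ret = RTMP_ConnectStream(rtmp, 0);
        if (!ret) {
            LOGE("Failed to connect stream: %s", url);
            break;
        }
        startTime = RTMP_GetTime();
        // Let the queue accept packets, then signal that pushing can start
        safeQueue.setWork(1);
        readyPush = 1;
        RTMPPacket *packet = 0;
        while (readyPush) {
            // Take a packet off the queue (blocks while it is empty)
            safeQueue.get(packet);
            LOGE("Took one packet from the queue");
            if (!readyPush) {
                break;
            }
            if (!packet) {
                continue;
            }
            packet->m_nInfoField2 = rtmp->m_stream_id;
            RTMP_SendPacket(rtmp, packet, 1);
            // Free the packet once it has been sent
            releasePacket(packet);
        }
        releasePacket(packet);
    } while (0);
    inStart = 0;
    readyPush = 0;
    safeQueue.setWork(0);
    // Free the packets still in the queue (clear() would only drop the pointers)
    RTMPPacket *pending = 0;
    while (safeQueue.get(pending)) {
        releasePacket(pending);
    }
    if (rtmp) {
        RTMP_Close(rtmp);
        RTMP_Free(rtmp);
    }
    // Allocated with new[] in native_start
    delete[] url;
    return 0;
}

extern "C" JNIEXPORT jstring JNICALL
Java_com_example_live_MainActivity_stringFromJNI(
        JNIEnv *env,
        jobject /* this */) {
    std::string hello = "Hello from C++";
    return env->NewStringUTF(hello.c_str());
}

extern "C"
JNIEXPORT void JNICALL
Java_com_example_live_LivePusher_native_1init(JNIEnv *env, jobject instance) {
    videoChannel = new VideoChannel;
    // Packets produced by VideoChannel are delivered to callBack()
    videoChannel->setVideoCallBack(callBack);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_example_live_LivePusher_native_1setVideoEncInfo(JNIEnv *env, jobject instance, jint width,
                                                         jint height, jint fps, jint bitrate) {
    if (!videoChannel) {
        return;
    }
    videoChannel->setVideoEncInfo(width, height, fps, bitrate);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_example_live_LivePusher_native_1start(JNIEnv *env, jobject instance, jstring path_) {
    // Prevent the push thread from being started more than once
    if (inStart) {
        return;
    }
    inStart = 1;
    const char *path = env->GetStringUTFChars(path_, 0);
    // Copy the URL: path is released below while the thread still needs it
    char *url = new char[strlen(path) + 1];
    strcpy(url, path);
    env->ReleaseStringUTFChars(path_, path);
    // Create the thread: start is the thread function, url its argument (freed by the thread)
    pthread_create(&pthread, 0, start, url);
}

extern "C"
JNIEXPORT void JNICALL
Java_com_example_live_LivePusher_native_1pushVideo(JNIEnv *env, jobject instance,
                                                   jbyteArray data_) {
    // Drop frames until the connection is up
    if (!videoChannel || !readyPush) {
        return;
    }
    jbyte *data = env->GetByteArrayElements(data_, NULL);
    // Convert NV21 to I420, encode it and queue the resulting packets
    videoChannel->encodeData(data);
    // JNI_ABORT: the array was only read, nothing needs to be copied back
    env->ReleaseByteArrayElements(data_, data, JNI_ABORT);
}
```

That is the complete native-lib.cpp. Calling native_start() starts a thread in the native layer (a pthread). Its thread function start() first hands the URL to librtmp and connects to the server. Once connected, it enters a loop that keeps taking packets off the queue and sending them to the server.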
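releasePacket() takes its argument as `RTMPPacket *&`, a reference to the caller's pointer. The difference from a plain pointer parameter is easiest to see in isolation. In the sketch below, `Packet` is a hypothetical stand-in for RTMPPacket, and the two functions contrast the by-value and by-reference forms.

```cpp
#include <cassert>

// Stand-in for RTMPPacket in this example.
struct Packet {
    int size;
};

// Pointer passed by value: the function receives a copy of the address, so
// resetting it does not reach the caller, whose pointer is left dangling.
void releaseByValue(Packet *p) {
    delete p;
    p = nullptr;  // changes only the local copy
}

// Reference to the caller's pointer (Packet *&), the same style as releasePacket():
// the object is freed and the caller's pointer is reset, so a second call is harmless.
void releaseByRef(Packet *&p) {
    if (p) {
        delete p;
        p = nullptr;
    }
}
```

This is what lets the sender loop call releasePacket(packet) and then safely call it again on the same variable after the loop.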

3. The queue, safe_queue.h:

```cpp
//
// Created by liuxiang on 2017/10/15.
//

#ifndef DNRECORDER_SAFE_QUEUE_H
#define DNRECORDER_SAFE_QUEUE_H

#include <queue>
#include <pthread.h>

using namespace std;

template <typename T>
class SafeQueue {
public:
    SafeQueue() {
        pthread_mutex_init(&mutex, NULL);
        pthread_cond_init(&cond, NULL);
    }

    ~SafeQueue() {
        pthread_cond_destroy(&cond);
        pthread_mutex_destroy(&mutex);
    }

    void put(T new_value) {
        // A pthread mutex is not released automatically (unlike a lock_guard): unlock it below
        pthread_mutex_lock(&mutex);
        if (work) {
            q.push(new_value);
            pthread_cond_signal(&cond);
        }
        // When the queue is not working, the value is not stored
        pthread_mutex_unlock(&mutex);
    }

    int get(T &value) {
        int ret = 0;
        pthread_mutex_lock(&mutex);
        // A loop rather than an if: on multi-core systems a waiting thread can wake up
        // spuriously (the JDK documents the same for Object.wait)
        while (work && q.empty()) {
            pthread_cond_wait(&cond, &mutex);
        }
        if (!q.empty()) {
            value = q.front();
            q.pop();
            ret = 1;
        }
        pthread_mutex_unlock(&mutex);
        return ret;
    }

    void setWork(int work) {
        pthread_mutex_lock(&mutex);
        this->work = work;
        pthread_cond_signal(&cond);
        pthread_mutex_unlock(&mutex);
    }

    int empty() {
        return q.empty();
    }

    int size() {
        return q.size();
    }

    // Only pops the elements: if T is a pointer, the objects themselves are not freed
    void clear() {
        pthread_mutex_lock(&mutex);
        int size = q.size();
        for (int i = 0; i < size; ++i) {
            T value = q.front();
            q.pop();
        }
        pthread_mutex_unlock(&mutex);
    }

    void sync() {
        pthread_mutex_lock(&mutex);
        // Synchronized block: code placed here operates on the queue while holding its lock
        pthread_mutex_unlock(&mutex);
    }

private:
    pthread_cond_t cond;
    pthread_mutex_t mutex;
    queue<T> q;
    // Working flag: 1 = accepting data, 0 = not accepting data (not working)
    int work = 0;
};

#endif //DNRECORDER_SAFE_QUEUE_H
```
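The same put/get/setWork contract can be written with the C++11 standard library. There, `std::lock_guard` and `std::unique_lock` release the mutex automatically and the predicate form of `wait` handles spurious wake-ups. The sketch below is an alternative, not the project's code. `BlockingQueue` is a hypothetical name, and it uses `notify_all` in setWork so that every waiting consumer sees the change (with a single consumer, as here, this behaves like the original `pthread_cond_signal`).

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <utility>

// Hypothetical C++11 counterpart of SafeQueue with the same put/get/setWork semantics.
template <typename T>
class BlockingQueue {
public:
    // Stores the value and wakes one consumer; returns false (value not stored)
    // when the queue is not working.
    bool put(T value) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!working_) {
            return false;
        }
        queue_.push(std::move(value));
        cond_.notify_one();
        return true;
    }

    // Blocks while working and empty; returns false once stopped and drained.
    bool get(T &value) {
        std::unique_lock<std::mutex> lock(mutex_);
        // The predicate is re-checked after every wake-up, so spurious wake-ups are harmless
        cond_.wait(lock, [this] { return !working_ || !queue_.empty(); });
        if (queue_.empty()) {
            return false;
        }
        value = std::move(queue_.front());
        queue_.pop();
        return true;
    }

    void setWork(bool working) {
        std::lock_guard<std::mutex> lock(mutex_);
        working_ = working;
        cond_.notify_all();  // wake every consumer so it can observe the change
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::queue<T> queue_;
    bool working_ = false;
};
```

As with SafeQueue, items left in the queue can still be taken after setWork(false), so a consumer can drain and free them before exiting.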

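4. Step 2 showed packets being taken off the queue, but how do they get into it? VideoChannel::encodeData() first converts the NV21 frame captured by the camera to I420. It then calls x264's x264_encoder_encode() to encode the I420 picture into NAL units, which also reports how many units there are. The loop over the NAL units picks out the SPS and PPS first, and sendSpsPps() packs them into a complete RTMPPacket following the RTMP/FLV format. The packet is handed through the callback to callBack() in native-lib.cpp, which puts it on the queue. The remaining NAL units (keyframe and non-keyframe slices) are packed by sendFrame() and handed to callBack() the same way.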
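The byte layout that sendSpsPps() writes is the FLV video tag header followed by an AVCDecoderConfigurationRecord. Building it into a `std::vector` makes the offsets easy to check. The function name `buildAvcSequenceHeader` is hypothetical. The SPS and PPS are assumed to be raw NAL units with their start codes already removed, as encodeData() does. The body is 16 fixed bytes plus the SPS and PPS.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: body of the RTMP video message carrying the AVC sequence header.
std::vector<uint8_t> buildAvcSequenceHeader(const std::vector<uint8_t> &sps,
                                            const std::vector<uint8_t> &pps) {
    assert(sps.size() >= 4 && !pps.empty());
    std::vector<uint8_t> body;
    body.push_back(0x17);  // frame type 1 (keyframe) | codec id 7 (AVC)
    body.push_back(0x00);  // AVCPacketType 0: sequence header
    body.push_back(0x00);  // composition time, 3 bytes
    body.push_back(0x00);
    body.push_back(0x00);
    body.push_back(0x01);    // configurationVersion
    body.push_back(sps[1]);  // AVCProfileIndication
    body.push_back(sps[2]);  // profile_compatibility
    body.push_back(sps[3]);  // AVCLevelIndication
    body.push_back(0xff);    // reserved bits | lengthSizeMinusOne = 3 (4-byte NALU lengths)
    body.push_back(0xe1);    // reserved bits | numOfSequenceParameterSets = 1
    body.push_back(static_cast<uint8_t>(sps.size() >> 8));
    body.push_back(static_cast<uint8_t>(sps.size() & 0xff));
    body.insert(body.end(), sps.begin(), sps.end());
    body.push_back(0x01);  // numOfPictureParameterSets
    body.push_back(static_cast<uint8_t>(pps.size() >> 8));
    body.push_back(static_cast<uint8_t>(pps.size() & 0xff));
    body.insert(body.end(), pps.begin(), pps.end());
    return body;
}
```

A 5-byte SPS and a 2-byte PPS give a 23-byte body, the same `packetLen` that sendSpsPps() computes as `16 + sps_len + pps_len`.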

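Note: SPS and PPS only accompany I-frames (keyframes), because b_repeat_headers = 1 makes x264 repeat them before every keyframe. For each I-frame, the SPS/PPS packet is sent to the server first, then the I-frame itself, then the following non-keyframes.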
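Whether a slice is a keyframe can also be read from the NAL unit itself. The low 5 bits of the first byte after the start code are `nal_unit_type`: 7 is an SPS, 8 a PPS, 5 an IDR (keyframe) slice and 1 a non-IDR slice. The sketch below builds the same frame body as sendFrame(), but takes the keyframe flag from that header byte instead of x264's `i_type`. This substitution is made only to keep the example self-contained, and `buildAvcFrameBody` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: FLV/RTMP video body for one Annex-B NAL unit, like sendFrame().
std::vector<uint8_t> buildAvcFrameBody(const uint8_t *nal, int size) {
    // Start code: 00 00 00 01 (4 bytes) or 00 00 01 (3 bytes)
    int startCode = (nal[2] == 0x00) ? 4 : 3;
    assert(size > startCode);
    const uint8_t *payload = nal + startCode;
    int len = size - startCode;
    // nal_unit_type = low 5 bits of the NAL header; 5 = IDR (keyframe) slice
    bool keyframe = (payload[0] & 0x1f) == 5;

    std::vector<uint8_t> body;
    body.push_back(static_cast<uint8_t>(keyframe ? 0x17 : 0x27));  // frame type | AVC
    body.push_back(0x01);  // AVCPacketType 1: NALU
    body.push_back(0x00);  // composition time (0: no B-frames)
    body.push_back(0x00);
    body.push_back(0x00);
    body.push_back(static_cast<uint8_t>(len >> 24));  // NALU length, big-endian
    body.push_back(static_cast<uint8_t>(len >> 16));
    body.push_back(static_cast<uint8_t>(len >> 8));
    body.push_back(static_cast<uint8_t>(len));
    body.insert(body.end(), payload, payload + len);
    return body;
}
```

The 9-byte prefix matches `bodySize = 9 + i_payload` in sendFrame(). The 4-byte length field is what `lengthSizeMinusOne = 3` in the sequence header announces to the decoder.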

5. The code uses the logging macro from macro.h:

```cpp
/**
 * @author Lance
 * @date 2018/8/9
 */

#ifndef DNPLAYER_MACRO_H
#define DNPLAYER_MACRO_H

#include <android/log.h>

#define LOGE(...) __android_log_print(ANDROID_LOG_ERROR, "WangyiPush", __VA_ARGS__)

// Macro "function": delete the object and reset the pointer
#define DELETE(obj) if(obj){ delete obj; obj = 0; }

#endif //DNPLAYER_MACRO_H
```
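The DELETE macro above expands to a bare `if (...) { ... }`. Written as `if (cond) DELETE(p); else ...`, the caller's semicolon ends the statement and leaves the `else` without an `if`, which is a compile error. Written without the semicolon, the `else` silently binds to the macro's inner `if`. The usual remedy is to wrap the body in `do { ... } while (0)` so that the macro forms a single statement. `SAFE_DELETE` and `releaseIf` below are hypothetical names used only to show the pattern.

```cpp
#include <cassert>

// do/while(0) variant of DELETE: expands to exactly one statement, so it is
// safe as the body of an if that has an else branch.
#define SAFE_DELETE(obj) \
    do {                 \
        if (obj) {       \
            delete obj;  \
            obj = 0;     \
        }                \
    } while (0)

// Uses the macro in an if/else, which the bare-if form could not compile.
int releaseIf(bool release, int *&p) {
    if (release)
        SAFE_DELETE(p);
    else
        return 0;
    return 1;
}
```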

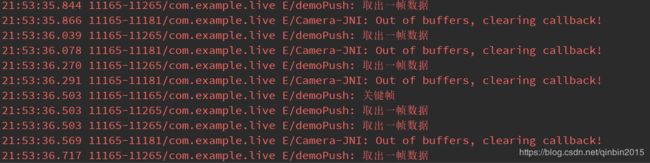

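III. Once the code above is integrated, open the server's address in a browser and tap the Start Live button. The log messages shown in the screenshot above are printed at regular intervals. After refreshing the browser page you will see a notification for the incoming stream, which means the video push is working.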

Note: port 1935 must be open on the server. Otherwise RTMP_Connect() returns 0, meaning the connection failed.
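Port 1935 matters because it is RTMP's default TCP port, which is used whenever the URL does not name a port (as in `rtmp://192.168.14.135/myapp`). The sketch below splits such a URL into host, port and path to show which port librtmp ends up dialing. It is a hypothetical helper, not librtmp's own parser (RTMP_SetupURL does the real parsing), and it ignores IPv6 literals and query strings.

```cpp
#include <cassert>
#include <string>

// Hypothetical result of splitting an RTMP URL.
struct RtmpAddress {
    std::string host;
    int port;
    std::string path;  // application, optionally followed by the stream name
};

// Returns false if the URL does not start with "rtmp://" or has no host.
bool parseRtmpUrl(const std::string &url, RtmpAddress &out) {
    const std::string scheme = "rtmp://";
    if (url.compare(0, scheme.size(), scheme) != 0) {
        return false;
    }
    std::string rest = url.substr(scheme.size());
    std::string::size_type slash = rest.find('/');
    std::string hostPort = rest.substr(0, slash);
    out.path = (slash == std::string::npos) ? "" : rest.substr(slash + 1);
    std::string::size_type colon = hostPort.find(':');
    out.host = hostPort.substr(0, colon);
    // No explicit port: RTMP defaults to 1935
    out.port = (colon == std::string::npos) ? 1935 : std::stoi(hostPort.substr(colon + 1));
    return !out.host.empty();
}
```

If the server listens on another port, put it in the URL (for example `rtmp://host:1936/myapp`) rather than relying on the default.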