Interrupts and Exceptions

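Interrupts are divided into synchronous and asynchronous ones:

Synchronous interrupts are produced by the CPU control unit while it executes instructions. They are called synchronous because the control unit issues them only after it has finished executing an instruction. We call these exceptions.

Exceptions are caused either by programming errors, in which case the kernel sends a signal to the process, or by anomalous conditions that the kernel itself must handle, such as a page fault or a request for a kernel service (through an int or sysenter instruction).

Asynchronous interrupts are signals generated by other hardware devices and by timers. We call these interrupts.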

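Each process's thread_info descriptor sits next to its kernel stack inside thread_union. If thread_union is 8 KB, the kernel stack of the current process is used for every type of kernel control path: exceptions, interrupts, and deferrable functions. If thread_union is 4 KB, that space is not always enough for both regular kernel work and the IRQ handling routines, so the kernel uses three types of kernel stack:

Exception stack: used when handling exceptions, including system calls.

Hard IRQ stack: used when handling interrupts. Each CPU has its own hard IRQ stack, and each stack occupies a single page frame.

Soft IRQ stack: used when handling deferrable functions. Each CPU has its own soft IRQ stack, and each stack occupies a single page frame.

Hard IRQ stacks are kept in the hardirq_stack array and soft IRQ stacks in the softirq_stack array; every element is a union of type irq_ctx.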
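Because each of these stacks is aligned to its own size, the descriptor at its base can be found by masking the low bits of any address inside the stack. The following is a minimal user-space sketch of that idea, not kernel code: the `model_*` names are hypothetical, a 4 KB stack size is assumed, and C11 `aligned_alloc` stands in for the kernel's page allocator.

```c
#include <stdint.h>
#include <stdlib.h>

#define MODEL_THREAD_SIZE 4096u          /* assumption: 4 KB stacks */

struct model_thread_info {               /* hypothetical stand-in for thread_info */
    void *task;
    int   preempt_count;
};

union model_irq_ctx {
    struct model_thread_info tinfo;      /* lives at the lowest address */
    uint32_t stack[MODEL_THREAD_SIZE / sizeof(uint32_t)];
};

/* Allocate one context aligned to its own size (C11 aligned_alloc). */
static union model_irq_ctx *model_ctx_alloc(void)
{
    return aligned_alloc(MODEL_THREAD_SIZE, sizeof(union model_irq_ctx));
}

/* Mask off the low bits of any address inside the stack to find its base. */
static union model_irq_ctx *model_ctx_from_sp(const void *sp)
{
    uintptr_t base = (uintptr_t)sp & ~(uintptr_t)(MODEL_THREAD_SIZE - 1);
    return (union model_irq_ctx *)base;
}

/* The stack grows down, so it starts one past the end of the union. */
static uint32_t *model_stack_top(union model_irq_ctx *ctx)
{
    return (uint32_t *)((char *)ctx + sizeof(*ctx));
}
```

Calling `model_ctx_from_sp()` with any pointer between the base and `model_stack_top()` returns the same context, which is why a stack pointer alone is enough to tell which stack the CPU is currently running on.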

/*

* per-CPU IRQ handling contexts (thread information and stack)

*/

union irq_ctx {

struct thread_info tinfo;

u32 stack[THREAD_SIZE/sizeof(u32)];

} __attribute__((aligned(THREAD_SIZE)));

static DEFINE_PER_CPU(union irq_ctx *, hardirq_ctx);

static DEFINE_PER_CPU(union irq_ctx *, softirq_ctx);

Interrupt initialization

-------------------------------------------------------------

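a. Preliminary setup during early boot (before start_kernel): every entry of the IDT is initialized to the default interrupt service routine ignore_int.

b. Final setup in protected mode. The basic flow is:

start_kernel

    trap_init()

        Trap gates: the IDT entries are initialized directly with the exception handlers.

    init_IRQ()

        Interrupt gates: every entry is initialized with a stub that jumps to the common entry point, common_interrupt.

Device drivers then call request_irq() to register their interrupt handlers.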

-------------------------------------------------------------

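setup_irq()

In the global array of interrupt descriptors (irq_desc[]): if the IRQ number is already in use and both the existing and the new handler were registered as shared, the new action is appended after the existing ones; if the IRQ number is not yet in use, the action is installed directly in the descriptor and the interrupt line is enabled.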
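The chaining rule described above can be sketched in user space as follows. This is a simplified model under stated assumptions, not the kernel's setup_irq(): the `model_*` types, the `MODEL_SHARED` flag and the return values are hypothetical, and locking is omitted.

```c
#include <stddef.h>

#define MODEL_SHARED 0x1u                 /* hypothetical "line may be shared" flag */

struct model_action {
    int (*handler)(int irq, void *dev_id);
    unsigned flags;
    void *dev_id;
    struct model_action *next;
};

struct model_irq_desc {
    struct model_action *action;          /* head of the handler chain */
    int enabled;
};

/* Returns 0 on success, -1 if the line is in use and cannot be shared. */
static int model_setup_irq(struct model_irq_desc *desc, struct model_action *new)
{
    struct model_action **p = &desc->action;

    if (*p) {
        /* Both the existing and the new action must agree to share. */
        if (!((*p)->flags & new->flags & MODEL_SHARED))
            return -1;
        while (*p)                        /* walk to the end of the chain */
            p = &(*p)->next;
    } else {
        desc->enabled = 1;                /* first user: enable the line */
    }
    new->next = NULL;
    *p = new;
    return 0;
}

/* Call every handler on the chain; returns how many were called. */
static int model_handle_event(int irq, struct model_irq_desc *desc)
{
    int calls = 0;

    for (struct model_action *a = desc->action; a; a = a->next) {
        a->handler(irq, a->dev_id);
        calls++;
    }
    return calls;
}

/* Sample handler: counts invocations through dev_id. */
static int model_count_handler(int irq, void *dev_id)
{
    (void)irq;
    ++*(int *)dev_id;
    return 1;
}
```

Registering two shared actions on the same descriptor chains them in registration order, while a third, non-shared action is rejected.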

When an interrupt or exception occurs, the CPU control unit does the following:

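1. Determines the vector i (0≤i≤255) associated with the interrupt or the exception.

2. Reads the ith entry of the IDT referred to by the idtr register.

3. Gets the base address of the GDT from the gdtr register and looks in the GDT to read the Segment Descriptor identified by the selector in the IDT entry. This descriptor specifies the base address of the segment that includes the interrupt or exception handler.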

4. Makes sure the interrupt was issued by an authorized source. First, it compares

the Current Privilege Level (CPL), which is stored in the two least significant bits

of the cs register, with the Descriptor Privilege Level (DPL) of the Segment

Descriptor included in the GDT. Raises a “General protection” exception if the

CPL is lower than the DPL, because the interrupt handler cannot have a lower

privilege than the program that caused the interrupt. For programmed exceptions, makes a further security check: compares the CPL with the DPL of the gate descriptor included in the IDT and raises a “General protection” exception

if the DPL is lower than the CPL. This last check makes it possible to prevent

access by user applications to specific trap or interrupt gates.

5. Checks whether a change of privilege level is taking place—that is, if CPL is different from the selected Segment Descriptor’s DPL. If so, the control unit must

start using the stack that is associated with the new privilege level. It does this by

performing the following steps:

a. Reads the tr register to access the TSS segment of the running process.

b. Loads the ss and esp registers with the proper values for the stack segment

and stack pointer associated with the new privilege level. These values are

found in the TSS (see the section “Task State Segment” in Chapter 3).

c. In the new stack, it saves the previous values of ss and esp, which define the

logical address of the stack associated with the old privilege level.

6. If a fault has occurred, it loads cs and eip with the logical address of the instruction that caused the exception so that it can be executed again.

7. Saves the contents of eflags, cs, and eip in the stack.

8. If the exception carries a hardware error code, it saves it on the stack.

9. Loads cs and eip, respectively, with the Segment Selector and the Offset fields of

the Gate Descriptor stored in the ith entry of the IDT. These values define the

logical address of the first instruction of the interrupt or exception handler. That is, cs and eip now hold the logical address of the next instruction to execute, which is the handler's entry point.
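The privilege checks in steps 4 and 5 reduce to a few integer comparisons. The sketch below models them in plain C; it is an illustration only, the `model_*` function names are hypothetical, and the selector values used in the usage note are just examples of selectors whose two low bits are 3 and 0.

```c
#include <stdbool.h>
#include <stdint.h>

/* The CPL is stored in the two least significant bits of cs. */
static unsigned model_cpl(uint16_t cs)
{
    return cs & 3u;
}

/*
 * Step 4: returns false where the control unit would raise a
 * "General protection" exception instead of delivering the event.
 */
static bool model_delivery_allowed(uint16_t cs, unsigned seg_dpl,
                                   unsigned gate_dpl, bool programmed)
{
    unsigned cpl = model_cpl(cs);

    if (cpl < seg_dpl)                  /* handler less privileged than caller */
        return false;
    if (programmed && gate_dpl < cpl)   /* gate not open to this caller */
        return false;
    return true;
}

/* Step 5: a stack switch is needed when the privilege level changes. */
static bool model_needs_stack_switch(uint16_t cs, unsigned seg_dpl)
{
    return model_cpl(cs) != seg_dpl;
}
```

For a caller whose cs ends in binary 11 (CPL 3), a programmed exception through a gate with DPL 3 is delivered, one through a gate with DPL 0 is refused, and a hardware interrupt is delivered regardless of the gate DPL; in both delivered cases the control unit has to switch to the stack named in the TSS.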

vector=0

ENTRY(irq_entries_start)

.rept NR_IRQS

ALIGN

1: pushl $vector-256

jmp common_interrupt

.data

.long 1b

.text

vector=vector+1

.endr

common_interrupt:

addl $-0x80,(%esp) /* Adjust vector into the [-256,-1] range */

SAVE_ALL

TRACE_IRQS_OFF

movl %esp,%eax

call do_IRQ

jmp ret_from_intr

/*
 * Stack layout in 'syscall_exit':
 * ptrace needs to have all regs on the stack.
 * if the order here is changed, it needs to be
 * updated in fork.c:copy_process, signal.c:do_signal,
 * ptrace.c and ptrace.h
 *
 *  0(%esp) - %ebx
 *  4(%esp) - %ecx
 *  8(%esp) - %edx
 *  C(%esp) - %esi
 * 10(%esp) - %edi
 * 14(%esp) - %ebp
 * 18(%esp) - %eax
 * 1C(%esp) - %ds
 * 20(%esp) - %es
 * 24(%esp) - %fs
 * 28(%esp) - %gs saved iff !CONFIG_X86_32_LAZY_GS
 * 2C(%esp) - orig_eax
 * 30(%esp) - %eip
 * 34(%esp) - %cs
 * 38(%esp) - %eflags
 * 3C(%esp) - %oldesp
 * 40(%esp) - %oldss
 */

#define SAVE_ALL \

cld; \

pushl %es; \

pushl %ds; \

pushl %eax; \

pushl %ebp; \

pushl %edi; \

pushl %esi; \

pushl %edx; \

pushl %ecx; \

pushl %ebx; \

movl $(__USER_DS), %edx; \

movl %edx, %ds; \

movl %edx, %es;

SAVE_ALL saves on the stack every CPU register that the interrupt handler may use, except eflags, cs, eip, ss, and esp, which the control unit has already saved automatically.
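One way to check the frame that results is to write it down as a C structure and look at the field offsets. The sketch below follows the SAVE_ALL macro exactly as listed above, which pushes no %fs or %gs, so from orig_eax upward its offsets are 8 bytes lower than in the layout comment, which does reserve %fs and %gs slots. The `model_pt_regs` name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Lowest address first: the last register pushed ends up at 0(%esp). */
struct model_pt_regs {
    uint32_t ebx, ecx, edx, esi, edi, ebp, eax;  /* pushed by SAVE_ALL */
    uint32_t ds, es;                             /* pushed by SAVE_ALL */
    uint32_t orig_eax;                           /* pushed by the IRQ entry stub */
    uint32_t eip, cs, eflags;                    /* pushed by the control unit */
    uint32_t esp, ss;                            /* pushed only on a privilege change */
};

/* After SAVE_ALL, the stack pointer itself is the address of the frame. */
static struct model_pt_regs *model_regs_from_esp(void *esp)
{
    return (struct model_pt_regs *)esp;
}
```

With this layout `offsetof(struct model_pt_regs, eax)` is 0x18 and `offsetof(struct model_pt_regs, orig_eax)` is 0x24, which is the slot the entry stub filled before jumping to common_interrupt.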

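After the registers are saved, the address of the current top of the stack is stored in eax and do_IRQ() is called. When do_IRQ() executes its ret instruction, control transfers to ret_from_intr.

The do_IRQ( ) function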

1. Executes the irq_enter() macro, which increases a counter representing the number of nested interrupt handlers. The counter is stored in the preempt_count field of the thread_info structure of the current process.

2. If the size of the thread_union structure is 4 KB, it switches to the hard IRQ stack. In particular, the function performs the following substeps:

a. Executes the current_thread_info() function to get the address of the thread_info descriptor associated with the Kernel Mode stack addressed by the esp register (see the section “Identifying a Process” in Chapter 3).

b. Compares the address of the thread_info descriptor obtained in the previous step with the address stored in hardirq_ctx[smp_processor_id()], that is, the address of the thread_info descriptor associated with the local CPU. If the two addresses are equal, the kernel is already using the hard IRQ stack, thus jumps to step 3. This happens when an IRQ is raised while the kernel is still handling another interrupt.

c. Here the Kernel Mode stack has to be switched. Stores the pointer to the current process descriptor in the task field of the thread_info descriptor in the irq_ctx union of the local CPU. This is done so that the current macro works as expected while the kernel is using the hard IRQ stack.

d. Stores the current value of the esp stack pointer register in the previous_esp field of the thread_info descriptor in the irq_ctx union of the local CPU (this field is used only when preparing the function call trace for a kernel oops).

e. Loads in the esp stack register the top location of the hard IRQ stack of the local CPU (the value in hardirq_ctx[smp_processor_id()] plus 4096); the previous value of the esp register is saved in the ebx register.

3. Invokes the __do_IRQ() function passing to it the pointer regs and the IRQ number obtained from the regs->orig_eax field.

4. If the hard IRQ stack has been effectively switched in step 2e above, the function copies the original stack pointer from the ebx register into the esp register, thus switching back to the exception stack or soft IRQ stack that were in use before.

5. Executes the irq_exit() macro, which decreases the interrupt counter and checks whether deferrable kernel functions are waiting to be executed.

6. Terminates: the control is transferred to the ret_from_intr() function.
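Step 3 relies on how the entry stub encoded the interrupt in the orig_eax slot. The value is always negative, so it can never be mistaken for a system call number, which is non-negative. The sketch below shows the arithmetic for the two decoding expressions that appear in the listings in these notes (`regs->orig_eax & 0xff` in 2.6.11 and `~regs->orig_ax` in 2.6.38); the `model_*` helper names are hypothetical.

```c
#include <stdint.h>

/* 2.6.11 style: the stub pushes (irq - 256); do_IRQ() keeps the low byte. */
static int32_t model_encode_2_6_11(unsigned irq)
{
    return (int32_t)irq - 256;
}

static unsigned model_decode_2_6_11(int32_t orig_eax)
{
    return (uint32_t)orig_eax & 0xffu;
}

/* 2.6.38 style: orig_ax holds ~vector; do_IRQ() complements it again. */
static int32_t model_encode_2_6_38(unsigned vector)
{
    return -(int32_t)vector - 1;        /* same bit pattern as ~vector */
}

static unsigned model_decode_2_6_38(int32_t orig_ax)
{
    return ~(uint32_t)orig_ax;
}
```

For example, IRQ 14 is stored as -242 in the 2.6.11 scheme and masking the low byte gives 14 back; vector 0x31 is stored as -50 in the 2.6.38 scheme and complementing it gives 0x31 back.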

/*

* do_IRQ handles all normal device IRQ's (the special

* SMP cross-CPU interrupts have their own specific

* handlers).

*/

unsigned int __irq_entry do_IRQ(struct pt_regs *regs)

{

struct pt_regs *old_regs = set_irq_regs(regs);

/* high bit used in ret_from_ code */

unsigned vector = ~regs->orig_ax;

unsigned irq;

exit_idle();

irq_enter();

irq = __this_cpu_read(vector_irq[vector]);

if (!handle_irq(irq, regs)) {

ack_APIC_irq();

if (printk_ratelimit())

pr_emerg("%s: %d.%d No irq handler for vector (irq %d)\n",

__func__, smp_processor_id(), vector, irq);

}

irq_exit();

set_irq_regs(old_regs);

return 1;

}

/*

* Enter an interrupt context.

*/

void irq_enter(void)

{

int cpu = smp_processor_id();

rcu_irq_enter();

if (idle_cpu(cpu) && !in_interrupt()) {

/*

* Prevent raise_softirq from needlessly waking up ksoftirqd

* here, as softirq will be serviced on return from interrupt.

*/

local_bh_disable();

tick_check_idle(cpu);

_local_bh_enable();

}

__irq_enter();

}

/*

* It is safe to do non-atomic ops on ->hardirq_context,

* because NMI handlers may not preempt and the ops are

* always balanced, so the interrupted value of ->hardirq_context

* will always be restored.

*/

#define __irq_enter() \

do { \

account_system_vtime(current); \

add_preempt_count(HARDIRQ_OFFSET); \

trace_hardirq_enter(); \

} while (0)
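The nesting counter that irq_enter() and irq_exit() maintain is a bit field inside preempt_count, and in_interrupt() simply tests whether the hardirq or softirq fields are non-zero. The following user-space model illustrates this; the field positions and widths (softirq count at bit 8, hardirq count at bit 16, 10 bits wide) are assumptions made for the sketch, and all `model_*` names are hypothetical.

```c
#include <stdbool.h>

#define MODEL_SOFTIRQ_SHIFT   8
#define MODEL_HARDIRQ_SHIFT   16
#define MODEL_SOFTIRQ_OFFSET  (1u << MODEL_SOFTIRQ_SHIFT)
#define MODEL_HARDIRQ_OFFSET  (1u << MODEL_HARDIRQ_SHIFT)
#define MODEL_SOFTIRQ_MASK    (0xffu  << MODEL_SOFTIRQ_SHIFT)
#define MODEL_HARDIRQ_MASK    (0x3ffu << MODEL_HARDIRQ_SHIFT)

static unsigned model_preempt_count;     /* per-thread in the kernel */

static void model_irq_enter(void)  { model_preempt_count += MODEL_HARDIRQ_OFFSET; }
static void model_irq_exit(void)   { model_preempt_count -= MODEL_HARDIRQ_OFFSET; }
static void model_bh_disable(void) { model_preempt_count += MODEL_SOFTIRQ_OFFSET; }
static void model_bh_enable(void)  { model_preempt_count -= MODEL_SOFTIRQ_OFFSET; }

/* Number of hard interrupt handlers currently nested. */
static unsigned model_hardirq_depth(void)
{
    return (model_preempt_count & MODEL_HARDIRQ_MASK) >> MODEL_HARDIRQ_SHIFT;
}

/* True while handling a hard IRQ or while softirqs are running or disabled. */
static bool model_in_interrupt(void)
{
    return (model_preempt_count &
            (MODEL_HARDIRQ_MASK | MODEL_SOFTIRQ_MASK)) != 0;
}
```

This is why a softirq is only run from irq_exit() when the outermost interrupt handler returns: for a nested interrupt the hardirq field is still non-zero after the decrement, so the in_interrupt() test fails.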

bool handle_irq(unsigned irq, struct pt_regs *regs)

{

struct irq_desc *desc;

int overflow;

overflow = check_stack_overflow();

desc = irq_to_desc(irq); /* #define irq_to_desc(irq) (&irq_desc[irq]) */

if (unlikely(!desc))

return false;

if (!execute_on_irq_stack(overflow, desc, irq)) {

if (unlikely(overflow))

print_stack_overflow();

desc->handle_irq(irq, desc); /* the flow handler (e.g. handle_level_irq) ends up in handle_IRQ_event(), which calls the registered handlers */

}

return true;

}

static inline int

execute_on_irq_stack(int overflow, struct irq_desc *desc, int irq)

{

union irq_ctx *curctx, *irqctx;

u32 *isp, arg1, arg2;

curctx = (union irq_ctx *) current_thread_info();

irqctx = __this_cpu_read(hardirq_ctx);

/*

* this is where we switch to the IRQ stack. However, if we are

* already using the IRQ stack (because we interrupted a hardirq

* handler) we can't do that and just have to keep using the

* current stack (which is the irq stack already after all)

*/

if (unlikely(curctx == irqctx))

return 0;

/* build the stack frame on the IRQ stack */

isp = (u32 *) ((char *)irqctx + sizeof(*irqctx));

irqctx->tinfo.task = curctx->tinfo.task;

irqctx->tinfo.previous_esp = current_stack_pointer;

/*

* Copy the softirq bits in preempt_count so that the

* softirq checks work in the hardirq context.

*/

irqctx->tinfo.preempt_count =

(irqctx->tinfo.preempt_count & ~SOFTIRQ_MASK) |

(curctx->tinfo.preempt_count & SOFTIRQ_MASK);

if (unlikely(overflow))

call_on_stack(print_stack_overflow, isp);

asm volatile("xchgl %%ebx,%%esp \n"

"call *%%edi \n"

"movl %%ebx,%%esp \n"

: "=a" (arg1), "=d" (arg2), "=b" (isp)

: "0" (irq), "1" (desc), "2" (isp),

"D" (desc->handle_irq)

: "memory", "cc", "ecx");

return 1;

}

/*

* Exit an interrupt context. Process softirqs if needed and possible:

*/

void irq_exit(void)

{

account_system_vtime(current);

trace_hardirq_exit();

sub_preempt_count(IRQ_EXIT_OFFSET);

if (!in_interrupt() && local_softirq_pending())

invoke_softirq();//# define invoke_softirq() do_softirq()

rcu_irq_exit();

#ifdef CONFIG_NO_HZ

/* Make sure that timer wheel updates are propagated */

if (idle_cpu(smp_processor_id()) && !in_interrupt() && !need_resched())

tick_nohz_stop_sched_tick(0);

#endif

preempt_enable_no_resched();

}

asmlinkage void do_softirq(void)

{

__u32 pending;

unsigned long flags;

if (in_interrupt())

return;

local_irq_save(flags);

pending = local_softirq_pending();

/* Switch to interrupt stack */

if (pending) {

call_softirq();

WARN_ON_ONCE(softirq_count());

}

local_irq_restore(flags);

}

#define preempt_enable_no_resched() \

do { \

barrier(); \

dec_preempt_count(); \

} while (0)

The code above is from Linux 2.6.38. Its hardware interrupt initialization looks like this:

void __init init_ISA_irqs(void)

{

struct irq_chip *chip = legacy_pic->chip;

const char *name = chip->name;

int i;

#if defined(CONFIG_X86_64) || defined(CONFIG_X86_LOCAL_APIC)

init_bsp_APIC();

#endif

legacy_pic->init(0);

for (i = 0; i < legacy_pic->nr_legacy_irqs; i++)

set_irq_chip_and_handler_name(i, chip, handle_level_irq, name); /* important: installs handle_level_irq as the flow handler */

}

void __init init_IRQ(void)

{

int i;

/*

* On cpu 0, Assign IRQ0_VECTOR..IRQ15_VECTOR's to IRQ 0..15.

* If these IRQ's are handled by legacy interrupt-controllers like PIC,

* then this configuration will likely be static after the boot. If

* these IRQ's are handled by more mordern controllers like IO-APIC,

* then this vector space can be freed and re-used dynamically as the

* irq's migrate etc.

*/

for (i = 0; i < legacy_pic->nr_legacy_irqs; i++)

per_cpu(vector_irq, 0)[IRQ0_VECTOR + i] = i;

x86_init.irqs.intr_init();

}

/**

* handle_level_irq - Level type irq handler

* @irq: the interrupt number

* @desc: the interrupt description structure for this irq

*

* Level type interrupts are active as long as the hardware line has

* the active level. This may require to mask the interrupt and unmask

* it after the associated handler has acknowledged the device, so the

* interrupt line is back to inactive.

*/

void

handle_level_irq(unsigned int irq, struct irq_desc *desc)

{

struct irqaction *action;

irqreturn_t action_ret;

raw_spin_lock(&desc->lock);

mask_ack_irq(desc);

if (unlikely(desc->status & IRQ_INPROGRESS))

goto out_unlock;

desc->status &= ~(IRQ_REPLAY | IRQ_WAITING);

kstat_incr_irqs_this_cpu(irq, desc);

/*

* If its disabled or no action available

* keep it masked and get out of here

*/

action = desc->action;

if (unlikely(!action || (desc->status & IRQ_DISABLED)))

goto out_unlock;

desc->status |= IRQ_INPROGRESS;

raw_spin_unlock(&desc->lock);

action_ret = handle_IRQ_event(irq, action); /* call handle_IRQ_event() */

if (!noirqdebug)

note_interrupt(irq, desc, action_ret);

raw_spin_lock(&desc->lock);

desc->status &= ~IRQ_INPROGRESS;

if (!(desc->status & (IRQ_DISABLED | IRQ_ONESHOT)))

unmask_irq(desc);

out_unlock:

raw_spin_unlock(&desc->lock);

}

In Linux 2.6.11, by contrast:

fastcall unsigned int do_IRQ(struct pt_regs *regs)

{

/* high bits used in ret_from_ code */

int irq = regs->orig_eax & 0xff;

#ifdef CONFIG_4KSTACKS

union irq_ctx *curctx, *irqctx;

u32 *isp;

#endif

irq_enter();

#ifdef CONFIG_DEBUG_STACKOVERFLOW

/* Debugging check for stack overflow: is there less than 1KB free? */

{

long esp;

__asm__ __volatile__("andl %%esp,%0" :

"=r" (esp) : "0" (THREAD_SIZE - 1));

if (unlikely(esp < (sizeof(struct thread_info) + STACK_WARN))) {

printk("do_IRQ: stack overflow: %ld\n",

esp - sizeof(struct thread_info));

dump_stack();

}

}

#endif

#ifdef CONFIG_4KSTACKS

curctx = (union irq_ctx *) current_thread_info();

irqctx = hardirq_ctx[smp_processor_id()];

/*

* this is where we switch to the IRQ stack. However, if we are

* already using the IRQ stack (because we interrupted a hardirq

* handler) we can't do that and just have to keep using the

* current stack (which is the irq stack already after all)

*/

if (curctx != irqctx) {

int arg1, arg2, ebx;

/* build the stack frame on the IRQ stack */

isp = (u32*) ((char*)irqctx + sizeof(*irqctx));

irqctx->tinfo.task = curctx->tinfo.task;

irqctx->tinfo.previous_esp = current_stack_pointer;

asm volatile(

" xchgl %%ebx,%%esp \n"

" call __do_IRQ \n"

" movl %%ebx,%%esp \n"

: "=a" (arg1), "=d" (arg2), "=b" (ebx)

: "0" (irq), "1" (regs), "2" (isp)

: "memory", "cc", "ecx"

);

} else

#endif

__do_IRQ(irq, regs);

irq_exit();

return 1;

}

/*

* do_IRQ handles all normal device IRQ's (the special

* SMP cross-CPU interrupts have their own specific

* handlers).

*/

fastcall unsigned int __do_IRQ(unsigned int irq, struct pt_regs *regs)

{

irq_desc_t *desc = irq_desc + irq;

struct irqaction * action;

unsigned int status;

kstat_this_cpu.irqs[irq]++;

if (desc->status & IRQ_PER_CPU) {

irqreturn_t action_ret;

/*

* No locking required for CPU-local interrupts:

*/

desc->handler->ack(irq);

action_ret = handle_IRQ_event(irq, regs, desc->action);

if (!noirqdebug)

note_interrupt(irq, desc, action_ret);

desc->handler->end(irq);

return 1;

}

spin_lock(&desc->lock);

desc->handler->ack(irq);

/*

* REPLAY is when Linux resends an IRQ that was dropped earlier

* WAITING is used by probe to mark irqs that are being tested

*/

status = desc->status & ~(IRQ_REPLAY | IRQ_WAITING);

status |= IRQ_PENDING; /* we _want_ to handle it */

/*

* If the IRQ is disabled for whatever reason, we cannot

* use the action we have.

*/

action = NULL;

if (likely(!(status & (IRQ_DISABLED | IRQ_INPROGRESS)))) {

action = desc->action;

status &= ~IRQ_PENDING; /* we commit to handling */

status |= IRQ_INPROGRESS; /* we are handling it */

}

desc->status = status;

/*

* If there is no IRQ handler or it was disabled, exit early.

* Since we set PENDING, if another processor is handling

* a different instance of this same irq, the other processor

* will take care of it.

*/

if (unlikely(!action))

goto out;

/*

* Edge triggered interrupts need to remember

* pending events.

* This applies to any hw interrupts that allow a second

* instance of the same irq to arrive while we are in do_IRQ

* or in the handler. But the code here only handles the _second_

* instance of the irq, not the third or fourth. So it is mostly

* useful for irq hardware that does not mask cleanly in an

* SMP environment.

*/

for (;;) {

irqreturn_t action_ret;

spin_unlock(&desc->lock);

action_ret = handle_IRQ_event(irq, regs, action);

spin_lock(&desc->lock);

if (!noirqdebug)

note_interrupt(irq, desc, action_ret);

if (likely(!(desc->status & IRQ_PENDING)))

break;

desc->status &= ~IRQ_PENDING;

}

desc->status &= ~IRQ_INPROGRESS;

out:

/*

* The ->end() handler has to deal with interrupts which got

* disabled while the handler was running.

*/

desc->handler->end(irq);

spin_unlock(&desc->lock);

return 1;

}
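The interplay of IRQ_PENDING and IRQ_INPROGRESS in __do_IRQ() is easier to follow in a stripped-down model. The sketch below is single-threaded and leaves out locking, ack() and end(); a second instance of the same IRQ is simulated by the handler re-entering the dispatcher. The `model_*` names and flag values are hypothetical.

```c
#define MODEL_INPROGRESS 0x1u
#define MODEL_DISABLED   0x2u
#define MODEL_PENDING    0x4u

struct model_desc {
    unsigned status;
    int runs;          /* how many times the handler ran */
    int reraise;       /* how many times the handler simulates a new instance */
};

static void model_do_irq(struct model_desc *d);

static void model_handler(struct model_desc *d)
{
    d->runs++;
    if (d->reraise > 0) {
        d->reraise--;
        model_do_irq(d);   /* same IRQ arrives again, e.g. on another CPU */
    }
}

static void model_do_irq(struct model_desc *d)
{
    d->status |= MODEL_PENDING;                 /* we want to handle it */

    /* Disabled, or someone else is already handling it: leave it pending. */
    if (d->status & (MODEL_DISABLED | MODEL_INPROGRESS))
        return;

    d->status &= ~MODEL_PENDING;                /* we commit to handling */
    d->status |= MODEL_INPROGRESS;

    for (;;) {
        model_handler(d);
        if (!(d->status & MODEL_PENDING))
            break;                              /* nothing arrived meanwhile */
        d->status &= ~MODEL_PENDING;            /* handle the remembered one */
    }
    d->status &= ~MODEL_INPROGRESS;
}
```

When the IRQ arrives once more while its handler is running, the inner call only sets the pending bit and returns, and the outer loop then runs the handler a second time. A disabled IRQ is merely recorded as pending and no handler runs.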

/*

* Have got an event to handle:

*/

fastcall int handle_IRQ_event(unsigned int irq, struct pt_regs *regs,

struct irqaction *action)

{

int ret, retval = 0, status = 0;

if (!(action->flags & SA_INTERRUPT))

local_irq_enable();

do {

ret = action->handler(irq, action->dev_id, regs);

if (ret == IRQ_HANDLED)

status |= action->flags;

retval |= ret;

action = action->next;

} while (action);

if (status & SA_SAMPLE_RANDOM)

add_interrupt_randomness(irq);

local_irq_disable();

return retval;

}

Device drivers register their interrupt service routines through request_irq().

Softirqs:

The open_softirq() function takes care of softirq initialization. It uses three parameters: the softirq index, a pointer to the softirq function to be executed, and a second

pointer to a data structure that may be required by the softirq function. open_softirq() limits itself to initializing the proper entry of the softirq_vec array. (The 2.6.38 version listed later takes only two parameters: the index and the function pointer.)

Softirqs are activated by means of the raise_softirq() function. This function,

which receives as its parameter the softirq index nr, performs the following actions:

1. Executes the local_irq_save macro to save the state of the IF flag of the eflags

register and to disable interrupts on the local CPU.

2. Marks the softirq as pending by setting the bit corresponding to the index nr in

the softirq bit mask of the local CPU.

3. If in_interrupt() yields the value 1, it jumps to step 5. This situation indicates

either that raise_softirq() has been invoked in interrupt context, or that the

softirqs are currently disabled.

This is the Title of the Book, eMatter Edition

Copyright © 2007 O’Reilly & Associates, Inc. All rights reserved.

Softirqs and Tasklets | 175

4. Otherwise, invokes wakeup_softirqd() to wake up, if necessary, the ksoftirqd

kernel thread of the local CPU (see later).

5. Executes the local_irq_restore macro to restore the state of the IF flag saved in

step 1.

Checks for active (pending) softirqs should be performed periodically, but without

inducing too much overhead. They are performed in a few points of the kernel code.

Here is a list of the most significant points (be warned that number and position of

the softirq checkpoints change both with the kernel version and with the supported

hardware architecture):

The do_softirq( ) function

If pending softirqs are detected at one such checkpoint (local_softirq_pending() is

not zero), the kernel invokes do_softirq() to take care of them. This function performs the following actions:

1. If in_interrupt() yields the value one, this function returns. This situation indicates either that do_softirq() has been invoked in interrupt context or that the

softirqs are currently disabled.

2. Executes local_irq_save to save the state of the IF flag and to disable the interrupts on the local CPU.

3. If the size of the thread_union structure is 4 KB, it switches to the soft IRQ stack,

if necessary. This step is very similar to step 2 of do_IRQ() in the earlier section

“I/O Interrupt Handling;” of course, the softirq_ctx array is used instead of

hardirq_ctx.

4. Invokes the __do_softirq() function.

5. If the soft IRQ stack has been effectively switched in step 3 above, it restores the

original stack pointer into the esp register, thus switching back to the exception

stack that was in use before.

6. Executes local_irq_restore to restore the state of the IF flag (local interrupts

enabled or disabled) saved in step 2 and returns.

The __do_softirq() function reads the softirq bit mask of the local CPU and executes the deferrable functions corresponding to every set bit. While executing a softirq function, new pending softirqs might pop up; in order to ensure a low latency

time for the deferrable functions, __do_softirq() keeps running until all pending softirqs have been executed. This mechanism, however, could force __do_softirq() to

run for long periods of time, thus considerably delaying User Mode processes. For

that reason, __do_softirq() performs a fixed number of iterations and then returns.

The remaining pending softirqs, if any, will be handled in due time by the ksoftirqd

kernel thread described in the next section. Here is a short description of the actions

performed by the function:

1. Initializes the iteration counter to 10.

2. Copies the softirq bit mask of the local CPU (selected by local_softirq_pending()) in the pending local variable.

3. Invokes local_bh_disable() to increase the softirq counter. It is somewhat counterintuitive that deferrable functions should be disabled before starting to execute them, but it really makes a lot of sense. Because the deferrable functions

mostly run with interrupts enabled, an interrupt can be raised in the middle of

the __do_softirq() function. When do_IRQ() executes the irq_exit() macro,

another instance of the __do_softirq() function could be started. This has to be

avoided, because deferrable functions must execute serially on the CPU. Thus,

the first instance of __do_softirq() disables deferrable functions, so that every

new instance of the function will exit at step 1 of do_softirq().

4. Clears the softirq bitmap of the local CPU, so that new softirqs can be activated

(the value of the bit mask has already been saved in the pending local variable in

step 2).

5. Executes local_irq_enable() to enable local interrupts.

6. For each bit set in the pending local variable, it executes the corresponding softirq function; recall that the function address for the softirq with index n is stored

in softirq_vec[n].action.

7. Executes local_irq_disable() to disable local interrupts.

8. Copies the softirq bit mask of the local CPU into the pending local variable and

decreases the iteration counter one more time.

9. If pending is not zero—at least one softirq has been activated since the start of

the last iteration—and the iteration counter is still positive, it jumps back to

step 4.

10. If there are more pending softirqs, it invokes wakeup_softirqd() to wake up the

kernel thread that takes care of the softirqs for the local CPU (see next section).

11. Subtracts 1 from the softirq counter, thus reenabling the deferrable functions.
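The bounded restart loop in the steps above can be sketched in user space as follows. This is a simplified, single-CPU model and not the kernel's implementation: interrupt masking and the softirq counter are omitted, waking ksoftirqd is reduced to a counter, and every `model_*` name is hypothetical.

```c
#include <stdint.h>

#define MODEL_NR_SOFTIRQS  10
#define MODEL_MAX_RESTART  10            /* mirrors the iteration limit of 10 */

static uint32_t model_pending;                       /* per-CPU bit mask in the kernel */
static void (*model_vec[MODEL_NR_SOFTIRQS])(void);   /* stands in for softirq_vec[] */
static int model_runs[MODEL_NR_SOFTIRQS];
static int model_wakeups;                            /* times ksoftirqd would be woken */

static void model_open_softirq(unsigned nr, void (*action)(void))
{
    if (nr < MODEL_NR_SOFTIRQS)
        model_vec[nr] = action;
}

static void model_raise_softirq(unsigned nr)
{
    if (nr < MODEL_NR_SOFTIRQS)
        model_pending |= 1u << nr;       /* mark softirq nr as pending */
}

static void model_do_softirq(void)
{
    int max_restart = MODEL_MAX_RESTART;
    uint32_t pending = model_pending;

    while (pending) {
        model_pending = 0;               /* let new softirqs be raised */
        for (unsigned nr = 0; pending; nr++, pending >>= 1)
            if ((pending & 1u) && model_vec[nr])
                model_vec[nr]();
        pending = model_pending;         /* anything raised meanwhile? */
        if (pending && --max_restart == 0)
            break;                       /* iteration budget used up */
    }
    if (pending)
        model_wakeups++;                 /* hand the rest to ksoftirqd */
}

/* Sample actions: one runs once, one keeps re-raising itself. */
static void model_action_once(void)   { model_runs[0]++; }
static void model_action_greedy(void) { model_runs[1]++; model_raise_softirq(1); }
```

A softirq that re-raises itself on every run is executed ten times by one call to `model_do_softirq()`; after that the loop gives up, leaves the bit pending, and records one wakeup, which corresponds to deferring the remaining work to the ksoftirqd thread.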

The ksoftirqd kernel threads

In recent kernel versions, each CPU has its own ksoftirqd/n kernel thread (where n is

the logical number of the CPU). Each ksoftirqd/n kernel thread runs the ksoftirqd()

function, which essentially executes the following loop:

for(;;) {

set_current_state(TASK_INTERRUPTIBLE);

schedule();

/* now in TASK_RUNNING state */

while (local_softirq_pending()) {

preempt_disable();

do_softirq();

preempt_enable();

cond_resched();

}

}

void raise_softirq(unsigned int nr)

{

unsigned long flags;

local_irq_save(flags);

raise_softirq_irqoff(nr);

local_irq_restore(flags);

}

void open_softirq(int nr, void (*action)(struct softirq_action *))

{

softirq_vec[nr].action = action;

}

/*

* This function must run with irqs disabled!

*/

inline void raise_softirq_irqoff(unsigned int nr)

{

__raise_softirq_irqoff(nr);

/*

* If we're in an interrupt or softirq, we're done

* (this also catches softirq-disabled code). We will

* actually run the softirq once we return from

* the irq or softirq.

*

* Otherwise we wake up ksoftirqd to make sure we

* schedule the softirq soon.

*/

if (!in_interrupt())

wakeup_softirqd();

}

static inline void __raise_softirq_irqoff(unsigned int nr)

{

trace_softirq_raise(nr);

or_softirq_pending(1UL << nr);

}

/*

* we cannot loop indefinitely here to avoid userspace starvation,

* but we also don't want to introduce a worst case 1/HZ latency

* to the pending events, so lets the scheduler to balance

* the softirq load for us.

*/

static void wakeup_softirqd(void)

{

/* Interrupts are disabled: no need to stop preemption */

struct task_struct *tsk = __this_cpu_read(ksoftirqd);

if (tsk && tsk->state != TASK_RUNNING)

wake_up_process(tsk);

}

/*

* We restart softirq processing MAX_SOFTIRQ_RESTART times,

* and we fall back to softirqd after that.

*

* This number has been established via experimentation.

* The two things to balance is latency against fairness -

* we want to handle softirqs as soon as possible, but they

* should not be able to lock up the box.

*/

#define MAX_SOFTIRQ_RESTART 10

asmlinkage void __do_softirq(void)

{

struct softirq_action *h;

__u32 pending;

int max_restart = MAX_SOFTIRQ_RESTART;

int cpu;

pending = local_softirq_pending();

account_system_vtime(current);

__local_bh_disable((unsigned long)__builtin_return_address(0),

SOFTIRQ_OFFSET);

lockdep_softirq_enter();

cpu = smp_processor_id();

restart:

/* Reset the pending bitmask before enabling irqs */

set_softirq_pending(0);

local_irq_enable();

h = softirq_vec;

do {

if (pending & 1) {

unsigned int vec_nr = h - softirq_vec;

int prev_count = preempt_count();

kstat_incr_softirqs_this_cpu(vec_nr);

trace_softirq_entry(vec_nr);

h->action(h);

trace_softirq_exit(vec_nr);

if (unlikely(prev_count != preempt_count())) {

printk(KERN_ERR "huh, entered softirq %u %s %p"

"with preempt_count %08x,"

" exited with %08x?\n", vec_nr,

softirq_to_name[vec_nr], h->action,

prev_count, preempt_count());

preempt_count() = prev_count;

}

rcu_bh_qs(cpu);

}

h++;

pending >>= 1;

} while (pending);

local_irq_disable();

pending = local_softirq_pending();

if (pending && --max_restart)

goto restart;

if (pending)

wakeup_softirqd();

lockdep_softirq_exit();

account_system_vtime(current);

__local_bh_enable(SOFTIRQ_OFFSET);

}

#ifndef __ARCH_HAS_DO_SOFTIRQ

asmlinkage void do_softirq(void)

{

__u32 pending;

unsigned long flags;

if (in_interrupt())

return;

local_irq_save(flags);

pending = local_softirq_pending();

if (pending)

__do_softirq();

local_irq_restore(flags);

}

#endif

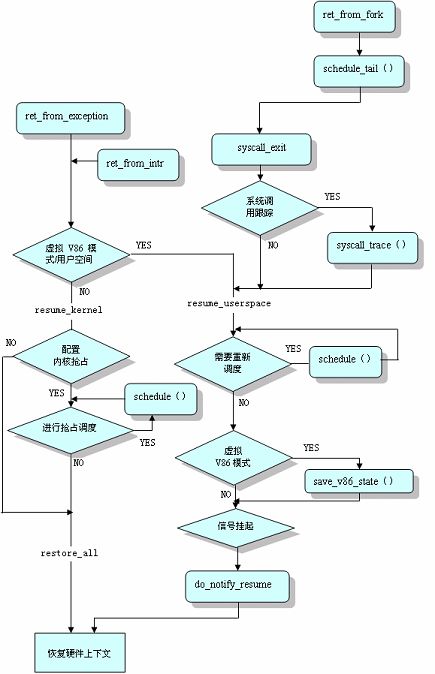

Returning from interrupts and exceptions: