Oracle 10g RAC Installation on RHEL 5.8

1. Environment Planning

1.1 Software Environment

Virtual machine: Oracle VM VirtualBox 4.3.18 r96516

Linux OS: Red Hat Enterprise Linux Server release 5.8 (Tikanga)

Oracle Clusterware software: 102010_clusterware_linux_x86_64.cpio.gz

Oracle Database software: 10201_database_linux_x86_64.cpio.gz

1.2 IP Address Plan

| HostName | Public IP | Private IP | VIP | ORACLE_SID |
|---|---|---|---|---|
| rac1 | eth1: 192.168.56.106 | 10.10.10.100 | 192.168.56.110 | rac1 |
| rac2 | eth1: 192.168.56.107 | 10.10.10.200 | 192.168.56.120 | rac2 |

Host 192.168.56.108 serves as the shared storage and the NTP server.

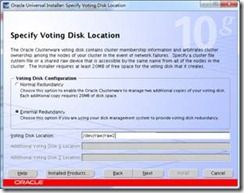

1.3 Shared Disk Plan

A 30 GB partition is used as the shared disk and is partitioned further.

Partition sizes:

200M OCR Location

200M Voting Disk

3G ASM Disk

3G ASM Disk

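2. Installation Preparation

Note: unless stated otherwise, all of the following steps are performed as root on every cluster node.

2.1 Disable iptables and SELinux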

~]#getenforce

Disabled

~]#chkconfig --list iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off
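The commands above only confirm that SELinux and iptables are already off. If either is still on, a minimal sketch for RHEL 5 (as root, on every node) follows. The helper `disable_selinux_config` is hypothetical and takes the config path as an argument so it can be tried on a copy first; `setenforce 0` only switches the running system to permissive mode, while the edited `/etc/selinux/config` takes effect at the next boot.

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file
# (default /etc/selinux/config), keeping the original as <file>.bak.
# SELINUXTYPE= lines are not touched because the pattern requires "SELINUX=".
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Typical use, as root on each node:
#   setenforce 0              # permissive until the next reboot
#   disable_selinux_config    # disabled from the next boot on
#   service iptables stop     # stop the firewall now
#   chkconfig iptables off    # and keep it off at boot
```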

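2.2 OS Version Restriction

#Oracle 10g is only certified up to RHEL 4; in production, use an OS version that Oracle officially certifies.

]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 4.8 (Tikanga)

Note that this output pairs release 4.8 with the RHEL 5 code name Tikanga: on this RHEL 5.8 host the file appears to have been edited so that the installer's OS version check (which accepts redhat-3 and redhat-4, see section 3.3) passes.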

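2.3 Operating System Resource Requirements

At least 512 MB of RAM and at least 1 GB of swap

Oracle software installation location: 1.3 GB

Database installation location: at least 1 GB

/tmp must have at least 400 MB free (ideally on a separate partition)

If all of these directories are on the root file system, it needs about 3 GB free (1.3 + 1 + 0.4 ≈ 2.7 GB).

These are bare minimums and are far from enough once data is added in production.

Files generated by Oracle itself also grow over time, so reserve enough space.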

~]#df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda3 5.8G 5.2G 321M 95% /

/dev/sda1 99M 12M 82M 13% /boot

tmpfs 502M 0 502M 0% /dev/shm

/dev/sdb1 9.9G 151M 9.2G 2% /u01

~]#grep -E 'MemTotal|SwapTotal' /proc/meminfo

MemTotal: 1026080 kB

SwapTotal: 2096472 kB
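A minimal sketch that applies the 512 MB / 1 GB minimums to `/proc/meminfo`, where values are in kB (512 × 1024 = 524288 kB, 1024 × 1024 = 1048576 kB). The function name is hypothetical; with the values shown above both checks pass.

```shell
# Hypothetical helper: compare MemTotal and SwapTotal (kB) from a
# meminfo-format file with the minimums above: 512 MB RAM, 1 GB swap.
# Returns 1 if either value is below its minimum.
check_mem_swap() {
    local file=${1:-/proc/meminfo} rc=0 mem swap
    mem=$(awk '/^MemTotal:/ {print $2}' "$file")
    swap=$(awk '/^SwapTotal:/ {print $2}' "$file")
    if [ "${mem:-0}" -ge 524288 ]; then
        echo "MemTotal OK ($mem kB)"
    else
        echo "MemTotal too small ($mem kB < 524288 kB)"; rc=1
    fi
    if [ "${swap:-0}" -ge 1048576 ]; then
        echo "SwapTotal OK ($swap kB)"
    else
        echo "SwapTotal too small ($swap kB < 1048576 kB)"; rc=1
    fi
    return $rc
}
# Usage on each node:  check_mem_swap
```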

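2.4 Package Check

for i in binutils compat-gcc-34 compat-libstdc++-296 compat-libstdc++-33 control-center \
    gcc gcc-c++ glibc glibc-common glibc-devel libaio libgcc \
    libstdc++ libstdc++-devel libXp make openmotif22 setarch
do
    rpm -q $i &>/dev/null || F="$F $i"
done; echo $F; unset F

Install every package printed by the loop above (these are the missing packages). compat-libstdc++-33 is in the list because root.sh needs libstdc++.so.5 (see problem 2 at the end). On x86_64, also make sure the 32-bit (i386) libXp is installed, because the installer's bundled JRE is 32-bit (see problem 1); rpm -q libXp also succeeds when only the x86_64 build is present.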

2.5 Configure the hosts File

~]# cat /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 yujx.terkiz yujx localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

192.168.56.106 rac1

192.168.56.110 rac1-vip

10.10.10.100 rac1-priv

192.168.56.107 rac2

192.168.56.120 rac2-vip

10.10.10.200 rac2-priv
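To catch a typo in one node's `/etc/hosts`, a small check can be run on every node. This is a sketch; `missing_hosts` is a hypothetical helper that prints each required name with no entry in the given file (comments ignored) and returns 1 if any is missing.

```shell
# Hypothetical helper: print every required host name that has no entry
# in a hosts-format file. Text after "#" is ignored.
missing_hosts() {
    local file=$1; shift
    local name rc=0
    for name in "$@"; do
        if ! awk -v n="$name" '
                { sub(/#.*/, "") }                       # drop comments
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }' "$file"; then
            echo "$name"
            rc=1
        fi
    done
    return $rc
}
# Usage on each node:
#   missing_hosts /etc/hosts rac1 rac1-vip rac1-priv rac2 rac2-vip rac2-priv
```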

2.6 Configure Kernel Parameters

~]# vim /etc/sysctl.conf

#Install Oracle 10g

kernel.shmall = 2097152

kernel.shmmax = 2147483648

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

fs.file-max = 65536

net.ipv4.ip_local_port_range = 1024 65000

net.core.rmem_default = 1048576

net.core.rmem_max = 1048576

net.core.wmem_default = 262144

net.core.wmem_max = 262144

~]# sysctl -p
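After `sysctl -p`, the running values can be compared with the file. A sketch, assuming each key is exposed under `/proc/sys` with dots turned into slashes (kernel.sem is `/proc/sys/kernel/sem`); the helper name and its optional second argument (an alternative root directory, useful for testing) are hypothetical. Whitespace is normalised, so the tab-separated kernel.sem value compares equal to the space-separated line in the file.

```shell
# Hypothetical helper: compare each "key = value" line of a sysctl.conf-style
# file with the running value under /proc/sys (or another root directory).
check_sysctl_file() {
    local conf=$1 root=${2:-/proc/sys} key want have rc=0
    while IFS='=' read -r key want || [ -n "$key" ]; do
        key=$(echo $key)                        # trim surrounding blanks
        case $key in ''|\#*) continue ;; esac   # skip blank and comment lines
        want=$(echo $want)                      # collapse blanks/tabs
        if [ -r "$root/${key//.//}" ]; then     # kernel.sem -> kernel/sem
            have=$(echo $(cat "$root/${key//.//}"))
        else
            have="(not readable)"
        fi
        if [ "$have" = "$want" ]; then
            echo "OK       $key = $have"
        else
            echo "MISMATCH $key: file '$want', running '$have'"
            rc=1
        fi
    done < "$conf"
    return $rc
}
# Usage on each node:  check_sysctl_file /etc/sysctl.conf
```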

2.7 Set Shell Limits

~]# grep '^[^#]' /etc/security/limits.conf

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536
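A sketch (hypothetical helper) that prints the limits.conf entries for one user, so the same check can be run on both nodes. Once pam_limits is enabled (section 2.8), a fresh login as oracle should report the same numbers from `ulimit -Su`, `ulimit -Hu`, `ulimit -Sn` and `ulimit -Hn` (2047, 16384, 1024 and 65536 with the file above).

```shell
# Hypothetical helper: print "type item value" for every entry of one user
# in a limits.conf-format file, ignoring comments and blank lines.
limits_for_user() {
    awk -v u="$2" '
        { sub(/#.*/, "") }
        $1 == u && NF >= 4 { print $2, $3, $4 }' "${1:-/etc/security/limits.conf}"
}
# Usage:  limits_for_user /etc/security/limits.conf oracle
```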

2.8 Configure the PAM login File

]# vim /etc/pam.d/login

session required /lib/security/pam_limits.so

session required pam_limits.so
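The two lines above load the same module; a path-less entry lets PAM pick pam_limits.so from its default module directory. A sketch of an idempotent edit that can be repeated on every node without duplicating the line (the helper name is hypothetical):

```shell
# Hypothetical helper: append "session required pam_limits.so" to a PAM
# service file (default /etc/pam.d/login) unless pam_limits is already listed,
# with or without a /lib/security or /lib64/security path.
ensure_pam_limits() {
    local file=${1:-/etc/pam.d/login}
    grep -Eq '^[[:space:]]*session[[:space:]]+required[[:space:]]+(/lib(64)?/security/)?pam_limits\.so' "$file" \
        || echo "session    required     pam_limits.so" >> "$file"
}
# Usage, as root on each node:  ensure_pam_limits
```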

2.9 Configure hangcheck-timer

#Monitors whether the Linux kernel has hung

~]# vi /etc/modprobe.conf

options hangcheck-timer hangcheck_tick=30 hangcheck_margin=180

~]# vim /etc/rc.local

modprobe hangcheck-timer

~]# /etc/rc.local

~]# lsmod | grep hangcheck_timer

hangcheck_timer 37465 0
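lsmod reads `/proc/modules`, where module names use underscores (hangcheck-timer appears as hangcheck_timer). A hypothetical helper that checks for the module without parsing lsmod's columns, with the usual load-if-missing pattern in the comment:

```shell
# Hypothetical helper: succeed if a module is listed in a /proc/modules-format
# file. Dashes in the name are converted to underscores first.
module_loaded() {
    local name=${1//-/_}
    grep -q "^$name " "${2:-/proc/modules}"
}
# Usage, as root:  module_loaded hangcheck-timer || modprobe hangcheck-timer
```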

2.10 Create Users and Groups

~]#groupadd -g 1000 oinstall

~]#groupadd -g 1001 dba

~]#groupadd -g 1002 oper

~]#groupadd -g 1003 asmadmin

~]#useradd -u 1000 -g oinstall -G dba,oper,asmadmin oracle

~]# id oracle

uid=1000(oracle) gid=1000(oinstall) groups=1000(oinstall),1001(dba),1002(oper),1003(asmadmin)

]#passwd oracle

Changing password for user oracle.

New UNIX password:

BAD PASSWORD: it is based on a dictionary word

Retype new UNIX password:

passwd: all authentication tokens updated successfully.

2.11 Create Installation Directories

~]#mkdir -p /u01/app/oracle/db_1

~]#mkdir /u01/app/oracle/asm

~]#mkdir /u01/app/oracle/crs

~]#chown -R oracle.oinstall /u01

~]#chmod -R 755 /u01/

2.12 Configure the oracle User's Profile

~]$ vim .bash_profile

export PATH

export ORACLE_BASE=/u01

export ASM_HOME=$ORACLE_BASE/app/oracle/asm

export ORA_CRS_HOME=$ORACLE_BASE/app/oracle/crs

export ORACLE_HOME=$ORACLE_BASE/app/oracle/db_1

export ORACLE_SID=rac1 #set per node: rac1 on rac1, rac2 on rac2

export PATH=$ORACLE_HOME/bin:$ORA_CRS_HOME/bin:/usr/sbin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

alias sqlplus="rlwrap sqlplus" #requires rlwrap to be installed

alias rman="rlwrap rman"

stty erase ^H

~]$ . .bash_profile

2.13 Configure SSH User Equivalence

#Run on both rac1 and rac2

su - oracle

ssh-keygen -t rsa

ssh-keygen -t dsa

cd .ssh

cat *.pub >authorized_keys

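#Copy rac1's key file to rac2 under a new name:

scp authorized_keys rac2:/home/oracle/.ssh/keys_dbs

#On rac2, append the key file received from rac1 to rac2's own key file, then copy the merged file back to rac1, overwriting the original:

cat keys_dbs >> authorized_keys

scp authorized_keys rac1:/home/oracle/.ssh/

#Test the trust relationship from every node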

]$ssh rac1 date

Thu Nov 27 17:26:31 CST 2014

]$ssh rac2 date

Thu Nov 27 17:26:34 CST 2014

]$ssh rac2-priv date

Thu Nov 27 17:26:37 CST 2014

]$ssh rac1-priv date

Thu Nov 27 17:26:39 CST 2014
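Both the installer and cluvfy need these logins to succeed without any prompt, for the public and the private names, from every node. After the first manual `ssh ... date` runs have accepted the host keys, the loop below (a sketch; the helper name is hypothetical) rechecks all names with `BatchMode=yes`, which makes ssh fail instead of waiting whenever a password or host-key prompt would appear. `SSH_CMD` is only an override hook for a dry run.

```shell
# Hypothetical helper: run "date" on each host with BatchMode=yes and report
# OK/FAIL per host. Returns 1 if any host needs a prompt or is unreachable.
check_ssh_equivalence() {
    local h rc=0
    for h in "$@"; do
        if ${SSH_CMD:-ssh} -o BatchMode=yes "$h" date >/dev/null 2>&1; then
            echo "OK   $h"
        else
            echo "FAIL $h"
            rc=1
        fi
    done
    return $rc
}
# As oracle, on rac1 and then on rac2:
#   check_ssh_equivalence rac1 rac2 rac1-priv rac2-priv
```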

2.14 Configure NTP Time Synchronization

#192.168.56.108 acts as the NTP server

~]#vim /etc/ntp.conf

server 127.127.1.0

fudge 127.127.1.0 stratum 11

driftfile /var/lib/ntp/drift

broadcastdelay 0.008

~]# /etc/init.d/ntpd restart

Shutting down ntpd: [ OK ]

Starting ntpd: [ OK ]

#Clients rac1 and rac2

~]#vim /etc/ntp.conf

server 192.168.56.108 prefer

driftfile /var/lib/ntp/drift

broadcastdelay 0.008

~]# /etc/init.d/ntpd restart

Shutting down ntpd: [ OK ]

Starting ntpd: [ OK ]

]#ntpq -p

remote refid st t when poll reach delay offset jitter

==============================================================================

*LOCAL(0) .LOCL. 10 l 63 64 17 0.000 0.000 0.001

192.168.56.108 .INIT. 16 u 62 64 0 0.000 0.000 0.000
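In the output above the client is still synchronised to its local clock (`*LOCAL(0)`), while 192.168.56.108 shows refid `.INIT.` and reach 0, which is typical right after ntpd has been restarted: ntpd needs several poll intervals (64 s here) before it selects a remote server. A minimal sketch (hypothetical helper) for rechecking which peer is selected:

```shell
# Hypothetical helper: read "ntpq -p" output on stdin and print the peer
# marked with "*" (the one ntpd has selected); return 1 if there is none.
ntp_selected_peer() {
    awk 'substr($0, 1, 1) == "*" { print substr($1, 2); found = 1 }
         END { exit !found }'
}
# Usage on rac1/rac2:  ntpq -p | ntp_selected_peer
# Once synchronisation has settled this should print 192.168.56.108.
```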

2.15 Configure Shared Storage

1) Install the packages

#Clients rac1 and rac2

~]# rpm -qa | grep scsi

iscsi-initiator-utils-6.2.0.871-0.16.el5

#Install on the server (192.168.56.108)

~]# yum -y install scsi-target-utils

~]# rpm -qa | grep scsi

iscsi-initiator-utils-6.2.0.872-13.el5

scsi-target-utils-1.0.14-2.el5

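2) Server configuration

~]# vim /etc/tgt/targets.conf

<target iqn.2014-11.com.trekiz.yujx:server.target1>
    backing-store /dev/sdb1     #backing-store: exports a partition or a file
    # direct-store /dev/sdb     # Becomes LUN 3; direct mode, must be a whole SCSI disk
    # backing-store /dev/sdc    # Becomes LUN 1
</target>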

~]# /etc/init.d/tgtd restart

Stopping SCSI target daemon: [ OK ]

Starting SCSI target daemon: Starting target framework daemon

~]# tgtadm --lld iscsi --op show --mode target #check that the configuration is active

Target 1: iqn.2014-11.com.trekiz.yujx:server.target1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 32210 MB, Block size: 512

Online: Yes

Removable media: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sdb1

Backing store flags:

Account information:

ACL information:

ALL

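3) Client configuration

Install the iscsi-initiator-utils package:

~]# rpm -qa | grep iscsi

iscsi-initiator-utils-6.2.0.871-0.16.el5

~]# ls /var/lib/iscsi/

ifaces isns nodes send_targets slp static

#Start the service

~]# /etc/init.d/iscsid start

#Discover the target; its record is cached locally under /var/lib/iscsi/nodes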

~]#iscsiadm -m discovery -t sendtargets -p 192.168.56.108:3260

192.168.56.108:3260,1 iqn.2014-11.com.trekiz.yujx:server.target1

~]#ls /var/lib/iscsi/nodes/

iqn.2014-11.com.trekiz.yujx:server.target1

#Log in to the storage

~]# /etc/init.d/iscsi start

#Check the disks; sdc is the shared disk

~]# ls /dev/sd*

sda sda1 sda2 sda3 sdb sdb1 sdc
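`/etc/init.d/iscsi start` logs in to the discovered targets that are marked for automatic startup. To log in to one target explicitly and make sure that login is automatic at boot, iscsiadm's node mode can be used. The sketch below pulls the IQNs out of the discovery output shown above; `discovered_iqns` is a hypothetical helper, and the loop in the comments uses this setup's portal.

```shell
# Hypothetical helper: print target IQNs from
# "iscsiadm -m discovery -t sendtargets" output ("portal,tpgt iqn" per line).
discovered_iqns() {
    awk 'NF >= 2 && $2 ~ /^iqn\./ { print $2 }'
}

# As root on rac1 and rac2:
#   portal=192.168.56.108:3260
#   for iqn in $(iscsiadm -m discovery -t sendtargets -p "$portal" | discovered_iqns); do
#       iscsiadm -m node -T "$iqn" -p "$portal" --login
#       iscsiadm -m node -T "$iqn" -p "$portal" --op update -n node.startup -v automatic
#   done
```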

4) Partition the shared storage

rac1:

~]# fdisk /dev/sdc

~]# partprobe /dev/sdc

~]# ls /dev/sdc*

sdc sdc1 sdc2 sdc3 sdc4 sdc5

rac2:

~]# partprobe /dev/sdc

~]# ls /dev/sdc*

sdc sdc1 sdc2 sdc3 sdc4 sdc5
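The plan in 1.3 needs four partitions, so the layout here is sdc1 (OCR), sdc2 (voting disk), sdc3 (ASM), sdc4 as the extended partition and sdc5 (ASM) as a logical partition inside it; the udev rules in 2.16 bind exactly sdc1, sdc2, sdc3 and sdc5. Because forgetting partprobe on the second node is an easy mistake, a small check (hypothetical helper; `DEV_DIR` is only a testing hook) can be run on both nodes:

```shell
# Hypothetical helper: print each expected partition device node that does
# not exist yet, e.g. "missing_partitions sdc 1 2 3 5". Returns 1 if any is missing.
missing_partitions() {
    local disk=$1 n rc=0; shift
    for n in "$@"; do
        if [ ! -e "${DEV_DIR:-/dev}/$disk$n" ]; then
            echo "$disk$n"
            rc=1
        fi
    done
    return $rc
}
# On rac1 and rac2 after partprobe:
#   missing_partitions sdc 1 2 3 5 && echo "all partitions visible"
```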

2.16 Bind the Shared Partitions to Raw Devices

~]# vim /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sdc1", RUN+="/bin/raw /dev/raw/raw1 %N"

ACTION=="add", KERNEL=="sdc2", RUN+="/bin/raw /dev/raw/raw2 %N"

ACTION=="add", KERNEL=="sdc3", RUN+="/bin/raw /dev/raw/raw3 %N"

ACTION=="add", KERNEL=="sdc5", RUN+="/bin/raw /dev/raw/raw4 %N"

KERNEL=="raw[1]", MODE="0660", GROUP="oinstall", OWNER="root"

KERNEL=="raw[2]", MODE="0660", GROUP="oinstall", OWNER="oracle"

KERNEL=="raw[3]", MODE="0660", GROUP="oinstall", OWNER="oracle"

KERNEL=="raw[4]", MODE="0660", GROUP="oinstall", OWNER="oracle"

~]#start_udev

~]#ll /dev/raw/raw*

crw-rw---- 1 root oinstall 162, 1 Nov 28 11:25 /dev/raw/raw1

crw-rw---- 1 oracle oinstall 162, 2 Nov 28 11:25 /dev/raw/raw2

crw-rw---- 1 oracle oinstall 162, 3 Nov 28 11:25 /dev/raw/raw3

crw-rw---- 1 oracle oinstall 162, 4 Nov 28 11:25 /dev/raw/raw4
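With these rules, raw1 (sdc1, OCR) is owned by root and raw2 to raw4 by oracle, all with group oinstall. A sketch (hypothetical helper) that checks `ls -l` output against that expectation on each node:

```shell
# Hypothetical helper: read "ls -l /dev/raw/raw*" output on stdin and check
# each device against "device=owner:group" arguments. Prints WRONG/MISSING
# lines and returns 1 on any problem.
check_raw_owners() {
    awk -v spec="$*" '
        BEGIN {
            n = split(spec, a, " ")
            for (i = 1; i <= n; i++) { split(a[i], kv, "="); want[kv[1]] = kv[2] }
        }
        {
            dev = $NF; sub(/.*\//, "", dev)          # /dev/raw/raw1 -> raw1
            if (dev in want) {
                seen[dev] = 1
                got = $3 ":" $4                      # owner:group columns
                if (got != want[dev]) { print "WRONG " dev ": " got " (want " want[dev] ")"; bad = 1 }
            }
        }
        END {
            for (d in want) if (!(d in seen)) { print "MISSING " d; bad = 1 }
            exit bad
        }'
}
# Usage:
#   ls -l /dev/raw/raw* | check_raw_owners raw1=root:oinstall raw2=oracle:oinstall \
#       raw3=oracle:oinstall raw4=oracle:oinstall
```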

3. Install the Clusterware Software

3.1 Unpack the Installation Archive

]# gunzip 102010_clusterware_linux_x86_64.cpio.gz

]# cpio -idmv < 102010_clusterware_linux_x86_64.cpio

3.2 Verify Cluster Installation Prerequisites

]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "rac1"

Destination Node Reachable?

------------------------------------ ------------------------

rac2 yes

rac1 yes

Result: Node reachability check passed from node "rac1".

Checking user equivalence...

Check: User equivalence for user "oracle"

Node Name Comment

------------------------------------ ------------------------

rac2 passed

rac1 passed

Result: User equivalence check passed for user "oracle".

Checking administrative privileges...

Check: Existence of user "oracle"

Node Name User Exists Comment

------------ ------------------------ ------------------------

rac2 yes passed

rac1 yes passed

Result: User existence check passed for "oracle".

Check: Existence of group "oinstall"

Node Name Status Group ID

------------ ------------------------ ------------------------

rac2 exists 1000

rac1 exists 1000

Result: Group existence check passed for "oinstall".

Check: Membership of user "oracle" in group "oinstall" [as Primary]

Node Name User Exists Group Exists User in Group Primary Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 yes yes yes yes passed

rac1 yes yes yes yes passed

Result: Membership check for user "oracle" in group "oinstall" [as Primary] passed.

Administrative privileges check passed.

Checking node connectivity...

Interface information for node "rac2"

Interface Name IP Address Subnet

------------------------------ ------------------------------ ----------------

eth0 10.10.10.200 10.10.10.0

eth1 192.168.56.107 192.168.56.0

Interface information for node "rac1"

Interface Name IP Address Subnet

------------------------------ ------------------------------ ----------------

eth0 10.10.10.100 10.10.10.0

eth1 192.168.56.106 192.168.56.0

Check: Node connectivity of subnet "10.10.10.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac2:eth0 rac1:eth0 yes

Result: Node connectivity check passed for subnet "10.10.10.0" with node(s) rac2,rac1.

Check: Node connectivity of subnet "192.168.56.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac2:eth1 rac1:eth1 yes

Result: Node connectivity check passed for subnet "192.168.56.0" with node(s) rac2,rac1.

Suitable interfaces for the private interconnect on subnet "10.10.10.0":

rac2 eth0:10.10.10.200

rac1 eth0:10.10.10.100

Suitable interfaces for the private interconnect on subnet "192.168.56.0":

rac2 eth1:192.168.56.107

rac1 eth1:192.168.56.106

ERROR:

Could not find a suitable set of interfaces for VIPs.

Result: Node connectivity check failed.

Checking system requirements for 'crs'...

No checks registered for this product.

Pre-check for cluster services setup was unsuccessful on all the nodes.
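The only failure above is the VIP check. A commonly reported cause with the 10g cluvfy is that the public network uses a private (RFC 1918) range such as 192.168.x.x, which cluvfy does not consider suitable for VIPs. In a test setup like this one the message can usually be ignored, and the VIPs are configured later with vipca (section 3.4). The hypothetical helper below only shows which addresses fall into those ranges.

```shell
# Hypothetical helper: succeed if an IPv4 address is in an RFC 1918 range
# (10/8, 172.16/12, 192.168/16); return 2 if it is not a dotted quad.
is_rfc1918() {
    local IFS=.
    set -- $1
    [ $# -eq 4 ] || return 2
    [ "$1" -eq 10 ] && return 0
    [ "$1" -eq 172 ] && [ "$2" -ge 16 ] && [ "$2" -le 31 ] && return 0
    [ "$1" -eq 192 ] && [ "$2" -eq 168 ] && return 0
    return 1
}
# Example: is_rfc1918 192.168.56.106 && echo "public IP is in a private range"
```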

3.3 Launch the Installer

]$ ./runInstaller

********************************************************************************

Please run the script rootpre.sh as root on all machines/nodes. The script can be found at the toplevel of the CD or stage-area. Once you have run the script, please type Y to proceed

Answer 'y' if root has run 'rootpre.sh' so you can proceed with Oracle Clusterware installation.

Answer 'n' to abort installation and then ask root to run 'rootpre.sh'.

********************************************************************************

Has 'rootpre.sh' been run by root? [y/n] (n)

y

Starting Oracle Universal Installer...

Checking installer requirements...

Checking operating system version: must be redhat-3, SuSE-9, redhat-4, UnitedLinux-1.0, asianux-1 or asianux-2

Passed

All installer requirements met.

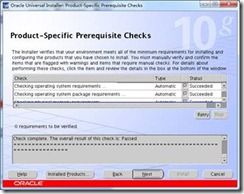

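3.4 Choices in the Graphical Installer

1. Manually change the CRS home (crs_home)

2. Manually add the remote node information

3. Change the interface type of subnet 192.168.56.0 to public

4. Specify the OCR (Oracle Cluster Registry) location

5. Specify the voting disk

6. Run the root scripts

1. Before running root.sh, edit the two scripts vipca and srvctl and unset the LD_ASSUME_KERNEL variable in both: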

]# vi +123 $ORA_CRS_HOME/bin/vipca

-------------------------------------

LD_ASSUME_KERNEL=2.4.19

export LD_ASSUME_KERNEL

fi

unset LD_ASSUME_KERNEL    #added line

-------------------------------------

]# vi + $ORA_CRS_HOME/bin/srvctl

-------------------------------------

export LD_ASSUME_KERNEL

unset LD_ASSUME_KERNEL    #added line

-------------------------------------

2. Run the root scripts. On rac1:

]# /u01/oraInventory/orainstRoot.sh

Changing permissions of /u01/oraInventory to 770.

Changing groupname of /u01/oraInventory to oinstall.

The execution of the script is complete

]# /u01/app/oracle/crs/root.sh

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Checking to see if Oracle CRS stack is already configured

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node:

node 1: rac1 rac1-priv rac1

node 2: rac2 rac2-priv rac2

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Now formatting voting device: /dev/raw/raw2

Format of 1 voting devices complete.

Startup will be queued to init within 90 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

CSS is active on these nodes.

rac1

CSS is inactive on these nodes.

rac2

Local node checking complete.

Run root.sh on remaining nodes to start CRS daemons.

rac2:

]# /u01/oraInventory/orainstRoot.sh

Changing permissions of /u01/oraInventory to 770.

Changing groupname of /u01/oraInventory to oinstall.

The execution of the script is complete

]# /u01/app/oracle/crs/root.sh

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Checking to see if Oracle CRS stack is already configured

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

clscfg: EXISTING configuration version 3 detected.

clscfg: version 3 is 10G Release 2.

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node:

node 1: rac1 rac1-priv rac1

node 2: rac2 rac2-priv rac2

clscfg: Arguments check out successfully.

NO KEYS WERE WRITTEN. Supply -force parameter to override.

-force is destructive and will destroy any previous cluster

configuration.

Oracle Cluster Registry for cluster has already been initialized

Startup will be queued to init within 90 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

CSS is active on these nodes.

rac1

rac2

CSS is active on all nodes.

Waiting for the Oracle CRSD and EVMD to start

Oracle CRS stack installed and running under init(1M)

Running vipca(silent) for configuring nodeapps

Error 0(Native: listNetInterfaces:[3])

[Error 0(Native: listNetInterfaces:[3])]

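3. Configure the network interfaces manually (from $ORA_CRS_HOME/bin, as root):

]# ./oifcfg iflist

eth0 10.10.10.0

eth1 192.168.56.0

]# ./oifcfg setif -global eth1/192.168.56.0:public

]# ./oifcfg setif -global eth0/10.10.10.0:cluster_interconnect

]# ./oifcfg getif

eth1 192.168.56.0 global public

eth0 10.10.10.0 global cluster_interconnect

4. Run vipca (as root) to configure the VIPs. Only after the last vipca screen (step 7, Finish) completes is root.sh fully done; then close the installer's dialog that asked for the scripts to be run.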
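Step 1 can be scripted so that the edit is identical on both nodes; it has to be done before root.sh runs on the last node, because that is where root.sh calls vipca in silent mode. A sketch, assuming GNU sed (its one-line `a text` form) and a hypothetical helper name: it appends `unset LD_ASSUME_KERNEL` right after each `export LD_ASSUME_KERNEL` line, keeps a `.orig` backup, and skips files that already contain an unset line.

```shell
# Hypothetical helper: add "unset LD_ASSUME_KERNEL" after each
# "export LD_ASSUME_KERNEL" line of the given scripts, once (GNU sed).
unset_ld_assume_kernel() {
    local f
    for f in "$@"; do
        if grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$f"; then
            echo "already patched: $f"
        else
            sed -i.orig '/^[[:space:]]*export LD_ASSUME_KERNEL/a unset LD_ASSUME_KERNEL' "$f"
            echo "patched: $f"
        fi
    done
}
# As root on each node:
#   unset_ld_assume_kernel /u01/app/oracle/crs/bin/vipca /u01/app/oracle/crs/bin/srvctl
```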

3.5 Check the CRS Status

]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.rac1.gsd application ONLINE ONLINE rac1

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip application ONLINE ONLINE rac1

ora.rac2.gsd application ONLINE ONLINE rac2

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip application ONLINE ONLINE rac2
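`crs_stat -t` shortens long resource names (for example `ora....C1.lsnr`); plain `crs_stat` prints the full names. For scripting, a sketch (hypothetical helper) that lists every resource whose Target or State is not ONLINE:

```shell
# Hypothetical helper: read "crs_stat -t" output on stdin and print
# "name target state host" for each resource not ONLINE/ONLINE.
# Returns 1 if any such resource is found.
crs_not_online() {
    awk '$1 ~ /^ora\./ && ($3 != "ONLINE" || $4 != "ONLINE") { print $1, $3, $4, $5; bad = 1 }
         END { exit bad }'
}
# Usage:  crs_stat -t | crs_not_online && echo "all resources ONLINE"
```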

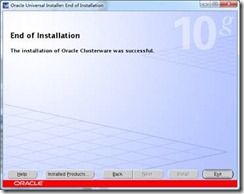

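This completes the Clusterware installation.

4. Install the Database Software

4.1 Unpack the Installation Archive

]# gunzip 10201_database_linux_x86_64.cpio.gz

]# cpio -idmv < 10201_database_linux_x86_64.cpio

4.2 Install the Oracle Software

]$ ./runInstaller

4.3 Run the root Script

#On rac1 and rac2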

]# /u01/app/oracle/db_1/root.sh

Running Oracle10 root.sh script...

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

4.4 Installation Complete

5. Configure the Listener

]$ netca

]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....C1.lsnr application ONLINE ONLINE rac1

ora.rac1.gsd application ONLINE ONLINE rac1

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip application ONLINE ONLINE rac1

ora....C2.lsnr application ONLINE ONLINE rac2

ora.rac2.gsd application ONLINE ONLINE rac2

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip application ONLINE ONLINE rac2

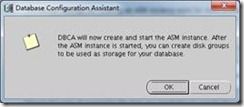

6. Create the Database

]$ dbca

7. Check the Status

~]$crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora.rac.db application ONLINE ONLINE rac2

ora....rac1.cs application ONLINE ONLINE rac1

ora....c1.inst application ONLINE ONLINE rac1

ora....ac1.srv application ONLINE ONLINE rac1

ora....ac2.srv application ONLINE ONLINE rac2

ora....rac2.cs application ONLINE ONLINE rac1

ora....c2.inst application ONLINE ONLINE rac2

ora....ac1.srv application ONLINE ONLINE rac1

ora....ac2.srv application ONLINE ONLINE rac2

ora....SM1.asm application ONLINE ONLINE rac1

ora....C1.lsnr application ONLINE ONLINE rac1

ora.rac1.gsd application ONLINE ONLINE rac1

ora.rac1.ons application ONLINE ONLINE rac1

ora.rac1.vip application ONLINE ONLINE rac1

ora....SM2.asm application ONLINE ONLINE rac2

ora....C2.lsnr application ONLINE ONLINE rac2

ora.rac2.gsd application ONLINE ONLINE rac2

ora.rac2.ons application ONLINE ONLINE rac2

ora.rac2.vip application ONLINE ONLINE rac2

8. Routine Administration

8.1 Managing CRS

#crsctl controls the CRS services (Oracle Clusterware processes) on the local node

~]# /u01/app/oracle/crs/bin/crsctl start|stop|enable|disable crs, or

~]# /etc/init.d/init.crs {stop|start|enable|disable}, or

#enable: start CRS automatically at boot; disable: do not start CRS automatically at boot

$ crs_start|crs_stop -all

~]$ crs_start -h

Usage: crs_start resource_name [...] [-c cluster_member] [-f] [-q] ["attrib=value ..."]

crs_start -all [-q]

~]$ crs_stop -h

Usage: crs_stop resource_name [...] [-f] [-q] ["attrib=value ..."]

crs_stop -c cluster_member [...] [-q] ["attrib=value ..."]

crs_stop -all [-q]

#Check the CRS service status

$ crsctl check crs

~]$crsctl

Usage: crsctl check crs - checks the viability of the CRS stack

crsctl check cssd - checks the viability of CSS

crsctl check crsd - checks the viability of CRS

crsctl check evmd - checks the viability of EVM

crsctl set css <parameter> <value> - sets a parameter override

crsctl get css <parameter> - gets the value of a CSS parameter

crsctl unset css <parameter> - sets CSS parameter to its default

crsctl query css votedisk - lists the voting disks used by CSS

crsctl add css votedisk <path> - adds a new voting disk

crsctl delete css votedisk <path> - removes a voting disk

crsctl enable crs - enables startup for all CRS daemons

crsctl disable crs - disables startup for all CRS daemons

crsctl start crs - starts all CRS daemons.

crsctl stop crs - stops all CRS daemons. Stops CRS resources in case of cluster.

crsctl start resources - starts CRS resources.

crsctl stop resources - stops CRS resources.

crsctl debug statedump evm - dumps state info for evm objects

crsctl debug statedump crs - dumps state info for crs objects

crsctl debug statedump css - dumps state info for css objects

crsctl debug log css [module:level]{,module:level} ...

- Turns on debugging for CSS

crsctl debug trace css - dumps CSS in-memory tracing cache

crsctl debug log crs [module:level]{,module:level} ...

- Turns on debugging for CRS

crsctl debug trace crs - dumps CRS in-memory tracing cache

crsctl debug log evm [module:level]{,module:level} ...

- Turns on debugging for EVM

crsctl debug trace evm - dumps EVM in-memory tracing cache

crsctl debug log res <resname:level> turns on debugging for resources

crsctl query crs softwareversion [<nodename>] - lists the version of CRS software installed

crsctl query crs activeversion - lists the CRS software operating version

crsctl lsmodules css - lists the CSS modules that can be used for debugging

crsctl lsmodules crs - lists the CRS modules that can be used for debugging

crsctl lsmodules evm - lists the EVM modules that can be used for debugging
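On 10gR2, `crsctl check crs` normally prints one "appears healthy" line each for CSS, CRS and EVM. Assuming that output format, a hypothetical wrapper can turn it into an exit status for cron or monitoring scripts:

```shell
# Hypothetical helper: read "crsctl check crs" output on stdin and succeed
# only if CSS, CRS and EVM all report "appears healthy".
crs_healthy() {
    awk '/appears healthy/ { ok[$1] = 1 }
         END { exit !(("CSS" in ok) && ("CRS" in ok) && ("EVM" in ok)) }'
}
# Usage:  crsctl check crs | crs_healthy || echo "CRS stack not healthy on $(hostname)"
```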

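8.2 SRVCTL Commands

SRVCTL controls the instances, listeners, and services of a RAC database.

SRVCTL is normally run as the oracle user.

#Start, stop, or check all instances with SRVCTL:

$ srvctl start|stop|status database -d <db_name>

Start, stop, or check a specific instance:

$ srvctl start|stop|status instance -d <db_name> -i <inst_name>

List the configuration (instances and nodes) of a database in the current RAC:

$ srvctl config database -d <db_name>

Start, stop, or check all nodeapps on a node, such as VIP, GSD, listener, ONS:

$ srvctl start|stop|status nodeapps -n <node_name>

If you use ASM, srvctl can also start and stop the ASM instance:

$ srvctl start|stop asm -n <node_name> [-i <asm_inst_name>] [-o <options>]

Display the environment settings:

$ srvctl getenv database -d <db_name> [-i <inst_name>]

Set global environment variables:

$ srvctl setenv database -d <db_name> -t LANG=en

Remove an existing database from the OCR:

$ srvctl remove database -d <db_name>

Add a database to the OCR:

$ srvctl add database -d <db_name> -o <oracle_home>

[-m <domain_name>] [-p <spfile>] [-A <name|ip>/netmask] [-r {PRIMARY | PHYSICAL_STANDBY | LOGICAL_STANDBY}] [-s <start_options>]

Add the database's instances to the OCR (one command per instance):

$ srvctl add instance -d <db_name> -i <inst_name> -n <node_name>

$ srvctl add instance -d <db_name> -i <inst_name> -n <node_name>

Add an ASM instance to the OCR:

$ srvctl add asm -n <node_name> -i <asm_inst_name> -o <oracle_home>

Add a service:

$ srvctl add service -d <db_name> -s <service_name> -r <preferred_list> [-a <available_list>] [-P <TAF_policy>] [-u]

Modify a service so that it runs on another instance:

$ srvctl modify service -d <db_name> -s <service_name> -i <old_inst_name> -t <new_inst_name>

Relocate a service from one instance to another:

$ srvctl relocate service -d <db_name> -s <service_name> -i <old_inst_name> -t <new_inst_name>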
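A sketch of a status wrapper for the two-node setup in this document. The database name `rac` is an assumption taken from the `ora.rac.db` resource in section 7, and the function name is hypothetical; `SRVCTL` is only an override hook for a dry run.

```shell
# Hypothetical wrapper: database status first, then nodeapps for each node.
# Arguments: DB_NAME NODE...
rac_status() {
    local db=$1 node; shift
    ${SRVCTL:-srvctl} status database -d "$db"
    for node in "$@"; do
        ${SRVCTL:-srvctl} status nodeapps -n "$node"
    done
}
# Usage, as oracle:  rac_status rac rac1 rac2
```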

Problems encountered:

1. The Clusterware installer fails with "libXp.so.6: cannot open shared object file: No such file or directory"

]$ ./runInstaller

********************************************************************************

Please run the script rootpre.sh as root on all machines/nodes. The script can be found at the toplevel of the CD or stage-area. Once you have run the script, please type Y to proceed

Answer 'y' if root has run 'rootpre.sh' so you can proceed with Oracle Clusterware installation.

Answer 'n' to abort installation and then ask root to run 'rootpre.sh'.

********************************************************************************

Has 'rootpre.sh' been run by root? [y/n] (n)

y

Starting Oracle Universal Installer...

Checking installer requirements...

Checking operating system version: must be redhat-3, SuSE-9, redhat-4, UnitedLinux-1.0, asianux-1 or asianux-2

Passed

All installer requirements met.

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2014-11-28_11-53-35AM. Please wait ...[oracle@rac1 clusterware]$ Oracle Universal Installer, Version 10.2.0.1.0 Production

Copyright (C) 1999, 2005, Oracle. All rights reserved.

Exception java.lang.UnsatisfiedLinkError: /tmp/OraInstall2014-11-28_11-53-35AM/jre/1.4.2/lib/i386/libawt.so: libXp.so.6: cannot open shared object file: No such file or directory occurred..

java.lang.UnsatisfiedLinkError: /tmp/OraInstall2014-11-28_11-53-35AM/jre/1.4.2/lib/i386/libawt.so: libXp.so.6: cannot open shared object file: No such file or directory

at java.lang.ClassLoader$NativeLibrary.load(Native Method)

at java.lang.ClassLoader.loadLibrary0(Unknown Source)

at java.lang.ClassLoader.loadLibrary(Unknown Source)

at java.lang.Runtime.loadLibrary0(Unknown Source)

at java.lang.System.loadLibrary(Unknown Source)

at sun.security.action.LoadLibraryAction.run(Unknown Source)

at java.security.AccessController.doPrivileged(Native Method)

at sun.awt.NativeLibLoader.loadLibraries(Unknown Source)

at sun.awt.DebugHelper.(Unknown Source)

at java.awt.Component.(Unknown Source)

at oracle.sysman.oii.oiif.oiifm.OiifmGraphicInterfaceManager.(OiifmGraphicInterfaceManager.java:222)

at oracle.sysman.oii.oiic.OiicSessionInterfaceManager.createInterfaceManager(OiicSessionInterfaceManager.java:193)

at oracle.sysman.oii.oiic.OiicSessionInterfaceManager.getInterfaceManager(OiicSessionInterfaceManager.java:202)

at oracle.sysman.oii.oiic.OiicInstaller.getInterfaceManager(OiicInstaller.java:436)

at oracle.sysman.oii.oiic.OiicInstaller.runInstaller(OiicInstaller.java:926)

at oracle.sysman.oii.oiic.OiicInstaller.main(OiicInstaller.java:866)

Exception in thread "main" java.lang.NoClassDefFoundError

at oracle.sysman.oii.oiif.oiifm.OiifmGraphicInterfaceManager.(OiifmGraphicInterfaceManager.java:222)

at oracle.sysman.oii.oiic.OiicSessionInterfaceManager.createInterfaceManager(OiicSessionInterfaceManager.java:193)

at oracle.sysman.oii.oiic.OiicSessionInterfaceManager.getInterfaceManager(OiicSessionInterfaceManager.java:202)

at oracle.sysman.oii.oiif.oiifm.OiifmAlert.(OiifmAlert.java:151)

at oracle.sysman.oii.oiic.OiicInstaller.runInstaller(OiicInstaller.java:984)

at oracle.sysman.oii.oiic.OiicInstaller.main(OiicInstaller.java:866)

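Solution: install the 32-bit (i386) libXp package; the installer's bundled JRE is 32-bit, as the lib/i386 path in the error shows.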

]# rpm -ivh libXp-1.0.0-8.1.el5.i386.rpm

warning: libXp-1.0.0-8.1.el5.i386.rpm: Header V3 DSA signature: NOKEY, key ID 37017186

Preparing... ########################################### [100%]

1:libXp ########################################### [100%]

2. Clusterware root.sh fails with "libstdc++.so.5: cannot open shared object file"

]# /u01/app/oracle/crs/root.sh

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up NS directories

Oracle Cluster Registry configuration upgraded successfully

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

/u01/app/oracle/crs/bin/crsctl.bin: error while loading shared libraries: libstdc++.so.5: cannot open shared object file: No such file or directory

Solution: install compat-libstdc++-33, which provides libstdc++.so.5:

]# rpm -ivh compat-libstdc++-33-3.2.3-61.x86_64.rpm

warning: compat-libstdc++-33-3.2.3-61.x86_64.rpm: Header V3 DSA signature: NOKEY, key ID 37017186

Preparing... ########################################### [100%]

1:compat-libstdc++-33 ########################################### [100%]

3. Clusterware root.sh fails with "Failed to upgrade Oracle Cluster Registry configuration"

]# /u01/app/oracle/crs/root.sh

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

WARNING: directory '/u01' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up NS directories

Failed to upgrade Oracle Cluster Registry configuration

Solution:

Wipe the OCR disk and the voting disk (this destroys any cluster configuration stored on them), then rerun root.sh:

]#dd if=/dev/zero of=/dev/sdc1 bs=8192 count=2000

16384000 bytes (16 MB) copied, 0.368825 seconds, 44.4 MB/s

]#dd if=/dev/zero of=/dev/sdc2 bs=8192 count=2000

2000+0 records in

From the ITPUB blog, link: http://blog.itpub.net/27000195/viewspace-1409094/. If you repost this article, please credit the source; otherwise legal liability will be pursued.
