CoreML & ARKit3

Outline

- New features in ARKit 3

- Using Core ML together with ARKit

Recognizing Objects in Live Capture

Official demo for still-image recognition

ARKit3

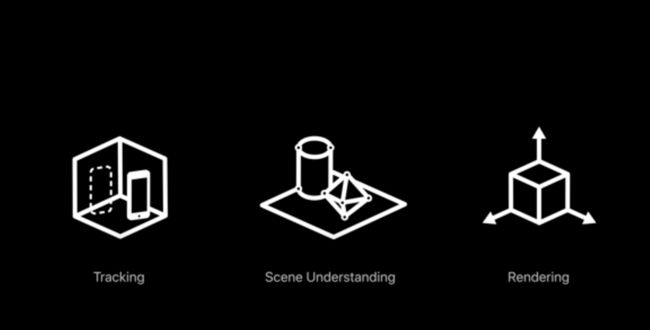

Introducing ARKit 3

ARKit is the groundbreaking augmented reality (AR) platform for iOS that can transform how people connect with the world around them. Explore the state-of-the-art capabilities of ARKit 3 and discover the innovative foundation it provides for RealityKit. Learn how ARKit makes AR even more immersive through understanding of body position and movement for motion capture and people occlusion. Check out additions for multiple face tracking, collaborative session building, a coaching UI for on-boarding, and much more.

https://developer.apple.com/videos/play/wwdc2019/604/

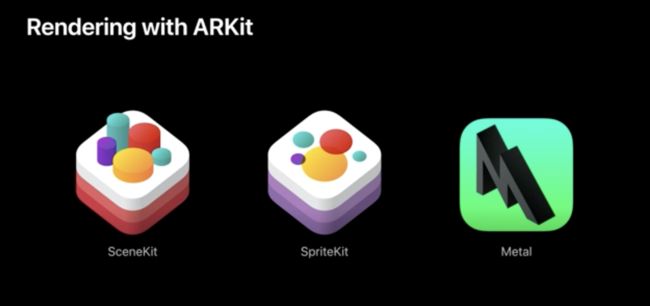

SceneKit: 3D scenes

SpriteKit: 2D games

Metal: low-level graphics processing, similar to OpenGL

New: RealityKit

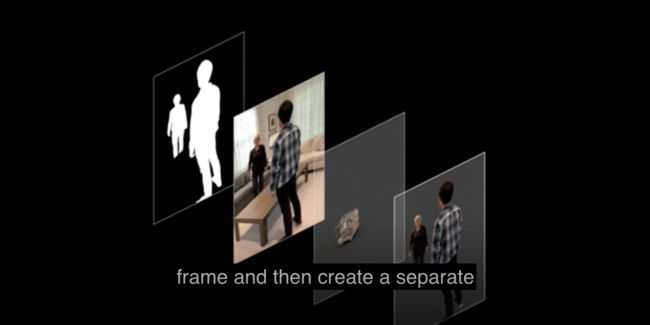

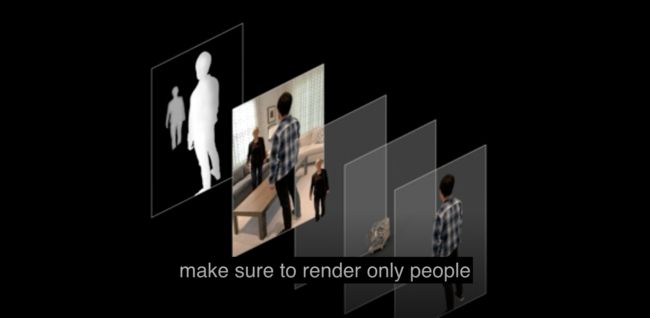

Machine learning is used to separate people from the scene (Depth Estimation)

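Look closely and there is still a problem: the person in the back should appear behind the virtual object instead of occluding it.

ARKit 3 can separate people by distance. That extra layer makes it possible to place the object between them.

On Apple's processors this runs in real time, so frames are processed while they are being displayed.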

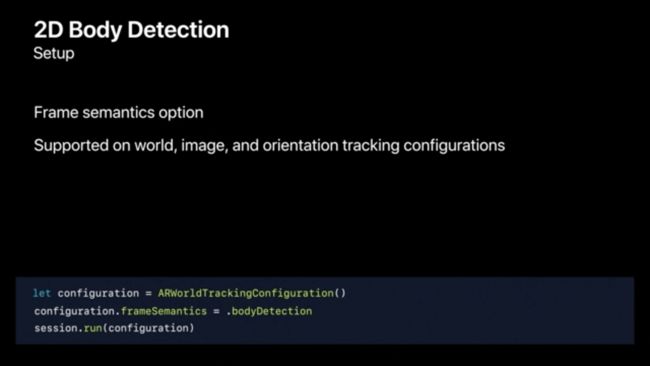

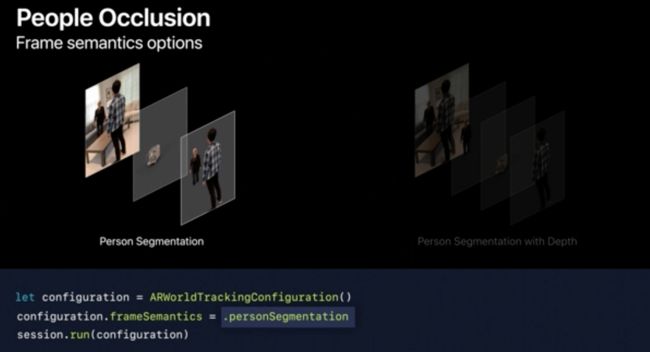

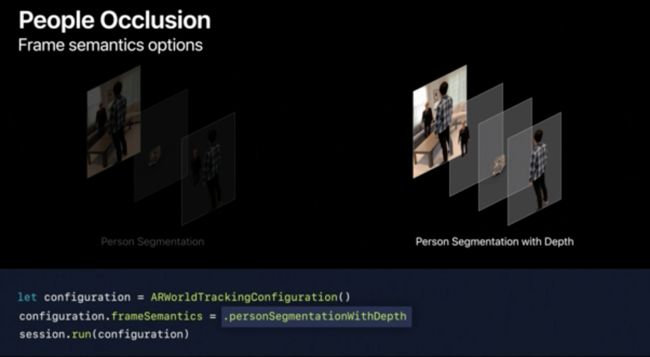

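The API adds person detection through frameSemantics.

.personSegmentation segments people out of the camera image. It is mainly for scenes where people stand in front of the 3D model.

The second option, .personSegmentationWithDepth, also estimates how far away each person is, so a person only occludes virtual content that is farther from the camera.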
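As a minimal sketch of how these options might be enabled, the function below checks device support before inserting a frame semantic. The function name makePeopleOcclusionConfiguration is hypothetical, and the fallback order (depth first, then plain segmentation) is an assumption rather than something the session prescribes.

```swift
import ARKit

/// Builds a world-tracking configuration with people occlusion enabled
/// when the current device supports it. (Hypothetical helper.)
func makePeopleOcclusionConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()

    if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
        // People occlude virtual content only when they are closer to the camera.
        configuration.frameSemantics.insert(.personSegmentationWithDepth)
    } else if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentation) {
        // People are always drawn in front of virtual content.
        configuration.frameSemantics.insert(.personSegmentation)
    }
    return configuration
}
```

The result can be passed to session.run(_:) on the ARSession that backs the view.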

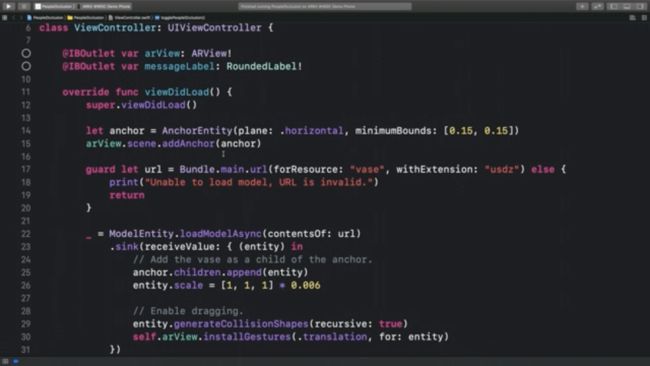

A demo in which a person occludes a vase

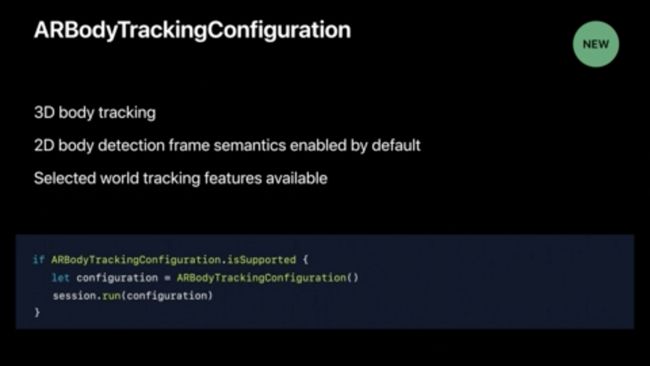

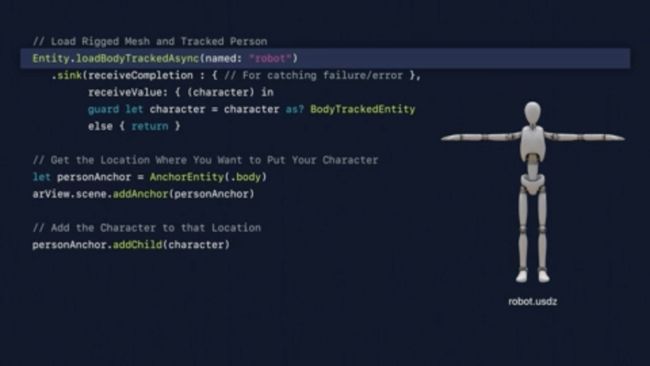

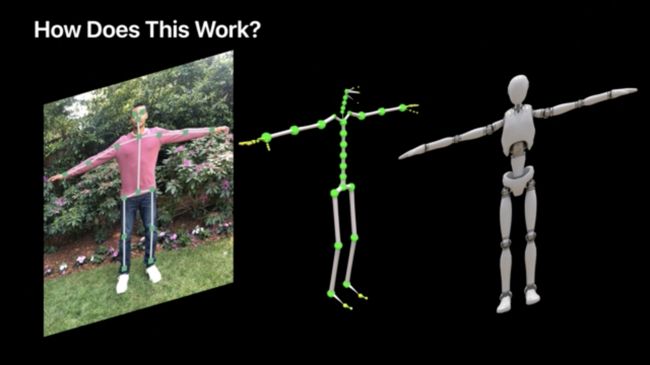

A new ARBodyTrackingConfiguration was added.
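A rough sketch of how this configuration could be used follows. The class name BodyTrackingDelegate and the function runBodyTracking are hypothetical, and reading the head joint is only one illustrative choice.

```swift
import ARKit
import simd

/// Hypothetical delegate that logs the tracked person's head position.
final class BodyTrackingDelegate: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let bodyAnchor as ARBodyAnchor in anchors {
            // Joint transforms are expressed relative to the body anchor.
            guard let head = bodyAnchor.skeleton.modelTransform(for: .head) else { continue }
            let worldTransform = bodyAnchor.transform * head
            print("Head position:", worldTransform.columns.3)
        }
    }
}

/// Starts body tracking if the device supports it.
/// The caller must keep `delegate` alive, because ARSession holds it weakly.
func runBodyTracking(on session: ARSession, delegate: ARSessionDelegate) {
    guard ARBodyTrackingConfiguration.isSupported else { return }
    session.delegate = delegate
    session.run(ARBodyTrackingConfiguration())
}
```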

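Using the front and back cameras at the same time

Facial expressions captured by the front camera can be applied to content in the scene seen by the back camera, with both tracked simultaneously.

Two users can share the scene they are observing. Their views start to merge once both have observed common key points in the scene.

Each of these points is called an AR Anchor.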
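One way this might look in code, as a sketch: world tracking runs on the back camera while face tracking is switched on for the front camera. The function name is hypothetical.

```swift
import ARKit

/// Hypothetical helper: world tracking on the back camera with
/// face tracking on the front camera, where supported.
func makeWorldConfigurationWithFaceTracking() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsUserFaceTracking {
        // ARFaceAnchor updates then arrive in the same session as world anchors.
        configuration.userFaceTrackingEnabled = true
    }
    return configuration
}
```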

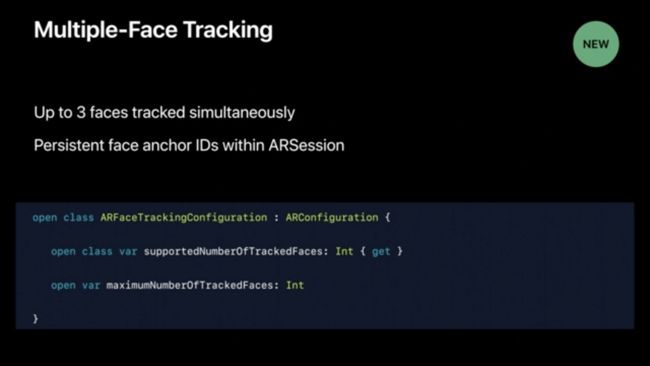

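Face tracking in ARKit 3 keeps an ID for each person. Within one session, if a person leaves the frame and later comes back, they get the same ID as before. If you re-initialize the session, the stored information is discarded.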

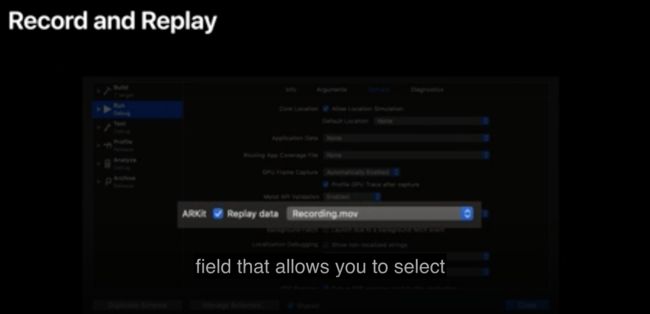

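Record and replay a scene

Testing with ARKit normally means going to a specific location, which is inconvenient. This release adds scene recording, so the same scene can be replayed for repeated tests.

An extra option for this appears in the settings.

Collaborative AR experiences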

Building Collaborative AR Experiences

With iOS 13, ARKit and RealityKit enable apps to establish shared AR experiences faster and easier than ever. Understand how collaborative sessions allow multiple devices to build a combined world map and share AR anchors and updates in real-time. Learn how to incorporate collaborative sessions into ARKit-based apps, then roll into SwiftStrike, an engaging and immersive multiplayer AR game built using RealityKit and Swift.

https://developer.apple.com/videos/play/wwdc2019/610/

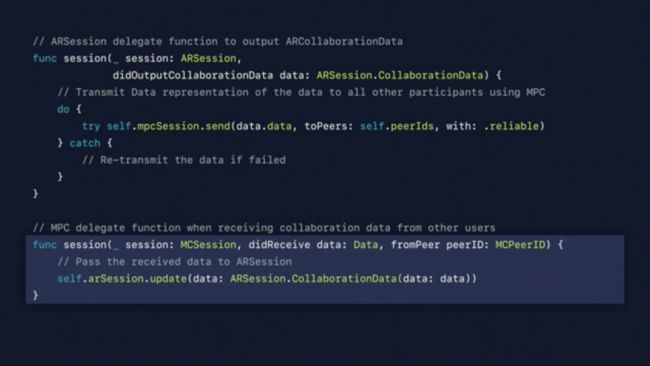

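Sharing is implemented with two delegate methods: one sends out the collaboration data, and the other updates the session when data is received.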
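The two sides of that exchange can be sketched as follows. MultipeerConnectivity is assumed as the transport here (any networking layer would do), and the class name CollaborationController is hypothetical. The configuration passed to the session is assumed to have isCollaborationEnabled set to true.

```swift
import ARKit
import MultipeerConnectivity

/// Hypothetical controller that relays ARKit collaboration data between peers.
final class CollaborationController: NSObject, ARSessionDelegate, MCSessionDelegate {
    private let arSession: ARSession
    private let peerSession: MCSession  // assumed to be connected elsewhere

    init(arSession: ARSession, peerSession: MCSession) {
        self.arSession = arSession
        self.peerSession = peerSession
        super.init()
        arSession.delegate = self
        peerSession.delegate = self
    }

    // Sending side: ARKit hands over data that other participants need.
    func session(_ session: ARSession, didOutputCollaborationData data: ARSession.CollaborationData) {
        let peers = peerSession.connectedPeers
        guard !peers.isEmpty,
              let encoded = try? NSKeyedArchiver.archivedData(withRootObject: data,
                                                              requiringSecureCoding: true)
        else { return }
        // Critical data must arrive; optional data may be dropped.
        let mode: MCSessionSendDataMode = data.priority == .critical ? .reliable : .unreliable
        try? peerSession.send(encoded, toPeers: peers, with: mode)
    }

    // Receiving side: feed incoming data back into the local ARSession.
    func session(_ session: MCSession, didReceive data: Data, fromPeer peerID: MCPeerID) {
        if let collaborationData = try? NSKeyedUnarchiver.unarchivedObject(
            ofClass: ARSession.CollaborationData.self, from: data) {
            arSession.update(with: collaborationData)
        }
    }

    // Remaining MCSessionDelegate requirements, unused in this sketch.
    func session(_ session: MCSession, peer peerID: MCPeerID, didChange state: MCSessionState) {}
    func session(_ session: MCSession, didReceive stream: InputStream,
                 withName streamName: String, fromPeer peerID: MCPeerID) {}
    func session(_ session: MCSession, didStartReceivingResourceWithName resourceName: String,
                 fromPeer peerID: MCPeerID, with progress: Progress) {}
    func session(_ session: MCSession, didFinishReceivingResourceWithName resourceName: String,
                 fromPeer peerID: MCPeerID, at localURL: URL?, withError error: Error?) {}
}
```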

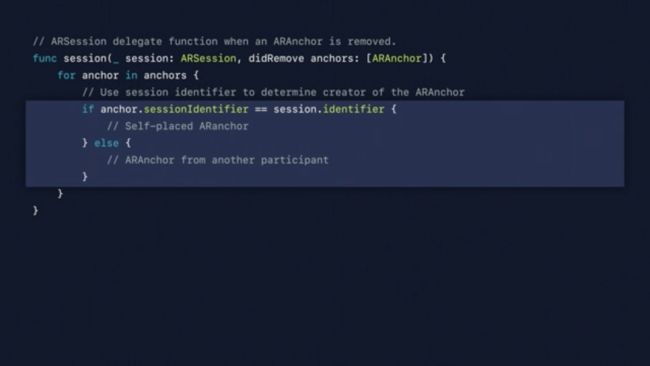

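An anchor's sessionIdentifier tells you whether the anchor was added by you or by another user.

When user A adds a cube to the scene, user B sees the same cube from a different viewpoint, for example from the side.

Bringing people into AR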
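A small sketch of that check, assuming the comparison is made against the local session's identifier. The function name isLocalAnchor is hypothetical.

```swift
import ARKit

/// Returns true when `anchor` was created by the local session
/// rather than received from another participant. (Hypothetical helper.)
func isLocalAnchor(_ anchor: ARAnchor, in session: ARSession) -> Bool {
    // sessionIdentifier is optional; a nil value never matches.
    return anchor.sessionIdentifier == session.identifier
}
```

An app could use this in session(_:didAdd:) to, say, color local and remote content differently.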

Bringing People into AR

ARKit 3 enables a revolutionary capability for robust integration of real people into AR scenes. Learn how apps can use live motion capture to animate virtual characters or be applied to 2D and 3D simulation. See how People Occlusion enables even more immersive AR experiences by enabling virtual content to pass behind people in the real world.

https://developer.apple.com/videos/play/wwdc2019/607/

CoreML&ARKit

Creating Great Apps Using Core ML and ARKit

Take a journey through the creation of an educational game that brings together Core ML, ARKit, and other app frameworks. Discover opportunities for magical interactions in your app through the power of machine learning. Gain a deeper understanding of approaches to solving challenging computer vision problems. See it all come to life in an interactive coding session.

https://developer.apple.com/videos/play/wwdc2019/228/

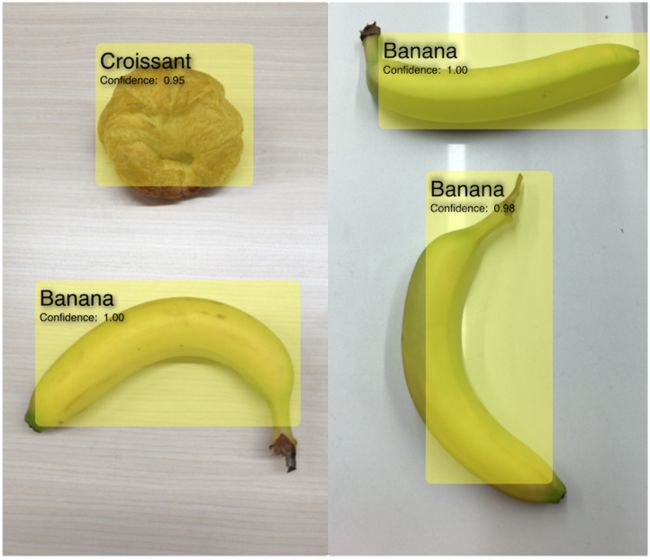

After detecting how many dice there are, we also want to know the number of pips showing on each one.

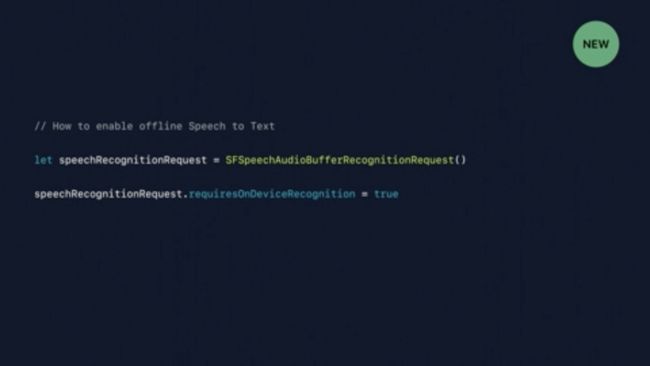

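Interaction through speech recognition is also possible, even without a network connection.

The session then presents a game that reads the dice values: whoever reaches 9 first wins.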

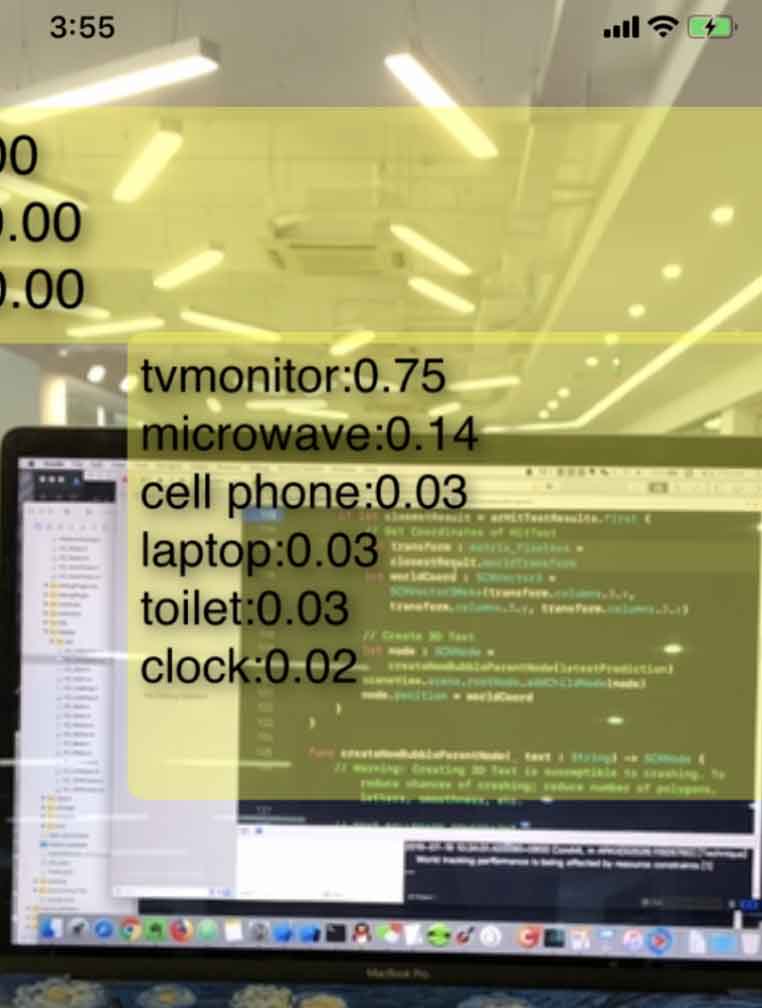

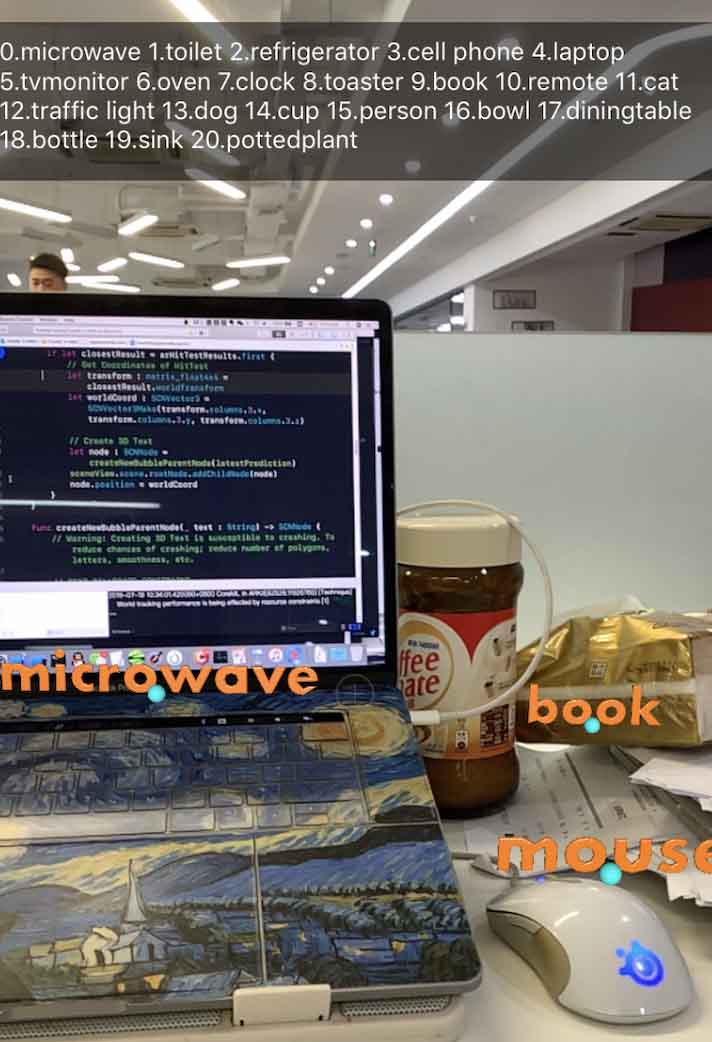

Recognizing Objects in Live Capture

Official demo for still-image recognition

https://developer.apple.com/documentation/vision/classifying_images_with_vision_and_core_ml

Official demo for live recognition using the camera

https://developer.apple.com/documentation/vision/recognizing_objects_in_live_capture

Download other models provided by Apple

https://developer.apple.com/machine-learning/models/

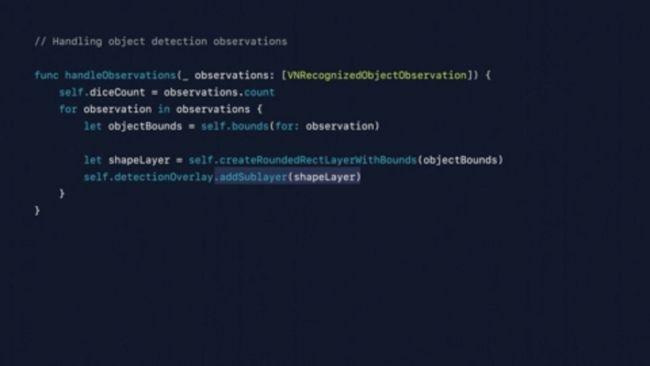

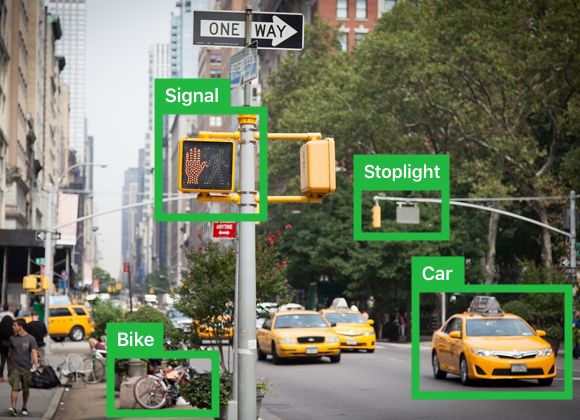

With the Vision framework, you can recognize objects in live capture. Starting in iOS 12, macOS 10.14, and tvOS 12, Vision requests made with a Core ML model return results as VNRecognizedObjectObservation objects, which identify objects found in the captured scene.

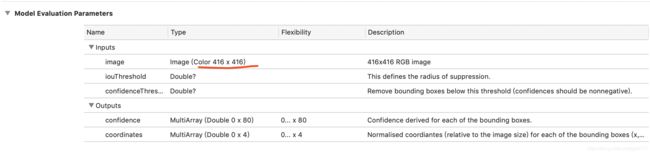

Check the model parameters in Xcode to find out if your app requires a resolution smaller than 640 x 480 pixels.

Set the camera resolution to the nearest resolution that is greater than or equal to the resolution of images used in the model:
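A minimal sketch of that step, assuming the model's input is no larger than 640 x 480. The function name configurePreset is hypothetical.

```swift
import AVFoundation

/// Picks a 640 x 480 capture preset when the device supports it.
/// (Hypothetical helper; choose the preset that matches your model's input size.)
func configurePreset(for session: AVCaptureSession) {
    session.beginConfiguration()
    if session.canSetSessionPreset(.vga640x480) {
        session.sessionPreset = .vga640x480
    }
    session.commitConfiguration()
}
```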

- (instancetype)initWithImage:(CVPixelBufferRef)image;

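This signature means that a photo taken with the camera or picked from the library has to be converted to a CVPixelBuffer before it is passed in.

One inconvenience is that the image also has to be resized to the dimensions stated in the model description.

Creating a VNCoreMLRequest removes the manual cropping step: the results are delivered directly in the completionHandler callback.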
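The Vision route might look roughly like this. The function name classify is hypothetical, the MLModel is assumed to be a classifier loaded elsewhere, and the .centerCrop scaling option is an illustrative choice. An object-detection model would return VNRecognizedObjectObservation results instead of VNClassificationObservation.

```swift
import CoreML
import CoreVideo
import Vision

/// Runs a Core ML classifier on one frame through Vision.
/// (Hypothetical helper; `model` is assumed to be an image classifier.)
func classify(pixelBuffer: CVPixelBuffer, with model: MLModel) throws {
    let visionModel = try VNCoreMLModel(for: model)

    let request = VNCoreMLRequest(model: visionModel) { request, error in
        // Results are sorted by confidence, highest first.
        guard let observations = request.results as? [VNClassificationObservation],
              let best = observations.first else { return }
        print("\(best.identifier): \(best.confidence)")
    }
    // Vision scales and crops the frame to the model's input size.
    request.imageCropAndScaleOption = .centerCrop

    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:])
    try handler.perform([request])
}
```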

Demo of detection with 3D models

https://github.com/hanleyweng/CoreML-in-ARKit

Tests and modifications of the demo

https://github.com/gwh111/testARKitWithAction