Installing OpenCL on VS2019 and a Quick Start

This article uses an NVIDIA GPU and VS2019 to show how to set up an OpenCL environment and start programming with it quickly.

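Contents

- 1. Installing OpenCL on VS2019
  - 1. Preparing the installation resources
  - 2. Installation steps
- 2. OpenCL quick start
  - 1. Original article and translation
  - 2. Code changes and debugging
  - 3. Tested, working code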

1. Installing OpenCL on VS2019

1. Preparing the installation resources

Download CUDA from the NVIDIA website and double-click the installer to run it:

https://developer.nvidia.com/cuda-downloads?target_os=Windows&target_arch=x86_64&target_version=7&target_type=exelocal

Then locate the following resources in the installation directory:

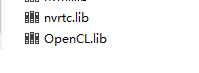

1. .\lib\x64\OpenCL.lib


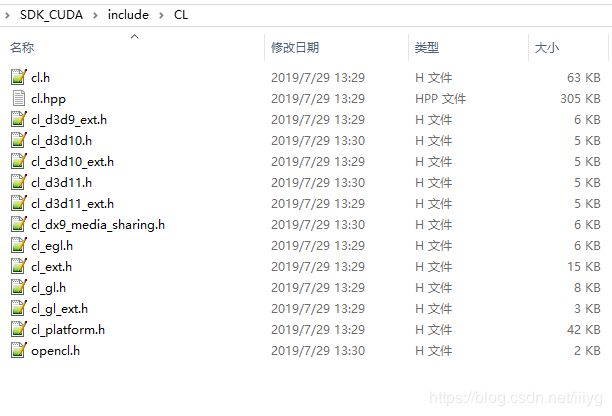

2. The CL header files


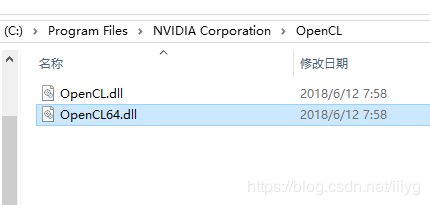

3. In the graphics card driver's default installation directory, find:

OpenCL64.dll


2. Installation steps

Create two directories, OpenCL_inc and OpenCL_lib, and copy the resources found in the previous step into them.

OpenCL_inc holds the CL header files. OpenCL_lib holds OpenCL.lib together with OpenCL.dll and OpenCL64.dll.

VS2019 environment configuration:

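1. Project -> (project name) Properties -> C/C++ -> General -> Additional Include Directories -> F:\OPENCL\code\OpenCL_inc

2. Project -> (project name) Properties -> Linker -> General -> Additional Library Directories -> F:\OPENCL\code\OpenCL_lib

3. Project -> (project name) Properties -> Linker -> Input -> Additional Dependencies -> OpenCL.lib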

2. OpenCL quick start

1. Original article and translation

Original article

Reference translation

2. Code changes and debugging

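I tried embedding the vector_add_gpu.cl kernel directly in the code as a string. After the kernel was compiled (that is, after calling clBuildProgram), I retrieved the program source with CL_PROGRAM_SOURCE: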

error = clGetProgramInfo(program, CL_PROGRAM_SOURCE, bufSize, programBuffer, &program_size_ret);

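This shows that the kernel works without a separate .cl file. Note, however, that when printing the retrieved source string, the buffer length must be increased by 1 to leave room for the terminating null character.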

3. Tested, working code

#include