[Kubernetes] Quickly Building a k8s Cluster with kubeadm (Study Notes)

Topic: Docker & Kubernetes


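System initialization

Why turn off swap, SELinux, and the firewall when deploying Kubernetes (k8s)?

- Swap: by default the kubelet refuses to start while swap is enabled, and the kubeadm Swap preflight check fails as well. Swapping also hurts container performance. Turning swap off became the standard workaround; later releases may have relaxed this (I have not followed it). Give the nodes enough memory so that OOM kills are unlikely, and use container memory limits to keep usage under control.

- SELinux: a kernel-level security module that controls what services may do through security contexts, acting as an ACL between applications and the operating system. Not every program is adapted to it, and some of its rules interfere with normal services (permission problems, for example), so it is commonly disabled.

- Firewall: it depends on which one you mean. iptables should stay on and should not be disabled: k8s uses iptables to forward traffic between Pods and to map ports. firewalld should be turned off, because it manages its own rules on top of the same kernel packet filter and can conflict with the rules Kubernetes writes. If the cluster does not use iptables at all (it uses some other mechanism), iptables is not needed either and can be turned off.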

Configure hostnames and hosts

Set the hostname on every node

# 192.168.99.100 Master node

[root@localhost ~]# hostnamectl set-hostname k8s-master

[root@localhost ~]# su

[root@k8s-master ~]#

# 192.168.99.201 Node1 node

[root@localhost ~]# hostnamectl set-hostname k8s-node1

[root@localhost ~]# su

[root@k8s-node1 ~]#

# 192.168.99.202 Node2 node

[root@localhost ~]# hostnamectl set-hostname k8s-node2

[root@localhost ~]# su

[root@k8s-node2 ~]#

Configure hosts; the Master node is shown as the example, the other nodes are the same

[root@k8s-master ~]# vim /etc/hosts

[root@k8s-master ~]# cat /etc/hosts | grep k8s

192.168.99.100 k8s-master

192.168.99.201 k8s-node1

192.168.99.202 k8s-node2

# The hostnames can now be pinged

[root@k8s-master ~]# ping k8s-node2
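
Editing /etc/hosts by hand on three machines is easy to get wrong, so the step above could be scripted. The following is a minimal sketch; add_host_entry is a hypothetical helper name (not part of any tool), and the optional third argument exists only so the function can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Sketch: idempotently add "IP hostname" entries to a hosts file.
# add_host_entry is a hypothetical helper, not part of any existing tool.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Do nothing if a non-comment line already maps this exact hostname.
  if grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root, on every node):
#   add_host_entry 192.168.99.100 k8s-master
#   add_host_entry 192.168.99.201 k8s-node1
#   add_host_entry 192.168.99.202 k8s-node2
```

Because an existing entry is detected first, running the helper a second time leaves the file unchanged.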

Turn off the firewall

Required on all nodes

# Stop the firewall

[root@k8s-master ~]# systemctl stop firewalld

# Disable start on boot

[root@k8s-master ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

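Disable SELinux

SELinux is a Linux security mechanism that mainly enforces access control on the file system. That access control can interfere with installing the kubelet, a Kubernetes component that needs to operate on the local file system.

Required on all nodes

# Switch to permissive mode for the current boot; the permanent change below takes effect after a reboot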

[root@k8s-master ~]# setenforce 0

# Disable permanently

[root@k8s-master ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@k8s-master ~]# cat /etc/selinux/config | grep ^SELINUX=

SELINUX=disabled
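
The sed expression above replaces the word "enforcing" wherever it first appears on a line, which also touches any comment line that contains it. A more targeted variant could look like the sketch below; set_selinux_disabled is a hypothetical helper name, and the optional file argument is only there so it can be tried on a copy of the config.

```shell
#!/usr/bin/env bash
# Sketch: rewrite only the SELINUX= setting line, leaving comments untouched.
# set_selinux_disabled is a hypothetical helper name.
set_selinux_disabled() {
  local file="${1:-/etc/selinux/config}" tmp rc
  tmp=$(mktemp) || return 1
  # Match the whole setting line so that comment text is never modified.
  sed -E 's/^SELINUX=(enforcing|permissive)[[:space:]]*$/SELINUX=disabled/' "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (as root, on every node):
#   set_selinux_disabled
#   grep '^SELINUX=' /etc/selinux/config
```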

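Disable swap

Disable the swap partition: swap is used to page data out when physical memory runs short. By default the kubelet does not support running with swap enabled and refuses to start, so swap must be turned off, or simply not created when the system is installed.

Required on all nodes

# Disable temporarily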

[root@k8s-master ~]# swapoff -a

# Disable permanently (comment out the swap entry in /etc/fstab)

[root@k8s-master ~]# cat /etc/fstab | grep swap

/dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-master ~]# vim /etc/fstab

[root@k8s-master ~]# cat /etc/fstab | grep swap

# /dev/mapper/centos-swap swap swap defaults 0 0
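
The manual vim edit above can also be done non-interactively. This is a minimal sketch under the assumption that swap entries are the fstab lines whose third field (the filesystem type) is "swap"; comment_out_swap is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: comment out swap entries in an fstab-style file.
# comment_out_swap is a hypothetical helper name.
comment_out_swap() {
  local file="${1:-/etc/fstab}" tmp rc
  tmp=$(mktemp) || return 1
  # Prefix "# " to every non-comment line whose type field is "swap".
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "# " $0; next } { print }' "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (as root, on every node):
#   swapoff -a
#   comment_out_swap
```

Lines that are already commented out are skipped, so the helper can be re-run safely.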

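Pass bridged IPv4 traffic to the iptables chains

Without this setting, some bridged IPv4 traffic bypasses the iptables chains and can be lost. (iptables is the kernel's packet filter: traffic passes through it and is matched against rules before being handed to a local process.)

# The k8s.conf file does not exist yet and has to be created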

[root@k8s-master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@k8s-master ~]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

* Applying /etc/sysctl.conf ...
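
The sysctl --system output above lists the files that were applied but not the two bridge values themselves, so a quick check is worthwhile. The sketch below only inspects the config file; has_bridge_nf_settings is a hypothetical helper name. The commented commands at the end assume the br_netfilter kernel module may not be loaded yet, in which case the net.bridge.* keys do not exist at runtime.

```shell
#!/usr/bin/env bash
# Sketch: verify that a sysctl file sets both bridge-nf-call keys to 1.
# has_bridge_nf_settings is a hypothetical helper name.
has_bridge_nf_settings() {
  local file="${1:-/etc/sysctl.d/k8s.conf}" key
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    # Accept "key = 1" with optional whitespace around "=".
    grep -qE "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$file" || return 1
  done
}

# On a real node (as root), the runtime values could then be checked with:
#   modprobe br_netfilter   # assumption: the module is not loaded yet
#   sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables
```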

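Install Docker, kubeadm, kubelet, and kubectl on all nodes

Install Docker, kubeadm (the client tool that bootstraps the cluster), and kubelet (the agent that manages containers on each Kubernetes node)

Install Docker (version actually used: 18.06.3)

# Add the Docker CE repo (from the Aliyun mirror) and save the repo file under /etc/yum.repos.d/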

[root@k8s-master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# Install Docker CE; first try the newest version to see whether it works

[root@k8s-master ~]# yum install docker-ce-19.03.9-3.el7 -y

# The version that actually needs to be installed

[root@k8s-master ~]# yum install docker-ce-18.06.3.ce-3.el7

# Start Docker

[root@k8s-master ~]# systemctl start docker

[root@k8s-master ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

# Check the Docker version

[root@k8s-master ~]# docker --version

Docker version 19.03.9, build 9d988398e7

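Install kubeadm, kubelet, and kubectl (version actually used: 1.18.2)

The default container runtime (CRI) for Kubernetes here is Docker, so Docker is installed first.

# Configure the repo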

[root@k8s-master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Install

[root@k8s-master ~]# yum install kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2 -y

# yum reports that the public key for xxx.rpm is not installed

# Skip the public key check

[root@k8s-master ~]# yum install kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2 --nogpgcheck -y

Installed:

kubeadm.x86_64 0:1.18.2-0 kubectl.x86_64 0:1.18.2-0 kubelet.x86_64 0:1.18.2-0

Dependency Installed:

conntrack-tools.x86_64 0:1.4.4-7.el7 cri-tools.x86_64 0:1.13.0-0

kubernetes-cni.x86_64 0:0.7.5-0 libnetfilter_cthelper.x86_64 0:1.0.0-11.el7

libnetfilter_cttimeout.x86_64 0:1.0.0-7.el7 libnetfilter_queue.x86_64 0:1.0.2-2.el7_2

socat.x86_64 0:1.7.3.2-2.el7

Complete!

[root@k8s-master ~]# whereis kubeadm

kubeadm: /usr/bin/kubeadm

[root@k8s-master ~]# whereis kubectl

kubectl: /usr/bin/kubectl

[root@k8s-master ~]# whereis kubelet

kubelet: /usr/bin/kubelet

# There is no need to start kubelet now, because kubeadm starts it during deployment; just enable it on boot

[root@k8s-master ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Deploy the Kubernetes Master

Parameter reference

kubeadm init command parameters

--apiserver-advertise-address string The IP address the API server advertises that it is listening on.

--apiserver-bind-port int32 The port the API server listens on. (default 6443)

--apiserver-cert-extra-sans strings Extra Subject Alternative Names (SANs) for the API server certificate; can be IP addresses or DNS names. The certificate is bound to its SANs.

--cert-dir string Directory where certificates are stored. (default "/etc/kubernetes/pki")

--certificate-key string Key used to encrypt the control-plane certificates in the kubeadm-certs Secret.

--config string Path to a kubeadm configuration file.

--cri-socket string Path to the CRI socket. If empty, kubeadm tries to detect it automatically; set it only when the machine has multiple CRI sockets or a non-standard CRI socket.

--dry-run Test mode: do not apply any changes, only output what would be done.

--feature-gates string Extra features to enable, as key=value pairs.

-h, --help Help

--ignore-preflight-errors strings Preflight checks whose errors are shown as warnings instead. Example: 'IsPrivilegedUser,Swap'. Value 'all' ignores errors from all checks.

--image-repository string Registry to pull the control-plane images from. (default "k8s.gcr.io")

--kubernetes-version string Kubernetes version to install. (default "stable-1")

--node-name string Name of the node; defaults to the node's hostname.

--pod-network-cidr string IP address range for the Pod network. If set, the control plane automatically allocates a CIDR to every node for the containers started on it.

--service-cidr string IP address range for Services. (default "10.96.0.0/12")

--service-dns-domain string DNS domain suffix for Services, e.g. "myorg.internal". (default "cluster.local")

--skip-certificate-key-print Do not print the key used to encrypt the control-plane certificates.

--skip-phases strings Phases to skip.

--skip-token-print Do not print the default bootstrap token generated by kubeadm init.

--token string Token used to establish bidirectional trust between nodes and the control plane. Format: [a-z0-9]{6}\.[a-z0-9]{16}, e.g. abcdef.0123456789abcdef

--token-ttl duration Time before the token is automatically deleted (e.g. 1s, 2m, 3h). If set to '0', the token never expires. (default 24h0m0s)

--upload-certs Upload the control-plane certificates to the kubeadm-certs Secret.
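
The token format listed for --token is easy to get wrong when a token is typed or pasted by hand. As a small illustration, the sketch below checks a string against that pattern before it is passed to kubeadm; is_valid_bootstrap_token is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: check a string against the documented bootstrap token format
# [a-z0-9]{6}\.[a-z0-9]{16}. is_valid_bootstrap_token is a hypothetical helper.
is_valid_bootstrap_token() {
  # LC_ALL=C keeps the a-z range from matching uppercase in some locales.
  printf '%s\n' "$1" | LC_ALL=C grep -qE '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Usage:
#   is_valid_bootstrap_token "abcdef.0123456789abcdef" && echo "token format ok"
```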

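Deploy with kubeadm on the Master node

kubeadm init command

During testing I changed kubernetes-version to v1.18.3, but the Aliyun registry does not have the v1.18.3 images (not found), so v1.18.2 is still used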

[root@k8s-master ~]# kubeadm init \

--apiserver-advertise-address=192.168.99.100 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.18.2 \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

The default image registry k8s.gcr.io is not reachable from mainland China, so the Aliyun registry is specified here.
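
The same settings can also be kept in a kubeadm configuration file and passed with --config, which is easier to version and re-use than a long flag list. The sketch below writes such a file; it assumes the kubeadm.k8s.io/v1beta2 config API that kubeadm 1.18 uses, and write_kubeadm_config and the file name kubeadm-config.yaml are illustrative choices, not something from the original setup.

```shell
#!/usr/bin/env bash
# Sketch: write a kubeadm config file equivalent to the init flags above.
# Assumes the kubeadm.k8s.io/v1beta2 API (kubeadm 1.18).
# write_kubeadm_config and the default file name are hypothetical.
write_kubeadm_config() {
  local file="${1:-kubeadm-config.yaml}"
  cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.99.100
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.2
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}

# Usage (on the Master node):
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```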

Handling initialization errors

W0523 16:43:11.953762 3851 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.2

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2

[ERROR CRI]: container runtime is not running: output: Client:

Debug Mode: false

Server:

ERROR: Error response from daemon: client version 1.40 is too new. Maximum supported API version is 1.39

errors pretty printing info

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

client version 1.40 is too new

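Docker 19.03.9 appeared to be too new, so I first tried upgrading k8s from 1.18.2 to 1.18.3; that still did not work, so I then downgraded Docker. (The error itself says the Docker client speaks API 1.40 while the daemon supports at most 1.39. The package listing further down shows docker-ce 18.09.9 installed next to docker-ce-cli 19.03.9, so a client/daemon version mismatch is the likely cause.)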

[root@k8s-master ~]# yum update kubelet-1.18.3 kubeadm-1.18.3 kubectl-1.18.3 --nogpgcheck -y

Updated:

kubeadm.x86_64 0:1.18.3-0 kubectl.x86_64 0:1.18.3-0 kubelet.x86_64 0:1.18.3-0

Complete!

Install conflict when downgrading docker 19.03.9 to 18.06.3

# Downgrade Docker

[root@k8s-master ~]# yum list docker-ce --showduplicates | sort -r

Loaded plugins: fastestmirror

Installed Packages

Available Packages

* updates: mirror.lzu.edu.cn

Loading mirror speeds from cached hostfile

* extras: mirror.lzu.edu.cn

docker-ce.x86_64 3:19.03.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.9-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.9-3.el7 @docker-ce-stable

docker-ce.x86_64 3:18.09.8-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.0-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.3.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.2.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

* base: mirror.lzu.edu.cn

[root@k8s-master ~]# yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

[root@k8s-master ~]# yum install docker-ce-18.06.3.ce-3.el7

# The install fails

Transaction check error:

file /usr/bin/docker from install of docker-ce-18.06.3.ce-3.el7.x86_64 conflicts with file from package docker-ce-cli-1:19.03.9-3.el7.x86_64

file /usr/share/bash-completion/completions/docker from install of docker-ce-18.06.3.ce-3.el7.x86_64 conflicts with file from package docker-ce-cli-1:19.03.9-3.el7.x86_64

file /usr/share/fish/vendor_completions.d/docker.fish from install of docker-ce-18.06.3.ce-3.el7.x86_64 conflicts with file from package docker-ce-cli-1:19.03.9-3.el7.x86_64

file /usr/share/man/man1/docker-attach.1.gz from install of docker-ce-18.06.3.ce-3.el7.x86_64 conflicts with file from package docker-ce-cli-1:19.03.9-3.el7.x86_64

file /usr/share/man/man1/docker-checkpoint-create.1.gz from install of docker-ce-18.06.3.ce-3.el7.x86_64 conflicts with file from package docker-ce-cli-1:19.03.9-3.el7.x86_64

file /usr/share/man/man1/docker-checkpoint-ls.1.gz from install of docker-ce-18.06.3.ce-3.el7.x86_64 conflicts with file from package docker-ce-cli-1:19.03.9-3.el7.x86_64

# ...

# The cached package can be located with find / -name docker; if it is not there, simply download it.

# Install it manually with rpm, adding the --replacefiles option

[root@k8s-master ~]# rpm -ivh /var/cache/yum/x86_64/7/docker-ce-stable/packages/docker-ce-18.06.3.ce-3.el7.x86_64.rpm --replacefiles

error: Failed dependencies:

container-selinux >= 2.9 is needed by docker-ce-18.06.3.ce-3.el7.x86_64

# container-selinux needs to be installed

[root@k8s-master ~]# yum install container-selinux -y

Installed:

container-selinux.noarch 2:2.119.1-1.c57a6f9.el7

Complete!

# Install docker with rpm again

[root@k8s-master ~]# rpm -ivh /var/cache/yum/x86_64/7/docker-ce-stable/packages/docker-ce-18.06.3.ce-3.el7.x86_64.rpm --replacefiles

[root@k8s-master ~]# docker version

Client:

Version: 18.06.3-ce

API version: 1.38

# Start docker

[root@k8s-master ~]# systemctl start docker

[root@k8s-master ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

cgroupfs warning (change on all nodes)

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd".

[root@k8s-node1 ~]# vim /etc/docker/daemon.json

[root@k8s-node1 ~]# cat /etc/docker/daemon.json

{

"exec-opts":["native.cgroupdriver=systemd"]

}

[root@k8s-node1 ~]# systemctl restart docker

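Changing the number of CPU cores

The nodes are VMware virtual machines, so set the number of processor cores to at least 2 on every node (the preflight check requires 2)

Aliyun: v1.18.3 not found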

[root@k8s-master ~]# kubeadm init \

--apiserver-advertise-address=192.168.99.100 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.18.3 \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.3: output: Error response from daemon: manifest for registry.aliyuncs.com/google_containers/kube-apiserver:v1.18.3 not found

, error: exit status 1

[ERROR ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-controller-manager:v1.18.3: output: Error response from daemon: manifest for registry.aliyuncs.com/google_containers/kube-controller-manager:v1.18.3 not found

, error: exit status 1

# Downgrade on all nodes, i.e. 1.18.3 -> 1.18.2

[root@k8s-master ~]# yum downgrade kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2 --nogpgcheck -y

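kubeadm init log

# 1. Run a series of preflight checks to validate the state of the system.

# Most checks only raise a warning, but some raise fatal errors that make the workflow exit

# (for example, swap not disabled or docker not installed). These can be downgraded to warnings with --ignore-preflight-errors=...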

W0523 18:08:32.999414 2448 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

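# 2. Generate a self-signed CA (Certificate Authority) used to authenticate calls between k8s components.

# Certificates can also be supplied through --cert-dir (default /etc/kubernetes/pki), in which case this step is skipped.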

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.99.100]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.99.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.99.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

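# 3. Write the kubeconfig files for the kubelet, controller-manager, scheduler, and other components to /etc/kubernetes/;

# these components use the files to connect to the API server.

# In addition to these, an admin.conf file for administration is generated.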

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

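# 4. Create the static Pod manifests for the API server, controller-manager, and scheduler.

# If no external etcd is provided, a static Pod manifest for etcd is generated as well.

# The manifests are written to /etc/kubernetes/manifests; the kubelet watches this directory and creates the Pods.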

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0523 18:09:33.958611 2448 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0523 18:09:33.960089 2448 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

# 5. If the previous step went well, the k8s control-plane processes (api-server, controller-manager, scheduler) are all up at this point.

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 16.002340 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

# 6. Label and taint the current node (the Master) so that other workloads are not scheduled onto it.

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

# 7. Generate a token; other nodes use it to join the k8s cluster that the current node (the Master) belongs to.

[bootstrap-token] Using token: mfouno.1nacdahemjebmzgb

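# 8. Do the setup needed for nodes to join the cluster via Bootstrap Tokens and TLS bootstrapping:

# set up RBAC rules and create a ConfigMap that holds all the information needed to join the cluster;

# allow Bootstrap Tokens to access the CSR signing API;

# configure automatic approval of new CSRs.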

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

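# 9. Install the DNS server (CoreDNS by default since 1.11, kube-dns in earlier versions) and the kube-proxy add-on through the API server.

# Note that the DNS Pods are only actually scheduled once a CNI plugin (flannel or calico) is installed; until then they stay Pending.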

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.99.100:6443 --token mfouno.1nacdahemjebmzgb \

--discovery-token-ca-cert-hash sha256:b333ae5c7fc2888d4a416816b1c977866277caf467e1a0f18e585c857d5d17ed

Create the config file before using the cluster

Just copy the commands from the output above

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

List the nodes with kubectl

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 67m v1.18.2

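The status above is NotReady because the node is waiting for a Pod network to be installed.

Install a Pod network plugin (CNI)

About flannel

Kubernetes: flannel-based cluster networking

Kubernetes network analysis: Flannel

flannel is a network fabric for containers, designed for Kubernetes (GitHub)

Apply the kube-flannel.yml file

Run on the Master node; Node machines download the image automatically after they join

# Apply the online kube-flannel.yml file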

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

# Watch how the pods in the kube-system namespace change

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 0/1 Pending 0 74m

coredns-7ff77c879f-x5cmh 0/1 Pending 0 74m

etcd-k8s-master 1/1 Running 0 74m

kube-apiserver-k8s-master 1/1 Running 0 74m

kube-controller-manager-k8s-master 1/1 Running 0 74m

kube-flannel-ds-amd64-d7mbx 0/1 Init:0/1 0 55s

kube-proxy-jndns 1/1 Running 0 74m

kube-scheduler-k8s-master 1/1 Running 0 74m

# Only the master is listed, because no other node has joined yet

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 75m v1.18.2

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 0/1 Pending 0 80m

coredns-7ff77c879f-x5cmh 0/1 Pending 0 80m

etcd-k8s-master 1/1 Running 0 80m

kube-apiserver-k8s-master 1/1 Running 0 80m

kube-controller-manager-k8s-master 1/1 Running 0 80m

kube-flannel-ds-amd64-d7mbx 0/1 Init:ImagePullBackOff 0 6m18s

kube-proxy-jndns 1/1 Running 0 80m

kube-scheduler-k8s-master 1/1 Running 0 80m

# Check the status of all pods

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 1/1 Running 0 3h5m

coredns-7ff77c879f-x5cmh 1/1 Running 0 3h5m

etcd-k8s-master 1/1 Running 0 3h5m

kube-apiserver-k8s-master 1/1 Running 0 3h5m

kube-controller-manager-k8s-master 1/1 Running 0 3h5m

kube-flannel-ds-amd64-d7mbx 1/1 Running 0 111m

kube-proxy-jndns 1/1 Running 0 3h5m

kube-scheduler-k8s-master 1/1 Running 0 3h5m

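Handling slow kube-flannel downloads

If the download is too slow, save https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml locally

Then edit it, changing the image address to a registry mirror reachable from mainland China or to a private registry.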

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-amd64

For example, switch to the Qiniu mirror, then run kubectl apply -f kube-flannel.yml again, where kube-flannel.yml is the local file.

containers:

- name: kube-flannel

image: quay-mirror.qiniu.com/coreos/flannel:v0.12.0-amd64

Edit the file, then re-apply it with kubectl apply -f kube-flannel.yml. apply both creates and updates, so it can be run repeatedly

[root@k8s-master ~]# vim kube-flannel.yml

# Re-apply the updated local kube-flannel.yml file

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged configured

clusterrole.rbac.authorization.k8s.io/flannel unchanged

clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.apps/kube-flannel-ds-amd64 configured # this item was updated

daemonset.apps/kube-flannel-ds-arm64 unchanged

daemonset.apps/kube-flannel-ds-arm unchanged

daemonset.apps/kube-flannel-ds-ppc64le unchanged

daemonset.apps/kube-flannel-ds-s390x unchanged

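Join the Nodes to the cluster

Run on every Node machine: to add a new node to the cluster, execute the kubeadm join command printed by kubeadm init

Initialize the Node machines

Node1 joins the cluster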

[root@k8s-node1 ~]# kubeadm join 192.168.99.100:6443 --token mfouno.1nacdahemjebmzgb \

--discovery-token-ca-cert-hash sha256:b333ae5c7fc2888d4a416816b1c977866277caf467e1a0f18e585c857d5d17ed

# Node1 log:

W0523 21:21:28.246095 10953 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Node2 joins the cluster

[root@k8s-node2 ~]# kubeadm join 192.168.99.100:6443 --token mfouno.1nacdahemjebmzgb \

--discovery-token-ca-cert-hash sha256:b333ae5c7fc2888d4a416816b1c977866277caf467e1a0f18e585c857d5d17ed

# Node2 log:

W0523 21:23:01.585236 11009 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Check the cluster status on the Master

List the nodes in the cluster

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3h13m v1.18.2

k8s-node1 NotReady <none> 119s v1.18.3

k8s-node2 NotReady <none> 26s v1.18.3

# After a while, one of the nodes has become Ready

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3h32m v1.18.2

k8s-node1 NotReady <none> 21m v1.18.3

k8s-node2 Ready <none> 19m v1.18.3

List all pods in the kube-system namespace

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 1/1 Running 0 3h21m

coredns-7ff77c879f-x5cmh 1/1 Running 0 3h21m

etcd-k8s-master 1/1 Running 0 3h21m

kube-apiserver-k8s-master 1/1 Running 0 3h21m

kube-controller-manager-k8s-master 1/1 Running 0 3h21m

kube-flannel-ds-amd64-d7mbx 1/1 Running 0 127m

kube-flannel-ds-amd64-lbqwz 0/1 Init:ImagePullBackOff 0 9m39s

kube-flannel-ds-amd64-z69q4 0/1 Init:ImagePullBackOff 0 8m6s

kube-proxy-dwpvg 1/1 Running 0 9m39s

kube-proxy-jndns 1/1 Running 0 3h21m

kube-proxy-w88h4 1/1 Running 0 8m6s

kube-scheduler-k8s-master 1/1 Running 0 3h21m

# After a while, the flannel pod on one of the nodes is Running

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 1/1 Running 0 3h32m

coredns-7ff77c879f-x5cmh 1/1 Running 0 3h32m

etcd-k8s-master 1/1 Running 0 3h32m

kube-apiserver-k8s-master 1/1 Running 0 3h32m

kube-controller-manager-k8s-master 1/1 Running 0 3h32m

kube-flannel-ds-amd64-d7mbx 1/1 Running 0 138m

kube-flannel-ds-amd64-th9h9 1/1 Running 0 3m44s

kube-flannel-ds-amd64-xv27n 0/1 Init:ImagePullBackOff 0 3m44s

kube-proxy-dwpvg 1/1 Running 0 21m

kube-proxy-jndns 1/1 Running 0 3h32m

kube-proxy-w88h4 1/1 Running 0 19m

kube-scheduler-k8s-master 1/1 Running 0 3h32m
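
When the pod list gets longer, it helps to show only the pods that are not Running yet, such as the flannel pod stuck in Init:ImagePullBackOff above. The sketch below filters the plain-text output of kubectl get pod; pods_not_running is a hypothetical helper name, and it assumes the default column layout shown above (NAME, READY, STATUS, ...).

```shell
#!/usr/bin/env bash
# Sketch: print "NAME STATUS" for every pod whose STATUS is not Running.
# Reads `kubectl get pod` output on stdin; pods_not_running is a hypothetical helper.
pods_not_running() {
  # Skip the header row, then compare the third column (STATUS).
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage (on the Master node):
#   kubectl get pod -n kube-system | pods_not_running
```

Each name it prints can then be passed to kubectl describe pod -n kube-system to see why the pod is stuck.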

Test the Kubernetes cluster from the Master node

Create an nginx resource

# Create an nginx Deployment (which starts one nginx pod)

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

# Create a Service to expose the container to the outside; --type=NodePort creates a NodePort Service, which is reachable through any NodeIP:Port.

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

View pod and svc information

The -o wide option shows which node each pod is on

# View the pods and services

[root@k8s-master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-f89759699-vn4gb 0/1 ImagePullBackOff 0 32s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 3h35m

service/nginx NodePort 10.1.0.141 <none> 80:31519/TCP 25s

[root@k8s-master ~]# kubectl get pod,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-f89759699-vn4gb 0/1 ImagePullBackOff 0 49s 10.244.3.2 k8s-node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 3h35m <none>

service/nginx NodePort 10.1.0.141 <none> 80:31519/TCP 42s app=nginx

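View the detailed description of a pod

When the status is ImagePullBackOff or ErrImagePull, look at the pod description; it is especially useful for troubleshooting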

[root@k8s-master ~]# kubectl describe pod nginx-f89759699-vn4gb

Name: nginx-f89759699-vn4gb

Namespace: default

Priority: 0

Node: k8s-node2/192.168.99.202

Start Time: Sat, 23 May 2020 21:44:55 +0800

Labels: app=nginx

pod-template-hash=f89759699

Annotations: <none>

Status: Pending

IP: 10.244.3.2

IPs:

IP: 10.244.3.2

Controlled By: ReplicaSet/nginx-f89759699

Containers:

nginx:

Container ID:

Image: nginx

Image ID:

Port: <none>

Host Port: <none>

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-s2qmv (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-s2qmv:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-s2qmv

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 6m31s default-scheduler Successfully assigned default/nginx-f89759699-vn4gb to k8s-node2

Warning Failed 5m47s kubelet, k8s-node2 Failed to pull image "nginx": rpc error: code = Unknown desc = Error response from daemon: Get https://registry-1.docker.io/v2/library/nginx/manifests/latest: Get https://auth.docker.io/token?scope=repository%3Alibrary%2Fnginx%3Apull&service=registry.docker.io: net/http: TLS handshake timeout

Normal Pulling 4m21s (x4 over 6m30s) kubelet, k8s-node2 Pulling image "nginx"

Warning Failed 4m8s (x3 over 6m20s) kubelet, k8s-node2 Failed to pull image "nginx": rpc error: code = Unknown desc = Error response from daemon: Get https://registry-1.docker.io/v2/: net/http: TLS handshake timeout

Warning Failed 4m8s (x4 over 6m20s) kubelet, k8s-node2 Error: ErrImagePull

Warning Failed 3m41s (x7 over 6m19s) kubelet, k8s-node2 Error: ImagePullBackOff

Normal BackOff 78s (x14 over 6m19s) kubelet, k8s-node2 Back-off pulling image "nginx"
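When several pods are failing at once, it can be quicker to filter for the ones stuck on image pulls before describing each of them. The helper below is only a sketch and not part of the original setup: image_pull_failures is a hypothetical name, and it assumes the column layout printed by kubectl get pod --no-headers (name, ready, status, restarts, age).

```shell
# Reads `kubectl get pod --no-headers` output on stdin and prints the names
# of pods whose STATUS column is ErrImagePull or ImagePullBackOff.
# (image_pull_failures is a hypothetical helper name, not a kubectl feature.)
image_pull_failures() {
  awk '$3 == "ErrImagePull" || $3 == "ImagePullBackOff" { print $1 }'
}

# Usage on a live cluster, describing every match (xargs -r is GNU-specific):
#   kubectl get pod --no-headers | image_pull_failures |
#     xargs -r -n 1 kubectl describe pod
```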

Fixing slow Docker image pulls

Configure registry mirrors hosted in China on all nodes

[root@k8s-master ~]# vim /etc/docker/daemon.json

[root@k8s-master ~]# cat /etc/docker/daemon.json

{

"exec-opts":["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"

]

}

[root@k8s-master ~]# systemctl restart docker

[root@k8s-master ~]# docker info

# ...

Registry Mirrors:

https://hub-mirror.c.163.com/

https://docker.mirrors.ustc.edu.cn/

# ...
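A syntax error in daemon.json will stop the Docker daemon from starting, so it may be worth validating the file before running systemctl restart docker. The sketch below assumes python3 is available on the node, which the original setup does not mention; check_daemon_json is a hypothetical helper name.

```shell
# Succeeds (exit status 0) only if the given file parses as JSON.
# Assumption: python3 is installed; json.tool ships with its standard library.
check_daemon_json() {
  python3 -m json.tool "$1" > /dev/null 2>&1
}

# Typical use, restarting Docker only when the file is valid:
#   check_daemon_json /etc/docker/daemon.json && systemctl restart docker
```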

Wait a moment, then check again

View the pod and svc information

[root@k8s-master ~]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-f89759699-vn4gb 1/1 Running 0 2d22h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.1.0.1 <none> 443/TCP 3d2h

service/nginx NodePort 10.1.0.141 <none> 80:31519/TCP 2d22h

The pod nginx-f89759699-vn4gb has changed from ImagePullBackOff to Running.

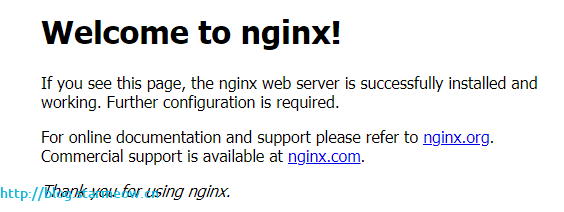

Access nginx

The service is now reachable on port 31519 of any node, for example http://192.168.99.100:31519/, http://192.168.99.201:31519/, or http://192.168.99.202:31519/
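To confirm that every node really answers on the NodePort, the URLs can be generated and probed in a loop. This is a sketch under the addresses used in this walkthrough; nodeport_urls is a hypothetical helper, and the curl loop is shown as a comment because it needs the live cluster.

```shell
# Print one URL per node for a NodePort service.
# Arguments: the node port, followed by one or more node IPs.
nodeport_urls() {
  local port="$1" ip
  shift
  for ip in "$@"; do
    printf 'http://%s:%s/\n' "$ip" "$port"
  done
}

# Example against this cluster (prints the HTTP status code for each node):
#   nodeport_urls 31519 192.168.99.100 192.168.99.201 192.168.99.202 |
#     while read -r url; do
#       curl -s -o /dev/null -m 5 -w "%{http_code} $url\n" "$url"
#     done
```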

View the pod's details

[root@k8s-master ~]# kubectl describe pod nginx-f89759699-vn4gb

Name: nginx-f89759699-vn4gb

Namespace: default

Priority: 0

Node: k8s-node2/192.168.99.202

Start Time: Sat, 23 May 2020 21:44:55 +0800

Labels: app=nginx

pod-template-hash=f89759699

Annotations: <none>

Status: Running

IP: 10.244.3.5

# .....

Warning FailedCreatePodSandBox 107s kubelet, k8s-node2 Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "1953fda080b8d84918c671364c50409e3365a34dd1f983e9b2dc4f3042b96e71" network for pod "nginx-f89759699-vn4gb": networkPlugin cni failed to set up pod "nginx-f89759699-vn4gb_default" network: open /run/flannel/subnet.env: no such file or directory

Normal Pulling 106s kubelet, k8s-node2 Pulling image "nginx"

Normal Pulled 82s kubelet, k8s-node2 Successfully pulled image "nginx"

Normal Created 82s kubelet, k8s-node2 Created container nginx

Normal Started 82s kubelet, k8s-node2 Started container nginx

Check that the cluster nodes are up

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3d2h v1.18.2

k8s-node1 Ready <none> 2d23h v1.18.3

k8s-node2 Ready <none> 2d23h v1.18.3

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 1/1 Running 3 3d2h

coredns-7ff77c879f-x5cmh 1/1 Running 3 3d2h

etcd-k8s-master 1/1 Running 3 3d2h

kube-apiserver-k8s-master 1/1 Running 4 3d2h

kube-controller-manager-k8s-master 1/1 Running 3 3d2h

kube-flannel-ds-amd64-dsf69 1/1 Running 0 30m

kube-flannel-ds-amd64-th9h9 1/1 Running 5 2d23h

kube-flannel-ds-amd64-xv27n 1/1 Running 0 2d23h

kube-proxy-dwpvg 1/1 Running 3 2d23h

kube-proxy-jndns 1/1 Running 3 3d2h

kube-proxy-w88h4 1/1 Running 4 2d23h

kube-scheduler-k8s-master 1/1 Running 4 3d2h

Scaling out

A Deployment can run multiple replicas of its pod, so the nginx Deployment can be scaled out

[root@k8s-master ~]# kubectl scale deployment nginx --replicas=3

deployment.apps/nginx scaled

--replicas=3 sets how many replicas there will be after scaling
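Instead of polling kubectl get pod by hand, the scale command can be followed by kubectl rollout status, which blocks until the new replicas are ready. A minimal sketch, assuming the nginx Deployment from this walkthrough; scale_and_wait is a hypothetical wrapper and the 120s timeout is an arbitrary choice.

```shell
# Scale a Deployment and block until the rollout has finished.
# Arguments: deployment name, desired replica count.
# The 120s timeout is an arbitrary value chosen for illustration.
scale_and_wait() {
  local name="$1" replicas="$2"
  kubectl scale deployment "$name" --replicas="$replicas" &&
    kubectl rollout status "deployment/$name" --timeout=120s
}

# Usage on the master node:
#   scale_and_wait nginx 3
```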

Watch how the pods change after scaling

# Before scaling

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f89759699-vn4gb 1/1 Running 0 2d22h

# After scaling

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f89759699-lm824 1/1 Running 0 14s

nginx-f89759699-nf9f4 0/1 ContainerCreating 0 14s

nginx-f89759699-vn4gb 1/1 Running 0 2d23h

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f89759699-lm824 1/1 Running 0 108s

nginx-f89759699-nf9f4 1/1 Running 0 108s

nginx-f89759699-vn4gb 1/1 Running 0 2d23h

# The NODE column shows which node each nginx pod runs on

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f89759699-lm824 1/1 Running 0 3m17s 10.244.3.6 k8s-node2 <none> <none>

nginx-f89759699-nf9f4 1/1 Running 0 3m17s 10.244.1.2 k8s-node1 <none> <none>

nginx-f89759699-vn4gb 1/1 Running 0 2d23h 10.244.3.5 k8s-node2 <none> <none>

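Three replicas now serve requests instead of one, so capacity is roughly tripled. Users still reach the service on the same port as before.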
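To see at a glance how the replicas are spread across nodes, the wide listing can be summarised per node. This is a sketch, assuming the column order printed by kubectl get pod -o wide --no-headers in this kubectl version (NODE is the seventh column); pods_per_node is a hypothetical helper name.

```shell
# Reads `kubectl get pod -o wide --no-headers` output on stdin and prints
# "<node> <pod count>" for each node, sorted by node name.
# Assumption: NODE is the 7th whitespace-separated column.
pods_per_node() {
  awk '{ count[$7]++ } END { for (node in count) print node, count[node] }' | sort
}

# Usage on the master node:
#   kubectl get pod -o wide --no-headers | pods_per_node
```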

Deploying the Dashboard from the master

Deploy dashboard v1.10.1

Download and edit kubernetes-dashboard.yaml

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

If pulling from k8s.gcr.io is slow, use a copy of the kubernetes-dashboard image found on Docker Hub. Change

containers:

- name: kubernetes-dashboard

image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1

to

containers:

- name: kubernetes-dashboard

image: siriuszg/kubernetes-dashboard-amd64:v1.10.1

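Expose the port externally

By default the Dashboard is reachable only from inside the cluster. Change the Service to type NodePort to expose it externally:

Change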

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

by adding type: NodePort and nodePort: 30001 (by default NodePort values must fall in the range 30000-32767). After the change:

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 30001

selector:

k8s-app: kubernetes-dashboard

Apply kubernetes-dashboard.yaml

[root@k8s-master ~]# kubectl apply -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

error: error parsing kubernetes-dashboard.yaml: error converting YAML to JSON: yaml: line 12: mapping values are not allowed in this context

# In YAML, the colon after a key must be followed by a space before the value. Check the lines you just edited

# After fixing the file, apply it again

[root@k8s-master ~]# kubectl apply -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs unchanged

serviceaccount/kubernetes-dashboard unchanged

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal unchanged

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal unchanged

deployment.apps/kubernetes-dashboard unchanged

service/kubernetes-dashboard created
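The "mapping values are not allowed in this context" error above came from a missing space after a colon. A rough way to spot such lines before applying is a grep heuristic like the one below. It is only a sketch: find_missing_colon_space is a hypothetical helper, the pattern covers simple key:value lines only, and it is no substitute for a real YAML parser. With kubectl 1.18 or later, kubectl apply --dry-run=client -f kubernetes-dashboard.yaml should also surface the parse error without creating anything.

```shell
# Print (with line numbers) lines where a key's colon is immediately
# followed by a non-space character, e.g. "type:NodePort".
# Heuristic only: handles plain "key:value" and "- key:value" lines.
find_missing_colon_space() {
  grep -nE '^[[:space:]]*(-[[:space:]]+)?[A-Za-z0-9_.-]+:[^[:space:]]' "$1"
}

# Usage:
#   find_missing_colon_space kubernetes-dashboard.yaml
```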

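View the pods in the kube-system namespace

By default the Dashboard is placed in the kube-system namespace.

To get it running faster, run docker pull siriuszg/kubernetes-dashboard-amd64:v1.10.1 on every node beforehand. kubectl apply will then use the local image instead of pulling it again, provided the image pull policy is IfNotPresent (the default for an image with a fixed tag such as v1.10.1).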

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 1/1 Running 3 3d4h

coredns-7ff77c879f-x5cmh 1/1 Running 3 3d4h

etcd-k8s-master 1/1 Running 3 3d4h

kube-apiserver-k8s-master 1/1 Running 4 3d4h

kube-controller-manager-k8s-master 1/1 Running 3 3d4h

kube-flannel-ds-amd64-dsf69 1/1 Running 0 112m

kube-flannel-ds-amd64-th9h9 1/1 Running 5 3d

kube-flannel-ds-amd64-xv27n 1/1 Running 0 3d

kube-proxy-dwpvg 1/1 Running 3 3d

kube-proxy-jndns 1/1 Running 3 3d4h

kube-proxy-w88h4 1/1 Running 4 3d

kube-scheduler-k8s-master 1/1 Running 4 3d4h

kubernetes-dashboard-694997dccd-htfqx 0/1 ContainerCreating 0 10m

# After a while it is running

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-5dl9p 1/1 Running 3 3d4h

coredns-7ff77c879f-x5cmh 1/1 Running 3 3d4h

etcd-k8s-master 1/1 Running 3 3d4h

kube-apiserver-k8s-master 1/1 Running 4 3d4h

kube-controller-manager-k8s-master 1/1 Running 3 3d4h

kube-flannel-ds-amd64-dsf69 1/1 Running 0 114m

kube-flannel-ds-amd64-th9h9 1/1 Running 5 3d

kube-flannel-ds-amd64-xv27n 1/1 Running 0 3d

kube-proxy-dwpvg 1/1 Running 3 3d

kube-proxy-jndns 1/1 Running 3 3d4h

kube-proxy-w88h4 1/1 Running 4 3d

kube-scheduler-k8s-master 1/1 Running 4 3d4h

kubernetes-dashboard-694997dccd-htfqx 1/1 Running 0 12m

# See which node it is running on

[root@k8s-master ~]# kubectl get pod -n kube-system -o wide | grep kubernetes-dashboard

kubernetes-dashboard-694997dccd-htfqx 1/1 Running 0 15m 10.244.1.3 k8s-node1 <none> <none>

View the exposed port

[root@k8s-master ~]# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-7ff77c879f-5dl9p 1/1 Running 3 3d4h

pod/coredns-7ff77c879f-x5cmh 1/1 Running 3 3d4h

pod/etcd-k8s-master 1/1 Running 3 3d4h

pod/kube-apiserver-k8s-master 1/1 Running 4 3d4h

pod/kube-controller-manager-k8s-master 1/1 Running 3 3d4h

pod/kube-flannel-ds-amd64-dsf69 1/1 Running 0 119m

pod/kube-flannel-ds-amd64-th9h9 1/1 Running 5 3d

pod/kube-flannel-ds-amd64-xv27n 1/1 Running 0 3d

pod/kube-proxy-dwpvg 1/1 Running 3 3d

pod/kube-proxy-jndns 1/1 Running 3 3d4h

pod/kube-proxy-w88h4 1/1 Running 4 3d

pod/kube-scheduler-k8s-master 1/1 Running 4 3d4h

pod/kubernetes-dashboard-694997dccd-htfqx 1/1 Running 0 18m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.1.0.10 <none> 53/UDP,53/TCP,9153/TCP 3d4h

service/kubernetes-dashboard NodePort 10.1.116.46 <none> 443:30001/TCP 8m31s

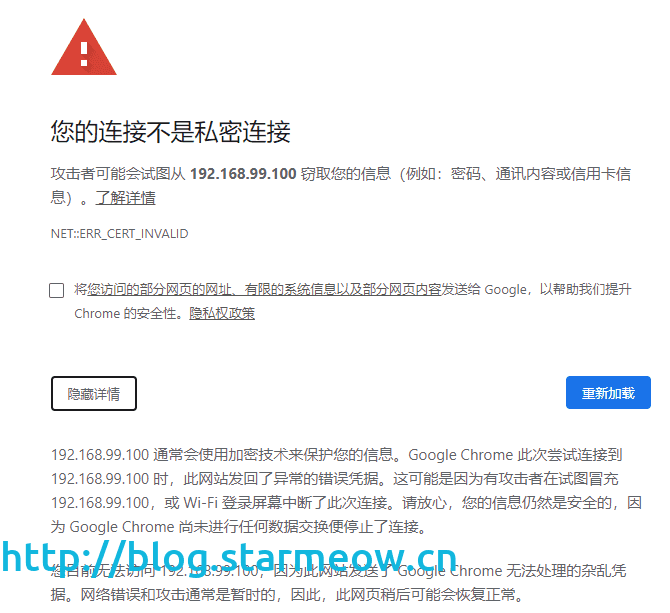

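The exposed port is 30001, and the page must be accessed over https: https://192.168.99.100:30001/

If the certificate warning page in Chrome has no button to proceed, type thisisunsafe on the keyboard while the page is focused and the browser will continue automatically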

https://192.168.99.100:30001/#!/login

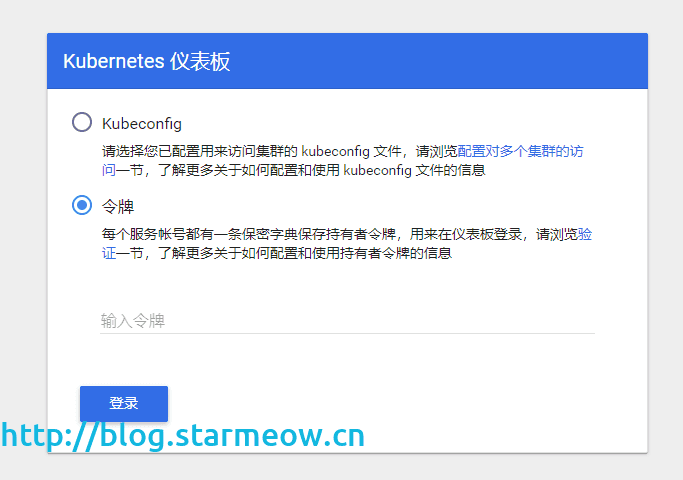

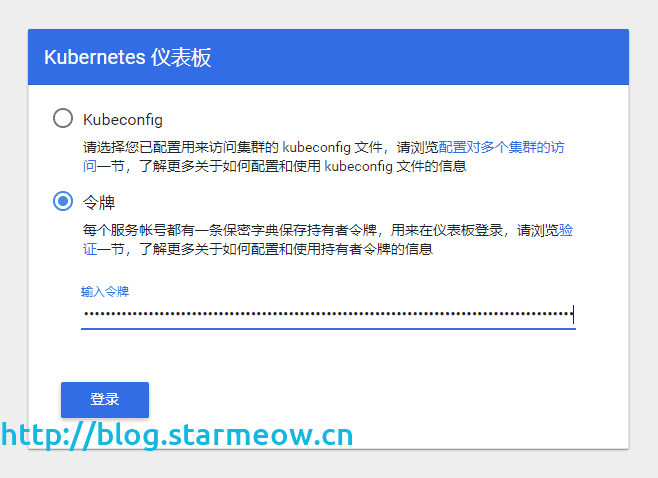

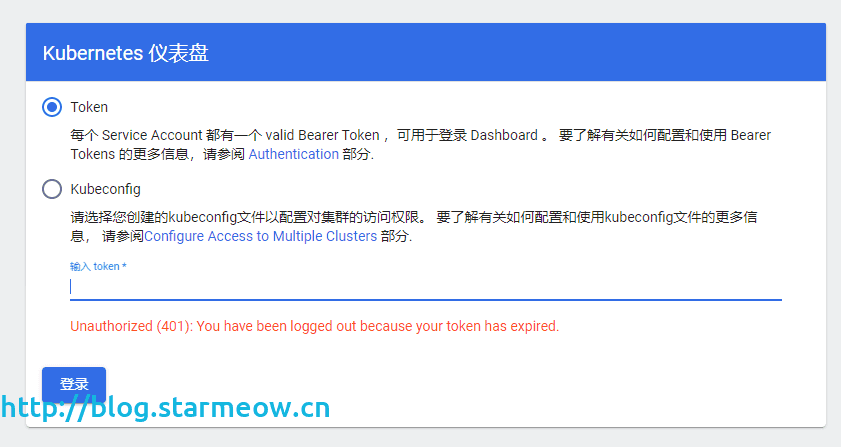

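Create a user token to log in

Choose the Token login option. Create a serviceaccount named dashboard-admin, bind it to the cluster-admin role, and use its token to access the UI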

[root@k8s-master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@k8s-master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@k8s-master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-n25df

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 074b8084-cc73-4a30-88e2-a59ee518fee1

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlVHZDZsTGNLbTM3OEUzYzk3Q3k1dTFJVnFEZFJ6eG90cHZZMjN6RUdLVnMifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbjI1ZGYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMDc0YjgwODQtY2M3My00YTMwLTg4ZTItYTU5ZWU1MThmZWUxIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.FNoMLtHKhmcMwFdEE2PcvBWRV4nei5T8wPwvJWSH_A_H09OZp-FIrwxotswzZbVE90-h76zXLbAghNp-kzPYWvdXYWlMUraFYnNRrRQMsrzcaHe_Ex5KiDwMmavxa0fd_1x0RQLNsIDL20gUR8LVfelKZ2Cdzy0v_xHAQjui4bW2HX6GtSmfVTcBO8PHrCwgOGNw3uBKjNxZcmhMoOc_m4Zj2lerUjpZFpmyhoGbmCytPgGywxPmWK56pK-SsDYLFFX-kSSpeDyv2GvH5MXK51aONU5P3n-jo4OngUvTjFT-FTGmSZ_oKN_YMTlsNlUYjrVHhDWmeqdYHkqGhSgV5Q

ca.crt: 1025 bytes

namespace: 11 bytes

Then paste the token into the input box
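The describe output prints more than the token itself. To get just the token for pasting, the Secret can be read with a jsonpath query and base64-decoded. This is a sketch: sa_token is a hypothetical helper, and it assumes a cluster such as the v1.18 one here, where a <serviceaccount>-token-xxxxx Secret is created automatically for each service account.

```shell
# Print only the bearer token stored in a service account's token Secret.
# Arguments: namespace, service account name.
# Assumption: the cluster auto-creates "<account>-token-xxxxx" Secrets.
sa_token() {
  local namespace="$1" account="$2" secret
  secret="$(kubectl -n "$namespace" get secret |
    awk -v p="^${account}-token-" '$1 ~ p { print $1; exit }')"
  [ -n "$secret" ] || return 1
  # Values under .data in a Secret are base64-encoded, so decode before printing.
  kubectl -n "$namespace" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}

# Usage on the master node:
#   sa_token kube-system dashboard-admin
```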

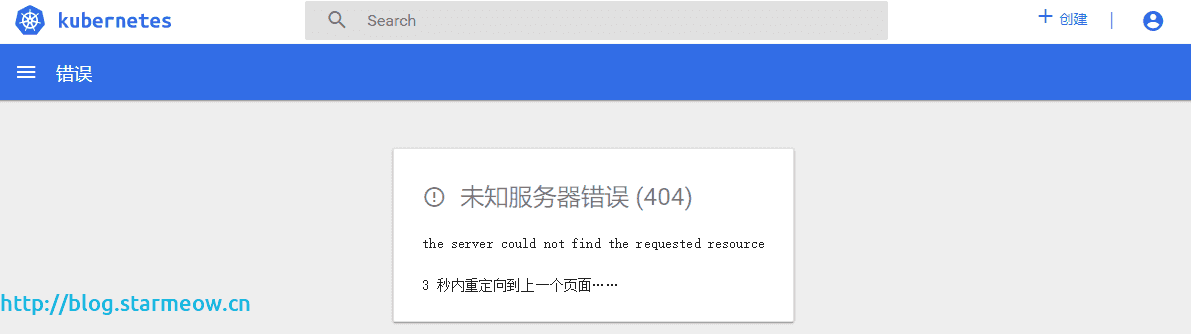

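Handling the "Unknown Server Error (404)" problem

The Dashboard shows

Unknown Server Error (404)

the server could not find the requested resource

Redirecting to previous state in 3 seconds...

Delete the resources created from the old kubernetes-dashboard.yaml

# Delete using the yaml file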

[root@k8s-master ~]# kubectl delete -f kubernetes-dashboard.yaml

secret "kubernetes-dashboard-certs" deleted

serviceaccount "kubernetes-dashboard" deleted

role.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" deleted

rolebinding.rbac.authorization.k8s.io "kubernetes-dashboard-minimal" deleted

deployment.apps "kubernetes-dashboard" deleted

service "kubernetes-dashboard" deleted

Deploy dashboard v2.0.1

See https://github.com/kubernetes/dashboard/releases to install a newer version

Download the new recommended.yaml

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.1/aio/deploy/recommended.yaml

Edit recommended.yaml to expose the port

Add type: NodePort and nodePort: 30001

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 30001

selector:

k8s-app: kubernetes-dashboard

Apply recommended.yaml

[root@k8s-master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

The output shows that the dashboard runs in the kubernetes-dashboard namespace, so pass -n kubernetes-dashboard when querying it

Check the logs when kubernetes-dashboard fails to start

# Check the status

[root@k8s-master ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6b4884c9d5-z4cqb 1/1 Running 7 15m

kubernetes-dashboard-7bfbb48676-pgjcg 0/1 CrashLoopBackOff 7 15m

# It stays in CrashLoopBackOff

# View the details

[root@k8s-master ~]# kubectl describe pod kubernetes-dashboard-7bfbb48676-pgjcg -n kubernetes-dashboard

Name: kubernetes-dashboard-7bfbb48676-pgjcg

Namespace: kubernetes-dashboard

Priority: 0

Node: k8s-node1/192.168.99.201

Start Time: Wed, 27 May 2020 20:40:51 +0800

Labels: k8s-app=kubernetes-dashboard

pod-template-hash=7bfbb48676

# ........

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled <unknown> default-scheduler Successfully assigned kubernetes-dashboar

Normal Pulling 2m26s (x4 over 3m20s) kubelet, k8s-node1 Pulling image "kubernetesui/dashboard:v2.

Normal Pulled 2m24s (x4 over 3m17s) kubelet, k8s-node1 Successfully pulled image "kubernetesui/d

Normal Created 2m24s (x4 over 3m17s) kubelet, k8s-node1 Created container kubernetes-dashboard

Normal Started 2m24s (x4 over 3m17s) kubelet, k8s-node1 Started container kubernetes-dashboard

Warning BackOff 115s (x9 over 3m11s) kubelet, k8s-node1 Back-off restarting failed container

# The pod was scheduled onto k8s-node1

# View the logs

[root@k8s-master ~]# kubectl logs kubernetes-dashboard-7bfbb48676-pgjcg -n kubernetes-dashboard

2020/05/27 12:52:00 Starting overwatch

2020/05/27 12:52:00 Using namespace: kubernetes-dashboard

2020/05/27 12:52:00 Using in-cluster config to connect to apiserver

2020/05/27 12:52:00 Using secret token for csrf signing

2020/05/27 12:52:00 Initializing csrf token from kubernetes-dashboard-csrf secret

panic: Get https://10.1.0.1:443/api/v1/namespaces/kubernetes-dashboard/secrets/kubernetes-dashboard-csrf: dial tcp 10.1.0.1:443: connect: no route to host

goroutine 1 [running]:

github.com/kubernetes/dashboard/src/app/backend/client/csrf.(*csrfTokenManager).init(0xc0004ca880)

/home/travis/build/kubernetes/dashboard/src/app/backend/client/csrf/manager.go:41 +0x446

github.com/kubernetes/dashboard/src/app/backend/client/csrf.NewCsrfTokenManager(...)

/home/travis/build/kubernetes/dashboard/src/app/backend/client/csrf/manager.go:66

github.com/kubernetes/dashboard/src/app/backend/client.(*clientManager).initCSRFKey(0xc000462100)

/home/travis/build/kubernetes/dashboard/src/app/backend/client/manager.go:501 +0xc6

github.com/kubernetes/dashboard/src/app/backend/client.(*clientManager).init(0xc000462100)

/home/travis/build/kubernetes/dashboard/src/app/backend/client/manager.go:469 +0x47

github.com/kubernetes/dashboard/src/app/backend/client.NewClientManager(...)

/home/travis/build/kubernetes/dashboard/src/app/backend/client/manager.go:550

main.main()

/home/travis/build/kubernetes/dashboard/src/app/backend/dashboard.go:105 +0x20d

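Handling the kubernetes-dashboard-csrf error in the logs

This follows the solution in https://www.jianshu.com/p/e359d3fe238f

The log shows that the pod on k8s-node1 could not reach the apiserver at 10.1.0.1:443 (no route to host). Pods are not scheduled onto the master node, and the apiserver deployed by kubeadm uses Node and RBAC authorization with insecure-port disabled. My guess is that this is why the connection to the apiserver fails. Manually setting the --apiserver-host argument did not help either.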
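Since the panic is a plain TCP failure, one way to narrow it down is to probe the apiserver's ClusterIP directly from the affected node. The sketch below only tests whether a TCP connection can be opened and does not identify the cause. can_reach is a hypothetical helper; it assumes bash with /dev/tcp support and the coreutils timeout command, and the 3-second limit is arbitrary.

```shell
# Succeeds if a TCP connection to host:port can be opened within 3 seconds.
# Arguments: host, port.
# Assumptions: bash built with /dev/tcp support, coreutils `timeout` present.
can_reach() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2> /dev/null
}

# Usage on k8s-node1, probing the apiserver ClusterIP from the log above:
#   can_reach 10.1.0.1 443 && echo reachable || echo unreachable
```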

Edit recommended.yaml again

[root@k8s-master ~]# vim recommended.yaml

Add nodeName: k8s-master to both Deployments

# ...

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

nodeName: k8s-master

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.0.1

# ...

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

nodeName: k8s-master

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.4

# ...

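The configuration above pins kubernetes-dashboard and dashboard-metrics-scraper to a specific node. Here they are placed on the master node, which works around the connectivity problem described above. The port exposure configured earlier stays the same.

Re-apply recommended.yaml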

[root@k8s-master ~]# kubectl apply -f recommended.yaml

The pod names have changed as well: editing the pod template makes the Deployment create a new ReplicaSet, and with it new pods.

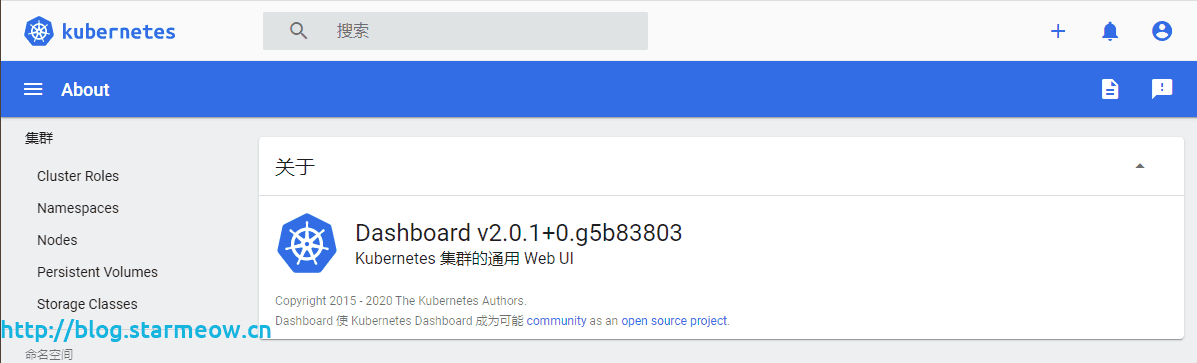

Check that kubernetes-dashboard is now running

[root@k8s-master ~]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-6fb66f87b-gdfbp 1/1 Running 0 3m47s

kubernetes-dashboard-fb89c4d57-sz86q 1/1 Running 0 3m47s

Open https://192.168.99.100:30001/, enter the token generated earlier in the Token field, and click Sign in

The page now loads normally