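Big Data Processing Example: Amazon Product Ratings & Reviews (Part 2)

The previous post analyzed the goals of this case study in detail. This post covers the overall architecture of the implementation and, within it, the real-time preprocessing part. For setting up the real-time processing environment, see here.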

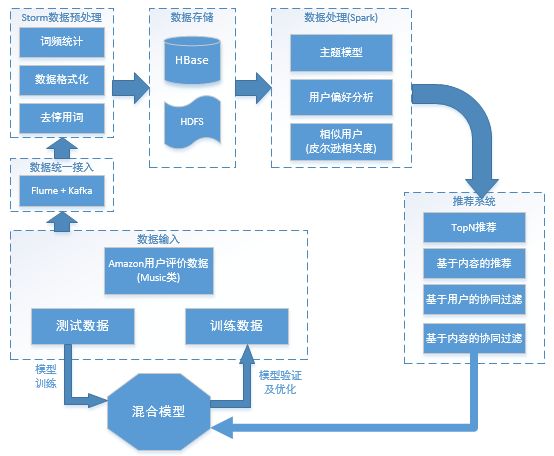

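Overall architecture

Real-time preprocessing

1. Preparation

Download the review data file for the Music category, Musical_Instruments_5.json, from Stanford's open Amazon dataset. Each line of the file is one record; a sample record looks like this: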

{
  "reviewerID": "A2IBPI20UZIR0U",
  "asin": "1384719342",
  "reviewerName": "Jake",
  "helpful": [0, 0],
  "reviewText": "Not much to write about here, but it does exactly what it's supposed to. filters out the pop sounds. now my recordings are much more crisp. it is one of the lowest prices pop filters on amazon so might as well buy it, they honestly work the same despite their pricing,",
  "overall": 5.0,
  "summary": "good",
  "unixReviewTime": 1393545600,
  "reviewTime": "02 28, 2014"
}

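The fields we care about and their meanings are:

| Field | Meaning |
|---|---|
| reviewerID | ID of the reviewer (user) |
| asin | Product ID |
| reviewText | Text of the user's review of the product |
| overall | The user's rating of the product |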

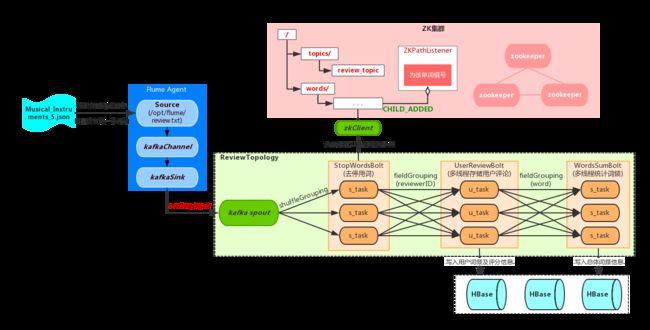

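2. Overall flow

Musical_Instruments_5.json is an aggregated dataset. To simulate real-time reviews arriving from the web, you can write a small application that sends one review (one line of the file) every three seconds and hands it to Storm's ReviewTopology for processing. The later offline processing has to convert each review's word sequence into a term-frequency vector, so it needs the number of distinct words across all reviews (the vector dimension) and a number for each word (the column that holds that word's frequency). The ZK cluster that is already set up is used to provide both.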
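
To make the role of the word numbering concrete, here is a minimal sketch of how a word sequence could be turned into a term-frequency vector once every word has a column number. The class name, the method name, and the small vocabulary are hypothetical; the sketch also assumes the numbers are 0-based and contiguous, which the article does not state.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: turns a whitespace-separated word sequence into a term-frequency vector. */
final class TermFrequencySketch {

    /**
     * @param words     review text after stop-word removal, words separated by whitespace
     * @param wordIndex word -> column number (assumed 0-based and contiguous);
     *                  its size is the vector dimension
     */
    static int[] toVector(String words, Map<String, Integer> wordIndex) {
        int[] vector = new int[wordIndex.size()];
        for (String word : words.trim().split("\\s+")) {
            Integer column = wordIndex.get(word);
            if (column != null) { // words without a number are skipped
                vector[column]++;
            }
        }
        return vector;
    }

    public static void main(String[] args) {
        // Hypothetical vocabulary; in the article the numbering comes from ZooKeeper.
        Map<String, Integer> wordIndex = new LinkedHashMap<>();
        wordIndex.put("pop", 0);
        wordIndex.put("filter", 1);
        wordIndex.put("crisp", 2);
        wordIndex.put("cheap", 3);

        int[] vector = toVector("pop filter pop crisp", wordIndex);
        System.out.println(Arrays.toString(vector)); // [2, 1, 1, 0]
    }
}
```

The vector length equals the vocabulary size, which is why the total number of distinct words has to be known before the offline step can run.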

3. ReviewTopology implementation

(1) Definition of ReviewTopology

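// Package names assume Storm 1.x with the storm-kafka module;
// pre-1.0 releases use backtype.storm.* and storm.kafka.* instead.
import java.util.Arrays;

import org.apache.storm.Config;
import org.apache.storm.StormSubmitter;
import org.apache.storm.kafka.BrokerHosts;
import org.apache.storm.kafka.KafkaSpout;
import org.apache.storm.kafka.SpoutConfig;
import org.apache.storm.kafka.StringScheme;
import org.apache.storm.kafka.ZkHosts;
import org.apache.storm.spout.SchemeAsMultiScheme;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.tuple.Fields;

public class ReviewTopology {
    private static final String zks = "zk01:2181,zk02:2181,zk03:2181";
    private static final String topic = "review-topic";
    private static final String zkRoot = "/topics";
    private static final String id = "musicReview";

    public static void main(String[] args) throws Exception {
        SpoutConfig spoutConf = getKafkaSpoutConf();
        TopologyBuilder builder = new TopologyBuilder();
        // Kafka spout that reads the raw review lines from "review-topic"
        builder.setSpout("kafkaSpout", new KafkaSpout(spoutConf), 1);
        builder.setBolt("stopWordsFilter", new StopWordsFilterBolt()).shuffleGrouping("kafkaSpout");
        // The bolts emit on named streams, so the stream ID must be given when subscribing
        builder.setBolt("userReview", new UserReviewBolt()).fieldsGrouping("stopWordsFilter", "user-review", new Fields("user"));
        builder.setBolt("wordsSum", new WordsSumBolt()).fieldsGrouping("userReview", "words-review", new Fields("word"));
        // Submit to the cluster; the class name is used as the topology name here
        StormSubmitter.submitTopology(ReviewTopology.class.getSimpleName(), new Config(), builder.createTopology());
    }

    private static SpoutConfig getKafkaSpoutConf() {
        BrokerHosts brokerHosts = new ZkHosts(zks);
        SpoutConfig spoutConf = new SpoutConfig(brokerHosts, topic, zkRoot, id);
        spoutConf.scheme = new SchemeAsMultiScheme(new StringScheme());
        spoutConf.zkServers = Arrays.asList("zk01", "zk02", "zk03");
        spoutConf.zkPort = 2181;
        return spoutConf;
    }
}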
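
The topology uses fieldsGrouping for the last two bolts. The property it relies on is that tuples with the same value in the grouping field always reach the same bolt task, which is what lets a counting bolt keep a per-word total in local memory. The sketch below illustrates that property with a plain hash-and-modulo rule; it is a conceptual stand-in with hypothetical names, not Storm's actual routing code.

```java
import java.util.Arrays;
import java.util.List;

/** Conceptual sketch of fields grouping: equal field values map to the same task. */
final class FieldsGroupingSketch {

    /** Picks a task index in [0, numTasks) from the values of the grouping fields. */
    static int chooseTask(List<Object> groupingValues, int numTasks) {
        // floorMod keeps the result non-negative even for negative hash codes
        return Math.floorMod(groupingValues.hashCode(), numTasks);
    }

    public static void main(String[] args) {
        int numTasks = 4; // hypothetical parallelism of the receiving bolt
        for (String word : new String[] {"guitar", "strings", "guitar"}) {
            int task = chooseTask(Arrays.<Object>asList(word), numTasks);
            // Both "guitar" tuples print the same task number
            System.out.println(word + " -> task " + task);
        }
    }
}
```

In the topology above no parallelism hint is given for the bolts, so each runs as a single task; the grouping starts to matter once the parallelism is raised.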

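(2) First-level bolt, StopWordsFilterBolt: reads the raw review text, removes stop words, and registers new words in ZK. It also passes (reviewer, item, rating, review text with stop words removed) downstream to the next bolt.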

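// Package names assume Storm 1.x (org.apache.storm) and Apache Curator; adjust them to the versions you deploy.
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

import com.google.gson.JsonObject;
import com.google.gson.JsonParser;
import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.curator.framework.CuratorFramework;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;
import org.apache.zookeeper.KeeperException;

public class StopWordsFilterBolt extends BaseRichBolt {
    private static final Log LOG = LogFactory.getLog(StopWordsFilterBolt.class);
    // ZKUtil is this project's own helper that hands out a shared Curator client
    private static CuratorFramework zkClient = ZKUtil.getInstance().getZkclient();
    private static final String stopWordsFile = "stop-words.txt";
    private static final String ZK_WORDS_ROOT_PATH = "/words/";

    private OutputCollector collector;
    private Set<String> stopWords;

    /**
     * Loads the stop words (one per line) from the configuration file into memory.
     * @throws IOException
     */
    private void initStopWords() throws IOException {
        stopWords = new HashSet<String>();
        // Read from the classpath so that the file is also found inside the topology jar
        InputStream in = getClass().getResourceAsStream("/resources/" + stopWordsFile);
        if (in == null) {
            LOG.warn("stop-words file not found: " + stopWordsFile);
            return;
        }
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String lineTxt;
            while ((lineTxt = reader.readLine()) != null) {
                stopWords.add(lineTxt.trim());
            }
        }
    }

    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        this.collector = outputCollector;
        try {
            initStopWords();
        } catch (IOException e) {
            LOG.error(e.getLocalizedMessage());
        }
    }

    public void execute(Tuple tuple) {
        String originMessage = tuple.getString(0);
        JsonObject jsonMessage = new JsonParser().parse(originMessage).getAsJsonObject();
        String user = jsonMessage.get("reviewerID").getAsString();       // reviewer
        String item = jsonMessage.get("asin").getAsString();             // reviewed product
        String rating = jsonMessage.get("overall").getAsString();        // rating
        String reviewText = jsonMessage.get("reviewText").getAsString(); // review text
        try {
            String words = handleOneReviewText(reviewText);
            if (!words.isEmpty()) {
                // Emit (user, item, rating, words) on the "user-review" stream, anchored to the input tuple
                collector.emit("user-review", tuple, new Values(user, item, rating, words));
            }
            // Ack even when nothing is emitted; an unacked tuple times out and is replayed
            collector.ack(tuple);
        } catch (Exception e) {
            LOG.error(String.format("handle reviewText [%s] fail: %s", reviewText, e.getLocalizedMessage()));
            collector.fail(tuple);
        }
    }

    /**
     * Declares the output stream "user-review", which carries only the fields we care about (user, item, rating, words).
     * @param outputFieldsDeclarer
     */
    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        outputFieldsDeclarer.declareStream("user-review", new Fields("user", "item", "rating", "words"));
    }

    /**
     * Creates a child node under the ZK words root for every word that is not a stop word;
     * ZKPathListener then assigns that node its number.
     * @param reviewText raw review text
     * @return the review text with stop words removed, words separated by single spaces
     * @throws Exception
     */
    private String handleOneReviewText(String reviewText) throws Exception {
        StringBuilder kept = new StringBuilder();
        for (String word : reviewText.trim().split("\\s+")) {
            if (word.isEmpty() || stopWords.contains(word)) {
                continue; // drop stop words
            }
            String childPath = ZK_WORDS_ROOT_PATH + word;
            if (zkClient.checkExists().forPath(childPath) == null) {
                try {
                    zkClient.create().creatingParentsIfNeeded().forPath(childPath); // register the new word
                } catch (KeeperException.NodeExistsException e) {
                    // another task registered the same word between the check and the create
                }
            }
            kept.append(word).append(' ');
        }
        return kept.toString().trim();
    }
}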
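
The bolt splits the review on whitespace only, so a token such as "here," (with its comma) would not match the stop word "here", and a token containing "/" cannot be used as a ZooKeeper node name. One possible way to handle this, not part of the original code, is to normalize each token before the stop-word check. The sketch below uses hypothetical names and a deliberately simple rule: lower-case the token and keep only letters, digits, and apostrophes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Sketch: normalizes tokens before stop-word filtering. */
final class ReviewTokenizerSketch {

    static List<String> tokenize(String reviewText, Set<String> stopWords) {
        List<String> kept = new ArrayList<>();
        for (String raw : reviewText.split("\\s+")) {
            // Lower-case, then drop everything except a-z, 0-9 and apostrophes
            String word = raw.toLowerCase(Locale.ROOT).replaceAll("[^a-z0-9']", "");
            if (!word.isEmpty() && !stopWords.contains(word)) {
                kept.add(word);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Hypothetical stop-word list, lower-case to match the normalized tokens
        Set<String> stopWords = new HashSet<>(Arrays.asList(
                "not", "much", "to", "about", "here", "but", "it", "does", "what", "it's"));
        String text = "Not much to write about here, but it does exactly what it's supposed to.";
        System.out.println(tokenize(text, stopWords)); // [write, exactly, supposed]
    }
}
```

With this rule the stop-word file would need to contain lower-case entries, and non-English reviews would need a different character filter.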

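(3) Second-level bolt, UserReviewBolt: running in parallel, it writes each user's review information to HBase and sends every word of the text to the next bolt, which does the counting.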

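// Package names assume Storm 1.x (org.apache.storm) and the HBase 1.x client; adjust them to the versions you deploy.
import java.io.IOException;
import java.util.Map;

import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;
import org.springframework.beans.factory.annotation.Autowired;

public class UserReviewBolt extends BaseRichBolt {
    private static final Log LOG = LogFactory.getLog(UserReviewBolt.class);
    private static final String USER_REVIEW_TABLE = "user-review-table";
    private static final String COLUMN_FAMILY_REVIEW_INFO = "reviewInfo";
    private static final String COLUMN_ITEM = "item";
    private static final String COLUMN_RATING = "rating";
    private static final String COLUMN_WORDS = "words";

    // HBaseOperation is this project's own helper. Storm creates bolt instances itself
    // (new + serialization), so Spring only fills this field if the bolt is wired to an
    // application context, for example inside prepare(); that wiring is not shown here.
    @Autowired
    private HBaseOperation hBaseOperation;
    private OutputCollector collector;

    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        this.collector = outputCollector;
        try {
            // Create the table on first use
            if (!hBaseOperation.isTableExist(USER_REVIEW_TABLE)) {
                String[] columnFamilies = {COLUMN_FAMILY_REVIEW_INFO};
                hBaseOperation.createTable(USER_REVIEW_TABLE, columnFamilies);
            }
        } catch (IOException e) {
            LOG.error(e.getLocalizedMessage());
        }
    }

    public void execute(Tuple tuple) {
        String user = tuple.getStringByField("user");
        String item = tuple.getStringByField("item");
        // The upstream bolt emits the rating as a string (it reads it with getAsString()), so parse it here
        Float rating = Float.valueOf(tuple.getStringByField("rating"));
        String words = tuple.getStringByField("words");
        insertRow(user, item, rating, words);
        // Emit every word on the "words-review" stream, anchored to the input tuple
        String[] wordsArray = words.trim().split("\\s+");
        for (String word : wordsArray) {
            collector.emit("words-review", tuple, new Values(word));
        }
        collector.ack(tuple);
    }

    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        outputFieldsDeclarer.declareStream("words-review", new Fields("word"));
    }

    public void insertRow(String user, String item, Float rating, String words) {
        // The row key is the user ID, so a later review by the same user is written to the same row
        Put put = new Put(Bytes.toBytes(user));
        put.addColumn(Bytes.toBytes(COLUMN_FAMILY_REVIEW_INFO), Bytes.toBytes(COLUMN_RATING), Bytes.toBytes(rating));
        put.addColumn(Bytes.toBytes(COLUMN_FAMILY_REVIEW_INFO), Bytes.toBytes(COLUMN_ITEM), Bytes.toBytes(item));
        put.addColumn(Bytes.toBytes(COLUMN_FAMILY_REVIEW_INFO), Bytes.toBytes(COLUMN_WORDS), Bytes.toBytes(words));
        try {
            hBaseOperation.insertRow(USER_REVIEW_TABLE, put);
        } catch (IOException e) {
            LOG.error(e.getLocalizedMessage());
        }
    }
}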
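
Because the row key in the bolt above is the user ID alone, a second review by the same user is written to the same row and the same columns, where HBase stores it as a newer version of those cells. If one row per (user, item) pair is preferred, a composite row key is one option. The sketch below only shows how such a key could be built; the class name, the method name, and the "_" separator are assumptions, not something the original code uses.

```java
import java.nio.charset.StandardCharsets;

/** Sketch: composite row key so that each (user, item) pair gets its own row. */
final class RowKeySketch {

    /** Joins user ID and item ID with a separator that appears in neither sample ID. */
    static byte[] rowKey(String user, String item) {
        return (user + "_" + item).getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // IDs taken from the sample record shown earlier
        byte[] key = rowKey("A2IBPI20UZIR0U", "1384719342");
        System.out.println(new String(key, StandardCharsets.UTF_8)); // A2IBPI20UZIR0U_1384719342
    }
}
```

Keeping the user ID as the prefix means all reviews of one user still sort next to each other, so they can be read with a prefix scan.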

(4) Third-level bolt, WordsSumBolt: counts word frequencies.

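// Package names assume Storm 1.x (org.apache.storm) and the HBase 1.x client; adjust them to the versions you deploy.
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.tuple.Tuple;
import org.springframework.beans.factory.annotation.Autowired;

public class WordsSumBolt extends BaseRichBolt {
    private static final Log LOG = LogFactory.getLog(WordsSumBolt.class);
    private static final String WORDS_SUM_TABLE = "words-sum";
    private static final String COLUMN_FAMILY_SUM_INFO = "countInfo";
    private static final String COLUMN_COUNT = "count";

    private OutputCollector collector;
    // HBaseOperation is this project's own helper. Storm creates bolt instances itself,
    // so Spring only fills this field if the bolt is wired to an application context.
    @Autowired
    private HBaseOperation hBaseOperation;
    // In-memory running total per word, local to this task
    private Map<String, Integer> wordsCount;

    public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
        this.collector = outputCollector;
        wordsCount = new HashMap<String, Integer>();
        try {
            // Create the table on first use
            if (!hBaseOperation.isTableExist(WORDS_SUM_TABLE)) {
                String[] columnFamilies = {COLUMN_FAMILY_SUM_INFO};
                hBaseOperation.createTable(WORDS_SUM_TABLE, columnFamilies);
            }
        } catch (IOException e) {
            LOG.error(e.getLocalizedMessage());
        }
    }

    public void execute(Tuple tuple) {
        String word = tuple.getString(0);
        // Increment the running total (starts at 1 for a word seen for the first time)
        Integer count = wordsCount.merge(word, 1, Integer::sum);
        Put put = new Put(Bytes.toBytes(word));
        put.addColumn(Bytes.toBytes(COLUMN_FAMILY_SUM_INFO), Bytes.toBytes(COLUMN_COUNT), Bytes.toBytes(count));
        try {
            hBaseOperation.insertRow(WORDS_SUM_TABLE, put);
        } catch (IOException e) {
            LOG.error(e.getLocalizedMessage());
        }
        // The upstream bolt anchors its tuples, so they must be acked here
        collector.ack(tuple);
    }

    public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
        // Last bolt in the topology: no output stream
    }
}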
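
One consequence of keeping the totals only in the bolt's memory is that the map starts empty whenever the worker restarts, so the next write for a word would replace the stored total with 1. A possible remedy, sketched below with hypothetical names, is to read the stored total the first time a word is seen after a start. A plain Map stands in for the HBase table here, so the sketch shows only the counting logic, not the HBase calls.

```java
import java.util.HashMap;
import java.util.Map;

/** Sketch: a counter that seeds its in-memory totals from a persistent store. */
final class RestartSafeCounterSketch {
    private final Map<String, Integer> store;                    // stand-in for the persistent table
    private final Map<String, Integer> cache = new HashMap<>();  // in-memory totals of this task

    RestartSafeCounterSketch(Map<String, Integer> store) {
        this.store = store;
    }

    /** Increments the total for the word and writes the new total back to the store. */
    int increment(String word) {
        Integer count = cache.get(word);
        if (count == null) {
            // First sight of this word since the (re)start: continue from the stored total
            count = store.getOrDefault(word, 0);
        }
        count++;
        cache.put(word, count);
        store.put(word, count);
        return count;
    }

    public static void main(String[] args) {
        Map<String, Integer> store = new HashMap<>();
        store.put("guitar", 5); // total written before a simulated restart
        RestartSafeCounterSketch counter = new RestartSafeCounterSketch(store);
        System.out.println(counter.increment("guitar"));  // 6
        System.out.println(counter.increment("strings")); // 1
    }
}
```

This stays correct only as long as a single task owns each word, which is the guarantee the fields grouping on "word" provides.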