Operations Notes

A summary of documentation notes collected during operations work

- 一、docker

- docker安装方法

- 第一种

- 第二种

- 第三种

- 卸载docker

- 1. node_exporter

- 2. grafana

- 2.1 grafana变量级联问题

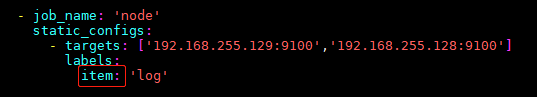

- 2.1.1 Prometheus配置

- 2.1.2 设置变量item取item标签的值

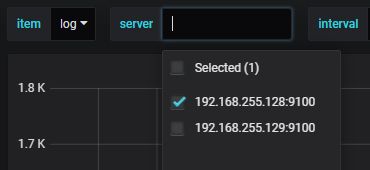

- 2.1.3 设置变量server提取item标签下的instance

- 2.1.4 结果

- 3. blackbox_exporter

- 4. cadvisor

- 5. alertmanager

- 6. prometheus

- 6.1 docker中运行prometheus server

- 6.2 热更新Prometheus配置

- 6.2.1 非Docker运行启动方式

- 6.2.1 Docker方式启动

- 6.3 Prometheus Pushgateway

- 6.4 API查询

- 7. docker-ce修改镜像存储位置

- 8. 让普通用户操作docker

- 9. 卸载旧docker

- 10. Docker Compose入门示例

- 11、nginx

- 二、yum download only

- 三、keepalived主备模式实例

- 3.1 实例环境

- 3.2 安装keepalived

- 3.3 安装web应用

- 3.2 配置keepalived

- 四、Supervisor

- 4.1 root有网安装supervisor

- 4.2 root离线安装

- 4.3 创建配置文件

- 4.4 管理命令

- 4.5 在virtualenv python 下安装supervisor并运行

- 4.6 supervisor管理Nginx示例

- 五、授权非root用户启动1024以下端口的应用服务

- 六、Linux ssh免密登录

- 6.1 root用户之间

- 6.2 普通用户之间

- 七、ELK

- 7.1 elasticsearch安装及配置

- 7.2 kibana安装及配置

- 7.2.1 kibana安装sentinl插件

- 7.2.2 kibana sentinl WebHook关键字报警配置示例

- 7.2.3 kibana sentinl Mail 发送邮件示例

- 7.2.4 kibana查询

- 7.3 logstash安装及配置

- 7.3.1 其他配置示例

- 7.3.2 匹配示例

- 八、Ansible

- 8.1 ansible-playbook批量部署Nginx示例

- 九、开机启动脚本示例

- 十、Linux 定时任务

- 十一、书栈网BookStack

- 十二、nginx

- 1、安装

- 2、相关模块

- 3、给nginx添加新的模块

- 十三、redis

- 1、安装

- 2、常用语法

- 十四、解压、压缩

- 1、解压

- 2、压缩

- 十五、tomcat监控

- 十六、pip离线安装包

- 零零散散的

- 使其centos7虚拟机静态ip

- 添加用户/组

- 权限

- 添加sudo权限

- 统计行数

- 查看文件首尾指定行数的内容

- 文件查找

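I. Docker

Installing Docker

Installing the Docker environment on CentOS

Official docker-ce installation guide: https://docs.docker.com/install/linux/docker-ce/centos/

Method 1

Online installation from a configured yum repository

1. If an older version of Docker is installed, remove it first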

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

2. Install yum-utils and related components

yum install -y yum-utils \

device-mapper-persistent-data \

lvm2

3. Add the yum repository

yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

4. Install docker-ce

yum install docker-ce

Method 2

Installation using the online script

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

sudo usermod -aG docker your-user

# Start Docker and enable it at boot

systemctl start docker

systemctl enable docker

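Method 3

Offline installation from a downloaded Docker package. This is the quickest and least troublesome method, if a somewhat unusual one.

Download location: https://download.docker.com/linux/static/stable/x86_64/

Official documentation: https://docs.docker.com/install/linux/docker-ce/binaries/

From the official documentation: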

Download the static binary archive. Go to https://download.docker.com/linux/static/stable/ (or change stable to edge or test), choose your hardware platform, and download the .tgz file relating to the version of Docker CE you want to install.

tar xzvf /path/to/<FILE>.tgz

sudo cp docker/* /usr/bin/

sudo dockerd &

Uninstalling Docker

sudo yum remove docker-ce

sudo rm -rf /var/lib/docker

1. node_exporter

Running node_exporter in Docker

docker run -d \

--net="host" \

--pid="host" \

--name=node-exporter \

-v "/:/host:ro,rslave" \

quay.io/prometheus/node-exporter \

--path.rootfs /host

2. grafana

Running grafana in Docker

docker run -d -p 3000:3000 --name=grafana --network host \

-e "GF_SERVER_ROOT_URL=http://grafana.server.name" \

-e "GF_SECURITY_ADMIN_PASSWORD=secret" \

grafana/grafana

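2.1 Cascading Grafana variables

2.1.1 Prometheus configuration

In the Prometheus configuration file, set an item label under job_name.static_configs.labels.

Queried series then carry both an item label and an instance label.

Add the Prometheus data source in Grafana.

2.1.2 Define the variable item from the values of the item label

1. Open the variable settings

2. Name the variable item

3. Set Type to Query

4. Select the Prometheus data source added above

5. Use up as the query (adjust to your setup)

6. Filter the result with the regex: /.*item="([^"]*).*/

7. Check the resulting values

2.1.3 Define the variable server from the instances under the selected item

Same as the previous step; the key point is that the Query must reference the variable item defined there.

Query: up{item="[[item]]"} # [[ ]] references a variable

Regex: /.*instance="([^"]*).*/

2.1.4 Result

Selecting redis for item makes server list every instance with item="redis"; the same holds when selecting log.

tips:

Variable examples

Data: a{service="XXX"}

/.*service="([^"]*).*/ yields XXX

Data: a{instance="101.22.34.22:8080"}

/.*instance="([^"]*):.*/ yields 101.22.34.22

/.*instance="([^"]*).*/ yields 101.22.34.22:8080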
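The regex tips above can be checked outside Grafana. The following is a minimal sketch in Python; Grafana itself evaluates these patterns as JavaScript regular expressions, and the assumption here is only that these particular patterns behave the same in Python's re module. The helper name extract is made up for this illustration.

```python
import re

# Sample series strings, taken from the tips above.
service_series = 'a{service="XXX"}'
instance_series = 'a{instance="101.22.34.22:8080"}'

# The same patterns as in the Grafana Regex field, without the surrounding slashes.
service_value = re.compile(r'.*service="([^"]*).*')
host_only = re.compile(r'.*instance="([^"]*):.*')
host_and_port = re.compile(r'.*instance="([^"]*).*')


def extract(pattern, text):
    """Return the first capture group, or None when the pattern does not match."""
    match = pattern.match(text)
    return match.group(1) if match else None


print(extract(service_value, service_series))
print(extract(host_only, instance_series))
print(extract(host_and_port, instance_series))
```

The only difference between the last two patterns is the colon after the capture group, which forces the group to stop before the port.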

3. blackbox_exporter

Running blackbox_exporter in Docker

docker pull prom/blackbox-exporter

docker run -it -p 9115:9115 -v /root/blackbox.yml:/etc/blackbox_exporter/config.yml prom/blackbox-exporter

# /root/blackbox.yml is your own configuration file

4. cadvisor

docker run \

--volume=/:/rootfs:ro \

--volume=/var/run:/var/run:ro \

--volume=/sys:/sys:ro \

--volume=/var/lib/docker/:/var/lib/docker:ro \

--volume=/dev/disk/:/dev/disk:ro \

--publish=8090:8080 \

--detach=true \

--name=cadvisor \

google/cadvisor

5. alertmanager

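- Running in Docker

# Pull the image

docker pull prom/alertmanager

# Run the image; a.yml is the Alertmanager configuration file in the current directory

docker run -it -p 9093:9093 -v `pwd`/a.yml:/etc/alertmanager/alertmanager.yml --name alertmanager prom/alertmanager:latest

- Running directly on Linux

# Download the package from the Prometheus website

wget https://github.com/prometheus/alertmanager/releases/download/v0.15.2/alertmanager-0.15.2.linux-amd64.tar.gz

# Unpack it and change into the extracted directory

./alertmanager --config.file=<config-file.yml>

- Example configuration that calls a URL when an alert fires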

global:

resolve_timeout: 5m

route:

group_by: ['alertname']

group_wait: 10s

group_interval: 10s

repeat_interval: 1h

receiver: 'web.hook'

receivers:

- name: 'web.hook'

webhook_configs:

- url: 'http://192.168.255.1:8080/alert/' # URL called when an alert fires

inhibit_rules:

- source_match:

severity: 'critical'

target_match:

severity: 'warning'

equal: ['alertname', 'dev', 'instance']
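The webhook configuration above needs something listening at the configured URL. The following is a minimal sketch of such a receiver using only the Python standard library. It assumes the JSON body Alertmanager posts to a webhook contains an alerts list whose entries have status and labels fields; the names summarize, AlertHandler and serve are made up for this illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def summarize(payload):
    """Return one line per alert in the form '<status> <alertname> <instance>'."""
    lines = []
    for alert in payload.get("alerts", []):
        labels = alert.get("labels", {})
        lines.append("{} {} {}".format(
            alert.get("status", "unknown"),
            labels.get("alertname", "?"),
            labels.get("instance", "?"),
        ))
    return lines


class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON body sent by Alertmanager and print a short summary.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        for line in summarize(payload):
            print(line)
        self.send_response(200)
        self.end_headers()


def serve(port=8080):
    """Listen on the port used in webhook_configs above (blocks until interrupted)."""
    HTTPServer(("0.0.0.0", port), AlertHandler).serve_forever()
```

Calling serve() on the host named in the url setting starts the receiver; a production receiver would also want authentication and error handling, which this sketch leaves out.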

Example configuration for sending email

global:

resolve_timeout: 5m

smtp_smarthost: 'smtp.163.com:25'

smtp_from: '[email protected]'

smtp_auth_username: '[email protected]'

smtp_auth_password: '12345678'

smtp_require_tls: false

#templates:

#- 'templates/*.tmpl'

route:

receiver: 'default-receiver'

group_by: ['alertname']

group_wait: 30s

group_interval: 5m

repeat_interval: 3h

receivers:

- name: 'default-receiver'

email_configs:

- to: '[email protected], [email protected]'

# html: '{{ template "alert.html" . }}'

headers: { Subject: "[WARN] 邮件test" }

6. prometheus

6.1 Running the Prometheus server in Docker

Example prometheus.yml configuration file for the Prometheus server

global:

scrape_interval: 15s

external_labels:

monitor: 'codelab-monitor'

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets: ['192.168.255.129:9090']

labels:

instance: 'prometheus'

- job_name: 'linux1'

static_configs:

- targets: ['192.168.255.128:9100']

labels:

instance: 'sys1'

- job_name: 'linux2'

static_configs:

- targets: ['192.168.255.129:9100']

labels:

instance: 'sys2'

alerting:

alertmanagers:

- static_configs:

- targets: ["192.168.255.129:9093"]

# When running in Docker, the alert rules file must be at this path: /etc/prometheus/rules.yml

rule_files:

- /etc/prometheus/rules.yml

Example rules.yml alert rules file for the Prometheus server

groups:

- name: test-rule

rules:

- alert: ServerDown

expr: up == 0

for: 2m

labels:

team: node

annotations:

summary: "{{$labels.instance}}: Down"

description: "{{$labels.instance}}: Down (current value is: {{ $value }})"

- alert: UrlConnectable

expr: probe_success == 0

for: 2m

labels:

team: blackbox

annotations:

summary: "{{$labels.instance}}: Lost connection "

description: "{{$labels.instance}}: Lost connection (current value is: {{ $value }})"

Check for syntax errors: ./promtool check config prometheus.yml

Running the Prometheus server

# prometheus.yml and rules.yml both live in the same directory, /root/prometheus/

docker run -it -p 9090:9090 -v /root/prometheus:/etc/prometheus --name prometheus --network host prom/prometheus

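6.2 Hot-reloading the Prometheus configuration

For Prometheus to support reloading its configuration at runtime, start it with the --web.enable-lifecycle flag.

6.2.1 Running without Docker

./prometheus --config.file=prometheus.yml --web.enable-lifecycle

After editing prometheus.yml, run curl -X POST http://IP:9090/-/reload to reload the configuration.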
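The reload call can also be issued from a script, for example after a deployment step rewrites prometheus.yml. The following is a minimal sketch using only the Python standard library; the function names are made up for this illustration, and it assumes Prometheus was started with --web.enable-lifecycle as described above.

```python
import urllib.request


def build_reload_request(host, port=9090):
    """Build the POST request for the /-/reload endpoint without sending it."""
    url = "http://{}:{}/-/reload".format(host, port)
    return urllib.request.Request(url, data=b"", method="POST")


def reload_prometheus(host, port=9090, timeout=5):
    """Send the reload request and return the HTTP status code."""
    request = build_reload_request(host, port)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

reload_prometheus("192.168.255.129") would send the request to the server used in the examples; urlopen raises an exception on HTTP error statuses, so a failed reload does not pass silently.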

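6.2.2 Running with Docker

The default Prometheus Docker image does not enable hot reloading, so the image has to be rebuilt as follows:

Download a release package from https://github.com/prometheus/prometheus/releases, unpack it, and change into the extracted directory. Create a Dockerfile there (touch Dockerfile) with the content below. It is based on https://github.com/prometheus/prometheus/blob/master/Dockerfile, with --web.enable-lifecycle added and the COPY line changed to COPY prometheus.yml /etc/prometheus/prometheus.yml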

FROM quay.io/prometheus/busybox:latest

LABEL maintainer="The Prometheus Authors "

COPY prometheus /bin/prometheus

COPY promtool /bin/promtool

COPY prometheus.yml /etc/prometheus/prometheus.yml

COPY console_libraries/ /usr/share/prometheus/console_libraries/

COPY consoles/ /usr/share/prometheus/consoles/

RUN ln -s /usr/share/prometheus/console_libraries /usr/share/prometheus/consoles/ /etc/prometheus/

RUN mkdir -p /prometheus && \

chown -R nobody:nogroup etc/prometheus /prometheus

USER nobody

EXPOSE 9090

VOLUME [ "/prometheus" ]

WORKDIR /prometheus

ENTRYPOINT [ "/bin/prometheus" ]

CMD [ "--config.file=/etc/prometheus/prometheus.yml", \

"--storage.tsdb.path=/prometheus", \

"--web.console.libraries=/usr/share/prometheus/console_libraries", \

"--web.enable-lifecycle", \

"--web.console.templates=/usr/share/prometheus/consoles" ]

Build the image

docker build -t build_repo/myprometheuus .

List the images; build_repo/myprometheuus should now be present

[root@localhost prometheus-2.4.0.linux-amd64]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

build_repo/myprometheuus latest e6323bff4401 About an hour ago 110MB

Run the image

docker run -it -d -p 9090:9090 -v `pwd`/prometheus.yml:/etc/prometheus/prometheus.yml --name prometheus --network host build_repo/myprometheuus

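At this point the image supports curl -X POST http://IP:9090/-/reload, but changes made directly to `pwd`/prometheus.yml still do not take effect; the cause is still being investigated.

To make the configuration file take effect: docker restart <container id> ?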

6.3 Prometheus Pushgateway

Installing with Docker:

docker pull prom/pushgateway

docker run -d -p 9091:9091 prom/pushgateway

Pushing data to Prometheus:

echo "some_metric 3.14" | curl --data-binary @- http://pushgateway.example.org:9091/metrics/job/some_job

The metric some_metric with the value 3.14 then shows up in Prometheus

some_metric{exported_job="some_job",farm="server",instance="192.168.255.129:9091",job="node_exporter",project="projects",service="server",test="test"} 3.14

Pushing several metrics at once

cat <

Deleting:

curl -X DELETE http://pushgateway.example.org:9091/metrics/job/some_job/instance/some_instance

curl -X DELETE http://pushgateway.example.org:9091/metrics/job/some_job

Further reading: https://github.com/prometheus/pushgateway
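Pushing several metrics in one request amounts to sending several lines of the text exposition format in a single body. The following is a minimal sketch with the Python standard library; it covers only plain "name value" lines (no labels, TYPE or HELP comments), and the function names are made up for this illustration.

```python
import urllib.request


def render_metrics(metrics):
    """Render {name: value} as one 'name value' line each, ending with a newline."""
    return "".join("{} {}\n".format(name, value) for name, value in metrics.items())


def build_push_request(base_url, job, metrics):
    """Build the POST request for /metrics/job/<job> without sending it."""
    url = "{}/metrics/job/{}".format(base_url.rstrip("/"), job)
    body = render_metrics(metrics).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST")


def push(base_url, job, metrics, timeout=5):
    """Send the metrics to the Pushgateway and return the HTTP status code."""
    request = build_push_request(base_url, job, metrics)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

push("http://pushgateway.example.org:9091", "some_job", {"some_metric": 3.14, "another_metric": 42}) would send both values in one request, mirroring the single-metric curl call above.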

6.4 Querying the API

Examples:

Instant queries, /api/v1/query, e.g. http://192.168.255.128:9090/api/v1/query?query=node_disk_io_now

e.g. http://localhost:9090/api/v1/query?query=up&time=2015-07-01T20:10:51.781Z

Range queries, /api/v1/query_range

e.g. http://192.168.255.128:9090/api/v1/query_range?query=http_requests_total&start=1542878423.447&end=1542878433.447&step=10s

e.g. http://192.168.255.128:9090/api/v1/query_range?query=node_load15&start=2019-03-20T02:10:30.781Z&end=2019-03-20T02:11:00.781Z&step=15s

Note: mind the time zone difference

Metadata

Finding series by label matchers, /api/v1/series

e.g. http://192.168.255.128:9090/api/v1/series?match[]=up{job=\"kubernetes-cadvisor\"}&match[]=process_start_time_seconds{kubernetes_name=\"kube-state-metrics\"}

e.g. http://192.168.255.128:9090/api/v1/series?match[]=up&match[]=process_start_time_seconds{job="prometheus"}

Querying label values, /api/v1/label/<label_name>/values

e.g. http://192.168.255.128:9090/api/v1/label/<label_name>/values

e.g. http://192.168.255.128:9090/api/v1/label/job/values

e.g. http://192.168.255.128:9090/api/v1/labels

Targets

eg: http://192.168.255.128:9090/api/v1/targets

Rules

eg: http://192.168.255.128:9090/api/v1/rules

Alerts

eg: http://192.168.255.128:9090/api/v1/alerts

Alertmanagers

eg: http://192.168.255.128:9090/api/v1/alertmanagers

Config

eg: http://192.168.255.128:9090/api/v1/status/config

Flags

eg: http://192.168.255.128:9090/api/v1/status/flags
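Several of the example URLs above contain characters such as braces, quotes, brackets and colons that should be percent-encoded when a program builds the request. The following is a minimal sketch using urllib.parse from the Python standard library; the function names are made up for this illustration.

```python
from urllib.parse import urlencode


def query_range_url(base_url, query, start, end, step):
    """Build a percent-encoded /api/v1/query_range URL."""
    params = urlencode({"query": query, "start": start, "end": end, "step": step})
    return "{}/api/v1/query_range?{}".format(base_url.rstrip("/"), params)


def series_url(base_url, matchers):
    """Build a /api/v1/series URL with one match[] parameter per matcher."""
    params = urlencode([("match[]", matcher) for matcher in matchers])
    return "{}/api/v1/series?{}".format(base_url.rstrip("/"), params)


print(query_range_url("http://192.168.255.128:9090", "node_load15",
                      "2019-03-20T02:10:30.781Z", "2019-03-20T02:11:00.781Z", "15s"))
print(series_url("http://192.168.255.128:9090",
                 ["up", 'process_start_time_seconds{job="prometheus"}']))
```

Passing a list of pairs to urlencode is what allows the repeated match[] parameter; a dict would keep only one entry per key.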

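7. Changing where docker-ce stores images

vim /usr/lib/systemd/system/docker.service

Append --graph /data/docker to the ExecStart= line, where /data/docker is the target directory (newer Docker releases name this flag --data-root)

ExecStart=/usr/bin/dockerd -H unix:// --graph /data/docker

Reload the systemd configuration

systemctl daemon-reload

Restart Docker

systemctl restart docker.service

Note: if needed, move the contents of the old directory /var/lib/docker to the new directory /data/docker; otherwise the existing images and containers will no longer be managed.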

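8. Letting a regular user run docker

Create the docker group; skip this if the group already exists

groupadd docker

Add the regular user to the docker group

gpasswd -a ${USER} docker

Restart Docker

service docker restart

If a docker command run by the regular user fails with a permission error such as get …… dial unix /var/run/docker.sock, change the permissions of /var/run/docker.sock by running the following command as root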

chmod a+rw /var/run/docker.sock

9. Removing an old Docker installation

yum remove docker \

docker-client \

docker-client-latest \

docker-common \

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-selinux \

docker-engine-selinux \

docker-engine

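10. Getting started with Docker Compose

This example uses Docker Compose to build a simple Python web service. The service uses the Flask framework and Redis to keep a counter that increases by 1 each time the page is visited.

Make sure Docker and Docker Compose are installed.

step1: Preparation

Create a directory for the project

$ mkdir composetest

$ cd composetest

In the composetest directory, create a file named app.py with the following content: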

import time

import redis
from flask import Flask

app = Flask(__name__)
cache = redis.Redis(host='redis', port=6379)


def get_hit_count():
    retries = 5
    while True:
        try:
            return cache.incr('hits')
        except redis.exceptions.ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            time.sleep(0.5)


@app.route('/')
def hello():
    count = get_hit_count()
    return 'Hello World Docker! I have been seen {} times.\n'.format(count)


if __name__ == "__main__":
    app.run(host="0.0.0.0", debug=True)

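In this application Redis uses its default port, 6379.

Create a file named requirements.txt with the following content:

flask

redis

step2: Create a Dockerfile

In this step the Dockerfile builds a Docker image that contains all the dependencies of the Python web application.

The file content is as follows: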

FROM python:3.4-alpine

ADD . /code

WORKDIR /code

RUN pip install -r requirements.txt

CMD ["python", "app.py"]

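step3: Define the services in a Compose file

Create a file named docker-compose.yml with the following content:

version: '3'
services:
  web:
    build: .
    ports:
      - "5000:5000"
  redis:
    image: "redis:alpine"

This file defines two services, web and redis; the web service maps port 5000.

step4: Build and run the application with Docker Compose

Run docker-compose up to build and start the application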

[root@localhost composetest]# docker-compose up

Starting composetest_web_1 ... done

Starting composetest_redis_1 ... done

Attaching to composetest_redis_1, composetest_web_1

redis_1 | 1:C 17 Sep 07:39:07.893 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

redis_1 | 1:C 17 Sep 07:39:07.893 # Redis version=4.0.11, bits=64, commit=00000000, modified=0, pid=1, just started

redis_1 | 1:C 17 Sep 07:39:07.894 # Warning: no config file specified, using the default config. In order to specify a config file use redis -server /path/to/redis.conf

redis_1 | 1:M 17 Sep 07:39:07.895 * Running mode=standalone, port=6379.

redis_1 | 1:M 17 Sep 07:39:07.895 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set t o the lower value of 128.

redis_1 | 1:M 17 Sep 07:39:07.895 # Server initialized

redis_1 | 1:M 17 Sep 07:39:07.896 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.

redis_1 | 1:M 17 Sep 07:39:07.896 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency a nd memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

redis_1 | 1:M 17 Sep 07:39:07.896 * DB loaded from disk: 0.000 seconds

redis_1 | 1:M 17 Sep 07:39:07.896 * Ready to accept connections

web_1 | * Serving Flask app "app" (lazy loading)

web_1 | * Environment: production

web_1 | WARNING: Do not use the development server in a production environment.

web_1 | Use a production WSGI server instead.

web_1 | * Debug mode: on

web_1 | * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

web_1 | * Restarting with stat

web_1 | * Debugger is active!

web_1 | * Debugger PIN: 238-684-257

web_1 | 192.168.255.1 - - [17/Sep/2018 07:39:20] "GET / HTTP/1.1" 200 -

web_1 | 192.168.255.1 - - [17/Sep/2018 07:39:20] "GET / HTTP/1.1" 200 -

web_1 | 192.168.255.1 - - [17/Sep/2018 07:39:20] "GET / HTTP/1.1" 200 -

web_1 | 192.168.255.1 - - [17/Sep/2018 07:39:21] "GET / HTTP/1.1" 200 -

web_1 | 192.168.255.1 - - [17/Sep/2018 07:39:21] "GET / HTTP/1.1" 200 -

Open http://0.0.0.0:5000/ in a browser and you should see:

Hello World Docker! I have been seen 1 times.

To run in the background, use docker-compose up -d

To stop the application, use docker-compose stop

11. nginx

docker run --name nginx -d -p 8000:8000 \

-v /data/projects/docker-nginx/conf/nginx.conf:/etc/nginx/nginx.conf \

-v /data/projects/docker-nginx/html/:/etc/nginx/html/ \

--net=host \

-v /data/projects/docker-nginx/logs:/var/log/nginx -d docker.io/nginx

II. yum download only

Example: downloading docker-ce

Installing Docker on CentOS 7.5

# Step 1: install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Step 2: add the repository

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Step 3: refresh the cache and install Docker-CE

sudo yum makecache fast

sudo yum -y install docker-ce

# Step 4: start the Docker service

sudo service docker start

Downloading every file needed to install Docker

# /tmp is the directory the packages are saved to

yum install --downloadonly --downloaddir=/tmp docker-ce

Example:

1. Download all required packages with yum

yum install --downloadonly --downloaddir=<target directory, e.g. /home/java> <package to download, e.g. java>

2. Install all the packages

rpm -ivh *.rpm

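III. keepalived master/backup example

A keepalived setup has one master server and one or more backup servers. The same service configuration is deployed on the master and the backups, and they share a single virtual IP for serving clients. When the master fails, the virtual IP automatically moves to a backup server.

3.1 Environment

Four CentOS 7 servers:

- 192.168.255.128 master server

- 192.168.255.129 backup server

- 192.168.255.130 web application server 1

- 192.168.255.131 web application server 2

3.2 Installing keepalived

Install keepalived on both the master and the backup server

yum -y install keepalived

Configuration file: /etc/keepalived/keepalived.conf

Service binary: /usr/sbin/keepalived

3.3 Installing the web application

Install httpd on both web servers

yum -y install httpd

After the installation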

Web1:

cd /var/www/html/

echo "WEB1" > index.html

Web2:

cd /var/www/html/

echo "WEB2" > index.html

Start the web service on both servers

systemctl start httpd

Verify that it works

[root@localhost html]# curl 192.168.255.130

WEB1

[root@localhost html]# curl 192.168.255.131

WEB2

3.4 Configuring keepalived

keepalived.conf on the master server

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

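smtp_server 127.0.0.1 # mail server address

smtp_connect_timeout 30

router_id keepalived_master # host name of the master director

vrrp_mcast_group4 224.26.1.1 # multicast address for heartbeat messages

}

vrrp_instance VI_1 {

state MASTER # initial role of the master director

interface ens33 # network interface the virtual IP is bound to

virtual_router_id 66 # virtual router ID

priority 100 # election priority of the master director

advert_int 1

authentication {

auth_type PASS # authentication type for cluster hosts

auth_pass 123456 # password, at most 8 characters

}

virtual_ipaddress {

192.168.255.100 # virtual IP

}

}

virtual_server 192.168.255.100 80 { # LVS section, VIP

delay_loop 6

lb_algo rr # scheduling algorithm: round robin

lb_kind DR # forwarding mode: DR

nat_mask 255.255.255.0

# persistence_timeout 50 # persistent connections; comment this out while testing, otherwise all requests within the timeout go to the same real server

protocol TCP

sorry_server 127.0.0.1 80 # address and port of the sorry server, which serves requests as a fallback when all back-end servers are down

real_server 192.168.255.130 80 { # real server address and port

weight 1 # weight of the real server

HTTP_GET { # health check method

url {

path /

status_code 200 # status code considered healthy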

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.255.131 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

}

keepalived.conf on the backup server

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

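router_id keepalived_backup # host name of the backup director

vrrp_mcast_group4 224.26.1.1 # this multicast address must match the other hosts in the cluster

}

vrrp_instance VI_1 {

state BACKUP # initial role; a backup server must be set to BACKUP

interface ens33

virtual_router_id 66 # the virtual router ID must match the other hosts in the cluster

priority 90 # election priority, lower than that of the master director

advert_int 1

authentication {

auth_type PASS

auth_pass 123456 # the password must match the master server

}

virtual_ipaddress {

192.168.255.100

}

}

# The rest of the configuration is the same as on the master server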

virtual_server 192.168.255.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

# persistence_timeout 50

protocol TCP

sorry_server 127.0.0.1 80

real_server 192.168.255.130 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

real_server 192.168.255.131 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 1

nb_get_retry 3

delay_before_retry 1

}

}

}

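Assign the same virtual IP on both web servers

ifconfig ens33:1 192.168.255.100 netmask 255.255.255.255 broadcast 192.168.255.100 up

route add -host 192.168.255.100 dev ens33:1

Start keepalived on the master and the backup server

systemctl start keepalived # the configuration file is in the same directory on each server

Test result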

[root@data-node1 keepalived]# curl 192.168.255.100

WEB1

[root@data-node1 keepalived]# curl 192.168.255.100

WEB2

[root@data-node1 keepalived]# curl 192.168.255.100

WEB1

[root@data-node1 keepalived]# curl 192.168.255.100

WEB2

[root@data-node1 keepalived]# curl 192.168.255.100

WEB1

[root@data-node1 keepalived]# curl 192.168.255.100

WEB2

[root@data-node1 keepalived]# curl 192.168.255.100

WEB1

[root@data-node1 keepalived]# curl 192.168.255.100

WEB2

IV. Supervisor

4.1 Installing supervisor as root with network access

$ wget https://bootstrap.pypa.io/get-pip.py

$ python get-pip.py

$ pip -V # show the pip version

easy_install supervisor

4.2 Offline installation as root

Unpack supervisor-3.3.1.tar.gz and install it

tar zxvf supervisor-3.3.1.tar.gz && cd supervisor-3.3.1

python setup.py install

[Possible error]: ImportError: No module named setuptools

[Fix]: # tar zxvf setuptools-0.6c11.tar.gz && cd setuptools-0.6c11

python setup.py build

python setup.py install

4.3 Creating the configuration file

With root privileges: echo_supervisord_conf > /etc/supervisord.conf

Without root privileges: echo_supervisord_conf > supervisord.conf, then pass the file with -c when starting

4.4 Management commands

Common commands

supervisord -c /etc/supervisord.conf start supervisor with the given configuration file

supervisorctl -c /etc/supervisord.conf status show status

supervisorctl -c /etc/supervisord.conf reload reload the configuration file (restarts supervisord)

supervisorctl -c /etc/supervisord.conf start [all]|[x] start all programs or the given one

supervisorctl -c /etc/supervisord.conf stop [all]|[x] stop all programs or the given one

supervisorctl -c /etc/supervisord.conf shutdown

// The commands below only work when run from the directory that contains the configuration file

supervisorctl status show status

supervisorctl reload reload the configuration file (restarts supervisord)

supervisorctl start [all]|[x] start all programs or the given one

supervisorctl stop [all]|[x] stop all programs or the given one

supervisorctl shutdown stop supervisor

// Do regular users need to supply a user name and password?

supervisorctl -u lgz -p rootlgz shutdown

// remove takes an already stopped process (group) out of process management; add can bring it back.

supervisorctl remove XXX

// add puts a removed process (group) back under process management

supervisorctl add XXX

Command reference from the official site

supervisorctl Actions

help

Print a list of available actions

help <action>

Print help for <action>

add <name> [...]

Activates any updates in config for process/group

remove <name> [...]

Removes process/group from active config

update

Reload config and then add and remove as necessary (restarts programs)

clear <name>

Clear a process’ log files.

clear <name> <name>

Clear multiple process’ log files

clear all

Clear all process’ log files

fg <process>

Connect to a process in foreground mode Press Ctrl+C to exit foreground

pid

Get the PID of supervisord.

pid <name>

Get the PID of a single child process by name.

pid all

Get the PID of every child process, one per line.

reload

Restarts the remote supervisord

reread

Reload the daemon’s configuration files, without add/remove (no restarts)

restart <name>

Restart a process Note: restart does not reread config files. For that, see reread and update.

restart <gname>:*

Restart all processes in a group Note: restart does not reread config files. For that, see reread and update.

restart <name> <name>

Restart multiple processes or groups Note: restart does not reread config files. For that, see reread and update.

restart all

Restart all processes Note: restart does not reread config files. For that, see reread and update.

signal

No help on signal

start <name>

Start a process

start <gname>:*

Start all processes in a group

start <name> <name>

Start multiple processes or groups

start all

Start all processes

status

Get all process status info.

status <name>

Get status on a single process by name.

status <name> <name>

Get status on multiple named processes.

stop <name>

Stop a process

stop <gname>:*

Stop all processes in a group

stop <name> <name>

Stop multiple processes or groups

stop all

Stop all processes

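4.5 Installing and running supervisor in a virtualenv Python

Install virtualenv as root

Install: pip install virtualenv

As a non-root user:

Create a new virtual environment: virtualenv ENV

With a specific Python: virtualenv -p /data/projects/common/python3/bin/python3 --no-site-packages $varVname

Enter the virtual environment: source ./ENV/bin/activate

Leave the current virtual environment: deactivate

After entering the virtual environment, install supervisor

Run the script: ./install-supervisor.sh // the script's directory must also contain supervisor-3.3.4.tar.gz, setuptools-0.6c11.tar.gz and meld3-1.0.2.tar.gz; run it from your own home directory, otherwise permissions will be insufficient

Start supervisor: supervisord -c <path>/supervisord.conf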

supervisord.conf:

[unix_http_server]

file=/tmp/supervisor.sock

chown=lgz:lgz ; owner of the socket file

[inet_http_server]

port=0.0.0.0:9001

username=lgz001 ; user name for accessing 0.0.0.0:9001, unset by default

password=lgz123456 ; password for accessing 0.0.0.0:9001, unset by default

[supervisord]

logfile=/home/lgz/logs/supervisor/supervisord.log ; main log file

logfile_maxbytes=50MB ; maximum size of the main log file, default 50MB

logfile_backups=10 ; number of main log file backups, default 10

loglevel=info ; log level; default info; others: debug,warn,trace

pidfile=/home/lgz/logs/supervisor/supervisord.pid ; pid file

[rpcinterface:supervisor]

supervisor.rpcinterface_factory = supervisor.rpcinterface:make_main_rpcinterface

[supervisorctl]

serverurl=unix:///tmp/supervisor.sock

[include]

files=/home/lgz/supervisor/programs/*.conf

nginx.conf, which must be placed in the directory named in the configuration above, /home/lgz/supervisor/programs/

[program:nginx]

autorestart=True

autostart=True

redirect_stderr=True

command=/home/lgz/nginx/sbin/nginx ; command that starts nginx

user=lgz ; user that starts Nginx

stdout_logfile_maxbytes=20MB

stdout_logfile_backups=20

stdout_logfile=/home/lgz/logs/supervisor/nginx.log ; log file for the managed Nginx

stderr_logfile=/home/lgz/logs/supervisor/nginx_error.log ; error log file for the managed Nginx

Note: when supervisor monitors nginx, nginx must not run as a daemon. Put daemon off in the nginx configuration file, above the events block. A non-root user must also change the Nginx listening port to one above 1024.

Edit Nginx's nginx.conf file

daemon off; # turn off daemon mode

events {

worker_connections 1024;

}

http {

server {

listen 8088; # changed port

}

}

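ps: a regular user cannot install supervisor system-wide, so use virtualenv to create a separate Python environment.

Install: pip install virtualenv

Create an isolated Python environment named venv with virtualenv --no-site-packages venv, enter it with source venv/bin/activate, and leave it with deactivate

4.6 Example: managing Nginx with supervisor

This example configures supervisor, run by a non-root user, to monitor the Nginx process

Environment:

CentOS 7.5 with Python, virtualenv and supervisor already installed, and Nginx installed as a non-root user.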

supervisord.conf:

[unix_http_server]

file=/tmp/supervisor.sock

chown=lgz:lgz ; owner of the socket file

[inet_http_server]

port=0.0.0.0:9001

username=lgz001 ; user name for accessing 0.0.0.0:9001, unset by default

password=lgz123456 ; password for accessing 0.0.0.0:9001, unset by default

[supervisord]

logfile=/home/lgz/logs/supervisor/supervisord.log ; main log file

logfile_maxbytes=50MB ; maximum size of the main log file, default 50MB

logfile_backups=10 ; number of main log file backups, default 10

loglevel=info ; log level; default info; others: debug,warn,trace

pidfile=/home/lgz/logs/supervisor/supervisord.pid ; pid file

[rpcinterface:supervisor]

supervisor.rpcinterface_factory = supervisor.rpcinterface:make_main_rpcinterface

[supervisorctl]

serverurl=unix:///tmp/supervisor.sock

[include]

files=/home/lgz/supervisor/programs/*.conf

nginx.conf, which must be placed in the directory named in the configuration above, /home/lgz/supervisor/programs/

[program:nginx]

autorestart=True

autostart=True

redirect_stderr=True

command=/home/lgz/nginx/sbin/nginx ; command that starts nginx

user=lgz ; user that starts Nginx

stdout_logfile_maxbytes=20MB

stdout_logfile_backups=20

stdout_logfile=/home/lgz/logs/supervisor/nginx.log ; log file for the managed Nginx

stderr_logfile=/home/lgz/logs/supervisor/nginx_error.log ; error log file for the managed Nginx

Note: when supervisor monitors nginx, nginx must not run as a daemon. Put daemon off in the nginx configuration file, above the events block. A non-root user must also change the Nginx listening port to one above 1024.

Edit Nginx's nginx.conf file

daemon off; # turn off daemon mode

events {

worker_connections 1024;

}

http {

server {

listen 8088; # changed port

}

}

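supervisor management commands

supervisord -c <path>/supervisord.conf start supervisor with the configuration file

supervisorctl status show status

supervisorctl reload reload the configuration file (restarts supervisord)

supervisorctl start nginx start the given program (nginx); use 'all' to start everything

supervisorctl stop nginx stop the given program (nginx); use 'all' to stop everything

supervisorctl shutdown

Further reading: http://www.supervisord.org/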

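V. Allowing a non-root user to start services on ports below 1024

Nginx serves as the example: it listens on port 80 by default, which a regular user lacks the privileges to bind. The steps below let the user test start Nginx.

Method 1: every user can run it (file owner root, group root)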

chown root:root nginx

chmod 755 nginx

chmod u+s nginx

Method 2: only root and the test user can run it (file owner: root, group owner: test)

chown root:test nginx

chmod 750 nginx

chmod u+s nginx

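VI. Passwordless SSH login on Linux

Prepare two machines, A and B

Example: logging in to B from A without a password

6.1 Between root users

Run ssh-keygen -t rsa on both A and B, pressing Enter at every prompt, to generate a public and a private key on each.

Location of the generated keys: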

[root@master-node ~]# cd /root/.ssh/

[root@master-node .ssh]# ls

id_rsa id_rsa.pub known_hosts

id_rsa: private key file

id_rsa.pub: public key file

On B, create the file authorized_keys in /root/.ssh/ with touch authorized_keys

Append the content of A's public key to B's authorized_keys file

scp id_rsa.pub root@

Log in to B from A without a password:

ssh 192.168.255.129

6.2 Between regular users

While logged in as the regular user: ssh-keygen -t rsa

The key files are under the user's home directory

[lgz@master-node .ssh]$ pwd

/home/lgz/.ssh

[lgz@master-node .ssh]$ ls

id_rsa id_rsa.pub known_hosts

[lgz@master-node .ssh]$ scp id_rsa.pub [email protected]:/home/lgz/id_rsa.pub

cat id_rsa.pub >> .ssh/authorized_keys

tip: passwordless login between regular users requires fixing the permissions

chmod 700 ~/.ssh

chmod 600 ~/.ssh/authorized_keys

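tip: generate the key without prompts: ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa -q

VII. ELK

7.1 Installing and configuring elasticsearch

Switch to a regular user: su <user> # note: es can only be started as a regular user and needs a JDK

Download the package: https://www.elastic.co/cn/downloads/elasticsearch

Unpack the package: tar zxvf <package>

Edit the configuration file: vim config/elasticsearch.yml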

cluster.name: es

node.name: es1

path.data: /data/projects/logs/elasticsearch/data

path.logs: /data/projects/logs/elasticsearch/logs

network.host: 0.0.0.0

http.port: 9200

transport.tcp.port: 9300

node.master: true

node.data: true

discovery.zen.minimum_master_nodes: 1

Start: sh bin/elasticsearch

If it fails with:

ERROR: [2] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Switch to the root user

vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 2048

* hard nproc 4096

vi /etc/sysctl.conf

vm.max_map_count=655360

Run: sysctl -p

Switch back to the regular user and start elasticsearch again
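Before restarting, the two limits named in the bootstrap error can be checked from Python. The following is a minimal sketch for Linux using only the standard library; the thresholds are the ones printed in the error message above, and the function names are made up for this illustration.

```python
def bootstrap_problems(nofile_limit, max_map_count,
                       min_nofile=65536, min_map_count=262144):
    """Return a list of messages for limits below the required minimums."""
    problems = []
    if nofile_limit < min_nofile:
        problems.append("max file descriptors [{}] is too low, need at least [{}]"
                        .format(nofile_limit, min_nofile))
    if max_map_count < min_map_count:
        problems.append("vm.max_map_count [{}] is too low, need at least [{}]"
                        .format(max_map_count, min_map_count))
    return problems


def current_limits():
    """Read the soft open-file limit and vm.max_map_count (Linux only)."""
    import resource  # Unix-only module, imported here so the checker above stays portable
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    with open("/proc/sys/vm/max_map_count") as handle:
        return soft, int(handle.read().strip())
```

Running bootstrap_problems(*current_limits()) as the user that starts elasticsearch returns an empty list once both settings have taken effect. Note that limits.conf changes apply only to new login sessions.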

7.2 Installing and configuring kibana

Download the package: https://www.elastic.co/downloads/kibana

Unpack the package: tar zxvf <package>

Edit the configuration file: vi config/kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.255.137:9200"

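Start: sh bin/kibana

7.2.1 Installing the sentinl plugin for kibana

The sentinl plugin watches for keywords and raises alerts

Download the sentinl package: https://github.com/sirensolutions/sentinl/releases

Note: the plugin version must match the kibana version exactly, otherwise the installation fails

Install: /path/to/kibana-plugin install file:///path/to/sentinl-vX.X.X.zip

Restart kibana after the installation for the plugin to take effect.

7.2.2 kibana sentinl WebHook keyword alert configuration example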

{

"actions": {

"Webhook_72fe42b4-c99e-4f30-a311-a07e8e78e873": {

"name": "Webhook",

"throttle_period": "1m",

"webhook": {

"priority": "high",

"stateless": false,

"method": "POST",

"host": "192.168.255.1",

"port": "8080",

"path": "/alert",

"body": "{\n \"watcher\": \"{{watcher.title}}\",\n \"payload_count\": \"{{payload.hits.total}}\"\n}",

"params": {

"watcher": "{{watcher.title}}",

"payload_count": "{{payload.hits.total}}"

},

"headers": {

"Content-Type": "application/json"

},

"auth": "",

"message": "test"

}

}

},

"input": {

"search": {

"request": {

"index": [

"logstash-*"

],

"body": {

"query": {

"bool": {

"must": [

{

"query_string": {

"analyze_wildcard": true,

"query": "\"Exception\""

}

},

{

"range": {

"@timestamp": {

"format": "epoch_millis",

"gte": "now-60d",

"lte": "now"

}

}

}

],

"must_not": []

}

}

}

}

}

},

"condition": {

"script": {

"script": "payload.hits.total >= 3"

}

},

"trigger": {

"schedule": {

"later": "every 1 minutes"

}

},

"disable": true,

"report": false,

"title": "watcher_test2",

"wizard": {},

"save_payload": false,

"spy": false,

"impersonate": false

}

7.2.3 kibana sentinl Mail: sending email example

Prerequisite: yum install -y mailx

Configure mailx

Append the following to /etc/mail.rc (vim /etc/mail.rc):

set [email protected]

set smtp=smtp.163.com

set smtp-auth-user=lan_guo_zhi

set smtp-auth-password=1234qwerasdf

set smtp-auth=login

Send a test email

echo "this is test" | mailx -v -s "Test" "<recipient address>"

Append the following to kibana.yml:

sentinl:

settings:

email:

active: true

user: [email protected]

password: 1234qwerasdf

host: smtp.163.com

ssl: false # set according to your environment

report:

active: true

Restart kibana for the configuration to take effect

Example:

{

"actions": {

"Email_alarm_aae7a8fe-04a3-4bb0-a0c9-542611e197c7": {

"name": "Email alarm",

"throttle_period": "15m",

"email": {

"priority": "high",

"stateless": false,

"body": "no content",

"to": "[email protected]",

"from": "[email protected]",

"subject": "what subject"

}

}

},

"input": {

"search": {

"request": {

"index": [

"logstash-*"

],

"body": {}

}

}

},

"condition": {

"script": {

"script": "payload.hits.total >= 0"

}

},

"trigger": {

"schedule": {

"later": "every 2 minutes"

}

},

"disable": true,

"report": false,

"title": "watcher_test_mail",

"wizard": {},

"save_payload": false,

"spy": false,

"impersonate": false

}

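7.2.4 Kibana queries

Kibana query syntax

Date range: time : [18-10-16 TO 18-10-16]

time.keyword : *20\:00\:29.37*

time.keyword : "18-10-15 20:00:23.508" // exact time

Keyword query: message:(nginx OR tomcat) // the message field contains nginx or tomcat

Phrase search: "like Gecko" // matches the exact phrase like Gecko

Full-text search: login // searches all fields for login

Field-restricted search: field:value

Exact search: field:"value" // wrap the keyword in double quotes, e.g. message : "2018-10-16"

// message : "2018-10-16 " AND message : "12:17:29.373"

Fuzzy search: quikc~ brwn~ foks~ // appending ~ to a word enables fuzzy matching, which also finds misspelled words

Proximity search: "where select"~5 // select and where may be up to 5 words apart; matches select password from users where id=1

Range search:

Fields of numeric, date, IP, or string type can be queried by range

length:[100 TO 200]

sip:["172.24.20.110" TO "172.24.20.140"]

date:{"now-6h" TO "now"}

tag:{b TO e} // strings between b and e, excluding both ends

count:[10 TO *] // * leaves that end of the range unbounded

count:[1 TO 5} // [ ] includes the endpoint, { } excludes it; they can be mixed, so this matches 1 up to but not including 5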
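The bracket rules above can be illustrated with a small Python sketch. It is not how Elasticsearch evaluates ranges; `in_range` is a hypothetical helper that only handles numeric endpoints and `*`, written to show how `[ ]` and `{ }` differ.

```python
def in_range(value, expr):
    """Check a number against a Lucene-style range such as '[1 TO 5}'."""
    lo_inclusive = expr[0] == "["    # '[' includes the lower endpoint
    hi_inclusive = expr[-1] == "]"   # ']' includes the upper endpoint
    lo_text, hi_text = expr[1:-1].split(" TO ")
    # '*' means that side of the range is unbounded
    lo_ok = lo_text == "*" or (
        value >= float(lo_text) if lo_inclusive else value > float(lo_text)
    )
    hi_ok = hi_text == "*" or (
        value <= float(hi_text) if hi_inclusive else value < float(hi_text)
    )
    return lo_ok and hi_ok
```

For example, `in_range(5, "[1 TO 5}")` is false because `}` excludes the upper endpoint, while `in_range(1, "[1 TO 5}")` is true.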

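Ranges with one unbounded end can be shortened to:

age:>10

age:<=10

age:(>=10 AND <20)

Boosting:

quick^2 fox

Use ^ to give one term a higher priority than another. The default boost is 1; a float between 0 and 1 lowers the priority.

Boolean operators:

AND

OR

+: the result must contain this term

-: the result must not contain this term

+apache -jakarta test aaa bbb: results must contain apache, must not contain jakarta, and should match as many of the remaining terms as possible

Escaping special characters:

+ - = && || > < ! ( ) { } [ ] ^ " ~ * ? : \ /

These characters must be escaped with \ when they are part of the value being searched (< and > cannot be escaped and have to be removed).

\(1\+1\)\=2 searches for (1+1)=2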

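7.3 Logstash installation and configuration

Download the package: https://www.elastic.co/downloads/logstash

Extract the package: tar zxvf <package>

Edit the configuration file: vim config/logstash.conf. Example:

# Input: log files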

input{

file {

path => "/data/projects/logs/testlogs/aisp-srsc/*/*.log"

close_older => "90 day"

start_position => "beginning"

codec => multiline {

# Match Java exception lines and merge them into the previous line

#pattern => '^[a-z]+.|^[[:space:]]+(at|\.{3})\b|^Caused by:|^[[:space:]]+(...)'

#negate => false

#what => previous

# Merge every line that does not start with a timestamp into the previous line

pattern => "^%{TIMESTAMP_ISO8601} "

negate => true

what => previous

}

}

}

# Filter: parse the log lines

filter {

grok {

# Directory holding the custom pattern files

patterns_dir => ["./patterns"]

match => { "message" => [

"%{TIMESTAMP_ISO8601:time} \- \[%{WZ:process}\] \- %{WZ:level} \- %{WZ:method} \- %{FKH:content}"

,"%{TIMESTAMP_ISO8601:time} \[%{WZ:process}\] %{WZ:level} %{WZ:method} \- %{FKH:content}"

]

}

}

date {

match => [ "time", "YYYY-MM-dd HH:mm:ss.SSS", "ISO8601" ]

target => [ "@timestamp" ]

}

}

# Output: send the events to Elasticsearch

output {

# Print events to the console

# stdout{

# codec => rubydebug

#}

elasticsearch {

hosts => ["http://192.168.255.146:9200"]

index => "logstash-%{+YYYY.MM.dd}"

#user => "elastic"

#password => "changeme"

}

}

mkdir patterns

vim patterns/java (the file name is arbitrary; it does not have to be java)

WZ (.*)

FKH ([^;]*)

JAVA_DATE %{YEAR}-%{MONTH}-%{MONTHDAY} %{TIME}

Start: ./bin/logstash -f ./config/logstash.conf --config.reload.automatic
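The multiline codec setting used in the input block (negate => true, what => previous) can be sketched in Python as follows. This is only an illustration of the merging rule, not Logstash code: `merge_multiline` is a hypothetical helper, and the regular expression is a simplified stand-in for `%{TIMESTAMP_ISO8601}`.

```python
import re

# Simplified stand-in for the grok pattern TIMESTAMP_ISO8601 (assumption)
TIMESTAMP = re.compile(r"^\d{4}-\d{2}-\d{2}[ T]\d{2}:\d{2}:\d{2}")


def merge_multiline(lines):
    """Append every line that does not start with a timestamp to the previous event."""
    events = []
    for line in lines:
        if TIMESTAMP.match(line) or not events:
            events.append(line)          # a new event starts here
        else:
            events[-1] += "\n" + line    # continuation, e.g. a stack trace line
    return events


log_lines = [
    "2019-03-29 09:37:44.919 - [main] - ERROR - request failed",
    "java.lang.RuntimeException: boom",
    "    at Foo.bar(Foo.java:1)",
    "2019-03-29 09:37:45.000 - [main] - INFO - recovered",
]
events = merge_multiline(log_lines)
```

With this input the three lines of the exception become one event and the INFO line becomes a second one, so a Java stack trace is indexed as a single document.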

7.3.1 Other configuration examples

Logstash configuration that writes logs to Redis:

input {

file {

path => "/data/projects/logs/testlogs/aisp-srsc/*/*.log"

#path => "/data/projects/logs/testlogs/test/*.log"

close_older => "90 day"

start_position => "beginning"

codec => multiline {

# Match exception lines

#pattern => '^[a-z]+.|^[[:space:]]+(at|\.{3})\b|^Caused by:|^[[:space:]]+(...)'

#negate => false

#what => previous

# Match lines that do not start with a timestamp

pattern => "^%{TIMESTAMP_ISO8601} "

negate => true

what => previous

}

}

}

output {

stdout{

codec => rubydebug

}

redis {

data_type => "list"

host => "192.168.255.146"

db => "1"

port => "6379"

password => "redis123456"

key => "aisp-srsc"

batch => true

batch_events => 10

batch_timeout => 30

# Besides message, the event also carries host name and timestamp fields; to send only the message field, configure the codec as follows

#codec => plain {

# format => "%{message}"

#}

congestion_interval => 0

}

}

Example Logstash configuration that reads logs from Redis:

input{

redis {

data_type => "list"

host => "192.168.255.146"

db => "1"

port => "6379"

key => "aisp-srsc"

password => "redis123456"

threads => 2

timeout => 10

}

}

filter {

grok {

patterns_dir => ["./patterns"]

match => { "message" => [

"%{TIMESTAMP_ISO8601:time} \- \[%{WZ:process}\] \- %{WZ:level} \- %{WZ:method} \- %{FKH:content}"

,"%{TIMESTAMP_ISO8601:time} \[%{WZ:process}\] %{WZ:level} %{WZ:method} \- %{FKH:content}"

]

}

}

date {

match => [ "time", "YYYY-MM-dd HH:mm:ss.SSS", "ISO8601" ]

target => [ "@timestamp" ]

}

}

output {

stdout{

codec => rubydebug

}

elasticsearch {

hosts => ["http://192.168.255.146:9200"]

index => "logstash-%{+YYYY.MM.dd}"

#user => "elastic"

#password => "changeme"

}

}

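Handling the time zone of the index name sent to Elasticsearch (@timestamp is stored in UTC, so the daily index name is built from the local date instead):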

input{

file {

path => "/data/projects/apache-tomcat-8.0.35/logs/*.txt"

}

}

filter {

grok {

patterns_dir => ["./patterns"]

match => { "message" => [

"%{TIMESTAMP_ISO8601:time} %{IP:client} \- \- \[%{HTTPDATE:time_local}\] \"%{WZ:request}\" %{NUMBER:statu} %{NUMBER:duration}"

]

}

}

date {

match => [ "time", "YYYY-MM-dd HH:mm:ss.SSS", "ISO8601" ]

target => [ "@timestamp" ]

}

ruby {

code => "event.set('index_day', event.get('@timestamp').time.localtime.strftime('%Y.%m.%d'))"

}

#ruby {

#code => "event.set('index_day', event.timestamp.time.localtime.strftime('%Y.%m.%d'))"

#code => "event.set('index_day', event.get('@timestamp').time.localtime.strftime('%Y.%m.%d'))"

#}

#ruby {

# code => "event.set('test_time', event.get('@timestamp').time.localtime + 8*60*60)"

#}

#ruby {

# code => "event.set('index_time', event.get('test_time').time.localtime.strftime('%Y.%m.%d'))"

#}

}

output {

stdout{

codec => rubydebug

}

elasticsearch {

hosts => ["http://192.168.255.138:9200"]

#index => "logstash-%{+YYYY.MM.dd}"

index => "logstash-%{index_day}"

}

}
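Why the ruby filter above matters can be shown with a short Python sketch. It assumes a server in UTC+8 (the offset that also appears in the commented-out ruby lines); `index_name` is a hypothetical helper, not part of Logstash.

```python
from datetime import datetime, timedelta, timezone


def index_name(ts_utc, utc_offset_hours):
    """Build a daily index name from a UTC timestamp shifted to a local offset."""
    local = ts_utc.astimezone(timezone(timedelta(hours=utc_offset_hours)))
    return "logstash-" + local.strftime("%Y.%m.%d")


# 17:30 UTC on 28 March is already 01:30 on 29 March in UTC+8
ts = datetime(2019, 3, 28, 17, 30, tzinfo=timezone.utc)
utc_index = index_name(ts, 0)
local_index = index_name(ts, 8)
```

The same event lands in the 28 March index when the name is derived from UTC and in the 29 March index when it is derived from local time, which is the mismatch the `index_day` field avoids.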

7.3.2 Matching examples

Example 1

Tomcat log format:

pattern="%h|%t|"%r"|%s|%b|"%{Referer}i"|"%{User-Agent}i"|"%{X-FORWARDED-FOR}i"|%{Host}i|%v"

nginx log format:

log_format main '$remote_addr|$time_local|"$request"|'

'$status|$body_bytes_sent|"$http_referer"|'

'"$http_user_agent"|"$http_x_forwarded_for"|$http_host|$server_name';

Logstash match:

match => { "message" => [

"%{IP:remote_addr}\|\[%{HTTPDATE:time_local}\]\|\"%{WORD:method} %{WZ:request} HTTP/%{NUMBER:httpversion}\"\|%{NUMBER:status}\|%{WZ:body_bytes_sent}\|%{QS:http_referer}\|%{QS:http_user_agent}\|%{QS:http_x_forwarded_for}\|%{WZ:http_host}\|%{WZ:server_name}","%{IP:remote_addr}\|%{HTTPDATE:time_local}\|\"%{WORD:method} %{WZ:request} HTTP/%{NUMBER:httpversion}\"\|%{NUMBER:status}\|%{WZ:body_bytes_sent}\|%{QS:http_referer}\|%{QS:http_user_agent}\|%{QS:http_x_forwarded_for}\|%{WZ:http_host}\|%{WZ:server_name}"

]

Pattern file contents:

WZ (.*)

Example 2

Log line:

192.168.255.1 - - [06/Mar/2019:15:03:07 +0800] "GET /favicon.ico HTTP/1.1" 200 21630

Match:

"%{IP:client} \- \- \[%{HTTPDATE:time_local}\] \"%{WZ:request}\" %{NUMBER:statu} %{NUMBER:duration}"

55.3.244.1 GET /index.html 15824 0.043

%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}

2019-03-29 09:37:44.919

%{TIMESTAMP_ISO8601:time}

06/Mar/2019:15:03:07 +0800

%{HTTPDATE:time}

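8. Ansible

8.1 Example: batch-deploying Nginx with ansible-playbook

Environment: three machines A, B, and C: 192.168.255.128, 192.168.255.129, 192.168.255.130

Requirement: A can SSH into B and C without a password (for how to set this up, see the section on passwordless SSH login, section 6 of this document).

Install ansible: sudo yum install ansible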

[root@master-node ansible]# ls

ansible.cfg hosts nginx.yaml roles

[root@master-node ansible]# vim hosts

Contents of hosts:

[webservers]

192.168.255.129 ansible_connection=ssh ansible_ssh_user=lgz ansible_ssh_pass='rootlgz' ansible_become_pass='rootlgz'

192.168.255.130 ansible_connection=ssh ansible_ssh_user=lgz ansible_ssh_pass='rootlgz' ansible_become_pass='rootlgz'

tasks

- name: copy package
  copy: src=nginx-1.13.6.tar.gz dest=/data/tmp/nginx-1.13.6.tar.gz
  tags: cppkg

- name: tar nginx
  shell: cd /data/tmp/;tar -zxvf nginx-1.13.6.tar.gz

#- name: yum install
#  yum: name={{ item }} state=latest
#  become: yes
#  become_method: sudo
#  with_items:
#    - openssl-devel
#    - pcre-devel
#    - zlib-devel

- name: yum install
  shell: yum -y install openssl-devel pcre-devel zlib-devel
  become: yes
  become_method: sudo

- name: install nginx
  shell: cd /data/tmp/nginx-1.13.6;./configure --prefix=/data/projects/nginx --user=lgz --group=lgz --with-http_ssl_module;make && make install

- name: copy conf file
  template: src=nginx.conf dest=/data/projects/nginx/conf/nginx.conf

- name: copy supervisor-nginx
  template: src=supervisor-nginx.conf dest=/data/projects/supervisor/programs/supervisor-nginx.conf

- name: start supervisor service
  shell: cd /data/projects/supervisor/; supervisord -c supervisord.conf

#- name: systemctl init
#  template: src=nginx.service dest=/usr/lib/systemd/system/nginx.service

#- name: start nginx service
#  service: name=nginx state=started enabled=true

- name: yum test httpd
  shell: yum -y install httpd
  become: yes
  become_method: sudo

Common commands:

# Check the syntax

ansible-playbook --syntax-check XXXX.yaml

# Dry run (check mode)

ansible-playbook -C XXXX.yaml

# Actual deployment

ansible-playbook XXXX.yaml

# Specify the hosts file

ansible-playbook XXXX.yaml -i <path>/hosts

Reference: https://blog.csdn.net/rwslr6/article/details/78376052

9. Boot-time startup script example

Given a script boot_supervisor.sh:

#!/bin/bash

#chkconfig:345 61 61

#description: start supervisor

su - app -c '/data/projects/common/supervisord/boot.sh'

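Note: the script must contain the two lines #chkconfig:345 61 61 and #description: start supervisor, otherwise it cannot be added as a service.

Put the script in the /etc/rc.d/init.d/ directory.

Register the service: chkconfig --add boot_supervisor.sh

Check the service status: chkconfig --list boot_supervisor.sh

Remove the service from boot: chkconfig --del boot_supervisor.sh

The script then runs at the next reboot.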

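10. Linux scheduled tasks

The crontab command sets up commands that are executed periodically. It reads the commands from standard input and stores them in a "crontab" file, from which they are later read and executed.

cron is the system's main scheduling daemon. It can run jobs during off-peak hours every day, or at particular times of the week or month, without manual intervention.

The crontab command lets a user submit, edit, or delete jobs. Every user can have a crontab file holding their schedule. The system administrator can use the cron.deny and cron.allow files to forbid or allow users to have their own crontab file.

Check whether crontab is installed: rpm -qa | grep crontab

Start: systemctl start crond

[root@master-node ~]# ls /etc/cron

cron.d/ cron.daily/ cron.deny cron.hourly/ cron.monthly/ crontab cron.weekly/

cron.daily holds jobs that run once a day

cron.weekly holds jobs that run once a week

cron.monthly holds jobs that run once a month

cron.hourly holds jobs that run once an hour

cron.d holds jobs that the system runs automatically on a schedule

crontab is the system-wide file that defines scheduled jobs

cron.deny lists the users who are not allowed to use crontab

Every user has their own cron configuration file, which can be edited with crontab -e

crontab file format: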

* * * * * command

minute hour day month week command

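minute: the minute, any integer from 0 to 59.

hour: the hour, any integer from 0 to 23.

day: the day of the month, any integer from 1 to 31.

month: the month, any integer from 1 to 12.

week: the day of the week, any integer from 0 to 7, where both 0 and 7 mean Sunday.

command: the command to run, either a system command or a script you wrote yourself.

Asterisk (*): stands for every possible value. For example, an asterisk in the month field means the command runs every month, provided the other fields match.

Comma (,): separates the values of a list, for example "1,2,5,7,8,9".

Hyphen (-): a hyphen between two integers denotes a range, for example "2-6" means "2,3,4,5,6".

Slash (/): specifies a step. For example, "0-23/2" in the hour field means every two hours. A slash can also be combined with an asterisk: */10 in the minute field means every ten minutes.

Example: run a script every 5 minutes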

*/5 * * * * sh /home/app/work/test/test-cron.sh

Run a script every minute

* * * * * sh /home/app/work/test/test-cron.sh

List the current user's scheduled tasks: crontab -l

Delete all of the current user's scheduled tasks: crontab -r
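How the asterisk, comma, hyphen, and slash in a crontab field combine can be sketched in Python. This is a simplified illustration rather than cron's actual parser; `expand_field` is a hypothetical helper that takes the field text plus the lowest and highest value allowed in that field.

```python
def expand_field(field, low, high):
    """Expand one crontab field (e.g. '*/10', '2-6', '1,2,5') into its values."""
    values = set()
    for part in field.split(","):           # comma: list of items
        step = 1
        if "/" in part:                      # slash: step width
            part, step_text = part.split("/")
            step = int(step_text)
        if part == "*":                      # asterisk: the whole range
            start, end = low, high
        elif "-" in part:                    # hyphen: explicit range
            start_text, end_text = part.split("-")
            start, end = int(start_text), int(end_text)
        else:                                # single value
            start = end = int(part)
        values.update(range(start, end + 1, step))
    return sorted(values)
```

For instance, `expand_field("*/10", 0, 59)` gives the minutes at which a `*/10` entry fires, and `expand_field("0-23/2", 0, 23)` gives the even hours.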

11. BookStack

https://www.bookstackapp.com/

12. nginx

Environment:

# Required

yum -y install pcre-devel zlib-devel openssl openssl-devel

1. Installation

Download page: http://nginx.org/en/download.html

cd /usr/local/nginx-1.12.2

./configure

./configure --prefix=/data/projects/nginx --user=app --group=apps --with-http_ssl_module

make && make install

# Start

/data/projects/nginx/sbin/nginx

2. Related modules

--with-http_ssl_module // HTTPS support

--add-module=/usr/local/nginx-module-vts // nginx monitoring module

--with-http_stub_status_module // module for viewing nginx's running status

Usage

vi nginx.conf

location /nginx-status {

stub_status on;

access_log off;

}

View nginx's running status

curl http://192.168.255.136/nginx-status

Active connections: 6

server accepts handled requests

640 640 6747

Reading: 0 Writing: 1 Waiting: 5

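Explanation

Active connections: the number of client connections nginx is currently handling (6 here), including idle Waiting connections.

server accepts handled requests: the header for the three counters on the next line.

accepts: total connections accepted since nginx started (640).

handled: total connections handled since nginx started (640); normally equal to accepts unless a resource limit was reached.

requests: total client requests processed (6747).

Reading: connections on which nginx is reading the request header.

Writing: connections on which nginx is writing the response back to the client.

Waiting: with keep-alive enabled, this equals active - (reading + writing): idle connections that nginx has finished serving and that are waiting for the next request.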
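As a quick check of the field meanings above, the status page can be split into named values with a few lines of Python. This is a minimal sketch: `parse_stub_status` is a hypothetical helper, and the sample text is the curl output shown earlier in this section.

```python
def parse_stub_status(text):
    """Turn the stub_status page into a dict of integers."""
    t = text.split()
    # Token positions: "Active connections: 6" -> t[2];
    # "640 640 6747" -> t[7], t[8], t[9];
    # "Reading: 0 Writing: 1 Waiting: 5" -> t[11], t[13], t[15]
    return {
        "active": int(t[2]),
        "accepts": int(t[7]),
        "handled": int(t[8]),
        "requests": int(t[9]),
        "reading": int(t[11]),
        "writing": int(t[13]),
        "waiting": int(t[15]),
    }


sample = """Active connections: 6
server accepts handled requests
 640 640 6747
Reading: 0 Writing: 1 Waiting: 5
"""
status = parse_stub_status(sample)
```

In the sample, waiting (5) equals active (6) minus reading plus writing (0 + 1), which matches the relation given for the Waiting field.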

Ways to collect this data with Prometheus:

1. With docker: docker run -d -p 9113:9113 fish/nginx-exporter -nginx.scrape_uri=http://192.168.255.128/nginx-status

View the result

http://192.168.255.128:9113/metrics

2. With a custom script:

Install the dependencies

pip install requests

pip install flask

pip install prometheus_client

Write the script json_exporter.py with the following content

from prometheus_client import start_http_server, REGISTRY
from prometheus_client.core import CounterMetricFamily, GaugeMetricFamily
import requests
import sys
import time


class NginxStatusCollector(object):
    def __init__(self, endpoint):
        # URL of the nginx stub_status page
        self._endpoint = endpoint

    def collect(self):
        r = requests.get(self._endpoint)
        if r.status_code != 200:
            print("Error: request failed! status_code=", r.status_code)
            return
        # r.text is a str; r.content is bytes on Python 3 and cannot be mixed with str
        fields = r.text.split()
        # Gauges: values that go up and down
        yield GaugeMetricFamily('nginx_active_connections', 'Active connections', value=float(fields[2]))
        yield GaugeMetricFamily('nginx_reading', 'Connections reading the request', value=float(fields[11]))
        yield GaugeMetricFamily('nginx_writing', 'Connections writing the response', value=float(fields[13]))
        yield GaugeMetricFamily('nginx_waiting', 'Idle keep-alive connections', value=float(fields[15]))
        # Counters: totals since nginx started (exposed with a _total suffix)
        yield CounterMetricFamily('nginx_accepts', 'Accepted connections', value=float(fields[7]))
        yield CounterMetricFamily('nginx_handled', 'Handled connections', value=float(fields[8]))
        yield CounterMetricFamily('nginx_requests', 'Client requests', value=float(fields[9]))


if __name__ == '__main__':
    # Usage: json_exporter.py port endpoint
    start_http_server(int(sys.argv[1]))
    REGISTRY.register(NginxStatusCollector(sys.argv[2]))
    while True:
        time.sleep(1)

Run the script (arguments: listening port, stub_status URL)

python json_exporter.py 1234 http://192.168.255.128/nginx-status

View the result

http://192.168.255.128:1234/metrics

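3. Adding a new module to nginx

Using --with-http_stub_status_module as the example

Approach: run nginx -V to see the current build arguments, rerun ./configure with the extra module added, then run make to compile (do not run make install, which would overwrite the existing installation), back up the …/sbin/nginx binary, and replace it with the new one.

[app@localhost sbin]$ ./nginx -V

# Current nginx version

nginx version: nginx/1.15.6

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-28) (GCC)

# Current build arguments

configure arguments: --prefix=/home/app/nginx --user=app --group=apps

# Rerun ./configure with the required module added

./configure --prefix=/home/app/nginx --user=app --group=apps --with-http_stub_status_module

make

# Back up the old binary, then copy the new one into place (paths follow the --prefix above)

cp /home/app/nginx/sbin/nginx /home/app/nginx/sbin/nginx.bk

cp ./objs/nginx /home/app/nginx/sbin/

Then adjust the configuration file as needed and restart nginx.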

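13. redis

Redis website: https://redis.io/

Download: http://download.redis.io/releases/redis-4.0.12.tar.gz

1. Installation

With root privileges

(1) Install

tar xzf redis-4.0.12.tar.gz

cd redis-4.0.12

make

# If make fails with "zmalloc.h:50:31: fatal error: jemalloc/jemalloc.h: No such file or directory", use make MALLOC=libc

make PREFIX=/usr/local/redis install

cp /usr/local/src/redis-4.0.12/redis.conf /usr/local/redis/

(2) Configure

cd /usr/local/redis

vim redis.conf

redis.conf settings for remote connections with a password

# Run in the background

daemonize no ==> daemonize yes

# Set a permanent password

# requirepass foobared ==> requirepass password

# Comment out bind 127.0.0.1; required for remote logins

bind 127.0.0.1 ==> # bind 127.0.0.1

redis.conf settings for password-free connections on an internal network

daemonize yes

protected-mode no

bind 0.0.0.0

(3) Start

# Stop the firewall

systemctl stop firewalld

# Start

./bin/redis-server ./redis.conf

# Check the process

ps aux|grep redis

# Connect with the client and type ping; a PONG reply confirms the installation works

./bin/redis-cli

Note: installing and running as a normal user without root privileges

tar -zxf redis-4.0.12.tar.gz

cd redis-4.0.12

make

src/redis-server

Some warnings may appear, but Redis is usable.

2. Common commands

Connect with the client and run info to view information about the Redis server.

For common commands, see: https://blog.csdn.net/lyl0724/article/details/77070908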

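14. Extracting and compressing

1. Extracting

Extract a tar.gz into the current directory:

tar zxvf $name.tar.gz

Extract a tar.gz into a given directory:

tar zxvf $name.tar.gz -C $target_dir

2. Compressing

Basic compression

tar zcvf $TAR_NAME.tar.gz $dir

Excluding a directory or file

tar zcvf $TAR_NAME.tar.gz --exclude=<file or directory to exclude> <directory to compress>

For example:

2.1 Exclude the file a.txt in directory a

tar zcvf a.tar.gz --exclude=a/a.txt a

To exclude several items, add one --exclude option per item.

2.2 Exclude directory b inside directory a

tar zcvf a.tar.gz --exclude=a/b a

Note: do not put a trailing / after directory b (a/b/ does not work). To exclude several items, add one --exclude option per item.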
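The same exclusion can be done from Python with the standard tarfile module, which may be handy inside deployment scripts. The sketch below is an illustration under assumptions: `make_archive` is a hypothetical helper, and the directory layout (a/a.txt, a/keep.txt, a/b/c.txt) is made up in a temporary directory for the demo.

```python
import os
import tarfile
import tempfile


def make_archive(archive_path, source_dir, excluded):
    """Create a .tar.gz of source_dir, skipping the member paths in excluded."""
    def skip_excluded(member):
        for path in excluded:
            # Returning None drops the member; a dropped directory is not descended into
            if member.name == path or member.name.startswith(path + "/"):
                return None
        return member

    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(source_dir, arcname=os.path.basename(source_dir), filter=skip_excluded)


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "a")
    os.makedirs(os.path.join(src, "b"))
    for rel in ("a.txt", "keep.txt", os.path.join("b", "c.txt")):
        with open(os.path.join(src, rel), "w") as fh:
            fh.write("demo\n")
    archive = os.path.join(tmp, "a.tar.gz")
    # Equivalent in spirit to: tar zcvf a.tar.gz --exclude=a/a.txt --exclude=a/b a
    make_archive(archive, src, excluded=["a/a.txt", "a/b"])
    with tarfile.open(archive) as tar:
        names = sorted(tar.getnames())
```

After the run, `names` holds only the top-level directory and the file that was not excluded.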

15. Tomcat monitoring

GitHub: https://github.com/prometheus/jmx_exporter

Prepare tomcat.yml and jmx_prometheus_javaagent-0.3.1.jar

Tomcat monitoring configuration: https://github.com/prometheus/jmx_exporter/blob/master/example_configs/tomcat.yml

Tomcat monitoring jar: https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar

Case 1: the web application runs in Tomcat as a war package

vim tomcat/bin/catalina.sh

Add:

JAVA_OPTS="$JAVA_OPTS -javaagent:/<path>/jmx_prometheus_javaagent-0.3.1.jar=9151:/<path>/tomcat.yml"

<path> is the directory where the two files are stored.

Start Tomcat

Open http://IP:9151/metrics to see the Tomcat metrics.

Case 2: the web application runs as a jar (not yet verified)

java -javaagent:/<path>/jmx_prometheus_javaagent-0.3.1.jar=9151:/<path>/tomcat.yml -jar yourWebJar.jar

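16. Installing pip packages offline

# On a machine with network access, download the packages

pip download -r requirements.txt -d /tmp/packs/

# On the offline machine, install from the copied directory

pip install --no-index --find-links=/tmp/packs/ -r requirements.txt

Miscellaneous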

docker run --net=host --env itemName="fdn" --env appId=6001 --env appsId=6000 -v /data/projects/logs/fdn:/data/projects/logs/fdn:rw --name mytomcat --rm -d mytomcat:8.5.35

Giving a CentOS 7 virtual machine a static IP (/etc/sysconfig/network-scripts/ifcfg-ens33)

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

IPADDR=192.168.255.128

HWADDR=00:0C:29:80:EE:9C

GATEWAY=192.168.255.2

DNS1=8.8.8.8

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=97f35be6-94b0-48d8-9a4b-e9f20361ea6c

DEVICE=ens33

ONBOOT=yes

Adding users and groups

groupadd apps

useradd app -g apps

passwd app

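Permissions

Give other users in the same group read and write access to the projects directory (this also works for a file)

Add the permissions for the group: chmod g+rw projects

Set permissions so the owner can read, write, and execute, while the group and others can read and execute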

chmod 755 /data/projects/apache-tomcat-8.0.35/conf/*
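What an octal mode such as 755 grants can be checked with Python's standard stat module. This is a small sketch; `describe` is a hypothetical helper that renders a mode the way ls -l would for a regular file.

```python
import stat


def describe(mode):
    """Render permission bits as an ls-style string for a regular file."""
    return stat.filemode(stat.S_IFREG | mode)


mode_755 = describe(0o755)
mode_777 = describe(0o777)
# Effect of chmod g+rw on a file that starts at 755
mode_group_rw = describe(0o755 | stat.S_IRGRP | stat.S_IWGRP)
```

Here 755 gives the owner full access and everyone else read and execute only; write access for all users would require 777.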

Granting sudo privileges

vim /etc/sudoers

root ALL=(ALL) ALL

app ALL=(ALL) NOPASSWD:ALL

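Counting lines

Count the lines of a text file that contain a keyword

grep -c 'keyword' $file

Count every occurrence of the keyword (a line may contain it more than once)

grep -o 'keyword' $file |wc -l

Example:

Count the lines of a text file that contain "getPricingResult code:" followed by a character other than 0

grep 'getPricingResult code:[^0]' ./t1.log | wc -l

This works on several files at once, not just one

grep 'getPricingResult code:[^0]' ./*.log | wc -l

Viewing the first or last lines of a file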

head -n 2 $file

tail -n 2 $file

Finding files

find ./* -name string_interpolation.rb