Hive Distributed Installation and Deployment

Installing and Deploying Hive

Before you begin, make sure Hadoop and ZooKeeper are up and running.

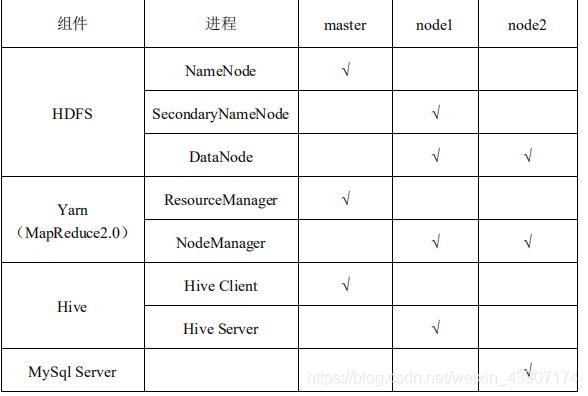

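As shown in the figure, the Hive client is installed on the master node and the MySQL server (MariaDB here) on node2. node1 runs the Hive metastore, which keeps its metadata in MySQL on node2.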

The following packages are required:

mysql-connector-java-5.1.5-bin.jar

apache-hive-2.3.7-bin.tar.gz

I. Configure node2 first

Copy mysql-connector-java-5.1.5-bin.jar to node2.

1. Install the database (from an offline yum repository)

[root@node2 ~]# yum -y install mariadb-server

2. Start the MySQL (MariaDB) service and enable it at boot

[root@node2 ~]# systemctl start mariadb

[root@node2 ~]# systemctl enable mariadb

3. Initialize MySQL, set the root password, and test logging in

[root@node2 ~]# mysql_secure_installation

Here the password is set to 000000.

4. Log in to the database and create a user

[root@node2 ~]# mysql -u root -p000000

Create the user:

create user 'root'@'%' identified by '000000';

Allow remote connections:

grant all privileges on *.* to 'root'@'%' with grant option;

Reload the privilege tables:

flush privileges;
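node1 later reaches MySQL on node2 over the network (its JDBC URL is `jdbc:mysql://node2:3306/...`), so it can help to confirm from node1 that port 3306 on node2 accepts TCP connections before going further. The function below is a minimal sketch and not part of the original procedure: `port_open` is a hypothetical helper name, and it assumes bash's `/dev/tcp` redirection and the coreutils `timeout` command. It only tests reachability, not the user name or password.

```shell
#!/usr/bin/env bash
# Sketch: check whether a TCP port on a host accepts connections.
# Uses bash's built-in /dev/tcp redirection inside coreutils `timeout`.
# Returns 0 if a connection opens within the limit (default 3 s), non-zero otherwise.
port_open() {
  local host="$1" port="$2" secs="${3:-3}"
  # $1/$2 inside the single-quoted script are the host/port passed after "_".
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Example, run on node1:
#   port_open node2 3306 && echo "node2:3306 is reachable"
```

If the check fails while MariaDB is running on node2, a firewall on node2 is one common cause. The same check against `node1 9083` from master tells you whether the address in master's `hive.metastore.uris` is listening; that port is served by the Hive metastore service (`hive --service metastore`) on node1.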

5. Send the jar from node2 to node1

[root@node2 ~]# scp mysql-connector-java-5.1.5-bin.jar node1:/root

II. Configure master and node1

1. Transfer the tar package to master

Transfer it with SecureFX.

2. On master, extract the Hive archive and send the extracted directory to node1

[root@master ~]# tar -zxvf apache-hive-2.3.7-bin.tar.gz

[root@master ~]# scp -r apache-hive-2.3.7-bin node1:/root

3. Set the environment variables (master and node1)

[root@master ~]# vi /etc/profile

[root@node1 ~]# vi /etc/profile

Add:

export HIVE_HOME=/root/apache-hive-2.3.7-bin

export PATH=$PATH:$HIVE_HOME/bin

Apply the environment variables:

[root@master ~]# source /etc/profile

[root@node1 ~]# source /etc/profile
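Editing /etc/profile by hand on two machines is easy to get wrong, and re-running a setup script would append the exports a second time. Below is a minimal sketch of an idempotent append, assuming bash and grep; `append_once` is a hypothetical helper, not a standard command.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not already there,
# so re-running the setup does not duplicate the exports in /etc/profile.
append_once() {
  local line="$1" file="$2"
  # -q quiet, -x whole-line match, -F fixed string (no regex)
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example, as root on master and on node1. Single quotes keep $PATH and
# $HIVE_HOME unexpanded until the profile is sourced:
#   append_once 'export HIVE_HOME=/root/apache-hive-2.3.7-bin' /etc/profile
#   append_once 'export PATH=$PATH:$HIVE_HOME/bin' /etc/profile
#   source /etc/profile
```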

4. Configure the client on master

Replace the jline jar in Hadoop's lib directory:

[root@master ~]# cp /root/apache-hive-2.3.7-bin/lib/jline-2.12.jar /opt/bigdata/hadoop-3.0.0/lib

5. Add hive-site.xml

[root@master ~]# cd /root/apache-hive-2.3.7-bin/conf

[root@master conf]# vi hive-site.xml

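    <configuration>
      <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive_remote/warehouse</value>
      </property>
      <property>
        <name>hive.metastore.local</name>
        <value>false</value>
      </property>
      <property>
        <name>hive.metastore.uris</name>
        <value>thrift://node1:9083</value>
      </property>
    </configuration>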
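The XML markup of these files is easy to lose or mistype, so one option is to generate the file from name/value pairs instead of typing the tags. The sketch below uses a hypothetical helper, `make_site_xml`, assuming bash and sed. It writes the usual `<configuration>`/`<property>` layout of Hadoop-style *-site.xml files and XML-escapes each value, which matters for JDBC URLs that contain `&`.

```shell
#!/usr/bin/env bash
# Sketch: print a Hadoop/Hive-style *-site.xml built from name/value pairs.

# Escape the characters that are special in XML element content.
# "&" must be replaced first so the later "&lt;"/"&gt;" are not re-escaped.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

make_site_xml() {
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "make_site_xml: expected name/value pairs" >&2
    return 1
  fi
  printf '<?xml version="1.0" encoding="UTF-8" standalone="no"?>\n'
  printf '<configuration>\n'
  while [ $# -gt 0 ]; do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "$(xml_escape "$1")" "$(xml_escape "$2")"
    shift 2
  done
  printf '</configuration>\n'
}

# Example on master (overwrites any existing hive-site.xml):
#   make_site_xml \
#     hive.metastore.warehouse.dir /user/hive_remote/warehouse \
#     hive.metastore.local false \
#     hive.metastore.uris thrift://node1:9083 \
#     > /root/apache-hive-2.3.7-bin/conf/hive-site.xml
```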

6. On node1, copy the jar into Hive's lib directory

[root@node1 ~]# cp mysql-connector-java-5.1.5-bin.jar apache-hive-2.3.7-bin/lib/

On node1, create the configuration files and edit them:

[root@node1 ~]# cd /root/apache-hive-2.3.7-bin/conf

[root@node1 conf]# cp hive-env.sh.template hive-env.sh

Add to hive-env.sh:

HADOOP_HOME=/opt/bigdata/hadoop-3.0.0/ (adjust to your actual path)
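If this line is added by a script rather than by hand, re-running the script should replace the existing assignment instead of adding another one. A minimal sketch, assuming bash, awk and mktemp; `set_env_var` is a hypothetical helper. Lines starting with `#` are left alone, so commented examples from the template survive.

```shell
#!/usr/bin/env bash
# Sketch: set KEY=value in a shell-style env file such as conf/hive-env.sh.
# An existing "KEY=..." or "export KEY=..." line is replaced (only the first
# one is kept); otherwise the assignment is appended at the end.
set_env_var() {
  local key="$1" value="$2" file="$3" tmp
  [ -f "$file" ] || : > "$file" || return 1
  tmp="$(mktemp)" || return 1
  # Pass key/value through the environment so awk does not process escapes.
  KEY="$key" VALUE="$value" awk '
    {
      line = $0
      sub(/^[ \t]+/, "", line)          # ignore leading blanks
      sub(/^export[ \t]+/, "", line)    # treat "export KEY=" like "KEY="
      if (index(line, ENVIRON["KEY"] "=") == 1) {
        if (!done) print ENVIRON["KEY"] "=" ENVIRON["VALUE"]
        done = 1
        next
      }
      print
    }
    END { if (!done) print ENVIRON["KEY"] "=" ENVIRON["VALUE"] }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example on node1:
#   set_env_var HADOOP_HOME /opt/bigdata/hadoop-3.0.0/ /root/apache-hive-2.3.7-bin/conf/hive-env.sh
```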

Create the hive-site.xml file:

[root@node1 conf]# vi hive-site.xml

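    <configuration>
      <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive_remote/warehouse</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://node2:3306/hive?createDatabaseIfNotExist=true</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
      </property>
      <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>000000</value>
      </property>
      <property>
        <name>hive.metastore.schema.verification</name>
        <value>false</value>
      </property>
      <property>
        <name>datanucleus.schema.autoCreateAll</name>
        <value>true</value>
      </property>
    </configuration>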
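The four `javax.jdo.option.*` properties are what tell the metastore how to reach MySQL on node2, so a quick check that none of these names was dropped while editing can save a confusing startup error. A minimal sketch, assuming bash and grep; `missing_props` is a hypothetical helper that does a plain text search, not real XML parsing.

```shell
#!/usr/bin/env bash
# Sketch: report which property names are missing from a *-site.xml file.
# Matching is a fixed-string search for <name>NAME</name>; the XML is not
# parsed, so a property inside an XML comment would still count as present.
# Prints one "missing: NAME" line per absent name; returns 1 if any are missing.
missing_props() {
  local file="$1" name status=0
  shift
  for name in "$@"; do
    if ! grep -qF "<name>${name}</name>" "$file"; then
      echo "missing: $name"
      status=1
    fi
  done
  return "$status"
}

# Example on node1:
#   missing_props /root/apache-hive-2.3.7-bin/conf/hive-site.xml \
#     javax.jdo.option.ConnectionURL javax.jdo.option.ConnectionDriverName \
#     javax.jdo.option.ConnectionUserName javax.jdo.option.ConnectionPassword
```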

Start Hive and run a test (list the databases):

[root@node1 ~]# cd /root/apache-hive-2.3.7-bin/

[root@node1 apache-hive-2.3.7-bin]# bin/hive

which: no hbase in (/opt/bigdata/hadoop-3.0.0/bin:/opt/bigdata/hadoop-3.0.0/sbin:/opt/bigdata/jdk1.8.0_161/bin:/root/zookeeper-3.4.14/bin:/opt/bigdata/hadoop-3.0.0/bin:/opt/bigdata/hadoop-3.0.0/sbin:/opt/bigdata/jdk1.8.0_161/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/apache-hive-2.3.7-bin/bin:/root/bin:/root/apache-hive-2.3.7-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/apache-hive-2.3.7-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/bigdata/hadoop-3.0.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/root/apache-hive-2.3.7-bin/lib/hive-common-2.3.7.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases; (lists the databases)

OK

default

Time taken: 10.89 seconds, Fetched: 1 row(s)

hive>