Tekton CD in Practice (Part 3): From GitHub to a k8s Cluster

Tekton CD in Practice (Part 2): From GitHub to a k8s Cluster

(If you are interested in cloud native technology, feel free to follow the WeChat official account “云原生手记”.)

(Figure: CI/CD logic diagram)

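CD overview

The CD I describe here means deploying a user's application into a k8s cluster. k8s is widely used now; it makes managing applications convenient and deploying them even more so: kubectl apply -f <file name> is all it takes. I will cover two ways of doing CD:

- one implemented with the kubectl command;

- one implemented with client-go. The principle is the same; only the implementation differs.

Of course, existing CD tools such as Argo CD are also very good and already mature. I have not used Argo CD in a project yet (I haven't learned it yet, haha...). Also, because I need to collect information about every deployment and upload it to a centrally managed pipeline platform for statistics, I have chosen client-go for CD for now, since it makes writing code convenient.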

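The kubectl approach

First, build a kubectl image. To use it, the user has to specify two paths:

- the path of the application's yaml files;

- the path of the kubeconfig file.

So the kubeconfig is best kept in the user's project; the CD step can then deploy the application to the cluster identified by the kubeconfig path the user specifies. Of course, the environment where Tekton runs must have network connectivity to the cluster the kubeconfig points to.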
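To make the two paths concrete, here is a minimal Go sketch that builds the kubectl invocation such a step would run. The helper name buildApplyCommand and the sample paths are hypothetical and not part of the original project; -f and --kubeconfig are standard kubectl flags. The command is only constructed and printed, never executed.

```go
package main

import (
	"fmt"
	"os/exec"
	"strings"
)

// buildApplyCommand (hypothetical helper) prepares "kubectl apply" for one
// manifest path and one kubeconfig path. It does not run the command.
func buildApplyCommand(yamlPath, kubeconfigPath string) *exec.Cmd {
	return exec.Command("kubectl", "apply", "-f", yamlPath, "--kubeconfig", kubeconfigPath)
}

func main() {
	// Both paths are illustrative; in practice they come from the user's project.
	cmd := buildApplyCommand("./deploy/yaml", "./deploy/kubeconfig")
	fmt.Println(strings.Join(cmd.Args, " "))
}
```

Passing --kubeconfig explicitly is one option; the Task later in this article instead mounts the file at the default location so that no flag is needed.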

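Building the kubectl image

Ready-made kubectl images already exist on Docker Hub and can be used directly. If you need to modify the Dockerfile, see the GitHub repository (https://github.com/bitnami/bitnami-docker-kubectl), which contains the Dockerfiles used to build kubectl images for each k8s version. My k8s cluster runs v1.17, so I use the v1.17 kubectl Dockerfile as the reference: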

FROM docker.io/bitnami/minideb:buster
LABEL maintainer "Bitnami "

COPY prebuildfs /
# Install required system packages and dependencies
RUN install_packages ca-certificates curl gzip procps tar wget
RUN wget -nc -P /tmp/bitnami/pkg/cache/ https://downloads.bitnami.com/files/stacksmith/kubectl-1.17.4-1-linux-amd64-debian-10.tar.gz && \
    echo "5991e0bc3746abb6a5432ea4421e26def1a295a40f19969bb7b8d7f67ab6ea7d /tmp/bitnami/pkg/cache/kubectl-1.17.4-1-linux-amd64-debian-10.tar.gz" | sha256sum -c - && \
    tar -zxf /tmp/bitnami/pkg/cache/kubectl-1.17.4-1-linux-amd64-debian-10.tar.gz -P --transform 's|^[^/]*/files|/opt/bitnami|' --wildcards '*/files' && \
    rm -rf /tmp/bitnami/pkg/cache/kubectl-1.17.4-1-linux-amd64-debian-10.tar.gz
RUN apt-get update && apt-get upgrade -y && \
    rm -r /var/lib/apt/lists /var/cache/apt/archives

ENV BITNAMI_APP_NAME="kubectl" \
    BITNAMI_IMAGE_VERSION="1.17.4-debian-10-r97" \
    HOME="/" \
    OS_ARCH="amd64" \
    OS_FLAVOUR="debian-10" \
    OS_NAME="linux" \
    PATH="/opt/bitnami/kubectl/bin:$PATH"

USER 1001
ENTRYPOINT [ "kubectl" ]
CMD [ "--help" ]

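This kubectl Dockerfile does two things:

- 1. installs kubectl;

- 2. runs kubectl --help when the container starts.

What I need instead is:

- 1. install kubectl;

- 2. run kubectl apply -f . (I plan to do this with a shell script in a step of the Task).

For my own Dockerfile I removed the following two instructions, because I want to run my own commands: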

ENTRYPOINT [ "kubectl" ]

CMD [ "--help" ]

Specifying the yaml and kubeconfig paths

I pass the yaml path into the container as a Tekton parameter. The kubeconfig file is mounted from a ConfigMap into the pod under /tekton/home/.kube/.
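Mounting the file under the home directory works because kubectl, when no --kubeconfig flag is given, looks at the KUBECONFIG environment variable and then falls back to $HOME/.kube/config. The Go sketch below only illustrates that lookup order. The helper name defaultKubeconfig is hypothetical, it simplifies real behavior (KUBECONFIG may list several files), and it assumes the step's HOME is /tekton/home, as the mount path in this article suggests.

```go
package main

import (
	"fmt"
	"path"
)

// defaultKubeconfig (hypothetical helper) mimics the usual lookup order when no
// --kubeconfig flag is given: the KUBECONFIG variable first, then
// $HOME/.kube/config. Simplification: a real KUBECONFIG may list several files.
func defaultKubeconfig(kubeconfigEnv, home string) string {
	if kubeconfigEnv != "" {
		return kubeconfigEnv
	}
	return path.Join(home, ".kube", "config")
}

func main() {
	// /tekton/home is the mount location used in this article. The ConfigMap
	// key "config" becomes the file name inside the mounted directory.
	fmt.Println(defaultKubeconfig("", "/tekton/home"))
}
```

This is why the ConfigMap key below is named config: each key of a mounted ConfigMap shows up as a file with that name in the mount directory.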

configmap.yaml:

apiVersion: v1

kind: ConfigMap

metadata:

name: kubectl-config

namespace: nanjun

data:

config: |

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5RENDQWJDZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJd01EUXhPREV5TkRNMU0xb1hEVE13TURReE5qRXlORE0xTTFvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBS1lzCnR5OVBmREt1S01WZ0NXRURHVlhqakcxSm8vNGhibk15UWowbzRjS3FyQ0F4VVpkNXNEUVd2dHJmR1BJUXJsemQKMEpPOXo1SnhvZ01Lb2grMGdmYUcxTDEyRzVzWWYxenNUcmNtUGFReG5qNk1LajdScVBua2ZTdW1xUDZZRklORApCM3ZnMnZCUCtoQkhNZCtGTjZBcVFGZlQwaHNTNEtCd2dkMWx3aXlyQXIzZVRsWlVCUjFZWmxKZkFyRUVOb01BCjdrYVdwa3I2N2ZxTGdMVFlCRXNIREx3VmE2RExSMGJjSlZGS0VMWXV2VkZ5eTlxSnFMQmIrVWR2ZVU4L3dZeGQKT1pHVGNydzQ5WE5LZ3EyT1J3bHBxRi9DSXl2OThKcVBIbnlLQnpVN1ZrOVFxQWE5SFBIYXpUbHYvMmd0ZmgxSAp1aFpqYjRTOTluMHJUYWNyUVNVQ0F3RUFBYU1qTUNFd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFLR3lNQVJHSnFNT1BkNkt0anhCaGs1SUNBTVIKenN0Q1RnVGQzT2ZjRzloVlBwVndGQmhSejd0ZFpSNWljRHpyU2JOV29KdzdpRVhlbURpTkhQdGxVM1dWa2dOQgpNWkNkb1hYOEhBVEFyV1Y1aXpZTnU3Nmd2bW5MUnVPRkhGOWNhRExOcnFUdEs4WmlwSXFIdlI1SGtpblZkWW9kClRZdU01QjdEQTZRRWE0K1lsMWFHQkNPMUlzd2RWaEpqVzhVd2IyK1Nibm9lWi95NE5XVWRzYTI1K2M1dWlVMUMKZ3JNNmdxQ1dteS9KQUxKSlBVRUsvYlppZ0Y2eUZOR290V2hhVlBJbG5VdXFFbW9SNWRnV0hDYkJQZXNrYm5yYQpNRTBvNVFWVzdXaUxzS25IS0ZneFhsN1EveWdlQm1WY3A1bXBoZTJiS21LcFh5dGpNc1U4bno1LzYzYz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

server: https://10.142.113.40:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: coderaction

name: coderaction

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: coderaction

user:

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Im5CUjhEOGxtTU9naUhKQlFiX1VRUGFZV3AyTmNlWWl4Rjd5OUZ3RUg5c1EifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJja2EiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlY3JldC5uYW1lIjoiY2thLTA0MjgtdG9rZW4tOTlwazIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiY2thLTA0MjgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiOTQ2NTRjYy01ZDA3LTRmZDMtODcxNS1mMzkzYmQzYTExN2YiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6Y2thOmNrYS0wNDI4In0.LnhZxoNnftaUYc0xO2uTvn9TccvPgAnDelV1aIfWC7d0CVC_SHOS_AfSH3P1Yyq-Ny0yP8l1MtdbMfF_p3t_suG3ouleZMoIx9Lmt7WIq53adEINTh3fiaZ0zfEvZowNAxVJyodkwNiUiUprMlN9oh0bJNjwkOkjogx-9eEItSGVHq3eZ3XBlJ-g3GAJ27tEClMWg0raCGhmZKCT4m0rzoBNTf32QsNXOJGC97KN4Gx7Am2RWBTrpztCw5E39lJgYwYiDn7uHBf6fBQagj4bV6vTB4YJg-w7pDxUIwrRAgqNLPqwAcAvmeurEBBVuHdT43WXB1kLdz-vZwl4cob_Nw

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM4akNDQWRxZ0F3SUJBZ0lJRTdOODA3Wm9lbHN3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TURBME1UZ3hNalF6TlROYUZ3MHlNVEEwTVRneE1qUXpOVGRhTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXRRSkRkSUovWmZkMmpJTXMKSFh1SmlpcC8xUWpxMFUzakZrVDlDL3cxcEZ2VlRqaFhqL0VYYkF5NStzcTJycG1sSlZMRlQ1R1U5b0MrNnN0MgpCVXpqS1NlT3NsNHFlNTc0WXlXM285M1VrNVVtUXVuMnp4RzFBNDR0WXJURXJZdkpYNGhFcHBrLzBCbVpiOXdqClJaWDFTTnZzQjFseC9CTDlCVmhaOHM1Wm1QSmdLcm95dm0vSm5ycmFvcnV5S1RreENZVmxwbHVBblFGb0tUMjcKRFA0YVZsZkZKWTExUnhzZXhSayt4L2hBVWt6VE9jVXFNVHU4Y25aVkliRFYrZ1Y5blNPUVBMelRDOVNNbi9DaApGdHA2Nm5QWFpkQWIxUWpCWW5RV2FTZTkwcTQzWTVYT0grcmhSREFsZEZLMER5OEhteUhjTDd4NVEyVUpRQ2hoCnpmaE1tUUlEQVFBQm95Y3dKVEFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFEdklVMWZJQmw2ZC8veTd1VzZnby9FRFZBYlpTckt5eGNOVgprMUdqdVRBR0dpNldja2FkQ2IwdWJIUTkxWS9GbVRyRm5nb25rckpuSWF2R2R1bUh5KzZCKzZpSUliSE1PeUdaCjduSTJMZWExNkl2MEJkOGE3amhzbTJuaWMwWlVXOVhQMElobjhQT0hMK0MyZ05lcDBIQ3pCRVFjQVA3bjMvR2oKZm9CTjhjTi9vNnhXQUR0U2U0UjR0YWJuYWtGZTVxU1Y0K2pBTmRyTXVYWDFDdjNUNlN6VGxPbVVZL0Z6QXlKTApnell2YUd1QnhxZlpmMkxoUXcxVElsZW82ajdkV0xWeGhySk15MlJKWG4wV1VlU2VRb3lFUVNzdDFkaVhyNmtkCkRrcTNsaE1EN1IzQ2Y0NXBlcU1CYmRTWlg0S3p2Yzd1SE1MTjVkeXpwV25Hb2FhbFhzST0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBdFFKRGRJSi9aZmQyaklNc0hYdUppaXAvMVFqcTBVM2pGa1Q5Qy93MXBGdlZUamhYCmovRVhiQXk1K3NxMnJwbWxKVkxGVDVHVTlvQys2c3QyQlV6aktTZU9zbDRxZTU3NFl5VzNvOTNVazVVbVF1bjIKenhHMUE0NHRZclRFcll2Slg0aEVwcGsvMEJtWmI5d2pSWlgxU052c0IxbHgvQkw5QlZoWjhzNVptUEpnS3JveQp2bS9KbnJyYW9ydXlLVGt4Q1lWbHBsdUFuUUZvS1QyN0RQNGFWbGZGSlkxMVJ4c2V4UmsreC9oQVVrelRPY1VxCk1UdThjblpWSWJEVitnVjluU09RUEx6VEM5U01uL0NoRnRwNjZuUFhaZEFiMVFqQlluUVdhU2U5MHE0M1k1WE8KSCtyaFJEQWxkRkswRHk4SG15SGNMN3g1UTJVSlFDaGh6ZmhNbVFJREFRQUJBb0lCQUYxU3JtNmFmWTZYMktJMwpXdjVVWENSRkp5VXg5TWMyN2Zia1dNYmVJTlg5bHVzK056NzZZVVlQQmJBYzViVDllRnpXNE8zV05FUW5Pc2VaCllONzR0a0hZcUVTa01pa01YQ25hSDJVNEVNcUtZbkNyYWRsMjJxbmJtdURDTElrQmdqQmo5R2trcC9ibHkrc1YKUjRZdis0ZTJBMm9DbnJjRkh6aXJSYXplNE9qdVpyZS8wYzM1SGFzSGtiMUhuRGRDbVNLcmdyQloybURpZTUvWgpPdWJCem82UjI1UG1Yb0xUczFUamZzbDB1bnE5UWYrR2sxUDg1U0txb1Qza2dGRDJZc1VraHZjalRRdmNuWkIyCmlYOVBBMnRUNlhxaGJxMnFmRHRLei8wM1k1MGxqQnZ6c0NmSWp4bHViSHdSWHhwNGh5SXI0V2xFQ0xwakNtY1AKc0ovVTY1RUNnWUVBN2hFblVqNUZlVEljRWZxNC9jYVMzY29hWHNTMXBHMXJBcEY5TjVXdjNjbDdJMGxwd0lHYwo5L1E0TVYzWFM3VVo0eDhKZyt4MGRJbGl2RjVnT0IzcFZXR3luMnhUeHIxbDlGMzllYzFwcU9VNllQSStoM2svCm1NRGlpR1NyekRnOUtVUlFlM3dVOTNjbzNudGdSZ3doaVcrVnVzandITlRDaUV0MnAzVDIrcDBDZ1lFQXdxVFAKTGw5dG1tZ3pqbXpNVVRmcmlHckY2VGlEMzlFbCtWSzFoNVNEMUc2ZEZINCt4QnJMVVAxTkVIK2djZEdQeWY4aQpveE1nM3dwejF6L1gwdk93cWkvTFFEdVhrdE1jN3NtVkVkVGplL0VtNk5RbFpxSlFReXhNM1YyQkhMZDVLUFl2CmN1ZUlmWm9hY0V4VG9sQXUvY0pzbjQ0NjhHbHNoK1Vqbm9QM2l5MENnWUFjejFDZDRGRlNBR0ZyUDVkQmh0VmgKSjhNWE11RDBmQlZXSXpzdkRkdFJrTDlwSHNwQWRLOEZSclhDSzZRUlVtSkduUXZ1dmgrOXRwNlBRekNMdWZyeAp6VGZybVJWdVdKOU0razdoZloxS3hpclJicDlvajZERm9Kb0pmWDFZNG5sc1ZBc1ZWb2ZIQnRHWVV2L3NtaTA0Cno1c2tGb3NRUWlNa2tWVlRvSkQrOVFLQmdFQ1ZFSTB4YXB0dDhaVlRNaVBNcXlEVFZLR0NkL2NlWFR3eG5qdkQKSWs2cytQK2d0OUMzbHpoakkxdlREUGhXOFIrendObGM4bTR1K0txMTZ6VjZWK2JQL3Q5c0ptbTRGSVNDYkN6RApkMHRiZzI2RFhYbUZaNTR5SjdyWFdJeWZyOXJRZklQaW9ONFQ4S3ZNRjMvbW5RRGpyc2p1RjA1SG5KUW1pa0FCClIzUnRBb0dBYitQTGVUVWFHMDc4M3pSckJ
uWENyVi9SaXBjT3U2czBjdkxiQ2x6dGw5Ymc1QStITEFHUzB5SmYKWWUwUS9JSGZ0N1Vjb0UwRytOaWVFUmE5c2Y3SDFJNk1ZcFUvYzdKdHlNNmdWbS9vMGZibHNjL3JHdVl3MDVtSwo1WE9hUlFhNkM4eWllVVVtZ3dnaXhjcnZ3YlJ2UmZPaHMwQlhRMW44a3VxWDBuS2REY0U9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

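Adding the deployment step on top of CI:

The main change is to task.yaml: add a parameter for the yaml file path, add the deployment step, and mount the kubeconfig file.

task.yaml: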

apiVersion: tekton.dev/v1alpha1
kind: Task
metadata:
  name: build-and-push
  namespace: nanjun
spec:
  inputs:
    resources:
      - name: golang-resource
        type: git
    params:
      - name: dockerfile-path
        type: string
        default: $(inputs.resources.golang-resource.path)/
        description: dockerfile path
      - name: yaml-path # path of the yaml files to deploy
        type: string
        default: $(inputs.resources.golang-resource.path)/yaml
        description: yaml path
  outputs:
    resources:
      - name: builtImage
        type: image
  steps:
    - name: build-with-golangenv
      image: golang
      script: |
        #!/usr/bin/env sh
        cd $(inputs.resources.golang-resource.path)/
        export GO111MODULE=on
        export GOPROXY=https://goproxy.io
        go mod download
        go build -o main .
    - name: image-build-and-push
      image: docker:stable
      script: |
        #!/usr/bin/env sh
        docker login registry.nanjun
        docker build --rm --label buildNumber=1 -t $(outputs.resources.builtImage.url) $(inputs.params.dockerfile-path)
        docker push $(outputs.resources.builtImage.url)
      volumeMounts:
        - name: docker-sock
          mountPath: /var/run/docker.sock
        - name: hosts
          mountPath: /etc/hosts

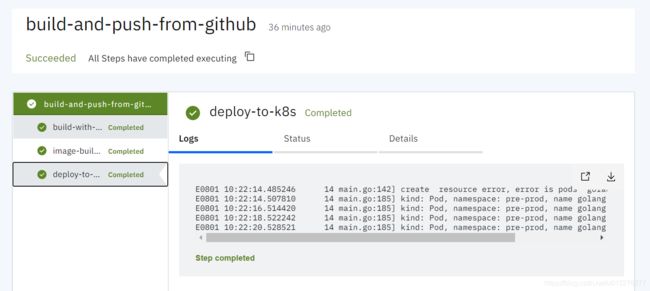

    - name: deploy-to-k8s # new deployment step
      image: registry.nanjun/tekton/kubectl:v1.0
      script: |
        #!/usr/bin/env sh
        path=$(inputs.params.yaml-path)
        ls $path
        ls $HOME/.kube/
        # debug output only: this prints the kubeconfig, including its credentials, to the step log
        cat $HOME/.kube/config
        files=$(ls $path)
        for filename in $files
        do
          kubectl apply -f "$path/$filename"
          if [ $? -ne 0 ]; then
            echo "failed"
            exit 1
          else
            echo "succeed"
          fi
        done
      volumeMounts:
        - name: kubeconfig
          mountPath: /tekton/home/.kube/
  volumes:
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock
    - name: hosts
      hostPath:
        path: /etc/hosts
    - name: kubeconfig # mounts the kubeconfig file
      configMap:
        name: kubectl-config
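The shell loop in the deploy-to-k8s step applies every entry in the yaml directory and stops at the first failure. The same control flow can be sketched in Go. The helper name applyAll is hypothetical, and the apply function is injected so the sketch runs without kubectl or a cluster; in a real step it would invoke kubectl apply -f on the file.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// applyAll (hypothetical helper) mirrors the shell loop: it visits every entry
// in dir in name order, calls apply on it, and stops at the first failure.
// It returns how many entries were applied successfully.
func applyAll(dir string, apply func(file string) error) (int, error) {
	entries, err := os.ReadDir(dir)
	if err != nil {
		return 0, err
	}
	applied := 0
	for _, entry := range entries {
		file := filepath.Join(dir, entry.Name())
		if err := apply(file); err != nil {
			return applied, fmt.Errorf("apply %s: %w", file, err)
		}
		applied++
	}
	return applied, nil
}

func main() {
	// Create a throwaway directory with two placeholder manifests.
	dir, err := os.MkdirTemp("", "yaml")
	if err != nil {
		panic(err)
	}
	defer os.RemoveAll(dir)
	for _, name := range []string{"deployment.yaml", "service.yaml"} {
		if err := os.WriteFile(filepath.Join(dir, name), []byte("# placeholder\n"), 0o600); err != nil {
			panic(err)
		}
	}
	// The injected function only prints what a real step would run.
	n, err := applyAll(dir, func(file string) error {
		fmt.Println("would run: kubectl apply -f", file)
		return nil
	})
	fmt.Println("applied:", n, "error:", err)
}
```

Stopping at the first failure matches the exit 1 in the script, which makes the Tekton step, and therefore the TaskRun, fail.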

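yaml files for the kubectl deployment

For the actual files, see the GitHub repository below, under kubectl2apply.

GitHub repository: https://github.com/fishingfly/githubCICD.git

For the CI approach, see the previous article, "Tekton CI in Practice (Part 1): From GitHub to a Harbor Registry"; here I only added the kubectl deployment step to the Task.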

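CD with client-go

The reason for deploying with client-go is that I need to upload some of the CI/CD deployment results to a statistics platform, and writing code is more convenient and flexible than using kubectl. The deployment logic here simply deletes the resource object described by the yaml and then creates it again, which is rather crude; you can of course add code later to implement a canary release flow (I will post an update if I get the chance). Finally, I build the client-go program into an image; when deploying, you specify the paths of the yaml files and the kubeconfig file stored in the project: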

flag.StringVar(&kubeconfigPath, "kubeconfig_path", "./test-project/deploy/develop/kubeconfig", "kubeconfig_path")

flag.StringVar(&yamlPath, "yaml_path", "./test-project/deploy/develop/yaml", "yaml_path")
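For context, here is a small self-contained sketch of how these two flags could be parsed. It uses a dedicated flag.FlagSet instead of the global one so the parsing can be exercised with any argument list; the options struct and the parseOptions helper are hypothetical names, while the flag names and defaults are the ones shown above.

```go
package main

import (
	"flag"
	"fmt"
)

// options (hypothetical type) groups the two paths the deploy program needs.
type options struct {
	kubeconfigPath string
	yamlPath       string
}

// parseOptions (hypothetical helper) parses the two flags from args,
// falling back to the defaults used in this article.
func parseOptions(args []string) (options, error) {
	var o options
	fs := flag.NewFlagSet("deploy", flag.ContinueOnError)
	fs.StringVar(&o.kubeconfigPath, "kubeconfig_path", "./test-project/deploy/develop/kubeconfig", "path of the kubeconfig file")
	fs.StringVar(&o.yamlPath, "yaml_path", "./test-project/deploy/develop/yaml", "path of the directory holding the yaml files")
	err := fs.Parse(args)
	return o, err
}

func main() {
	// Override only the yaml path; the kubeconfig path keeps its default.
	o, err := parseOptions([]string{"-yaml_path=./deploy/prod/yaml"})
	if err != nil {
		panic(err)
	}
	fmt.Println(o.kubeconfigPath)
	fmt.Println(o.yamlPath)
}
```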

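Here I use the unstructured package (from k8s.io/apimachinery, used together with client-go's dynamic client), which can handle every resource object type in 1.17. Below is a piece of the deployment logic: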

// Try to create the resource first. If it already exists (or is invalid, e.g. a port is already taken), delete it, poll every 2 seconds until the deletion has finished, and then create it again.
_, err = dclient.Resource(mapping.Resource).Namespace(namespace).Create(&unstruct, metav1.CreateOptions{})
if err != nil {
	glog.Errorf("create resource error, error is %s\n", err.Error())
	if k8sErrors.IsAlreadyExists(err) || k8sErrors.IsInvalid(err) { // already exists, or e.g. the port is taken: delete and recreate
		if err := dclient.Resource(mapping.Resource).Namespace(namespace).Delete(unstruct.GetName(), &metav1.DeleteOptions{}); err != nil {
			glog.Errorf("delete resource error, error is %s\n", err.Error())
			return err
		}
		// After the delete call the resource is not cleaned up immediately; creating it right away fails with an "object is being deleted" error.
		if err = pollGetResource(mapping, dclient, unstruct, namespace); err != nil { // poll until the resource is fully deleted
			glog.Errorf("yaml file is %s, delete resource error, error is %s\n", v, err.Error())
			return err
		}
		_, err = dclient.Resource(mapping.Resource).Namespace(namespace).Create(&unstruct, metav1.CreateOptions{}) // create the resource
		if err != nil {
			glog.Errorf("create resource error, error is %s\n", err.Error())
			return err
		}
	} else {
		return err
	}
}
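The unstruct value in the snippet above is generic: an unstructured object is essentially a map[string]interface{} holding the decoded manifest, which is why one code path can serve every resource kind. The sketch below illustrates the idea with the standard library only. The helper name identify is hypothetical, and the manifest is written as JSON because the Go standard library has no YAML parser; the real program works on yaml files.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// identify (hypothetical helper) decodes a manifest into a generic map and
// reads the fields needed to address the object: kind, namespace and name.
func identify(manifest []byte) (kind, namespace, name string, err error) {
	var obj map[string]interface{}
	if err = json.Unmarshal(manifest, &obj); err != nil {
		return "", "", "", err
	}
	kind, _ = obj["kind"].(string)
	if meta, ok := obj["metadata"].(map[string]interface{}); ok {
		namespace, _ = meta["namespace"].(string)
		name, _ = meta["name"].(string)
	}
	return kind, namespace, name, nil
}

func main() {
	// Illustrative manifest; the object name "demo" is made up.
	manifest := []byte(`{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"name":"demo","namespace":"nanjun"}}`)
	kind, namespace, name, err := identify(manifest)
	if err != nil {
		panic(err)
	}
	fmt.Println(kind, namespace, name)
}
```

No typed struct for Deployment, Service and so on is needed, which is the property the dynamic client relies on.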

Below is the polling function: it polls every 2 seconds with a 10-minute timeout, and a timeout counts as a failure:

func pollGetResource(mapping *meta.RESTMapping, dclient dynamic.Interface, unstruct unstructured.Unstructured, namespace string) error {
	// Create the timeout channel once, outside the loop. Calling time.After inside
	// the select would start a fresh 10-minute timer on every iteration, so the
	// timeout would never fire.
	timeout := time.After(10 * time.Minute)
	for {
		select {
		case <-timeout: // not deleted within ten minutes: treat it as a failure
			glog.Error("pollGetResource timed out, something is wrong with the k8s resource")
			return errors.New("ErrWaitTimeout")
		default:
			resource, err := dclient.Resource(mapping.Resource).Namespace(namespace).Get(unstruct.GetName(), metav1.GetOptions{}) // get the object
			if err != nil {
				if k8sErrors.IsNotFound(err) {
					glog.Warningf("kind is %s, namespace is %s, name is %s, this app has been deleted.", unstruct.GetKind(), namespace, unstruct.GetName())
					return nil
				}
				glog.Errorf("get resource failed, error is %s", err.Error())
				return err
			}
			if resource != nil && resource.GetName() == unstruct.GetName() { // the resource still exists
				glog.Errorf("kind: %s, namespace: %s, name %s still exists", unstruct.GetKind(), namespace, unstruct.GetName())
			}
		}
		time.Sleep(2 * time.Second) // poll every 2 seconds
	}
}
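Putting the two snippets together, the overall flow is: create, and on conflict delete, wait for the deletion, then create again. The sketch below replays that flow against an in-memory fake so it can run without a cluster. The resourceClient interface, fakeClient type, errAlreadyExists value and createOrReplace function are all hypothetical stand-ins, not client-go APIs, and unlike the original code the sketch only reacts to "already exists", not to "invalid".

```go
package main

import (
	"errors"
	"fmt"
)

// errAlreadyExists is a stand-in for an AlreadyExists error from the API server.
var errAlreadyExists = errors.New("already exists")

// resourceClient is a hypothetical, minimal stand-in for the dynamic client.
type resourceClient interface {
	Create(name string) error
	Delete(name string) error
	WaitDeleted(name string) error
}

// createOrReplace tries to create the object; if it already exists, it deletes
// it, waits until the deletion has finished, and creates it again.
func createOrReplace(c resourceClient, name string) error {
	err := c.Create(name)
	if err == nil {
		return nil
	}
	if !errors.Is(err, errAlreadyExists) {
		return err
	}
	if err := c.Delete(name); err != nil {
		return fmt.Errorf("delete %s: %w", name, err)
	}
	if err := c.WaitDeleted(name); err != nil {
		return fmt.Errorf("wait for deletion of %s: %w", name, err)
	}
	return c.Create(name)
}

// fakeClient keeps object names in memory and records the calls it receives.
type fakeClient struct {
	objects map[string]bool
	calls   []string
}

func (f *fakeClient) Create(name string) error {
	f.calls = append(f.calls, "create")
	if f.objects[name] {
		return errAlreadyExists
	}
	f.objects[name] = true
	return nil
}

func (f *fakeClient) Delete(name string) error {
	f.calls = append(f.calls, "delete")
	delete(f.objects, name)
	return nil
}

func (f *fakeClient) WaitDeleted(name string) error {
	f.calls = append(f.calls, "wait")
	if f.objects[name] {
		return errors.New("still exists")
	}
	return nil
}

func main() {
	// "demo" already exists, so the full replace path is exercised.
	c := &fakeClient{objects: map[string]bool{"demo": true}}
	err := createOrReplace(c, "demo")
	fmt.Println(err, c.calls)
}
```

Hiding the client behind a small interface like this is one way to unit test the deployment logic before pointing it at a real cluster.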

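Summary

Because of my current skill level, both CD approaches here are fairly ordinary; later I will try Argo CD for automated deployment. Both approaches carry the risk of exposing the kubeconfig, especially the client-go one, where the kubeconfig file sits directly in the project, which is a security problem. To summarize when to use each approach:

- the kubectl approach suits simple deployments;

- the client-go approach suits scenarios where data from the whole CI/CD flow needs to be collected.

The code and yaml files used in this article are in these two repositories:

- https://github.com/fishingfly/githubCICD.git

- https://github.com/fishingfly/deployYamlByClient-go.git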

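What's next

I will soon publish a series analyzing the k8s scheduler source code, followed by an ongoing client-go in practice series. Remember to follow me!

Last but not least, if you found this article helpful, a tip would be appreciated! It will keep me motivated! Haha...

I wish you all success at work and a happy life, and may every effort pay off!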