Advanced Python: OpenCV Image Feature Detection and Description

Table of Contents

- Harris Corner Detection

- Harris Corner Detection in OpenCV

- Corner with SubPixel Accuracy

- Shi-Tomasi Corner Detection

- SIFT (Scale-Invariant Feature Transform)

- SURF (Speeded-Up Robust Features)

- FAST Algorithm for Corner Detection

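This is the fourth installment in my series on the official OpenCV tutorials. It covers image feature detection as I continue learning OpenCV.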

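The original tutorial spends a lot of space explaining what image features are and how to describe them, using a jigsaw puzzle as an analogy. Put simply, image features are corners, edges, and other regions where the image changes sharply. They are elements that can be clearly localized in a picture. A feature descriptor is a set of numbers that describes each such feature.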

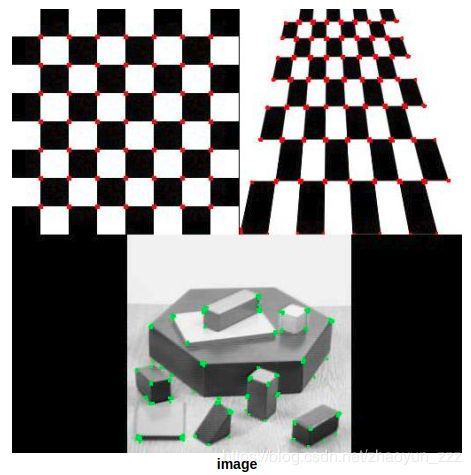

Harris Corner Detection

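The Harris corner detector was proposed by Chris Harris and Mike Stephens in 1988, mainly for tracking in image sequences. At the time, the common idea was to track using edges. However, when the camera or the object moves, you do not know in which direction, and the camera's geometric transformation is also unknown, so edge matching rarely worked as hoped. Even the Canny operator, the best edge detector of its day, depends on the choice of thresholds. Harris and Stephens therefore gave up on matching edges and looked for special points to match instead. These points are the junctions of edge segments: they characterize the structure of the image and represent its local features.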

Harris Corner Detection in OpenCV

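- Function prototype: dst = cv.cornerHarris( src, blockSize, ksize, k[, dst[, borderType]] )

- src: input image, either a single-channel 8-bit image or a float32 image

- dst: output map of Harris responses, of type CV_32FC1 and the same size as src

- blockSize: size of the neighborhood considered for each pixel

- ksize: aperture size of the Sobel operator used for the image derivatives

- k: Harris detector free parameter, usually between 0.04 and 0.06

- borderType: pixel extrapolation method used at the image border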
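The following sketch shows how blockSize, ksize and k enter the computation. It uses NumPy to compute the Harris response R = det(M) - k·trace(M)² at every pixel. M is the structure tensor: sums of Ix², Iy² and IxIy over a window around the pixel. It is a simplified sketch under stated assumptions. It uses np.gradient central differences instead of a Sobel kernel of size ksize, and an unnormalized square window of side 2·radius+1 in place of blockSize. `window_sum` and `harris_response` are hypothetical helper names, not OpenCV API.

```python
import numpy as np

def window_sum(a, radius):
    """Sum of `a` over a (2*radius+1) x (2*radius+1) window around each pixel (zero padding)."""
    padded = np.pad(a, radius)
    out = np.zeros_like(a)
    h, w = a.shape
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out

def harris_response(gray, radius=1, k=0.04):
    """Harris response R = det(M) - k * trace(M)**2 for every pixel (simplified sketch)."""
    g = gray.astype(np.float64)
    iy, ix = np.gradient(g)              # derivatives along rows (y) and columns (x)
    sxx = window_sum(ix * ix, radius)    # entries of the structure tensor M,
    syy = window_sum(iy * iy, radius)    # accumulated over the window
    sxy = window_sum(ix * iy, radius)
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace ** 2

# Synthetic test image: a bright square on a dark background
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
R = harris_response(img)
print(R[5, 5] > 0, R[5, 10] < 0, R[10, 10] == 0)   # corner, edge, flat region: True True True
```

The sign of R carries the classification. R is positive at the corner of the square, negative on its edge, and zero in flat regions. The tutorial's `dst > 0.01*dst.max()` test keeps only strongly positive responses.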

import numpy as np
import cv2 as cv

filename = 'chessboard.png'
img = cv.imread(filename)
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
gray = np.float32(gray)  # cornerHarris takes an 8-bit or float32 single-channel image
dst = cv.cornerHarris(gray, 2, 3, 0.04)  # blockSize=2, ksize=3, k=0.04
# Result is dilated for marking the corners, not important
dst = cv.dilate(dst, None)
# Threshold for an optimal value; it may vary depending on the image.
img[dst > 0.01 * dst.max()] = [0, 0, 255]
cv.imshow('dst', img)
if cv.waitKey(0) & 0xff == 27:
    cv.destroyAllWindows()
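The line `img[dst > 0.01 * dst.max()] = [0, 0, 255]` uses a boolean mask to paint every pixel whose response exceeds 1% of the maximum. If you need corner coordinates rather than a colored image, a small NumPy helper like the one below could extract them. `corner_coords` is a hypothetical name, the 0.01 factor is the same image-dependent threshold as above, and the response map is made up for illustration.

```python
import numpy as np

def corner_coords(response, rel_thresh=0.01):
    """Return (x, y) coordinates of pixels whose response exceeds rel_thresh * max."""
    mask = response > rel_thresh * response.max()   # same test as the tutorial's mask
    ys, xs = np.nonzero(mask)                        # row indices are y, column indices are x
    return list(zip(xs.tolist(), ys.tolist()))

# Tiny made-up response map for illustration
r = np.zeros((4, 4))
r[1, 2] = 100.0
r[2, 2] = 2.0
r[3, 0] = 0.5
print(corner_coords(r))  # [(2, 1), (2, 2)]
```

On a real, dilated `dst`, each corner produces a small blob of pixels rather than one point. The sub-pixel section groups each blob into a single centroid with connectedComponentsWithStats.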

Corner with SubPixel Accuracy

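The Harris detector has several shortcomings. It locates corners only to whole-pixel precision, it is relatively slow, and its accuracy is limited. cv.cornerSubPix refines detected corners to sub-pixel accuracy.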

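- Function prototype: corners = cv.cornerSubPix( image, corners, winSize, zeroZone, criteria )

- image: input single-channel image

- corners: initial corner coordinates, usually from Harris corner detection. On output they are replaced by the refined coordinates.

- winSize: half of the side length of the search window. The window is N×N with N = winSize*2+1. For example, if winSize=Size(5,5), then a (5*2+1)×(5*2+1) = 11×11 search window is used.

- zeroZone: half of the size of the dead region in the middle of the search window, given in the same way as winSize. No summation is done over this region. It is sometimes used to avoid possible singularities of the autocorrelation matrix. Size(-1,-1) means there is no such region.

- criteria: termination criteria for the iterative refinement. The type can be cv::TermCriteria::MAX_ITER, cv::TermCriteria::EPS, or both. MAX_ITER stops after a maximum number of iterations. EPS stops once the corner position moves by less than epsilon in an iteration. In C++ both are specified through the cv::TermCriteria() constructor. In Python, criteria is a tuple (type, max_iter, epsilon).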
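The plain-Python sketch below illustrates two details of these parameters. First, the full search window is 2*winSize+1 on each side. Second, the termination criteria stop an iterative refinement either when the position moves by less than epsilon (EPS) or when max_iter iterations have run (MAX_ITER), whichever comes first. `search_window` and `refine` are hypothetical helpers. The Newton step for √2 is only a stand-in update rule: this sketch does not implement the actual corner update that cornerSubPix performs.

```python
import math

def search_window(win_size):
    """Full search-window size for a cornerSubPix-style half-size (w, h)."""
    w, h = win_size
    return (2 * w + 1, 2 * h + 1)

def refine(step, x0, max_iter=100, eps=0.001):
    """Apply x <- step(x) until |change| < eps (EPS) or max_iter steps (MAX_ITER).

    Returns the final value and the number of iterations actually used.
    """
    x = x0
    for i in range(1, max_iter + 1):
        x_new = step(x)
        if abs(x_new - x) < eps:        # EPS criterion: the update became tiny
            return x_new, i
        x = x_new
    return x, max_iter                  # MAX_ITER criterion: iteration budget exhausted

def newton_sqrt2(x):
    """Stand-in update rule converging to sqrt(2)."""
    return (x + 2 / x) / 2

print(search_window((5, 5)))            # (11, 11)
value, iters = refine(newton_sqrt2, 1.0)
print(round(value, 6), iters)           # 1.414214 4
```

With the tutorial's `(cv.TERM_CRITERIA_EPS + cv.TERM_CRITERIA_MAX_ITER, 100, 0.001)`, both checks are active, just as `refine` checks both.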

import numpy as np
import cv2 as cv

filename = 'chessboard2.jpg'
img = cv.imread(filename)
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
# Find Harris corners
gray = np.float32(gray)
dst = cv.cornerHarris(gray, 2, 3, 0.04)
dst = cv.dilate(dst, None)
ret, dst = cv.threshold(dst, 0.01 * dst.max(), 255, 0)
dst = np.uint8(dst)
# Find the centroids of the thresholded corner blobs
ret, labels, stats, centroids = cv.connectedComponentsWithStats(dst)
# Define the criteria to stop and refine the corners
criteria = (cv.TERM_CRITERIA_EPS + cv.TERM_CRITERIA_MAX_ITER, 100, 0.001)
corners = cv.cornerSubPix(gray, np.float32(centroids), (5, 5), (-1, -1), criteria)
# Now draw them: red for the centroids, green for the refined corners
res = np.hstack((centroids, corners))
res = np.intp(res)  # np.int0 (an alias of np.intp) was removed in NumPy 2.0
img[res[:, 1], res[:, 0]] = [0, 0, 255]
img[res[:, 3], res[:, 2]] = [0, 255, 0]
cv.imwrite('subpixel5.png', img)

In the image, red points are the Harris corners and green points are the corners after sub-pixel refinement.

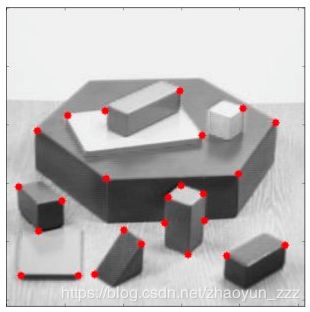
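The starting points are already finer than whole pixels. For each blob of thresholded pixels, connectedComponentsWithStats returns the mean of the blob's pixel coordinates, which is generally fractional. cornerSubPix then moves that starting point toward the true corner. Below is a minimal NumPy illustration for a single blob. `blob_centroid` is a hypothetical helper, and the mask is made up.

```python
import numpy as np

def blob_centroid(mask):
    """(x, y) centroid of the nonzero pixels in a binary mask."""
    ys, xs = np.nonzero(mask)
    return float(xs.mean()), float(ys.mean())

mask = np.zeros((8, 10), dtype=np.uint8)
mask[3:5, 6:8] = 255          # a 2x2 blob, like a dilated Harris response around one corner
print(blob_centroid(mask))    # (6.5, 3.5)
```

The tutorial converts these values back to integers with `np.intp`, but only to pick which pixels to color.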

Shi-Tomasi Corner Detection

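The Shi-Tomasi algorithm is an improvement on Harris. In Harris's original formulation, the corner response is the determinant of the matrix M minus k times the square of its trace, R = det(M) - k·(trace M)², and R is compared with a preset threshold. Shi and Tomasi later proposed scoring a point by the smaller of the two eigenvalues of M, R = min(λ1, λ2). If that smaller eigenvalue exceeds a minimum threshold, the point is a strong corner.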
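Both scores can be written in terms of the eigenvalues λ1, λ2 of M. Harris uses R = λ1λ2 - k(λ1+λ2)², which equals det(M) - k·trace(M)². Shi-Tomasi uses R = min(λ1, λ2). The plain-Python sketch below computes both from the entries of a 2×2 structure tensor [[a, b], [b, c]], using the closed-form eigenvalues of a symmetric 2×2 matrix. The sample tensors are made-up values for a corner-like window and an edge-like window.

```python
import math

def eigenvalues_2x2(a, b, c):
    """Eigenvalues (larger, smaller) of the symmetric matrix [[a, b], [b, c]]."""
    mean = (a + c) / 2
    radius = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    return mean + radius, mean - radius

def harris_score(a, b, c, k=0.04):
    l1, l2 = eigenvalues_2x2(a, b, c)
    return l1 * l2 - k * (l1 + l2) ** 2      # equals det(M) - k * trace(M)**2

def shi_tomasi_score(a, b, c):
    return min(eigenvalues_2x2(a, b, c))     # the smaller eigenvalue

for name, m in [("corner", (1.0, 0.25, 1.0)), ("edge", (0.0, 0.0, 1.5))]:
    print(name, round(harris_score(*m), 4), shi_tomasi_score(*m))
```

For the edge, the smaller eigenvalue is 0, so Shi-Tomasi rejects it for any positive threshold, while Harris gives it a negative score.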

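- Function prototype: corners = cv.goodFeaturesToTrack( image, maxCorners, qualityLevel, minDistance[, corners[, mask[, blockSize[, useHarrisDetector[, k]]]]] )

- image: 8-bit or 32-bit floating-point single-channel image

- corners: output vector with the coordinates of the detected corners

- maxCorners: maximum number of corners to return. maxCorners <= 0 means no limit.

- qualityLevel: minimal accepted corner quality, relative to the best corner. Corners whose quality measure (the minimum eigenvalue, or the Harris response) is below qualityLevel times the best corner's measure are discarded. For example, if the best corner has the quality measure = 1500, and the qualityLevel=0.01, then all the corners with the quality measure less than 15 are rejected.

- minDistance: minimum Euclidean distance between returned corners, in pixels

- mask: optional region of interest, of type CV_8UC1. Corners are detected only where the mask is nonzero.

- blockSize: size of the window used to compute the covariance matrix of derivatives

- useHarrisDetector: whether to use the Harris detector. If false, the Shi-Tomasi (minimum eigenvalue) measure is used.

- k: free parameter of the Harris detector, typically 0.04 to 0.06. It has no effect when useHarrisDetector is false.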
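The interplay of maxCorners, qualityLevel and minDistance can be sketched as a greedy selection in plain Python. This is a simplified model, not OpenCV's code. It assumes the per-pixel quality scores are already computed, and it skips the local-maximum filtering that OpenCV applies first. `select_corners` and the sample candidates are hypothetical. The 1500/0.01 numbers mirror the qualityLevel example above.

```python
def select_corners(candidates, max_corners, quality_level, min_distance):
    """candidates: list of (score, x, y). Returns selected (x, y), strongest first."""
    if not candidates:
        return []
    best = max(score for score, _, _ in candidates)
    # qualityLevel: drop anything weaker than quality_level * best score
    kept = [c for c in candidates if c[0] >= quality_level * best]
    kept.sort(key=lambda c: c[0], reverse=True)
    selected = []
    for _, x, y in kept:
        # minDistance: skip corners too close to an already accepted, stronger one
        if all((x - sx) ** 2 + (y - sy) ** 2 >= min_distance ** 2 for sx, sy in selected):
            selected.append((x, y))
            # maxCorners <= 0 means no limit
            if max_corners > 0 and len(selected) == max_corners:
                break
    return selected

cands = [(1500, 10, 10), (1400, 12, 10), (900, 40, 40), (10, 80, 80), (800, 70, 20)]
print(select_corners(cands, 25, 0.01, 10))  # [(10, 10), (40, 40), (70, 20)]
```

Each parameter removes a different point here. The score-10 candidate falls below 1500 × 0.01 = 15, and (12, 10) lies only 2 px from a stronger corner. With maxCorners = 2, the list would also stop after (40, 40).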

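import numpy as np
import cv2 as cv
from matplotlib import pyplot as plt

img = cv.imread('blox.jpg')
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
# Up to 25 corners, qualityLevel 0.01, minDistance 10 px
corners = cv.goodFeaturesToTrack(gray, 25, 0.01, 10)
corners = np.intp(corners)  # np.int0 (an alias of np.intp) was removed in NumPy 2.0
for i in corners:
    x, y = i.ravel()
    cv.circle(img, (int(x), int(y)), 3, 255, -1)
# OpenCV stores images as BGR; convert to RGB so Matplotlib shows the right colors
plt.imshow(cv.cvtColor(img, cv.COLOR_BGR2RGB)), plt.show()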

SIFT (Scale-Invariant Feature Transform)

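Characteristics of SIFT:

- SIFT features are local image features. They are invariant to rotation, scale changes and brightness changes, and fairly robust to viewpoint changes, affine transformations and noise.

- Distinctiveness: the features carry a lot of information, which makes them well suited to fast, accurate matching against large feature databases.

- Quantity: even a few objects can produce a large number of SIFT feature vectors.

- Speed: optimized SIFT matching can even run in real time.

- Extensibility: SIFT features are easy to combine with other kinds of feature vectors.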
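Matching of this kind is usually done by comparing descriptor vectors. Below is a minimal NumPy sketch of brute-force nearest-neighbour matching with Lowe's ratio test: a match is accepted only if the nearest descriptor is clearly closer than the second nearest. The 0.75 ratio is a common choice, not a fixed rule. `ratio_test_match` is a hypothetical helper, and the random 128-dimensional vectors stand in for real SIFT descriptors.

```python
import numpy as np

def ratio_test_match(des1, des2, ratio=0.75):
    """Brute-force L2 matching with Lowe's ratio test; returns (query_idx, train_idx) pairs.

    des2 must contain at least two descriptors.
    """
    # Pairwise Euclidean distances, shape (len(des1), len(des2))
    dist = np.linalg.norm(des1[:, None, :] - des2[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dist):
        nearest, second = np.argsort(row)[:2]
        # Accept only if the best match is clearly better than the runner-up
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches

rng = np.random.default_rng(0)
des1 = rng.random((5, 128))                       # stand-ins for 5 SIFT descriptors
order = [1, 3, 4, 0, 2]
des2 = np.vstack([des1[order] + rng.normal(0, 0.001, (5, 128)),   # same features, slightly perturbed
                  rng.random((3, 128))])                          # unrelated distractors
print(ratio_test_match(des1, des2))               # each query finds its perturbed copy
```

This brute-force search compares every pair of descriptors. For large databases, an approximate nearest-neighbour index is normally used instead.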

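To some extent, SIFT copes with factors that degrade image registration and object recognition/tracking. These factors come from the state of the target itself, the environment of the scene, and the imaging characteristics of the camera. The main ones are:

- Rotation, scaling and translation of the target

- Affine/projective image transformations

- Illumination changes

- Occlusion of the target

- Cluttered scenes

- Noise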
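Scale invariance comes from searching for keypoints across a scale space. In Lowe's formulation, each octave is blurred with Gaussians whose σ grows by a factor k = 2^(1/s), where s is the number of intervals per octave. Adjacent blurred images are then subtracted to form Difference-of-Gaussian (DoG) images. The sketch below computes only that σ schedule for one octave, with s = 3 and σ0 = 1.6. These values match the defaults of OpenCV's SIFT_create (nOctaveLayers=3, sigma=1.6). `octave_sigmas` is a hypothetical helper.

```python
def octave_sigmas(sigma0=1.6, intervals=3):
    """Gaussian sigmas for one octave: intervals + 3 blur levels, spaced by k = 2**(1/intervals)."""
    k = 2 ** (1 / intervals)
    return [sigma0 * k ** i for i in range(intervals + 3)]

sigmas = octave_sigmas()
print([round(s, 3) for s in sigmas])
# Each DoG image is the difference of two neighbouring blur levels
dog_pairs = list(zip(sigmas[:-1], sigmas[1:]))
print(len(sigmas), "Gaussian levels ->", len(dog_pairs), "DoG levels")
```

The level at index 3 has σ = 2·σ0. In Lowe's scheme, that image is downsampled by 2 to start the next octave, so the same schedule repeats at coarser resolution.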

import numpy as np
import cv2 as cv

img = cv.imread('test.jpg')
gray = cv.cvtColor(img, cv.COLOR_BGR2GRAY)
# SIFT is in the main module since OpenCV 4.4.0; older builds need cv.xfeatures2d.SIFT_create()
sift = cv.SIFT_create()
kp = sift.detect(gray, None)
img = cv.drawKeypoints(gray, kp, img)
cv.imwrite('sift_keypoints.jpg', img)

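- sift.compute() computes the descriptors for keypoints that have already been found, e.g. kp, des = sift.compute(gray, kp).

- sift.detectAndCompute() finds the keypoints and computes their descriptors in one step, e.g. kp, des = sift.detectAndCompute(gray, None).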
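For each keypoint, sift.compute() returns a 128-dimensional vector. It comes from a 4×4 grid of cells around the keypoint, each holding an 8-bin histogram of gradient orientations (4·4·8 = 128). The NumPy sketch below builds a simplified SIFT-like descriptor from a 16×16 patch. It deliberately omits parts of the real descriptor: rotation to the keypoint orientation, Gaussian weighting, and trilinear interpolation. `sift_like_descriptor` is a hypothetical name, not an OpenCV function.

```python
import numpy as np

def sift_like_descriptor(patch):
    """Simplified SIFT-style descriptor: 16x16 patch -> 128-dim unit vector."""
    assert patch.shape == (16, 16)
    gy, gx = np.gradient(patch.astype(np.float64))
    mag = np.hypot(gx, gy)                                  # gradient magnitude
    ang = np.arctan2(gy, gx) % (2 * np.pi)                  # orientation in [0, 2*pi)
    bins = (ang / (2 * np.pi) * 8).astype(int) % 8          # 8 orientation bins
    desc = np.zeros((4, 4, 8))
    for y in range(16):
        for x in range(16):
            desc[y // 4, x // 4, bins[y, x]] += mag[y, x]   # 4x4 cells of 4x4 pixels
    v = desc.ravel()
    v = v / (np.linalg.norm(v) + 1e-12)    # normalise to unit length
    v = np.minimum(v, 0.2)                 # clip large values (robustness to lighting)
    v = v / (np.linalg.norm(v) + 1e-12)    # renormalise
    return v

# A horizontal intensity ramp: every gradient points along +x, so only bin 0 of each cell fills
patch = np.tile(np.arange(16, dtype=float), (16, 1))
v = sift_like_descriptor(patch)
print(v.shape, np.count_nonzero(v))        # (128,) 16
```

The normalize, clip at 0.2, renormalize sequence follows Lowe's description of the descriptor. It limits the influence of a few very strong gradients.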

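The function cv.drawKeypoints deserves a short description:

- outImage = cv.drawKeypoints( image, keypoints, outImage[, color[, flags]] )

- image: source image in which the keypoints were found

- keypoints: keypoints found in the image

- outImage: output image; what is drawn on it depends on the value of flags

- color: color of the keypoints

- flags: flags that control how the keypoints are drawn. Possible values: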

cv2.DRAW_MATCHES_FLAGS_DEFAULT, cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS, cv2.DRAW_MATCHES_FLAGS_DRAW_OVER_OUTIMG, cv2.DRAW_MATCHES_FLAGS_NOT_DRAW_SINGLE_POINTS
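These flags are bit values and can be combined with `|`. In OpenCV's DrawMatchesFlags, DEFAULT is 0, DRAW_OVER_OUTIMG is 1, NOT_DRAW_SINGLE_POINTS is 2 and DRAW_RICH_KEYPOINTS is 4. This is why the SURF example passes a bare 4: DRAW_RICH_KEYPOINTS draws each keypoint as a circle of its size with a line showing its orientation. The `DrawFlags` enum below only mirrors those values for illustration. In real code, use the cv2 constants.

```python
from enum import IntFlag

class DrawFlags(IntFlag):
    """Mirror of OpenCV's DrawMatchesFlags values, for illustration only."""
    DEFAULT = 0
    DRAW_OVER_OUTIMG = 1          # draw onto the existing outImage instead of a fresh copy
    NOT_DRAW_SINGLE_POINTS = 2    # used by drawMatches: skip keypoints without a match
    DRAW_RICH_KEYPOINTS = 4       # circle of keypoint size plus an orientation line

print(DrawFlags(4) == DrawFlags.DRAW_RICH_KEYPOINTS)          # True
combined = DrawFlags.DRAW_RICH_KEYPOINTS | DrawFlags.DRAW_OVER_OUTIMG
print(int(combined), DrawFlags.DRAW_OVER_OUTIMG in combined)  # 5 True
```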

SURF (Speeded-Up Robust Features)

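SIFT can both detect and describe keypoints, but it is relatively slow. SURF was designed to speed this up.

As with SIFT, OpenCV provides SURF functions. First create a SURF object and set its optional parameters, such as 64- or 128-dimensional descriptors and upright or normal mode. SURF lives in the opencv_contrib module xfeatures2d and is patented ("non-free"). As a result, cv.xfeatures2d.SURF_create() only works in an OpenCV build compiled with the OPENCV_ENABLE_NONFREE option.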
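Much of SURF's speed comes from approximating Gaussian second-derivative filters with box filters evaluated on an integral image. On an integral image, the sum over any rectangle costs four lookups regardless of the rectangle's size. Below is a minimal NumPy sketch of that building block. The function names are hypothetical, and this is not the full SURF detector.

```python
import numpy as np

def integral_image(img):
    """ii[y, x] = sum of img[:y, :x]; one extra row/column of zeros avoids edge cases."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii

def box_sum(ii, y0, x0, y1, x1):
    """Sum of img[y0:y1, x0:x1] from four lookups, whatever the box size."""
    return int(ii[y1, x1] - ii[y0, x1] - ii[y1, x0] + ii[y0, x0])

img = np.arange(48).reshape(6, 8)
ii = integral_image(img)
print(box_sum(ii, 1, 2, 5, 7), int(img[1:5, 2:7].sum()))   # both values agree
```

The integral image is built once per image. After that, every box filter response, at any filter size, costs the same few operations. This lets SURF enlarge the filter instead of repeatedly downsampling the image.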

>>> import cv2 as cv
>>> from matplotlib import pyplot as plt
>>> img = cv.imread('fly.png', 0)
# Create SURF object. You can specify params here or later.
# Here I set Hessian Threshold to 400
>>> surf = cv.xfeatures2d.SURF_create(400)
# Find keypoints and descriptors directly
>>> kp, des = surf.detectAndCompute(img, None)
>>> len(kp)
699
# 699 keypoints are too many to show in one image, so reduce them to about 50 before drawing.
# Check present Hessian threshold
>>> print(surf.getHessianThreshold())
400.0
# We set it to some 50000. Remember, it is just for representing in picture.
# In actual cases, it is better to have a value 300-500
>>> surf.setHessianThreshold(50000)
# Again compute keypoints and check their number.
>>> kp, des = surf.detectAndCompute(img, None)
>>> print(len(kp))
47
>>> img2 = cv.drawKeypoints(img, kp, None, (255, 0, 0), 4)
>>> plt.imshow(img2), plt.show()
# Upright mode: do not compute keypoint orientations.
# Check upright flag; if it is False, set it to True
>>> print(surf.getUpright())
False
>>> surf.setUpright(True)
# Recompute the feature points and draw them
>>> kp = surf.detect(img, None)
>>> img2 = cv.drawKeypoints(img, kp, None, (255, 0, 0), 4)
>>> plt.imshow(img2), plt.show()
# Descriptor size: if it is 64-dimensional, switch to 128 dimensions.
# Find size of descriptor
>>> print(surf.descriptorSize())
64
# That means flag, "extended" is False.
>>> surf.getExtended()
False
# So we set it to True to get 128-dim descriptors.
>>> surf.setExtended(True)
>>> kp, des = surf.detectAndCompute(img, None)
>>> print(surf.descriptorSize())
128
>>> print(des.shape)
(47, 128)

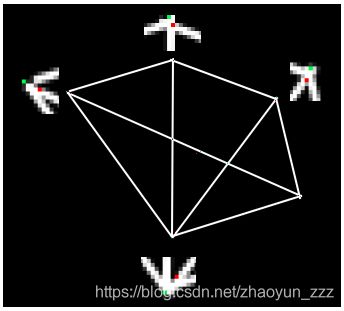
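The two descriptor sizes follow from how the SURF descriptor is assembled. The region around a keypoint is split into 4×4 sub-regions. Each sub-region contributes Σdx, Σdy, Σ|dx| and Σ|dy| of its Haar-wavelet responses, giving 16·4 = 64 values. With extended set to True, the dx sums are split by the sign of dy and the dy sums by the sign of dx. That gives 8 values per sub-region, 16·8 = 128 in total. The NumPy sketch below reproduces only that bookkeeping from given dx, dy response grids. It omits the Gaussian weighting and the alignment to the keypoint orientation, which upright mode skips anyway. The function name and the random inputs are hypothetical.

```python
import numpy as np

def surf_like_descriptor(dx, dy, extended=False):
    """Simplified SURF-style descriptor from 20x20 grids of dx, dy responses (4x4 sub-regions of 5x5)."""
    feats = []
    for cy in range(4):
        for cx in range(4):
            sx = dx[cy * 5:(cy + 1) * 5, cx * 5:(cx + 1) * 5]
            sy = dy[cy * 5:(cy + 1) * 5, cx * 5:(cx + 1) * 5]
            if not extended:
                # 4 values per sub-region -> 16 * 4 = 64
                feats += [sx.sum(), sy.sum(), np.abs(sx).sum(), np.abs(sy).sum()]
            else:
                # 8 values per sub-region -> 16 * 8 = 128
                for m in (sy < 0, sy >= 0):       # dx sums split by the sign of dy
                    feats += [sx[m].sum(), np.abs(sx[m]).sum()]
                for m in (sx < 0, sx >= 0):       # dy sums split by the sign of dx
                    feats += [sy[m].sum(), np.abs(sy[m]).sum()]
    v = np.array(feats)
    return v / (np.linalg.norm(v) + 1e-12)       # unit length for contrast invariance

rng = np.random.default_rng(1)
dx, dy = rng.normal(size=(20, 20)), rng.normal(size=(20, 20))
print(surf_like_descriptor(dx, dy).shape, surf_like_descriptor(dx, dy, extended=True).shape)
```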

FAST Algorithm for Corner Detection

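- cv.FAST_FEATURE_DETECTOR_TYPE_5_8

- cv.FAST_FEATURE_DETECTOR_TYPE_7_12

- cv.FAST_FEATURE_DETECTOR_TYPE_9_16

These three options choose the neighborhood used by the detector. TYPE_N_M requires N contiguous pixels on a circle of M pixels around the candidate. The default is TYPE_9_16.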
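FAST examines a circle of pixels around a candidate p. p is a corner if at least n contiguous pixels on the circle are all brighter than I_p + t or all darker than I_p - t. TYPE_9_16 uses the 16-pixel circle of radius 3 with n = 9. Below is a plain segment test in NumPy for that case. It leaves out OpenCV's high-speed pre-test, corner scoring and non-maximum suppression. `fast_segment_test` is a hypothetical name. The default t = 10 matches the default threshold of cv.FastFeatureDetector_create().

```python
import numpy as np

# (dx, dy) offsets of the 16-pixel Bresenham circle of radius 3, in order around the circle
CIRCLE16 = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
            (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]

def fast_segment_test(img, x, y, t=10, n=9):
    """True if >= n contiguous circle pixels are all brighter than I_p + t or all darker than I_p - t.

    (x, y) must be at least 3 pixels away from the image border.
    """
    center = int(img[y, x])
    labels = []
    for dx, dy in CIRCLE16:
        p = int(img[y + dy, x + dx])
        labels.append(1 if p > center + t else -1 if p < center - t else 0)
    # Walk the circle twice so that a run wrapping past the last pixel is counted whole
    run, prev = 0, 0
    for lab in labels + labels:
        run = run + 1 if (lab != 0 and lab == prev) else (1 if lab != 0 else 0)
        prev = lab
        if run >= n:
            return True
    return False

img = np.zeros((20, 20), dtype=np.uint8)
img[5:15, 5:15] = 255                   # bright square on a dark background
print(fast_segment_test(img, 5, 5))     # corner of the square: True (11 darker pixels in a row)
print(fast_segment_test(img, 10, 5))    # middle of the top edge: False (only 7 in a row)
print(fast_segment_test(img, 10, 10))   # inside the square: False
```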

import numpy as np
import cv2 as cv
from matplotlib import pyplot as plt

img = cv.imread('simple.jpg', 0)
# Initiate FAST object with default values
fast = cv.FastFeatureDetector_create()
# Find and draw the keypoints
kp = fast.detect(img, None)
img2 = cv.drawKeypoints(img, kp, None, color=(255, 0, 0))
# Print all default params
print("Threshold: {}".format(fast.getThreshold()))
print("nonmaxSuppression:{}".format(fast.getNonmaxSuppression()))
print("neighborhood: {}".format(fast.getType()))
print("Total Keypoints with nonmaxSuppression: {}".format(len(kp)))
cv.imwrite('fast_true.png', img2)
# Disable nonmaxSuppression
fast.setNonmaxSuppression(0)
kp = fast.detect(img, None)
print("Total Keypoints without nonmaxSuppression: {}".format(len(kp)))
img3 = cv.drawKeypoints(img, kp, None, color=(255, 0, 0))
cv.imwrite('fast_false.png', img3)
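The keypoint counts with and without nonmaxSuppression differ because suppression keeps a detection only if its corner score is strictly larger than the scores of all its immediate neighbours. Below is a NumPy sketch of that 3×3 rule, assuming a precomputed score map. How FAST scores corners is not covered here. The function name and the data are hypothetical.

```python
import numpy as np

def nonmax_suppression(score, keypoints):
    """Keep (x, y) keypoints whose score is strictly greater than every 3x3 neighbour's."""
    h, w = score.shape
    kept = []
    for x, y in keypoints:
        window = score[max(y - 1, 0):min(y + 2, h), max(x - 1, 0):min(x + 2, w)]
        # Only the centre itself may reach its own score, i.e. it is a strict local maximum
        if np.count_nonzero(window >= score[y, x]) == 1:
            kept.append((x, y))
    return kept

score = np.zeros((5, 5))
score[2, 2] = 5.0   # strong response
score[2, 3] = 3.0   # weaker neighbour of the same corner
score[0, 0] = 2.0   # isolated response
print(nonmax_suppression(score, [(2, 2), (3, 2), (0, 0)]))  # [(2, 2), (0, 0)]
```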

That's all for now. I have hit a bottleneck in my studies, so there will be no new OpenCV posts for quite a while.