Hadoop 2: Understanding the File-Read Flow

F5: step into the method

F6: step over (execute one line)

F7: step out of the current method

F8: resume to the next breakpoint

(1) Code that reads the file

package com.jn.hadoop.hdfs;

import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.URI;
import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class ReadHdfs {

    /**
     * Copies /good.txt from HDFS to the local disk.
     * IOException and URISyntaxException are caught and printed.
     *
     * @param args unused
     */
    public static void main(String[] args) {
        FileSystem fs;
        try {
            // First statement: obtain a FileSystem client for the NameNode
            fs = FileSystem.get(new URI("hdfs://192.168.41.13:9000"), new Configuration());
            // Second statement: open the HDFS file for reading
            InputStream in = fs.open(new Path("/good.txt"));
            OutputStream out = new FileOutputStream("d://good.txt");
            // Copy with a 4096-byte buffer; true closes both streams when done
            IOUtils.copyBytes(in, out, 4096, true);
        } catch (IOException e) {
            e.printStackTrace();
        } catch (URISyntaxException e) {
            e.printStackTrace();
        }
    }
}
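To make the last call in the listing concrete, here is a minimal sketch of what a buffered stream copy such as `IOUtils.copyBytes(in, out, 4096, true)` does conceptually. It uses only the JDK; the class `CopyBytesSketch` and its `long` return value are assumptions made for illustration and are not Hadoop's implementation.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of Hadoop.
class CopyBytesSketch {

    /**
     * Copies all bytes from in to out through a buffer of bufferSize bytes.
     * When close is true, both streams are closed afterwards, even on failure.
     * Returns the number of bytes copied (an addition made for this sketch).
     */
    static long copyBytes(InputStream in, OutputStream out, int bufferSize, boolean close)
            throws IOException {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int n;
            // read() returns -1 at end of stream
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
        } finally {
            if (close) {
                try {
                    in.close();
                } finally {
                    out.close();
                }
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "good".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long copied = copyBytes(new ByteArrayInputStream(data), out, 4096, true);
        System.out.println(copied + " bytes copied");
    }
}
```

In the original program the input side is the stream returned by `fs.open(...)`, so the bytes arrive from HDFS while the loop runs.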

(2) Execution of the first statement

fs = FileSystem.get(new URI("hdfs://192.168.41.13:9000"), new Configuration());

(1) The class loader loads the class

public abstract class ClassLoader

private synchronized Class loadClassInternal(String name)
    throws ClassNotFoundException
{
    return loadClass(name);
}

(2) Entering the URI class

public final class URI
    implements Comparable<URI>, Serializable

(3) The URI class is instantiated

public URI(String str) throws URISyntaxException {
    new Parser(str).parse(false);
}

(4) The class loader loads the Configuration class

private synchronized Class loadClassInternal(String name)
    throws ClassNotFoundException
{
    return loadClass(name);
}

(5) Initializing the Configuration class

The constructor

public Configuration() {
    this(true);
}

It calls the overloaded constructor

public Configuration(boolean loadDefaults) {
    this.loadDefaults = loadDefaults;
    updatingResource = new HashMap<String, String[]>();
    synchronized(Configuration.class) {
        REGISTRY.put(this, null);
    }
}

(6) Execution enters the FileSystem class

public static FileSystem get(URI uri, Configuration conf) throws IOException {
    String scheme = uri.getScheme();
    String authority = uri.getAuthority();

    if (scheme == null && authority == null) {     // use default FS
        return get(conf);
    }

    if (scheme != null && authority == null) {     // no authority
        URI defaultUri = getDefaultUri(conf);
        if (scheme.equals(defaultUri.getScheme())    // if scheme matches default
            && defaultUri.getAuthority() != null) {  // & default has authority
            return get(defaultUri, conf);            // return default
        }
    }

    String disableCacheName = String.format("fs.%s.impl.disable.cache", scheme);
    if (conf.getBoolean(disableCacheName, false)) {
        return createFileSystem(uri, conf);
    }

    return CACHE.get(uri, conf);
}
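The branches above depend on what `java.net.URI` returns for the scheme and authority. The following JDK-only sketch shows those parts for the URI used in this walkthrough, along with the cache-switch key that `String.format` produces. The class name `UriPartsSketch` and the helper `disableCacheKey` are made up for illustration.

```java
import java.net.URI;

// Hypothetical helper class for illustration only.
class UriPartsSketch {

    /** Builds the per-scheme "disable cache" key with the same format string as the excerpt. */
    static String disableCacheKey(URI uri) {
        return String.format("fs.%s.impl.disable.cache", uri.getScheme());
    }

    public static void main(String[] args) {
        URI uri = URI.create("hdfs://192.168.41.13:9000");
        System.out.println(uri.getScheme());      // hdfs
        System.out.println(uri.getAuthority());   // 192.168.41.13:9000
        System.out.println(uri.getHost());        // 192.168.41.13
        System.out.println(uri.getPort());        // 9000
        System.out.println(disableCacheKey(uri)); // fs.hdfs.impl.disable.cache
    }
}
```

Because both the scheme and the authority are non-null here, the first two `if` branches are skipped and, unless the cache is disabled for `hdfs`, the call ends in `CACHE.get(uri, conf)`.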

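(7) Execution enters the get method of the Cache class

FileSystem get(URI uri, Configuration conf) throws IOException {
    Key key = new Key(uri, conf); // the key captures the user and group information used for validation
    return getInternal(uri, conf, key);
}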

(8) Execution enters the getInternal method

Singleton design pattern with lazy initialization: one FileSystem instance is created per key on first use and reused afterwards.

private FileSystem getInternal(URI uri, Configuration conf, Key key) throws IOException {
    FileSystem fs;
    synchronized (this) {
        fs = map.get(key);
    }
    if (fs != null) {
        return fs;
    }

    fs = createFileSystem(uri, conf);
    synchronized (this) { // refetch the lock again
        FileSystem oldfs = map.get(key);
        if (oldfs != null) { // a file system is created while lock is releasing
            fs.close(); // close the new file system
            return oldfs;  // return the old file system
        }

        // now insert the new file system into the map
        if (map.isEmpty()
            && !ShutdownHookManager.get().isShutdownInProgress()) {
            ShutdownHookManager.get().addShutdownHook(clientFinalizer, SHUTDOWN_HOOK_PRIORITY);
        }
        fs.key = key;
        map.put(key, fs);
        if (conf.getBoolean("fs.automatic.close", true)) {
            toAutoClose.add(key);
        }
        return fs;
    }
}
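The locking pattern in `getInternal` (look up under the lock, create outside the lock, then re-check before inserting) can be isolated in a small JDK-only sketch. `KeyedCacheSketch` and its `factory` parameter are hypothetical names; the real method additionally closes the discarded instance and registers a shutdown hook, which is omitted here.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, simplified model of the per-key cache; not Hadoop code.
class KeyedCacheSketch<K, V> {
    private final Map<K, V> map = new HashMap<>();
    private final Function<K, V> factory;

    KeyedCacheSketch(Function<K, V> factory) {
        this.factory = factory;
    }

    V get(K key) {
        V value;
        synchronized (this) {
            value = map.get(key);
        }
        if (value != null) {
            return value; // fast path: already cached
        }

        // Creation may be slow, so it happens without holding the lock.
        V created = factory.apply(key);

        synchronized (this) {
            V existing = map.get(key);
            if (existing != null) {
                // Another thread won the race; the real code closes 'created' here.
                return existing;
            }
            map.put(key, created);
            return created;
        }
    }
}
```

A short usage example, assuming any object can stand in for a file system:

```java
KeyedCacheSketch<String, Object> cache = new KeyedCacheSketch<>(k -> new Object());
Object first = cache.get("hdfs://192.168.41.13:9000");
Object second = cache.get("hdfs://192.168.41.13:9000"); // same instance as first
```

Creating outside the lock means two threads may both build an instance, but only one is ever published, which is the trade-off the original comments describe.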

The FileSystem implementation class, DistributedFileSystem, is obtained from the configuration.

class org.apache.hadoop.hdfs.DistributedFileSystem

public static Class<? extends FileSystem> getFileSystemClass(String scheme,
    Configuration conf) throws IOException {
    if (!FILE_SYSTEMS_LOADED) {
        loadFileSystems();
    }
    Class<? extends FileSystem> clazz = null;
    if (conf != null) {
        clazz = (Class<? extends FileSystem>) conf.getClass("fs." + scheme + ".impl", null);
    }
    if (clazz == null) {
        clazz = SERVICE_FILE_SYSTEMS.get(scheme);
    }
    if (clazz == null) {
        throw new IOException("No FileSystem for scheme: " + scheme);
    }
    return clazz;
}

Creating the fs

private static FileSystem createFileSystem(URI uri, Configuration conf
    ) throws IOException {
    Class<?> clazz = getFileSystemClass(uri.getScheme(), conf);
    if (clazz == null) {
        throw new IOException("No FileSystem for scheme: " + uri.getScheme());
    }
    // Create the fs through reflection; polymorphism: a superclass reference holds the subclass instance.
    FileSystem fs = (FileSystem)ReflectionUtils.newInstance(clazz, conf);
    fs.initialize(uri, conf);
    return fs;
}
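The two steps above, looking up an implementation class under the key `fs.<scheme>.impl` and instantiating it reflectively, can be sketched with the JDK alone. Everything in this block is hypothetical: `Fs` and `HdfsFs` stand in for `FileSystem` and `DistributedFileSystem`, and a plain `Map` stands in for `Configuration`.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of "configuration key -> class -> reflective instance".
class ReflectiveFactorySketch {

    /** Stand-in for the abstract FileSystem base class. */
    abstract static class Fs {
        abstract String scheme();
    }

    /** Stand-in for DistributedFileSystem. */
    static class HdfsFs extends Fs {
        public HdfsFs() {
        }

        @Override
        String scheme() {
            return "hdfs";
        }
    }

    static Fs create(String scheme, Map<String, String> conf)
            throws IOException, ReflectiveOperationException {
        String className = conf.get("fs." + scheme + ".impl");
        if (className == null) {
            throw new IOException("No FileSystem for scheme: " + scheme);
        }
        // Load the class by name and check that it really is a subclass of Fs.
        Class<? extends Fs> clazz = Class.forName(className).asSubclass(Fs.class);
        // The caller only sees the base type; the concrete type is chosen at run time.
        return clazz.getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws Exception {
        Map<String, String> conf = new HashMap<>();
        conf.put("fs.hdfs.impl", HdfsFs.class.getName());
        System.out.println(create("hdfs", conf).scheme());
    }
}
```

This is why the calling code can be written against `FileSystem` only: the concrete class is a configuration detail.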

In the DistributedFileSystem class

public void initialize(URI uri, Configuration conf) throws IOException {
    super.initialize(uri, conf);
    setConf(conf);

    String host = uri.getHost();
    if (host == null) {
        throw new IOException("Incomplete HDFS URI, no host: " + uri);
    }
    homeDirPrefix = conf.get(
        DFSConfigKeys.DFS_USER_HOME_DIR_PREFIX_KEY,
        DFSConfigKeys.DFS_USER_HOME_DIR_PREFIX_DEFAULT);

    this.dfs = new DFSClient(uri, conf, statistics);
    this.uri = URI.create(uri.getScheme() + "://" + uri.getAuthority());
    this.workingDir = getHomeDirectory();
}

In the DFSClient class

public DFSClient(URI nameNodeUri, Configuration conf,
    FileSystem.Statistics stats)
    throws IOException {
    this(nameNodeUri, null, conf, stats);
}

@VisibleForTesting
public DFSClient(URI nameNodeUri, ClientProtocol rpcNamenode,
    Configuration conf, FileSystem.Statistics stats)
    throws IOException {
    // Copy only the required DFSClient configuration
    NameNodeProxies.ProxyAndInfo<ClientProtocol> proxyInfo = null;
    // Create the proxy object; NameNodeProxies wraps the RPC layer
    proxyInfo = NameNodeProxies.createProxy(conf, nameNodeUri,
        ClientProtocol.class, nnFallbackToSimpleAuth);
}

The NameNodeProxies class

public static <T> ProxyAndInfo<T> createNonHAProxy(
    Configuration conf, InetSocketAddress nnAddr, Class<T> xface,
    UserGroupInformation ugi, boolean withRetries,
    AtomicBoolean fallbackToSimpleAuth) throws IOException {
    T proxy;
    if (xface == ClientProtocol.class) {
        // create the proxy
        proxy = (T) createNNProxyWithClientProtocol(nnAddr, conf, ugi,
            withRetries, fallbackToSimpleAuth);
    }
}

The NameNodeProxies class

private static ClientProtocol createNNProxyWithClientProtocol(
    InetSocketAddress address, Configuration conf, UserGroupInformation ugi,
    boolean withRetries, AtomicBoolean fallbackToSimpleAuth)
    throws IOException {
    RPC.setProtocolEngine(conf, ClientNamenodeProtocolPB.class, ProtobufRpcEngine.class);

    final RetryPolicy defaultPolicy =
        RetryUtils.getDefaultRetryPolicy(
            conf,
            DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_ENABLED_KEY,
            DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_ENABLED_DEFAULT,
            DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_SPEC_KEY,
            DFSConfigKeys.DFS_CLIENT_RETRY_POLICY_SPEC_DEFAULT,
            SafeModeException.class);

    final long version = RPC.getProtocolVersion(ClientNamenodeProtocolPB.class);
    // Obtain the proxy object for the server through RPC
    ClientNamenodeProtocolPB proxy = RPC.getProtocolProxy(
        ClientNamenodeProtocolPB.class, version, address, ugi, conf,
        NetUtils.getDefaultSocketFactory(conf),
        org.apache.hadoop.ipc.Client.getTimeout(conf), defaultPolicy,
        fallbackToSimpleAuth).getProxy();

    // Create the retry policies
    RetryPolicy createPolicy = RetryPolicies
        .retryUpToMaximumCountWithFixedSleep(5,
            HdfsConstants.LEASE_SOFTLIMIT_PERIOD, TimeUnit.MILLISECONDS);

    // Wrap (enhance) the proxy
    ClientProtocol translatorProxy =
        new ClientNamenodeProtocolTranslatorPB(proxy);

    return (ClientProtocol) RetryProxy.create(
        ClientProtocol.class,
        new DefaultFailoverProxyProvider<ClientProtocol>(
            ClientProtocol.class, translatorProxy),
        methodNameToPolicyMap,
        defaultPolicy);

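Creating the proxy with a JDK dynamic proxy

public static <T> Object create(Class<T> iface,
    FailoverProxyProvider<T> proxyProvider,
    Map<String, RetryPolicy> methodNameToPolicyMap,
    RetryPolicy defaultPolicy) {
    return Proxy.newProxyInstance(
        proxyProvider.getInterface().getClassLoader(),
        new Class<?>[] { iface },
        new RetryInvocationHandler<T>(proxyProvider, defaultPolicy,
            methodNameToPolicyMap)
        );
}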
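To illustrate how a dynamic proxy can add retries around an interface, here is a small JDK-only sketch built on `java.lang.reflect.Proxy`. It is not Hadoop's `RetryInvocationHandler`: the interface `BlockService`, the class `RetryProxySketch`, and the fixed attempt count are assumptions chosen to keep the example short, and there is no sleep or failover between attempts.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;

// Hypothetical retrying proxy; a simplified model, not Hadoop code.
class RetryProxySketch {

    /** Stand-in for a remote protocol interface such as ClientProtocol. */
    interface BlockService {
        String getBlockLocations(String src);
    }

    /** Returns a proxy for iface that retries each call on target up to maxAttempts times. */
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> iface, T target, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        InvocationHandler handler = (proxy, method, args) -> {
            Throwable last = null;
            for (int attempt = 1; attempt <= maxAttempts; attempt++) {
                try {
                    return method.invoke(target, args);
                } catch (InvocationTargetException e) {
                    // Unwrap the exception thrown by the real method and try again.
                    last = e.getCause();
                }
            }
            throw last; // all attempts failed
        };
        return (T) Proxy.newProxyInstance(
            iface.getClassLoader(), new Class<?>[] { iface }, handler);
    }
}
```

A usage example with a service that fails twice before answering:

```java
int[] calls = {0};
RetryProxySketch.BlockService flaky = src -> {
    if (++calls[0] < 3) {
        throw new IllegalStateException("not ready");
    }
    return "blocks of " + src;
};
RetryProxySketch.BlockService service =
    RetryProxySketch.create(RetryProxySketch.BlockService.class, flaky, 5);
System.out.println(service.getBlockLocations("/good.txt"));
```

The caller keeps using the plain interface, which matches how `DFSClient` holds a `ClientProtocol` reference without knowing that retries are layered underneath.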

// Create the ProxyAndInfo
public static
    return new ProxyAndInfo
}

// Instantiate the ProxyAndInfo
public ProxyAndInfo(PROXYTYPE proxy, Text dtService,
    InetSocketAddress address) {
    this.proxy = proxy;
    this.dtService = dtService;
    this.address = address;
}

@InterfaceAudience.Private
public class DFSClient implements java.io.Closeable, RemotePeerFactory,
    DataEncryptionKeyFactory {
    // ClientProtocol is an interface
    final ClientProtocol namenode;
    // The proxy object for the server side
    this.namenode = proxyInfo.getProxy();
}

The implementation of the ClientProtocol interface is provided by the server side.

(3) Execution of the second statement

public FSDataInputStream open(Path f, final int bufferSize)
    throws IOException {
    statistics.incrementReadOps(1);
    Path absF = fixRelativePart(f);
    return new FileSystemLinkResolver<FSDataInputStream>() {
        @Override
        public FSDataInputStream doCall(final Path p)
            throws IOException, UnresolvedLinkException {
            final DFSInputStream dfsis =
                dfs.open(getPathName(p), bufferSize, verifyChecksum);
            return dfs.createWrappedInputStream(dfsis);
        }
        @Override
        public FSDataInputStream next(final FileSystem fs, final Path p)
            throws IOException {
            return fs.open(p, bufferSize);
        }
    }.resolve(this, absF);
}

// The open method is called
public DFSInputStream open(String src, int buffersize, boolean verifyChecksum)
    throws IOException, UnresolvedLinkException {
    checkOpen();
    // Get block info from namenode
    return new DFSInputStream(this, src, buffersize, verifyChecksum);
}

// The DFSInputStream object is created
DFSInputStream(DFSClient dfsClient, String src, int buffersize, boolean verifyChecksum
    ) throws IOException, UnresolvedLinkException {
    this.dfsClient = dfsClient;
    this.verifyChecksum = verifyChecksum;
    this.buffersize = buffersize;
    this.src = src;
    this.cachingStrategy =
        dfsClient.getDefaultReadCachingStrategy();
    openInfo();
}

// Open the file; note that up to three retries are made here.
synchronized void openInfo() throws IOException, UnresolvedLinkException {
    lastBlockBeingWrittenLength = fetchLocatedBlocksAndGetLastBlockLength();
    int retriesForLastBlockLength = dfsClient.getConf().retryTimesForGetLastBlockLength;
    while (retriesForLastBlockLength > 0) {
        // Getting last block length as -1 is a special case. When cluster
        // restarts, DNs may not report immediately. At this time partial block
        // locations will not be available with NN for getting the length. Lets
        // retry for 3 times to get the length.
        if (lastBlockBeingWrittenLength == -1) {
            DFSClient.LOG.warn("Last block locations not available. "
                + "Datanodes might not have reported blocks completely."
                + " Will retry for " + retriesForLastBlockLength + " times");
            waitFor(dfsClient.getConf().retryIntervalForGetLastBlockLength);
            lastBlockBeingWrittenLength = fetchLocatedBlocksAndGetLastBlockLength();
        } else {
            break;
        }
        retriesForLastBlockLength--;
    }
    if (retriesForLastBlockLength == 0) {
        throw new IOException("Could not obtain the last block locations.");
    }
}
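The retry loop in `openInfo` boils down to "fetch, and while the answer is -1, fetch again a limited number of times". The JDK-only sketch below models that idea. The names `LastBlockLengthRetrySketch` and `fetchWithRetry` are hypothetical, the wait between attempts is left out, and, unlike the excerpt, which tests the retry counter at the end, this sketch tests the fetched length itself.

```java
import java.util.function.LongSupplier;

// Hypothetical, simplified model of the retry loop; not Hadoop code.
class LastBlockLengthRetrySketch {

    /**
     * Calls fetch once, then up to 'retries' more times while it keeps returning -1.
     * Throws if the length is still unknown (-1) after the last retry.
     */
    static long fetchWithRetry(LongSupplier fetch, int retries) {
        long length = fetch.getAsLong();
        while (retries > 0) {
            if (length != -1) {
                break; // the length is known, no further retry needed
            }
            // The real code sleeps for a configured interval before retrying.
            length = fetch.getAsLong();
            retries--;
        }
        if (length == -1) {
            throw new IllegalStateException("Could not obtain the last block locations.");
        }
        return length;
    }
}
```

For example, with a source that answers -1 twice and then 128:

```java
long[] answers = {-1, -1, 128};
int[] next = {0};
long length = LastBlockLengthRetrySketch.fetchWithRetry(() -> answers[next[0]++], 3);
```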

// Fetch the file's metadata
private long fetchLocatedBlocksAndGetLastBlockLength() throws IOException {
    // This returns the block information needed to read the file; the stream holds this metadata.
    final LocatedBlocks newInfo = dfsClient.getLocatedBlocks(src, 0);

static LocatedBlocks callGetBlockLocations(ClientProtocol namenode,
    String src, long start, long length)
    throws IOException {
    try {
        return namenode.getBlockLocations(src, start, length);
    } catch (RemoteException re) {
        throw re.unwrapRemoteException(AccessControlException.class,
            FileNotFoundException.class,
            UnresolvedPathException.class);
    }
}

// The request is sent to the server for execution.
public LocatedBlocks getBlockLocations(String src, long offset, long length)
    throws AccessControlException, FileNotFoundException,
    UnresolvedLinkException, IOException {
    GetBlockLocationsRequestProto req = GetBlockLocationsRequestProto
        .newBuilder()
        .setSrc(src)
        .setOffset(offset)
        .setLength(length)
        .build();
    try {
        GetBlockLocationsResponseProto resp = rpcProxy.getBlockLocations(null,
            req);
        return resp.hasLocations() ?
            PBHelper.convert(resp.getLocations()) : null;
    } catch (ServiceException e) {
        throw ProtobufHelper.getRemoteException(e);
    }
}

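The key to reading source code is to understand what each method does and how each class is instantiated, what each collection holds, and where that collection is created.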

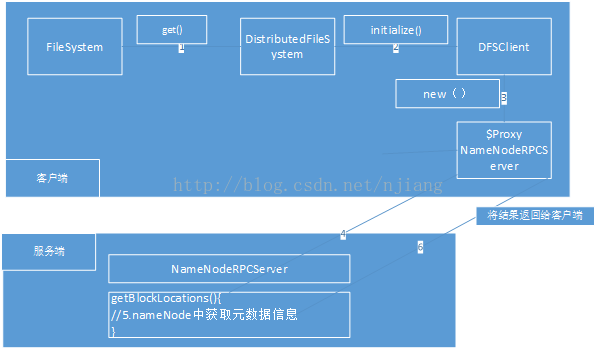

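(4) Diagram of reading a file:

Note: when reading a file, the client by default tries up to three times to fetch the file's metadata; if all three attempts fail, the read fails.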