(九)pytorch学习笔记---内容为学习资源摘录整合の梯度下降方法--RMSProp(4)

RMSProp

RMSprop 是由 Geoff Hinton 在他 Coursera 课程中提出的一种适应性学习率方法,至今仍未被公开发表。前面我们提到了 Adagrad 算法有一个问题,就是学习率分母上的变量 s 不断被累加增大,最后会导致学习率除以一个比较大的数之后变得非常小,这不利于我们找到最后的最优解,所以 RMSProp 的提出就是为了解决这个问题。

RMSProp 算法

def rmsprop(parameters, sqrs, lr, alpha):

eps = 1e-10

for param, sqr in zip(parameters, sqrs):

sqr[:] = alpha * sqr + (1 - alpha) * param.grad.data ** 2

div = lr / torch.sqrt(sqr + eps) * param.grad.data

param.data = param.data - div

import numpy as np

import torch

from torchvision.datasets import MNIST # 导入 pytorch 内置的 mnist 数据

from torch.utils.data import DataLoader

from torch import nn

from torch.autograd import Variable

import time

import matplotlib.pyplot as plt

%matplotlib inline

def data_tf(x):

x = np.array(x, dtype='float32') / 255

x = (x - 0.5) / 0.5 # 标准化,这个技巧之后会讲到

x = x.reshape((-1,)) # 拉平

x = torch.from_numpy(x)

return x

train_set = MNIST('./data', train=True, transform=data_tf, download=True) # 载入数据集,申明定义的数据变换

test_set = MNIST('./data', train=False, transform=data_tf, download=True)

# 定义 loss 函数

criterion = nn.CrossEntropyLoss()

train_data = DataLoader(train_set, batch_size=64, shuffle=True)

# 使用 Sequential 定义 3 层神经网络

net = nn.Sequential(

nn.Linear(784, 200),

nn.ReLU(),

nn.Linear(200, 10),

)

# 初始化梯度平方项

sqrs = []

for param in net.parameters():

sqrs.append(torch.zeros_like(param.data))

# 开始训练

losses = []

idx = 0

start = time.time() # 记时开始

for e in range(5):

train_loss = 0

for im, label in train_data:

im = Variable(im)

label = Variable(label)

# 前向传播

out = net(im)

loss = criterion(out, label)

# 反向传播

net.zero_grad()

loss.backward()

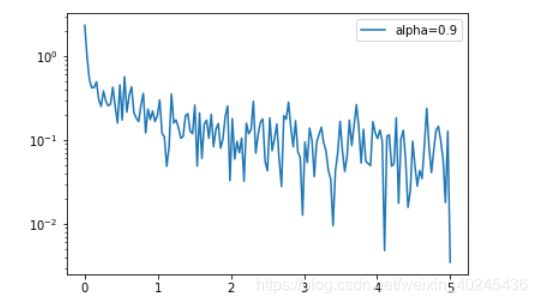

rmsprop(net.parameters(), sqrs, 1e-3, 0.9) # 学习率设为 0.001,alpha 设为 0.9

# 记录误差

train_loss += loss.data[0]

if idx % 30 == 0:

losses.append(loss.data[0])

idx += 1

print('epoch: {}, Train Loss: {:.6f}'

.format(e, train_loss / len(train_data)))

end = time.time() # 计时结束

print('使用时间: {:.5f} s'.format(end - start))

epoch: 0, Train Loss: 0.363507

epoch: 1, Train Loss: 0.161640

epoch: 2, Train Loss: 0.120954

epoch: 3, Train Loss: 0.101136

epoch: 4, Train Loss: 0.085934

使用时间: 58.86966 sx_axis = np.linspace(0, 5, len(losses), endpoint=True)

plt.semilogy(x_axis, losses, label='alpha=0.9')

plt.legend(loc='best')

train_data = DataLoader(train_set, batch_size=64, shuffle=True)

# 使用 Sequential 定义 3 层神经网络

net = nn.Sequential(

nn.Linear(784, 200),

nn.ReLU(),

nn.Linear(200, 10),

)

# 初始化梯度平方项

sqrs = []

for param in net.parameters():

sqrs.append(torch.zeros_like(param.data))

# 开始训练

losses = []

idx = 0

start = time.time() # 记时开始

for e in range(5):

train_loss = 0

for im, label in train_data:

im = Variable(im)

label = Variable(label)

# 前向传播

out = net(im)

loss = criterion(out, label)

# 反向传播

net.zero_grad()

loss.backward()

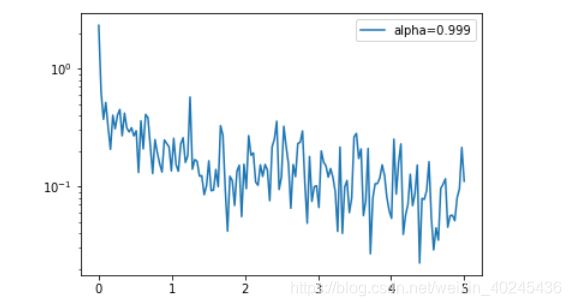

rmsprop(net.parameters(), sqrs, 1e-3, 0.999) # 学习率设为 0.001,alpha 设为 0.999

# 记录误差

train_loss += loss.data[0]

if idx % 30 == 0:

losses.append(loss.data[0])

idx += 1

print('epoch: {}, Train Loss: {:.6f}'

.format(e, train_loss / len(train_data)))

end = time.time() # 计时结束

print('使用时间: {:.5f} s'.format(end - start))

epoch: 0, Train Loss: 0.471134

epoch: 1, Train Loss: 0.188616

epoch: 2, Train Loss: 0.148085

epoch: 3, Train Loss: 0.124590

epoch: 4, Train Loss: 0.107619

使用时间: 70.13240 sx_axis = np.linspace(0, 5, len(losses), endpoint=True)

plt.semilogy(x_axis, losses, label='alpha=0.999')

plt.legend(loc='best')

当然 pytorch 也内置了 rmsprop 的方法,非常简单,只需要调用 torch.optim.RMSprop() 就可以了,下面是例子

train_data = DataLoader(train_set, batch_size=64, shuffle=True)

# 使用 Sequential 定义 3 层神经网络

net = nn.Sequential(

nn.Linear(784, 200),

nn.ReLU(),

nn.Linear(200, 10),

)

optimizer = torch.optim.RMSprop(net.parameters(), lr=1e-3, alpha=0.9)

# 开始训练

start = time.time() # 记时开始

for e in range(5):

train_loss = 0

for im, label in train_data:

im = Variable(im)

label = Variable(label)

# 前向传播

out = net(im)

loss = criterion(out, label)

# 反向传播

optimizer.zero_grad()

loss.backward()

optimizer.step()

# 记录误差

train_loss += loss.data[0]

print('epoch: {}, Train Loss: {:.6f}'

.format(e, train_loss / len(train_data)))

end = time.time() # 计时结束

print('使用时间: {:.5f} s'.format(end - start))

epoch: 0, Train Loss: 0.372473

epoch: 1, Train Loss: 0.164288

epoch: 2, Train Loss: 0.122384

epoch: 3, Train Loss: 0.100739

epoch: 4, Train Loss: 0.088391

使用时间: 85.15531 s