Machine Learning in Action: Decision Trees

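This post covers the principles and implementation of decision trees as presented in *Machine Learning in Action* and *Statistical Learning Methods*.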

1. Decision Trees

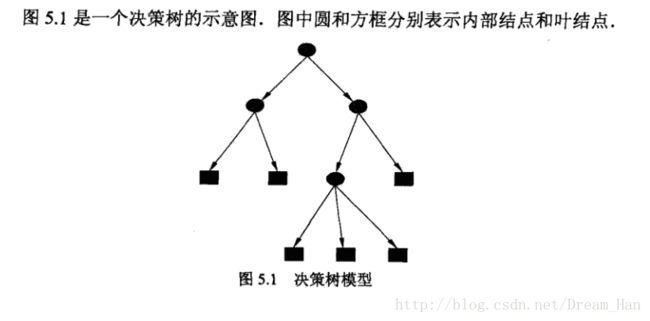

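Definition: a classification decision tree model is a tree structure that describes how instances are classified. A decision tree consists of nodes and directed edges. There are two types of nodes: internal nodes and leaf nodes. An internal node represents a feature (attribute), and a leaf node represents a class.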

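To classify an instance with a decision tree, start at the root node and test one feature of the instance. Based on the test result, send the instance to one of the child nodes; each child corresponds to one value of that feature. Repeat the test and assignment recursively until a leaf node is reached, and finally assign the instance to the class of that leaf.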
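The definition maps naturally onto a small recursive data structure. The sketch below is only an illustration: the class names `Leaf` and `Internal`, the function `classify_instance`, and the toy tree are hypothetical, not from either book. An internal node stores the index of the feature it tests plus one child per feature value, a leaf stores a class, and classification walks from the root to a leaf.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Leaf:
    label: str  # the class assigned to instances that reach this leaf


@dataclass
class Internal:
    feature: int  # index of the feature tested at this node
    children: Dict[int, "Node"] = field(default_factory=dict)  # one child per feature value


Node = Union[Leaf, Internal]


def classify_instance(node: Node, instance: list) -> str:
    # Start at the root and follow the edge that matches the instance's feature value
    while isinstance(node, Internal):
        node = node.children[instance[node.feature]]
    return node.label


# Hypothetical tree: test feature 0 first, then feature 1
tree = Internal(0, {
    0: Leaf("no"),
    1: Internal(1, {0: Leaf("no"), 1: Leaf("yes")}),
})

print(classify_instance(tree, [1, 1]))  # yes
print(classify_instance(tree, [1, 0]))  # no
```

The rest of this post stores the same kind of tree as nested Python dictionaries instead of classes; the traversal idea is identical.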

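The general workflow for decision trees:

(1) Collect data: any method.

(2) Prepare data: the tree-construction algorithm works only with nominal values, so numeric values must be discretized.

(3) Analyze data: any method. After the tree is built, check that the plotted tree matches expectations.

(4) Train the algorithm: build the tree data structure.

(5) Test the algorithm: compute the error rate with the learned tree.

(6) Use the algorithm: this step applies to any supervised learning algorithm, but a decision tree also gives a better understanding of the inherent meaning of the data.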
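Step (2) says numeric features must be discretized before the tree is built. One simple option (an assumption here, not a method prescribed by either book) is to map each value to the index of the interval it falls into, given hand-picked cut points. The function name `discretize`, the cut points, and the sample ages below are hypothetical.

```python
from bisect import bisect_right
from typing import List


def discretize(values: List[float], cut_points: List[float]) -> List[int]:
    """Map each numeric value to a bin index.

    With sorted cut points c0 < c1 < ..., a value v goes to bin i,
    where i is the number of cut points that are <= v.
    """
    cuts = sorted(cut_points)
    return [bisect_right(cuts, v) for v in values]


# Hypothetical feature: ages in years, cut at 18 and 60
ages = [12.0, 18.0, 35.5, 60.0, 72.0]
print(discretize(ages, [18.0, 60.0]))  # [0, 1, 1, 2, 2]
```

After this step the feature takes only the nominal values 0, 1 and 2, which is the form the tree-building code below expects.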

Commonly used decision tree algorithms include ID3, its improved successor C4.5, and CART.

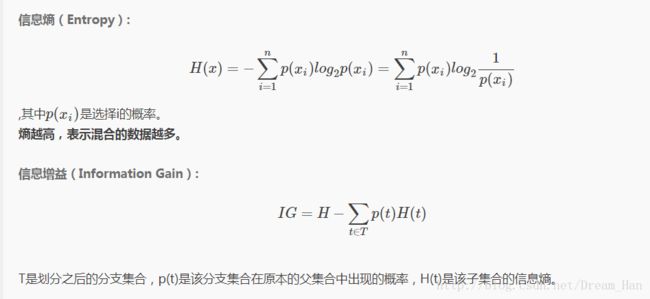

2. ID3: Principle and Implementation

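ID3 is a classification and prediction algorithm first proposed by J. Ross Quinlan at the University of Sydney in 1975. It is grounded in information theory, and its core concept is information entropy. ID3 computes the information gain of every attribute and treats attributes with higher information gain as better ones. At each step it splits on the attribute with the highest information gain, and it repeats this process until it produces a decision tree that classifies the training examples perfectly.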
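For reference, these are the standard definitions ID3 relies on. Let $D$ be a dataset with $K$ classes, $C_k$ the samples of class $k$, and $A$ a feature whose values $a_1,\dots,a_n$ partition $D$ into subsets $D_1,\dots,D_n$. Entropy, conditional entropy, and information gain are

$$
H(D) = -\sum_{k=1}^{K} \frac{|C_k|}{|D|}\log_2\frac{|C_k|}{|D|}
$$

$$
H(D \mid A) = \sum_{i=1}^{n} \frac{|D_i|}{|D|}\, H(D_i)
$$

$$
g(D, A) = H(D) - H(D \mid A)
$$

ID3 splits on the feature with the largest $g(D, A)$. In the code below, `calcShannonEnt` computes $H(D)$, and `chooseBestFeatureToSplit` computes $g(D, A)$ for every feature.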

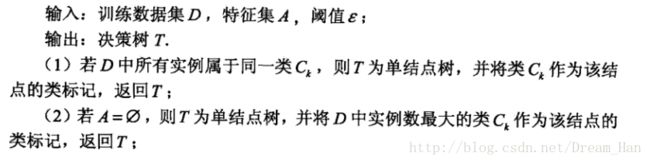

The corresponding description in *Statistical Learning Methods*:

Below is a concrete Python implementation.

1. First, create a dataset:

```python
# -*- coding: utf-8 -*-
from math import log
import operator

import treePlotter  # the plotting helpers from "4. Plotting the Tree"


# Create the dataset
def createDataSet():
    dataSet = [[1, 1, 'yes'],
               [1, 1, 'yes'],
               [1, 0, 'no'],
               [0, 1, 'no'],
               [0, 1, 'no']]
    labels = ['no surfacing', 'flippers']
    # change to discrete values
    return dataSet, labels
```

2. Compute the Shannon entropy:

# 计算香农熵

def calcShannonEnt(dataSet):

numEntries = len(dataSet)

labelCounts = {}

for featVec in dataSet: # the the number of unique elements and their occurance

currentLabel = featVec[-1]

if currentLabel not in labelCounts.keys():

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

#print(labelCounts)

shannonEnt = 0.0

for key in labelCounts:

prob = float(labelCounts[key])/numEntries

shannonEnt -= prob * log(prob, 2) # log base 2

return shannonEnt

myDat, labels = createDataSet()

print(calcShannonEnt(myDat))

myDat[0][-1] = 'maybe'

print(myDat, labels)

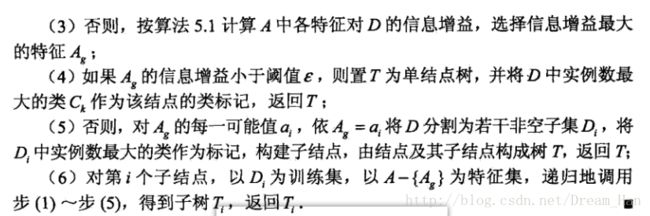

print('Ent changed: ', calcShannonEnt(myDat))输出为:

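As the output shows, the entropy increases after myDat[0][-1] is changed: the labels now contain three classes instead of two, so the data are more mixed.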
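The two printed values can be checked by hand. The original labels are 2 × `yes` and 3 × `no`, so $H = -(0.4\log_2 0.4 + 0.6\log_2 0.6) \approx 0.971$; after the change there are three classes with proportions 0.2, 0.2 and 0.6, giving $H \approx 1.371$. The sketch below reproduces both numbers using `collections.Counter`, which is a more compact (but equivalent) way to count the labels than the dictionary loop in `calcShannonEnt`. The function name `shannon_entropy` is our own.

```python
from collections import Counter
from math import log2


def shannon_entropy(rows):
    """Entropy (base 2) of the class labels stored in the last column."""
    counts = Counter(row[-1] for row in rows)
    n = len(rows)
    return -sum((c / n) * log2(c / n) for c in counts.values())


data = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
print(round(shannon_entropy(data), 3))  # 0.971

data[0][-1] = 'maybe'  # three classes now: the labels are more mixed
print(round(shannon_entropy(data), 3))  # 1.371
```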

3. Split the dataset

# 分离数据

def splitDataSet(dataSet, axis, value):

retDataSet = []

for featVec in dataSet:

if featVec[axis] == value: # 判断axis列的值是否为value

reducedFeatVec = featVec[:axis] # [:axis]表示前axis列,即若axis为2,就是取featVec的前axis列

print(reducedFeatVec) # [axis+1:]表示从跳过axis+1行,取接下来的数据

reducedFeatVec.extend(featVec[axis+1:]) # 列表扩展

print(reducedFeatVec)

retDataSet.append(reducedFeatVec)

print(retDataSet)

return retDataSet

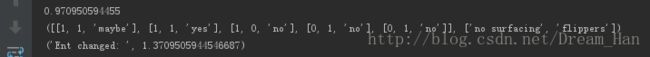

print 'splitDataSet is :', splitDataSet(myDat, 1, 1)

输出结果:

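With axis = 1 (the second column) and value = 1, every row whose second column is not 1 is dropped, and the remaining rows are recombined without that column.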
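The same filtering can be written as a single list comprehension. The sketch below (the name `split_dataset` is our own) is a stylistic alternative that behaves like `splitDataSet`: it keeps the rows whose column `axis` equals `value` and drops that column with slicing. It is shown on the unmodified sample data.

```python
def split_dataset(rows, axis, value):
    """Rows whose column `axis` equals `value`, with that column removed."""
    return [row[:axis] + row[axis + 1:] for row in rows if row[axis] == value]


data = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
print(split_dataset(data, 0, 1))  # [[1, 'yes'], [1, 'yes'], [0, 'no']]
print(split_dataset(data, 1, 1))  # [[1, 'yes'], [1, 'yes'], [0, 'no'], [0, 'no']]
```

Like the original, it builds new lists with slicing, so `data` itself is left unchanged.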

4. Choose the best feature to split on:

```python
# Choose the best feature to split on
def chooseBestFeatureToSplit(dataSet):
    numFeatures = len(dataSet[0]) - 1  # the last column is used for the labels
    baseEntropy = calcShannonEnt(dataSet)
    bestInfoGain = 0.0
    bestFeature = -1
    for i in range(numFeatures):  # iterate over all the features
        # create a list of all the values of this feature
        featList = [example[i] for example in dataSet]
        uniqueVals = set(featList)  # get a set of unique values
        newEntropy = 0.0
        # weighted entropy of the subsets produced by splitting on feature i
        for value in uniqueVals:
            subDataSet = splitDataSet(dataSet, i, value)
            prob = len(subDataSet) / float(len(dataSet))  # share of this subset in the whole dataset
            newEntropy += prob * calcShannonEnt(subDataSet)
        infoGain = baseEntropy - newEntropy  # info gain, i.e. the reduction in entropy
        if infoGain > bestInfoGain:  # compare this to the best gain so far
            bestInfoGain = infoGain  # if better than the current best, set it as the best
            bestFeature = i
    return bestFeature  # index of the best feature (an integer)


print('the best feature is:', chooseBestFeatureToSplit(myDat))
```

The function returns 0: the best feature to split on is the one at index 0 (`'no surfacing'`).
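To see why feature 0 wins, the sketch below computes the information gain of each feature on the original (unmodified) sample data: the entropy of the whole set minus the size-weighted entropy of the subsets. Feature 0 splits the labels into {yes, yes, no} and {no, no}; feature 1 splits them into {yes, yes, no, no} and {no}. The helper names are our own; the logic mirrors `chooseBestFeatureToSplit`.

```python
from collections import Counter
from math import log2


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def info_gains(rows):
    """Information gain of every feature column (the last column holds the class)."""
    base = entropy([row[-1] for row in rows])
    gains = []
    for i in range(len(rows[0]) - 1):
        cond = 0.0  # weighted entropy of the subsets produced by feature i
        for value in set(row[i] for row in rows):
            subset = [row[-1] for row in rows if row[i] == value]
            cond += len(subset) / len(rows) * entropy(subset)
        gains.append(base - cond)
    return gains


data = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
print([round(g, 3) for g in info_gains(data)])  # [0.42, 0.171]
```

Feature 0 removes about 0.42 bits of the initial 0.971, while feature 1 removes only about 0.171, so ID3 splits on feature 0 first.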

5. Find the most frequent class name

```python
# Return the class name that occurs most often
def majorityCnt(classList):
    classCount = {}
    for vote in classList:
        if vote not in classCount:
            classCount[vote] = 0
        classCount[vote] += 1
    # Sort by count (the second element of each item, via operator.itemgetter
    # imported at the top of the script), in descending order
    sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]
```
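`collections.Counter` does the same count-and-sort in a single call. The sketch below (the name `majority_class` is our own) is an equivalent alternative, not the book's code. For ties, both versions return the class encountered first (Python 3.7+): `most_common` orders equal counts by first appearance, and `sorted` is stable, so `majorityCnt` keeps the dictionary's insertion order for equal counts.

```python
from collections import Counter


def majority_class(class_list):
    """Most frequent class label; ties go to the label seen first."""
    return Counter(class_list).most_common(1)[0][0]


print(majority_class(['yes', 'no', 'no']))  # no
print(majority_class(['yes', 'no']))        # yes (tie: 'yes' was seen first)
```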

6. Build the decision tree

def createTree(dataSet,labels):

classList = [example[-1] for example in dataSet]

if classList.count(classList[0]) == len(classList):

return classList[0] # stop splitting when all of the classes are equal

if len(dataSet[0]) == 1: # stop splitting when there are no more features in dataSet

return majorityCnt(classList)

bestFeat = chooseBestFeatureToSplit(dataSet)

bestFeatLabel = labels[bestFeat]

myTree = {bestFeatLabel: {}}

del(labels[bestFeat])

featValues = [example[bestFeat] for example in dataSet] # 抽取最优特征下的数值,重新组合成list,

# print "featValues:", featValues

uniqueVals = set(featValues)

# print "uniqueVals:", uniqueVals

for value in uniqueVals:

subLabels = labels[:] # copy all of labels, so trees don't mess up(搞错) existing labels

myTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value), subLabels)

#print myTree

return myTree

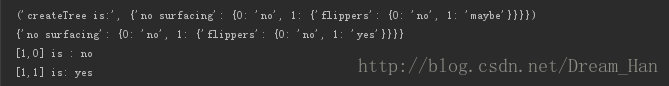

print('createTree is :', createTree(myDat, labels))

myDat, labels = createDataSet()

mytree = createTree(myDat, labels)

代码输出:

![]()

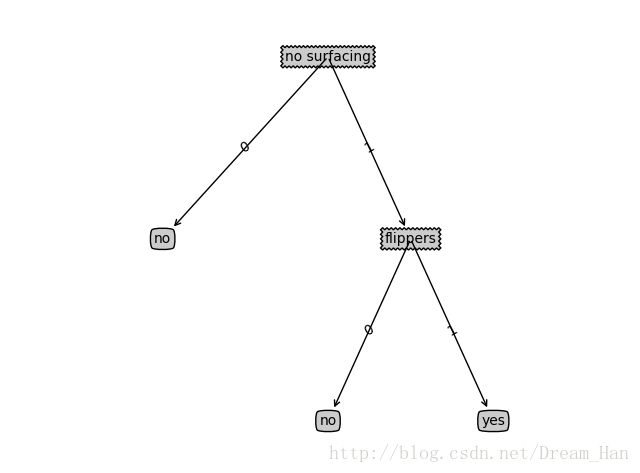
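Putting the pieces together, the whole recursion fits in one short, self-contained sketch. It follows the same rules as `createTree` (return the class when a node is pure, fall back to a majority vote when no features remain, otherwise split on the feature with the largest information gain) and also falls back to a majority vote when no split reduces the entropy. The function names are our own. On the sample data it yields the tree `{'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}`.

```python
from collections import Counter
from math import log2


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def split(rows, axis, value):
    return [row[:axis] + row[axis + 1:] for row in rows if row[axis] == value]


def best_feature(rows):
    """Index of the feature with the largest information gain, or -1 if none helps."""
    base = entropy([row[-1] for row in rows])
    best, best_gain = -1, 0.0
    for i in range(len(rows[0]) - 1):
        subsets = [split(rows, i, v) for v in set(row[i] for row in rows)]
        cond = sum(len(sub) / len(rows) * entropy([row[-1] for row in sub]) for sub in subsets)
        if base - cond > best_gain:
            best, best_gain = i, base - cond
    return best


def build_tree(rows, labels):
    classes = [row[-1] for row in rows]
    if classes.count(classes[0]) == len(classes):  # pure node
        return classes[0]
    feat = best_feature(rows)
    if feat == -1:  # no features left, or no split reduces the entropy
        return Counter(classes).most_common(1)[0][0]
    sub_labels = labels[:feat] + labels[feat + 1:]  # the caller's list is not modified
    return {labels[feat]: {v: build_tree(split(rows, feat, v), sub_labels)
                           for v in set(row[feat] for row in rows)}}


data = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
print(build_tree(data, ['no surfacing', 'flippers']))
```

Note that when only the class column is left, `best_feature` finds no feature to test and returns -1, so the majority-vote fallback also covers the "no more features" case.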

7. Classifying with the decision tree model:

```python
def classify(inputTree, featLabels, testVec):
    firstStr = next(iter(inputTree))  # the first key of the tree: the feature tested at this node
    secondDict = inputTree[firstStr]
    # index() returns the position of the first element in the list equal to firstStr
    featIndex = featLabels.index(firstStr)
    classLabel = None  # stays None if the test value has no branch in the tree
    for key in secondDict.keys():
        if testVec[featIndex] == key:
            # a dict value is a subtree; anything else is a leaf node (a class label)
            if isinstance(secondDict[key], dict):
                classLabel = classify(secondDict[key], featLabels, testVec)
            else:
                classLabel = secondDict[key]
    return classLabel
```
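Step (5) of the general workflow, testing the algorithm, amounts to classifying labelled examples and counting mistakes. The sketch below assumes the nested-dict tree format used here; the helper names `predict` and `error_rate` are hypothetical. It walks the tree iteratively and returns `None` for feature values the tree never saw, which then count as errors.

```python
def predict(tree, feat_labels, vec):
    """Walk a nested-dict tree; return None if a feature value has no branch."""
    node = tree
    while isinstance(node, dict):
        feature = next(iter(node))  # the feature tested at this node
        branches = node[feature]
        node = branches.get(vec[feat_labels.index(feature)])
    return node


def error_rate(tree, feat_labels, rows):
    """Fraction of rows (features..., class) that the tree misclassifies."""
    wrong = sum(predict(tree, feat_labels, row[:-1]) != row[-1] for row in rows)
    return wrong / len(rows)


tree = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
labels = ['no surfacing', 'flippers']
train = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]
print(error_rate(tree, labels, train))  # 0.0
```

Measured on its own training data, an ID3 tree like this one scores a zero error rate. For that reason, a meaningful error rate needs held-out test examples.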

```python
myDat, labels = createDataSet()
mytree = createTree(myDat, labels)
print(mytree)
myDat, labels = createDataSet()
classlabel_1 = classify(mytree, labels, [1, 0])
print('[1,0] is :', classlabel_1)
classlabel_2 = classify(mytree, labels, [1, 1])
print('[1,1] is:', classlabel_2)
```

8. Storing and loading the tree:

```python
import pickle


# Store the tree (pickle writes bytes, so the file is opened in binary mode
# even though it has a .txt extension)
def storeTree(inputTree, filename):
    with open(filename, 'wb') as fw:
        pickle.dump(inputTree, fw)


# Load the tree
def grabTree(filename):
    with open(filename, 'rb') as fr:
        return pickle.load(fr)


# Test and print
storeTree(mytree, 'classifierstorage.txt')
print(grabTree('classifierstorage.txt'))
```

3. Using a Decision Tree to Predict Contact Lens Type
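`lenses.txt` is read as tab-separated text: one instance per line, four feature columns matching `lensesLabels`, and the class in the last column. The sketch below does the same parsing with the standard `csv` module, run on two illustrative lines held in memory via `io.StringIO`. Those lines only demonstrate the format; they are not quoted from the data file. The helper name `read_tsv` is our own.

```python
import csv
import io

# Two illustrative tab-separated rows (format only; not quoted from lenses.txt)
sample = "young\tmyope\tno\treduced\tno lenses\nyoung\tmyope\tno\tnormal\tsoft\n"


def read_tsv(f):
    """Parse tab-separated lines into lists of strings, skipping blank lines."""
    return [row for row in csv.reader(f, delimiter='\t') if row]


lenses = read_tsv(io.StringIO(sample))
print(lenses)
# [['young', 'myope', 'no', 'reduced', 'no lenses'], ['young', 'myope', 'no', 'normal', 'soft']]
```

For the real file, `with open('lenses.txt', newline='') as fr: lenses = read_tsv(fr)` would do the same. Skipping blank lines avoids the `['']` row that `inst.strip().split('\t')` produces for an empty trailing line.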

```python
# Use a decision tree to predict the contact lens type
with open('lenses.txt') as fr:
    lenses = [inst.strip().split('\t') for inst in fr.readlines()]
print(lenses)
lensesLabels = ['age', 'prescript', 'astigmatic', 'tearRate']
lensesTree = createTree(lenses, lensesLabels)
print(lensesTree)
treePlotter.createPlot(lensesTree)
```

4. Plotting the Tree

```python
# treePlotter.py
import matplotlib.pyplot as plt

# box and arrow styles for decision nodes, leaf nodes, and edges
decisionNode = dict(boxstyle="sawtooth", fc="0.8")
leafNode = dict(boxstyle="round4", fc="0.8")
arrow_args = dict(arrowstyle="<-")


def getNumLeafs(myTree):
    numLeafs = 0
    firstStr = next(iter(myTree))
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        # test whether the node is a dict; if not, it is a leaf node
        if isinstance(secondDict[key], dict):
            numLeafs += getNumLeafs(secondDict[key])
        else:
            numLeafs += 1
    return numLeafs


def getTreeDepth(myTree):
    maxDepth = 0
    firstStr = next(iter(myTree))
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        # test whether the node is a dict; if not, it is a leaf node
        if isinstance(secondDict[key], dict):
            thisDepth = 1 + getTreeDepth(secondDict[key])
        else:
            thisDepth = 1
        if thisDepth > maxDepth:
            maxDepth = thisDepth
    return maxDepth
```
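`getNumLeafs` and `getTreeDepth` define the grid that `plotTree` draws on: with W leaves and depth D, each leaf gets a horizontal slot of width 1/W and each level drops by 1/D. Because `plotTree.xOff` starts at -0.5/W and advances by 1/W per leaf, the i-th leaf (counting from 0) lands at x = (i + 0.5)/W. The self-contained sketch below (function names are our own) recomputes both counts and these leaf positions without matplotlib, using the two test trees from `retrieveTree`.

```python
def num_leafs(tree):
    """Number of leaves in a nested-dict tree."""
    branches = tree[next(iter(tree))]
    return sum(num_leafs(c) if isinstance(c, dict) else 1 for c in branches.values())


def tree_depth(tree):
    """Number of decision levels (a single split has depth 1)."""
    branches = tree[next(iter(tree))]
    return max(1 + tree_depth(c) if isinstance(c, dict) else 1 for c in branches.values())


def leaf_x_positions(tree):
    """x coordinate that plotTree assigns to each leaf, in traversal order."""
    w = num_leafs(tree)
    xs = []

    def walk(node):
        for child in node[next(iter(node))].values():
            if isinstance(child, dict):
                walk(child)
            else:
                xs.append((len(xs) + 0.5) / w)  # the i-th leaf sits at (i + 0.5) / W

    walk(tree)
    return xs


t0 = {'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}
t1 = {'no surfacing': {0: 'no', 1: {'flippers': {0: {'head': {0: 'no', 1: 'yes'}}, 1: 'no'}}}}
print(num_leafs(t0), tree_depth(t0))  # 3 2
print(num_leafs(t1), tree_depth(t1))  # 4 3
print(leaf_x_positions(t0))
```

Each decision node is then centred above its own leaves, which is what the `(1.0 + numLeafs) / 2.0 / totalW` term in `plotTree` computes.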

```python
def plotNode(nodeTxt, centerPt, parentPt, nodeType):
    createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction',
                            xytext=centerPt, textcoords='axes fraction',
                            va="center", ha="center", bbox=nodeType, arrowprops=arrow_args)


def plotMidText(cntrPt, parentPt, txtString):
    # place the edge label (the feature value) halfway between parent and child
    xMid = (parentPt[0] - cntrPt[0]) / 2.0 + cntrPt[0]
    yMid = (parentPt[1] - cntrPt[1]) / 2.0 + cntrPt[1]
    createPlot.ax1.text(xMid, yMid, txtString, va="center", ha="center", rotation=30)


def plotTree(myTree, parentPt, nodeTxt):  # the first key tells you which feature was split on
    numLeafs = getNumLeafs(myTree)  # this determines the x width of this tree
    firstStr = next(iter(myTree))  # the text label for this node
    cntrPt = (plotTree.xOff + (1.0 + float(numLeafs)) / 2.0 / plotTree.totalW, plotTree.yOff)
    plotMidText(cntrPt, parentPt, nodeTxt)
    plotNode(firstStr, cntrPt, parentPt, decisionNode)
    secondDict = myTree[firstStr]
    plotTree.yOff = plotTree.yOff - 1.0 / plotTree.totalD
    for key in secondDict.keys():
        # a dict is a subtree, whose first key is another feature; anything else is a leaf
        if isinstance(secondDict[key], dict):
            plotTree(secondDict[key], cntrPt, str(key))  # recursion
        else:  # it's a leaf node: plot it
            plotTree.xOff = plotTree.xOff + 1.0 / plotTree.totalW
            plotNode(secondDict[key], (plotTree.xOff, plotTree.yOff), cntrPt, leafNode)
            plotMidText((plotTree.xOff, plotTree.yOff), cntrPt, str(key))
    plotTree.yOff = plotTree.yOff + 1.0 / plotTree.totalD


def createPlot(inTree):
    fig = plt.figure(1, facecolor='white')
    fig.clf()
    axprops = dict(xticks=[], yticks=[])
    createPlot.ax1 = plt.subplot(111, frameon=False, **axprops)  # no ticks
    # createPlot.ax1 = plt.subplot(111, frameon=False)  # ticks for demo purposes
    plotTree.totalW = float(getNumLeafs(inTree))
    plotTree.totalD = float(getTreeDepth(inTree))
    plotTree.xOff = -0.5 / plotTree.totalW
    plotTree.yOff = 1.0
    plotTree(inTree, (0.5, 1.0), '')
    plt.show()


# Commented-out demo version of createPlot:
# def createPlot():
#     fig = plt.figure(1, facecolor='white')
#     fig.clf()
#     createPlot.ax1 = plt.subplot(111, frameon=False)  # ticks for demo purposes
#     plotNode('a decision node', (0.5, 0.1), (0.1, 0.5), decisionNode)
#     plotNode('a leaf node', (0.8, 0.1), (0.3, 0.8), leafNode)
#     plt.show()


def retrieveTree(i):
    # two predefined trees for testing
    listOfTrees = [{'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}},
                   {'no surfacing': {0: 'no', 1: {'flippers': {0: {'head': {0: 'no', 1: 'yes'}}, 1: 'no'}}}}]
    return listOfTrees[i]


mytree = retrieveTree(0)
createPlot(mytree)
```

Output:

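5. Summary

Advantages: low computational complexity, output that is easy to understand, insensitivity to missing intermediate values, and the ability to handle irrelevant features.

Disadvantage: prone to overfitting.

Applicable data types: numeric and nominal values (numeric values must be discretized first).

ID3 only generates the tree and does no pruning, so the trees it builds overfit easily. C4.5, CART, and decision tree pruning will be covered in detail later.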