Scraping Tianyancha company information a new way

This post uses a new approach to get around the site's anti-scraping measures.

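The company list page (the search results) has very little anti-scraping protection: apart from the company name, none of its fields are protected. So a shortcut is to extract the registration date and registered capital from the list page.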
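A minimal sketch of reading those list-page fields row by row with lxml. The HTML snippet is hypothetical, shaped only to match the XPath expressions used in the post's scripts; the real page markup may differ. Instead of indexing several parallel `xpath()` result lists by position, it reads each result row on its own, so a row with a missing field cannot shift the values of the rows after it.

```python
from lxml import etree

# Hypothetical markup shaped to match the post's XPath expressions.
SAMPLE = """
<div class="search_row_new_mobil">
  <div>
    <div><a>张三</a></div>
    <div><span>1000万人民币</span></div>
    <div><span>2015-06-01</span></div>
  </div>
</div>
<div class="search_row_new_mobil">
  <div>
    <div><a>李四</a></div>
    <div><span>-</span></div>
    <div><span>2018-03-20</span></div>
  </div>
</div>
"""


def parse_list_page(page_html):
    """Return (legal_representative, registered_capital, registration_date) per row."""
    tree = etree.HTML(page_html)
    rows = []
    for row in tree.xpath('//div[@class="search_row_new_mobil"]'):
        # string(...) yields "" for a missing node instead of dropping it,
        # so every row always produces exactly three values.
        boss = row.xpath("string(.//a)").strip()
        capital = row.xpath("string(./div/div[2]/span)").strip()
        reg_date = row.xpath("string(./div/div[3]/span)").strip()
        rows.append((boss, capital, reg_date))
    return rows


print(parse_list_page(SAMPLE))
```

Rows whose capital or date is `-` can then be filtered out before visiting the detail page.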

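Requesting too much or too quickly will still get you blocked; in testing, a random User-Agent was enough to get around this.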
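A minimal sketch of per-request User-Agent rotation with a randomized pause between requests. The shortened UA list, the helper names `make_headers` and `polite_pause`, and the 1 to 3 second delay are illustrative assumptions, not values from the post.

```python
import random
import time

# Illustrative subset; the post's scripts carry a longer list.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]


def make_headers():
    """Return request headers with a User-Agent drawn afresh on every call."""
    return {
        "Referer": "https://www.baidu.com/",
        "User-Agent": random.choice(USER_AGENTS),
    }


def polite_pause(min_s=1.0, max_s=3.0):
    """Sleep for a random interval so requests do not arrive at a fixed rate."""
    delay = random.uniform(min_s, max_s)
    time.sleep(delay)
    return delay


# Typical use inside a crawl loop (requests is not imported here):
#   resp = requests.get(url, headers=make_headers(), timeout=10)
#   polite_pause()
```

Choosing the UA inside the function, rather than once at start-up, is what makes each request present a different browser.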

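Also, on the company detail pages the XPath position of a field can differ from one company to another, so regular expressions (`re`) are used there instead.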
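A minimal sketch of label-based extraction: the regex anchors on the field's label rather than its position, so it works whichever element the field sits in. The two page fragments are made up, and accepting either an ASCII or a full-width colon is an assumption about the markup. Returning `None` for a missing label avoids the `IndexError` that `re.findall(...)[0]` raises.

```python
import re


def extract_field(page, label):
    """Return the text after `label` and a colon, up to the next tag, or None."""
    # [:：] accepts an ASCII or full-width colon; [^<]* stops at the next tag.
    match = re.search(re.escape(label) + r"\s*[:：]\s*([^<]*)", page)
    return match.group(1).strip() if match else None


# Two hypothetical layouts with the same fields in different positions.
page_a = "<div><span>企业类型：有限责任公司</span><span>登记机关：北京市工商局</span></div>"
page_b = "<ul><li>登记机关:上海市市场监督管理局</li><li>企业类型:股份有限公司</li></ul>"

for page in (page_a, page_b):
    print(extract_field(page, "企业类型"), extract_field(page, "登记机关"))
```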

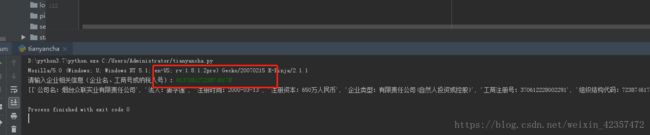

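The business-scope field also comes back as garbled text starting with `&#x`. This `&#xxx;` format is actually Unicode: each one is a numeric character reference, giving the character's code point in hexadecimal after `&#x` (or in decimal after `&#`). It can be decoded with an HTML unescaping function; the original used the `HTMLParser` library, whose `unescape` method was removed in Python 3.9, so use `html.unescape` on current versions.

Final result: it typically retrieves detailed information for 5 related companies in about 3 seconds.

Update: Tianyancha has since changed, and you can now search by company name, business registration number, or taxpayer number.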
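A small check of the decoding step with the standard library's `html.unescape`. The sample string is arbitrary; the references are generated from it, so the demo does not rely on hard-coded code points.

```python
import html

sample = "经营范围"
# Encode each character as a hex (&#x...;) and a decimal (&#...;) reference.
hex_refs = "".join(f"&#x{ord(ch):x};" for ch in sample)
dec_refs = "".join(f"&#{ord(ch)};" for ch in sample)

print(hex_refs)
print(html.unescape(hex_refs), html.unescape(dec_refs))
```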

```python
import html  # html.unescape replaces HTMLParser().unescape, removed in Python 3.9
import random
import re

import requests
from lxml import etree

# requests uses one proxy per URL scheme. The original dict repeated the "http"
# key, so only the last entry survived and it supplied no proxy for these https
# URLs. These addresses are the author's and have most likely expired.
proxy = {
    "http": "http://125.70.13.77:8080",
    "https": "https://183.6.129.212:41949",
}

USER_AGENTS = [
    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
    "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
    "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
    "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
    "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
    "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
    "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
    "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]


def down_load(url):
    """Fetch a page with a freshly chosen random User-Agent and return its text."""
    headers = {
        "Referer": "https://www.baidu.com/",
        "User-Agent": random.choice(USER_AGENTS),
    }
    cc = requests.get(url=url, headers=headers, proxies=proxy, timeout=10)
    cc.encoding = "utf-8"
    return cc.text


# Prompt: company name, business registration no. or taxpayer no.
i = input("请输入企业相关信息(企业名、工商号或纳税人号):")
first_url = "https://m.tianyancha.com/search?key=%s" % i
a = etree.HTML(down_load(first_url))

# Legal representative, registered capital and registration date come from
# the lightly protected search-result list page.
detail_url = a.xpath('//div[contains(@class,"col-xs-10")]/a/@href')
boss = a.xpath('//div[@class="search_row_new_mobil"]//a/text()')
the_registered_capital = a.xpath('//div[@class="search_row_new_mobil"]/div/div[2]/span/text()')
the_registered_time = a.xpath('//div[@class="search_row_new_mobil"]/div/div[3]/span/text()')

gs = []
gs1 = {}
for ii in range(len(boss)):
    aa = down_load(detail_url[ii])
    bb = etree.HTML(aa)
    company = bb.xpath('//div[@class="over-hide"]/div/text()')[0]
    # Field positions differ between detail pages, so match on the label text.
    # NOTE: these patterns (especially the business-scope one) appear to have
    # lost their surrounding HTML when the post was extracted; adapt them to
    # the real page markup.
    industry = re.findall("行业:(.*?) ", aa, re.S)[0]
    the_enterprise_type = re.findall("企业类型:(.*?) ", aa, re.S)[0]
    registration_number = re.findall("工商注册号:(.*?) ", aa, re.S)[0]
    organization_code = re.findall("组织结构代码:(.*?) ", aa, re.S)[0]
    credit_code = re.findall("统一信用代码:(.*?) ", aa, re.S)[0]
    business_period = re.findall("经营期限:(.*?) ", aa, re.S)[0]
    registration_authority = re.findall("登记机关:(.*?) ", aa, re.S)[0]
    registered_address = re.findall("注册地址:(.*?) ", aa, re.S)[0]
    scope_of_business = re.findall('(.*?) ', aa, re.S)[0]
    # &#xHHHH; (hex) and &#DDDD; (decimal) are numeric character references
    scope_of_business = html.unescape(scope_of_business)
    new = ["公司名:" + company, "法人:" + boss[ii],
           "注册时间:" + the_registered_time[ii], "注册资本:" + the_registered_capital[ii],
           "企业类型:" + the_enterprise_type, "工商注册号:" + registration_number,
           "组织结构代码:" + organization_code, "统一信用代码:" + credit_code,
           "经营年限:" + business_period, "登记机关:" + registration_authority,
           "注册地址:" + registered_address, "经营范围:" + scope_of_business]
    gs1[ii + 1] = new  # the same record, keyed by result number
    gs.append(new)

print(gs)
```

See also the previous post: scraping Tianyancha with Scrapy combined with Selenium.

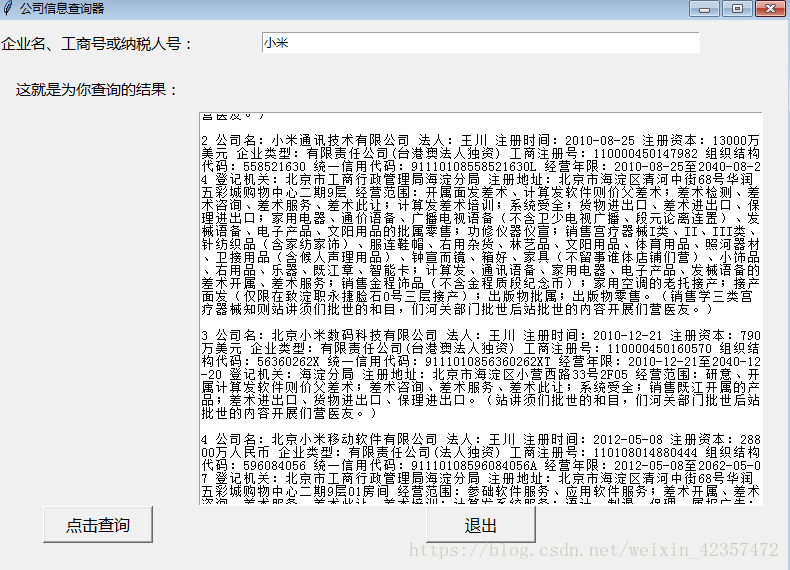

Later, I added a GUI built with tkinter:

```python
import html
import random
import re
import tkinter as tk

import requests
from lxml import etree

# Network settings are built once here instead of on every click.
# One proxy per URL scheme; the author's addresses, most likely expired.
proxy = {
    "http": "http://125.70.13.77:8080",
    "https": "https://183.6.129.212:41949",
}

USER_AGENTS = [
    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
    "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
    "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
    "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
    "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
    "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
    "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
    "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]


def down_load(url):
    """Fetch a page with a freshly chosen random User-Agent and return its text."""
    headers = {"Referer": "https://www.baidu.com/", "User-Agent": random.choice(USER_AGENTS)}
    cc = requests.get(url=url, headers=headers, proxies=proxy, timeout=10)
    cc.encoding = "utf-8"
    return cc.text


window = tk.Tk()
window.title("公司信息查询器")  # "Company information lookup"
window.geometry("790x550+500+200")

l = tk.Label(window, text="企业名、工商号或纳税人号:", font="微软雅黑 11", height=2)
l.grid()
l1 = tk.Label(window, text="这就是为你查询的结果:", font="微软雅黑 11", height=2)
l1.grid()

e = tk.Entry(window, width=62)   # search input
e.grid(row=0, column=1)
e1 = tk.Text(window, height=30)  # result area
e1.grid(row=2, column=1)


def click():
    # Runs on the Tk main thread, so the window is unresponsive until all requests finish.
    content = e.get()
    first_url = "https://m.tianyancha.com/search?key=%s" % content
    a = etree.HTML(down_load(first_url))
    detail_url = a.xpath('//div[contains(@class,"col-xs-10")]/a/@href')
    boss = a.xpath('//div[@class="search_row_new_mobil"]//a/text()')
    the_registered_capital = a.xpath('//div[@class="search_row_new_mobil"]/div/div[2]/span/text()')
    the_registered_time = a.xpath('//div[@class="search_row_new_mobil"]/div/div[3]/span/text()')

    for ii in range(len(boss)):
        aa = down_load(detail_url[ii])
        bb = etree.HTML(aa)
        company = bb.xpath('//div[@class="over-hide"]/div/text()')[0]
        # Label-based patterns; their surrounding HTML appears lost in extraction.
        industry = re.findall("行业:(.*?) ", aa, re.S)[0]
        the_enterprise_type = re.findall("企业类型:(.*?) ", aa, re.S)[0]
        registration_number = re.findall("工商注册号:(.*?) ", aa, re.S)[0]
        organization_code = re.findall("组织结构代码:(.*?) ", aa, re.S)[0]
        credit_code = re.findall("统一信用代码:(.*?) ", aa, re.S)[0]
        business_period = re.findall("经营期限:(.*?) ", aa, re.S)[0]
        registration_authority = re.findall("登记机关:(.*?) ", aa, re.S)[0]
        registered_address = re.findall("注册地址:(.*?) ", aa, re.S)[0]
        # Decode &#x...; numeric character references
        scope_of_business = html.unescape(re.findall('(.*?) ', aa, re.S)[0])

        new = [str(ii + 1), "公司名:" + company, "法人:" + boss[ii],
               "注册时间:" + the_registered_time[ii], "注册资本:" + the_registered_capital[ii],
               "企业类型:" + the_enterprise_type, "工商注册号:" + registration_number,
               "组织结构代码:" + organization_code, "统一信用代码:" + credit_code,
               "经营年限:" + business_period, "登记机关:" + registration_authority,
               "注册地址:" + registered_address, "经营范围:" + scope_of_business]
        # Text.insert expects a string: put each field on its own line
        e1.insert("end", "\n".join(new))
        e1.insert("end", "\n\n")  # blank line between companies


b = tk.Button(window, text="点击查询", command=click, width=10, font="微软雅黑 12")  # "Search"
b.grid(row=6, column=0)
b1 = tk.Button(window, text="退出", command=window.quit, width=10, font="微软雅黑 12")  # "Quit"
b1.grid(row=6, column=1)

window.mainloop()
```

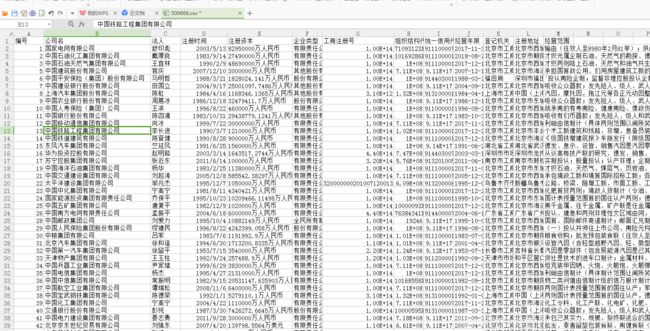

Later, to scrape a targeted list of around 1,000 companies, I made some modifications:

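1. Convert the pandas data into a list:

```python
import pandas as pd

# Read the input CSV and turn the DataFrame into a list of row lists
content = pd.read_csv(r"C:\Users\Administrator\Desktop\5005.csv", encoding="utf-8").values.tolist()
```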

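2. Exception handling for large batches: wrap the work in `try`, otherwise a failure midway loses everything scraped so far and you have to start over. The two main concerns are deduplication and resuming from where the last run stopped.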

```python
import csv
import html
import random
import re

import pandas as pd
import requests
from lxml import etree

# A dict literal keeps only the last value of each repeated key, so the original
# {"https": ..., "http": ..., ...} used just two of these proxies. Keeping them in
# a list and picking one per request rotates through all of them.
# These are the author's addresses and have most likely expired.
PROXY_POOL = [
    "https://114.116.10.21:3128", "http://47.105.151.97:80",
    "https://113.200.56.13:8010", "https://14.20.235.220:9797",
    "https://119.31.210.170:7777", "http://221.193.222.7:8060",
    "http://115.223.222.206:9000", "http://106.12.3.84:80",
    "http://49.81.125.62:9000", "http://119.29.26.242:8080",
    "http://118.24.98.96:9999", "http://183.129.207.84:52264",
    "http://121.10.71.82:8118", "http://113.16.160.101:8118",
]

# Alternative: the third-party fake_useragent package (UserAgent().random)
USER_AGENTS = [
    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
    "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
    "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
    "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
    "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
    "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
    "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
    "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]

HEADER = ["编号", "公司名", "法人", "注册时间", "注册资本", "企业类型", "工商注册号",
          "组织结构代码", "统一信用代码", "经营年限", "登记机关", "注册地址", "经营范围"]


def down_load(url):
    """Fetch a page through a random proxy with a random User-Agent."""
    p = random.choice(PROXY_POOL)
    headers = {"Referer": "https://www.baidu.com/", "User-Agent": random.choice(USER_AGENTS)}
    cc = requests.get(url=url, headers=headers, proxies={"http": p, "https": p}, timeout=10)
    cc.encoding = "utf-8"
    return cc.text


def save(path, rows):
    """Rewrite `path` with the header plus every row collected so far."""
    with open(path, "w", encoding="utf-8", newline="") as f:
        k = csv.writer(f, dialect="excel")
        k.writerow(HEADER)
        k.writerows(rows)


# Convert the pandas DataFrame into a list of row lists
content = pd.read_csv(r"C:\Users\Administrator\Desktop\5005.csv", encoding="utf-8").values.tolist()

gs = []
# 1101 is a manual resume point (earlier rows were scraped in a previous run).
# Stop at len(content): the original len(content)+1 ran one index past the end.
for m in range(1101, len(content)):
    i = content[m][0]
    first_url = "https://m.tianyancha.com/search?key=%s" % i
    try:
        a = etree.HTML(down_load(first_url))
        detail_url = a.xpath('//div[contains(@class,"col-xs-10")]/a/@href')[0]
        status = a.xpath('/html/body/div[3]/div[3]/div[1]/div[4]/div/div/div[4]/span/text()')
        the_registered_capital = a.xpath('//div[@class="search_row_new_mobil"]/div/div[2]/span/text()')[0]
        the_registered_time = a.xpath('//div[@class="search_row_new_mobil"]/div/div[3]/span/text()')[0]
        # xpath() returns a list, so test membership; the original compared the
        # list with a string, which is always False.
        if "未公开" in status or "仍注册" in status or "-" in (the_registered_capital, the_registered_time):
            continue
        boss = a.xpath('//div[@class="search_row_new_mobil"]//a/text()')[0]
        print(detail_url)
        aa = down_load(detail_url)
        bb = etree.HTML(aa)
        company = bb.xpath('//div[@class="over-hide"]/div/text()')[0]
        # Label-based patterns; their surrounding HTML appears lost in extraction.
        the_enterprise_type = re.findall("企业类型:(.*?) ", aa, re.S)[0]
        registration_number = re.findall("工商注册号:(.*?) ", aa, re.S)[0]
        organization_code = re.findall("组织结构代码:(.*?) ", aa, re.S)[0]
        credit_code = re.findall("统一信用代码:(.*?) ", aa, re.S)[0]
        business_period = re.findall("经营期限:(.*?) ", aa, re.S)[0]
        registration_authority = re.findall("登记机关:(.*?) ", aa, re.S)[0]
        registered_address = re.findall("注册地址:(.*?) ", aa, re.S)[0]
        # Decode &#x...; numeric character references
        scope_of_business = html.unescape(re.findall('(.*?) ', aa, re.S)[0])
        gs.append([str(m + 1), company, boss, the_registered_time, the_registered_capital,
                   the_enterprise_type, registration_number, organization_code,
                   credit_code, business_period, registration_authority,
                   registered_address, scope_of_business])
        print(m + 1)
    except Exception as exc:
        # Skip this company (network error, missing field, ...) but keep running
        print(m + 1, "failed:", exc)
    finally:
        # Checkpoint after every company. The original reached its save by running
        # `raise exception` (an undefined name) and letting a bare except swallow
        # the resulting NameError.
        save("5006663.csv", gs)

save("500666666666.csv", gs)
```
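The script above resumes from a hard-coded start index (1101) and rewrites the whole CSV after every company. A minimal alternative sketch, assuming each input key is unique: read the keys already present in the output file, skip them (which covers both deduplication and resuming), and append each new row as soon as it is scraped. `fetch` is a hypothetical callable standing in for the download-and-parse step, and the two-column output is simplified for illustration.

```python
import csv
import os


def load_done(path):
    """Return the set of keys already written to the output CSV (empty if none)."""
    if not os.path.exists(path):
        return set()
    with open(path, encoding="utf-8", newline="") as f:
        reader = csv.reader(f)
        next(reader, None)  # skip the header row
        return {row[0] for row in reader if row}


def scrape_all(keys, fetch, path):
    """Append one row per key, skipping keys already present in `path`.

    `fetch(key)` is a hypothetical callable that returns a list of field values
    or raises on failure.
    """
    done = load_done(path)
    new_file = not os.path.exists(path)
    with open(path, "a", encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        if new_file:
            writer.writerow(["key", "data"])
        for key in keys:
            if key in done:  # already scraped in this or an earlier run
                continue
            try:
                row = fetch(key)
            except Exception as exc:  # log and move on instead of losing the run
                print(f"{key}: {exc}")
                continue
            writer.writerow([key, *row])
            f.flush()  # push the row to disk before the next request
            done.add(key)
    return done
```

Because failed keys are never recorded, simply rerunning the script retries only those keys.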
