Architecture

Note: The following RAC architecture should only be used for test environments.

For our RAC test environment, we use an ordinary Linux server (Opal) as a shared storage server that exports directories via NFS, which provides the shared storage needed by the RAC installation. NFS (Network File System) is a platform-independent protocol, originally developed by Sun Microsystems, that gives clients shared access to files stored on a remote server over TCP/IP. On Linux clients, NFS mounts plug into the kernel's Virtual File System (VFS) layer, so applications access them like local files.

Network Configuration

Each node must have one static IP address for the public network and one static IP address for the private cluster interconnect. The private interconnect should only be used by Oracle. Note that the /etc/hosts settings are identical on both nodes, Gentic and Cellar.

Gentic

| Device | IP Address     | Subnet        | Gateway       | Purpose                               |
|--------|----------------|---------------|---------------|---------------------------------------|
| eth0   | 192.168.138.35 | 255.255.255.0 | 192.168.138.1 | Connects Gentic to the public network |
| eth1   | 192.168.137.35 | 255.255.255.0 |               | Connects Gentic to Cellar (private)   |

/etc/hosts

127.0.0.1        localhost.localdomain localhost
#
# Public Network - (eth0)
192.168.138.35   gentic
192.168.138.36   cellar
# Private Interconnect - (eth1)
192.168.137.35   gentic-priv
192.168.137.36   cellar-priv
# Public Virtual IP (VIP) addresses for - (eth0)
192.168.138.130  gentic-vip
192.168.138.131  cellar-vip

Cellar

| Device | IP Address     | Subnet        | Gateway       | Purpose                               |
|--------|----------------|---------------|---------------|---------------------------------------|
| eth0   | 192.168.138.36 | 255.255.255.0 | 192.168.138.1 | Connects Cellar to the public network |
| eth1   | 192.168.137.36 | 255.255.255.0 |               | Connects Cellar to Gentic (private)   |

/etc/hosts

127.0.0.1        localhost.localdomain localhost
#
# Public Network - (eth0)
192.168.138.35   gentic
192.168.138.36   cellar
# Private Interconnect - (eth1)
192.168.137.35   gentic-priv
192.168.137.36   cellar-priv
# Public Virtual IP (VIP) addresses for - (eth0)
192.168.138.130  gentic-vip
192.168.138.131  cellar-vip
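Because both nodes need the same three names per node (public, `-priv`, `-vip`), a small check can catch a forgotten entry before the installer does. The sketch below assumes the naming convention used in this guide (`NODE`, `NODE-priv`, `NODE-vip`). The function name `check_rac_hosts` is hypothetical.

```bash
# check_rac_hosts FILE NODE...
# Report every expected host name (NODE, NODE-priv, NODE-vip) that is not
# defined in FILE, an /etc/hosts-style file. Returns non-zero if any is missing.
check_rac_hosts() {
  local file=$1
  shift
  local node name missing=0
  for node in "$@"; do
    for name in "$node" "$node-priv" "$node-vip"; do
      # Skip comment lines; look for the name among the host-name columns (2..NF).
      if ! awk -v n="$name" '
          /^[[:space:]]*#/ { next }
          { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
          END { exit !found }' "$file"; then
        echo "missing: $name"
        missing=1
      fi
    done
  done
  return "$missing"
}
```

Run it on each node, for example `check_rac_hosts /etc/hosts gentic cellar && echo "all RAC names defined"`. It only checks that the names exist. It does not check that the addresses are correct.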

Note that the virtual IP addresses only need to be defined in the /etc/hosts file (or in DNS) on both nodes. The public virtual IP addresses are configured automatically by Oracle when you run the Oracle Universal Installer, which starts Oracle's Virtual Internet Protocol Configuration Assistant (VIPCA). All virtual IP addresses are activated when the srvctl start nodeapps -n <node_name> command is run. The VIP host name / IP address is the one to configure in the clients' tnsnames.ora file.
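To show what such a client entry looks like, the sketch below prints a tnsnames.ora entry that lists one address per VIP. It also enables client-side load balancing, connect-time failover and Transparent Application Failover (`FAILOVER_MODE`). Several values are assumptions for illustration and do not come from this guide: the service name `AKA` (derived from the SIDs AKA1/AKA2), port 1521, and the TAF `RETRIES`/`DELAY` values. The function name `print_tns_entry` is hypothetical.

```bash
# print_tns_entry TNS_ALIAS SERVICE VIP...
# Print a tnsnames.ora entry with one ADDRESS per node VIP, client-side
# load balancing, connect-time failover and TAF (FAILOVER_MODE).
# Port 1521 and the RETRIES/DELAY values are illustrative assumptions.
print_tns_entry() {
  local tns_alias=$1 service=$2 vip
  shift 2
  echo "$tns_alias ="
  echo "  (DESCRIPTION ="
  for vip in "$@"; do
    echo "    (ADDRESS = (PROTOCOL = TCP)(HOST = $vip)(PORT = 1521))"
  done
  echo "    (LOAD_BALANCE = yes)"
  echo "    (FAILOVER = yes)"
  echo "    (CONNECT_DATA ="
  echo "      (SERVER = DEDICATED)"
  echo "      (SERVICE_NAME = $service)"
  echo "      (FAILOVER_MODE = (TYPE = SELECT)(METHOD = BASIC)(RETRIES = 180)(DELAY = 5))"
  echo "    )"
  echo "  )"
}
```

For example, `print_tns_entry AKA AKA gentic-vip cellar-vip >> $TNS_ADMIN/tnsnames.ora` on a client. The entry uses the VIP names, not the public host names, so that a failed node answers with a TCP reset instead of a long timeout.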

Virtual IP address: A public Internet Protocol (IP) address for each node, used as the Virtual IP address (VIP) for client connections. If a node fails, Oracle Clusterware fails the VIP address over to an available node. This address should be in the /etc/hosts file on every node. The VIP must not be in use at installation time, because it is an IP address that Oracle Clusterware manages. When automatic failover occurs, two things happen:

- The new node sends gratuitous ARP packets ("re-arps the world") announcing a new MAC address for the VIP. Directly connected clients usually see errors on their connections to the old address.

- Subsequent packets sent to the VIP go to the new node, which replies with TCP RST (reset) packets. As a result, clients get errors immediately.

This means that a client receives a TCP reset right away, instead of waiting for a very long TCP/IP timeout (~10 minutes), when it issues SQL to the node that is now down or walks the address list while connecting. For SQL, this surfaces as ORA-3113. For a connect, the client simply tries the next address in tnsnames.ora. Transparent Application Failover (TAF) goes one step further. With TAF configured, sessions are reconnected automatically and ORA-3113 errors can largely be avoided, although uncommitted transactions are still rolled back and must be re-executed.

Public IP address: The public IP address name must be resolvable to the host name. You can register both the public IP and the VIP address in DNS. If you do not have DNS, make sure that both public IP addresses are in the /etc/hosts file on all cluster nodes.

Private IP address: A private IP address for each node serves as the private interconnect address, used only for internode cluster communication. The following must be true for each private IP address:

- It must be separate from the public network.

- It must be accessible on the same network interface on each node.

- It must be connected to a network switch between the nodes for the private network; crossover-cable interconnects are not supported.

The private interconnect is used for internode communication by both Oracle Clusterware and Oracle RAC. The private IP address must be available in each node's /etc/hosts file.

Enterprise Linux Installation and Setup

We use Oracle Enterprise Linux 5.0. It should be a server installation with at least 2 GB of swap, with the firewall and SELinux disabled. For our test environment we installed all packages; Oracle recommends a default server installation.

Disable SELinux (on both Nodes)

/etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

SELINUX=disabled
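The same change can be scripted on both nodes. The sketch below assumes that /etc/sysconfig/selinux is a symlink to /etc/selinux/config, as is usual on EL5, so it edits the symlink target directly. Running `sed -i` on the symlink itself would replace the link with a regular file. The function name `disable_selinux_config` is hypothetical.

```bash
# disable_selinux_config [FILE]
# Set SELINUX=disabled in the SELinux configuration file. The default path
# assumes /etc/sysconfig/selinux is a symlink to /etc/selinux/config (usual
# on EL5); editing the target keeps "sed -i" from replacing the symlink.
disable_selinux_config() {
  local file=${1:-/etc/selinux/config}
  if grep -q '^SELINUX=' "$file"; then
    # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
  else
    echo 'SELINUX=disabled' >> "$file"
  fi
}
```

After `disable_selinux_config`, `setenforce 0` switches the running system to permissive mode. SELinux is only fully disabled after a reboot, and `setenforce` reports an error if SELinux is already disabled.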

Disable Firewall (on both Nodes)

Check to ensure that the firewall option is turned off.

root> /etc/rc.d/init.d/iptables status

root> /etc/rc.d/init.d/iptables stop

root> chkconfig iptables off

Synchronize Time with NTP (on both Nodes)

Ensure that each member node of the cluster is set as closely as possible to the same date and time. Oracle strongly recommends using the Network Time Protocol feature of most operating systems for this purpose, with all nodes using the same reference Network Time Protocol server.

root> /etc/init.d/ntpd status

ntpd (pid 2295) is running...

/etc/ntp.conf

server swisstime.ethz.ch

restrict swisstime.ethz.ch mask 255.255.255.255 nomodify notrap noquery
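`ntpd` running does not yet mean the clock is synchronized. A quick way to confirm it is to look for the peer that `ntpq -p` marks with `*`. The sketch below assumes the usual `ntpq -p` column layout (remote, refid, st, t, when, poll, reach, delay, offset, jitter). The function name `ntp_sync_peer` is hypothetical.

```bash
# ntp_sync_peer
# Read "ntpq -p" output on stdin and print the peer ntpd has selected for
# synchronization (tally code '*') and its offset in milliseconds
# (9th column). Returns non-zero if no peer has been selected yet.
ntp_sync_peer() {
  awk '/^\*/ { peer = substr($1, 2); print peer, $9; found = 1 }
       END { exit !found }'
}
```

Run `ntpq -p | ntp_sync_peer || echo "ntpd not yet synchronized"` on both nodes. Both should report the same reference server with a small offset.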

Kernel Parameters (on both Nodes)

The kernel parameters must be set on every node in the cluster and must survive a reboot, which is why they are placed in /etc/sysctl.conf (read at boot time). This section focuses on preparing both Linux servers for the Oracle RAC 11g installation. This includes verifying that there is enough swap space, setting shared memory and semaphores, setting the maximum number of file handles, setting the IP local port range, setting shell limits for the oracle user, and activating all kernel parameters for the system.

/etc/sysctl.conf

# Additional Parameters added for Oracle 11

# -----------------------------------------

# semaphores: semmsl, semmns, semopm, semmni

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

net.ipv4.ip_local_port_range = 1024 65000

net.core.rmem_default = 4194304

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 262144

# Additional Parameters added for RAC

# -----------------------------------

net.ipv4.ipfrag_high_thresh = 524288

net.ipv4.ipfrag_low_thresh = 393216

net.ipv4.tcp_rmem = 4096 524288 16777216

net.ipv4.tcp_wmem = 4096 524288 16777216

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_sack = 0

net.ipv4.tcp_window_scaling = 1

net.core.optmem_max = 524287

net.core.netdev_max_backlog = 2500

sunrpc.tcp_slot_table_entries = 128

sunrpc.udp_slot_table_entries = 128

net.ipv4.tcp_mem = 16384 16384 16384

fs.file-max = 6553600

Run the following command to apply the settings in /etc/sysctl.conf to the running kernel without a reboot:

root> /sbin/sysctl -p
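To confirm that every value from /etc/sysctl.conf is really active (a typo in a key is easy to miss in the `sysctl -p` output), the live values can be compared with the file. This is a minimal sketch. It assumes simple `key = value` lines and compares multi-value settings with whitespace collapsed to single spaces. The function name `check_sysctl` is hypothetical.

```bash
# check_sysctl FILE
# Compare every "key = value" line of a sysctl.conf-style FILE with the
# value the running kernel reports via "sysctl -n key". Multi-value
# settings are compared with whitespace normalized to single spaces.
# Prints one line per mismatch and returns non-zero if any were found.
check_sysctl() {
  local file=$1 key want have rc=0
  local -a parts
  while IFS='=' read -r key want; do
    key=${key//[[:space:]]/}
    # Skip blank lines, comments and lines without a value.
    [[ -z $key || $key == \#* || -z $want ]] && continue
    read -r -a parts <<<"$want"; want=${parts[*]}
    read -r -a parts <<<"$(sysctl -n "$key" 2>/dev/null)"; have=${parts[*]}
    if [[ $have != "$want" ]]; then
      echo "mismatch: $key expected '$want', kernel has '$have'"
      rc=1
    fi
  done <"$file"
  return "$rc"
}
```

Usage: `check_sysctl /etc/sysctl.conf && echo "all kernel parameters active"`.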

Limits for User Oracle (on both Nodes)

/etc/security/limits.conf

# Limits for User Oracle

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

/etc/pam.d/login

# For Oracle

session required /lib/security/pam_limits.so

session required pam_limits.so
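To compare what limits.conf configures with what a fresh oracle login actually gets, the configured value can be read back from the file. This is a minimal sketch assuming the four-column `domain type item value` layout shown above. The function name `get_limit` is hypothetical.

```bash
# get_limit FILE USER TYPE ITEM
# Print the value configured in a limits.conf-style FILE for the domain
# USER, TYPE (soft or hard) and ITEM (for example nproc or nofile).
# If the combination appears more than once, the last value is printed.
# Returns non-zero if there is no matching entry.
get_limit() {
  awk -v u="$2" -v t="$3" -v i="$4" '
    /^[[:space:]]*#/ { next }
    $1 == u && $2 == t && $3 == i { v = $4; found = 1 }
    END { if (!found) exit 1; print v }' "$1"
}
```

For example, compare `get_limit /etc/security/limits.conf oracle hard nofile` with the output of `su - oracle -c 'ulimit -Hn'`. If the two differ, pam_limits is probably not being applied at login.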

Services to Start (on both Nodes)

Start only the services you need; they can be managed with the chkconfig or service command.

root> chkconfig --list | grep "3:on"

crond 0:off 1:off 2:on 3:on 4:on 5:on 6:off

gpm 0:off 1:off 2:on 3:on 4:on 5:on 6:off

kudzu 0:off 1:off 2:off 3:on 4:on 5:on 6:off

netfs 0:off 1:off 2:off 3:on 4:on 5:on 6:off

network 0:off 1:off 2:on 3:on 4:on 5:on 6:off

nfs 0:off 1:off 2:off 3:on 4:off 5:off 6:off

nfslock 0:off 1:off 2:off 3:on 4:on 5:on 6:off

ntpd 0:off 1:off 2:off 3:on 4:off 5:on 6:off

portmap 0:off 1:off 2:off 3:on 4:on 5:on 6:off

sshd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

syslog 0:off 1:off 2:on 3:on 4:on 5:on 6:off

sysstat 0:off 1:off 2:on 3:on 4:off 5:on 6:off

xfs 0:off 1:off 2:on 3:on 4:on 5:on 6:off

root> service --status-all | grep "is running"

crond (pid 2458) is running...

gpm (pid 2442) is running...

rpc.mountd (pid 2391) is running...

nfsd (pid 2383 2382 2381 2380 2379 2378 2377 2376) is running...

rpc.rquotad (pid 2339) is running...

rpc.statd (pid 2098) is running...

ntpd (pid 2284) is running...

portmap (pid 2072) is running...

rpc.idmapd (pid 2426) is running...

sshd (pid 4191 4189 2257) is running...

syslogd (pid 2046) is running...

klogd (pid 2049) is running...

xfs (pid 2496) is running...
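To spot services that are enabled in runlevel 3 but not on your list of needed services, the `chkconfig --list` output can be filtered. The allowed list in the usage line below is only an example and not a recommendation from this guide. The function name `extra_services` is hypothetical.

```bash
# extra_services ALLOWED...
# Read "chkconfig --list" output on stdin and print each service that is
# switched on in runlevel 3 but is not named in ALLOWED.
extra_services() {
  local allowed=" $* " svc levels
  while read -r svc levels; do
    [[ $levels == *"3:on"* ]] || continue
    [[ $allowed == *" $svc "* ]] || echo "$svc"
  done
}
```

Example: `chkconfig --list | extra_services crond netfs network nfs nfslock ntpd portmap sshd syslog`. Each reported service can then be switched off with `chkconfig <service> off` and stopped with `service <service> stop`.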

Create Accounts

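Create the following groups and the user oracle on all three hosts (Gentic, Cellar and the NFS server Opal). Use the same UID and GIDs everywhere: NFS identifies file owners by numeric ID, so mismatched IDs would show up as wrong ownership on the shared directories.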

root> groupadd -g 500 oinstall

root> groupadd -g 400 dba

root> useradd -u 400 -g 500 -G dba -c "Oracle Owner" -d /home/oracle -s /bin/bash oracle

root> passwd oracle
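Since the numeric IDs must match on all three hosts, it is worth checking the `id oracle` output on each of them. This is a minimal sketch that hard-codes the IDs used above (UID 400, oinstall 500, dba 400). The function name `check_oracle_ids` is hypothetical.

```bash
# check_oracle_ids ID_LINE
# Check that ID_LINE, the output of "id oracle" on one host, shows the IDs
# used in this guide: uid 400, primary group 500 (oinstall) and
# membership in group 400 (dba).
check_oracle_ids() {
  local line=$1
  [[ $line == *"uid=400(oracle)"* ]] &&
    [[ $line == *"gid=500(oinstall)"* ]] &&
    [[ $line == *"groups="*"400(dba)"* ]]
}
```

On each of Gentic, Cellar and Opal, run `check_oracle_ids "$(id oracle)" || echo "oracle IDs differ on $(uname -n)"`.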

$HOME/.bash_profile

#!/bin/bash

# Akadia AG, Fichtenweg 10, CH-3672 Oberdiessbach

# --------------------------------------------------------------------------

# File: .bash_profile

#

# Author: Martin Zahn, Akadia AG, 20.09.2007

#

# Purpose: Configuration file for BASH Shell

#

# Location: $HOME

#

# Certified: Oracle Enterprise Linux 5

# --------------------------------------------------------------------------

# User specific environment and startup programs

TZ=MET; export TZ

PATH=${PATH}:$HOME/bin

ENV=$HOME/.bashrc

BASH_ENV=$HOME/.bashrc

USERNAME=`whoami`

POSTFIX=/usr/local/postfix

# LANG=en_US.UTF-8

LANG=en_US

COLUMNS=130

LINES=45

DISPLAY=192.168.138.11:0.0

export USERNAME ENV COLUMNS LINES TERM PS1 PS2 PATH POSTFIX BASH_ENV LANG DISPLAY

# Setup the correct Terminal-Type

if [ `tty` != "/dev/tty1" ]

then

# TERM=linux

TERM=vt100

else

# TERM=linux

TERM=vt100

fi

# Setup Terminal (test on [ -t 0 ] is used to avoid problems with Oracle Installer)

# -t fd True if file descriptor fd is open and refers to a terminal.

if [ -t 0 ]

then

stty erase "^H" kill "^U" intr "^C" eof "^D"

stty cs8 -parenb -istrip hupcl ixon ixoff tabs

fi

# Set up shell environment

# set -u # error if undefined variable.

trap "echo -e 'logout $LOGNAME'" 0 # what to do on exit.

# Setup ORACLE 11 environment

if [ `uname -n` = "gentic" ]

then

ORACLE_SID=AKA1; export ORACLE_SID

fi

if [ `uname -n` = "cellar" ]

then

ORACLE_SID=AKA2; export ORACLE_SID

fi

ORACLE_HOSTNAME=`uname -n`; export ORACLE_HOSTNAME

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=${ORACLE_BASE}/product/11.1.0; export ORACLE_HOME

ORA_CRS_HOME=${ORACLE_BASE}/crs; export ORA_CRS_HOME

TNS_ADMIN=${ORACLE_HOME}/network/admin; export TNS_ADMIN

ORA_NLS10=${ORACLE_HOME}/nls/data; export ORA_NLS10

CLASSPATH=${ORACLE_HOME}/JRE:${ORACLE_HOME}/jlib:${ORACLE_HOME}/rdbms/jlib

export CLASSPATH

ORACLE_TERM=xterm; export ORACLE_TERM

ORACLE_OWNER=oracle; export ORACLE_OWNER

NLS_LANG=AMERICAN_AMERICA.WE8ISO8859P1; export NLS_LANG

LD_LIBRARY_PATH=${ORACLE_HOME}/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

# Set up the search paths:

PATH=${POSTFIX}/bin:${POSTFIX}/sbin:${POSTFIX}/sendmail:${ORACLE_HOME}/bin

PATH=${PATH}:${ORA_CRS_HOME}/bin:/usr/local/bin:/bin:/sbin:/usr/bin:/usr/sbin

PATH=${PATH}:/usr/local/sbin:/usr/bin/X11:/usr/X11R6/bin

PATH=${PATH}:.

export PATH

# Set date in European-Form

echo -e " "

date '+Date: %d.%m.%y Time: %H:%M:%S'

echo -e " "

uname -a

# Clean shell-history file .sh_history

: > $HOME/.bash_history

# Show last login

[ -f $HOME/.lastlogin ] && cat $HOME/.lastlogin

term=`tty`

echo -e "Last login at `date '+%H:%M, %h %d'` on $term" > $HOME/.lastlogin

echo -e " "

if [ $LOGNAME = "root" ]

then

echo -e "WARNING: YOU ARE SUPERUSER !!!"

echo -e " "

fi

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# Set Shell Limits for user oracle

if [ $USER = "oracle" ]

then

ulimit -u 16384 -n 65536

fi

# Umask new files to rw-r--r--

umask 022

$HOME/.bashrc

alias more=less

alias up='cd ..'

alias kk='ls -la | less'

alias ll='ls -la'

alias ls='ls -F'

alias ps='ps -ef'

alias home='cd $HOME'

alias which='type -path'

alias h='history'

#

# Do not produce core dumps

#

# ulimit -c 0

PS1="`whoami`@\h:\w> "

export PS1

PS2="> "

export PS2
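The two `if` blocks in .bash_profile that choose ORACLE_SID by host name can also be written as a single `case` statement. The version below also warns about an unknown host. It is only a sketch of an alternative and not the author's profile. Stripping a domain part in case `uname -n` returns a fully qualified name is an assumption, and the function name `sid_for_host` is hypothetical.

```bash
# sid_for_host HOST
# Map a RAC node name to its instance SID (AKA1 on gentic, AKA2 on cellar).
# Any domain part is stripped first, in case uname -n returns a FQDN.
sid_for_host() {
  case "${1%%.*}" in
    gentic) echo AKA1 ;;
    cellar) echo AKA2 ;;
    *) echo "sid_for_host: unknown RAC node '$1'" >&2; return 1 ;;
  esac
}
```

In .bash_profile this would be used as `ORACLE_SID=$(sid_for_host "$(uname -n)") && export ORACLE_SID`.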

NFS Configuration

The Oracle Clusterware shared files are the Oracle Cluster Registry (OCR) and the CRS voting disk. The Oracle Installer places them on the shared disk of the NFS server. Besides these two shared files, all Oracle datafiles will also be created on the shared disk.

Create and export Shared Directories on NFS-Server (Opal)

root@opal> mkdir -p /u01/crscfg

root@opal> mkdir -p /u01/votdsk

root@opal> mkdir -p /u01/oradat

root@opal> chown -R oracle:oinstall /u01/crscfg

root@opal> chown -R oracle:oinstall /u01/votdsk

root@opal> chown -R oracle:oinstall /u01/oradat

root@opal> chmod -R 775 /u01/crscfg

root@opal> chmod -R 775 /u01/votdsk

root@opal> chmod -R 775 /u01/oradat
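The same three directories are created with the same ownership and mode on Opal and again on both RAC nodes, so the commands can be wrapped in one function. This is a sketch that assumes the base directory /u01 and the oracle:oinstall owner used above. It skips `chown` when not run as root or when no oracle user exists. The function name `make_shared_dirs` is hypothetical.

```bash
# make_shared_dirs [BASE]
# Create the shared directories used in this guide (crscfg, votdsk, oradat)
# below BASE (default /u01) with mode 775. When run as root and an "oracle"
# user exists, ownership is also set to oracle:oinstall.
make_shared_dirs() {
  local base=${1:-/u01} d
  for d in crscfg votdsk oradat; do
    mkdir -p "$base/$d" || return 1
    if [ "$(id -u)" -eq 0 ] && id oracle >/dev/null 2>&1; then
      chown -R oracle:oinstall "$base/$d" || return 1
    fi
    chmod -R 775 "$base/$d" || return 1
  done
}
```

Run `make_shared_dirs /u01` as root on Opal before editing /etc/exports, and again on Gentic and Cellar to create the mount points.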

/etc/exports

/u01/crscfg *(rw,sync,no_wdelay,insecure_locks,no_root_squash)

/u01/votdsk *(rw,sync,no_wdelay,insecure_locks,no_root_squash)

/u01/oradat *(rw,sync,insecure,root_squash,no_subtree_check)

Export Options:

rw: Allow both read and write requests on this NFS volume. The default is to disallow any request which changes the filesystem. This can also be made explicit by using the ro option.

sync: Reply to requests only after the changes have been committed to stable storage. In current nfs-utils releases, sync is the default, and async must be explicitly requested if needed. To help make system administrators aware of this change, exportfs will issue a warning if neither sync nor async is specified.

no_wdelay: This option has no effect if async is also set. The NFS server will normally delay committing a write request to disc slightly if it suspects that another related write request may be in progress or may arrive soon. This allows multiple write requests to be committed to disc with one operation, which can improve performance. If an NFS server receives mainly small unrelated requests, this behaviour could actually reduce performance, so no_wdelay is available to turn it off. The default can be explicitly requested with the wdelay option.

no_root_squash: root_squash maps requests from uid/gid 0 to the anonymous uid/gid. no_root_squash turns off root squashing, so root on a client keeps root privileges on the export.

insecure: By default (secure), requests must originate from a reserved port (below 1024). The insecure option lifts this restriction, allowing clients whose NFS implementations do not use a reserved port.

no_subtree_check: This option disables subtree checking. Subtree checking adds another level of security, but can be unreliable in some circumstances. If a subdirectory of a filesystem is exported, but the whole filesystem isn't, then whenever an NFS request arrives, the server must check not only that the accessed file is in the appropriate filesystem (which is easy) but also that it is in the exported tree (which is harder). This check is called the subtree_check. In order to perform this check, the server must include some information about the location of the file in the "filehandle" that is given to the client. This can cause problems with accessing files that are renamed while a client has them open (though in many simple cases it will still work). Subtree checking is also used to make sure that files inside directories to which only root has access can only be accessed if the filesystem is exported with no_root_squash, even if the file itself allows more general access.

For more information see: man exports

root@opal> service nfs restart

Shutting down NFS mountd: [ OK ]

Shutting down NFS daemon: [ OK ]

Shutting down NFS quotas: [ OK ]

Shutting down NFS services: [ OK ]

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS daemon: [ OK ]

Starting NFS mountd: [ OK ]

root@opal> exportfs -v

/u01/crscfg (rw,no_root_squash,no_subtree_check,insecure_locks,anonuid=65534,anongid=65534)

/u01/votdsk (rw,no_root_squash,no_subtree_check,insecure_locks,anonuid=65534,anongid=65534)

/u01/oradat (rw,wdelay,insecure,root_squash,no_subtree_check,anonuid=65534,anongid=65534)

Mount Shared Directories on all RAC Nodes (Cellar, Gentic)

root> mkdir -p /u01/crscfg

root> mkdir -p /u01/votdsk

root> mkdir -p /u01/oradat

root> chown -R oracle:oinstall /u01/crscfg

root> chown -R oracle:oinstall /u01/votdsk

root> chown -R oracle:oinstall /u01/oradat

root> chmod -R 775 /u01/crscfg

root> chmod -R 775 /u01/votdsk

root> chmod -R 775 /u01/oradat

/etc/fstab

opal:/u01/crscfg /u01/crscfg nfs rw,bg,hard,nointr,tcp,vers=3,timeo=300,rsize=32768,wsize=32768,actimeo=0 0 0

opal:/u01/votdsk /u01/votdsk nfs rw,bg,hard,nointr,tcp,vers=3,timeo=300,rsize=32768,wsize=32768,actimeo=0 0 0

opal:/u01/oradat /u01/oradat nfs user,tcp,rsize=32768,wsize=32768,hard,intr,noac,nfsvers=3 0 0

Mount Options:

rw: Mount the file system read-write.

bg: If the first NFS mount attempt times out, retry the mount in the background. After a mount operation is backgrounded, all subsequent mounts on the same NFS server will be backgrounded immediately, without first attempting the mount. A missing mount point is treated as a timeout, to allow for nested NFS mounts.

hard: If an NFS file operation has a major timeout, report "server not responding" on the console and continue retrying indefinitely. This is the default.

nointr: Do not allow NFS operations (on hard mounts) to be interrupted while waiting for a response from the server.

tcp: Mount the NFS filesystem using the TCP protocol instead of the default UDP protocol. Many NFS servers only support UDP.

vers: vers is an alternative to nfsvers and is compatible with many other operating systems.

timeo: The value, in tenths of a second, before sending the first retransmission after an RPC timeout. The default depends on whether UDP or TCP is in effect: 7 tenths of a second for UDP and 60 seconds for TCP. After the first timeout, the timeout is doubled after each successive timeout until a maximum of 60 seconds is reached or enough retransmissions have occurred to cause a major timeout. Then, if the filesystem is hard mounted, each new timeout cascade restarts at twice the initial value of the previous cascade, again doubling at each retransmission. The maximum timeout is always 60 seconds. With timeo=300, as used above, the first retransmission is sent after 30 seconds.

rsize: The number of bytes NFS uses when reading files from an NFS server. The rsize is negotiated between the server and client to determine the largest block size that both can support. The value specified by this option is the maximum size that could be used; however, the actual size used may be smaller. Note: Setting this size to a value less than the largest supported block size will adversely affect performance.

wsize: The number of bytes NFS uses when writing files to an NFS server. The wsize is negotiated between the server and client to determine the largest block size that both can support. The value specified by this option is the maximum size that could be used; however, the actual size used may be smaller. Note: Setting this size to a value less than the largest supported block size will adversely affect performance.

actimeo: Using actimeo sets all of acregmin, acregmax, acdirmin, and acdirmax to the same value. There is no default value.

nfsvers: Use an alternate RPC version number to contact the NFS daemon on the remote host. This option is useful for hosts that can run multiple NFS servers. The default value depends on which kernel you are using.

noac: Disable all forms of attribute caching entirely. This exacts a significant performance penalty, but it allows two different NFS clients to get reasonable results when both clients are actively writing to a common export on the server.

root> service nfs restart

Shutting down NFS mountd: [ OK ]

Shutting down NFS daemon: [ OK ]

Shutting down NFS quotas: [ OK ]

Shutting down NFS services: [ OK ]

Starting NFS services: [ OK ]

Starting NFS quotas: [ OK ]

Starting NFS daemon: [ OK ]

Starting NFS mountd: [ OK ]

root> service netfs restart

Unmounting NFS filesystems: [ OK ]

Mounting NFS filesystems: [ OK ]

Mounting other filesystems: [ OK ]
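After `service netfs restart`, it is worth confirming on both nodes that the three directories really are NFS mounts and were mounted `hard`. This is a sketch assuming the /proc/mounts format (device, mount point, type, options). It also assumes that the kernel lists `hard` among the options, as Linux NFS clients normally do. The function name `check_nfs_mounts` is hypothetical.

```bash
# check_nfs_mounts MOUNTS_FILE MOUNTPOINT...
# Confirm that each MOUNTPOINT appears in MOUNTS_FILE (normally /proc/mounts)
# as an NFS file system whose options include "hard".
# Prints one line per problem and returns non-zero if any were found.
check_nfs_mounts() {
  local file=$1 mp rc=0
  shift
  for mp in "$@"; do
    if ! awk -v mp="$mp" '
        $2 == mp && $3 ~ /^nfs/ && ("," $4 ",") ~ /,hard,/ { ok = 1 }
        END { exit !ok }' "$file"; then
      echo "not mounted as hard NFS: $mp"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_nfs_mounts /proc/mounts /u01/crscfg /u01/votdsk /u01/oradat`.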

Enabling SSH User Equivalency

Setup SSH User Equivalency

Before you can install and use Oracle Real Application Clusters, you must configure the secure shell (SSH) for the "oracle" UNIX user account on all cluster nodes. The goal here is to set up user equivalence for the "oracle" UNIX user account. User equivalence enables the "oracle" UNIX user account to access all other nodes in the cluster (running commands and copying files) without the need for a password.

Installing Oracle Clusterware and the Oracle Database software is only performed from one node in a RAC cluster. When running the Oracle Universal Installer (OUI) on that particular node, it will use the ssh and scp commands to run remote commands on and copy files (the Oracle software) to all other nodes within the RAC cluster.

oracle@cellar> mkdir ~/.ssh

oracle@cellar> chmod 700 ~/.ssh

oracle@cellar> /usr/bin/ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is: 47:49:95:36:70:9a:cf:54:8b:96:43:db:39:ce:bd:bf oracle@cellar

oracle@gentic> mkdir ~/.ssh

oracle@gentic> chmod 700 ~/.ssh

oracle@gentic> /usr/bin/ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is: 90:4c:82:48:f1:f1:08:56:dc:e9:c8:98:ca:94:0c:31 oracle@gentic

oracle@cellar> cd ~/.ssh

oracle@cellar> scp id_rsa.pub gentic:/home/oracle/.ssh/authorized_keys

oracle@gentic> cd ~/.ssh

oracle@gentic> scp id_rsa.pub cellar:/home/oracle/.ssh/authorized_keys

oracle@cellar> cat id_rsa.pub >> authorized_keys

oracle@cellar> ssh cellar date

Tue Sep 18 15:15:26 CEST 2007

oracle@cellar> ssh gentic date

Tue Sep 18 15:15:32 CEST 2007

oracle@gentic> cat id_rsa.pub >> authorized_keys

oracle@gentic> ssh cellar date

Tue Sep 18 15:15:26 CEST 2007

oracle@gentic> ssh gentic date

Tue Sep 18 15:15:32 CEST 2007
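The `scp id_rsa.pub <node>:/home/oracle/.ssh/authorized_keys` steps above overwrite any authorized_keys file that already exists on the target, and they depend on the order in which the commands are run. A more forgiving variant appends a key only if it is not yet present and then sets mode 600, because sshd (with its default StrictModes setting) ignores key files that are group- or world-writable. This is a sketch. The function name `add_key` and the temporary file name in the usage line are hypothetical.

```bash
# add_key AUTH_FILE PUBKEY_FILE
# Append the key(s) in PUBKEY_FILE to AUTH_FILE unless an identical line is
# already present, then restrict AUTH_FILE to mode 600.
# PUBKEY_FILE is read twice, so it must be a regular file, not a pipe.
add_key() {
  local auth=$1 pub=$2
  touch "$auth" || return 1
  grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
  chmod 600 "$auth"
}
```

For example, on Gentic: `scp cellar:.ssh/id_rsa.pub /tmp/cellar_id_rsa.pub && add_key ~/.ssh/authorized_keys /tmp/cellar_id_rsa.pub`. Running it twice leaves a single copy of the key.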

Check authorized_keys and known_hosts

oracle@cellar:~/.ssh> cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAv2TjN0KTuvqxr3XBHG2JFecCqZ0aPqGO/8cqBtdg

X9qQuLIP5zGpKGrDcRVULvLncGSifVbDvV89LGFnXiv0FZ+8PHD1snGX5M4YyUMcv362wAaW3g2k

Gp1ky0jQias5CZKtC42f94qt6rU1gm4E6Xh7U2QsLkEC0gPiYlGR2Zey4X01Eb18kM55eeGSFjoo

v58T99MjdHFmxEWWvckhwudYZ4sFYbGxqJgywKtSNT0WI9HAGL3LNLBBjmLbbAnxrI1iDqTGMQIq

zTf+p/E+2K/LrG9oUrN3qdT0EGciD0lcxO6Ke7O/npnCscRoUKPlIChsIN4ruJxikurOMzb37Q==

oracle@gentic

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAwQpfO1b5wSF99b/XRZny/xC9/d2l1Y2oF+YT3Qle

8VumvmNBawCmSucUd9q8Jp6PdgTJLpMO60BwbhsrlqCqAUZ2iCgLBsFvAGjQMrBy1b01yRDGlfi3

pyH1FycuzcyD6S+WSa4CH0A7obAr71CDThzU8LRvGMftXsYN+yKPFYhoXUbw0OC7MQs0BfVKaUo/

CXhMKTYUqPdALm0I0TdlQ2uYpg7iXLIxAVV+qB4jH5RaMWRrFETtp9OErkkACA5O/lb8Fy0gYcDs

M6Sqnv9Nw596vSKn7CXATu8C9HgIbpwdGVc+TwEiQKdMKbgT7z5Ep8LFHrwSm8GtSChR/ILdvw==

oracle@cellar

oracle@cellar:~/.ssh> cat known_hosts

gentic,192.168.138.35 ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEArQ7O8QZDY84

O6NA2FDUlHQiqc1v+wVpweJAL27kHU8rnqQirExCeVEuySgIqvkNcUf5JlpKold8T0ctB

lHsByaeQKYIhnM+roTay5x+2sNQvLKXsiNcGKu0FdGQPXv5lykO4eXNXl1aFx7FVCHHTS

GUQppAkBmpi1jUOFwU2mFWyI9e2j6V7aeXvmnb6pnmtjxkHqBaGfBA6YDvanxxJOn0967

1CNzgT6fVk+3UBH+8uhMs9dXqnrBKUNz9Ts2+uUfPAP+K1uR2nrG2O+D1UwguFYEm/JH4

XHQYgpihvncEt/EDDmhcTodzWfZP6Rn+iWfWkj9hbC8f7khfNRwRiNQ==

cellar,192.168.138.36 ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAuUXTa+JUl7Z

8ovFDczcN8+sAxzrAgfpyjgyDPfdJwWnM70uft8SaOUyf+iWq7kmBi4kBPm3xfYzw2qNT

Nukw6pSAHkxJuKznU9lYPKtyNWW0+ftXtgiqwEob2yFoagMOCUwRQlIEgl3UFWu6Kb2Tn

Di7O08FIXsNgKNe575PH1L6V0lcHoS7KgQt8bev6YqqdjVL25Nvk1TLhEH2toQfkLXL3w

InZEnPolGT8uc+MtUEJ+YkKPpMvh++Hd5BNUeY1AwVIt5RC7usJ70hS4W/sTCn77qz0yC

KGxgWO2POyfB2B5xOy0UYjEbRcLoIq1YOtu1jc208UmJEa/Kj7dQnnw==

oracle@gentic:~/.ssh> cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAwQpfO1b5wSF99b/XRZny/xC9/d2l1Y2oF+YT3Qle

8VumvmNBawCmSucUd9q8Jp6PdgTJLpMO60BwbhsrlqCqAUZ2iCgLBsFvAGjQMrBy1b01yRDGlfi3

pyH1FycuzcyD6S+WSa4CH0A7obAr71CDThzU8LRvGMftXsYN+yKPFYhoXUbw0OC7MQs0BfVKaUo/

CXhMKTYUqPdALm0I0TdlQ2uYpg7iXLIxAVV+qB4jH5RaMWRrFETtp9OErkkACA5O/lb8Fy0gYcDs

M6Sqnv9Nw596vSKn7CXATu8C9HgIbpwdGVc+TwEiQKdMKbgT7z5Ep8LFHrwSm8GtSChR/ILdvw==

oracle@cellar

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAv2TjN0KTuvqxr3XBHG2JFecCqZ0aPqGO/8cqBtdg

X9qQuLIP5zGpKGrDcRVULvLncGSifVbDvV89LGFnXiv0FZ+8PHD1snGX5M4YyUMcv362wAaW3g2k

Gp1ky0jQias5CZKtC42f94qt6rU1gm4E6Xh7U2QsLkEC0gPiYlGR2Zey4X01Eb18kM55eeGSFjoo

v58T99MjdHFmxEWWvckhwudYZ4sFYbGxqJgywKtSNT0WI9HAGL3LNLBBjmLbbAnxrI1iDqTGMQIq

zTf+p/E+2K/LrG9oUrN3qdT0EGciD0lcxO6Ke7O/npnCscRoUKPlIChsIN4ruJxikurOMzb37Q==

oracle@gentic

oracle@gentic:~/.ssh> cat known_hosts

cellar,192.168.138.36 ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEAuUXTa+JUl7Z

8ovFDczcN8+sAxzrAgfpyjgyDPfdJwWnM70uft8SaOUyf+iWq7kmBi4kBPm3xfYzw2qNT

Nukw6pSAHkxJuKznU9lYPKtyNWW0+ftXtgiqwEob2yFoagMOCUwRQlIEgl3UFWu6Kb2Tn

Di7O08FIXsNgKNe575PH1L6V0lcHoS7KgQt8bev6YqqdjVL25Nvk1TLhEH2toQfkLXL3w

InZEnPolGT8uc+MtUEJ+YkKPpMvh++Hd5BNUeY1AwVIt5RC7usJ70hS4W/sTCn77qz0yC

KGxgWO2POyfB2B5xOy0UYjEbRcLoIq1YOtu1jc208UmJEa/Kj7dQnnw==

gentic,192.168.138.35 ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEArQ7O8QZDY84

O6NA2FDUlHQiqc1v+wVpweJAL27kHU8rnqQirExCeVEuySgIqvkNcUf5JlpKold8T0ctB

lHsByaeQKYIhnM+roTay5x+2sNQvLKXsiNcGKu0FdGQPXv5lykO4eXNXl1aFx7FVCHHTS

GUQppAkBmpi1jUOFwU2mFWyI9e2j6V7aeXvmnb6pnmtjxkHqBaGfBA6YDvanxxJOn0967

1CNzgT6fVk+3UBH+8uhMs9dXqnrBKUNz9Ts2+uUfPAP+K1uR2nrG2O+D1UwguFYEm/JH4

XHQYgpihvncEt/EDDmhcTodzWfZP6Rn+iWfWkj9hbC8f7khfNRwRiNQ==

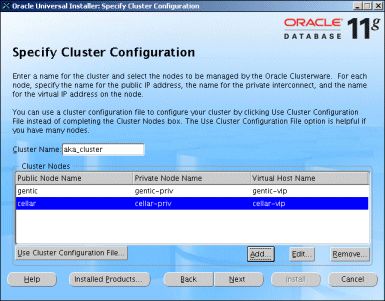

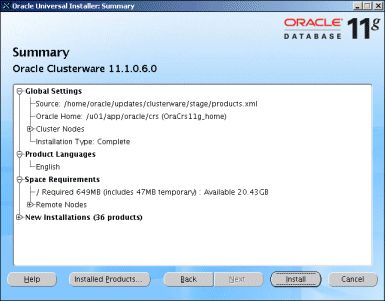

Install Oracle Clusterware

Perform the following installation procedures on only one node in the cluster! The Oracle Clusterware software will be installed to all other nodes in the cluster by the Oracle Universal Installer.

So, what exactly is the Oracle Clusterware responsible for? It contains all of the cluster and database configuration metadata along with several system management features for RAC. It allows the DBA to register and invite an Oracle instance (or instances) to the cluster. During normal operation, Oracle Clusterware will send messages (via a special ping operation) to all nodes configured in the cluster, often called the "heartbeat". If the heartbeat fails for any of the nodes, it checks with the Oracle Clusterware configuration files (on the shared disk) to distinguish between a real node failure and a network failure.

Create CRS Home, Cluster Registry and Voting Disk

Create CRS Home on both RAC Nodes

root> mkdir -p /u01/app/oracle/crs

root> chown -R oracle:oinstall /u01/app

root> chmod -R 775 /u01/app


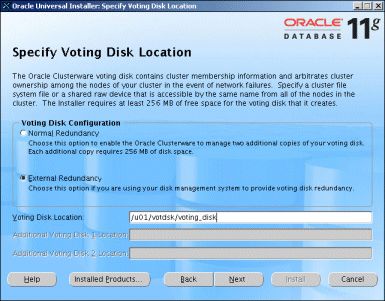

Create Cluster Registry and Voting Disk

oracle> touch /u01/crscfg/crs_registry

oracle> touch /u01/votdsk/voting_disk

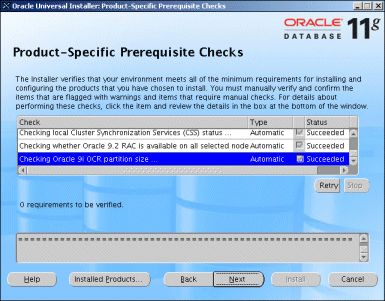

Check the Prerequisites

oracle> unzip linux_11gR1_clusterware.zip

oracle> cd clusterware

Before installing the clusterware, check that the prerequisites have been met by running the runcluvfy.sh utility in the clusterware root directory.

oracle> ./runcluvfy.sh stage -pre crsinst -n gentic,cellar -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "gentic"

Destination Node Reachable?

------------------------------------ ------------------------

gentic yes

cellar yes

Result: Node reachability check passed from node "gentic".

Checking user equivalence...

Check: User equivalence for user "oracle"

Node Name Comment

------------------------------------ ------------------------

cellar passed

gentic passed

Result: User equivalence check passed for user "oracle".

Checking administrative privileges...

Check: Existence of user "oracle"

Node Name User Exists Comment

------------ ------------------------ ------------------------

cellar yes passed

gentic yes passed

Result: User existence check passed for "oracle".

Check: Existence of group "oinstall"

Node Name Status Group ID

------------ ------------------------ ------------------------

cellar exists 500

gentic exists 500

Result: Group existence check passed for "oinstall".

Check: Membership of user "oracle" in group "oinstall" [as Primary]

Node Name User Exists Group Exists User in Group Primary Comment

---------------- ------------ ------------ ------------ ------------ ------------

gentic yes yes yes yes passed

cellar yes yes yes yes passed

Result: Membership check for user "oracle" in group "oinstall" [as Primary] passed.

Administrative privileges check passed.

Checking node connectivity...

Interface information for node "cellar"

Interface Name IP Address Subnet Subnet Gateway Default Gateway Hardware Address

---------------- ------------ ------------ ------------ ------------ ------------

eth0 192.168.138.36 192.168.138.0 0.0.0.0 192.168.138.1 00:30:48:28:E7:36

eth1 192.168.137.36 192.168.137.0 0.0.0.0 192.168.138.1 00:30:48:28:E7:37

Interface information for node "gentic"

Interface Name IP Address Subnet Subnet Gateway Default Gateway Hardware Address

---------------- ------------ ------------ ------------ ------------ ------------

eth0 192.168.138.35 192.168.138.0 0.0.0.0 192.168.138.1 00:30:48:29:BD:E8

eth1 192.168.137.35 192.168.137.0 0.0.0.0 192.168.138.1 00:30:48:29:BD:E9

Check: Node connectivity of subnet "192.168.138.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

cellar:eth0 gentic:eth0 yes

Result: Node connectivity check passed for subnet "192.168.138.0" with node(s) cellar,gentic.

Check: Node connectivity of subnet "192.168.137.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

cellar:eth1 gentic:eth1 yes

Result: Node connectivity check passed for subnet "192.168.137.0" with node(s) cellar,gentic.

Interfaces found on subnet "192.168.138.0" that are likely candidates for a private interconnect:

cellar eth0:192.168.138.36

gentic eth0:192.168.138.35

Interfaces found on subnet "192.168.137.0" that are likely candidates for a private interconnect:

cellar eth1:192.168.137.36

gentic eth1:192.168.137.35

WARNING:

Could not find a suitable set of interfaces for VIPs.

Result: Node connectivity check passed.

Checking system requirements for 'crs'...

Check: Total memory

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

gentic 1.98GB (2075156KB) 1GB (1048576KB) passed

cellar 1010.61MB (1034860KB) 1GB (1048576KB) failed

Result: Total memory check failed.

Check: Free disk space in "/tmp" dir

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

gentic 20.47GB (21460692KB) 400MB (409600KB) passed

cellar 19.1GB (20027204KB) 400MB (409600KB) passed

Result: Free disk space check passed.

Check: Swap space

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

gentic 2.44GB (2555896KB) 1.5GB (1572864KB) passed

cellar 2.44GB (2562356KB) 1.5GB (1572864KB) passed

Result: Swap space check passed.

Check: System architecture

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

gentic i686 i686 passed

cellar i686 i686 passed

Result: System architecture check passed.

Check: Kernel version

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

gentic 2.6.18-8.el5PAE 2.6.9 passed

cellar 2.6.18-8.el5PAE 2.6.9 passed

Result: Kernel version check passed.

Check: Package existence for "make-3.81"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic make-3.81-1.1 passed

cellar make-3.81-1.1 passed

Result: Package existence check passed for "make-3.81".

Check: Package existence for "binutils-2.17.50.0.6"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic binutils-2.17.50.0.6-2.el5 passed

cellar binutils-2.17.50.0.6-2.el5 passed

Result: Package existence check passed for "binutils-2.17.50.0.6".

Check: Package existence for "gcc-4.1.1"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic gcc-4.1.1-52.el5 passed

cellar gcc-4.1.1-52.el5 passed

Result: Package existence check passed for "gcc-4.1.1".

Check: Package existence for "libaio-0.3.106"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic libaio-0.3.106-3.2 passed

cellar libaio-0.3.106-3.2 passed

Result: Package existence check passed for "libaio-0.3.106".

Check: Package existence for "libaio-devel-0.3.106"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic libaio-devel-0.3.106-3.2 passed

cellar libaio-devel-0.3.106-3.2 passed

Result: Package existence check passed for "libaio-devel-0.3.106".

Check: Package existence for "libstdc++-4.1.1"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic libstdc++-4.1.1-52.el5 passed

cellar libstdc++-4.1.1-52.el5 passed

Result: Package existence check passed for "libstdc++-4.1.1".

Check: Package existence for "elfutils-libelf-devel-0.125"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic elfutils-libelf-devel-0.125-3.el5 passed

cellar elfutils-libelf-devel-0.125-3.el5 passed

Result: Package existence check passed for "elfutils-libelf-devel-0.125".

Check: Package existence for "sysstat-7.0.0"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic sysstat-7.0.0-3.el5 passed

cellar sysstat-7.0.0-3.el5 passed

Result: Package existence check passed for "sysstat-7.0.0".

Check: Package existence for "compat-libstdc++-33-3.2.3"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic compat-libstdc++-33-3.2.3-61 passed

cellar compat-libstdc++-33-3.2.3-61 passed

Result: Package existence check passed for "compat-libstdc++-33-3.2.3".

Check: Package existence for "libgcc-4.1.1"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic libgcc-4.1.1-52.el5 passed

cellar libgcc-4.1.1-52.el5 passed

Result: Package existence check passed for "libgcc-4.1.1".

Check: Package existence for "libstdc++-devel-4.1.1"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic libstdc++-devel-4.1.1-52.el5 passed

cellar libstdc++-devel-4.1.1-52.el5 passed

Result: Package existence check passed for "libstdc++-devel-4.1.1".

Check: Package existence for "unixODBC-2.2.11"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic unixODBC-2.2.11-7.1 passed

cellar unixODBC-2.2.11-7.1 passed

Result: Package existence check passed for "unixODBC-2.2.11".

Check: Package existence for "unixODBC-devel-2.2.11"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic unixODBC-devel-2.2.11-7.1 passed

cellar unixODBC-devel-2.2.11-7.1 passed

Result: Package existence check passed for "unixODBC-devel-2.2.11".

Check: Package existence for "glibc-2.5-12"

Node Name Status Comment

------------------------------ ------------------------------ ----------------

gentic glibc-2.5-12 passed

cellar glibc-2.5-12 passed

Result: Package existence check passed for "glibc-2.5-12".

Check: Group existence for "dba"

Node Name Status Comment

------------ ------------------------ ------------------------

gentic exists passed

cellar exists passed

Result: Group existence check passed for "dba".

Check: Group existence for "oinstall"

Node Name Status Comment

------------ ------------------------ ------------------------

gentic exists passed

cellar exists passed

Result: Group existence check passed for "oinstall".

Check: User existence for "nobody"

Node Name Status Comment

------------ ------------------------ ------------------------

gentic exists passed

cellar exists passed

Result: User existence check passed for "nobody".

System requirement failed for 'crs'

Pre-check for cluster services setup was unsuccessful.

Checks did not pass for the following node(s): cellar

The failed memory check on Cellar (1010.61MB available, 1GB required) can be ignored in this test environment.
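In a long cluvfy report it is easy to overlook a failed check. The following shell function is a minimal sketch for filtering a saved report. It assumes the layout shown above (a `Check:` line followed by one row per node whose last column is `passed` or `failed`); the function name `cluvfy_failed` and the log file name are hypothetical.

```shell
# cluvfy_failed (hypothetical helper): print "<check>: <node>" for every
# node row whose last column is "failed" in a saved cluvfy report.
# Reads the files given as arguments, or standard input if there are none.
cluvfy_failed() {
  awk '
    /^Check: / { check = substr($0, 8) }      # remember the current check title
    $NF == "failed" { print check ": " $1 }   # node name is the first column
  ' "$@"
}
```

For the report above, `cluvfy_failed cluvfy_crs.log` would print `Total memory: cellar`. Summary lines such as `Result: Total memory check failed.` end in `failed.` and are therefore not reported twice.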

Install Clusterware

Make sure that the X11 server is started and reachable.

oracle> echo $DISPLAY

192.168.138.11:0.0

Load the SSH Keys into memory

oracle> exec /usr/bin/ssh-agent $SHELL

oracle> /usr/bin/ssh-add

Identity added: /home/oracle/.ssh/id_rsa (/home/oracle/.ssh/id_rsa)

Start the installer and make sure that no errors are shown in the installer window.

oracle> ./runInstaller

Starting Oracle Universal Installer...

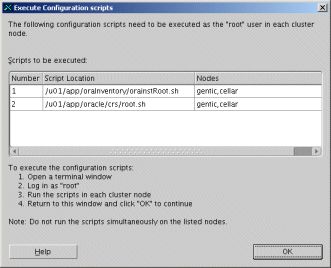

Run orainstRoot.sh as root on both nodes when the installer asks for it. On the first node:

oracle> cd /u01/app/oraInventory

oracle> su

root> ./orainstRoot.sh

Changing permissions of /u01/app/oraInventory to 770.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete

On the second node:

oracle> cd /u01/app/oraInventory

oracle> su

root> ./orainstRoot.sh

Changing permissions of /u01/app/oraInventory to 770.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete

Then run root.sh from the Clusterware home, first on Gentic:

root> cd /u01/app/oracle/crs

root> ./root.sh

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up Network socket directories

Oracle Cluster Registry configuration upgraded successfully

The directory '/u01/app/oracle' is not owned by root. Changing owner to root

The directory '/u01/app' is not owned by root. Changing owner to root

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: gentic gentic-priv gentic

node 2: cellar cellar-priv cellar

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Now formatting voting device: /u01/votdsk/voting_disk

Format of 1 voting devices complete.

Date: 19.09.07 Time: 10:03:48

Linux gentic 2.6.18-8.el5PAE #1 SMP Tue Jun 5 23:39:57 EDT 2007 i686 i686 i386 GNU/Linux

Last login at 10:03, Sep 19 on /dev/pts/1

Startup will be queued to init within 30 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

Cluster Synchronization Services is active on these nodes.

gentic

Cluster Synchronization Services is inactive on these nodes.

cellar

Local node checking complete. Run root.sh on remaining nodes to start CRS daemons.

When root.sh has finished on Gentic, run it on Cellar:

root> cd /u01/app/oracle/crs

root> ./root.sh

WARNING: directory '/u01/app/oracle' is not owned by root

WARNING: directory '/u01/app' is not owned by root

Checking to see if Oracle CRS stack is already configured

/etc/oracle does not exist. Creating it now.

Setting the permissions on OCR backup directory

Setting up Network socket directories

Oracle Cluster Registry configuration upgraded successfully

The directory '/u01/app/oracle' is not owned by root. Changing owner to root

The directory '/u01/app' is not owned by root. Changing owner to root

clscfg: EXISTING configuration version 4 detected.

clscfg: version 4 is 11 Release 1.

Successfully accumulated necessary OCR keys.

Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.

node <nodenumber>: <nodename> <private interconnect name> <hostname>

node 1: gentic gentic-priv gentic

node 2: cellar cellar-priv cellar

clscfg: Arguments check out successfully.

NO KEYS WERE WRITTEN. Supply -force parameter to override.

-force is destructive and will destroy any previous cluster configuration.

Oracle Cluster Registry for cluster has already been initialized

Date: 19.09.07 Time: 10:10:11

Linux cellar 2.6.18-8.el5PAE #1 SMP Tue Jun 5 23:39:57 EDT 2007 i686 i686 i386 GNU/Linux

Last login at 10:10, Sep 19 on /dev/pts/0

Startup will be queued to init within 30 seconds.

Adding daemons to inittab

Expecting the CRS daemons to be up within 600 seconds.

Cluster Synchronization Services is active on these nodes.

gentic

cellar

Cluster Synchronization Services is active on all the nodes.

Waiting for the Oracle CRSD and EVMD to start

Oracle CRS stack installed and running under init(1M)

Running vipca(silent) for configuring nodeapps

Creating VIP application resource on (2) nodes...

Creating GSD application resource on (2) nodes...

Creating ONS application resource on (2) nodes...

Starting VIP application resource on (2) nodes...

Starting GSD application resource on (2) nodes...

Starting ONS application resource on (2) nodes...

Done.

Go back to the Installer.

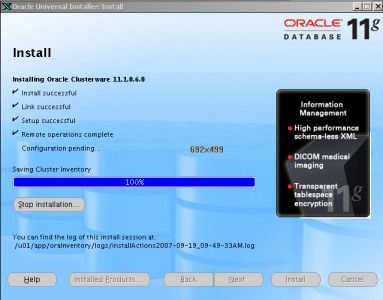

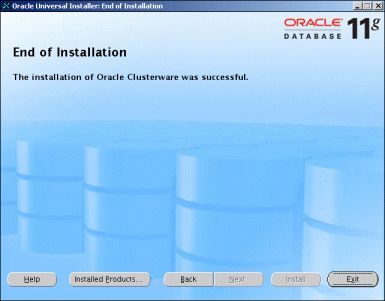

The Clusterware installation is now complete. If you reboot the nodes, you will see that Clusterware is started automatically by the init script /etc/init.d/init.crs (linked as S96init.crs in the runlevel directories) and that many processes are up and running.
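After a reboot, a quick way to confirm that the core Clusterware daemons came up is to look for them in the process list. The function below is a minimal sketch that assumes the Oracle Clusterware 10g/11gR1 daemon names crsd.bin, ocssd.bin and evmd.bin (compare with your own `ps -ef` output); the function name is hypothetical. Oracle's own `crsctl check crs` remains the authoritative health check.

```shell
# crs_daemons_missing (hypothetical helper): read a "ps -ef" listing on stdin,
# print each expected Clusterware daemon that does not appear in it, and
# return 1 if at least one is missing.
# Assumption: 10g/11gR1 daemon names crsd.bin, ocssd.bin and evmd.bin.
crs_daemons_missing() {
  listing=$(cat)
  missing=0
  for daemon in crsd.bin ocssd.bin evmd.bin; do
    if ! printf '%s\n' "$listing" | grep -qF "$daemon"; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage on a node: `ps -ef | crs_daemons_missing && echo "CRS daemons are running"`.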

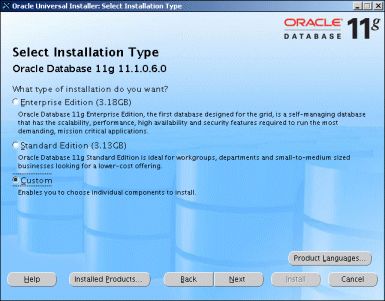

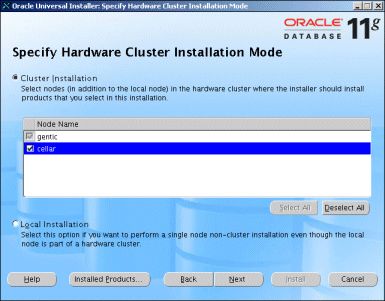

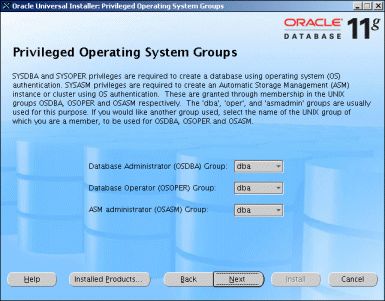

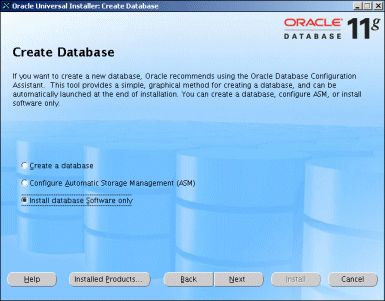

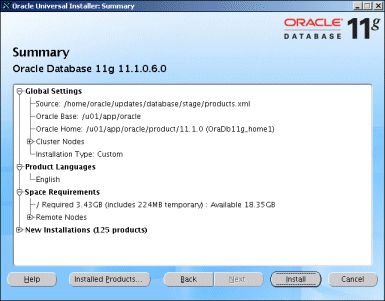

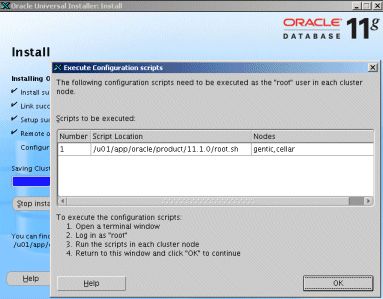

Install Oracle Database Software

Perform the following installation on only one node in the cluster! The Oracle Universal Installer installs the Oracle database software on all other nodes in the cluster.

Do not use the "Create Database" option when installing the software. Instead, create the database with the Database Configuration Assistant (DBCA) after the installation.

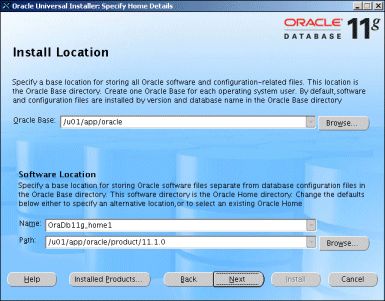

Create Oracle Home

Create the Oracle Home on both RAC nodes; run the following commands as root on each node:

root> mkdir -p /u01/app/oracle/product/11.1.0

root> chown -R oracle:oinstall /u01/app/oracle/product

root> chmod -R 775 /u01/app/oracle/product

root> mkdir -p /u01/app/oracle/product/11.1.0

root> chown -R oracle:oinstall /u01/app/oracle/product

root> chmod -R 775 /u01/app/oracle/product

Install Oracle Database Software

oracle> unzip linux_11gR1_database.zip

oracle> cd database

Make sure that the X11 server is started and reachable.

oracle> echo $DISPLAY

192.168.138.11:0.0

Load the SSH Keys into memory

oracle> exec /usr/bin/ssh-agent $SHELL

oracle> /usr/bin/ssh-add

Identity added: /home/oracle/.ssh/id_rsa (/home/oracle/.ssh/id_rsa)

Start the installer and make sure that no errors are shown in the installer window.

oracle> ./runInstaller

Run root.sh as root on both nodes when the installer asks for it:

oracle> cd /u01/app/oracle/product/11.1.0

oracle> su

root> ./root.sh

oracle> cd /u01/app/oracle/product/11.1.0

oracle> su

root> ./root.sh

Create Listener Configuration

The TNS listener only needs to be created on one node in the cluster; the changes are made on and replicated to all nodes. On one of the nodes, start NETCA, create a new TNS listener and also configure the node for local access.

oracle> netca

- Choose Listener Configuration (listener.ora), Add, Listener Name: LISTENER

- Choose Naming Methods Configuration (sqlnet.ora), Local Naming

- Local Net Service Name Configuration (tnsnames.ora), Add, Service Name=AKA, TCP, Hostname=gentic, Port=1521

Note that the configuration files are stored in $TNS_ADMIN. We noticed that $TNS_ADMIN has to point to $ORACLE_HOME/network/admin; if it points to another location, the listener does not start successfully!

oracle@gentic> echo $TNS_ADMIN

/u01/app/oracle/product/11.1.0/network/admin
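This requirement can be checked before starting the listener, for example from the oracle user's profile. A minimal sketch; the function name `check_tns_admin` is hypothetical, and a trailing slash on either variable is tolerated.

```shell
# check_tns_admin (hypothetical helper): succeed if TNS_ADMIN points to
# $ORACLE_HOME/network/admin, otherwise print a warning and return 1.
check_tns_admin() {
  expected="${ORACLE_HOME%/}/network/admin"
  if [ -n "$TNS_ADMIN" ] && [ "${TNS_ADMIN%/}" = "$expected" ]; then
    echo "TNS_ADMIN OK: $TNS_ADMIN"
  else
    echo "TNS_ADMIN is '${TNS_ADMIN:-unset}', expected '$expected'" >&2
    return 1
  fi
}
```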

# Akadia AG, Fichtenweg 10, CH-3672 Oberdiessbach listener.ora

# --------------------------------------------------------------------------

# File: listener.ora

#

# Author: Martin Zahn, Akadia AG, 30.09.2007

#

# Purpose: Configuration file for Net Listener (RAC Configuration)

#

# Location: $TNS_ADMIN

#

# Certified: Oracle 11.1.0.6.0 on Enterprise Linux 5

# --------------------------------------------------------------------------

LISTENER_GENTIC =

(DESCRIPTION_LIST =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = gentic-vip)(PORT = 1521)(IP = FIRST))

(ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.138.35)(PORT = 1521)(IP = FIRST))

)

)

SID_LIST_LISTENER_GENTIC =

(SID_LIST =

(SID_DESC =

(SID_NAME = PLSExtProc)

(ORACLE_HOME = /u01/app/oracle/product/11.1.0)

(PROGRAM = extproc)

)

)

# Akadia AG, Fichtenweg 10, CH-3672 Oberdiessbach listener.ora

# --------------------------------------------------------------------------

# File: listener.ora

#

# Author: Martin Zahn, Akadia AG, 30.09.2007

#

# Purpose: Configuration file for Net Listener (RAC Configuration)

#

# Location: $TNS_ADMIN

#

# Certified: Oracle 11.1.0.6.0 on Enterprise Linux 5

# --------------------------------------------------------------------------

LISTENER_CELLAR =

(DESCRIPTION_LIST =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = cellar-vip)(PORT = 1521)(IP = FIRST))

(ADDRESS = (PROTOCOL = TCP)(HOST = 192.168.138.36)(PORT = 1521)(IP = FIRST))

)

)

SID_LIST_LISTENER_CELLAR =

(SID_LIST =

(SID_DESC =

(SID_NAME = PLSExtProc)

(ORACLE_HOME = /u01/app/oracle/product/11.1.0)

(PROGRAM = extproc)

)

)

# Akadia AG, Fichtenweg 10, CH-3672 Oberdiessbach tnsnames.ora

# --------------------------------------------------------------------------

# File: tnsnames.ora

#

# Author: Martin Zahn, Akadia AG, 30.09.2007

#

# Purpose: Configuration File for all Net Clients (RAC Configuration)

#

# Location: $TNS_ADMIN

#

# Certified: Oracle 11.1.0.6.0 on Enterprise Linux 5

# --------------------------------------------------------------------------

AKA =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = gentic-vip)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = cellar-vip)(PORT = 1521))

(LOAD_BALANCE = yes)

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = AKA.WORLD)

(FAILOVER_MODE =

(TYPE = SELECT)

(METHOD = BASIC)

(RETRIES = 180)

(DELAY = 5)

)

)

)

AKA2 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = cellar-vip)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = AKA.WORLD)

(INSTANCE_NAME = AKA2)

)

)

AKA1 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = gentic-vip)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = AKA.WORLD)

(INSTANCE_NAME = AKA1)

)

)

LISTENERS_AKA =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = TCP)(HOST = gentic-vip)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = cellar-vip)(PORT = 1521))

)

EXTPROC_CONNECTION_DATA =

(DESCRIPTION =

(ADDRESS_LIST =

(ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC0))

)

(CONNECT_DATA =

(SID = PLSExtProc)

(PRESENTATION = RO)

)

)

# Akadia AG, Fichtenweg 10, CH-3672 Oberdiessbach sqlnet.ora

# --------------------------------------------------------------------------

# File: sqlnet.ora

#

# Author: Martin Zahn, Akadia AG, 30.09.2007

#

# Purpose: Configuration File for all Net Clients (RAC Configuration)

#

# Location: $TNS_ADMIN

#

# Certified: Oracle 11.1.0.6.0 on Enterprise Linux 5

# --------------------------------------------------------------------------

NAMES.DIRECTORY_PATH= (TNSNAMES)

oracle@gentic> srvctl stop listener -n gentic -l LISTENER_GENTIC

oracle@gentic> srvctl start listener -n gentic -l LISTENER_GENTIC

oracle@cellar> srvctl stop listener -n cellar -l LISTENER_CELLAR

oracle@cellar> srvctl start listener -n cellar -l LISTENER_CELLAR
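Because each listener name is derived from its node name, the stop/start pair can be generated per node so that `-n` and `-l` always belong together. A minimal sketch; the function name and the `RUN` switch are hypothetical, and by default the commands are only printed.

```shell
# restart_listener (hypothetical helper): print, or execute when RUN=1, the
# srvctl commands that restart the listener LISTENER_<NODE> on node NODE.
restart_listener() {
  node=$1
  listener="LISTENER_$(printf '%s' "$node" | tr '[:lower:]' '[:upper:]')"
  for action in stop start; do
    if [ "${RUN:-0}" = 1 ]; then
      srvctl "$action" listener -n "$node" -l "$listener" || return 1
    else
      echo "srvctl $action listener -n $node -l $listener"
    fi
  done
}
```

`restart_listener cellar` prints the stop and start commands for LISTENER_CELLAR on cellar; running it with `RUN=1` as the oracle user executes them instead.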

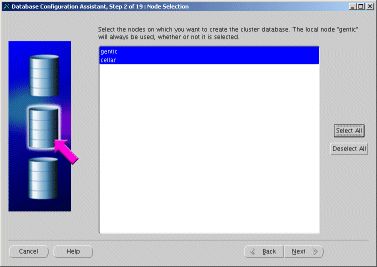

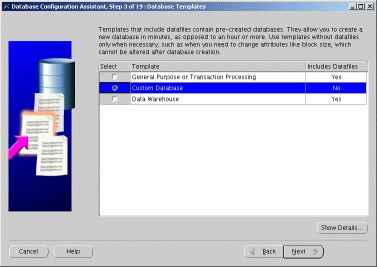

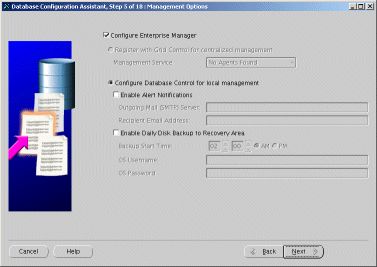

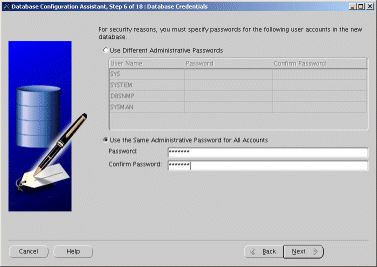

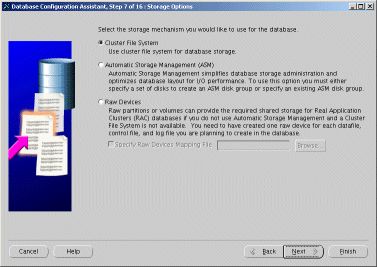

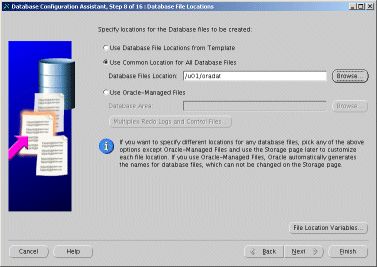

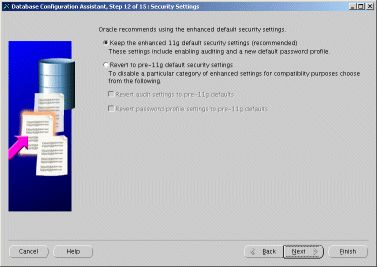

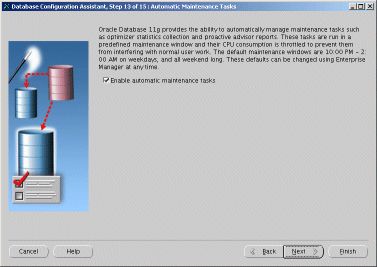

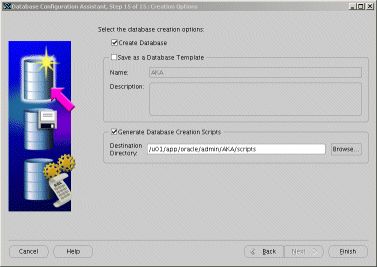

Create the Cluster Database with DBCA

For a clustered database it is easier to create the database directly with DBCA, and Oracle strongly recommends using DBCA to create it. The scripts that DBCA can generate are for reference purposes only; they can be found in /u01/app/oracle/admin/AKA/scripts. We tried to create the cluster database using these scripts, but without success.

The following table shows the location of most of the required database files and whether each one resides on shared storage.

| File | Location | Shared |
|---|---|---|
| audit_file_dest | /u01/app/oracle/admin/AKA/adump | No |
| background_dump_dest | /u01/app/oracle/diag/rdbms/aka/AKA1/trace, /u01/app/oracle/diag/rdbms/aka/AKA2/trace | No |
| core_dump_dest | /u01/app/oracle/diag/rdbms/aka/AKA1/cdump, /u01/app/oracle/diag/rdbms/aka/AKA2/cdump | No |
| user_dump_dest | /u01/app/oracle/diag/rdbms/aka/AKA1/trace, /u01/app/oracle/diag/rdbms/aka/AKA2/trace | No |
| dg_broker_config_file1, dg_broker_config_file2 | /u01/app/oracle/product/11.1.0/dbs/dr1AKA.dat, /u01/app/oracle/product/11.1.0/dbs/dr2AKA.dat | No |
| diagnostic_dest | /u01/app/oracle | No |
| Alert.log (log.xml) | /u01/app/oracle/diag/rdbms/aka/AKA1/alert | No |
| LISTENER.ORA | /u01/app/oracle/product/11.1.0/network/admin | No |
| TNSNAMES.ORA | /u01/app/oracle/product/11.1.0/network/admin | No |
| SQLNET.ORA | /u01/app/oracle/product/11.1.0/network/admin | No |
| spfile | /u01/oradat/AKA/spfileAKA.ora | Yes |
| INIT.ORA | /u01/app/oracle/product/11.1.0/dbs/initAKA1.ora, /u01/app/oracle/product/11.1.0/dbs/initAKA2.ora, linked to spfile /u01/oradat/AKA/spfileAKA.ora | Yes |
| control_files | /u01/oradat/AKA/control01.ctl, /u01/oradat/AKA/control02.ctl, /u01/oradat/AKA/control03.ctl | Yes |
| SYSTEM Tablespace | /u01/oradat/AKA/system01.dbf | Yes |
| SYSAUX Tablespace | /u01/oradat/AKA/sysaux01.dbf | Yes |
| TEMP Tablespace | /u01/oradat/AKA/temp01.dbf | Yes |
| UNDO Tablespace | /u01/oradat/AKA/undotbs01.dbf, /u01/oradat/AKA/undotbs02.dbf | Yes |
| Redolog Files | /u01/oradat/AKA/redo01.log, /u01/oradat/AKA/redo02.log, /u01/oradat/AKA/redo03.log, /u01/oradat/AKA/redo04.log | Yes |
| USERS Tablespace | /u01/oradat/AKA/users01.dbf | Yes |
| Cluster Registry File | /u01/crscfg/crs_registry | Yes |
| Voting Disk | /u01/votdsk/voting_disk | Yes |
| Enterprise Manager Console | https://gentic:1158/em | Yes |

Now, create this Database using DBCA.

oracle@gentic> dbca

Check the Created Database

The srvctl utility shows the current configuration and status of the RAC database.

Display configuration for the AKA Cluster Database

oracle@gentic> srvctl config database -d AKA

gentic AKA1 /u01/app/oracle/product/11.1.0

cellar AKA2 /u01/app/oracle/product/11.1.0

Status of all instances and services

oracle@gentic> srvctl status database -d AKA

Instance AKA1 is running on node gentic

Instance AKA2 is running on node cellar
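In monitoring scripts, this output can be checked for instances that are down. A minimal sketch that relies only on the message format srvctl prints here and later in this article (`Instance AKA2 is not running on node cellar`); the function name is hypothetical.

```shell
# instances_down (hypothetical helper): read "srvctl status database" output
# on stdin, print the name of every instance reported as not running, and
# return 1 if there is at least one.
instances_down() {
  awk '
    /^Instance .* is not running on node / { print $2; down = 1 }
    END { exit down ? 1 : 0 }
  '
}
```

Usage: `srvctl status database -d AKA | instances_down || echo "at least one instance is down"`.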

Status of node applications on a particular node

oracle@gentic> srvctl status nodeapps -n gentic

VIP is running on node: gentic

GSD is running on node: gentic

Listener is running on node: gentic

ONS daemon is running on node: gentic

Display the configuration for node applications - (VIP, GSD, ONS, Listener)

oracle@gentic> srvctl config nodeapps -n gentic -a -g -s -l

VIP exists.: /gentic-vip/192.168.138.130/255.255.255.0/eth0

GSD exists.

ONS daemon exists.

Listener exists.
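The VIP line packs name, address, netmask and interface into one slash-separated string. To cross-check it against the /etc/hosts entries (for example gentic-vip = 192.168.138.130), it can be split with a few lines of shell. A minimal sketch based only on the output format shown above; the function name is hypothetical.

```shell
# parse_vip (hypothetical helper): read "srvctl config nodeapps -a" output on
# stdin and print the fields of the first line of the form
# "VIP exists.: /name/address/netmask/interface".
parse_vip() {
  line=$(grep '^VIP exists' | head -n 1)
  spec=${line#*: }        # strip the "VIP exists.: " prefix
  # The leading "/" yields an empty first field, which is read into "_".
  IFS=/ read -r _ name addr mask iface <<EOF
$spec
EOF
  echo "name=$name address=$addr netmask=$mask interface=$iface"
}
```

For the output above, `srvctl config nodeapps -n gentic -a | parse_vip` would print `name=gentic-vip address=192.168.138.130 netmask=255.255.255.0 interface=eth0`.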

All running instances in the cluster

sqlplus system/manager@AKA1

SELECT inst_id,

instance_number,

instance_name,

parallel,

status,

database_status,

active_state,

host_name host

FROM gv$instance

ORDER BY inst_id;

INST_ID INSTANCE_NUMBER INSTANCE_NAME PAR STATUS DATABASE_STATUS ACTIVE_ST HOST

---------- --------------- ---------------- --- ------------ ----------------- --------- --------

1 1 AKA1 YES OPEN ACTIVE NORMAL gentic

2 2 AKA2 YES OPEN ACTIVE NORMAL cellar

SELECT name FROM v$datafile

UNION

SELECT member FROM v$logfile

UNION

SELECT name FROM v$controlfile

UNION

SELECT name FROM v$tempfile;

NAME

--------------------------------------

/u01/oradat/AKA/control01.ctl

/u01/oradat/AKA/control02.ctl

/u01/oradat/AKA/control03.ctl

/u01/oradat/AKA/redo01.log

/u01/oradat/AKA/redo02.log

/u01/oradat/AKA/redo03.log

/u01/oradat/AKA/redo04.log

/u01/oradat/AKA/sysaux01.dbf

/u01/oradat/AKA/system01.dbf

/u01/oradat/AKA/temp01.dbf

/u01/oradat/AKA/undotbs01.dbf

/u01/oradat/AKA/undotbs02.dbf

/u01/oradat/AKA/users01.dbf

The V$ACTIVE_INSTANCES view can also display the current status of the instances.

SELECT * FROM v$active_instances;

INST_NUMBER INST_NAME

----------- ----------------------------------------------

1 gentic:AKA1

2 cellar:AKA2

Finally, the GV$ views allow you to display global information for the whole RAC.

SELECT inst_id, username, sid, serial#

FROM gv$session

WHERE username IS NOT NULL;

INST_ID USERNAME SID SERIAL#

---------- ------------------------------ ---------- ----------

1 SYSTEM 113 137

1 DBSNMP 114 264

1 DBSNMP 116 27

1 SYSMAN 118 4

1 SYSMAN 121 11

1 SYSMAN 124 25

1 SYS 125 18

1 SYSMAN 126 14

1 SYS 127 7

1 DBSNMP 128 370

1 SYS 130 52

1 SYS 144 9

1 SYSTEM 170 608

2 DBSNMP 117 393

2 SYSTEM 119 1997

2 SYSMAN 123 53

2 DBSNMP 124 52

2 SYS 127 115

2 SYS 128 126

2 SYSMAN 129 771

2 SYSMAN 134 18

2 DBSNMP 135 5

2 SYSMAN 146 42

2 SYSMAN 170 49

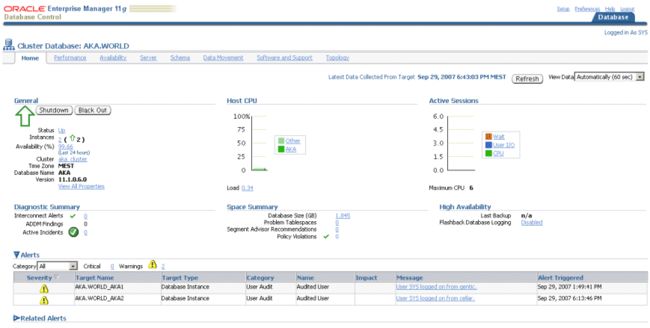

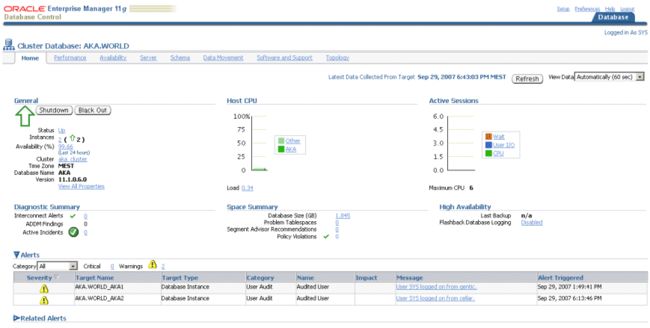

Start Enterprise Manager Console

The Enterprise Manager Console is shared by the whole cluster; in our example it listens on

https://gentic:1158/em

Stopping the Oracle RAC 11g Environment

At this point, we've installed and configured Oracle RAC 11g entirely and have a fully functional clustered database.

All services, including Oracle Clusterware, all Oracle instances and the Enterprise Manager Database Console, start automatically on each reboot of the Linux nodes.

There are times, however, when you might want to shut down a node and manually start it back up. Or you may find that Enterprise Manager is not running and need to start it.

oracle@gentic> emctl stop dbconsole

oracle@gentic> srvctl stop instance -d AKA -i AKA1

oracle@gentic> srvctl stop nodeapps -n gentic

oracle@cellar> emctl stop dbconsole

oracle@cellar> srvctl stop instance -d AKA -i AKA2

oracle@cellar> srvctl stop nodeapps -n cellar

Starting the Oracle RAC 11g Environment

The first step is to start the node applications (Virtual IP, GSD, TNS Listener, and ONS). When the node applications are successfully started, then bring up the Oracle instance (and related services) and the Enterprise Manager Database console.

oracle@gentic> srvctl start nodeapps -n gentic

oracle@gentic> srvctl start instance -d AKA -i AKA1

oracle@gentic> emctl start dbconsole

oracle@cellar> srvctl start nodeapps -n cellar

oracle@cellar> srvctl start instance -d AKA -i AKA2

oracle@cellar> emctl start dbconsole
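The per-node stop and start sequences above are mirror images of each other. The function below is a minimal sketch that keeps both orders in one place; the function name and the `RUN` switch are hypothetical, the database name AKA is taken from this example, and by default the commands are only printed.

```shell
# rac_node (hypothetical helper): print, or execute when RUN=1, the stop or
# start sequence for one node. Usage: rac_node stop|start NODE INSTANCE
rac_node() {
  action=$1 node=$2 inst=$3
  case $action in
    stop)  set -- "emctl stop dbconsole" \
                  "srvctl stop instance -d AKA -i $inst" \
                  "srvctl stop nodeapps -n $node" ;;
    start) set -- "srvctl start nodeapps -n $node" \
                  "srvctl start instance -d AKA -i $inst" \
                  "emctl start dbconsole" ;;
    *)     echo "usage: rac_node stop|start NODE INSTANCE" >&2; return 2 ;;
  esac
  for cmd in "$@"; do
    if [ "${RUN:-0}" = 1 ]; then
      $cmd || return 1    # unquoted on purpose: split into command and arguments
    else
      echo "$cmd"
    fi
  done
}
```

For example, `rac_node stop gentic AKA1` prints the three stop commands for Gentic in the order shown above.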

Start/Stop All Instances with SRVCTL

Start/stop all the instances and their enabled services

oracle@gentic> srvctl start database -d AKA

oracle@gentic> srvctl stop database -d AKA

Transparent Application Failover (TAF)

When considering the availability of the Oracle database, Oracle RAC 11g provides a superior solution with its advanced failover mechanisms. Oracle RAC 11g includes the required components that all work within a clustered configuration responsible for providing continuous availability; when one of the participating systems fails within the cluster, the users are automatically migrated to the other available systems.

A major component of Oracle RAC 11g that is responsible for failover processing is the Transparent Application Failover (TAF) option. Database sessions that lose their connection are reconnected to another node within the cluster. With TYPE = SELECT, open queries continue to fetch rows after the failover, while uncommitted transactions are rolled back and must be restarted by the application.

One important note is that TAF happens automatically within the OCI libraries, so your application (client) code does not need to change in order to take advantage of TAF. It does, however, require some configuration in the Oracle Net file tnsnames.ora.

Set Up the tnsnames.ora File on the Oracle Client (Host VIPER)

Before demonstrating TAF, we need to verify that a valid entry exists in the tnsnames.ora file on a non-RAC client machine. Ensure that you have the Oracle RDBMS software installed. For our Test we use an Oracle 10.2.0.3 Non-RAC Client. Check that the following Service AKA is included in the tnsnames.ora File on the Non-RAC Client.

AKA =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = gentic-vip)(PORT = 1521))

(ADDRESS = (PROTOCOL = TCP)(HOST = cellar-vip)(PORT = 1521))

(LOAD_BALANCE = yes)

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = AKA.WORLD)

(FAILOVER_MODE =

(TYPE = SELECT)

(METHOD = BASIC)

(RETRIES = 180)

(DELAY = 5)

)

)

)
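With the values above, a client whose instance fails keeps trying to reconnect for roughly RETRIES × DELAY = 180 × 5 = 900 seconds (15 minutes). The helper below computes that window from a descriptor. It is a minimal sketch that assumes numeric RETRIES and DELAY values inside FAILOVER_MODE; the function name is hypothetical.

```shell
# taf_window (hypothetical helper): read a connect descriptor on stdin and
# print RETRIES * DELAY, i.e. roughly how many seconds a TAF client keeps
# trying to reconnect before it gives up.
taf_window() {
  desc=$(tr -d ' \t\n')    # flatten: "(RETRIES = 180)" becomes "(RETRIES=180)"
  retries=$(printf '%s\n' "$desc" | sed -n 's/.*(RETRIES=\([0-9][0-9]*\)).*/\1/p')
  delay=$(printf '%s\n' "$desc" | sed -n 's/.*(DELAY=\([0-9][0-9]*\)).*/\1/p')
  echo $(( ${retries:-0} * ${delay:-0} ))
}
```

Feeding the AKA entry above into `taf_window` prints `900`.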

Setup of the sqlnet.ora File on the Oracle Client (Host VIPER)

Make sure that tnsnames.ora is used on the Oracle client:

NAMES.DIRECTORY_PATH= (TNSNAMES)

TAF Test

Our Test is initiated from the Non-RAC Client VIPER where Oracle 10.2.0.3 is installed

oracle@viper> ping gentic-vip

PING gentic-vip (192.168.138.130) 56(84) bytes of data.

64 bytes from gentic-vip (192.168.138.130): icmp_seq=1 ttl=64 time=2.17 ms

64 bytes from gentic-vip (192.168.138.130): icmp_seq=2 ttl=64 time=1.20 ms

oracle@viper> ping cellar-vip

PING cellar-vip (192.168.138.131) 56(84) bytes of data.

64 bytes from cellar-vip (192.168.138.131): icmp_seq=1 ttl=64 time=2.41 ms

64 bytes from cellar-vip (192.168.138.131): icmp_seq=2 ttl=64 time=1.30 ms

oracle@viper> tnsping AKA

TNS Ping Utility for Linux: Version 10.2.0.3.0 - Production on 30-SEP-2007 11:35:46

Used parameter files: /home/oracle/config/10.2.0/sqlnet.ora

Used TNSNAMES adapter to resolve the alias

Attempting to contact (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = gentic-vip)

(PORT = 1521)) (ADDRESS = (PROTOCOL = TCP)(HOST = cellar-vip)(PORT = 1521))

(LOAD_BALANCE = yes) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = AKA.WORLD)

(FAILOVER_MODE = (TYPE = SELECT) (METHOD = BASIC) (RETRIES = 180) (DELAY = 5))))OK (0 msec)
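When such checks are scripted, the tnsping result can be tested for the final `OK (<n> msec)` marker. Note that tnsping only confirms that a listener answered; it does not log in to the database. A minimal sketch; the function name is hypothetical.

```shell
# tnsping_ok (hypothetical helper): succeed only if the tnsping output read
# on stdin contains a line ending in "OK (<n> msec)".
tnsping_ok() {
  grep -Eq 'OK \([0-9]+ msec\)[[:space:]]*$'
}
```

Usage on VIPER: `tnsping AKA | tnsping_ok && echo "listener reachable"`.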

SQL Query to Check the Session's Failover Information

The following SQL query can be used to check a session's failover type, failover method, and if a failover has occurred. We will be using this query throughout this example.

oracle@viper> sqlplus system/manager@AKA

SQL*Plus: Release 10.2.0.3.0 - Production on Sun Sep 30 11:38:45 2007

Copyright (c) 1982, 2006, Oracle. All Rights Reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.1.0.6.0 - Production

With the Partitioning, Real Application Clusters, OLAP, Data Mining

and Real Application Testing options

SQL> COLUMN instance_name FORMAT a13

COLUMN host_name FORMAT a9

COLUMN failover_method FORMAT a15

COLUMN failed_over FORMAT a11

SELECT DISTINCT

v.instance_name AS instance_name,

v.host_name AS host_name,

s.failover_type AS failover_type,

s.failover_method AS failover_method,

s.failed_over AS failed_over

FROM v$instance v, v$session s

WHERE s.username = 'SYSTEM';

INSTANCE_NAME HOST_NAME FAILOVER_TYPE FAILOVER_METHOD FAILED_OVER

------------- --------- ------------- --------------- -----------

AKA2 cellar SELECT BASIC NO

We can see that we are connected to instance AKA2, which is running on Cellar. Now we abort this instance on Cellar without disconnecting the SQL*Plus session on VIPER.

oracle@cellar> srvctl status database -d AKA

Instance AKA1 is running on node gentic

Instance AKA2 is running on node cellar

oracle@cellar> srvctl stop instance -d AKA -i AKA2 -o abort

oracle@cellar> srvctl status database -d AKA

Instance AKA1 is running on node gentic

Instance AKA2 is not running on node cellar

Now let's go back to our SQL session on VIPER and rerun the SQL statement:

SQL> COLUMN instance_name FORMAT a13

COLUMN host_name FORMAT a9

COLUMN failover_method FORMAT a15

COLUMN failed_over FORMAT a11

SELECT DISTINCT

v.instance_name AS instance_name,

v.host_name AS host_name,

s.failover_type AS failover_type,

s.failover_method AS failover_method,

s.failed_over AS failed_over

FROM v$instance v, v$session s

WHERE s.username = 'SYSTEM';

INSTANCE_NAME HOST_NAME FAILOVER_TYPE FAILOVER_METHOD FAILED_OVER

------------- --------- ------------- --------------- -----------

AKA1 gentic SELECT BASIC YES

We can see that the above session has now been failed over to instance AKA1 on Gentic.

Facts Sheet RAC

Here, we present some important Facts around the RAC Architecture.

- Oracle RAC databases differ architecturally from single-instance Oracle databases in that each Oracle RAC database instance also has:

  - At least one additional thread of redo for each instance

  - An instance-specific undo tablespace (there are 2 UNDO tablespaces for a 2-node RAC)

- All data files, control files, SPFILEs, and redo log files in Oracle RAC environments must reside on cluster-aware shared disks so that all of the cluster database instances can access these storage components.

- Oracle recommends that you use one shared server parameter file (SPFILE) with instance-specific entries. Alternatively, you can use a local file system to store instance-specific parameter files (PFILEs).
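Since these files must be on shared storage (NFS in this test setup), it is worth confirming which filesystem actually backs a given path. The function below is a minimal sketch that reads mount-table text in the /proc/mounts format (device, mount point, type, options, ...) and reports the type of the most specific mount point containing the path. The function name and the NFS export in the test data are hypothetical; NFS mounts typically show up as nfs or nfs4.

```shell
# fs_type_of (hypothetical helper): read mount-table lines on stdin
# ("device mountpoint fstype options ...") and print the filesystem type of
# the most specific mount point that contains PATH; return 1 if none does.
fs_type_of() {
  awk -v p="$1" '
    BEGIN { best = -1 }
    {
      mp = $2
      prefix = (mp == "/") ? "/" : mp "/"
      # match on a path-component boundary and keep the longest mount point
      if ((p == mp || index(p, prefix) == 1) && length(mp) > best) {
        best = length(mp)
        type = $3
      }
    }
    END { if (type == "") exit 1; print type }
  '
}
```

Usage on a RAC node: `fs_type_of /u01/oradat/AKA/system01.dbf < /proc/mounts`.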

Troubles during the Installation

We encountered the following problems during our installation:

- Make sure that the host names used during the SSH user equivalence setup are identical in /etc/hosts, $HOME/.ssh/authorized_keys and $HOME/.ssh/known_hosts. For example, if a host is called gentic in one file and gentic.localhost in another, the installation will fail.

- We noticed that it is extremely hard to set up a clustered database with the scripts generated by DBCA. The database can be created, but it is difficult to register it with the cluster software using SRVCTL.
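The host name problem can be caught before starting the installer. The function below reports cluster node names that do not appear verbatim in the host field of a known_hosts file. It is a minimal sketch that assumes an unhashed known_hosts file (entries starting with |1| are hashed and cannot be matched this way); the function name is hypothetical.

```shell
# known_hosts_missing (hypothetical helper): usage FILE NODE...
# Print every NODE that does not appear exactly (e.g. "gentic", not only
# "gentic.localhost") in the comma-separated host field of FILE; return 1 if any.
known_hosts_missing() {
  file=$1
  shift
  rc=0
  for node in "$@"; do
    if ! awk -v n="$node" '
        { k = split($1, names, ","); for (i = 1; i <= k; i++) if (names[i] == n) found = 1 }
        END { exit found ? 0 : 1 }
      ' "$file"; then
      echo "not in $file: $node"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage as oracle on each node: `known_hosts_missing "$HOME/.ssh/known_hosts" gentic cellar gentic-priv cellar-priv`.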
