FFmpeg In Android - tutorial-4-Spawning Threads

Overview

Last time we added audio support by taking advantage of SDL’s audio functions. SDL started a thread that made callbacks to a function we defined every time it needed audio. Now we’re going to do the same sort of thing with the video display. This makes the code more modular and easier to work with, especially when we want to add syncing. So where do we start?

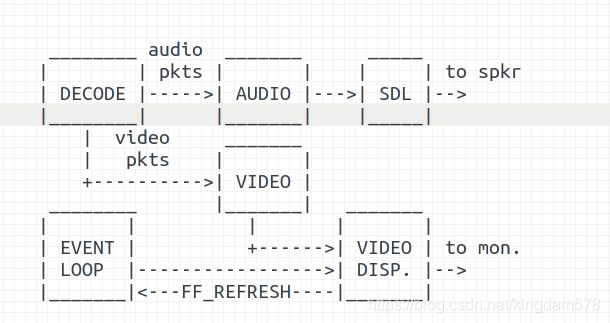

First we notice that our main function is handling an awful lot: it’s running through the event loop, reading in packets, and decoding the video. So we’re going to split all of that apart. One thread (decode_thread) will be responsible for reading the packets; it adds them to queues, which are then read by the corresponding audio and video threads. The audio thread is already set up the way we want it; the video thread is a little more complicated, since we have to display the video ourselves. We will add the actual display code to the main loop, but instead of displaying video every time we loop, we will integrate the video display into the event loop. The idea is to decode the video, save the resulting frame in another queue, and then create a custom event (FF_REFRESH_EVENT) that we add to the event system; when our event loop sees this event, it displays the next frame in the queue. The diagram above illustrates what is going on.

The main purpose of driving the video display from the event loop is that, by using a timer thread (set up with SDL_AddTimer() later in this tutorial), we can control exactly when the next video frame shows up on the screen. When we finally sync the video in the next tutorial, it will be a simple matter to add the code that schedules the next video refresh so that the right picture is shown on the screen at the right time.

Simplifying Code

We’re also going to clean up the code a bit. We have all this audio and video codec information, and we’re going to be adding queues and buffers and who knows what else. All of it belongs to one logical unit, namely the movie. So we’re going to make a large struct called VideoState that holds all of that information.

typedef struct VideoState {

AVFormatContext *pFormatCtx;

int videoStream, audioStream;

AVStream *audio_st;

AVCodecContext *audio_ctx;

PacketQueue audioq;

uint8_t audio_buf[(AVCODEC_MAX_AUDIO_FRAME_SIZE * 3) / 2];

unsigned int audio_buf_size;

unsigned int audio_buf_index;

AVPacket audio_pkt;

uint8_t *audio_pkt_data;

int audio_pkt_size;

AVStream *video_st;

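AVCodecContext *video_ctx;

struct SwsContext *sws_ctx; /* used by sws_scale() in queue_picture() */

uint8_t *yuvBuffer; /* backing storage for the converted YUV420P frame */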

PacketQueue videoq;

VideoPicture pictq[VIDEO_PICTURE_QUEUE_SIZE];

int pictq_size, pictq_rindex, pictq_windex;

SDL_mutex *pictq_mutex;

SDL_cond *pictq_cond;

SDL_Thread *parse_tid;

SDL_Thread *video_tid;

char filename[1024];

int quit;

} VideoState;

Here we get a glimpse of what we’re going to get to. First comes the basic information: the format context, the indices of the audio and video streams, and the corresponding AVStream objects. Then we can see that we’ve moved some of the audio buffers into this structure. These (audio_buf, audio_buf_size, etc.) all hold information about audio that is still lying around (or the lack thereof). We’ve added another queue for the video packets, and a buffer for the decoded frames (pictq, which will be used as a queue; we don’t need any fancy queueing stuff for this). The VideoPicture struct is of our own creation (we’ll see what’s in it when we come to it). We also notice that we’ve allocated pointers for the two extra threads we will create, plus the quit flag and the filename of the movie.

So now we go all the way back to the main function to see how this changes our program. Let’s set up our VideoState struct:

int main(int argc, char *argv[]) {

SDL_Event event;

VideoState *is;

is = av_mallocz(sizeof(VideoState));

av_mallocz() is a handy function that allocates memory for us and zeroes it out.
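
As a rough illustration of why zeroed memory is convenient here, the sketch below uses the standard calloc() as a stand-in for av_mallocz(). MiniState and alloc_state() are hypothetical names made up for this example and are not part of the tutorial code; the point is only that every counter, index and flag starts at 0 without explicit initialization.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, cut-down stand-in for VideoState. */
typedef struct MiniState {
    int pictq_size, pictq_rindex, pictq_windex;
    int quit;
    char filename[64];
} MiniState;

/* Allocate a MiniState with every byte set to zero, so all counters,
 * indices and flags start at 0 without explicit initialization. */
static MiniState *alloc_state(void) {
    MiniState *s = (MiniState *)calloc(1, sizeof(*s));
    assert(s != NULL); /* a real program should handle failure gracefully */
    return s;
}
```

With the real struct, this is why fields such as pictq_windex, pictq_size and quit can be used right away without being set first.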

Then we’ll initialize the locks for the display buffer (pictq). The event loop calls our display function, and the display function, remember, will be pulling pre-decoded frames from pictq. At the same time, our video decoder will be putting frames into it, and we don’t know who will get there first. Hopefully you recognize that this is a classic race condition. So we create the mutex and condition variable now, before we start any threads. Let’s also copy the filename of our movie into our VideoState.

av_strlcpy(is->filename, argv[1], sizeof(is->filename));

is->pictq_mutex = SDL_CreateMutex();

is->pictq_cond = SDL_CreateCond();

av_strlcpy is a function from ffmpeg that does some extra bounds checking beyond strncpy: it never writes more than the given buffer size and always NUL-terminates the result.
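
To make the difference from strncpy concrete, here is a minimal sketch of a strlcpy-style copy. bounded_copy() is a hypothetical helper written for this example; it is meant to mirror the general contract (truncate, always terminate, report the source length), not to reproduce ffmpeg’s implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: copy src into dst (capacity `size` bytes),
 * always NUL-terminating when size > 0. Returns strlen(src), so the
 * caller can detect truncation by comparing the result with size. */
static size_t bounded_copy(char *dst, const char *src, size_t size) {
    size_t len = strlen(src);
    assert(dst != NULL);
    if (size > 0) {
        size_t n = (len < size - 1) ? len : size - 1;
        memcpy(dst, src, n);
        dst[n] = '\0';
    }
    return len;
}
```

A return value greater than or equal to the buffer size tells the caller that the filename was truncated, which strncpy would not report (and strncpy would also leave the buffer unterminated in that case).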

Our First Thread

Now let’s finally launch our threads and get the real work done:

schedule_refresh(is, 40);

is->parse_tid = SDL_CreateThread(decode_thread, is);

if(!is->parse_tid) {

av_free(is);

return -1;

}

schedule_refresh is a function we will define later. What it basically does is tell the system to push an FF_REFRESH_EVENT after the specified number of milliseconds. When the event loop sees that event in the queue, it in turn calls the video refresh function. But for now, let’s look at SDL_CreateThread().

SDL_CreateThread() does just that: it spawns a new thread that has complete access to all the memory of the original process, and starts the thread running on the function we give it. It will also pass that function user-defined data. In this case, we’re calling decode_thread() with our VideoState struct attached. The first half of the function has nothing new; it simply does the work of opening the file and finding the indices of the audio and video streams. The only thing we do differently is save the format context in our big struct. After we’ve found our stream indices, we call another function that we will define, stream_component_open(). This is a pretty natural way to split things up, and since we do a lot of similar things to set up the video and audio codecs, we reuse some code by making this a function.

The stream_component_open() function is where we find our decoder, set up our audio options, save important information to our big struct, and launch our audio and video threads. This is where we would also insert other options, such as forcing the codec instead of autodetecting it and so forth. Here it is:

int stream_component_open(VideoState *is, int stream_index) {

AVFormatContext *pFormatCtx = is->pFormatCtx;

AVCodecContext *codecCtx;

AVCodec *codec;

SDL_AudioSpec wanted_spec, spec;

if(stream_index < 0 || stream_index >= pFormatCtx->nb_streams) {

return -1;

}

codec = avcodec_find_decoder(pFormatCtx->streams[stream_index]->codec->codec_id);

if(!codec) {

fprintf(stderr, "Unsupported codec!\n");

return -1;

}

codecCtx = avcodec_alloc_context3(codec);

if(avcodec_copy_context(codecCtx, pFormatCtx->streams[stream_index]->codec) != 0) {

fprintf(stderr, "Couldn't copy codec context");

return -1; // Error copying codec context

}

if(codecCtx->codec_type == AVMEDIA_TYPE_AUDIO) {

// Set audio settings from codec info

wanted_spec.freq = codecCtx->sample_rate;

/* ...etc... */

wanted_spec.callback = audio_callback;

wanted_spec.userdata = is;

if(SDL_OpenAudio(&wanted_spec, &spec) < 0) {

fprintf(stderr, "SDL_OpenAudio: %s\n", SDL_GetError());

return -1;

}

}

if(avcodec_open2(codecCtx, codec, NULL) < 0) {

fprintf(stderr, "Unsupported codec!\n");

return -1;

}

switch(codecCtx->codec_type) {

case AVMEDIA_TYPE_AUDIO:

is->audioStream = stream_index;

is->audio_st = pFormatCtx->streams[stream_index];

is->audio_ctx = codecCtx;

is->audio_buf_size = 0;

is->audio_buf_index = 0;

memset(&is->audio_pkt, 0, sizeof(is->audio_pkt));

packet_queue_init(&is->audioq);

SDL_PauseAudio(0);

break;

case AVMEDIA_TYPE_VIDEO:

is->videoStream = stream_index;

is->video_st = pFormatCtx->streams[stream_index];

is->video_ctx = codecCtx;

packet_queue_init(&is->videoq);

is->video_tid = SDL_CreateThread(video_thread, is);

is->sws_ctx = sws_getContext(is->video_st->codec->width, is->video_st->codec->height,

is->video_st->codec->pix_fmt, is->video_st->codec->width,

is->video_st->codec->height, AV_PIX_FMT_YUV420P,

SWS_BILINEAR, NULL, NULL, NULL

);

break;

default:

break;

}

return 0; /* success */

}

This is pretty much the same as the code we had before, except now it’s generalized for audio and video. Notice that instead of aCodecCtx, we’ve set up our big struct as the userdata for our audio callback. We’ve also saved the streams themselves as audio_st and video_st, and we have added our video queue and set it up in the same way we set up our audio queue. The main point is to launch the video and audio threads. These bits do it:

SDL_PauseAudio(0);

break;

/* ...... */

is->video_tid = SDL_CreateThread(video_thread, is);

We remember SDL_PauseAudio() from last time, and SDL_CreateThread() is used in exactly the same way as before. We’ll get back to our video_thread() function.

Before that, let’s go back to the second half of our decode_thread() function. It’s basically just a for loop that reads in a packet and puts it on the right queue:

for(;;) {

if(is->quit) {

break;

}

// seek stuff goes here

if(is->audioq.size > MAX_AUDIOQ_SIZE ||

is->videoq.size > MAX_VIDEOQ_SIZE) {

SDL_Delay(10);

continue;

}

if(av_read_frame(is->pFormatCtx, packet) < 0) {

if((is->pFormatCtx->pb->error) == 0) {

SDL_Delay(100); /* no error; wait for user input */

continue;

} else {

break;

}

}

// Is this a packet from the video stream?

if(packet->stream_index == is->videoStream) {

packet_queue_put(&is->videoq, packet);

} else if(packet->stream_index == is->audioStream) {

packet_queue_put(&is->audioq, packet);

} else {

av_free_packet(packet);

}

}

Nothing really new here, except that we now have a maximum size for our audio and video queues, and we’ve added a check for read errors. The format context has a ByteIOContext struct inside it called pb (the type is named AVIOContext in newer ffmpeg versions). ByteIOContext is the structure that basically keeps all the low-level file information in it. Older code called url_ferror() on that structure to see whether there was an error reading the file; the loop above checks its error field directly.
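
The two decisions made on each pass of that loop (pause when a queue is too full, otherwise route the packet by stream index) can be sketched without any ffmpeg types. Everything here is hypothetical: the DEMO_ limits are example values, since the tutorial’s MAX_AUDIOQ_SIZE and MAX_VIDEOQ_SIZE are not shown in this section, and Route, queues_full() and route_packet() exist only for this illustration.

```c
#include <assert.h>

/* Hypothetical limits; the tutorial's MAX_AUDIOQ_SIZE / MAX_VIDEOQ_SIZE
 * values are not shown in this section. */
#define DEMO_MAX_AUDIOQ_BYTES (5 * 16 * 1024)
#define DEMO_MAX_VIDEOQ_BYTES (5 * 256 * 1024)

typedef enum { ROUTE_VIDEO, ROUTE_AUDIO, ROUTE_DROP } Route;

/* Returns 1 when the reader should pause instead of reading more. */
static int queues_full(int audioq_bytes, int videoq_bytes) {
    return audioq_bytes > DEMO_MAX_AUDIOQ_BYTES ||
           videoq_bytes > DEMO_MAX_VIDEOQ_BYTES;
}

/* Decide which queue a packet belongs to from its stream index. */
static Route route_packet(int stream_index, int video_idx, int audio_idx) {
    assert(stream_index >= 0);
    if (stream_index == video_idx) return ROUTE_VIDEO;
    if (stream_index == audio_idx) return ROUTE_AUDIO;
    return ROUTE_DROP; /* e.g. subtitles: the packet is simply freed */
}
```

Capping the queues keeps the reader thread from loading the whole file into memory when it is much faster than the decoders.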

After our for loop, we have all the code for waiting for the rest of the program to end or informing it that we’ve ended. This code is instructive because it shows us how we push events, something we’ll have to do later to display the video.

while(!is->quit) {

SDL_Delay(100);

}

fail:

if(1){

SDL_Event event;

event.type = FF_QUIT_EVENT;

event.user.data1 = is;

SDL_PushEvent(&event);

}

return 0;

We get values for user events by using the SDL constant SDL_USEREVENT. The first user event should be assigned the value SDL_USEREVENT, the next SDL_USEREVENT + 1, and so on. FF_QUIT_EVENT is defined in our program as SDL_USEREVENT + 2. We can also pass user data if we like, and here we pass our pointer to the big struct. Finally we call SDL_PushEvent(). In our event loop switch, we just put this next to the SDL_QUIT case we had before. We’ll see our event loop in more detail later; for now, just be assured that when we push the FF_QUIT_EVENT, we’ll catch it later and raise our quit flag.

Getting the Frame: video_thread

After we have our codec prepared, we start our video thread. This thread reads in packets from the video queue, decodes the video into frames, and then calls a queue_picture function to put the processed frame onto a picture queue:

int video_thread(void *arg) {

VideoState *is = (VideoState *)arg;

AVPacket pkt1, *packet = &pkt1;

int frameFinished;

AVFrame *pFrame;

pFrame = av_frame_alloc();

for(;;) {

if(packet_queue_get(&is->videoq, packet, 1) < 0) {

// means we quit getting packets

break;

}

// Decode video frame

avcodec_decode_video2(is->video_ctx, pFrame, &frameFinished, packet); /* video_ctx is the context opened with avcodec_open2() */

// Did we get a video frame?

if(frameFinished) {

if(queue_picture(is, pFrame) < 0) {

break;

}

}

av_free_packet(packet);

}

av_frame_free(&pFrame); /* frames from av_frame_alloc() are released with av_frame_free() */

return 0;

}

Most of this function should be familiar by this point. We’ve moved our avcodec_decode_video2 call here and just replaced some of the arguments; for example, the video stream’s codec context is stored in our big struct, so we get our decoder from there. We just keep getting packets from our video queue until someone tells us to quit or we encounter an error.

Queueing the Frame

Let’s look at the function that stores our decoded frame, pFrame, in our picture queue. Since our picture queue holds AVFrames in YUV420P format (presumably so that the video display function has to do as little calculation as possible), we need to convert our frame into that. The data we store in the picture queue is a struct of our own making:

typedef struct VideoPicture {

AVFrame* yuvFrame;

int width, height; /* source height & width */

int allocated;

}VideoPicture;

Our big struct has a buffer of these in which we can store them. However, we need to allocate the AVFrame ourselves (notice the allocated flag, which indicates whether we have done so or not).

To use this queue, we have two pointers: the writing index and the reading index. We also keep track of how many pictures are actually in the buffer. To write to the queue, we first wait for the buffer to clear out so that we have space to store our VideoPicture. Then we check whether we have already allocated the AVFrame at our writing index. If not, we have to allocate some space. We would also have to reallocate the buffer if the size of the window has changed; the function below does not handle that case. (An accompanying note cautions against allocating at this point, which the author believes is meant to avoid calling SDL overlay functions from other threads; the code below nevertheless allocates in place while holding screen_mutex.)

int queue_picture(VideoState *is, AVFrame *pFrame)

{

VideoPicture *vp;

/* wait until we have space for a new pic */

SDL_LockMutex(is->pictq_mutex);

while(is->pictq_size >= VIDEO_PICTURE_QUEUE_SIZE && !is->quit) {

SDL_CondWait(is->pictq_cond, is->pictq_mutex);

}

SDL_UnlockMutex(is->pictq_mutex);

if(is->quit)

return -1;

// windex is set to 0 initially

vp = &is->pictq[is->pictq_windex];

if (!vp->allocated) {

SDL_LockMutex(screen_mutex);

vp->yuvFrame = av_frame_alloc();

vp->width = is->video_ctx->width;

vp->height = is->video_ctx->height;

av_image_fill_arrays(vp->yuvFrame->data, vp->yuvFrame->linesize, is->yuvBuffer,

AV_PIX_FMT_YUV420P, is->video_ctx->width, is->video_ctx->height, 1);

vp->allocated = 1;

SDL_UnlockMutex(screen_mutex);

if(is->quit) {

return -1;

}

}

/* We have a place to put our picture on the queue */

if(vp->yuvFrame) {

sws_scale(is->sws_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0,

is->video_ctx->height, vp->yuvFrame->data, vp->yuvFrame->linesize);

/* now we inform our display thread that we have a pic ready */

if(++is->pictq_windex == VIDEO_PICTURE_QUEUE_SIZE) {

is->pictq_windex = 0;

}

SDL_LockMutex(is->pictq_mutex);

is->pictq_size++;

SDL_UnlockMutex(is->pictq_mutex);

}

return 0;

}

Okay, we’re all settled, and we have our AVFrame allocated and ready to receive a picture. Let’s look again at this part of the code above:

/* We have a place to put our picture on the queue */

if(vp->yuvFrame) {

sws_scale(is->sws_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0,

is->video_ctx->height, vp->yuvFrame->data, vp->yuvFrame->linesize);

/* now we inform our display thread that we have a pic ready */

if(++is->pictq_windex == VIDEO_PICTURE_QUEUE_SIZE) {

is->pictq_windex = 0;

}

SDL_LockMutex(is->pictq_mutex);

is->pictq_size++;

SDL_UnlockMutex(is->pictq_mutex);

}

The majority of this part is simply the code we used earlier, using sws_scale to convert the AVFrame. The last bit is simply “adding” our value onto the queue. The queue works by adding onto it until it is full, and reading from it as long as there is something on it. Therefore everything depends upon the is->pictq_size value, which requires us to lock it. So what we do here is increment the write pointer (and roll over if necessary), then lock the queue and increase its size. Now our reader will know there is more information on the queue, and if this makes our queue full, our writer will know about it.

Displaying the Video

That’s it for our video thread! Now we’ve wrapped up all the loose threads except for one: remember that we called the schedule_refresh() function way back? Let’s see what that actually did:

/* schedule a video refresh in 'delay' ms */

static void schedule_refresh(VideoState *is, int delay) {

SDL_AddTimer(delay, sdl_refresh_timer_cb, is);

}

SDL_AddTimer() is an SDL function that simply makes a callback to the user-specified function after a certain number of milliseconds (optionally carrying some user data). We’re going to use this function to schedule video updates: every time we call it, it sets the timer, which triggers an event, which in turn has our main() function call a function that pulls a frame from our picture queue and displays it! Phew!

But first things first. Let’s trigger that event. That sends us over to:

static Uint32 sdl_refresh_timer_cb(Uint32 interval, void *opaque) {

SDL_Event event;

event.type = FF_REFRESH_EVENT;

event.user.data1 = opaque;

SDL_PushEvent(&event);

return 0; /* 0 means stop timer */

}

Here is the now-familiar event push. FF_REFRESH_EVENT is defined here as SDL_USEREVENT + 1. One thing to notice is that when we return 0, SDL stops the timer so the callback is not made again.

Now that we’ve pushed an FF_REFRESH_EVENT, we need to handle it in our event loop:

for(;;) {

SDL_WaitEvent(&event);

switch(event.type) {

/* ... */

case FF_REFRESH_EVENT:

video_refresh_timer(event.user.data1);

break;

And that sends us to this function, which actually pulls the data from our picture queue:

void video_refresh_timer(void *userdata) {

VideoState *is = (VideoState *)userdata;

VideoPicture *vp;

if(is->video_st) {

if(is->pictq_size == 0) {

schedule_refresh(is, 1);

} else {

vp = &is->pictq[is->pictq_rindex];

/* Timing code goes here */

schedule_refresh(is, 80);

/* show the picture! */

video_display(is);

/* update queue for next picture! */

if(++is->pictq_rindex == VIDEO_PICTURE_QUEUE_SIZE) {

is->pictq_rindex = 0;

}

SDL_LockMutex(is->pictq_mutex);

is->pictq_size--;

SDL_CondSignal(is->pictq_cond);

SDL_UnlockMutex(is->pictq_mutex);

}

} else {

schedule_refresh(is, 100);

}

}

For now, this is a pretty simple function: it pulls from the queue when we have something, sets our timer for when the next video frame should be shown, calls video_display to actually show the video on the screen, then increments the read index of the queue and decreases its size. You may notice that we don’t actually do anything with vp in this function, and here’s why: we will. Later. We’re going to use it to access timing information when we start syncing the video to the audio. See where it says “Timing code goes here”? In that section, we’re going to figure out how soon we should show the next video frame, and then pass that value to the schedule_refresh() function. For now we’re just putting in a dummy value of 80. Technically, you could guess and check this value, and recompile it for every movie you watch, but 1) it would drift after a while and 2) it’s quite silly. We’ll come back to it later, though.
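
To get a feel for what the dummy value means, the sketch below converts a frame rate into a per-frame delay in milliseconds. frame_delay_ms() is a hypothetical helper for this example only; a fixed delay like this still drifts, as noted above, and the real timing code in the next tutorial is based on timestamps instead.

```c
#include <assert.h>

/* Hypothetical helper: milliseconds per frame for a frame rate given as
 * the rational fps_num / fps_den, rounded to the nearest millisecond. */
static int frame_delay_ms(int fps_num, int fps_den) {
    assert(fps_num > 0 && fps_den > 0);
    return (1000 * fps_den + fps_num / 2) / fps_num;
}
```

By this arithmetic, the value 80 corresponds to 12.5 frames per second, and the 40 passed to the very first schedule_refresh() call corresponds to 25 frames per second.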

We’re almost done; we just have one last thing to do: display the video! Here’s that video_display function:

void video_display(VideoState *is)

{

VideoPicture *vp;

vp = &is->pictq[is->pictq_rindex];

if (vp->yuvFrame) {

SDL_LockMutex(screen_mutex);

SDL_UpdateYUVTexture(sdlTexture, &sdlRect,

vp->yuvFrame->data[0], vp->yuvFrame->linesize[0],

vp->yuvFrame->data[1], vp->yuvFrame->linesize[1],

vp->yuvFrame->data[2], vp->yuvFrame->linesize[2]);

SDL_RenderClear( sdlRenderer );

SDL_RenderCopy( sdlRenderer, sdlTexture, NULL, &sdlRect);

SDL_RenderPresent( sdlRenderer );

SDL_UnlockMutex(screen_mutex);

}

}

Since our screen can be of any size (we set ours to 640x480, and there are ways to make it resizable by the user), we need to figure out dynamically how big we want our movie rectangle (sdlRect in the code above) to be. So first we need to figure out our movie’s aspect ratio, which is just the width divided by the height. Some codecs will have an odd sample aspect ratio, which is simply the width/height ratio of a single pixel, or sample. Since the height and width values in our codec context are measured in pixels, the actual aspect ratio is equal to the pixel width/height ratio times the sample aspect ratio. Some codecs will show a sample aspect ratio of 0, and this indicates that each pixel is simply of size 1x1. Then we scale the movie to fit as big in our screen as we can. The &-3 bit-twiddling described for this step is meant to round the value down to a multiple of 4; note that the mask that actually clears the two low bits is ~3 (that is, -4). Then we center the movie and draw it, here with SDL_UpdateYUVTexture(), SDL_RenderCopy() and SDL_RenderPresent() rather than SDL_DisplayYUVOverlay(), making sure we hold the screen mutex while doing so.
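
The rectangle calculation described here is not part of the video_display code shown, so the following is only a sketch of how it could look. DemoRect and fit_rect() are hypothetical names, the sample aspect ratio is passed as a plain numerator/denominator pair, and the computed dimension is rounded down to a multiple of 4 with & ~3.

```c
#include <assert.h>

typedef struct DemoRect { int x, y, w, h; } DemoRect;

/* Hypothetical helper: fit a video of vid_w x vid_h pixels, whose pixels
 * have aspect ratio sar_num/sar_den (0 is treated as square), into a
 * screen of scr_w x scr_h and center it. The computed dimension is
 * rounded down to a multiple of 4. */
static DemoRect fit_rect(int vid_w, int vid_h, int sar_num, int sar_den,
                         int scr_w, int scr_h) {
    DemoRect r;
    double aspect;
    assert(vid_w > 0 && vid_h > 0 && scr_w > 0 && scr_h > 0);
    if (sar_num <= 0 || sar_den <= 0) { sar_num = 1; sar_den = 1; }
    aspect = ((double)sar_num / sar_den) * vid_w / vid_h;
    r.h = scr_h;                            /* first try the full height */
    r.w = (int)(r.h * aspect + 0.5) & ~3;   /* round down to multiple of 4 */
    if (r.w > scr_w) {                      /* too wide: fit to width */
        r.w = scr_w;
        r.h = (int)(r.w / aspect + 0.5) & ~3;
    }
    r.x = (scr_w - r.w) / 2;                /* center horizontally */
    r.y = (scr_h - r.h) / 2;                /* center vertically */
    return r;
}
```

For example, a 1280x720 movie with square pixels on a 640x480 screen comes out as a 640x360 rectangle with a 60-pixel band above and below it.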

So is that it? Are we done? Well, we still have to rewrite the audio code to use the new VideoState struct, but those are trivial changes, and you can look at them in the sample code. The last thing we have to do is supply our own function for ffmpeg’s internal “quit” callback:

VideoState *global_video_state;

int decode_interrupt_cb(void) {

return (global_video_state && global_video_state->quit);

}

We set global_video_state to the big struct in main().

So that’s it! Go ahead and compile it:

g++ -std=c++14 -o tutorial03 tutorial03.cpp -I/INCLUDE_PATH -L/LIB_PATH -lavutil -lavformat -lavcodec -lswscale -lswresample -lavdevice -lz -lavutil -lm -lpthread -ldl

and enjoy your unsynced movie! Next time we’ll finally build a video player that actually works!

Source code: tutorial06.cpp