30机器学习项目实战-贷款申请最大化利润

唐宇迪《python数据分析与机器学习实战》学习笔记

30机器学习项目实战-贷款申请最大化利润

本文相关原始数据及代码:链接,密码:8v5y

一、数据清洗过滤无用特征

互联网贷款网站:https://www.lendingclub.com/info/download-data.action

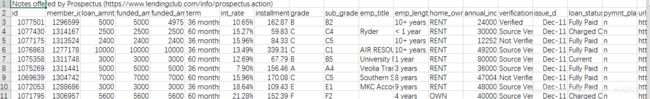

通过历史数据来决定是否放款,这里下载了2007-2011年的数据,大概有4万多个样本特征非常多:

这里对其进行一些预处理:

import pandas as pd

loans_2007 = pd.read_csv('LoanStats3a.csv', skiprows=1)

half_count = len(loans_2007) / 2

loans_2007 = loans_2007.dropna(thresh=half_count, axis=1)#去掉空值太多的行

#thresh=n,保留至少有 n 个非 NA 数的行

loans_2007 = loans_2007.drop(['desc', 'url'],axis=1)#去掉没用的“描述”‘链接’行

loans_2007.to_csv('loans_2007.csv', index=False)#保存为新文件

import pandas as pd

loans_2007 = pd.read_csv("loans_2007.csv")

print(loans_2007.iloc[0])#看一下第一行内容

print(loans_2007.shape[1])#看一下列数

id 1077501

member_id 1.2966e+06

loan_amnt 5000

funded_amnt 5000

funded_amnt_inv 4975

term 36 months

int_rate 10.65%

installment 162.87

grade B

sub_grade B2

emp_title NaN

emp_length 10+ years

home_ownership RENT

annual_inc 24000

verification_status Verified

issue_d Dec-2011

loan_status Fully Paid

pymnt_plan n

purpose credit_card

title Computer

zip_code 860xx

addr_state AZ

dti 27.65

delinq_2yrs 0

earliest_cr_line Jan-1985

inq_last_6mths 1

open_acc 3

pub_rec 0

revol_bal 13648

revol_util 83.7%

total_acc 9

initial_list_status f

out_prncp 0

out_prncp_inv 0

total_pymnt 5863.16

total_pymnt_inv 5833.84

total_rec_prncp 5000

total_rec_int 863.16

total_rec_late_fee 0

recoveries 0

collection_recovery_fee 0

last_pymnt_d Jan-2015

last_pymnt_amnt 171.62

last_credit_pull_d Nov-2016

collections_12_mths_ex_med 0

policy_code 1

application_type INDIVIDUAL

acc_now_delinq 0

chargeoff_within_12_mths 0

delinq_amnt 0

pub_rec_bankruptcies 0

tax_liens 0

Name: 0, dtype: object

52

id数据只是个编号对结果无影响所以不当成特征,具体的特征描述网站上可以看一下,loan_amnt 为申请额,funded_amnt 、funded_amnt_inv 为实际给的这种量是预测之后的事了,对预测无用,这种类似的量都丢除,只留申请时填写的数据。term还款日期。int_rate利率,这个指标可能越高是不是越不容易还款,可作特征。高度重复的特征也要去除,比如各种打分值其实说的是一个事,存在高度相关性。还有些特征,比如公司单位,要预测的话可能需要进行分级太麻烦,也去除。这里共52个特征,就照着上面的思路去清洗:

loans_2007 = loans_2007.drop(["id", "member_id", "funded_amnt", "funded_amnt_inv", "grade", "sub_grade", "emp_title", "issue_d"], axis=1)

loans_2007 = loans_2007.drop(["zip_code", "out_prncp", "out_prncp_inv", "total_pymnt", "total_pymnt_inv", "total_rec_prncp"], axis=1)

打印出候选特征,发现还是有点多32个:

loans_2007 = loans_2007.drop(["total_rec_int", "total_rec_late_fee", "recoveries", "collection_recovery_fee", "last_pymnt_d", "last_pymnt_amnt"], axis=1)

print(loans_2007.iloc[0])

print(loans_2007.shape[1])

loan_amnt 5000

term 36 months

int_rate 10.65%

installment 162.87

emp_length 10+ years

home_ownership RENT

annual_inc 24000

verification_status Verified

loan_status Fully Paid

pymnt_plan n

purpose credit_card

title Computer

addr_state AZ

dti 27.65

delinq_2yrs 0

earliest_cr_line Jan-1985

inq_last_6mths 1

open_acc 3

pub_rec 0

revol_bal 13648

revol_util 83.7%

total_acc 9

initial_list_status f

last_credit_pull_d Nov-2016

collections_12_mths_ex_med 0

policy_code 1

application_type INDIVIDUAL

acc_now_delinq 0

chargeoff_within_12_mths 0

delinq_amnt 0

pub_rec_bankruptcies 0

tax_liens 0

Name: 0, dtype: object

32

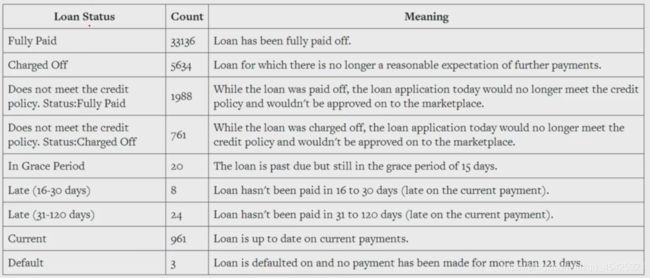

观察数据并没有哪个项明确规定标签值,无0/1这种。下面是网站上关于贷款状态’Loamn Status’列的评判。Fully Paid全额批准,可以打个1,Charged Off 没批准,评0,三的项是没满足要求的最终也不知道到底给贷款没,这里就不用了。后面其他指标的不确定性比较强也丢弃,例如Late(16-30days)批准延期了。所以最终选择Fully Paid和Charged Off作为Label值。

#取出拥有这两个属性的行

loans_2007 = loans_2007[(loans_2007['loan_status'] == "Fully Paid") | (loans_2007['loan_status'] == "Charged Off")]

#把属性和映射值做成字典

status_replace = {

"loan_status" : {

"Fully Paid": 1,

"Charged Off": 0,

}

}

loans_2007 = loans_2007.replace(status_replace)#应用字典进行替换

还剩30多列,观察发现有些列包含的值都是一样的,比如pymnt_plan列全为n、pub_rec全为0…对于预测完全无用,所以接下把这类列都去除。这里统计每列数据如果唯一值则去取,不过考虑到有些值可能为空值,所以这里先去除缺失值。

orig_columns = loans_2007.columns#取所有列

drop_columns = []

for col in orig_columns:#遍历,先去缺失再统计唯一属性

col_series = loans_2007[col].dropna().unique()

if len(col_series) == 1:

drop_columns.append(col)

loans_2007 = loans_2007.drop(drop_columns, axis=1)

print(drop_columns)

print(loans_2007.shape)

loans_2007.to_csv('filtered_loans_2007.csv', index=False)

[‘initial_list_status’, ‘collections_12_mths_ex_med’, ‘policy_code’, ‘application_type’, ‘acc_now_delinq’, ‘chargeoff_within_12_mths’, ‘delinq_amnt’, ‘tax_liens’]

(39560, 24)

总结:拿到这类复杂的数据后,就需要尽可能多的筛选去除无用特征,因为多特征容易造成过拟合。

二、数据预处理

拿到这里特征后不能直接用,还包含大量的缺失值、标点符号等等,这里先统计一下每列缺失值:

import pandas as pd

loans = pd.read_csv('filtered_loans_2007.csv')

null_counts = loans.isnull().sum()

print(null_counts)

loan_amnt 0

term 0

int_rate 0

installment 0

emp_length 1073

home_ownership 0

annual_inc 0

verification_status 0

loan_status 0

pymnt_plan 0

purpose 0

title 11

addr_state 0

dti 0

delinq_2yrs 0

earliest_cr_line 0

inq_last_6mths 0

open_acc 0

pub_rec 0

revol_bal 0

revol_util 50

total_acc 0

last_credit_pull_d 2

pub_rec_bankruptcies 697

dtype: int64

对于缺失值比较少的比如缺10个几十个这种,直接丢除含缺失值的样本。大量缺失的就要考虑丢特征。

接着观察这些特征值的类型:

loans = loans.drop("pub_rec_bankruptcies", axis=1)

loans = loans.dropna(axis=0)

print(loans.dtypes.value_counts())

object 12

float64 10

int64 1

dtype: int64

object_columns_df = loans.select_dtypes(include=["object"])

print(object_columns_df.iloc[0])

term 36 months

int_rate 10.65%

emp_length 10+ years

home_ownership RENT

verification_status Verified

pymnt_plan n

purpose credit_card

title Computer

addr_state AZ

earliest_cr_line Jan-1985

revol_util 83.7%

last_credit_pull_d Nov-2016

Name: 0, dtype: object

模型只接收数值型数据,字符型不认识,所以需要映射。

下面集中预处理一下:

mapping_dict = {

"emp_length": {

"10+ years": 10,

"9 years": 9,

"8 years": 8,

"7 years": 7,

"6 years": 6,

"5 years": 5,

"4 years": 4,

"3 years": 3,

"2 years": 2,

"1 year": 1,

"< 1 year": 0,

"n/a": 0

}

}

loans = loans.drop(["last_credit_pull_d", "earliest_cr_line", "addr_state", "title"], axis=1)

loans["int_rate"] = loans["int_rate"].str.rstrip("%").astype("float")#丢除%转数值

loans["revol_util"] = loans["revol_util"].str.rstrip("%").astype("float")

loans = loans.replace(mapping_dict)

cat_columns = ["home_ownership", "verification_status", "emp_length", "purpose", "term"]

dummy_df = pd.get_dummies(loans[cat_columns])#实现one hot encode

loans = pd.concat([loans, dummy_df], axis=1)#拼接到主体数据上

loans = loans.drop(cat_columns, axis=1) #丢除操作后的原数据

loans = loans.drop("pymnt_plan", axis=1)

loans.to_csv('cleaned_loans2007.csv', index=False)

三、利润最大化条件及做法

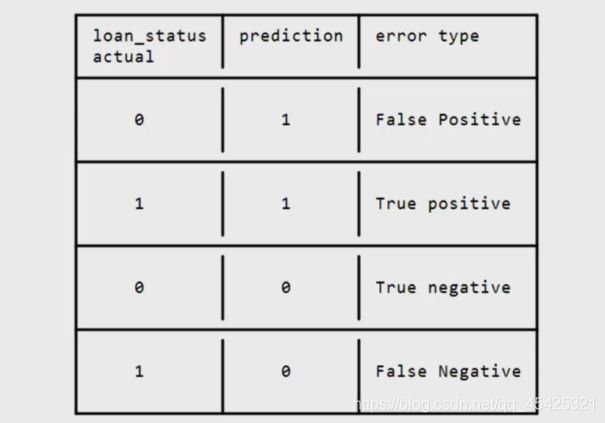

做这么多事无外乎是为了挣钱,如何将利润最大化是项目的核心。分为下面四种情况:

本来不会还钱,预测后借钱给他,则全赔了:-1000

本来会还钱,预测后借钱了,则赚个利息:1000*0.1= +100

本来不会还,预测后不借钱;或者本来会还,预测为不借,这两种情况都:0

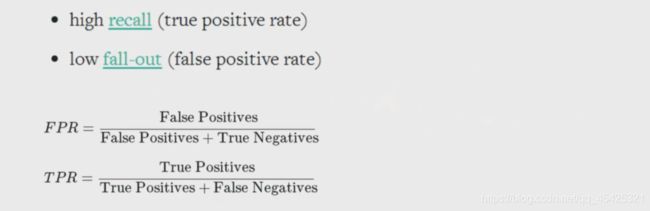

用什么指标作为评判标准呢?用精度显然无法挣钱。假设样本数不平衡,例如:借给了6个,不借给1个,假设全预测为借钱,精度也很高,但是却大概率赔钱。究其根本是希望,FP(不能还的人,预测借了)尽量的低,TP(能还的人,预测借了)尽量的大,因此用FPR和TPR衡量,FPR越小TPR越大越好。

四、模型建立及改善

4.1数据导入及构建

import pandas as pd

loans = pd.read_csv("cleaned_loans2007.csv")#数据导入

cols = loans.columns

train_cols = cols.drop("loan_status") #特征

features = loans[train_cols]

target = loans["loan_status"] #标签

4.2建立简单逻辑回归模型

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_predict, KFold

lr = LogisticRegression()

kf = KFold(5,shuffle=True, random_state=1)

#交叉验证,将数据切5份,随机1个作为测试集,4个作为训练集

predictions = cross_val_predict(lr, features, target, cv=kf)#预测

predictions = pd.Series(predictions)

# False positives.

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

print(fp_filter)

fp = len(predictions[fp_filter])

# True positives.

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives.

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Rates

tpr = tp / float((tp + fn))#盈利 TPR

fpr = fp / float((fp + tn))#赔钱 FPR

print(tpr)

print(fpr)

print(predictions[:20])

0 False

1 True

2 False

…

38424 False

38425 False

38426 False

38427 False

Length: 38428, dtype: bool

0.9992125268800921

0.9977822953243393

0 1

1 1

…

18 1

19 1

dtype: int64

发现TPR和FPR的值都非常高,实际就是无论谁来,这个模型都预测为借钱,模型表达效果非常差。根本原因就数据样本的不均衡。通过数据增强造数据可以解决,但是显然不太好造。所以这里加上权重项,增加负样本的影响,降低正样本影响。

4.3逻辑回归模型添加权重

之前都是用的默认参数,这里通过**class_weight=“balanced”**调整正负样本权重,达到样本平衡。

balance权重

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_predict

lr = LogisticRegression(class_weight="balanced")###样本均衡###

kf = KFold(5,shuffle=True, random_state=1)

predictions = cross_val_predict(lr, features, target, cv=kf)

predictions = pd.Series(predictions)

# False positives.

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives.

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives.

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Rates

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

print(tpr)

print(fpr)

print(predictions[:20])

loans['predicted_label']=predictions

matches = loans["predicted_label"] == loans["loan_status"]

#print('matches',matches)

correct_predictions = loans[matches]

print('len(correct_predictions)',len(correct_predictions))

print('float(len(admissions)',float(len(loans)))

accuracy = len(correct_predictions) / float(len(loans))

print('准确率',accuracy)

0.530666020534876

0.3435594160044354

0 0

1 0

2 0

3 1

4 1

5 0

6 0

7 0

8 0

9 1

10 1

11 0

12 0

13 1

14 0

15 0

16 1

17 1

18 1

19 0

dtype: int64

len(correct_predictions) 21073

float(len(admissions) 38428.0

准确率 0.5483761840324763

发现TPR和FPR都有所降低了,预测的结果也有0了模型有意义了,但是效果还是不太好。

自定义权重项:

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_predict

penalty = {

0: 5,

1: 1

}#不用balance了,这里自定义权重项

lr = LogisticRegression(class_weight=penalty)

kf = KFold(5,shuffle=True, random_state=1)

predictions = cross_val_predict(lr, features, target, cv=kf)

predictions = pd.Series(predictions)

# False positives.

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives.

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives.

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Rates

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

print(tpr)

print(fpr)

loans['predicted_label']=predictions

matches = loans["predicted_label"] == loans["loan_status"]

#print('matches',matches)

correct_predictions = loans[matches]

print('len(correct_predictions)',len(correct_predictions))

print('float(len(admissions)',float(len(loans)))

accuracy = len(correct_predictions) / float(len(loans))

print('准确率',accuracy)

0.6916739861283581

0.49029754204398446

len(correct_predictions) 25595

float(len(admissions) 38428.0

准确率 0.6660507962943687

4.4随机森林模型

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_predict

rf = RandomForestClassifier(n_estimators=10,class_weight="balanced", random_state=1)

#print help(RandomForestClassifier)

kf = KFold(5,shuffle=True, random_state=1)

predictions = cross_val_predict(rf, features, target, cv=kf)

predictions = pd.Series(predictions)

# False positives.

fp_filter = (predictions == 1) & (loans["loan_status"] == 0)

fp = len(predictions[fp_filter])

# True positives.

tp_filter = (predictions == 1) & (loans["loan_status"] == 1)

tp = len(predictions[tp_filter])

# False negatives.

fn_filter = (predictions == 0) & (loans["loan_status"] == 1)

fn = len(predictions[fn_filter])

# True negatives

tn_filter = (predictions == 0) & (loans["loan_status"] == 0)

tn = len(predictions[tn_filter])

# Rates

tpr = tp / float((tp + fn))

fpr = fp / float((fp + tn))

print('随机森林',tpr)

print('随机森林',fpr)

print(predictions[:20])

loans['predicted_label']=predictions

matches = loans["predicted_label"] == loans["loan_status"]

#print('matches',matches)

correct_predictions = loans[matches]

print('len(correct_predictions)',len(correct_predictions))

print('float(len(admissions)',float(len(loans)))

accuracy = len(correct_predictions) / float(len(loans))

print('随机森林准确率',accuracy)

随机森林 0.9753460338613441

随机森林 0.9388283126963592

0 1

1 1

2 1

3 1

4 1

5 1

6 1

7 1

8 1

9 1

10 1

11 1

12 1

13 1

14 1

15 1

16 1

17 1

18 1

19 0

dtype: int64

len(correct_predictions) 32534

float(len(admissions) 38428.0

随机森林准确率 0.8466222546060165

五、总结

1.发现随机森林效果也不太好跟逻辑回归差不多 ,因此还可以:调节正负样本权重和模型的参数进行优化(例如树量等)。

2.也可以选择其他模型再对比效果,例如SVM之类的。也可以将其他模型和现在比较好的模型融合。

3.特征只是通过初步筛选,这么多也可能是过拟合了。现在使用的为原始特征,还可以通过计算挖掘深层次特征。