Hadoop 2.4.1: the complete installation process

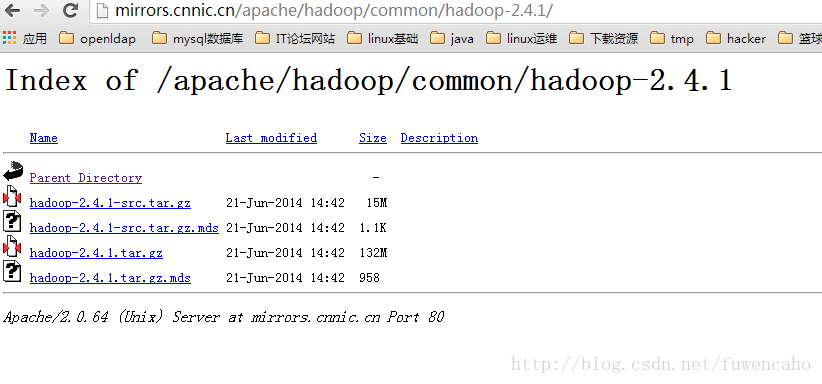

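1: First, download the Hadoop installation package

URL: http://mirrors.cnnic.cn/apache/hadoop/common/hadoop-2.4.1/

2: Install the virtual machines and configure networking

For the network configuration, see my blog post: http://fuwenchao.blog.51cto.com/6008712/1398629

I have three virtual machines: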

2.1:hd0

[root@localhost ~]# hostname

localhost.hadoop0

[root@localhost ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:5D:31:1C

inet addr:192.168.1.205 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe5d:311c/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:484 errors:0 dropped:0 overruns:0 frame:0

TX packets:99 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:48893 (47.7 KiB) TX bytes:8511 (8.3 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:27 errors:0 dropped:0 overruns:0 frame:0

TX packets:27 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2744 (2.6 KiB) TX bytes:2744 (2.6 KiB)

[root@localhost ~]# uname -a

Linux localhost.hadoop0 2.6.32-358.el6.x86_64 #1 SMP Fri Feb 22 00:31:26 UTC 2013 x86_64 x86_64 x86_64 GNU/Linux

[root@localhost ~]#

2.2:hd1

localhost.hadoop1 192.168.1.206

2.3:hd2

localhost.hadoop2 192.168.1.207

3: Configure the hostname mappings on each host

Add the following lines to the /etc/hosts file on every host:

192.168.1.205 localhost.hadoop0 hd0

192.168.1.206 localhost.hadoop1 hd1

192.168.1.207 localhost.hadoop2 hd2
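Since the same three lines have to go into /etc/hosts on every machine, a small helper that only appends an entry when its IP is not already present can make the step safe to repeat. This is a minimal sketch; the function name `add_host_entry` is hypothetical and not part of any tool.

```shell
# Append "IP FQDN ALIAS" to a hosts file unless that IP is already listed.
# Arguments: hosts file, IP address, full hostname, short alias.
add_host_entry() {
    hosts_file=$1 ip=$2 fqdn=$3 alias=$4
    # awk compares the first field exactly, so 192.168.1.20 does not match 192.168.1.205
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        printf '%s %s %s\n' "$ip" "$fqdn" "$alias" >> "$hosts_file"
    fi
}

# Example, run as root on each host (not executed here):
# add_host_entry /etc/hosts 192.168.1.205 localhost.hadoop0 hd0
# add_host_entry /etc/hosts 192.168.1.206 localhost.hadoop1 hd1
# add_host_entry /etc/hosts 192.168.1.207 localhost.hadoop2 hd2
```

Running it twice leaves the file unchanged the second time, which is convenient when the machines are rebuilt and the steps are replayed.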

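4: Passwordless login from hd0 to hd1 and hd2

4.1: Set up passwordless login to the local machine

1. Go into the .ssh directory

2. Run ssh-keygen -t rsa and press Enter at every prompt (this generates the key pair)

3. Append id_rsa.pub to the authorized keys (cat id_rsa.pub >> authorized_keys)

4. Restart the SSH service so the change takes effect: service sshd restart (the service is named sshd on RedHat and ssh on Ubuntu)

At this point, ssh localhost works without a password.

[Note]: The steps above must be carried out on every machine.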
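The key-generation and append steps can be scripted so they behave the same on all three machines. The sketch below is an assumption-laden illustration: `authorize_key` is a made-up helper name, and the `chmod 600` is added because sshd commonly refuses an authorized_keys file with loose permissions.

```shell
# Append a public key to an authorized_keys file unless it is already there,
# then restrict the file to its owner.
authorize_key() {
    pub_key=$1 auth_file=$2
    touch "$auth_file"
    # -x whole-line match, -F literal string, -q quiet
    if ! grep -qxF -e "$(cat "$pub_key")" "$auth_file"; then
        cat "$pub_key" >> "$auth_file"
    fi
    chmod 600 "$auth_file"
}

# Typical use on each machine (not executed here):
#   ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa    # empty passphrase, no prompts
#   authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

Because the helper checks for an existing copy of the key first, repeating the step does not pile up duplicate lines in authorized_keys.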

4.2: Set up passwordless remote login

[root@localhost ~]# cd .ssh

[root@localhost .ssh]# ls

authorized_keys id_rsa id_rsa.pub known_hosts

[root@localhost .ssh]# scp authorized_keys root@hd1:~/.ssh/authorized_keys_from_hd0

Then, on hd1:

[root@localhost .ssh]# ls

authorized_keys authorized_keys_from_hd0 id_rsa id_rsa.pub known_hosts

[root@localhost .ssh]# cat authorized_keys_from_hd0 >>authorized_keys

[root@localhost .ssh]#

That completes passwordless login from hd0 to hd1. Passwordless login from hd0 to hd2 is set up the same way.

5: Install the JDK

6: Turn off the firewall on the servers

[root@localhost jdk1.7]# service iptables stop

iptables: Flushing firewall rules: [ OK ]

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Unloading modules: [ OK ]

[root@localhost jdk1.7]# service iptables status

iptables: Firewall is not running.

[root@localhost jdk1.7]# chkconfig iptables off

[root@localhost jdk1.7]#

Check the iptables state:

[root@localhost jdk1.7]# chkconfig --list iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@localhost jdk1.7]#

7: Upload the downloaded Hadoop package to the server

[root@localhost Hadoop_install_need]# pwd

/root/Hadoop_install_need

[root@localhost Hadoop_install_need]# ls

hadoop-2.4.1.tar.gz JDK

[root@localhost Hadoop_install_need]#

8: Extract the archive

tar: hadoop-2.4.1/share/doc/hadoop/hadoop-streaming/images/icon_success_sml.gif: Cannot open: No such file or directory

hadoop-2.4.1/share/doc/hadoop/hadoop-streaming/images/apache-maven-project-2.png

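tar: hadoop-2.4.1/share/doc/hadoop/hadoop-streaming/images/apache-maven-project-2.png: Cannot open: No such file or directory

I spent ages on this without finding the cause: the file is the same and the command is the same, so why does it run fine on the first two machines and fail on the third?

Try the two steps separately (gunzip aa.tar.gz, then tar xvf aa.tar):

[root@localhost Hadoop_install_need]# gunzip hadoop-2.4.1.tar.gz

gzip: hadoop-2.4.1.tar: No space left on device

So that is the reason: the disk is out of space. Check with df: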

[root@localhost Hadoop_install_need]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 3.9G 3.9G 0 100% /

tmpfs 495M 72K 495M 1% /dev/shm

/dev/sda1 985M 40M 895M 5% /boot

/dev/sda5 8.1G 147M 7.5G 2% /hadoop

Damn it.
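A free-space check before unpacking would have pointed at the full root filesystem straight away. The following is only a sketch: `has_free_space` is a hypothetical helper, and the 1 GB threshold in the usage comment is a guess rather than a measured requirement.

```shell
# Succeed if the filesystem holding DIR has at least NEED_KB kilobytes free.
has_free_space() {
    dir=$1 need_kb=$2
    # df -P prints one POSIX-format line per filesystem; with -k the fourth
    # column is the available space in 1024-byte blocks.
    avail_kb=$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')
    [ "$avail_kb" -ge "$need_kb" ]
}

# Example (not executed here):
# has_free_space /root/Hadoop_install_need 1048576 || echo "not enough space to extract"
```

With the df output shown above, the check would fail for any directory on / and pass for one on /hadoop.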

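While fixing the problem above I ran into a small snag, so I reinstalled all three virtual machines. The configuration is the same as before, except that this time I chose a minimal install for all of them.

Current progress: the JDK is installed and Hadoop is extracted under /hd/hdinstall. On with the rest.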

9: Turn off the firewall (as in step 6)

10: Install Hadoop 2.4.1

First, extract the archive:

[root@hd1 hdsoft]# tar -zxvf hadoop-2.4.1.tar.gz

Then copy it to:

[root@hd1 hdinstall]# pwd

/hd/hdinstall

[root@hd1 hdinstall]# ll

total 8

drwxr-xr-x. 10 67974 users 4096 Jul 21 04:45 hadoop-2.4.1

drwxr-xr-x. 8 uucp 143 4096 May 8 04:50 jdk1.7

[root@hd1 hdinstall]#

Before configuring, create the following directories on the local filesystem of hd0:

/root/dfs/name

/root/dfs/data

/root/tmp
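The three directories can be created in one go. A minimal sketch, with `make_hadoop_dirs` as a hypothetical helper name and the base directory passed in so it can be tried somewhere harmless first:

```shell
# Create the NameNode, DataNode and temp directories under a base directory.
make_hadoop_dirs() {
    base=$1
    # -p creates missing parents and does not fail if a directory already exists
    mkdir -p "$base/dfs/name" "$base/dfs/data" "$base/tmp"
}

# For the layout used in this post the base is /root (not executed here):
# make_hadoop_dirs /root
```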

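Edit the configuration files (7 in total):

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/hadoop-env.sh

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/yarn-env.sh

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/slaves

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/core-site.xml

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/hdfs-site.xml

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/mapred-site.xml

/hd/hdinstall/hadoop-2.4.1/etc/hadoop/yarn-site.xml

Some of these files do not exist by default; create them by copying the corresponding template file. Change them as follows.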
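Copying a template only when the real file is missing avoids overwriting a file that has already been edited. A sketch under that assumption, where `ensure_from_template` is a hypothetical helper (in the 2.x tarball, mapred-site.xml is the file that ships only as mapred-site.xml.template):

```shell
# Create CONF_DIR/NAME from CONF_DIR/NAME.template if NAME does not exist yet.
ensure_from_template() {
    conf_dir=$1 name=$2
    if [ ! -e "$conf_dir/$name" ] && [ -e "$conf_dir/$name.template" ]; then
        cp "$conf_dir/$name.template" "$conf_dir/$name"
    fi
}

# Example with the install path from this post (not executed here):
# ensure_from_template /hd/hdinstall/hadoop-2.4.1/etc/hadoop mapred-site.xml
```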

Configuration file 1: hadoop-env.sh

Set JAVA_HOME (export JAVA_HOME=/hd/hdinstall/jdk1.7)

Configuration file 2: yarn-env.sh

Set JAVA_HOME (export JAVA_HOME=/hd/hdinstall/jdk1.7)
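The same JAVA_HOME edit is needed in both env files, so it can be expressed once. This is a sketch, not the only way to do it: `set_java_home` is a hypothetical helper, and it assumes the path contains no `|` or `&` characters, which would confuse the sed replacement.

```shell
# Set "export JAVA_HOME=..." in FILE: rewrite an existing uncommented export
# line if there is one, otherwise append a new line.
set_java_home() {
    file=$1 java_home=$2
    if grep -q '^export JAVA_HOME=' "$file"; then
        tmp=$(mktemp)
        # "|" is the sed delimiter because the path itself contains "/"
        sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$file" > "$tmp"
        cat "$tmp" > "$file"    # write back in place, keeping the file's permissions
        rm -f "$tmp"
    else
        printf 'export JAVA_HOME=%s\n' "$java_home" >> "$file"
    fi
}

# Example with the paths from this post (not executed here):
# set_java_home /hd/hdinstall/hadoop-2.4.1/etc/hadoop/hadoop-env.sh /hd/hdinstall/jdk1.7
# set_java_home /hd/hdinstall/hadoop-2.4.1/etc/hadoop/yarn-env.sh   /hd/hdinstall/jdk1.7
```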

Configuration file 3: slaves (this file lists all the slave nodes)

[root@hd1 hadoop]# more slaves

hd1

hd2

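[root@hd1 hadoop]#

Configuration file 4: core-site.xml

[root@hd1 hadoop]# more core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hd0:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/root/tmp</value>
    <description>Abase for other temporary directories.</description>
  </property>
  <property>
    <name>hadoop.proxyuser.hduser.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hduser.groups</name>
    <value>*</value>
  </property>
</configuration>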

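[root@hd1 hadoop]#

Configuration file 5: hdfs-site.xml

[root@hd1 hadoop]# more hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>hd0:9001</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/root/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/root/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>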

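[root@hd1 hadoop]#

Configuration file 6: mapred-site.xml

[root@hd1 hadoop]# more mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hd0:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hd0:19888</value>
  </property>
</configuration>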

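[root@hd1 hadoop]#

Configuration file 7: yarn-site.xml

[root@hd1 hadoop]# more yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>hd0:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>hd0:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>hd0:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>hd0:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>hd0:8088</value>
  </property>
</configuration>

[root@hd1 hadoop]#

hd1 and hd2 are configured the same way; you can simply copy the configuration files above over to them!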
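One way to copy the files is scp over the passwordless SSH set up in step 4. The sketch below only prints the commands so they can be reviewed before being run. It assumes the edited files live on the machine you run it from and that the install path is identical on every node; `print_conf_sync` is a hypothetical helper name.

```shell
# Print (not run) one scp command per target host.
# Arguments: configuration directory, then one or more hostnames.
print_conf_sync() {
    conf_dir=$1
    shift
    for host in "$@"; do
        # Copy the directory into its own parent on the remote side
        printf 'scp -r %s root@%s:%s\n' "$conf_dir" "$host" "$(dirname "$conf_dir")"
    done
}

print_conf_sync /hd/hdinstall/hadoop-2.4.1/etc/hadoop hd1 hd2
```

Piping the output to `sh` would execute the copies once the printed commands look right.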

Configuration is done; ready to set sail.

[root@hd0 bin]# pwd

/hd/hdinstall/hadoop-2.4.1/bin

[root@hd0 bin]#

Format the namenode:

./hdfs namenode -format

Start HDFS:

../sbin/start-dfs.sh

But this failed, with the following output:

[root@hd0 bin]# ../sbin/start-dfs.sh

14/07/21 01:45:06 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /hd/hdinstall/hadoop-2.4.1/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c ', or link it with '-z noexecstack'.

hd0]

HotSpot(TM): ssh: Could not resolve hostname HotSpot(TM): Name or service not known

64-Bit: ssh: Could not resolve hostname 64-Bit: Name or service not known

sed: -e expression #1, char 6: unknown option to `s'

VM: ssh: Could not resolve hostname VM: Name or service not known

Java: ssh: Could not resolve hostname Java: Name or service not known

library: ssh: Could not resolve hostname library: Name or service not known

You: ssh: Could not resolve hostname You: Name or service not known

have: ssh: Could not resolve hostname have: Name or service not known

loaded: ssh: Could not resolve hostname loaded: Name or service not known

stack: ssh: Could not resolve hostname stack: Name or service not known

VM: ssh: Could not resolve hostname VM: Name or service not known

disabled: ssh: Could not resolve hostname disabled: Name or service not known

guard.: ssh: Could not resolve hostname guard.: Name or service not known

warning:: ssh: Could not resolve hostname warning:: Name or service not known

try: ssh: Could not resolve hostname try: Name or service not known

fix: ssh: Could not resolve hostname fix: Name or service not known

might: ssh: Could not resolve hostname might: Name or service not known

have: ssh: Could not resolve hostname have: Name or service not known

will: ssh: Could not resolve hostname will: Name or service not known

the: ssh: Could not resolve hostname the: Name or service not known

guard: ssh: Could not resolve hostname guard: Name or service not known

which: ssh: Could not resolve hostname which: Name or service not known

The: ssh: Could not resolve hostname The: Name or service not known

stack: ssh: Could not resolve hostname stack: Name or service not known

now.: ssh: Could not resolve hostname now.: Name or service not known

recommended: ssh: Could not resolve hostname recommended: Name or service not known

It's: ssh: Could not resolve hostname It's: Name or service not known

with: ssh: Could not resolve hostname with: Name or service not known

-c: Unknown cipher type 'cd'

fix: ssh: Could not resolve hostname fix: Name or service not known

or: ssh: Could not resolve hostname or: Name or service not known

to: ssh: connect to host to port 22: Connection refused

that: ssh: Could not resolve hostname that: Name or service not known

library: ssh: Could not resolve hostname library: Name or service not known

it: ssh: Could not resolve hostname it: No address associated with hostname

'execstack: ssh: Could not resolve hostname 'execstack: Name or service not known

with: ssh: Could not resolve hostname with: Name or service not known

highly: ssh: Could not resolve hostname highly: Name or service not known

The authenticity of host 'hd0 (192.168.1.205)' can't be established.

RSA key fingerprint is c0:a4:cb:1b:91:30:0f:33:82:92:9a:e9:ac:1d:ef:11.

Are you sure you want to continue connecting (yes/no)? '-z: ssh: Could not resolve hostname '-z: Name or service not known

',: ssh: Could not resolve hostname ',: Name or service not known

link: ssh: Could not resolve hostname link: No address associated with hostname

you: ssh: Could not resolve hostname you: Name or service not known

the: ssh: Could not resolve hostname the: Name or service not known

noexecstack'.: ssh: Could not resolve hostname noexecstack'.: Name or service not known

Server: ssh: Could not resolve hostname Server: Name or service not known

^Chd0: Host key verification failed.

hd1: starting datanode, logging to /hd/hdinstall/hadoop-2.4.1/logs/hadoop-root-datanode-hd1.out

hd2: starting datanode, logging to /hd/hdinstall/hadoop-2.4.1/logs/hadoop-root-datanode-hd2.out

Starting secondary namenodes [Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /hd/hdinstall/hadoop-2.4.1/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c ', or link it with '-z noexecstack'.

hd0]

sed: -e expression #1, char 6: unknown option to `s'

Java: ssh: Could not resolve hostname Java: Name or service not known

warning:: ssh: Could not resolve hostname warning:: Name or service not known

You: ssh: Could not resolve hostname You: Name or service not known

Server: ssh: Could not resolve hostname Server: Name or service not known

might: ssh: Could not resolve hostname might: Name or service not known

library: ssh: Could not resolve hostname library: Name or service not known

64-Bit: ssh: Could not resolve hostname 64-Bit: Name or service not known

VM: ssh: Could not resolve hostname VM: Name or service not known

have: ssh: Could not resolve hostname have: Name or service not known

disabled: ssh: Could not resolve hostname disabled: Name or service not known

have: ssh: Could not resolve hostname have: Name or service not known

HotSpot(TM): ssh: Could not resolve hostname HotSpot(TM): Name or service not known

which: ssh: Could not resolve hostname which: Name or service not known

guard.: ssh: Could not resolve hostname guard.: Name or service not known

will: ssh: Could not resolve hostname will: Name or service not known

loaded: ssh: Could not resolve hostname loaded: Name or service not known

guard: ssh: Could not resolve hostname guard: Name or service not known

stack: ssh: Could not resolve hostname stack: Name or service not known

stack: ssh: Could not resolve hostname stack: Name or service not known

the: ssh: Could not resolve hostname the: Name or service not known

recommended: ssh: Could not resolve hostname recommended: Name or service not known

now.: ssh: Could not resolve hostname now.: Name or service not known

The: ssh: Could not resolve hostname The: Name or service not known

VM: ssh: Could not resolve hostname VM: Name or service not known

you: ssh: Could not resolve hostname you: Name or service not known

to: ssh: connect to host to port 22: Connection refused

-c: Unknown cipher type 'cd'

try: ssh: Could not resolve hostname try: Name or service not known

It's: ssh: Could not resolve hostname It's: Name or service not known

highly: ssh: Could not resolve hostname highly: Name or service not known

fix: ssh: Could not resolve hostname fix: Name or service not known

library: ssh: Could not resolve hostname library: Name or service not known

fix: ssh: Could not resolve hostname fix: Name or service not known

The authenticity of host 'hd0 (192.168.1.205)' can't be established.

RSA key fingerprint is c0:a4:cb:1b:91:30:0f:33:82:92:9a:e9:ac:1d:ef:11.

Are you sure you want to continue connecting (yes/no)? it: ssh: Could not resolve hostname it: No address associated with hostname

that: ssh: Could not resolve hostname that: Name or service not known

or: ssh: Could not resolve hostname or: Name or service not known

with: ssh: Could not resolve hostname with: Name or service not known

the: ssh: Could not resolve hostname the: Name or service not known

with: ssh: Could not resolve hostname with: Name or service not known

',: ssh: Could not resolve hostname ',: Name or service not known

link: ssh: Could not resolve hostname link: No address associated with hostname

'-z: ssh: Could not resolve hostname '-z: Name or service not known

'execstack: ssh: Could not resolve hostname 'execstack: Name or service not known

noexecstack'.: ssh: Could not resolve hostname noexecstack'.: Name or service not known

^Chd0: Host key verification failed.

14/07/21 01:52:15 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

With Google blocked, it took half a day of searching on Baidu to solve this:

export HADOOP_PREFIX=/hd/hdinstall/hadoop-2.4.1/

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib"

Restart HDFS:

[root@hd0 sbin]# ./start-dfs.sh

14/07/21 05:01:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [hd0]

The authenticity of host 'hd0 (192.168.1.205)' can't be established.

RSA key fingerprint is c0:a4:cb:1b:91:30:0f:33:82:92:9a:e9:ac:1d:ef:11.

Are you sure you want to continue connecting (yes/no)? yes

hd0: Warning: Permanently added 'hd0,192.168.1.205' (RSA) to the list of known hosts.

hd0: starting namenode, logging to /hd/hdinstall/hadoop-2.4.1/logs/hadoop-root-namenode-hd0.out

hd1: datanode running as process 1347. Stop it first.

hd2: datanode running as process 1342. Stop it first.

Starting secondary namenodes [hd0]

hd0: starting secondarynamenode, logging to /hd/hdinstall/hadoop-2.4.1/logs/hadoop-root-secondarynamenode-hd0.out

14/07/21 05:02:06 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@hd0 sbin]#

Success.
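A plausible reading of the failed run is that the JVM stack-guard warning was captured together with the namenode hostname, and every word of it was then tried as an ssh target. The "Starting namenodes on [Java HotSpot(TM) ... hd0]" line points that way, but this is an interpretation of the log, not something the start script was inspected for. The toy function below (`list_ssh_targets`, a made-up name) shows the word-splitting effect in isolation.

```shell
# Mimic a script that treats every whitespace-separated word as a hostname.
list_ssh_targets() {
    # $1 is deliberately left unquoted so the shell splits it into words
    for host in $1; do
        printf 'ssh %s\n' "$host"
    done
}

# Clean input: only the hostname
list_ssh_targets "hd0"

# Polluted input: warning text in front of the hostname
list_ssh_targets "Java HotSpot 64-Bit Server VM warning: hd0"
```

The second call yields one "ssh" line per word, which mirrors the stream of "Could not resolve hostname" messages in the failed run.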

At this point the processes running on hd0 are: namenode, secondarynamenode

The process running on hd1 and hd2 is: datanode

hd0:

[root@hd0 sbin]# ps -ef|grep name

root 2076 1 12 05:01 ? 00:00:29 /hd/hdinstall/jdk1.7/bin/java -Dproc_namenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop-root-namenode-hd0.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.NameNode

root 2210 1 10 05:01 ? 00:00:23 /hd/hdinstall/jdk1.7/bin/java -Dproc_secondarynamenode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop-root-secondarynamenode-hd0.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS -Dhdfs.audit.logger=INFO,NullAppender -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode

root 2349 1179 0 05:05 pts/1 00:00:00 grep name

hd1:

[root@hd1 name]# ps -ef|grep data

root 1347 1 4 04:45 ? 00:01:08 /hd/hdinstall/jdk1.7/bin/java -Dproc_datanode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop-root-datanode-hd1.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -server -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.datanode.DataNode

root 1571 1183 0 05:10 pts/1 00:00:00 grep data

hd2:

[root@hd2 dfs]# ps -ef|grep data

root 1342 1 2 04:45 ? 00:01:46 /hd/hdinstall/jdk1.7/bin/java -Dproc_datanode -Xmx1000m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/hd/hdinstall/hadoop-2.4.1/logs -Dhadoop.log.file=hadoop-root-datanode-hd2.log -Dhadoop.home.dir=/hd/hdinstall/hadoop-2.4.1 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,RFA -Djava.library.path=/hd/hdinstall/hadoop-2.4.1/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -server -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=ERROR,RFAS -Dhadoop.security.logger=INFO,RFAS org.apache.hadoop.hdfs.server.datanode.DataNode

root 1605 1186 0 05:45 pts/1 00:00:00 grep data

[root@hd2 dfs]#

Start YARN: ./sbin/start-yarn.sh

[root@hd0 sbin]# ./start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /hd/hdinstall/hadoop-2.4.1//logs/yarn-root-resourcemanager-hd0.out

hd1: starting nodemanager, logging to /hd/hdinstall/hadoop-2.4.1/logs/yarn-root-nodemanager-hd1.out

hd2: starting nodemanager, logging to /hd/hdinstall/hadoop-2.4.1/logs/yarn-root-nodemanager-hd2.out

At this point the processes running on hd0 are: namenode, secondary namenode, resource manager

The processes running on hd1 and hd2 are: datanode, node manager
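Instead of reading long ps lines, the expected daemons can be checked against the output of `jps`, the JVM process lister that ships with the JDK and prints one "pid ClassName" line per Java process. A minimal sketch, with `missing_daemons` as a hypothetical helper:

```shell
# Read jps-style lines ("<pid> <ClassName>") on stdin and print the name of
# every expected daemon that is absent. Prints nothing when all are present.
missing_daemons() {
    jps_output=$(cat)
    for daemon in "$@"; do
        # Compare the class name against the whole second field
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
            printf '%s\n' "$daemon"
        fi
    done
}

# On hd0 (not executed here):
#   jps | missing_daemons NameNode SecondaryNameNode ResourceManager
# On hd1 and hd2:
#   jps | missing_daemons DataNode NodeManager
```

An empty result means every daemon expected on that node is up.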

Check the cluster status:

[root@hd0 sbin]# cd ../bin/

[root@hd0 bin]# ./hdfs dfsadmin -report

14/07/21 05:10:55 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 10321133568 (9.61 GB)

Present Capacity: 8232607744 (7.67 GB)

DFS Remaining: 8232558592 (7.67 GB)

DFS Used: 49152 (48 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 2 (2 total, 0 dead)

Live datanodes:

Name: 192.168.1.206:50010 (hd1)

Hostname: hd1

Decommission Status : Normal

Configured Capacity: 5160566784 (4.81 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 1044275200 (995.90 MB)

DFS Remaining: 4116267008 (3.83 GB)

DFS Used%: 0.00%

DFS Remaining%: 79.76%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Mon Jul 21 05:10:59 CST 2014

Name: 192.168.1.207:50010 (hd2)

Hostname: hd2

Decommission Status : Normal

Configured Capacity: 5160566784 (4.81 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 1044250624 (995.88 MB)

DFS Remaining: 4116291584 (3.83 GB)

DFS Used%: 0.00%

DFS Remaining%: 79.76%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Last contact: Mon Jul 21 05:11:02 CST 2014

View HDFS in a browser: http://192.168.1.205:50070
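The dfsadmin report can also be checked from a script rather than by eye. The sketch below pulls the live DataNode count out of the "Datanodes available:" line. The pattern matches the wording of the 2.4.1 report shown above; other Hadoop versions may phrase that line differently. `live_datanodes` is a hypothetical helper name.

```shell
# Extract the live DataNode count from `hdfs dfsadmin -report` output on stdin.
live_datanodes() {
    sed -n 's/^Datanodes available: \([0-9][0-9]*\) .*/\1/p'
}

# Example, expecting the two DataNodes of this cluster (not executed here):
# [ "$(./hdfs dfsadmin -report 2>/dev/null | live_datanodes)" -eq 2 ] || echo "a DataNode is missing"
```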

-- To be continued