The Raft Distributed Consensus Algorithm

Contents

- 1 Raft in detail

- 2 Raft in practice

- 2.1 The Raft implementation in Nacos

- 2.2 Hazelcast

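In an earlier post on how ZooKeeper works and on reading the Apache ZooKeeper source, I covered ZooKeeper's leader election together with the Paxos distributed consensus algorithm. Paxos is widely regarded as obscure and hard to understand. In this post we look at another distributed consensus algorithm: Raft.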

1 Raft in detail

Raft is a consensus algorithm built on log replication. According to the Raft paper, it produces a result equivalent to Paxos while being simpler and easier to understand.

If you plan to study Raft, I strongly recommend reading the paper:

In Search of an Understandable Consensus Algorithm

If reading English is a struggle, a Chinese translation is available on GitHub:

https://github.com/brandonwang001/raft_translation

There is also an animated walkthrough of how Raft works (strongly recommended, watch it at least once):

Raft Understandable Distributed Consensus

My own summary:

In Raft, every node is in one of three states: Follower, Candidate, or Leader.

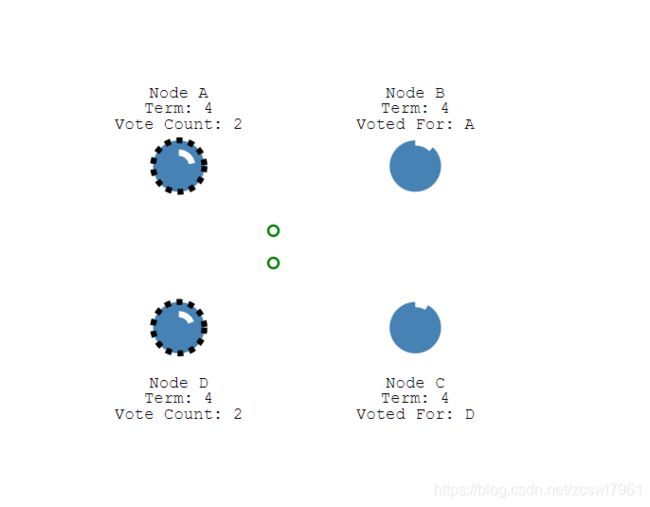

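Leader election:

Initially every node is a Follower. If a Follower receives no heartbeat from a Leader within a certain period (the election timeout), it becomes a Candidate.

A Candidate then asks the other nodes to vote for it as Leader. If it receives votes from a majority of the nodes, it becomes the Leader.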
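The transitions between the three states can be sketched as a tiny state machine. This is only an illustration under simplifying assumptions (terms and logs are ignored); the class `RaftRoleSketch` and its method names are hypothetical and not taken from any Raft implementation.

```java
// Hypothetical sketch of the three Raft roles and the transitions between them.
class RaftRoleSketch {
    enum Role { FOLLOWER, CANDIDATE, LEADER }

    private Role role = Role.FOLLOWER; // every node starts as a follower
    private final int clusterSize;

    RaftRoleSketch(int clusterSize) {
        this.clusterSize = clusterSize;
    }

    Role role() {
        return role;
    }

    // No heartbeat arrived within the election timeout: start campaigning.
    void onElectionTimeout() {
        if (role != Role.LEADER) {
            role = Role.CANDIDATE;
        }
    }

    // votes = votes collected so far, including the candidate's own vote.
    void onVotesCounted(int votes) {
        if (role == Role.CANDIDATE && votes > clusterSize / 2) {
            role = Role.LEADER;
        }
    }

    // A heartbeat from a legitimate leader (simplified: any leader) demotes the node.
    void onLeaderHeartbeat() {
        role = Role.FOLLOWER;
    }
}
```

In a five-node cluster, for example, a candidate stays a candidate with two votes and becomes leader once it has three.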

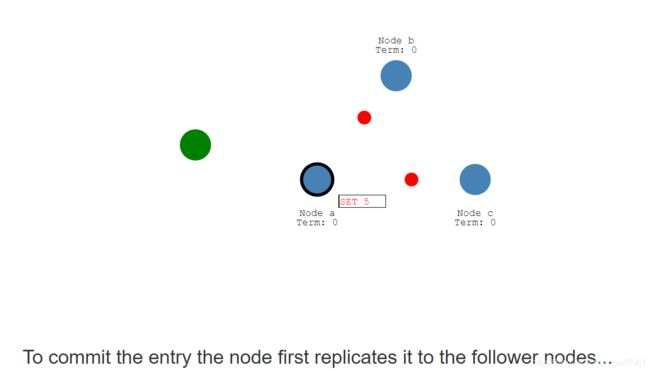

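Log replication:

Once a Leader has been elected, every change to the data goes through the Leader.

Each change is first appended to the Leader's log as an uncommitted entry. The Leader then sends replication requests to the Followers. Once the entry has been written to the logs of a majority of the nodes, it is considered committed and the Leader applies the change to its own state.

The Leader then notifies the Followers, which apply the entry from their logs to their own state. At that point the replication round is complete.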
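The "replicated on a majority" rule can be made concrete with a small calculation. The sketch below assumes the leader tracks, for every node including itself, the highest log index known to be stored there; `CommitIndexSketch` and `matchIndex` are illustrative names. Real Raft additionally requires the entry to belong to the leader's current term, which this sketch leaves out.

```java
import java.util.Arrays;

// Hypothetical sketch: derive the commit index from per-node replication progress.
class CommitIndexSketch {
    // matchIndex[i] = highest log index known to be stored on node i (leader included).
    static int commitIndex(int[] matchIndex) {
        int[] sorted = matchIndex.clone();
        Arrays.sort(sorted); // ascending order
        int majority = sorted.length / 2 + 1;
        // At least `majority` nodes hold an index >= the value at this position.
        return sorted[sorted.length - majority];
    }
}
```

With progress `{5, 5, 3, 2, 1}` on five nodes, index 3 is the highest entry stored on three nodes, so everything up to index 3 can be committed.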

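So far we have only seen Raft on the happy path. Real-world conditions are usually far messier.

A Follower that becomes a Candidate after receiving no heartbeat within the election timeout may be in one of several situations: the system has not elected a Leader yet, the elected Leader has crashed, or a network failure has cut the Follower off from the Leader.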

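- 1. When a Follower receives no heartbeat from the Leader within the election timeout, it first becomes a Candidate and starts a new Term (election term) locally

- 2. The Candidate votes for itself

- 3. It sends RequestVote RPCs to all other nodes in parallel

- 4. It waits for their replies

- If a receiving node has not yet voted in this Term, it votes for the Candidate that sent the RequestVote RPC

- A node that becomes Leader sends heartbeats at a fixed interval (the heartbeat timeout)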

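During an election, a Follower must respect the following rules:

- In any given Term, each Follower can vote only once

- The Candidate requesting the vote must not know less than the Follower, that is, its log must be at least as up-to-date as the Follower's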
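The two voting rules can be sketched as a single check on the voter's side. This is a minimal illustration that follows the RequestVote rules in the Raft paper; the class `VoteRuleSketch` and its field names are hypothetical.

```java
// Hypothetical sketch of how a node decides whether to grant a vote.
class VoteRuleSketch {
    long currentTerm;
    String votedFor;   // null means no vote has been cast in currentTerm
    long lastLogTerm;  // term of the last entry in the local log
    long lastLogIndex; // index of the last entry in the local log

    VoteRuleSketch(long currentTerm, long lastLogTerm, long lastLogIndex) {
        this.currentTerm = currentTerm;
        this.lastLogTerm = lastLogTerm;
        this.lastLogIndex = lastLogIndex;
    }

    boolean handleRequestVote(String candidateId, long term,
                              long candidateLastLogTerm, long candidateLastLogIndex) {
        if (term < currentTerm) {
            return false; // stale candidate
        }
        if (term > currentTerm) {
            currentTerm = term; // a newer term starts with a fresh vote
            votedFor = null;
        }
        // Rule 2: the candidate's log must be at least as up-to-date as ours.
        boolean logUpToDate = candidateLastLogTerm > lastLogTerm
            || (candidateLastLogTerm == lastLogTerm && candidateLastLogIndex >= lastLogIndex);
        // Rule 1: at most one vote per term (re-granting to the same candidate is harmless).
        boolean canVote = votedFor == null || votedFor.equals(candidateId);
        if (logUpToDate && canVote) {
            votedFor = candidateId;
            return true;
        }
        return false;
    }
}
```

A second candidate asking in the same term is refused even if its log is longer, because the vote for that term is already spent.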

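How does Raft deal with two Candidates appearing at the same time and splitting the vote evenly?

By giving every node a randomized election timeout, and by running an odd number of nodes in the cluster. If a split vote still happens, no Candidate wins, the Candidates time out again, and a new election starts in a new Term.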
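A randomized election timeout is easy to sketch. The 150 to 300 ms range below is the one suggested in the Raft paper; the class and method names are hypothetical.

```java
import java.util.Random;

// Hypothetical sketch: each node draws its own election timeout at random.
class ElectionTimeoutSketch {
    static final long MIN_MS = 150;
    static final long MAX_MS = 300;

    // Returns a timeout in the inclusive range [MIN_MS, MAX_MS].
    static long nextTimeoutMs(Random random) {
        return MIN_MS + random.nextInt((int) (MAX_MS - MIN_MS + 1));
    }
}
```

Because each node draws a different value, one node usually times out first, requests votes, and wins before the others even become Candidates.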

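What happens when the Leader receives a client request, writes it to its log as a Log Entry, and then crashes?

This is a node failure inside the consensus cluster. Raft keeps the election timeout short (randomized between 150 ms and 300 ms) so that a new Leader is confirmed quickly. When the Leader dies after accepting a request, the remaining nodes elect a new Leader in a new Term. If the old Leader later restarts, it receives heartbeats from the new Leader, adopts the newer Term, and brings its log in line with the new Leader's Log Entries.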

2 Raft in practice

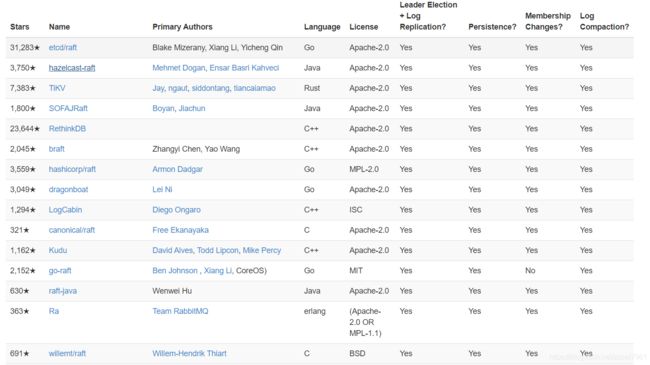

Raft has been implemented many times over in a wide range of languages. raft.github.io keeps a list of these implementations together with their GitHub star counts.

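2.1 The Raft implementation in Nacos

Nacos uses Raft to provide a consistent data service when it is deployed as a distributed cluster.

When a service registers with the Nacos server through the REST API, InstanceController#register runs the registration logic and eventually calls consistencyService.put(key, instances); to persist the registration.

consistencyService looks at the ephemeral field of the Instance being registered to choose between PersistentConsistencyService (Raft) and EphemeralConsistencyService (Distro).

Every consistency implementation in Nacos implements the ConsistencyService interface, which provides the basic methods put, remove, get, listen, unlisten, and isAvailable: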

/**

* Put a data related to a key to Nacos cluster

*

* @param key key of data, this key should be globally unique

* @param value value of data

* @throws NacosException

* @see

*/

void put(String key, Record value) throws NacosException;

/**

* Remove a data from Nacos cluster

*

* @param key key of data

* @throws NacosException

*/

void remove(String key) throws NacosException;

/**

* Get a data from Nacos cluster

*

* @param key key of data

* @return data related to the key

* @throws NacosException

*/

Datum get(String key) throws NacosException;

/**

* Listen for changes of a data

*

* @param key key of data

* @param listener callback of data change

* @throws NacosException

*/

void listen(String key, RecordListener listener) throws NacosException;

/**

* Cancel listening of a data

*

* @param key key of data

* @param listener callback of data change

* @throws NacosException

*/

void unlisten(String key, RecordListener listener) throws NacosException;

/**

* Tell the status of this consistency service

*

* @return true if available

*/

boolean isAvailable();
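To get a feel for the shape of this contract, here is a toy single-process store with the same six operations. It is not Nacos code: `InMemoryStoreSketch` is a hypothetical class, values are plain strings instead of Record/Datum, and listeners are plain `BiConsumer` callbacks. There is no replication at all, only the put/notify flow.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.BiConsumer;

// Hypothetical, non-replicated stand-in for a key/value service with change listeners.
class InMemoryStoreSketch {
    private final Map<String, String> data = new ConcurrentHashMap<>();
    private final Map<String, List<BiConsumer<String, String>>> listeners = new ConcurrentHashMap<>();

    void put(String key, String value) {
        data.put(key, value);
        notifyListeners(key, value);
    }

    void remove(String key) {
        data.remove(key);
        notifyListeners(key, null); // null signals deletion in this sketch
    }

    String get(String key) {
        return data.get(key);
    }

    void listen(String key, BiConsumer<String, String> listener) {
        listeners.computeIfAbsent(key, k -> new CopyOnWriteArrayList<>()).add(listener);
    }

    void unlisten(String key, BiConsumer<String, String> listener) {
        List<BiConsumer<String, String>> registered = listeners.get(key);
        if (registered != null) {
            registered.remove(listener);
        }
    }

    boolean isAvailable() {
        return true; // a single-process store is always available
    }

    private void notifyListeners(String key, String value) {
        List<BiConsumer<String, String>> registered = listeners.get(key);
        if (registered == null) {
            return;
        }
        for (BiConsumer<String, String> listener : registered) {
            listener.accept(key, value);
        }
    }
}
```

A Raft-backed implementation would have to replicate each put to a majority of peers before notifying listeners, which is the part the rest of this section walks through.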

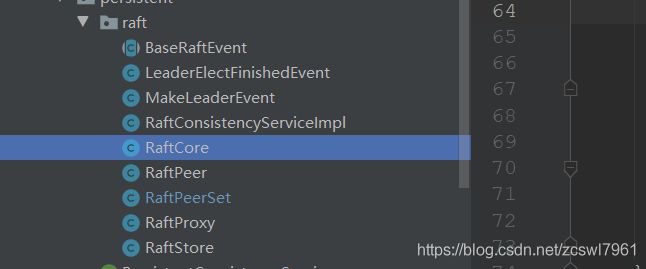

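Nacos also provides RaftController (com.alibaba.nacos.naming.controllers.RaftController), a REST API used for data exchange and heartbeats between the Leader and the other nodes.

The core classes of the Nacos Raft implementation:

- RaftCore: the core implementation of the Raft algorithm

- RaftPeer: represents a Raft node; its fields describe a single node

- RaftPeerSet: all the RaftPeer objects known to the current node

- RaftStore: utility for persisting data to files on disk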

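Several key member fields of RaftCore:

/**

* Registered record listeners

*/

private volatile Map<String, List<RecordListener>> listeners = new ConcurrentHashMap<>();

/**

* Data currently persisted

*/

private volatile ConcurrentMap<String, Datum> datums = new ConcurrentHashMap<>();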

/**

* Information about the peer nodes known to the current node

*/

@Autowired

private RaftPeerSet peers;

/**

* Key configuration properties of the naming module

*/

@Autowired

private SwitchDomain switchDomain;

/**

* Global configuration

*/

@Autowired

private GlobalConfig globalConfig;

/**

* Forwards requests to the Leader

*/

@Autowired

private RaftProxy raftProxy;

/**

* Class that persists node data to disk

*/

@Autowired

private RaftStore raftStore;

Now look at RaftCore's init() method, which is annotated with @PostConstruct. It registers two tasks: MasterElection (leader election) and HeartBeat (heartbeat).

Leader election:

public void run() {

try {

if (!peers.isReady()) {

return;

}

RaftPeer local = peers.local();

local.leaderDueMs -= GlobalExecutor.TICK_PERIOD_MS;

if (local.leaderDueMs > 0) {

return;

}

// reset timeout

local.resetLeaderDue();

local.resetHeartbeatDue();

sendVote();

} catch (Exception e) {

Loggers.RAFT.warn("[RAFT] error while master election {}", e);

}

}

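The election task is run by a thread pool. On each run it first checks that peers, the set of all cluster nodes known to the current node, is in the ready state.

The ready flag is updated by onChangeServerList in RaftPeerSet. Looking at the RaftPeerSet class, we can see that it depends on the serverListManager bean: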

@Component

@DependsOn("serverListManager")

public class RaftPeerSet implements ServerChangeListener, ApplicationContextAware {

....

}

After ServerListManager starts, its init() method runs first:

@PostConstruct

public void init() {

GlobalExecutor.registerServerListUpdater(new ServerListUpdater());

GlobalExecutor.registerServerStatusReporter(new ServerStatusReporter(), 5000);

}

init registers two scheduled tasks, ServerListUpdater and ServerStatusReporter.

ServerListUpdater first reads the cluster node addresses configured in the current node's cluster.conf:

@Override

public void run() {

try {

List refreshedServers = refreshServerList();

List oldServers = servers;

if (CollectionUtils.isEmpty(refreshedServers)) {

Loggers.RAFT.warn("refresh server list failed, ignore it.");

return;

}

boolean changed = false;

List newServers = (List) CollectionUtils.subtract(refreshedServers, oldServers);

if (CollectionUtils.isNotEmpty(newServers)) {

servers.addAll(newServers);

changed = true;

Loggers.RAFT.info("server list is updated, new: {} servers: {}", newServers.size(), newServers);

}

List deadServers = (List) CollectionUtils.subtract(oldServers, refreshedServers);

if (CollectionUtils.isNotEmpty(deadServers)) {

servers.removeAll(deadServers);

changed = true;

Loggers.RAFT.info("server list is updated, dead: {}, servers: {}", deadServers.size(), deadServers);

}

if (changed) {

notifyListeners();

}

} catch (Exception e) {

Loggers.RAFT.info("error while updating server list.", e);

}

}
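The diffing step in this task boils down to two set differences. The sketch below shows the same idea with plain JDK collections instead of CollectionUtils.subtract; servers are represented as strings and the class `ServerListDiffSketch` is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: work out which servers joined and which disappeared.
class ServerListDiffSketch {
    // Servers present in the refreshed list but not in the old one.
    static List<String> added(List<String> oldServers, List<String> refreshedServers) {
        List<String> result = new ArrayList<>(refreshedServers);
        result.removeAll(oldServers);
        return result;
    }

    // Servers present in the old list but missing from the refreshed one.
    static List<String> removed(List<String> oldServers, List<String> refreshedServers) {
        List<String> result = new ArrayList<>(oldServers);
        result.removeAll(refreshedServers);
        return result;
    }

    // Listeners only need to be notified when either difference is non-empty.
    static boolean changed(List<String> oldServers, List<String> refreshedServers) {
        return !added(oldServers, refreshedServers).isEmpty()
            || !removed(oldServers, refreshedServers).isEmpty();
    }
}
```

Copying the input lists before calling removeAll keeps the caller's lists untouched, which matters if the old list is shared with other threads.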

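The list is obtained through refreshServerList(). When the configured server nodes differ from the in-memory servers list, notifyListeners() is called, which eventually triggers RaftPeerSet's onChangeServerList method: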

@Override

public void onChangeServerList(List<Server> latestMembers) {

Map<String, RaftPeer> tmpPeers = new HashMap<>(8);

for (Server member : latestMembers) {

if (peers.containsKey(member.getKey())) {

tmpPeers.put(member.getKey(), peers.get(member.getKey()));

continue;

}

RaftPeer raftPeer = new RaftPeer();

raftPeer.ip = member.getKey();

// first time meet the local server:

if (NetUtils.localServer().equals(member.getKey())) {

raftPeer.term.set(localTerm.get());

}

tmpPeers.put(member.getKey(), raftPeer);

}

// replace raft peer set:

peers = tmpPeers;

if (RunningConfig.getServerPort() > 0) {

ready = true;

}

Loggers.RAFT.info("raft peers changed: " + latestMembers);

}

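This method calls RunningConfig.getServerPort() and sets ready to true only when the port of the running server is greater than 0.

The serverPort value is itself set in onApplicationEvent

(onApplicationEvent fires after the application has started, which is later than @PostConstruct).

So we can be sure that by the time a node takes part in leader election, it has fully started.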

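Back to the run method of RaftCore#MasterElection.

After the peers.isReady() check, each run decrements the local RaftPeer's leaderDueMs by GlobalExecutor.TICK_PERIOD_MS. When the value drops to 0 or below, the Follower has not received a heartbeat in time, so it becomes a Candidate and starts a vote. The randomness that lowers the chance of a tied vote presumably comes from the value restored by resetLeaderDue(), whose body is not shown here.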
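A tick-driven countdown of this kind can be sketched as follows: a fixed amount is subtracted on every scheduled run, and the counter is reset to a base timeout plus a random offset. The constants are placeholder assumptions, not values read from the Nacos source, and `LeaderDueTimerSketch` is a hypothetical class.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical sketch of a countdown that triggers an election when it expires.
class LeaderDueTimerSketch {
    static final long TICK_PERIOD_MS = 500;     // assumed scheduling period
    static final long BASE_TIMEOUT_MS = 15_000; // assumed base election timeout
    static final long RANDOM_MS = 5_000;        // assumed width of the random offset

    long leaderDueMs;

    LeaderDueTimerSketch() {
        reset();
    }

    // Called whenever a heartbeat or a valid vote request arrives.
    void reset() {
        leaderDueMs = BASE_TIMEOUT_MS + ThreadLocalRandom.current().nextLong(RANDOM_MS);
    }

    // Called once per scheduler tick; returns true when an election should start.
    boolean tick() {
        leaderDueMs -= TICK_PERIOD_MS;
        if (leaderDueMs > 0) {
            return false;
        }
        reset();
        return true;
    }
}
```

The random offset spreads the nodes' expiry times apart, so two nodes are unlikely to start campaigning on the same tick.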

From the source we can see where a RaftPeer's leaderDueMs is reset:

- 1. Before sending out a vote

- 2. When receiving a vote, in receivedVote(RaftPeer remote)

public void sendVote() {

RaftPeer local = peers.get(NetUtils.localServer());

Loggers.RAFT.info("leader timeout, start voting,leader: {}, term: {}",

JSON.toJSONString(getLeader()), local.term);

peers.reset();

local.term.incrementAndGet();

local.voteFor = local.ip;

local.state = RaftPeer.State.CANDIDATE;

Map params = new HashMap<>(1);

params.put("vote", JSON.toJSONString(local));

for (final String server : peers.allServersWithoutMySelf()) {

final String url = buildURL(server, API_VOTE);

try {

HttpClient.asyncHttpPost(url, null, params, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("NACOS-RAFT vote failed: {}, url: {}", response.getResponseBody(), url);

return 1;

}

RaftPeer peer = JSON.parseObject(response.getResponseBody(), RaftPeer.class);

Loggers.RAFT.info("received approve from peer: {}", JSON.toJSONString(peer));

peers.decideLeader(peer);

return 0;

}

});

} catch (Exception e) {

Loggers.RAFT.warn("error while sending vote to server: {}", server);

}

}

}

}

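A node in the Candidate state starts a vote by first incrementing its own Term to open a new round. It then uses HttpClient to call the vote API on each of the peers it knows about. When the call returns 200, it processes the returned voter information and tallies the votes: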

peers.decideLeader(peer);

public RaftPeer decideLeader(RaftPeer candidate) {

peers.put(candidate.ip, candidate);

SortedBag ips = new TreeBag();

int maxApproveCount = 0;

String maxApprovePeer = null;

for (RaftPeer peer : peers.values()) {

if (StringUtils.isEmpty(peer.voteFor)) {

continue;

}

ips.add(peer.voteFor);

if (ips.getCount(peer.voteFor) > maxApproveCount) {

maxApproveCount = ips.getCount(peer.voteFor);

maxApprovePeer = peer.voteFor;

}

}

if (maxApproveCount >= majorityCount()) {

RaftPeer peer = peers.get(maxApprovePeer);

peer.state = RaftPeer.State.LEADER;

if (!Objects.equals(leader, peer)) {

leader = peer;

applicationContext.publishEvent(new LeaderElectFinishedEvent(this, leader));

Loggers.RAFT.info("{} has become the LEADER", leader.ip);

}

}

return leader;

}

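This method first records the returned voter's information, then counts the votes across all known peers and checks whether the highest vote count has reached majorityCount():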

public int majorityCount() {

return peers.size() / 2 + 1;

}

If it has, the peer with the most votes is considered to have won a majority in the local tally and becomes the Leader.
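The tally can be reproduced in a few lines with a plain HashMap instead of a SortedBag. This is a simplified sketch: peers are reduced to a map from peer address to the address it voted for, and `VoteTallySketch` is a hypothetical class.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of counting votes and checking for a majority.
class VoteTallySketch {
    static int majorityCount(int peerCount) {
        return peerCount / 2 + 1;
    }

    // votes maps each peer to the peer it voted for (null or empty if it has not voted).
    // Returns the winner, or null when nobody has reached a majority yet.
    static String decideLeader(Map<String, String> votes) {
        Map<String, Integer> counts = new HashMap<>();
        String best = null;
        int bestCount = 0;
        for (String votedFor : votes.values()) {
            if (votedFor == null || votedFor.isEmpty()) {
                continue; // this peer has not voted
            }
            int count = counts.merge(votedFor, 1, Integer::sum);
            if (count > bestCount) {
                bestCount = count;
                best = votedFor;
            }
        }
        return bestCount >= majorityCount(votes.size()) ? best : null;
    }
}
```

Note that the majority is computed over all known peers, not only over those that have answered, so a three-node cluster needs two votes even while one node is unreachable.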

Data replication in Nacos is driven by HeartBeat. Here is HeartBeat's run method:

public void run() {

try {

if (!peers.isReady()) {

return;

}

RaftPeer local = peers.local();

local.heartbeatDueMs -= GlobalExecutor.TICK_PERIOD_MS;

if (local.heartbeatDueMs > 0) {

return;

}

local.resetHeartbeatDue();

sendBeat();

} catch (Exception e) {

Loggers.RAFT.warn("[RAFT] error while sending beat {}", e);

}

}

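Likewise, before the Leader sends a heartbeat it checks that the RaftPeerSet is in the ready state. The node keeps a heartbeatDueMs counter that is decremented on every scheduled run. Once heartbeatDueMs drops to 0 or below, a heartbeat is sent and heartbeatDueMs is reset.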

public void sendBeat() throws IOException, InterruptedException {

RaftPeer local = peers.local();

if (local.state != RaftPeer.State.LEADER && !STANDALONE_MODE) {

return;

}

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("[RAFT] send beat with {} keys.", datums.size());

}

local.resetLeaderDue();

// build data

JSONObject packet = new JSONObject();

packet.put("peer", local);

JSONArray array = new JSONArray();

if (switchDomain.isSendBeatOnly()) {

Loggers.RAFT.info("[SEND-BEAT-ONLY] {}", String.valueOf(switchDomain.isSendBeatOnly()));

}

if (!switchDomain.isSendBeatOnly()) {

for (Datum datum : datums.values()) {

JSONObject element = new JSONObject();

if (KeyBuilder.matchServiceMetaKey(datum.key)) {

element.put("key", KeyBuilder.briefServiceMetaKey(datum.key));

} else if (KeyBuilder.matchInstanceListKey(datum.key)) {

element.put("key", KeyBuilder.briefInstanceListkey(datum.key));

}

element.put("timestamp", datum.timestamp);

array.add(element);

}

}

packet.put("datums", array);

// broadcast

Map params = new HashMap(1);

params.put("beat", JSON.toJSONString(packet));

String content = JSON.toJSONString(params);

ByteArrayOutputStream out = new ByteArrayOutputStream();

GZIPOutputStream gzip = new GZIPOutputStream(out);

gzip.write(content.getBytes(StandardCharsets.UTF_8));

gzip.close();

byte[] compressedBytes = out.toByteArray();

String compressedContent = new String(compressedBytes, StandardCharsets.UTF_8);

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("raw beat data size: {}, size of compressed data: {}",

content.length(), compressedContent.length());

}

for (final String server : peers.allServersWithoutMySelf()) {

try {

final String url = buildURL(server, API_BEAT);

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("send beat to server " + server);

}

HttpClient.asyncHttpPostLarge(url, null, compressedBytes, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("NACOS-RAFT beat failed: {}, peer: {}",

response.getResponseBody(), server);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

return 1;

}

peers.update(JSON.parseObject(response.getResponseBody(), RaftPeer.class));

if (Loggers.RAFT.isDebugEnabled()) {

Loggers.RAFT.debug("receive beat response from: {}", url);

}

return 0;

}

@Override

public void onThrowable(Throwable t) {

Loggers.RAFT.error("NACOS-RAFT error while sending heart-beat to peer: {} {}", server, t);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

}

});

} catch (Exception e) {

Loggers.RAFT.error("error while sending heart-beat to peer: {} {}", server, e);

MetricsMonitor.getLeaderSendBeatFailedException().increment();

}

}

}

}

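The heartbeat logic is straightforward. Roughly:

- Send a heartbeat only if the current node is the Leader (or the server runs in standalone mode)

- Reset the local election countdown leaderDueMs

- Attach the datum keys and timestamps, unless switchDomain.isSendBeatOnly() is true

- Compress the payload with gzip and send it to every other peer through HttpClient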
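The compression step uses the JDK's GZIPOutputStream. Here is a small round-trip sketch of that step on its own, including the matching decompression a receiver would need. `BeatCompressionSketch` is a hypothetical helper, not a Nacos class, and the payload in the usage note is made up.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical helper: gzip a JSON string into bytes and back.
class BeatCompressionSketch {
    static byte[] compress(String content) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        // Closing the gzip stream flushes the trailer, so close before reading the bytes.
        try (GZIPOutputStream gzip = new GZIPOutputStream(out)) {
            gzip.write(content.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    static String decompress(byte[] compressed) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPInputStream gzip = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            byte[] buffer = new byte[4096];
            int read;
            while ((read = gzip.read(buffer)) != -1) {
                out.write(buffer, 0, read);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

For example, `decompress(compress("{\"beat\":\"...\"}"))` returns the original string. The compressed bytes are binary and should be sent as bytes; turning them into a String is only safe for rough size logging.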

For a node that receives a vote request, the handling is not complicated either:

public RaftPeer receivedVote(RaftPeer remote) {

if (!peers.contains(remote)) {

throw new IllegalStateException("can not find peer: " + remote.ip);

}

RaftPeer local = peers.get(NetUtils.localServer());

if (remote.term.get() <= local.term.get()) {

String msg = "received illegitimate vote" +

", voter-term:" + remote.term + ", votee-term:" + local.term;

Loggers.RAFT.info(msg);

if (StringUtils.isEmpty(local.voteFor)) {

local.voteFor = local.ip;

}

return local;

}

local.resetLeaderDue();

local.state = RaftPeer.State.FOLLOWER;

local.voteFor = remote.ip;

local.term.set(remote.term.get());

Loggers.RAFT.info("vote {} as leader, term: {}", remote.ip, remote.term);

return local;

}

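1. Get the local node's RaftPeer

2. If the requester's Term is less than or equal to the local Term, the vote request is illegitimate (for example, the original Leader went down, came back some time later, and started a vote). The local node votes for itself if it has not voted yet, and returns

3. Otherwise, reset the election countdown leaderDueMs

4. Switch the local node to Follower and record voteFor and term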

When a node receives a heartbeat:

public RaftPeer makeLeader(RaftPeer candidate) {

// If the locally known leader differs from the sender, switch to the sender so this node syncs the latest data

if (!Objects.equals(leader, candidate)) {

leader = candidate;

applicationContext.publishEvent(new MakeLeaderEvent(this, leader));

Loggers.RAFT.info("{} has become the LEADER, local: {}, leader: {}",

leader.ip, JSON.toJSONString(local()), JSON.toJSONString(leader));

}

// Walk through the peers known to this node

for (final RaftPeer peer : peers.values()) {

Map params = new HashMap<>(1);

// Another peer is still recorded as LEADER locally: query it right away so its state gets refreshed

if (!Objects.equals(peer, candidate) && peer.state == RaftPeer.State.LEADER) {

try {

String url = RaftCore.buildURL(peer.ip, RaftCore.API_GET_PEER);

HttpClient.asyncHttpGet(url, null, params, new AsyncCompletionHandler() {

@Override

public Integer onCompleted(Response response) throws Exception {

if (response.getStatusCode() != HttpURLConnection.HTTP_OK) {

Loggers.RAFT.error("[NACOS-RAFT] get peer failed: {}, peer: {}",

response.getResponseBody(), peer.ip);

peer.state = RaftPeer.State.FOLLOWER;

return 1;

}

update(JSON.parseObject(response.getResponseBody(), RaftPeer.class));

return 0;

}

});

} catch (Exception e) {

peer.state = RaftPeer.State.FOLLOWER;

Loggers.RAFT.error("[NACOS-RAFT] error while getting peer from peer: {}", peer.ip);

}

}

}

return update(candidate);

}

2.2 Hazelcast

To be explored...