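OpenGL.Shader: Zhige Teaches You to Write a Filter Live-Streaming Client (11) Visual Filters: Gaussian Blur Revisited, Reducing the Dimensionality of the Convolution Kernel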


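1. Why Optimization Is Needed

Why revisit Gaussian filtering? Because it is widely used in practice, and it often calls for fairly large convolution kernels (for example 5x5, 7x7, or 9x9; note that the sizes are always odd). With the simple Gaussian filter implementation introduced earlier, a large kernel drives up GPU memory usage and the program's performance becomes unsatisfactory. That is the background for this article. So how do we optimize? Exploiting the separability of convolution is one way to approach this problem, and combining multiple shaders in OpenGL.Shader lets us implement the optimization effectively.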

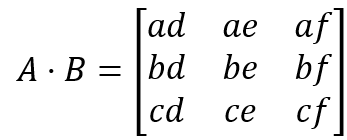

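Let us first briefly introduce convolution separability. Suppose A is a column vector and B is a row vector; then A*B = B*A. The figure illustrates the case n = 3.

Based on this, the Gaussian kernel Kernel2D discussed earlier can be written as KernelX·KernelY, so the convolution can be expressed as Dst = Src*Kernel2D = (Src*KernelX)*KernelY = (Src*KernelY)*KernelX. In general, for any convolution filter, as long as its kernel can be decomposed into the product of a column vector and a row vector, the convolution is separable. The process can also be understood as reducing a two-dimensional kernel to one-dimensional kernels.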
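The identity above can be checked on the CPU with a small sketch. Everything here (the `Image` alias and the function names `convolve2D`, `convolve1D`, `convolveSeparable`, `maxAbsDiff`) is hypothetical and not part of the article's project; borders are clamped to imitate GL_CLAMP_TO_EDGE, and the kernel is applied without flipping, which makes no difference for a symmetric kernel such as a Gaussian.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Image = std::vector<std::vector<double>>;

// Clamp an index into [0, n-1], imitating GL_CLAMP_TO_EDGE at the borders.
static int clampIndex(int i, int n) {
    return i < 0 ? 0 : (i >= n ? n - 1 : i);
}

// Direct 2D filtering with the outer-product kernel k[dy] * k[dx].
Image convolve2D(const Image& src, const std::vector<double>& k) {
    assert(!src.empty() && k.size() % 2 == 1);
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    const int r = static_cast<int>(k.size()) / 2;
    Image dst(h, std::vector<double>(w, 0.0));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (int dy = -r; dy <= r; ++dy)
                for (int dx = -r; dx <= r; ++dx)
                    dst[y][x] += src[clampIndex(y + dy, h)][clampIndex(x + dx, w)]
                                 * k[dy + r] * k[dx + r];
    return dst;
}

// One 1D pass: horizontal == true filters along x, otherwise along y.
Image convolve1D(const Image& src, const std::vector<double>& k, bool horizontal) {
    assert(!src.empty() && k.size() % 2 == 1);
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    const int r = static_cast<int>(k.size()) / 2;
    Image dst(h, std::vector<double>(w, 0.0));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (int d = -r; d <= r; ++d) {
                const int sy = horizontal ? y : clampIndex(y + d, h);
                const int sx = horizontal ? clampIndex(x + d, w) : x;
                dst[y][x] += src[sy][sx] * k[d + r];
            }
    return dst;
}

// Two-pass version: (Src * KernelX) * KernelY.
Image convolveSeparable(const Image& src, const std::vector<double>& k) {
    return convolve1D(convolve1D(src, k, true), k, false);
}

// Largest absolute per-pixel difference between two equally sized images.
double maxAbsDiff(const Image& a, const Image& b) {
    double m = 0.0;
    for (std::size_t y = 0; y < a.size(); ++y)
        for (std::size_t x = 0; x < a[y].size(); ++x)
            m = std::max(m, std::fabs(a[y][x] - b[y][x]));
    return m;
}
```

Calling `maxAbsDiff(convolve2D(src, k), convolveSeparable(src, k))` on any small image should give a value at the level of floating-point rounding, which is the CPU counterpart of rendering the X pass into an FBO and then running the Y pass on that result.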

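2. Reducing the Kernel Dimension with FBOs

The idea is clear, so how do we implement the dimension-reduction optimization? We can use FBO off-screen rendering: first process the Src*KernelX part and save the result to FBO1, then use FBO1 as the input and apply KernelY to produce the final output. GPUImage uses the same idea to optimize other complex convolutions and to merge filters. Our core class modeled on it is GpuBaseFilterGroup.hpp, and the code is as follows: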

#ifndef GPU_FILTER_GROUP_HPP
#define GPU_FILTER_GROUP_HPP

#include <vector>
#include "GpuBaseFilter.hpp"

class GpuBaseFilterGroup : public GpuBaseFilter {
    // GpuBaseFilter virtual methods
public:
    GpuBaseFilterGroup()
    {
        mFBO_IDs = NULL;
        mFBO_TextureIDs = NULL;
        mFBO_Count = 0;
    }

    virtual void onOutputSizeChanged(int width, int height) {
        if (mFilterList.empty()) return;
        destroyFrameBufferObjs();
        std::vector<GpuBaseFilter>::iterator itr;
        for (itr = mFilterList.begin(); itr != mFilterList.end(); itr++)
        {
            // Use a reference so the size change reaches the stored filter, not a copy.
            GpuBaseFilter& filter = *itr;
            filter.onOutputSizeChanged(width, height);
        }
        createFrameBufferObjs(width, height);
    }

    virtual void destroy() {
        destroyFrameBufferObjs();
        std::vector<GpuBaseFilter>::iterator itr;
        for (itr = mFilterList.begin(); itr != mFilterList.end(); itr++)
        {
            GpuBaseFilter& filter = *itr;
            filter.destroy();
        }
        mFilterList.clear();
        GpuBaseFilter::destroy();
    }

private:
    void createFrameBufferObjs(int _width, int _height) {
        // The last draw goes to the screen, so it needs no FBO.
        const int num = static_cast<int>(mFilterList.size()) - 1;
        if (num <= 0) return;
        mFBO_Count = num;
        mFBO_IDs = new GLuint[num];
        mFBO_TextureIDs = new GLuint[num];
        glGenFramebuffers(num, mFBO_IDs);
        glGenTextures(num, mFBO_TextureIDs);
        for (int i = 0; i < num; i++) {
            glBindTexture(GL_TEXTURE_2D, mFBO_TextureIDs[i]);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE); // GL_REPEAT
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE); // GL_REPEAT
            glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA16F, _width, _height, 0, GL_RGBA, GL_FLOAT, 0);
            glBindFramebuffer(GL_FRAMEBUFFER, mFBO_IDs[i]);
            glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, mFBO_TextureIDs[i], 0);
            glBindTexture(GL_TEXTURE_2D, 0);
            glBindFramebuffer(GL_FRAMEBUFFER, 0);
        }
    }

    void destroyFrameBufferObjs() {
        // Delete exactly as many objects as were created. sizeof on a pointer
        // parameter cannot recover the array length, so the count is stored.
        if (mFBO_TextureIDs != NULL) {
            glDeleteTextures(mFBO_Count, mFBO_TextureIDs);
            delete[] mFBO_TextureIDs;
            mFBO_TextureIDs = NULL;
        }
        if (mFBO_IDs != NULL) {
            glDeleteFramebuffers(mFBO_Count, mFBO_IDs);
            delete[] mFBO_IDs;
            mFBO_IDs = NULL;
        }
        mFBO_Count = 0;
    }

public:
    std::vector<GpuBaseFilter> mFilterList;
    void addFilter(GpuBaseFilter filter) {
        mFilterList.push_back(filter);
    }
    GLuint* mFBO_IDs;
    GLuint* mFBO_TextureIDs;
    int mFBO_Count; // number of FBO/texture pairs currently allocated
};

#endif // GPU_FILTER_GROUP_HPP

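The code is clearly organized and easy to follow. It inherits from GpuBaseFilter mainly to stay compatible with existing code that holds filters through a base-class reference, and then overrides the base methods. The key part is onOutputSizeChanged, so let's look at it on its own: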

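virtual void onOutputSizeChanged(int width, int height) {
    if (mFilterList.empty()) return;
    // If the filter list is empty there is nothing to do.
    destroyFrameBufferObjs();
    // Destroy the previously created FBOs and the textures bound to them.
    std::vector<GpuBaseFilter>::iterator itr;
    for (itr = mFilterList.begin(); itr != mFilterList.end(); itr++)
    {
        // Use a reference so the size change reaches the stored filter, not a copy.
        GpuBaseFilter& filter = *itr;
        filter.onOutputSizeChanged(width, height);
    }
    // Forward the size change to every filter in the list.
    createFrameBufferObjs(width, height);
    // Create the FBOs and their bound textures for the new size.
}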

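Next comes the private method createFrameBufferObjs; see the listing above for the code. One point to note is that the number of FBOs created is mFilterList.size() - 1, because the last pass renders the final image to the screen.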
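The size() - 1 rule can be made concrete with a small planning sketch that involves no GL calls. `PassPlan`, `planPasses`, and `requiredFboCount` are hypothetical names used only for illustration; they are not part of GpuBaseFilterGroup.

```cpp
#include <cassert>
#include <vector>

// Hypothetical description of one render pass in a filter chain.
struct PassPlan {
    int inputFbo;   // FBO texture index to read from, or -1 for the original source
    int outputFbo;  // FBO index to render into, or -1 for the screen
};

// Every pass except the last renders into its own FBO; each pass after the
// first reads the texture written by the pass before it.
std::vector<PassPlan> planPasses(int filterCount) {
    assert(filterCount >= 0);
    std::vector<PassPlan> plan;
    for (int i = 0; i < filterCount; ++i) {
        const bool isLast = (i == filterCount - 1);
        PassPlan p;
        p.inputFbo = (i == 0) ? -1 : i - 1;
        p.outputFbo = isLast ? -1 : i;
        plan.push_back(p);
    }
    return plan;
}

// Number of intermediate FBOs such a chain needs.
int requiredFboCount(int filterCount) {
    return filterCount > 0 ? filterCount - 1 : 0;
}
```

For the two-pass Gaussian blur, `planPasses(2)` yields a first pass that reads the source and writes FBO 0, and a second pass that reads FBO 0 and writes to the screen, so a single FBO is enough.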

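3. GpuGaussianBlurFilter2

The last step, and the most complex one, is reworking our Gaussian filter implementation. Let's start with the shader code, beginning with the vertex shader: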

attribute vec4 position;
attribute vec4 inputTextureCoordinate;
const int GAUSSIAN_SAMPLES = 9;
uniform float widthFactor;
uniform float heightFactor;
varying vec2 blurCoordinates[GAUSSIAN_SAMPLES];

void main()
{
    gl_Position = position;
    vec2 singleStepOffset = vec2(widthFactor, heightFactor);
    int multiplier = 0;
    vec2 blurStep;
    for (int i = 0; i < GAUSSIAN_SAMPLES; i++)
    {
        multiplier = (i - ((GAUSSIAN_SAMPLES - 1) / 2));
        // -4,-3,-2,-1,0,1,2,3,4
        blurStep = float(multiplier) * singleStepOffset;
        blurCoordinates[i] = inputTextureCoordinate.xy + blurStep;
    }
}

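Centered on the current vertex, the shader outputs the coordinates of nine sample points. On first reading, singleStepOffset may raise a question: previously we passed in the width factor and the height factor together, which would place the nine sample points along a 45° diagonal. We will not explain this yet; a detailed explanation follows later. Next, the fragment shader: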

uniform sampler2D SamplerY;
uniform sampler2D SamplerU;
uniform sampler2D SamplerV;
uniform sampler2D SamplerRGB;

mat3 colorConversionMatrix = mat3(
    1.0, 1.0, 1.0,
    0.0, -0.39465, 2.03211,
    1.13983, -0.58060, 0.0);

vec3 yuv2rgb(vec2 pos)
{
    vec3 yuv;
    yuv.x = texture2D(SamplerY, pos).r;
    yuv.y = texture2D(SamplerU, pos).r - 0.5;
    yuv.z = texture2D(SamplerV, pos).r - 0.5;
    return colorConversionMatrix * yuv;
}

uniform int drawMode; // 0 = YUV, 1 = RGB
const int GAUSSIAN_SAMPLES = 9;
varying vec2 blurCoordinates[GAUSSIAN_SAMPLES];

void main()
{
    vec3 fragmentColor = vec3(0.0);
    if (drawMode==0)
    {
        fragmentColor += (yuv2rgb(blurCoordinates[0]) *0.05);
        fragmentColor += (yuv2rgb(blurCoordinates[1]) *0.09);
        fragmentColor += (yuv2rgb(blurCoordinates[2]) *0.12);
        fragmentColor += (yuv2rgb(blurCoordinates[3]) *0.15);
        fragmentColor += (yuv2rgb(blurCoordinates[4]) *0.18);
        fragmentColor += (yuv2rgb(blurCoordinates[5]) *0.15);
        fragmentColor += (yuv2rgb(blurCoordinates[6]) *0.12);
        fragmentColor += (yuv2rgb(blurCoordinates[7]) *0.09);
        fragmentColor += (yuv2rgb(blurCoordinates[8]) *0.05);
        gl_FragColor = vec4(fragmentColor, 1.0);
    }
    else
    {
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[0]).rgb *0.05);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[1]).rgb *0.09);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[2]).rgb *0.12);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[3]).rgb *0.15);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[4]).rgb *0.18);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[5]).rgb *0.15);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[6]).rgb *0.12);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[7]).rgb *0.09);
        fragmentColor += (texture2D(SamplerRGB, blurCoordinates[8]).rgb *0.05);
        gl_FragColor = vec4(fragmentColor, 1.0);
    }
}

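It looks complicated, with both YUV and RGB in play, but GpuBaseFilter was designed from the start to support both modes; I was simply lazy earlier and did not write it out in full. Written out, the logic is simple: sample the texture color at the nine coordinates, then perform the convolution. The Gaussian kernel matrix is gone too, reduced to nine weight coefficients. Note that these nine coefficients are not arbitrary: they are generated from the standard Gaussian formula and have been normalized, so the nine coefficients sum to exactly one.

So why can't I be lazy here and write only one drawMode, and how should we understand the 45° tilt of the sample coordinates caused by singleStepOffset in the vertex shader? Read on.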
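As a rough illustration of how such a table of weights can be produced, here is a minimal sketch. The function name `gaussianWeights` is hypothetical, and the article does not state which sigma its coefficients were derived from or how they were rounded, so this is not expected to reproduce the shader's values digit for digit.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Sample exp(-x^2 / (2 * sigma^2)) at integer offsets around the center tap,
// then divide by the total so the weights sum to one.
std::vector<double> gaussianWeights(int taps, double sigma) {
    assert(taps > 0 && taps % 2 == 1 && sigma > 0.0);
    const int r = taps / 2;
    std::vector<double> w(taps);
    double sum = 0.0;
    for (int i = 0; i < taps; ++i) {
        const double x = static_cast<double>(i - r);
        w[i] = std::exp(-(x * x) / (2.0 * sigma * sigma));
        sum += w[i];
    }
    for (double& v : w) v /= sum;  // normalize
    return w;
}
```

Normalization matters because weights that sum to more or less than one would brighten or darken the image on every pass, and the separable blur applies them twice.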

class GpuGaussianBlurFilter2 : public GpuBaseFilterGroup {
    GpuGaussianBlurFilter2()
    {
        GAUSSIAN_BLUR_VERTEX_SHADER = "...";
        GAUSSIAN_BLUR_FRAGMENT_SHADER = "..."; // the shader code shown above
    }

    ~GpuGaussianBlurFilter2()
    {
        if(!GAUSSIAN_BLUR_VERTEX_SHADER.empty()) GAUSSIAN_BLUR_VERTEX_SHADER.clear();
        if(!GAUSSIAN_BLUR_FRAGMENT_SHADER.empty()) GAUSSIAN_BLUR_FRAGMENT_SHADER.clear();
    }

    void init() {
        GpuBaseFilter filter1;
        filter1.init(GAUSSIAN_BLUR_VERTEX_SHADER.c_str(), GAUSSIAN_BLUR_FRAGMENT_SHADER.c_str());
        mWidthFactorLocation1 = glGetUniformLocation(filter1.getProgram(), "widthFactor");
        mHeightFactorLocation1 = glGetUniformLocation(filter1.getProgram(), "heightFactor");
        mDrawModeLocation1 = glGetUniformLocation(filter1.getProgram(), "drawMode");
        addFilter(filter1);

        GpuBaseFilter filter2;
        filter2.init(GAUSSIAN_BLUR_VERTEX_SHADER.c_str(), GAUSSIAN_BLUR_FRAGMENT_SHADER.c_str());
        mWidthFactorLocation2 = glGetUniformLocation(filter2.getProgram(), "widthFactor");
        mHeightFactorLocation2 = glGetUniformLocation(filter2.getProgram(), "heightFactor");
        mDrawModeLocation2 = glGetUniformLocation(filter2.getProgram(), "drawMode");
        addFilter(filter2);
    }
    ... ...
};

Next, look at the override of the no-argument init() method, which comes from the parent's parent (GpuBaseFilter, via GpuBaseFilterGroup); overriding it keeps management and call sites uniform. The content is not difficult: it creates two filter objects that use the same pair of shaders, but take care to keep the two sets of uniform locations apart.

class GpuGaussianBlurFilter2 : public GpuBaseFilterGroup {
    // ... ... continued from above
public:
    void onOutputSizeChanged(int width, int height) {
        GpuBaseFilterGroup::onOutputSizeChanged(width, height);
    }

    void setAdjustEffect(float percent) {
        mSampleOffset = range(percent * 100.0f, 0.0f, 2.0f);
    }
};

Next is another override of a method from the parent's parent (GpuBaseFilter, via GpuBaseFilterGroup): onOutputSizeChanged. It needs no special handling and simply reuses the logic of the parent class GpuBaseFilterGroup (see Section 2 above).

class GpuGaussianBlurFilter2 : public GpuBaseFilterGroup {
    // ... ... continued from above
public:
    void onDraw(GLuint SamplerY_texId, GLuint SamplerU_texId, GLuint SamplerV_texId,
                void* positionCords, void* textureCords)
    {
        if (mFilterList.size()==0) return;
        GLuint previousTexture = 0;
        int size = mFilterList.size();
        for (int i = 0; i < size; i++) {
            GpuBaseFilter filter = mFilterList[i];
            bool isNotLast = i < size - 1;
            if (isNotLast) {
                glBindFramebuffer(GL_FRAMEBUFFER, mFBO_IDs[i]);
            }
            glClearColor(0, 0, 0, 0);
            if (i == 0) {
                drawFilter1YUV(filter, SamplerY_texId, SamplerU_texId, SamplerV_texId, positionCords, textureCords);
            }
            if (i == 1) { // isNotLast == false: no FBO is bound, so this draws to the screen.
                drawFilter2RGB(filter, previousTexture, positionCords, mNormalTextureCords);
            }
            if (isNotLast) {
                glBindFramebuffer(GL_FRAMEBUFFER, 0);
                previousTexture = mFBO_TextureIDs[i];
            }
        }
    }
};

Now for the key rendering method, onDraw. It overrides the grandparent class GpuBaseFilter and is the common entry point shared by all filters. The logic is a simplified version of what GPUImage does. There is not much to say about it, because this onDraw is specific to GpuGaussianBlurFilter2 and is not general-purpose; it just follows the optimized Gaussian filter logic described in Section 1.

When i==0, we first perform the off-screen rendering of src*kernelX. Let's look inside drawFilter1YUV.

class GpuGaussianBlurFilter2 : public GpuBaseFilterGroup {
    // ... ... continued from above
private:
    void drawFilter1YUV(GpuBaseFilter filter,
                        GLuint SamplerY_texId, GLuint SamplerU_texId, GLuint SamplerV_texId,
                        void* positionCords, void* textureCords)
    {
        if (!filter.isInitialized())
            return;
        glUseProgram(filter.getProgram());
        glUniform1i(mDrawModeLocation1, 0);
        //glUniform1f(mSampleOffsetLocation1, mSampleOffset);
        glUniform1f(mWidthFactorLocation1, mSampleOffset / filter.mOutputWidth);
        glUniform1f(mHeightFactorLocation1, 0);

        glVertexAttribPointer(filter.mGLAttribPosition, 2, GL_FLOAT, GL_FALSE, 0, positionCords);
        glEnableVertexAttribArray(filter.mGLAttribPosition);
        glVertexAttribPointer(filter.mGLAttribTextureCoordinate, 2, GL_FLOAT, GL_FALSE, 0, textureCords);
        glEnableVertexAttribArray(filter.mGLAttribTextureCoordinate);

        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_2D, SamplerY_texId);
        glUniform1i(filter.mGLUniformSampleY, 0);
        glActiveTexture(GL_TEXTURE1);
        glBindTexture(GL_TEXTURE_2D, SamplerU_texId);
        glUniform1i(filter.mGLUniformSampleU, 1);
        glActiveTexture(GL_TEXTURE2);
        glBindTexture(GL_TEXTURE_2D, SamplerV_texId);
        glUniform1i(filter.mGLUniformSampleV, 2);

        glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);
        glDisableVertexAttribArray(filter.mGLAttribPosition);
        glDisableVertexAttribArray(filter.mGLAttribTextureCoordinate);
        glBindTexture(GL_TEXTURE_2D, 0);
    }
};

Pay close attention here: all of the common shader handles (attribute and uniform locations) come from the filter that is passed in; only the three special uniform locations (drawMode, widthFactor, heightFactor) are handled separately. When i==0, we first perform the off-screen rendering of src*kernelX. This first pass samples the raw YUV video data, so it must use drawMode=0, the YUV mode. Then widthFactor is set to mSampleOffset / output width, which serves as the width factor in the vertex shader. But heightFactor is set to 0! That means there is no vertical offset in this pass, so the vertex shader no longer produces the 45° staircase of sample offsets, and the off-screen rendering of src*kernelX is complete.

Striking while the iron is hot: when i==1 we are in the last iteration of the loop, so no off-screen rendering is needed and the output goes straight to the screen. Note that previousTexture holds the id of the texture bound to the FBO used when i==0, which carries the result of the i==0 pass, that is, src*kernelX. We take it as the input and run drawFilter2RGB.
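To see the effect of zeroing one factor, the vertex shader loop can be mirrored on the CPU. This is a minimal sketch; `blurOffsets` is a hypothetical helper for illustration only and does not exist in the project.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// CPU mirror of the vertex shader loop: offset i is
// (i - (samples - 1) / 2) * (widthFactor, heightFactor).
std::vector<std::pair<float, float>> blurOffsets(int samples,
                                                 float widthFactor,
                                                 float heightFactor) {
    assert(samples > 0 && samples % 2 == 1);
    std::vector<std::pair<float, float>> offsets;
    for (int i = 0; i < samples; ++i) {
        const int multiplier = i - (samples - 1) / 2;  // -4 .. 4 when samples == 9
        offsets.push_back(std::make_pair(multiplier * widthFactor,
                                         multiplier * heightFactor));
    }
    return offsets;
}
```

With a nonzero widthFactor and a heightFactor of 0, every offset has a zero y component, so the nine taps lie on one horizontal line (the KernelX pass). Swapping the two arguments gives the vertical KernelY pass, and passing both as nonzero gives the diagonal pattern discussed earlier.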

class GpuGaussianBlurFilter2 : public GpuBaseFilterGroup {
    // ... ... continued from above
private:
    void drawFilter2RGB(GpuBaseFilter filter, GLuint _texId, void* positionCords, void* textureCords)
    {
        if (!filter.isInitialized())
            return;
        glUseProgram(filter.getProgram());
        glUniform1i(mDrawModeLocation2, 1);
        //glUniform1f(mSampleOffsetLocation2, mSampleOffset);
        glUniform1f(mWidthFactorLocation2, 0);
        glUniform1f(mHeightFactorLocation2, mSampleOffset / filter.mOutputHeight);

        glVertexAttribPointer(filter.mGLAttribPosition, 2, GL_FLOAT, GL_FALSE, 0, positionCords);
        glEnableVertexAttribArray(filter.mGLAttribPosition);
        glVertexAttribPointer(filter.mGLAttribTextureCoordinate, 2, GL_FLOAT, GL_FALSE, 0, textureCords);
        glEnableVertexAttribArray(filter.mGLAttribTextureCoordinate);

        glActiveTexture(GL_TEXTURE3);
        glBindTexture(GL_TEXTURE_2D, _texId);
        glUniform1i(filter.mGLUniformSampleRGB, 3);

        glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);
        glDisableVertexAttribArray(filter.mGLAttribPosition);
        glDisableVertexAttribArray(filter.mGLAttribTextureCoordinate);
        glBindTexture(GL_TEXTURE_2D, 0);
    }
};

Again, the same three uniform locations get special handling. This time the input is an RGB texture, so drawMode is set to 1, the RGB mode; widthFactor is 0 and heightFactor is mSampleOffset / output height, which completes the last step, (src*kernelX)*kernelY.

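4. Summary

For a final test, go to GpuFilterRender::checkFilterChange and replace the reference to GpuGaussianBlurFilter with GpuGaussianBlurFilter2. Comparing the two, you will find that the blur from version 2 is more pronounced. That is because GpuGaussianBlurFilter uses only a simple 3x3 Gaussian kernel, while GpuGaussianBlurFilter2 produces the result of a 9x9 kernel. Although version 2 looks like it does more computation than version 1, a look at GPU memory usage shows it dropped by nearly half, and performance improved noticeably.

This article covers not only the dimension-reduction optimization of the convolution kernel but also a multi-shader, multi-pass rendering approach. Could that be a basis for combining several filters into one effect? The code is available at: https://github.com/MrZhaozhirong/NativeCppApp /src/main/cpp/gpufilter/filter/GpuGaussianBlurFilter2.hpp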
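One way to see why the two-pass form scales well is to count texture reads per output pixel. The sketch below only counts samples; it says nothing about memory usage, and the function names are hypothetical.

```cpp
#include <cassert>

// Texture reads per output pixel for a direct k x k kernel.
int samplesDirect(int k) {
    assert(k > 0 && k % 2 == 1);
    return k * k;
}

// Texture reads per output pixel for two 1D passes of k taps each.
int samplesSeparable(int k) {
    assert(k > 0 && k % 2 == 1);
    return 2 * k;
}
```

For k = 9 this gives 81 reads for the direct form against 18 for the separable form, while the earlier direct 3x3 filter needs 9. So version 2 does sample more than version 1, but far less than a direct 9x9 kernel would.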