scrapy框架下爬取51job网站信息,并存储到表格中

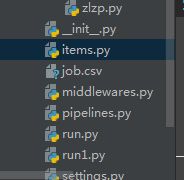

1. 通过命令创建项目

scrapy startproject JobSpider

2. 用pycharm打开项目

3. 通过命令创建爬虫

scrapy genspider job baidu.com

scrapy startproject JobSpider

2. 用pycharm打开项目

3. 通过命令创建爬虫

scrapy genspider job baidu.com

4. 配置settings

robots_obey=False

Download_delay=0.5

Cookie_enable=FalseDOWNLOADER_MIDDLEWARES = {

'JobSpider.middlewares.JobUserAgentMiddleware': 543,

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None

}"""调用Pipeline中自己写的类"""

ITEM_PIPELINES = {

'JobSpider.pipelines.ToCsvPipeline': 300,

}可以直接粘现成的

# 自己添加的获取useragent类

class JobUserAgentMiddleware(object):

"""This middleware allows spiders to override the user_agent"""

def __init__(self, user_agent='Scrapy', name=''):

self.user_agent = UserAgent()

@classmethod

def from_crawler(cls, crawler):

# o = cls(crawler.settings['USER_AGENT'],'ZhangSAN)

o = cls()

# cls后的数据会自动赋值给构造函数的对应参数

# crawler.signals.connect(o.spider_opened, signal=signals.spider_opened)

return o

# 这个函数不能删,否则会报错

def spider_opened(self, spider):

# 等号右边代码的含义是 从spider中获得user_agent的属性

# 如果没有默认为self.user_agent的内容

# self.user_agent = getattr(spider, 'user_agent', self.user_agent)

pass

def process_request(self, request, spider):

if self.user_agent:

# b 转换为二进制,不能改

request.headers.setdefault(b'User-Agent', self.user_agent.random)

6. 开始解析数据

在Terminal终端里面,创建文件 scrapy genspider job baidu.com

2) 函数1跳转到函数2使用 yield scrapy.Request(url,callback,meta,dont_filter)

# -*- coding: utf-8 -*-

import scrapy

from ..items import JobspiderItem

# --老师--51job数据获取方法一

class JobSpider(scrapy.Spider):

name = 'job'

allowed_domains = ['51job.com']

start_urls = [

'http://search.51job.com/list/010000%252C020000%252C030200%252C040000,000000,0000,00,9,99,python,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=',

'http://search.51job.com/list/010000%252C020000%252C030200%252C040000,000000,0000,00,9,99,php,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=',

'http://search.51job.com/list/010000%252C020000%252C030200%252C040000,000000,0000,00,9,99,html,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=',

]

def parse(self, response):

yield scrapy.Request(

url=response.url,

callback=self.parse_job_info,

meta={},

dont_filter=True

)

def parse_next_page(self, response):

"""

解析下一页

:param response:

:return:

"""

next_page = response.xpath("//li[@class='bk'][2]/a/@href").extract_first('')

if next_page:

yield scrapy.Request(

url=next_page,

callback=self.parse_job_info,

meta={},

dont_filter=True

)

"""

递归:如果一个函数内部自己调用自己

这种形式就叫做递归

"""

def parse_job_info(self, response):

"""

解析工作信息

:param response:

:return:

"""

job_div_list = response.xpath("//div[@id='resultList']/div[@class='el']")

for job_div in job_div_list:

job_name = job_div.xpath("p/span/a/@title").extract_first('无工作名称').strip().replace(",", "/")

job_company_name = job_div.xpath("span[@class='t2']/a/@title").extract_first('无公司名称').strip()

job_place = job_div.xpath("span[@class='t3']/text()").extract_first('无地点名称').strip()

job_salary = job_div.xpath("span[@class='t4']/text()").extract_first('面议').strip()

job_time = job_div.xpath("span[@class='t5']/text()").extract_first('无时间信息').strip()

job_type = '51job' if '51job.com' in response.url else '其它'

print(job_type, job_name, job_company_name, job_place, job_salary, job_time)

"""

数据清洗:负责清除数据两端的空格,空行,特殊符号等

常用操作一般是strip

包括清除无效数据,例如数据格式不完整的数据

以及重复的数据

"""

item = JobspiderItem()

item['job_name'] = job_name

item['job_company_name'] = job_company_name

item['job_place'] = job_place

item['job_salary'] = job_salary

item['job_time'] = job_time

item['job_type'] = job_type

item['fan_kui_lv'] = "没有反馈率"

yield item

yield scrapy.Request(

url=response.url,

callback=self.parse_next_page,

dont_filter=True,

)

7. 将数据封装到items,记得yield item

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class JobspiderItem(scrapy.Item):

# define the fields for your item here like:

job_name = scrapy.Field()

job_company_name = scrapy.Field()

job_place = scrapy.Field()

job_salary = scrapy.Field()

job_time = scrapy.Field()

job_type = scrapy.Field()

fan_kui_lv = scrapy.Field()

8. 自定义pipelines将数据存储到数据库/文件中

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

"""

Pipeline:俗称管道,用于接收爬虫返回的item数据

"""

class JobspiderPipeline(object):

def process_item(self, item, spider):

return item

"""

以下代码 在settings.py中调用

"""

class ToCsvPipeline(object):

def process_item(self, item, spider):

with open("job.csv", "a", encoding="gb18030") as f:

job_name = item['job_name']

job_company_name = item['job_company_name']

job_place = item['job_place']

job_salary = item['job_salary']

job_time = item['job_time']

job_type = item['job_type']

fan_kui_lv = item['fan_kui_lv']

job_info = [job_name, job_company_name, job_place, job_salary, job_time, job_type, fan_kui_lv, "\n"]

# 逗号,换格

f.write(",".join(job_info))

# 把item传递给下一个Pipeline做处理

return item

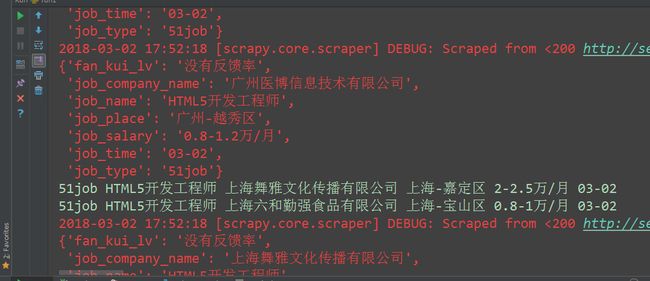

9.执行结果如下:

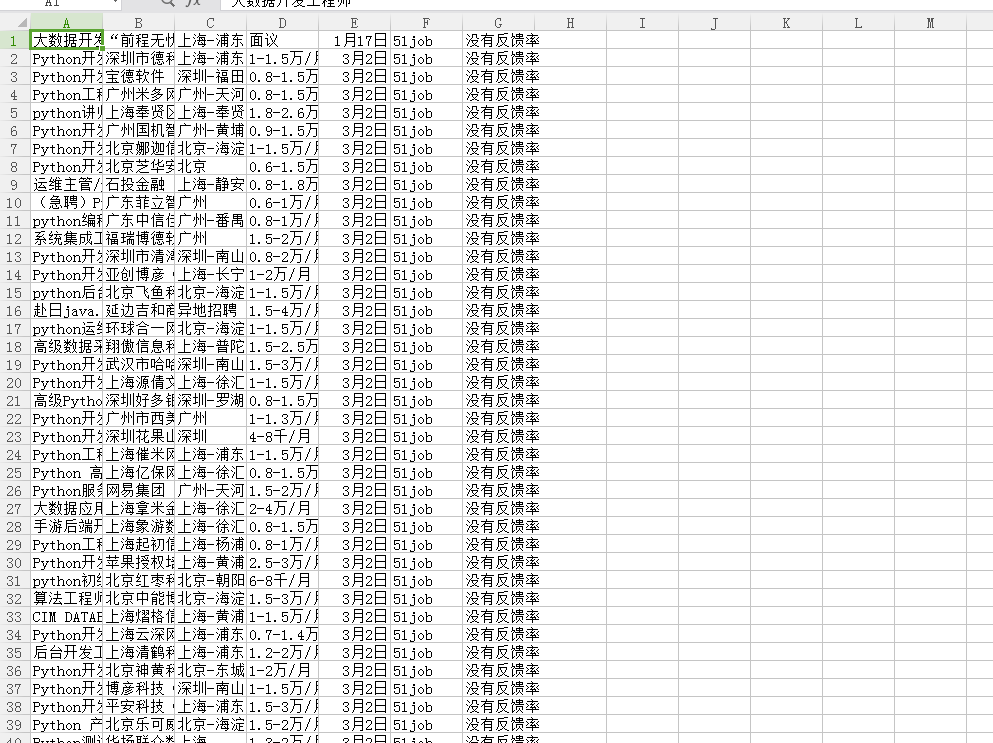

生成表格: