33. Distributed Log Collection with Spring Cloud

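A microservice project usually consists of several separate services, so its logs need to be collected in one place. A common choice is the ELK stack (Elasticsearch + Logstash + Kibana): Logstash receives the logs, Elasticsearch stores and indexes the data, and Kibana displays it.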

(1) Open the firewall ports

firewall-cmd --zone=public --add-port=5601/tcp --permanent

firewall-cmd --zone=public --add-port=9200/tcp --permanent

firewall-cmd --zone=public --add-port=5044/tcp --permanent

firewall-cmd --zone=public --add-port=9600/tcp --permanent

firewall-cmd --reload

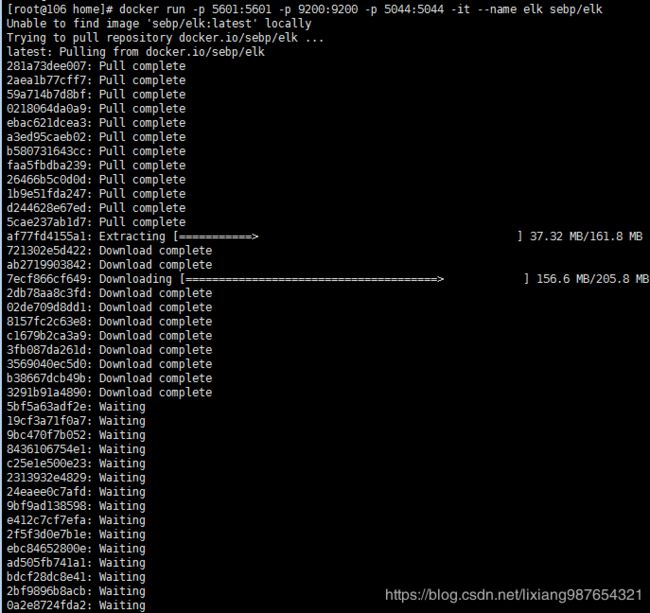
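As a quick check that the rules above took effect, the open ports can be compared against the ones ELK needs. This is a minimal sketch: missing_ports is a hypothetical helper (not part of firewalld), and it assumes the port list is space-separated in the port/tcp form that firewall-cmd --list-ports prints.

```shell
# Hypothetical helper: print every required TCP port that is absent from a
# space-separated list such as "5601/tcp 9200/tcp".
missing_ports() {
  open_ports="$1"
  shift
  for port in "$@"; do
    case " $open_ports " in
      *" $port/tcp "*) ;;              # port is already open
      *) printf '%s\n' "$port" ;;      # port is missing
    esac
  done
}

# Usage on the ELK host (prints nothing when all four ports are open):
#   missing_ports "$(firewall-cmd --zone=public --list-ports)" 5601 9200 5044 9600
```

An empty result means nothing is missing; any port that is printed still needs an --add-port rule followed by a reload.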

(2) Run ELK

ELK is run from a ready-made Docker image:

docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -it --name elk sebp/elk

Result: the container fails to start with the following error:

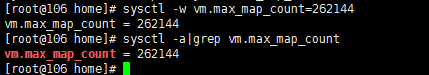

Solution:

Switch to the root user and run:

sysctl -w vm.max_map_count=262144

Check the result:

sysctl -a|grep vm.max_map_count

A change made this way is lost when the virtual machine restarts, so also add the following line at the end of /etc/sysctl.conf:

vm.max_map_count=262144
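The line can also be added from the shell instead of an editor. The sketch below uses a hypothetical helper, ensure_line, that appends the setting only when the exact line is not already in the file. It does not detect an existing entry with a different value, so check the file by hand in that case.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so running it twice does not duplicate the setting.
ensure_line() {
  file="$1"
  line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as root); sysctl -p reloads /etc/sysctl.conf without a reboot:
#   ensure_line /etc/sysctl.conf "vm.max_map_count=262144"
#   sysctl -p
```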

Reboot, remove the old container with docker rm followed by the container ID shown in the error message, and start it again:

docker run -p 5601:5601 -p 9200:9200 -p 5044:5044 -it --name elk sebp/elk

After waiting a few minutes, the result is:

Port description:

5601 - Kibana web interface

9200 - Elasticsearch JSON interface

5044 - Logstash log receiving interface
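Because the container needs a few minutes before these ports answer, a small retry loop can replace waiting by hand. This is a sketch under assumptions: retry is a hypothetical helper, and the attempt count, delay and localhost address in the usage line are illustrative values rather than settings from this setup.

```shell
# Hypothetical helper: run a command until it succeeds, at most max_tries
# times, sleeping delay seconds between attempts.
retry() {
  max_tries="$1"
  delay="$2"
  shift 2
  attempt=1
  while ! "$@"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      return 1                          # give up after the last attempt
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}

# Usage: poll the Elasticsearch JSON interface every 10 seconds, up to 30 times:
#   retry 30 10 curl -fsS -o /dev/null http://localhost:9200 && echo "Elasticsearch is up"
```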

(3) Modify the configuration

List the Docker containers:

docker ps

Enter the container:

docker exec -it 689da25080c0 sh

Test:

(1) Run the command: /opt/logstash/bin/logstash -e 'input { stdin { } } output { elasticsearch { hosts => ["localhost"] } }'

If you see the error message: Logstash could not be started because there is already another instance using the configured data directory. If you wish to run multiple instances, you must change the "path.data" setting.

run the command: service logstash stop

and then run the Logstash command again.

(2) Once the command is running and you see the message Successfully started Logstash API endpoint {:port=>9600},

type: this is a dummy entry and press Enter to simulate a log entry for testing.

(3) Open a browser and enter: http://

In this setup the address is: http://192.168.12.106:9200/_search?pretty

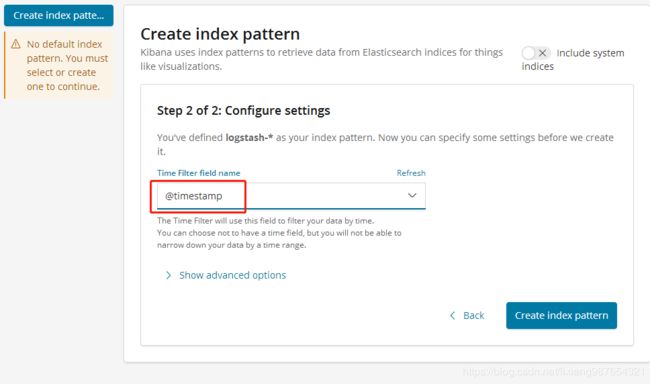

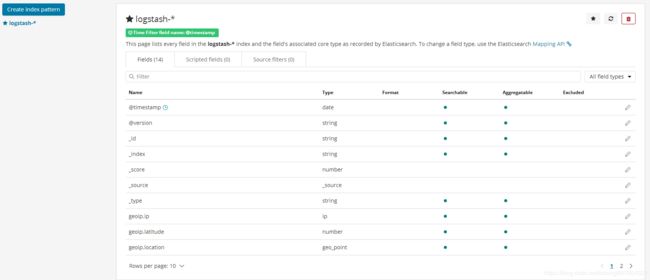
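The same check can be made from the command line, narrowed to the test entry. The sketch below builds a URI search that uses Elasticsearch's q parameter. es_search_url is a hypothetical helper, and it assumes a single-word search term, since it does no URL encoding.

```shell
# Hypothetical helper: build a URI-search URL for the given host, field and
# single-word term (no URL encoding is performed).
es_search_url() {
  printf 'http://%s:9200/_search?pretty&q=%s:%s\n' "$1" "$2" "$3"
}

# Usage: look for the dummy entry typed into Logstash above. The stdin input
# stores the typed line in the "message" field.
#   curl -s "$(es_search_url 192.168.12.106 message dummy)"
```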

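(4) Once the simulated data has been indexed, create the index pattern (an index pattern can only be created after some data exists)

Select the filter field name:

(5) Configure Logstash in the Spring Cloud project

First, add the Logstash library: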

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>4.9</version>
</dependency>

logback.xml configuration:

<configuration>
    <property name="PROJECT_NAME" value="dys" />
    <property name="LOG_HOME" value="/app/logs/${PROJECT_NAME}" />
    <property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} [%X{ip}] [%thread] %-5level %logger{60} - %msg%n" />
    <property name="LOG_CHARSET" value="UTF-8" />

    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
    </appender>

    <appender name="PROJECT-COMMON" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-common.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-common_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-ERROR" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-error.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>ERROR</level>
        </filter>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-error_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-BIZ" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-biz.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-biz_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-DAL" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-dal.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-dal_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-INTEG" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-integ.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-integ_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-SHIRO" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-shiro.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-shiro_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-CAS" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-cas.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-cas_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-MYBATIS" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-mybatis.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-mybatis_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-DUBBO" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-dubbo.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-dubbo_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-APACHECOMMON" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-apachecommon.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-apachecommon_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-ZOOKEEPER" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-zookeeper.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-zookeeper_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-SPRING" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-spring.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-spring_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-QUARTZ" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-quartz.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-quartz_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-APACHEHTTP" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-apachehttp.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-apachehttp_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="PROJECT-OTHER" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_HOME}/${PROJECT_NAME}-other.log</file>
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <pattern>${LOG_PATTERN}</pattern>
            <charset>${LOG_CHARSET}</charset>
        </encoder>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_HOME}/${PROJECT_NAME}-other_%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>15</maxHistory>
        </rollingPolicy>
    </appender>

    <appender name="logstash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>192.168.12.106:5044</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" />
        <keepAliveDuration>5 minutes</keepAliveDuration>
    </appender>

    <root level="INFO">
        <appender-ref ref="CONSOLE" />
        <appender-ref ref="PROJECT-COMMON" />
        <appender-ref ref="PROJECT-ERROR" />
        <appender-ref ref="logstash" />
    </root>

    <logger name="com.donwait.controller" level="debug" additivity="false">
        <appender-ref ref="PROJECT-BIZ" />
        <appender-ref ref="PROJECT-ERROR" />
        <appender-ref ref="logstash" />
    </logger>

    <logger name="org.apache.shiro" level="debug" additivity="false">
        <appender-ref ref="PROJECT-SHIRO" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.jasig.cas" level="debug" additivity="false">
        <appender-ref ref="PROJECT-CAS" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.mybatis" level="debug" additivity="false">
        <appender-ref ref="PROJECT-MYBATIS" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.apache.zookeeper" level="debug" additivity="false">
        <appender-ref ref="PROJECT-ZOOKEEPER" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.I0Itec.zkclient" level="debug" additivity="false">
        <appender-ref ref="PROJECT-ZOOKEEPER" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="com.alibaba.dubbo" level="debug" additivity="false">
        <appender-ref ref="PROJECT-DUBBO" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.apache.commons" level="debug" additivity="false">
        <appender-ref ref="PROJECT-APACHECOMMON" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.springframework" level="debug" additivity="false">
        <appender-ref ref="PROJECT-SPRING" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.quartz" level="debug" additivity="false">
        <appender-ref ref="PROJECT-QUARTZ" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="org.apache.http" level="debug" additivity="false">
        <appender-ref ref="PROJECT-APACHEHTTP" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>

    <logger name="com.baidu.disconf" level="info" additivity="false">
        <appender-ref ref="PROJECT-COMMON" />
        <appender-ref ref="PROJECT-ERROR" />
    </logger>
</configuration>

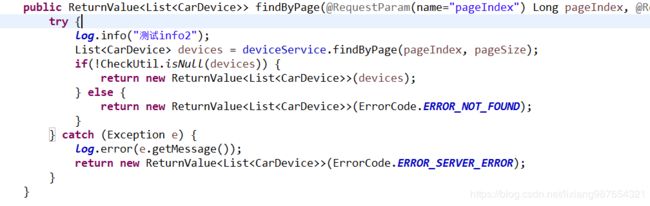

Test the logs:

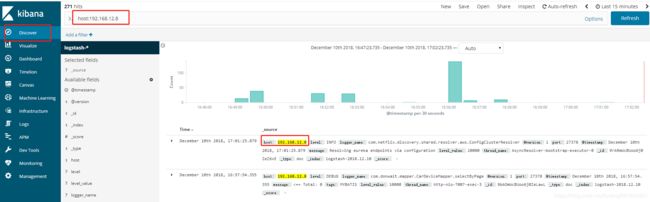

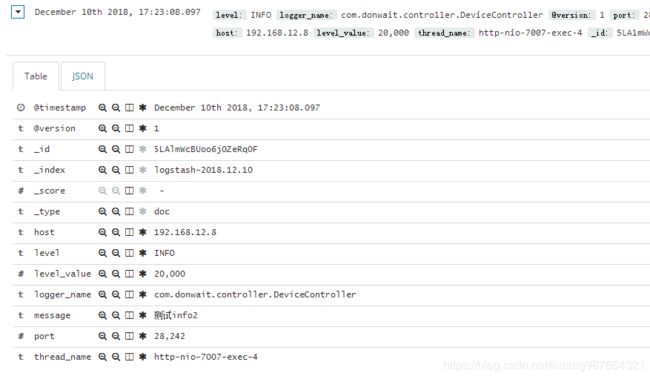

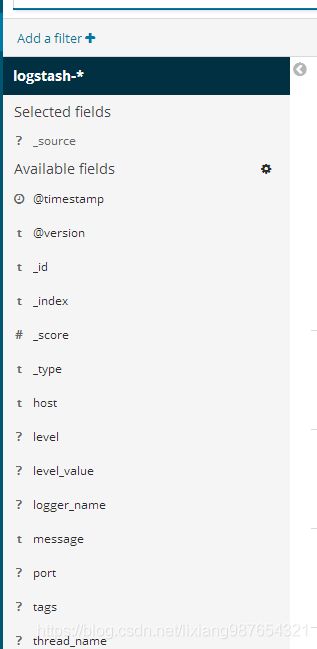

View the logs (with filtering; use * to see everything)

You can search by any of the fields on the left:

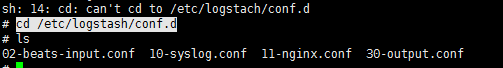

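Note: putting the configuration into 02-beats-input.conf as described below did not work (it was tested and had no effect, and the cause has not been investigated yet). It is provided for reference only!

Enter the configuration directory:

cd /etc/logstash/conf.d

Edit the configuration file 02-beats-input.conf:

vim 02-beats-input.conf

Change the following configuration:

to:

or: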

output {
    elasticsearch {
        hosts => ["192.168.160.66:9200","192.168.160.88:9200","192.168.160.166:9200"]
        index => "applog"
    }
    # Remove the next line if console output is not needed
    stdout { codec => rubydebug }
}

Configuration for reading from Redis:

input {
    redis {
        codec => json
        host => "192.168.2.246"
        port => 56379
        key => "data-mgr"
        data_type => "list"
    }
}

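Notes:

(1) port is the port number, codec => json means that events are decoded as JSON, elasticsearch.hosts is the Elasticsearch address (this can be a cluster), and index is the Elasticsearch index in which the logs are stored.

(2) The default Logstash input in sebp/elk is Filebeat, so we first need to enter the elk container and change the input. By default Logstash merges all the configuration files in /etc/logstash/conf.d/ and starts with the combined result. After making the change, restart the container: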

docker restart elk

You can also write your own configuration file, test.conf:

input {
    tcp {
        # host:port must match the destination of the appender above. Logstash acts as
        # a server here and listens on this port (9100 in this example) for messages sent by logback
        host => "127.0.0.1"
        port => 9100
        mode => "server"
        tags => ["tags"]
        codec => json_lines
    }
}

output {
    stdout { codec => rubydebug }
    # Output to Elasticsearch
    elasticsearch { hosts => "127.0.0.1:9200" }
    # Output to a file
    file {
        path => "D:/study/springcloud_house/ELK/logstash-6.1.1/out"
        codec => line
    }
}

Then start Logstash with your own configuration: .\bin\logstash -f test.conf
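Before wiring up the Spring Cloud application, the tcp input from test.conf can be exercised by hand. With the json_lines codec, each event is one JSON object terminated by a newline. The sketch below builds such a line. json_escape and json_log_line are hypothetical helpers, the level and message field names are illustrative, and only backslashes and double quotes are escaped (control characters are not handled).

```shell
# Hypothetical helper: escape backslashes and double quotes for use inside a
# JSON string value.
json_escape() {
  printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Hypothetical helper: print one newline-terminated JSON event, the framing
# that the json_lines codec expects.
json_log_line() {
  printf '{"level":"%s","message":"%s"}\n' "$(json_escape "$1")" "$(json_escape "$2")"
}

# Usage, assuming nc (netcat) is installed and Logstash listens on 127.0.0.1:9100:
#   json_log_line INFO "hello from the shell" | nc 127.0.0.1 9100
```

If the input works, the event should show up in the rubydebug output on the Logstash console.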

Open http://192.168.12.106:5601 to enter the Kibana interface.

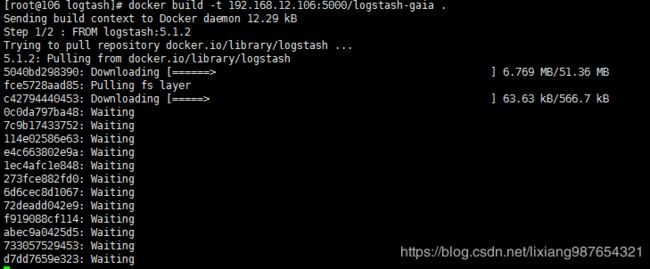

docker images

docker push
