Android Binder Mechanism (ServiceManager)

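Binder, as an IPC mechanism, plays a very important role in the Android system, so I spent some time studying it. Based on my understanding, I will cover Binder in four parts below; corrections are welcome if I get anything wrong. All the examples use MediaServer.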

1. ServiceManager

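ServiceManager plays a role in the Binder system similar to DNS: a Server first registers its services here, and a Client then queries here to obtain a communication path to the Server process that hosts the Service.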
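To make the DNS analogy concrete, here is a minimal sketch of a name-to-handle registry. It is not the AOSP implementation: `ToyServiceRegistry` and its methods are hypothetical names, and the handle is just an integer chosen by the caller.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical stand-in for ServiceManager's name table; not the AOSP code.
class ToyServiceRegistry {
public:
    // Server side: publish (or re-publish) a service under a name.
    void addService(const std::string& name, uint32_t handle) {
        assert(!name.empty());
        services_[name] = handle;
    }

    // Client side: resolve a name to a handle.
    // Returns false if nothing has been published under that name.
    bool getService(const std::string& name, uint32_t* handle) const {
        std::map<std::string, uint32_t>::const_iterator it = services_.find(name);
        if (it == services_.end()) return false;
        *handle = it->second;
        return true;
    }

private:
    std::map<std::string, uint32_t> services_;
};
```

A server would call `addService("media.player", h)` once at startup, and a client would call `getService("media.player", &h)` before talking to it, much like registering and resolving a host name.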

For communication with ServiceManager, the book uses addService as its example; here I will use getService instead. Let's go straight to the code.

/*static*/const sp<IMediaPlayerService>&

IMediaDeathNotifier::getMediaPlayerService()

{

ALOGV("getMediaPlayerService");

Mutex::Autolock _l(sServiceLock);

if (sMediaPlayerService == 0) {

sp<IServiceManager> sm = defaultServiceManager();

sp<IBinder> binder;

do {

binder = sm->getService(String16("media.player"));

if (binder != 0) {

break;

}

ALOGW("Media player service not published, waiting...");

usleep(500000); // 0.5 s

} while (true);

if (sDeathNotifier == NULL) {

sDeathNotifier = new DeathNotifier();

}

binder->linkToDeath(sDeathNotifier);

sMediaPlayerService = interface_cast<IMediaPlayerService>(binder);

}

ALOGE_IF(sMediaPlayerService == 0, "no media player service!?");

return sMediaPlayerService;

}
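The lookup above polls until the service is published. The same retry pattern can be sketched in isolation. This is only an illustration: `waitForService` and the `lookup` callback are hypothetical, and unlike the original loop this version gives up after a bounded number of attempts.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <thread>

// Polls `lookup` until it returns a non-negative handle or the attempts run out.
// Returns the handle, or -1 if the service never appeared.
// All names here are hypothetical; this is not the AOSP code.
inline int waitForService(const std::function<int()>& lookup,
                          int maxAttempts,
                          std::chrono::milliseconds delay) {
    assert(maxAttempts > 0);
    for (int attempt = 0; attempt < maxAttempts; ++attempt) {
        int handle = lookup();
        if (handle >= 0) return handle;       // service is published
        std::this_thread::sleep_for(delay);   // not yet: wait and retry
    }
    return -1;
}
```

The original code uses an unbounded `do { ... } while (true)` with a 0.5 s sleep; bounding the attempts is a design variation chosen here so the sketch always terminates.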

First, let's look at defaultServiceManager(), which is a singleton, implemented as follows:

sp<IServiceManager> defaultServiceManager() {

if (gDefaultServiceManager != NULL) return gDefaultServiceManager;

{

AutoMutex _l(gDefaultServiceManagerLock);

while (gDefaultServiceManager == NULL) {

gDefaultServiceManager = interface_cast<IServiceManager>(

ProcessState::self()->getContextObject(NULL));

if (gDefaultServiceManager == NULL)

sleep(1);

}

}

return gDefaultServiceManager;

}

Here, ProcessState::self()->getContextObject(NULL) returns a BpBinder(0), so we have:

gDefaultServiceManager = interface_cast<IServiceManager>(BpBinder(0));

By the definition of interface_cast, this becomes:

gDefaultServiceManager = BpServiceManager(BpBinder(0));

Next, let's look at how the following statement is implemented:

binder = sm->getService(String16("media.player"));

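From the analysis above, sm is an instance of BpServiceManager. Going straight to IServiceManager.cpp, we find the implementation of BpServiceManager and its getService method, whose core is a call to checkService, implemented as follows:

virtual sp<IBinder> checkService( const String16& name) const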

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);

return reply.readStrongBinder();

}

This raises a question: what does remote() return?

First, look at the definition of BpServiceManager:

class BpServiceManager : public BpInterface<IServiceManager>

template<typename INTERFACE>

class BpInterface : public INTERFACE, public BpRefBase

Substituting the template parameter gives:

class BpInterface<IServiceManager> : public IServiceManager, public BpRefBase

OK, remote() turns out to be defined in BpRefBase:

inline IBinder* remote(){ return mRemote; }

When is mRemote assigned? Let's look at the BpServiceManager constructor and the base-class constructors it chains to:

BpServiceManager(const sp<IBinder>& impl)

: BpInterface<IServiceManager>(impl)

{

}

inline BpInterface<INTERFACE>::BpInterface(const sp<IBinder>& remote)

: BpRefBase(remote)

{

}

BpRefBase::BpRefBase(const sp<IBinder>& o)

: mRemote(o.get()), mRefs(NULL), mState(0)

{

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

if (mRemote) {

mRemote->incStrong(this); // Removed on first IncStrong().

mRefs = mRemote->createWeak(this); // Held for our entire lifetime.

}

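}

So mRemote is simply the object passed to the BpServiceManager constructor, which here is BpBinder(0). The remote()->transact(...) call in checkService therefore lands in BpBinder::transact, which in turn hands the work to IPCThreadState: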

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}
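The chain from the proxy to the handle-based binder can be sketched without any Android headers. This is a simplified model under stated assumptions: `ToyRemote`, `ToyHandleBinder`, and `ToyServiceManagerProxy` are hypothetical stand-ins for IBinder, BpBinder, and BpServiceManager, and a string replaces Parcel.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for IBinder: anything that can receive a transaction.
struct ToyRemote {
    virtual ~ToyRemote() {}
    virtual int transact(uint32_t code, const std::string& data, std::string* reply) = 0;
};

// Stand-in for BpBinder: remembers a handle and records the last code it was sent.
struct ToyHandleBinder : ToyRemote {
    explicit ToyHandleBinder(int32_t h) : handle(h), lastCode(0) {}
    int transact(uint32_t code, const std::string& data, std::string* reply) override {
        lastCode = code;
        if (reply) *reply = "handle " + std::to_string(handle) + " got " + data;
        return 0;
    }
    int32_t handle;
    uint32_t lastCode;
};

// Stand-in for BpRefBase + BpServiceManager: the remote is fixed at construction.
class ToyServiceManagerProxy {
public:
    enum : uint32_t { kCheckService = 2 };  // arbitrary toy transaction code

    explicit ToyServiceManagerProxy(std::shared_ptr<ToyRemote> remote)
        : remote_(std::move(remote)) {
        assert(remote_);
    }

    // Returns exactly the object handed to the constructor.
    ToyRemote* remote() const { return remote_.get(); }

    // Forwards the request through remote(), as checkService does in the article.
    std::string checkService(const std::string& name) {
        std::string reply;
        remote()->transact(kCheckService, name, &reply);
        return reply;
    }

private:
    std::shared_ptr<ToyRemote> remote_;
};
```

Constructing the proxy with `std::make_shared<ToyHandleBinder>(0)` mirrors BpServiceManager wrapping BpBinder(0): every call on the proxy ends up in the handle binder's `transact`.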

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

……

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

……

err = waitForResponse(reply);

……

return err;

}

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = statusBuffer;

tr.offsets_size = 0;

tr.data.ptr.offsets = NULL;

} else {

return (mLastError = err);

}

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

int32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = mIn.readInt32();

switch (cmd) {

…

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(size_t),

freeBuffer, this);

} else {

err = *static_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(size_t), this);

}

} else {

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(size_t), this);

continue;

}

}

goto finish;

……

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

}

return err;

}

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (long unsigned int)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (long unsigned int)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

if (mProcess->mDriverFD <= 0) {

err = -EBADF;

}

} while (err == -EINTR);

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < (ssize_t)mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

return NO_ERROR;

}

return err;

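}

In talkWithDriver, IPCThreadState writes to and reads from the Binder driver. The data that writeTransactionData prepared in mOut is written to the driver first, after which the thread waits for new data to arrive from Binder. Who writes that data? It should be the target process, so let's see how that part is implemented.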

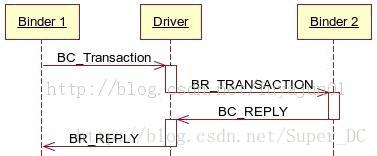

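In Binder IPC, a process always communicates by first sending a BC_XXX command to the Binder driver; after some light processing, the driver forwards it to the target process as the corresponding BR_XXX command.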

If there is a return value, the process likewise first sends the result to the Binder driver as BC_REPLY, and the driver then forwards it as a BR_REPLY command.
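The BC/BR pairing can be modelled as a relay with two inboxes. This is a toy model, not the kernel driver: `ToyDriver`, `ToyMessage`, and `ToyCmd` are hypothetical, only two endpoints exist, and payloads are plain strings.

```cpp
#include <cassert>
#include <deque>
#include <string>

enum class ToyCmd { BcTransaction, BcReply, BrTransaction, BrReply };

struct ToyMessage {
    ToyCmd cmd;
    std::string payload;
};

// Hypothetical driver with exactly two endpoints: one client and one server.
class ToyDriver {
public:
    // The client writes BC_TRANSACTION; the server later reads BR_TRANSACTION.
    void clientWrite(const ToyMessage& m) {
        assert(m.cmd == ToyCmd::BcTransaction);
        serverInbox_.push_back(ToyMessage{ToyCmd::BrTransaction, m.payload});
    }

    // The server writes BC_REPLY; the client later reads BR_REPLY.
    void serverWrite(const ToyMessage& m) {
        assert(m.cmd == ToyCmd::BcReply);
        clientInbox_.push_back(ToyMessage{ToyCmd::BrReply, m.payload});
    }

    bool serverRead(ToyMessage* out) { return pop(serverInbox_, out); }
    bool clientRead(ToyMessage* out) { return pop(clientInbox_, out); }

private:
    // Removes the oldest message from a queue; returns false if it is empty.
    static bool pop(std::deque<ToyMessage>& q, ToyMessage* out) {
        if (q.empty()) return false;
        *out = q.front();
        q.pop_front();
        return true;
    }

    std::deque<ToyMessage> serverInbox_;
    std::deque<ToyMessage> clientInbox_;
};
```

Neither side ever sees the other's BC_ command directly: each endpoint writes BC_ and reads BR_, with the driver doing the translation in between.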

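After Binder1 writes data to the driver, the Binder driver first determines whether the command's recipient is the Service Manager or an ordinary Server, based on tr->target.handle. If tr->target.handle == 0, the command is addressed to the special node, i.e. the Service Manager. Otherwise, in the general case, the driver checks whether it holds a node reference for that handle; normally a reference matching the handle is found. From the node reference, the driver can then locate the Binder node (the entity node) that will handle the command.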
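The routing decision described above can be sketched as a small lookup. The names are hypothetical (`ToyRouter`, `addRef`, `resolve`), and a string stands in for the kernel's node structure.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical per-process reference table: handle -> name of the target node.
class ToyRouter {
public:
    void addRef(uint32_t handle, const std::string& node) { refs_[handle] = node; }

    // Returns true and fills *node if the handle resolves to a target.
    bool resolve(uint32_t handle, std::string* node) const {
        assert(node != nullptr);
        if (handle == 0) {
            // Special case: handle 0 always means the Service Manager.
            *node = "service_manager";
            return true;
        }
        // General case: look up the node reference for this handle.
        std::map<uint32_t, std::string>::const_iterator it = refs_.find(handle);
        if (it == refs_.end()) return false;
        *node = it->second;
        return true;
    }

private:
    std::map<uint32_t, std::string> refs_;
};
```

Handle 0 needs no table entry at all, which is why a client can reach the Service Manager before it has looked up anything else.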

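In the writeTransactionData call above, the target handle is 0, so the Service Manager process receives a BR_TRANSACTION command. After handling it, Service Manager writes the result back to the Binder driver as a BC_REPLY message, which lets waitForResponse (running in the Client process) receive the BR_REPLY response and complete one round trip.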

Next, let's jump to service_manager.c and see how the Service Manager process handles the BR_TRANSACTION command.

int main(int argc, char **argv)

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);

if (binder_become_context_manager(bs)) {

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler);

return 0;

}

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

unsigned readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

In the main loop, the Service Manager process keeps reading from the Binder driver, and whenever data arrives it calls binder_parse to process it.
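Parsing the read buffer amounts to walking a stream of 32-bit words in which each command word may be followed by a fixed-size payload. A minimal sketch of that idea, with hypothetical command codes and a hypothetical `ToyTxn` payload (the real BR_* values and the transaction struct come from the Binder headers):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy command codes; these are NOT the real BR_* values.
enum : uint32_t { TOY_NOOP = 1, TOY_TRANSACTION = 2 };

// Hypothetical fixed-size payload that follows TOY_TRANSACTION in the stream.
struct ToyTxn {
    uint32_t target;
    uint32_t code;
};

// Walks the word stream and collects every transaction payload into *out.
// Returns the number of commands handled, or -1 on malformed input.
inline int parseStream(const std::vector<uint32_t>& words, std::vector<ToyTxn>* out) {
    assert(out != nullptr);
    const std::size_t txnWords = sizeof(ToyTxn) / sizeof(uint32_t);
    std::size_t pos = 0;
    int handled = 0;
    while (pos < words.size()) {
        uint32_t cmd = words[pos++];
        switch (cmd) {
        case TOY_NOOP:
            break;                                          // no payload
        case TOY_TRANSACTION:
            if (words.size() - pos < txnWords) return -1;   // truncated payload
            out->push_back(ToyTxn{words[pos], words[pos + 1]});
            pos += txnWords;                                // skip past the payload
            break;
        default:
            return -1;                                      // unknown command
        }
        ++handled;
    }
    return handled;
}
```

The important detail, in the sketch as in binder_parse, is advancing the cursor by the payload size after each command so the next iteration lands on a command word again.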

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uint32_t *ptr, uint32_t size, binder_handler func)

{

int r = 1;

uint32_t *end = ptr + (size / 4);

while (ptr < end) {

uint32_t cmd = *ptr++;

#if TRACE

fprintf(stderr,"%s:\n", cmd_name(cmd));

#endif

switch(cmd) {

……

case BR_TRANSACTION: {

struct binder_txn *txn = (void *) ptr;

if ((end - ptr) * sizeof(uint32_t) < sizeof(struct binder_txn)) {

ALOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

res = func(bs, txn, &msg, &reply);

// Write the result back to the Binder driver

binder_send_reply(bs, &reply, txn->data, res);

}

ptr += sizeof(*txn) / sizeof(uint32_t);

break;

}

……

default:

ALOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

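}

Here func is the svcmgr_handler that main passed to binder_loop. svcmgr_handler handles the various commands, including add_service and get_service; once it finishes, binder_send_reply writes the reply back to the Binder driver, which returns it to the client process. Let's look at the implementation of svcmgr_handler.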

int svcmgr_handler(struct binder_state *bs,

struct binder_txn *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

unsigned len;

void *ptr;

uint32_t strict_policy;

int allow_isolated;

// ALOGI("target=%p code=%d pid=%d uid=%d\n",

// txn->target, txn->code, txn->sender_pid, txn->sender_euid);

if (txn->target != svcmgr_handle)

return -1;

// Equivalent to Parcel::enforceInterface(), reading the RPC

// header with the strict mode policy mask and the interface name.

// Note that we ignore the strict_policy and don't propagate it

// further (since we do no outbound RPCs anyway).

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s));

return -1;

}

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

ptr = do_find_service(bs, s, len, txn->sender_euid);

if (!ptr)

break;

bio_put_ref(reply, ptr);

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

ptr = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

if (do_add_service(bs, s, len, ptr, txn->sender_euid, allow_isolated))

return -1;

break;

case SVC_MGR_LIST_SERVICES: {

unsigned n = bio_get_uint32(msg);

si = svclist;

while ((n-- > 0) && si)

si = si->next;

if (si) {

bio_put_string16(reply, si->name);

return 0;

}

return -1;

}

default:

ALOGE("unknown code %d\n", txn->code);

return -1;

}

bio_put_uint32(reply, 0);

return 0;

}

Indeed, commands such as SVC_MGR_CHECK_SERVICE and SVC_MGR_ADD_SERVICE are all ultimately handled here.

OK, that completes the whole flow of obtaining a Service.