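ASR: Speech Recognition with a Deep Convolutional Neural Network

These are notes from my time learning speech recognition; I am writing down what I have picked up over this period.

I will just post the program directly here.

The program is built on a deep convolutional neural network and is split into two modules: an acoustic model and a language model.

This post mainly covers the acoustic model, which converts speech into pinyin; converting pinyin into Chinese characters is the language model's job.

The main code for extracting features from audio files is in file_wav.py: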

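    # Imports the functions below rely on; mfcc and delta are assumed to come
    # from the python_speech_features package, fft from scipy
    import wave

    import numpy as np
    from python_speech_features import mfcc, delta
    from scipy.fftpack import fft


    def read_wav_data(filename):
        # Read a wav file; return the samples as a (channels, frames) array plus the frame rate
        wav = wave.open(filename, "rb")
        num_frame = wav.getnframes()            # number of frames
        num_channel = wav.getnchannels()        # number of channels
        framerate = wav.getframerate()          # sampling rate in Hz
        num_sample_width = wav.getsampwidth()   # bytes per sample
        str_data = wav.readframes(num_frame)    # raw bytes of all frames
        wav.close()
        # np.fromstring is deprecated for binary input; np.frombuffer reads the same bytes
        wave_data = np.frombuffer(str_data, dtype=np.short)
        wave_data = wave_data.reshape(-1, num_channel)  # one column per channel
        wave_data = wave_data.T                         # one row per channel
        return wave_data, framerate


    def GetMfccFeature(wavsignal, fs):
        # MFCC of the first channel plus its first- and second-order deltas
        feat_mfcc = mfcc(wavsignal[0], fs)
        feat_mfcc_d = delta(feat_mfcc, 2)
        feat_mfcc_dd = delta(feat_mfcc_d, 2)
        wav_feature = np.column_stack((feat_mfcc, feat_mfcc_d, feat_mfcc_dd))
        return wav_feature


    # Hamming window over 400 samples
    x = np.linspace(0, 400 - 1, 400, dtype=np.int64)
    w = 0.54 - 0.46 * np.cos(2 * np.pi * (x) / (400 - 1))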
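As a quick sanity check on the window above: the expression is the standard Hamming window formula, so it should agree with NumPy's built-in `np.hamming`. The sketch below only compares the two; the name `N` is introduced here for illustration and does not appear in the original file.

```python
import numpy as np

N = 400  # window length in samples, the fixed value used in file_wav.py

# Window computed with the same expression as in file_wav.py
x = np.linspace(0, N - 1, N, dtype=np.int64)
w = 0.54 - 0.46 * np.cos(2 * np.pi * x / (N - 1))

# Compare against NumPy's built-in Hamming window of the same length
matches_builtin = bool(np.allclose(w, np.hamming(N)))
print(matches_builtin)
```

If the comparison holds, `np.hamming(400)` could stand in for the hand-written expression, though the original keeps the explicit formula.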

    def GetFrequencyFeature3(wavsignal, fs):
        if (16000 != fs):
            raise ValueError('[Error] ASRT currently only supports wav audio files with a sampling rate of 16000 Hz, but this audio is ' + str(fs) + ' Hz. ')

        # Window the waveform with a 25 ms frame and a 10 ms shift
        time_window = 25  # frame length in ms
        window_length = fs / 1000 * time_window  # window length in samples; currently always 400
        '''
        samples per second      = fs
        samples per millisecond = fs / 1000
        samples per frame       = fs / 1000 * frame length (ms)
        '''
        wav_arr = np.array(wavsignal)
        # wav_length = len(wavsignal[0])
        wav_length = wav_arr.shape[1]

        # Number of frames that fit into the signal
        range0_end = int(len(wavsignal[0]) / fs * 1000 - time_window) // 10
        # np.float was removed in NumPy 1.24; np.float64 is the same type
        data_input = np.zeros((range0_end, 200), dtype=np.float64)
        data_line = np.zeros((1, 400), dtype=np.float64)

        for i in range(0, range0_end):
            p_start = i * 160        # 10 ms shift = 160 samples at 16 kHz
            p_end = p_start + 400    # 25 ms frame = 400 samples at 16 kHz
            data_line = wav_arr[0, p_start:p_end]
            data_line = data_line * w  # apply the Hamming window
            data_line = np.abs(fft(data_line)) / wav_length
            data_input[i] = data_line[0:200]  # keep the first half; the spectrum of a real signal is symmetric

        # print(data_input.shape)
        data_input = np.log(data_input + 1)
        return data_input

The code that reads file information is in file_dict.py; here it is:

    import platform as plat


    def GetSymbolList(datapath):
        # Load the symbol list: the first tab-separated field of each non-empty line in dict.txt
        txt_obj = open(datapath + 'dict.txt', 'r', encoding='UTF-8')
        txt_text = txt_obj.read()
        txt_lines = txt_text.split('\n')
        list_symbol = []
        for i in txt_lines:
            if (i != ''):
                txt_l = i.split('\t')
                list_symbol.append(txt_l[0])
        txt_obj.close()
        list_symbol.append('_')
        return list_symbol


    def GetSymbolList_trash2(datapath):
        # Note: str.strip removes any of the listed characters from both ends,
        # not the literal substring 'dataset\'
        datapath_ = datapath.strip('dataset\\')
        system_type = plat.system()
        if (system_type == 'Windows'):
            datapath_ += '\\'
        elif (system_type == 'Linux'):
            datapath_ += '/'
        else:
            print('*[Message] Unknown System\n')
            datapath_ += '/'
        txt_obj = open(datapath_ + 'dict.txt', 'r', encoding='UTF-8')
        txt_text = txt_obj.read()
        txt_lines = txt_text.split('\n')
        list_symbol = []
        for i in txt_lines:
            if (i != ''):
                txt_l = i.split('\t')
                list_symbol.append(txt_l[0])
        txt_obj.close()
        list_symbol.append('_')
        return list_symbol

The code that builds the speech feature matrices and their labels is in readdata24.py:

    import platform as plat
    import random

    import numpy as np
    from pypinyin import lazy_pinyin, Style, load_phrases_dict

    # read_wav_data and GetFrequencyFeature3 are the file_wav.py functions shown earlier;
    # get_wav_list and get_wav_symbol1 are used below but are not shown in this post

    personalized_dict = {
        '子': [['zi3']]
    }
    load_phrases_dict(personalized_dict)


    class DataSpeech():
        def __init__(self, path, type, LoadToMem=False, MemWavCount=10000):
            system_type = plat.system()
            self.datapath = path
            self.type = type  # 'train', 'dev' or 'test'
            self.slash = ''
            if (system_type == 'Windows'):
                self.slash = '\\'
            elif (system_type == 'Linux'):
                self.slash = '/'
            else:
                print('*[Message] Unknown System\n')
                self.slash = '/'
            if (self.slash != self.datapath[-1]):
                self.datapath = self.datapath + self.slash

            self.dic_wavlist_thchs30 = {}
            self.dic_symbollist_thchs30 = {}
            self.dic_wavlist_stcmds = {}
            self.dic_symbollist_stcmds = {}

            self.SymbolNum = 0  # number of pinyin symbols
            self.list_symbol = self.GetSymbolList()  # list of all pinyin symbols
            self.list_wavnum = []  # list of wav file ids
            self.list_symbolnum = []  # list of symbol ids
            self.DataNum = 0  # number of samples
            self.LoadDataList()

            self.wavs_data = []
            self.LoadToMem = LoadToMem
            self.MemWavCount = MemWavCount
            pass

        def LoadDataList(self):
            # Pick the list files for the requested split, then load both datasets
            if (self.type == 'train'):
                filename_wavlist_thchs30 = 'thchs30' + self.slash + 'train.wav.txt'
                filename_wavlist_stcmds = 'st-cmds' + self.slash + 'train.wav.txt'
                filename_symbollist_thchs30 = 'thchs30' + self.slash + 'train.syllable.txt'
                filename_symbollist_stcmds = 'st-cmds' + self.slash + 'train.syllable.txt'
            elif (self.type == 'dev'):
                filename_wavlist_thchs30 = 'thchs30' + self.slash + 'dev.wav.txt'
                filename_wavlist_stcmds = 'st-cmds' + self.slash + 'dev.wav.txt'
                filename_symbollist_thchs30 = 'thchs30' + self.slash + 'dev.syllable.txt'
                filename_symbollist_stcmds = 'st-cmds' + self.slash + 'dev.syllable.txt'
            elif (self.type == 'test'):
                filename_wavlist_thchs30 = 'thchs30' + self.slash + 'test.wav.txt'
                filename_wavlist_stcmds = 'st-cmds' + self.slash + 'test.wav.txt'
                filename_symbollist_thchs30 = 'thchs30' + self.slash + 'test.syllable.txt'
                filename_symbollist_stcmds = 'st-cmds' + self.slash + 'test.syllable.txt'
            else:
                # The original left two unused names empty here, so the four filenames
                # used below were undefined; fail early with a clear message instead
                raise ValueError('Unknown dataset type: ' + str(self.type))

            self.dic_wavlist_thchs30, self.list_wavnum_thchs30 = get_wav_list(self.datapath + filename_wavlist_thchs30)
            self.dic_wavlist_stcmds, self.list_wavnum_stcmds = get_wav_list(self.datapath + filename_wavlist_stcmds)
            self.dic_symbollist_thchs30, self.list_symbolnum_thchs30 = get_wav_symbol1(
                self.datapath + filename_symbollist_thchs30)
            self.dic_symbollist_stcmds, self.list_symbolnum_stcmds = get_wav_symbol1(
                self.datapath + filename_symbollist_stcmds)
            self.DataNum = self.GetDataNum()

        def GetDataNum(self):
            # Total number of samples, or -1 if the wav and symbol lists disagree
            num_wavlist_thchs30 = len(self.dic_wavlist_thchs30)
            num_symbollist_thchs30 = len(self.dic_symbollist_thchs30)
            num_wavlist_stcmds = len(self.dic_wavlist_stcmds)
            num_symbollist_stcmds = len(self.dic_symbollist_stcmds)
            if (num_wavlist_thchs30 == num_symbollist_thchs30 and num_wavlist_stcmds == num_symbollist_stcmds):
                DataNum = num_wavlist_thchs30 + num_wavlist_stcmds
            else:
                DataNum = -1
            return DataNum

        def GetData(self, n_start, n_amount=1):
            # Read one sample: even indices come from thchs30, odd indices from st-cmds
            bili = 2  # ratio used to interleave the two datasets
            if (self.type == 'train'):
                bili = 2
            # Read one file
            if (n_start % bili == 0):
                filename = self.dic_wavlist_thchs30[self.list_wavnum_thchs30[n_start // bili]]
                list_symbol = self.dic_symbollist_thchs30[
                    self.list_symbolnum_thchs30[n_start // bili]]
            else:
                n = n_start // bili * (bili - 1)
                yushu = n_start % bili  # remainder
                length = len(self.list_wavnum_stcmds)
                filename = self.dic_wavlist_stcmds[self.list_wavnum_stcmds[(n + yushu - 1) % length]]
                list_symbol = self.dic_symbollist_stcmds[
                    self.list_symbolnum_stcmds[(n + yushu - 1) % length]]
            if ('Windows' == plat.system()):
                filename = filename.replace('/', '\\')

            wavsignal, fs = read_wav_data(self.datapath + filename)
            feat_out = self.HanziToNum(list_symbol)
            data_input = GetFrequencyFeature3(wavsignal, fs)
            # Add a trailing channel axis: (frames, 200) -> (frames, 200, 1)
            data_input = data_input.reshape(data_input.shape[0], data_input.shape[1], 1)
            data_label = np.array(feat_out)
            return data_input, data_label

        def HanziToNum(self, Hanzi):
            # Convert Chinese text to pinyin, then to 1-based indices into dict.txt
            txt_obj = open('dict.txt', 'r', encoding='UTF-8')
            txt_text = txt_obj.read()
            txt_lines = txt_text.split('\n')
            list_symbol1 = []  # initialize the symbol list
            for i in txt_lines:
                if (i != ''):
                    txt_l = i.split('\t')
                    list_symbol1.append(txt_l[0])
            txt_obj.close()
            pinyin = lazy_pinyin(Hanzi[0], style=Style.TONE3)
            NumList = []
            for i in pinyin:
                # list.index raises ValueError if the syllable is missing from dict.txt
                NumList.append(list_symbol1.index(i) + 1)
            return NumList

        def data_genetator(self, batch_size=32, audio_length=1600):
            # Endless generator of random batches in the layout the CTC model expects
            labels = np.zeros((batch_size, 1), dtype=np.float64)
            while True:
                X = np.zeros((batch_size, audio_length, 200, 1), dtype=np.float64)
                y = np.zeros((batch_size, 64), dtype=np.int16)
                input_length = []
                label_length = []
                for i in range(batch_size):
                    ran_num = random.randint(0, self.DataNum - 1)
                    data_input, data_labels = self.GetData(ran_num)
                    input_length.append(data_input.shape[0] // 8 + data_input.shape[0] % 8)
                    X[i, 0:len(data_input)] = data_input
                    y[i, 0:len(data_labels)] = data_labels
                    label_length.append([len(data_labels)])
                label_length = np.matrix(label_length)
                input_length = np.array([input_length]).T
                yield [X, y, input_length, label_length], labels
            pass

        def GetSymbolList(self):
            # Load the symbol list from dict.txt and record its size
            txt_obj = open('dict.txt', 'r', encoding='UTF-8')
            txt_text = txt_obj.read()
            txt_lines = txt_text.split('\n')
            list_symbol = []
            for i in txt_lines:
                if (i != ''):
                    txt_l = i.split('\t')
                    list_symbol.append(txt_l[0])
            txt_obj.close()
            list_symbol.append('_')
            self.SymbolNum = len(list_symbol)
            return list_symbol

        def GetSymbolNum(self):
            return len(self.list_symbol)

        def SymbolToNum(self, symbol):
            if (symbol != ''):
                return self.list_symbol.index(symbol)
            return self.SymbolNum

        def NumToVector(self, num):
            # One-hot vector with a 1 at position num
            v_tmp = []
            for i in range(0, len(self.list_symbol)):
                if (i == num):
                    v_tmp.append(1)
                else:
                    v_tmp.append(0)
            v = np.array(v_tmp)
            return v

The lines at the top of readdata24.py use the third-party pypinyin library to map certain polyphonic Chinese characters to the pinyin you specify instead of the default reading:

    personalized_dict = {
        '子': [['zi3']]
    }
    load_phrases_dict(personalized_dict)

SpeechModel.py mainly contains the construction and training of the deep learning model; part of the code is below:

    import platform as plat

    from keras import backend as K
    from keras.layers import Input, Conv2D, MaxPooling2D, Dropout, Reshape, Dense, Activation, Lambda
    from keras.models import Model
    from keras.optimizers import Adam


    class ModelSpeech():
        def __init__(self, datapath):
            MS_OUTPUT_SIZE = 1425
            self.MS_OUTPUT_SIZE = MS_OUTPUT_SIZE  # size of the final softmax output
            self.label_max_string_length = 64
            self.AUDIO_LENGTH = 1600
            self.AUDIO_FEATURE_LENGTH = 200
            self._model, self.base_model = self.CreateModel()

            self.datapath = datapath
            self.slash = ''
            system_type = plat.system()
            if (system_type == 'Windows'):
                self.slash = '\\'
            elif (system_type == 'Linux'):
                self.slash = '/'
            else:
                print('*[Message] Unknown System\n')
                self.slash = '/'  # forward slash
            if (self.slash != self.datapath[-1]):
                self.datapath = self.datapath + self.slash

        def CreateModel(self):
            input_data = Input(name='the_input', shape=(self.AUDIO_LENGTH, self.AUDIO_FEATURE_LENGTH, 1))

            layer_h1 = Conv2D(32, (3,3), use_bias=False, activation='relu', padding='same', kernel_initializer='he_normal')(input_data)
            layer_h1 = Dropout(0.05)(layer_h1)
            layer_h2 = Conv2D(32, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h1)
            layer_h3 = MaxPooling2D(pool_size=2, strides=None, padding="valid")(layer_h2)
            layer_h3 = Dropout(0.05)(layer_h3)

            layer_h4 = Conv2D(64, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h3)
            layer_h4 = Dropout(0.1)(layer_h4)
            layer_h5 = Conv2D(64, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h4)
            layer_h6 = MaxPooling2D(pool_size=2, strides=None, padding="valid")(layer_h5)
            layer_h6 = Dropout(0.1)(layer_h6)

            layer_h7 = Conv2D(128, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h6)
            layer_h7 = Dropout(0.15)(layer_h7)
            layer_h8 = Conv2D(128, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h7)
            layer_h9 = MaxPooling2D(pool_size=2, strides=None, padding="valid")(layer_h8)
            layer_h9 = Dropout(0.15)(layer_h9)

            layer_h10 = Conv2D(128, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h9)
            layer_h10 = Dropout(0.2)(layer_h10)
            layer_h11 = Conv2D(128, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h10)
            layer_h12 = MaxPooling2D(pool_size=1, strides=None, padding="valid")(layer_h11)
            layer_h12 = Dropout(0.2)(layer_h12)

            layer_h13 = Conv2D(128, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h12)
            layer_h13 = Dropout(0.2)(layer_h13)
            layer_h14 = Conv2D(128, (3,3), use_bias=True, activation='relu', padding='same', kernel_initializer='he_normal')(layer_h13)
            layer_h15 = MaxPooling2D(pool_size=1, strides=None, padding="valid")(layer_h14)

            # Three 2x poolings: 1600 -> 200 time steps, 200 -> 25 features; 25 * 128 channels = 3200
            layer_h16 = Reshape((200, 3200))(layer_h15)
            layer_h16 = Dropout(0.3)(layer_h16)
            layer_h17 = Dense(128, activation="relu", use_bias=True, kernel_initializer='he_normal')(layer_h16)
            layer_h17 = Dropout(0.3)(layer_h17)
            layer_h18 = Dense(self.MS_OUTPUT_SIZE, use_bias=True, kernel_initializer='he_normal')(layer_h17)
            y_pred = Activation('softmax', name='Activation0')(layer_h18)
            model_data = Model(inputs = input_data, outputs = y_pred)

            labels = Input(name='the_labels', shape=[self.label_max_string_length], dtype='float32')
            input_length = Input(name='input_length', shape=[1], dtype='int64')
            label_length = Input(name='label_length', shape=[1], dtype='int64')
            # ctc_lambda_func is a method of this class that is not shown in this post
            loss_out = Lambda(self.ctc_lambda_func, output_shape=(1,), name='ctc')([y_pred, labels, input_length, label_length])
            model = Model(inputs=[input_data, labels, input_length, label_length], outputs=loss_out)
            model.summary()

            opt = Adam(lr = 0.001, beta_1 = 0.9, beta_2 = 0.999, decay = 0.0, epsilon = 10e-8)
            # The Lambda layer already outputs the CTC loss, so the Keras loss just passes it through
            model.compile(loss={'ctc': lambda y_true, y_pred: y_pred}, optimizer = opt)

            # test function
            test_func = K.function([input_data], [y_pred])
            print('[*Info] Create Model Successful, Compiles Model Successful. ')
            return model, model_data

There is also a language model part in LanguageModel.py. I did not end up using it; it uses a hidden Markov chain to convert the recognized pinyin into Chinese characters. Take a look if you are interested; part of the code is below:

    import platform as plat


    class ModelLanguage():
        def __init__(self, modelpath):
            self.modelpath = modelpath
            system_type = plat.system()
            self.slash = ''
            if (system_type == 'Windows'):
                self.slash = '\\'
            elif (system_type == 'Linux'):
                self.slash = '/'
            else:
                print('*[Message] Unknown System\n')
                self.slash = '/'
            if (self.slash != self.modelpath[-1]):
                self.modelpath = self.modelpath + self.slash
            pass

        def LoadModel(self):
            # GetSymbolDict, GetLanguageModel and GetPinyin are methods not shown in this post
            self.dict_pinyin = self.GetSymbolDict('dict.txt')
            self.model1 = self.GetLanguageModel(self.modelpath + 'language_model1.txt')
            self.model2 = self.GetLanguageModel(self.modelpath + 'language_model2.txt')
            self.pinyin = self.GetPinyin(self.modelpath + 'dic_pinyin.txt')
            model = (self.dict_pinyin, self.model1, self.model2)
            return model
            pass

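        def SpeechToText(self, list_syllable):
            r = ''
            length = len(list_syllable)
            if (length == 0):
                return ''

            # Start with the first syllable in the pinyin list
            str_tmp = [list_syllable[0]]
            for i in range(0, length - 1):
                # Take each pair of consecutive syllables in turn
                str_split = list_syllable[i] + ' ' + list_syllable[i+1]
                # print(str_split, str_tmp, r)
                # If this pair is in the pinyin state-transition dictionary
                if (str_split in self.pinyin):
                    # append the second syllable to the current run
                    str_tmp.append(list_syllable[i+1])
                else:
                    # otherwise decode the run collected so far (decode is not shown in this post)
                    str_decode = self.decode(str_tmp, 0.0000)
                    # print('decode ', str_tmp, str_decode)
                    if (str_decode != []):
                        r += str_decode[0][0]
                    # and start a new run from syllable i+1
                    str_tmp = [list_syllable[i+1]]

            # print('last:', str_tmp)
            str_decode = self.decode(str_tmp, 0.0000)
            # print('remaining decode:', str_decode)
            if (str_decode != []):
                r += str_decode[0][0]
            return r

The audio files go under the pac folder, which contains two folders: one holds the txt files listing the audio files, the other holds the corresponding label files (that is, the Chinese text spoken in each recording). Just follow the examples in my files to add your own audio files and edit the txt files.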

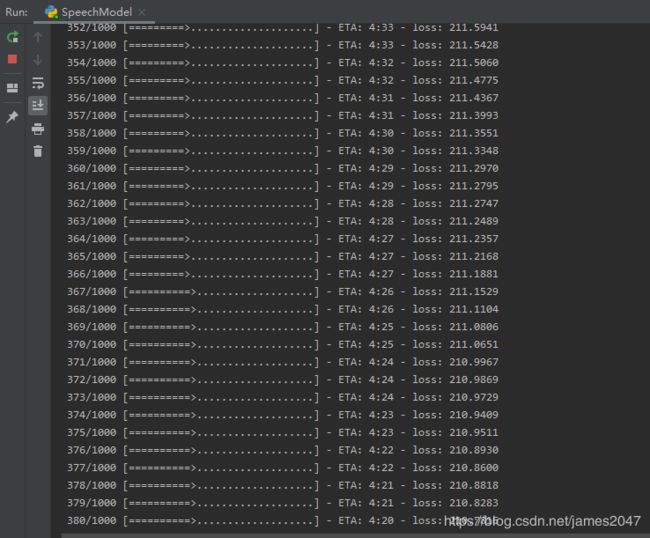

To train the acoustic model, just run SpeechModel.py.
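Before starting a training run, it can help to confirm the shape arithmetic that CreateModel relies on. The sketch below is plain Python and assumes that the 'same'-padded convolutions keep the spatial size and that each MaxPooling2D layer divides both axes by its pool size. The helper names `conv_stack_output_shape` and `ctc_input_length` are made up for illustration and are not part of the program.

```python
def conv_stack_output_shape(audio_length=1600, feature_length=200,
                            pool_sizes=(2, 2, 2, 1, 1), channels=128):
    """Return (time_steps, flattened_features) after the pooling layers.

    pool_sizes lists the pool_size of the five MaxPooling2D layers in
    CreateModel; channels is the filter count of the last Conv2D layer.
    """
    time_steps, features = audio_length, feature_length
    for pool in pool_sizes:
        time_steps //= pool
        features //= pool
    return time_steps, features * channels


def ctc_input_length(n_frames):
    """Mirror the input_length expression used in data_genetator."""
    return n_frames // 8 + n_frames % 8


print(conv_stack_output_shape())  # shape that Reshape((200, 3200)) must match
print(ctc_input_length(1600))     # value passed to CTC for a full-length input
```

With the defaults taken from the post, the first call gives (200, 3200), which is what the Reshape layer expects. For frame counts that are not a multiple of 8, the expression in data_genetator adds the remainder instead of rounding, so it can exceed `n_frames // 8`; the post does not say whether that is intended.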

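asrserver.py and Client.py are the code for testing results from a front end. You can pull them out and adapt them into a program for testing a single audio file.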

When you add your own entries to dict.txt, make sure the file still matches the 1425 in SpeechModel.py: 1425 must be one more than the number of lines in dict.txt.
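The rule above can be checked mechanically. The following is a minimal sketch that reads dict.txt the same way GetSymbolList does (first tab-separated field of each non-empty line, plus the trailing '_') and reports the size the model output should have. The function names `load_symbols` and `expected_output_size` are hypothetical helpers, not part of the program.

```python
def load_symbols(dict_path):
    """Return the symbols from dict.txt followed by the trailing '_' entry."""
    symbols = []
    with open(dict_path, "r", encoding="UTF-8") as dict_file:
        for line in dict_file:
            line = line.rstrip("\n")
            if line != "":
                # The symbol is the first tab-separated field of the line
                symbols.append(line.split("\t")[0])
    symbols.append("_")
    return symbols


def expected_output_size(dict_path):
    """Number of non-empty lines in dict.txt plus one."""
    return len(load_symbols(dict_path))
```

After editing dict.txt, calling `expected_output_size('dict.txt')` and comparing the result with MS_OUTPUT_SIZE in SpeechModel.py should show whether the two still agree.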

The program expects audio sampled at 16 kHz; for 8 kHz audio you need to change the code in file_wav.py.
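To see which numbers would have to change, here is a small sketch of how the hard-coded values in GetFrequencyFeature3 (400-sample window, 160-sample shift, 200 frequency bins) follow from the 25 ms frame and 10 ms shift. The functions `frame_params` and `num_frames` are illustrative helpers, not part of file_wav.py, and the 8 kHz figures are simply what the same formulas give, not a tested configuration.

```python
def frame_params(fs, time_window_ms=25, shift_ms=10):
    """Return (window_length, shift_length, n_bins) in samples for a sampling rate."""
    window_length = fs * time_window_ms // 1000  # samples per frame
    shift_length = fs * shift_ms // 1000         # samples between frame starts
    n_bins = window_length // 2                  # half of the symmetric spectrum
    return window_length, shift_length, n_bins


def num_frames(n_samples, fs, time_window_ms=25, shift_ms=10):
    """Frame count, using the same expression as range0_end in GetFrequencyFeature3."""
    return int(n_samples / fs * 1000 - time_window_ms) // shift_ms


for rate in (16000, 8000):
    print(rate, frame_params(rate), num_frames(rate, rate))
```

At 8 kHz the same formulas give a 200-sample window and 100 frequency bins. Besides the constants in file_wav.py, the Hamming window length and the 200-wide feature axis that the model takes as input would presumably need to change as well.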

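The download link for the program archive is below. I used keras==2.3.1 and tensorflow-gpu==2.0.0; with the other versions I tried, the two libraries turned out to be incompatible with each other.

Program archive: https://download.csdn.net/download/james2047/12679224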

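A few small changes are needed: create a folder named m251 under model_speech to hold the files saved during training. Also, any Chinese characters that do not appear in dict.txt must be added along with their pinyin.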
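HanziToNum looks each syllable up with `list.index`, which raises ValueError as soon as a syllable is missing from dict.txt. A pre-check along the following lines could list the missing syllables up front. This is only a sketch: `missing_syllables` is a hypothetical helper, and it assumes the labels have already been converted to tone-numbered pinyin such as 'zi3'.

```python
def missing_syllables(label_syllables, dict_symbols):
    """Return syllables that appear in the labels but not in dict.txt, in first-seen order."""
    known = set(dict_symbols)
    missing = []
    for syllable in label_syllables:
        if syllable not in known and syllable not in missing:
            missing.append(syllable)
    return missing


# Example with made-up data: 'zi3' is absent from the symbol list
print(missing_syllables(["ni3", "hao3", "zi3", "zi3"], ["ni3", "hao3"]))
```

Any syllable this reports would need a line in dict.txt before training, and MS_OUTPUT_SIZE would then need to grow to match.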

The archive is still awaiting review.

Below are some screenshots from training.