Paper Notes: Deep Learning from Temporal Coherence in Video

Abstract

Temporal coherence in video is used as a supervisory signal over the unlabeled data to improve performance on a supervised task of interest.

Evaluated on pose-invariant object and face recognition tasks.

1. Introduction

Analysis & Comparison

Classical semi-supervised learning and transduction

If the unlabeled data come from heterogeneous sources, then in general no class-membership assumptions can be made, so these methods cannot be relied on.

A source of images constrained by temporal coherence

Leverage temporal coherence in video to boost the performance of object recognition tasks.

A deep convolutional network architecture + training objective with a temporal coherence regularizer

Can also be applied to other sequential data with temporal coherence, and with other choices of loss function

Dataset and article structure

2. Exploiting Video Coherence with CNNs (method description)

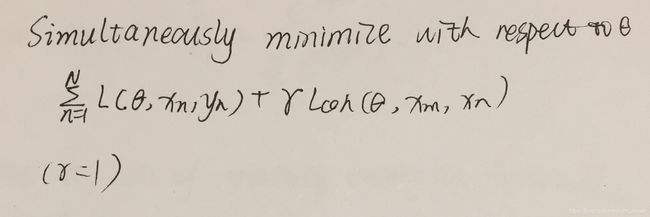

A convolutional neural network:

A chain of filters and resolution-reduction steps

- Takes the topology of 2D data into account

- The locality of the filters significantly reduces the number of connections, and hence the number of parameters to be learned, which reduces overfitting

- Resolution-reduction operations give better tolerance to slight distortions in translation, scale, or rotation
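A rough parameter count makes the locality argument concrete. This is a minimal sketch with hypothetical sizes (a 72x72 grayscale input, six 5x5 filters, "valid" convolution; none of these come from the notes), comparing a fully connected layer, a locally connected layer (locality only), and a convolution layer (locality plus weight sharing) that all produce the same number of outputs:

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def local_params(n_out, k):
    """Locally connected layer: each output unit sees a k x k patch, with its own weights."""
    return n_out * k * k + n_out


def conv_params(n_in_maps, n_out_maps, k):
    """Convolution layer: k x k filters shared across positions, one bias per output map."""
    return n_in_maps * n_out_maps * k * k + n_out_maps


# Hypothetical sizes, chosen only for illustration.
H = W = 72       # input height and width
K = 5            # filter size
MAPS = 6         # number of feature maps
out_h, out_w = H - K + 1, W - K + 1  # "valid" convolution output: 68 x 68
n_out = MAPS * out_h * out_w

dense = dense_params(H * W, n_out)
local = local_params(n_out, K)
conv = conv_params(1, MAPS, K)
print(f"fully connected: {dense}, locally connected: {local}, convolution: {conv}")
```

Under these assumed sizes the fully connected layer needs about 144 million parameters, locality alone brings this down to 721,344, and sharing the filters across positions leaves only 156.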

2.1 Convolution and Subsampling

- Convolution

- Subsampling layers

- A non-linearity such as tanh, applied after each convolution and subsampling layer

- A final classical fully connected layer

- A softmax layer (its outputs are interpreted as probabilities)
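The layer sequence above can be sketched as a toy forward pass in plain Python. Assumptions, not taken from the paper: a single input map and a single 3x3 filter, subsampling implemented as 2x2 average pooling, and hypothetical function names; a real implementation would use many feature maps and a tensor library.

```python
import math


def conv2d_valid(img, kernel):
    """'Valid' 2D convolution, written as cross-correlation as is usual in CNNs."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def subsample(fmap, s=2):
    """Resolution reduction: average over non-overlapping s x s blocks (assumed pooling)."""
    return [[sum(fmap[i + a][j + b] for a in range(s) for b in range(s)) / (s * s)
             for j in range(0, len(fmap[0]) - s + 1, s)]
            for i in range(0, len(fmap) - s + 1, s)]


def tanh_map(fmap):
    return [[math.tanh(v) for v in row] for row in fmap]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def forward(img, kernel, fc_w, fc_b):
    """conv -> tanh -> subsample -> tanh -> fully connected -> softmax."""
    h = tanh_map(conv2d_valid(img, kernel))
    h = tanh_map(subsample(h))
    flat = [v for row in h for v in row]
    logits = [sum(w * x for w, x in zip(ws, flat)) + b for ws, b in zip(fc_w, fc_b)]
    return softmax(logits)


# Toy usage: 6x6 image -> 4x4 conv map -> 2x2 after subsampling -> 3 class probabilities.
img = [[(i * 6 + j) / 36 for j in range(6)] for i in range(6)]
kernel = [[0.1, 0.0, -0.1] for _ in range(3)]
fc_w = [[0.5, -0.5, 0.25, 0.0], [0.0, 0.1, 0.2, 0.3], [-0.2, 0.0, 0.0, 0.4]]
fc_b = [0.0, 0.0, 0.0]
probs = forward(img, kernel, fc_w, fc_b)
```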

Given a set of training examples {(x_n, y_n)}:

Loss: the negative log-likelihood (NLL) of the correct labels

Optimization: stochastic gradient descent (SGD)
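Training minimizes L(θ) = Σ_n -log p_θ(y_n | x_n) with SGD, one example at a time. A minimal sketch, assuming a linear softmax classifier stands in for the full CNN (in the network the same gradient would be backpropagated through all layers); function names are hypothetical:

```python
import math


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def nll(logits, y):
    """Negative log-likelihood of the correct class y under softmax(logits)."""
    return -math.log(softmax(logits)[y])


def sgd_step(W, b, x, y, lr=0.1):
    """One SGD step on a single example (x, y) for logits z = W x + b.

    Uses dNLL/dz_k = p_k - [k == y]; W and b are updated in place.
    Returns the loss measured before the update.
    """
    z = [sum(wi * xi for wi, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    p = softmax(z)
    for k in range(len(W)):
        g = p[k] - (1.0 if k == y else 0.0)
        W[k] = [wi - lr * g * xi for wi, xi in zip(W[k], x)]
        b[k] -= lr * g
    return nll(z, y)


# Toy usage: 3 classes, 2 input features; repeated steps lower the loss on (x, y).
W = [[0.0, 0.0] for _ in range(3)]
b = [0.0, 0.0, 0.0]
losses = [sgd_step(W, b, [1.0, 2.0], 1) for _ in range(5)]
```

With all-zero weights the first loss is log 3 (uniform prediction over three classes), and each later value is smaller.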

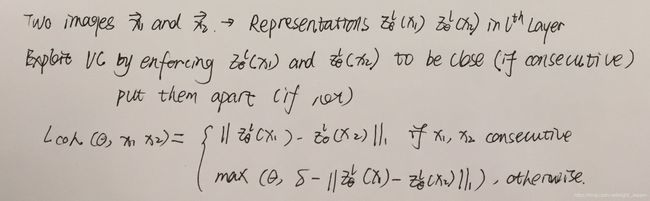

2.2 Leveraging Video Coherence

To limit the number of hyper-parameters, the supervised task and the temporal-coherence task are given the same weight, and the minimization is achieved by alternating stochastic updates from each of the two tasks.
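The notes do not write out the coherence objective. A minimal sketch, assuming the contrastive form also used by siamese networks: hidden representations `z1`, `z2` of two video frames are pulled together (L1 distance) when the frames are consecutive, and pushed at least a margin `delta` apart otherwise. The L1 distance, the hinge, and `delta = 1.0` are assumptions here:

```python
def l1_distance(a, b):
    return sum(abs(u - v) for u, v in zip(a, b))


def coherence_loss(z1, z2, consecutive, delta=1.0):
    """Temporal-coherence loss on two hidden representations z1, z2.

    Consecutive frames: penalize their L1 distance (pull together).
    Non-consecutive frames: hinge that is zero once they are >= delta apart (push apart).
    """
    d = l1_distance(z1, z2)
    if consecutive:
        return d
    return max(0.0, delta - d)
```

The hinge keeps the trivial solution (mapping every frame to the same point) from minimizing the loss, since non-consecutive frames would then cost `delta` each.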

Algorithm
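The alternating scheme can be sketched as a generic loop. The update callbacks `sup_step` and `coh_step` are hypothetical placeholders for one gradient step on the supervised loss and on the coherence loss; sampling consecutive and random frame pairs with equal probability is also an assumption:

```python
import random


def train_alternating(labeled, video, sup_step, coh_step, n_iters, seed=0):
    """Alternate one supervised update with one temporal-coherence update.

    labeled: list of (x, y) pairs; video: list of frames in temporal order.
    sup_step(x, y) and coh_step(x1, x2, consecutive) are caller-supplied
    (hypothetical) update functions; both tasks get one update per iteration.
    """
    rng = random.Random(seed)
    for _ in range(n_iters):
        # Supervised task: one labeled example.
        x, y = rng.choice(labeled)
        sup_step(x, y)
        # Coherence task: a consecutive pair, or a random pair of distinct frames.
        if rng.random() < 0.5:
            t = rng.randrange(len(video) - 1)
            coh_step(video[t], video[t + 1], True)
        else:
            i, j = rng.sample(range(len(video)), 2)
            coh_step(video[i], video[j], abs(i - j) == 1)
```

Because each task gets exactly one update per iteration, the two are weighted equally without an extra trade-off hyper-parameter, which is the motivation given above.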

3. Previous and Related Work

Temporal Coherence Learning

Wiskott and Sejnowski

Learn invariant features from unsupervised video based on a reconstruction loss -> the features can be used for a supervised task, but are not trained at the same time

Becker

Uses a fully connected neural network that introduces extra neurons, called contextual gating units

Applied to rotating objects; showed improvements over not taking the temporal context into account

Becker and Hinton

IMAX method

Maximizes the mutual information between different output units (applied to learning spatial or temporal coherence)

Drawback

- Can get trapped in poor local minima

Semi-Supervised Learning

Transductive

E.g. TSVMs, which maximize the margin on a set of unlabeled examples that come from the same distribution as the training data.

These methods assume that the decision rule lies in a region of low density.

Graph-based

Use the unlabeled data by constructing a graph based on a chosen similarity metric

Used in an unsupervised rather than a supervised setup

Drawbacks

- Computational cost

- Assume that the decision rule lies in a region of low density

4. Experiments

Object and face recognition

4.1 Object Recognition

4.1.1 Datasets

- COIL-100: 100 objects, each image 72x72 pixels, 72 different views per object (one every 5 degrees)

- COIL-100-like video dataset

- Animal set

http://ml.nec-labs.com/download/data/videoembed.

4.1.2 Methods

- SVM using a polynomial kernel

- A nearest neighbor classifier applied directly to the images

- An eigenspace plus spline recognition model

- A SpinGlass Markov Random Field

- A hierarchical view-tuned network for visual recognition tasks

SpinGlass MRF

Use an energy function

Reduce space

VTU (view-tuned units)

- Build a hierarchy of biologically inspired feature detectors

- Applies Gabor filters at four orientations

4.1.3 Results

- 1. Use a standard CNN without any temporal information to establish a baseline for the authors' contribution.

- 2. Explore scenarios that differ in the source of the unlabeled video (the datasets listed above). In all of these scenarios, the labeled training and test data for the supervised task come from COIL-100.

- For comparability with the settings used in other studies, the authors chose two experimental setups:

  - All 100 objects of COIL-100 are considered in the experiment

  - Only 30 labeled objects out of the 100 are studied (for both training and testing)

In either case, 4 of the 72 views per object (at 0, 90, 180, and 270 degrees) are used for training, and the remaining 68 views are used for testing.

All reported numbers are the classification rate on the test data, averaged over 10 training runs.
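The view split is simple index arithmetic. A sketch assuming view `i` of an object was captured at `5 * i` degrees (an assumption about the file ordering, not stated in the notes); the constant and function names are hypothetical:

```python
VIEW_STEP_DEG = 5                 # angular spacing between views
N_VIEWS = 360 // VIEW_STEP_DEG    # 72 views per object
TRAIN_ANGLES = (0, 90, 180, 270)  # labeled training views


def split_views():
    """Return (train_indices, test_indices) over the 72 views of one object."""
    train = [angle // VIEW_STEP_DEG for angle in TRAIN_ANGLES]
    test = [i for i in range(N_VIEWS) if i not in train]
    return train, test


train_idx, test_idx = split_views()
```

Under that assumption the training views are indices 0, 18, 36, and 54, leaving 68 test views per object.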

4.2 Face Recognition

Dataset

10 different grayscale images for each of 40 distinct subjects

The images are placed in a “video” sequence by concatenating 40 segments, one per subject, ordered according to the numbering in the dataset

- k = 1, 2, or 5 labeled images per subject; compared against the baselines NN, PCA, LDA, and MRF
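A sketch of how such a pseudo-video yields coherence pairs, using hypothetical (subject, image) index pairs in place of pixel data. Treating every pair of neighboring frames as consecutive is an assumption:

```python
def build_face_video(n_subjects=40, n_images=10):
    """Concatenate one segment per subject, in dataset order, into a 'video'.

    Each frame is a (subject, image) index pair; real code would load pixels.
    """
    return [(s, i) for s in range(n_subjects) for i in range(n_images)]


def consecutive_pairs(video):
    """Neighboring frames, treated as temporally coherent pairs."""
    return list(zip(video, video[1:]))


video = build_face_video()
pairs = consecutive_pairs(video)
```

By construction, 39 of the 399 neighboring pairs straddle a segment boundary and therefore show two different subjects; the other 360 pair two images of the same person.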

5. Conclusion

- 1. Video acts as a pseudo-supervisory signal that improves the internal representation of images by preserving translations in consecutive frames.

- 2. Labeled and unlabeled data are trained on simultaneously: the temporal coherence of the unlabeled data acts as a regularizer for the supervised task.

- 3. Also potentially useful for non-visual tasks where sequence information has structure.

- Probably, the more similar the objects in the unlabeled video are to those of the supervised task, the more beneficial the data is.

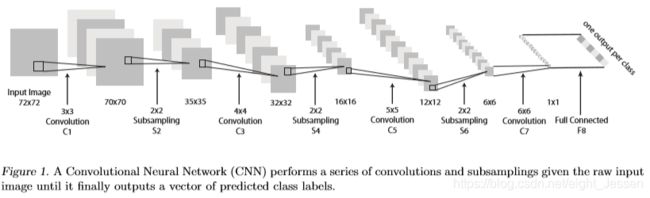

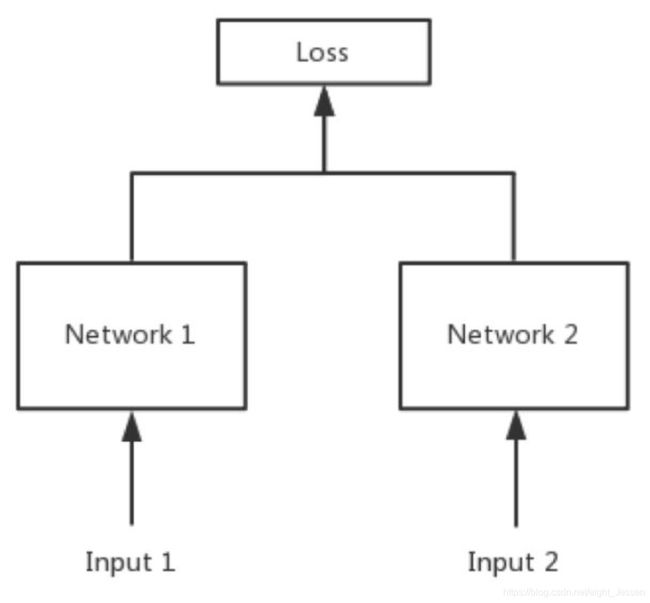

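Siamese network

A siamese network is implemented by weight sharing: the left and right branches have exactly the same weights.

If the weights are not shared, it is called a pseudo-siamese network.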
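The difference between siamese and pseudo-siamese networks comes down to whether one weight set is reused. A toy sketch, assuming each branch is a single linear layer followed by tanh and the embeddings are compared by Euclidean distance (all names are hypothetical):

```python
import math


def embed(weights, x):
    """One branch: a tiny linear layer followed by tanh (illustrative only)."""
    return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]


def euclidean(a, b):
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def siamese_distance(weights, x1, x2):
    """Siamese: both inputs pass through the SAME weights."""
    return euclidean(embed(weights, x1), embed(weights, x2))


def pseudo_siamese_distance(weights1, weights2, x1, x2):
    """Pseudo-siamese: each input has its own branch weights."""
    return euclidean(embed(weights1, x1), embed(weights2, x2))
```

With shared weights, identical inputs always map to distance 0; with separate weights they need not, which is why the pseudo-siamese variant suits inputs of different kinds.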

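Uses

- Measuring the similarity of two inputs -> loss (binary classification)

- A siamese network suits cases where the two inputs are fairly similar; a pseudo-siamese network suits cases where the two inputs differ to some extent.

Concrete applications:

- Semantic similarity of words, signature matching, face matching

- Handwriting recognition

- Images: visual tracking algorithms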

https://link.springer.com/chapter/10.1007/978-3-319-48881-3_56

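Choice of loss function

- Softmax

- The traditional siamese network uses the contrastive loss

- Cosine distance

- exp function

Cosine vs. exp in NLP

Cosine is better suited to word-level semantic similarity, while exp is better suited to sentence- and paragraph-level text similarity. A possible reason is that cosine only measures the angle between the two vectors, whereas exp also retains information about their lengths.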
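The length argument can be checked numerically. A sketch assuming "exp" means the similarity exp(-||a - b||_1), as used in some siamese LSTM models for sentence similarity; the notes do not specify the exact form:

```python
import math


def cosine_similarity(a, b):
    """Depends only on the angle between a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def exp_similarity(a, b):
    """exp(-L1 distance): 1 for identical vectors, decays toward 0 as they move apart."""
    return math.exp(-sum(abs(x - y) for x, y in zip(a, b)))


a = [1.0, 2.0]
b = [2.0, 4.0]  # same direction as a, twice the length
```

Scaling a vector leaves the cosine similarity at 1, while the exp similarity drops to exp(-3), roughly 0.05, so only the latter reflects the length difference.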

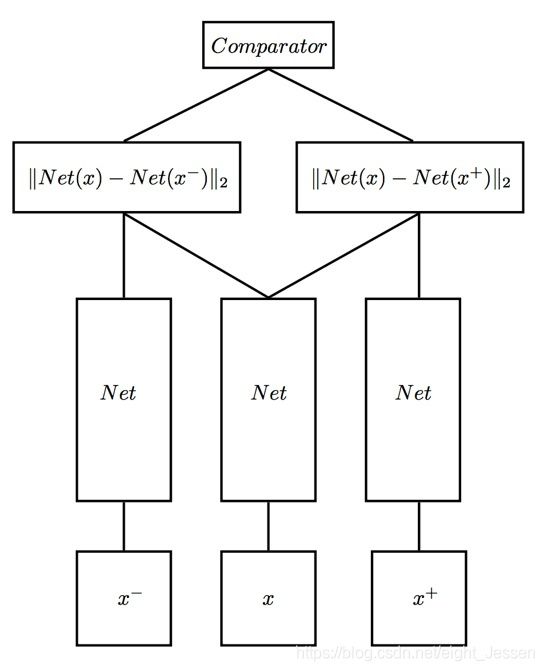

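Triplet network

There are three inputs: one positive plus two negatives, or one negative plus two positives. The training objective makes the distance between samples of the same class as small as possible and the distance between samples of different classes as large as possible.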
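A sketch of a triplet loss in the common anchor/positive/negative form, which is an assumption since the notes describe the input more loosely. It uses plain Euclidean distance and a hypothetical margin of 0.2:

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)
```

The loss only pushes when the same-class distance is not sufficiently smaller than the different-class distance, which is the "small within class, large between classes" goal stated above.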

Mutual Information

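Mutual information measures how strongly two random variables are related, i.e., how much knowing one random variable reduces the uncertainty about the other. Its minimum value is 0, meaning that knowing one variable tells us nothing about the other; it is at most the entropy H(X), reached when knowing Y completely removes the uncertainty about X.

Uncertainty about X: H(X)

Uncertainty about X once Y is known: H(X|Y)

The reduction in uncertainty is the mutual information: I(X;Y) = H(X) - H(X|Y)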
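For discrete variables, I(X;Y) can be computed directly from a joint probability table. A minimal sketch (base-2 logarithms, so the result is in bits; function names are hypothetical):

```python
import math


def entropy(p):
    """H in bits of a discrete distribution p (0 * log 0 taken as 0)."""
    return -sum(q * math.log2(q) for q in p if q > 0)


def mutual_information(joint):
    """I(X;Y) in bits from a joint table joint[x][y] whose entries sum to 1.

    Uses I(X;Y) = sum_{x,y} p(x,y) log2(p(x,y) / (p(x) p(y))),
    which equals H(X) - H(X|Y).
    """
    px = [sum(row) for row in joint]        # marginal of X
    py = [sum(col) for col in zip(*joint)]  # marginal of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi
```

For two independent fair coins the result is 0; when X = Y for a fair coin it equals H(X) = 1 bit, the two extremes described above.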

Gabor Filters

Introduction

Band-pass filters, used in image processing for feature extraction, texture analysis, and stereo disparity estimation

Impulse Response

Created by multiplying a Gaussian envelope function with a complex oscillation
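A sketch of this impulse response as a discrete kernel. The parametrization (width `sigma`, orientation `theta`, `wavelength`, aspect ratio `gamma`, phase `psi`) is a common textbook form assumed here, and the bank of four evenly spaced orientations only echoes the VTU notes above; the exact angles are an assumption:

```python
import cmath
import math


def gabor_kernel(size, sigma, theta, wavelength, gamma=1.0, psi=0.0):
    """Complex Gabor kernel: Gaussian envelope times a complex sinusoid.

    size: odd kernel width; theta: orientation in radians; wavelength: period
    of the oscillation in pixels; gamma: aspect ratio; psi: phase offset.
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the oscillation runs along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = cmath.exp(1j * (2 * math.pi * xr / wavelength + psi))
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# Assumed bank: four orientations at 0, 45, 90, and 135 degrees.
bank = [gabor_kernel(7, sigma=2.0, theta=k * math.pi / 4, wavelength=4.0) for k in range(4)]
```

The real part gives an even (cosine-like) filter and the imaginary part an odd (sine-like) one; their magnitude is the Gaussian envelope.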

http://homepages.inf.ed.ac.uk/rbf/CVonline/LOCAL_COPIES/TRAPP1/filter.html