iOS video player: FFmpeg decoding + OpenGL YUV rendering + OpenAL audio, with fast and slow playback

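Some usages from older FFmpeg versions are being deprecated. For example, looking up the decoder through streams[videoStream]->codec no longer works: it produces deprecation warnings or outright errors. This player is therefore built on the newer FFmpeg API.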

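First, the player's features (an Android version is also available):

It supports audio/video synchronisation, fast and slow playback, and both local and network video. In my tests it played every network stream I tried; I have not yet found one it cannot play. This is a complete player, and the source download links are at the end of the article. This article first covers how FFmpeg is used. The player's decode-and-play flow is shown in the figure below:

The Android version comes with a diagram of the threads involved. Four threads are used in total. The iOS version is essentially the same, except that iOS draws with OpenGL on the main thread while Android draws on its default OpenGL thread.

The source code implements every step of the flow in the figure above except playing RGB with OpenGL. The path currently in use renders the YUV data directly with OpenGL.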

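Now the performance test: CPU usage while playing 720p and 1080p video. The test phone is an iPhone 6 that has been in use for three years, so it is fairly old. 720p:

CPU usage is only a little over 10%. High-definition live streams are usually at this resolution, so the performance is quite good.

Now full HD, 1080p:

CPU usage is around 50%. You can test it yourself: playing RGB with OpenGL should use considerably more CPU than this, and converting every frame to a UIImage would probably be too slow to play 1080p at all.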
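To give a rough sense of why the YUV path is lighter, here is a minimal sketch in plain C that compares the bytes per frame of planar YUV 4:2:0 with packed RGB24 and RGBA. The function names are illustrative and are not part of the player's source. The sketch only counts bytes; it says nothing about the extra CPU time the RGB path also spends on colour conversion.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes in one tightly packed planar YUV 4:2:0 frame:
   a full-size Y plane plus two chroma planes at half resolution
   in each dimension (rounded up for odd sizes). */
size_t yuv420p_frame_bytes(int width, int height) {
    assert(width > 0 && height > 0);
    size_t luma = (size_t)width * (size_t)height;
    size_t chroma = (size_t)((width + 1) / 2) * (size_t)((height + 1) / 2);
    return luma + 2 * chroma;
}

/* Bytes in one tightly packed frame with a fixed number of bytes
   per pixel (3 for RGB24, 4 for RGBA). */
size_t packed_frame_bytes(int width, int height, int bytes_per_pixel) {
    assert(width > 0 && height > 0 && bytes_per_pixel > 0);
    return (size_t)width * (size_t)height * (size_t)bytes_per_pixel;
}
```

For a 1920x1080 frame this gives 3,110,400 bytes for YUV 4:2:0, 6,220,800 bytes for RGB24 and 8,294,400 bytes for RGBA, so the YUV path moves half as much data per frame as RGB24 before any conversion cost is counted.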

Because the simulator has no GPU of its own and emulates it on the CPU, this code keeps the CPU fully loaded when run in the simulator.

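I won't go into FFmpeg details here; there is plenty of material online. Below is part of the player code, mainly the decoding part, with brief notes: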

FFmpegDecoder.h

//
//  FFmpegDecoder.h
//  FFmpeg-project
//
//  Created by huizai on 2017/9/14.
//  Copyright © 2017 huizai. All rights reserved.
//

#import

FFmpegDecoder.m

//
//  FFmpegDecoder.m
//  FFmpeg-project
//
//  Created by huizai on 2017/9/14.
//  Copyright © 2017 huizai. All rights reserved.
//

#import "FFmpegDecoder.h"

// Parameters of the resampled audio output
#define SAMPLE_SIZE 16      // bits per sample
#define SAMPLE_RATE 44100   // Hz
#define CHANNEL 2           // stereo

@implementation FFmpegDecoder{
    char errorBuf[1024];
    NSLock *lock;
    AVFormatContext * pFormatCtx;
    AVCodecContext * pVideoCodecCtx;
    AVCodecContext * pAudioCodecCtx;
    AVFrame * pYuv;             // most recently decoded video frame
    AVFrame * pPcm;             // most recently decoded audio frame
    AVCodec * pVideoCodec;      // video decoder
    AVCodec * pAudioCodec;      // audio decoder
    struct SwsContext * pSwsCtx;
    SwrContext * pSwrCtx;
    char * rgb;
    UIImage * tempImage;
}

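- (id)init{
    if (self = [super init]) {
        [self initParam];
        return self;
    }
    return nil;
}

- (void)initParam{
    // Required before FFmpeg 4.0; deprecated since 4.0, where explicit registration is no longer needed
    av_register_all();
    avformat_network_init();
    _pcmDataLength = 0;
    _sampleRate = SAMPLE_RATE;
    _sampleSize = SAMPLE_SIZE;
    _channel = CHANNEL;
    // -1 means "no such stream yet", so stream 0 is not matched by accident
    _videoStreamIndex = -1;
    _audioStreamIndex = -1;
    lock = [[NSLock alloc]init];
}

// Convert an AVRational to a double; returns 0 if the numerator or denominator is 0
- (double)r2d:(AVRational)r{
    return r.num == 0 || r.den == 0 ? 0.:(double)r.num/(double)r.den;
}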

// Open the input and set up the decoders
- (BOOL)OpenUrl:(const char*)path{
    [self Close];
    [lock lock];
    int re = avformat_open_input(&pFormatCtx, path, 0, 0);
    if (re != 0) {
        av_strerror(re, errorBuf, sizeof(errorBuf));
        [lock unlock];
        return NO;
    }
    re = avformat_find_stream_info(pFormatCtx, NULL);
    if (re < 0) {
        av_strerror(re, errorBuf, sizeof(errorBuf));
        [lock unlock];
        return NO;
    }
    // duration is in AV_TIME_BASE units (microseconds). Multiply before dividing
    // so the fractional second is not truncated, and read it after the stream
    // info has been probed.
    _totalMs = (pFormatCtx->duration == AV_NOPTS_VALUE) ? 0 : (int)(pFormatCtx->duration * 1000 / AV_TIME_BASE);
    _videoStreamIndex = -1;
    _audioStreamIndex = -1;
    // Find the audio and video decoders and open them
    for (int i = 0; i < pFormatCtx->nb_streams; i++) {
        AVStream *stream = pFormatCtx->streams[i];
        enum AVMediaType type = stream->codecpar->codec_type;
        // Only allocate a codec context for streams that will actually be decoded;
        // the first video stream and the first audio stream are used.
        if (type == AVMEDIA_TYPE_VIDEO) {
            if (pVideoCodecCtx) continue;
        } else if (type == AVMEDIA_TYPE_AUDIO) {
            if (pAudioCodecCtx) continue;
        } else {
            continue;
        }
        AVCodec * codec = avcodec_find_decoder(stream->codecpar->codec_id);
        AVCodecContext * codecCtx = codec ? avcodec_alloc_context3(codec) : NULL;
        if (!codecCtx) {
            snprintf(errorBuf, sizeof(errorBuf), "no decoder available for stream %d", i);
            [lock unlock];
            return NO;
        }
        avcodec_parameters_to_context(codecCtx, stream->codecpar);
        int err = avcodec_open2(codecCtx, codec, NULL);
        if (err != 0) {
            av_strerror(err, errorBuf, sizeof(errorBuf));
            printf("open %s codec error:%s\n", type == AVMEDIA_TYPE_VIDEO ? "video" : "audio", errorBuf);
            avcodec_free_context(&codecCtx);
            [lock unlock];
            return NO;
        }
        if (type == AVMEDIA_TYPE_VIDEO) {
            printf("video\n");
            _videoStreamIndex = i;
            pVideoCodec = codec;
            pVideoCodecCtx = codecCtx;
        } else {
            printf("audio\n");
            _audioStreamIndex = i;
            pAudioCodec = codec;
            pAudioCodecCtx = codecCtx;
            // Output keeps the stream's own sample rate
            _sampleRate = codecCtx->sample_rate;
        }
    }
    if (pAudioCodecCtx) {
        printf("open acodec success! sampleRate:%d channel:%d sampleSize:%d fmt:%d\n",_sampleRate,_channel,_sampleSize,pAudioCodecCtx->sample_fmt);
    }
    [lock unlock];
    return YES;
}

// Read one audio or video packet
- (void)Read:(AVPacket*)pkt{
    // No lock is taken here; the caller locks around this call in its worker threads
    if (!pkt) {
        return;
    }
    if (!pFormatCtx) {
        av_packet_unref(pkt);
        return;
    }
    int err = av_read_frame(pFormatCtx, pkt);
    if (err != 0) {
        av_strerror(err, errorBuf, sizeof(errorBuf));
        printf("av_read_frame error:%s\n",errorBuf);
        // Leaves pkt->size at 0, which is what the caller checks
        av_packet_unref(pkt);
        return;
    }
}

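// Decode one packet. Audio and video share this method; the packet's stream
// index decides which decoder and which frame are used.
- (void)Decode:(AVPacket*)pkt{
    if (!pFormatCtx || !pkt) {
        return;
    }
    // Allocate the AVFrames on first use
    if (pYuv == NULL) {
        pYuv = av_frame_alloc();
    }
    if (pPcm == NULL) {
        pPcm = av_frame_alloc();
    }
    AVCodecContext * pCodecCtx;
    AVFrame * tempFrame;
    if (pkt->stream_index == _videoStreamIndex) {
        pCodecCtx = pVideoCodecCtx;
        tempFrame = pYuv;
    }else if (pkt->stream_index == _audioStreamIndex){
        pCodecCtx = pAudioCodecCtx;
        tempFrame = pPcm;
    }else{
        return;
    }
    if (!pCodecCtx || !tempFrame) {
        return;
    }
    int re = avcodec_send_packet(pCodecCtx, pkt);
    if (re != 0) {
        return;
    }
    // The decoder buffers internally, so a frame is not always available
    // right after a packet is sent (AVERROR(EAGAIN) means "send more input").
    re = avcodec_receive_frame(pCodecCtx, tempFrame);
    if (re != 0) {
        return;
    }
    // Take the pts from the decoded frame and convert it to milliseconds.
    // Despite their names, vFps and aFps hold the current video and audio
    // positions in ms, not frame rates.
    if (tempFrame->pts != AV_NOPTS_VALUE) {
        if (pkt->stream_index == _videoStreamIndex) {
            _vFps = (pYuv->pts *[self r2d:(pFormatCtx->streams[_videoStreamIndex]->time_base)])*1000;
        }else if (pkt->stream_index == _audioStreamIndex){
            _aFps = (pPcm->pts * [self r2d:(pFormatCtx->streams[_audioStreamIndex]->time_base)])*1000;
        }
    }
    printf("[D]");
    return;
}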

// Copy one plane row by row, dropping the padding at the end of each source
// row (linesize can be larger than the visible width).
void copyDecodedFrame(unsigned char *src, unsigned char *dist,int linesize, int width, int height)
{
    if (src == NULL || dist == NULL) {
        return;
    }
    width = MIN(linesize, width);
    for (int i = 0; i < height; ++i) {
        memcpy(dist, src, width);
        dist += width;
        src += linesize;
    }
}

// Remove the row padding from the decoded frame and repack it into the
// structure the OpenGL view expects. Assumes a planar 4:2:0 frame.
// On failure the frame is returned unchanged, with no buffers allocated.
- (H264YUV_Frame)YuvToGlData:(H264YUV_Frame)yuvFrame{
    if (!pFormatCtx || !pYuv || pYuv->linesize[0] <= 0 || pYuv->width <= 0 || pYuv->height <= 0) {
        return yuvFrame;
    }
    // Sizes of the tightly packed planes
    unsigned int lumaLength= (pYuv->height)*(MIN(pYuv->linesize[0], pYuv->width));
    unsigned int chromBLength=((pYuv->height)/2)*(MIN(pYuv->linesize[1], (pYuv->width)/2));
    unsigned int chromRLength=((pYuv->height)/2)*(MIN(pYuv->linesize[2], (pYuv->width)/2));
    void * luma = malloc(lumaLength);
    void * chromaB = malloc(chromBLength);
    void * chromaR = malloc(chromRLength);
    if (!luma || !chromaB || !chromaR) {
        free(luma);
        free(chromaB);
        free(chromaR);
        return yuvFrame;
    }
    yuvFrame.luma.dataBuffer = luma;
    yuvFrame.chromaB.dataBuffer = chromaB;
    yuvFrame.chromaR.dataBuffer = chromaR;
    yuvFrame.width=pYuv->width;
    yuvFrame.height=pYuv->height;
    copyDecodedFrame(pYuv->data[0], luma, pYuv->linesize[0],
                     pYuv->width, pYuv->height);
    copyDecodedFrame(pYuv->data[1], chromaB, pYuv->linesize[1],
                     pYuv->width / 2, pYuv->height / 2);
    copyDecodedFrame(pYuv->data[2], chromaR, pYuv->linesize[2],
                     pYuv->width / 2, pYuv->height / 2);
    return yuvFrame;
}

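// Audio resampling: convert whatever format the stream uses into PCM with a
// known sample rate, channel count and sample format, which the audio player
// can then play. dataBuf must hold at least 10000 bytes (see outBufBytes).
- (int)ToPCM:(char*)dataBuf{
    if (!pFormatCtx || !pPcm || !pAudioCodecCtx || !dataBuf) {
        return 0;
    }
    printf("sample_rate:%d,channels:%d,sample_fmt:%d,channel_layout:%llu,nb_samples:%d\n",pAudioCodecCtx->sample_rate,pAudioCodecCtx->channels,pAudioCodecCtx->sample_fmt,(unsigned long long)pAudioCodecCtx->channel_layout,pPcm->nb_samples);
    [lock lock];
    // Set up the resampler on first use
    if (pSwrCtx == NULL) {
        // Some streams leave channel_layout unset; fall back to the default
        // layout for the channel count.
        int64_t inLayout = pAudioCodecCtx->channel_layout
            ? (int64_t)pAudioCodecCtx->channel_layout
            : av_get_default_channel_layout(pAudioCodecCtx->channels);
        pSwrCtx = swr_alloc_set_opts(NULL,
                                     AV_CH_LAYOUT_STEREO,         // output: 2-channel stereo
                                     AV_SAMPLE_FMT_S16,           // output: signed 16-bit samples
                                     _sampleRate,                 // output sample rate
                                     inLayout,                    // input channel layout
                                     pAudioCodecCtx->sample_fmt,  // input sample format
                                     pAudioCodecCtx->sample_rate, // input sample rate
                                     0, NULL);
        if (pSwrCtx == NULL || swr_init(pSwrCtx) < 0) {
            swr_free(&pSwrCtx);
            [lock unlock];
            return 0;
        }
    }
    // swr_convert counts output samples per channel, not bytes, so cap the
    // count at what fits in the caller's 10000-byte buffer.
    const int outBufBytes = 10000;
    const int maxOutSamples = outBufBytes / (CHANNEL * SAMPLE_SIZE / 8);
    uint8_t * data[1];
    data[0] = (uint8_t*)dataBuf;
    int len = swr_convert(pSwrCtx, data, maxOutSamples, (const uint8_t**)pPcm->data, pPcm->nb_samples);
    if (len <= 0) {
        [lock unlock];
        return 0;
    }
    // align = 1 gives the exact byte count; align = 0 would round the sample
    // count up for alignment.
    int outSize = av_samples_get_buffer_size(NULL,
                                             CHANNEL,
                                             len,
                                             AV_SAMPLE_FMT_S16, 1);
    _pcmDataLength = outSize;
    NSLog(@"nb_samples:%d,dst_samples:%d,outSize:%d",pPcm->nb_samples,len,outSize);
    [lock unlock];
    return outSize;
}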

// The video counterpart of audio resampling: convert YUV to RGB (or BGR).
// The result can be drawn with the OpenGL fixed-function pipeline, which is
// simpler because no shader has to be written, or converted further into a
// UIImage. It is not needed when OpenGL plays the YUV data directly.
- (BOOL)ToRGB:(char*)outBuf andWithOutHeight:(int)outHeight andWithOutWidth:(int)outWidth{
    if (!pFormatCtx || !pYuv || !pVideoCodecCtx || !outBuf || pYuv->linesize[0] <= 0) {
        return NO;
    }
    // Convert YUV to RGB and scale the frame to the requested size
    pSwsCtx = sws_getCachedContext(pSwsCtx, pVideoCodecCtx->width,
                                   pVideoCodecCtx->height,
                                   pVideoCodecCtx->pix_fmt,
                                   outWidth, outHeight,
                                   AV_PIX_FMT_RGB24,
                                   SWS_BICUBIC, NULL, NULL, NULL);
    if (!pSwsCtx) {
        printf("sws_getCachedContext fail!\n");
        return NO;
    }
    uint8_t * data[AV_NUM_DATA_POINTERS]={0};
    data[0] = (uint8_t*)outBuf;
    int linesize[AV_NUM_DATA_POINTERS] = {0};
    // RGB24 uses 3 bytes per pixel; each row is padded to outWidth * 4 bytes.
    // ToImage uses the same stride, and outBuf must hold at least
    // outWidth * outHeight * 4 bytes.
    linesize[0] = outWidth * 4;
    int h = sws_scale(pSwsCtx, (const uint8_t* const*)pYuv->data, pYuv->linesize, 0, pVideoCodecCtx->height,data,linesize);
    // sws_scale returns the height of the output slice
    return h > 0;
}

// Convert the RGB buffer to a UIImage (iOS specific). The pixel format and row
// stride here must match what ToRGB produced (RGB, RGBA, and so on).
- (UIImage*)ToImage:(char*)dataBuf andWithOutHeight:(int)outHeight andWithOutWidth:(int)outWidth{
    if (!dataBuf || outWidth <= 0 || outHeight <= 0) {
        return nil;
    }
    // Same row stride as in ToRGB
    size_t bytesPerRow = (size_t)outWidth * 4;
    // Copy the pixels so the image stays valid after the caller frees dataBuf
    CFDataRef pixelData = CFDataCreate(kCFAllocatorDefault, (const UInt8*)dataBuf, bytesPerRow * outHeight);
    if (!pixelData) {
        return nil;
    }
    CGDataProviderRef provider = CGDataProviderCreateWithCFData(pixelData);
    CFRelease(pixelData);
    CGBitmapInfo bitmapInfo = kCGBitmapByteOrderDefault;
    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
    CGImageRef cgImage = CGImageCreate(outWidth,
                                       outHeight,
                                       8,              // bits per component
                                       24,             // bits per pixel (RGB24)
                                       bytesPerRow,
                                       colorSpace,
                                       bitmapInfo,
                                       provider,
                                       NULL,
                                       NO,
                                       kCGRenderingIntentDefault);
    UIImage * image = cgImage ? [UIImage imageWithCGImage:cgImage] : nil;
    CGImageRelease(cgImage);
    CGColorSpaceRelease(colorSpace);
    CGDataProviderRelease(provider);
    tempImage = image;
    return image;
}

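- (void)Close{
    [lock lock];
    if (pFormatCtx) {
        avformat_close_input(&pFormatCtx);
    }
    if (pSwrCtx) {
        swr_free(&pSwrCtx);
    }
    if (pSwsCtx) {
        sws_freeContext(pSwsCtx);
        pSwsCtx = NULL;   // avoid a double free on the next Close
    }
    // avcodec_free_context closes the codec, frees the context allocated by
    // avcodec_alloc_context3 and resets the pointer to NULL
    avcodec_free_context(&pVideoCodecCtx);
    avcodec_free_context(&pAudioCodecCtx);
    if (pYuv) {
        av_frame_free(&pYuv);
    }
    if (pPcm) {
        av_frame_free(&pPcm);
    }
    [lock unlock];
}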

- (NSString*)GetError{
    [lock lock];
    NSString * err = [NSString stringWithUTF8String:errorBuf];
    [lock unlock];
    return err;
}

@end
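The row-by-row copy in copyDecodedFrame exists because FFmpeg may pad each row of a plane, so linesize can be larger than the visible width, while the OpenGL upload wants tightly packed rows. Here is a minimal standalone sketch of that idea in plain C. The names pack_plane and pack_plane_demo are illustrative and do not appear in the player's source.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy `height` rows of `width` bytes from a plane whose rows start
   `src_stride` bytes apart into a tightly packed destination buffer.
   Returns the number of bytes written, or 0 on invalid arguments. */
size_t pack_plane(const uint8_t *src, int src_stride,
                  uint8_t *dst, int width, int height) {
    if (src == NULL || dst == NULL || width <= 0 || height <= 0 ||
        src_stride < width) {
        return 0;
    }
    for (int row = 0; row < height; ++row) {
        memcpy(dst + (size_t)row * (size_t)width,
               src + (size_t)row * (size_t)src_stride,
               (size_t)width);
    }
    return (size_t)width * (size_t)height;
}

/* Small self-check: a 4x2 plane stored with a stride of 6 bytes.
   The two padding bytes at the end of each row must be dropped.
   Returns 1 if the packed result matches. */
int pack_plane_demo(void) {
    const uint8_t padded[12] = {1, 2, 3, 4, 0, 0,
                                5, 6, 7, 8, 0, 0};
    const uint8_t expected[8] = {1, 2, 3, 4, 5, 6, 7, 8};
    uint8_t packed[8] = {0};
    size_t written = pack_plane(padded, 6, packed, 4, 2);
    assert(written == sizeof(packed));
    return memcmp(packed, expected, sizeof(expected)) == 0;
}
```

In the decoder this would be applied three times per frame: once to the Y plane at full size and once each to the two chroma planes at half width and half height.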

The multithreaded playback part:

// Start the GCD worker threads: read, decode and play
- (void)startPlayThread{
    dispatch_queue_t readQueue = dispatch_queue_create("readAudioQueeu", DISPATCH_QUEUE_CONCURRENT);
    dispatch_async(readQueue, ^{
        while (!isExit) {
            AVPacket *pkt = av_packet_alloc();
            if (pkt == NULL) {
                [NSThread sleepForTimeInterval:0.01];
                continue;
            }
            [lock lock];
            [decoder Read:pkt];
            [lock unlock];
            if (pkt->size <= 0) {
                // Nothing was read (error or end of stream): free the packet and retry
                av_packet_free(&pkt);
                [NSThread sleepForTimeInterval:0.01];
                continue;
            }
            if (pkt->stream_index == decoder.audioStreamIndex) {
                [lock lock];
                [decoder Decode:pkt];
                [lock unlock];
                av_packet_free(&pkt);
                char* tempData = (char*)malloc(10000);
                if (tempData == NULL) {
                    continue;
                }
                [lock lock];
                int length = [decoder ToPCM:tempData];
                [lock unlock];
                // Play through the audio player
                if (length > 0) {
                    [audioPlayer openAudioFromQueue:tempData andWithDataSize:length andWithSampleRate:decoder.sampleRate andWithAbit:decoder.sampleSize andWithAchannel:decoder.channel];
                }
                free(tempData);
                // Limit how much data OpenAL buffers internally: too much and the
                // video lags behind, too little and playback stutters. Tune as needed.
                NSLog(@"++++++++++++++%d",audioPlayer.m_numqueued);
                if (audioPlayer.m_numqueued > 10 && audioPlayer.m_numqueued < 35) {
                    [NSThread sleepForTimeInterval:0.01];
                }else if (audioPlayer.m_numqueued >= 35){
                    [NSThread sleepForTimeInterval:0.025];
                }
            }else if (pkt->stream_index == decoder.videoStreamIndex){
                // Hand the packet itself to the video thread, which frees it after decoding
                [lock lock];
                [vPktArr insertObject:[NSValue valueWithPointer:pkt] atIndex:0];
                [lock unlock];
            }else{
                av_packet_free(&pkt);
            }
        }
    });
    dispatch_queue_t videoPlayQueue = dispatch_queue_create("videoPlayQueeu", DISPATCH_QUEUE_CONCURRENT);
    dispatch_async(videoPlayQueue, ^{
        while (!isExit) {
            // Synchronise audio and video: hold the video back while it is ahead
            if ((decoder.vFps > decoder.aFps - 900 - _syncRate*1000)&& decoder.aFps>500) {
                NSLog(@"video ahead of audio, waiting");
                [NSThread sleepForTimeInterval:0.01];
                continue;
            }
            // Take the oldest queued packet; the array is only touched under the lock
            [lock lock];
            NSValue * value = [vPktArr lastObject];
            if (value) {
                [vPktArr removeLastObject];
            }
            [lock unlock];
            AVPacket* newPkt = (AVPacket*)[value pointerValue];
            if (!newPkt) {
                NSLog(@"video packet queue empty");
                [NSThread sleepForTimeInterval:0.01];
                continue;
            }
            [lock lock];
            [decoder Decode:newPkt];
            [lock unlock];
            av_packet_free(&newPkt);
            /*
             The commented-out code below converts YUV to RGB and RGB to a UIImage.
             Use it if you do not want to draw the YUV data directly with OpenGL.
             */
            // int width = 320;
            // int height = 250;
            // char* tempData = (char*)malloc(width*height*4 + 1);
            // [lock lock];
            // [decoder ToRGB:tempData andWithOutHeight:height andWithOutWidth:width];
            // image = [decoder ToImage:tempData andWithOutHeight:height andWithOutWidth:width];
            // free(tempData);
            // [lock unlock];
            // UIColor *color = [UIColor colorWithPatternImage:image];
            H264YUV_Frame yuvFrame;
            memset(&yuvFrame, 0, sizeof(H264YUV_Frame));
            [lock lock];
            yuvFrame = [decoder YuvToGlData:yuvFrame];
            [lock unlock];
            if (yuvFrame.width == 0 || yuvFrame.luma.dataBuffer == NULL) {
                continue;
            }
            dispatch_async(dispatch_get_main_queue(), ^{
                // [self.imageView setImage:image];
                // playView.backgroundColor = color;
                // The block holds its own copy of yuvFrame; the plane buffers are freed once drawn
                [gl displayYUV420pData:(H264YUV_Frame*)&yuvFrame];
                free(yuvFrame.luma.dataBuffer);
                free(yuvFrame.chromaB.dataBuffer);
                free(yuvFrame.chromaR.dataBuffer);
            });
        }
    });
}

For the remaining parts, such as rendering YUV with OpenGL and playing PCM with OpenAL, please download the source code. I will cover them in follow-up articles.
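The synchronisation test in the video thread above compares two millisecond positions derived from frame timestamps. Here is a minimal standalone sketch of both steps in plain C. The names pts_to_ms and video_should_wait are illustrative, the conversion uses integer arithmetic rather than the double conversion in r2d, and the 900 ms lead and the 500 ms start-up threshold are simply the constants from the listing passed in or restated, not recommended values.

```c
#include <assert.h>
#include <stdint.h>

/* Convert a timestamp expressed in time-base units (tb_num / tb_den
   seconds per tick) to milliseconds. Returns 0 for an unusable time base. */
int64_t pts_to_ms(int64_t pts, int tb_num, int tb_den) {
    if (tb_num == 0 || tb_den == 0) {
        return 0;
    }
    return pts * 1000 * tb_num / tb_den;
}

/* Decide whether the video thread should wait before decoding the next
   frame. It waits while the video position is later than the audio
   position minus a fixed lead and a user-set offset, but only once the
   audio position has passed 500 ms.
   Returns 1 to wait, 0 to go ahead. */
int video_should_wait(int64_t video_ms, int64_t audio_ms,
                      int64_t lead_ms, double sync_rate_s) {
    assert(lead_ms >= 0);
    if (audio_ms <= 500) {
        return 0;
    }
    int64_t threshold = audio_ms - lead_ms - (int64_t)(sync_rate_s * 1000.0);
    return video_ms > threshold;
}
```

With a 90 kHz time base, a pts of 90000 maps to 1000 ms. With the audio at 2000 ms and a lead of 900 ms, a video frame at 1000 ms goes ahead while one at 1200 ms waits.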

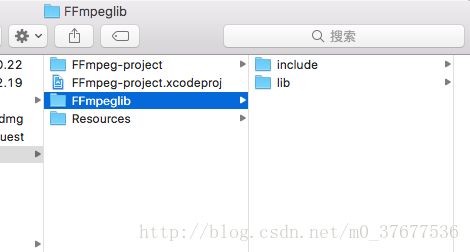

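The FFmpeg library files are too large for CSDN's upload size limit, so I have put them on Baidu Cloud. After downloading, unzip them directly into the FFmpeglib directory:

Downloading the source code costs CSDN points. I spent a lot of time on this demo, and besides helping people learn, I would also like to earn a few points.

Source code download:

http://download.csdn.net/download/m0_37677536/10153709

ffmpeg_lib download (you can also build it yourself):

http://download.csdn.net/download/m0_37677536/10153764

Or: https://pan.baidu.com/s/1eRDqlge password: c27z

How to build ffmpeg_lib for iOS:

http://blog.csdn.net/m0_37677536/article/details/77934844

Playing YUV with OpenGL on iOS:

http://blog.csdn.net/m0_37677536/article/details/78782501

Playing PCM with OpenAL on iOS: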

http://blog.csdn.net/m0_37677536/article/details/79013577