Scrapy in Practice: Spoofing Headers to Fake Your IP and Fool ip138.com

- Forging headers with a downloader middleware

- Util.py

- middlewares.py

- settings.py

- ip138.py

Crawlers often get their IP banned. Is there a way to disguise the IP? For some simple sites, forging a few request headers is enough.

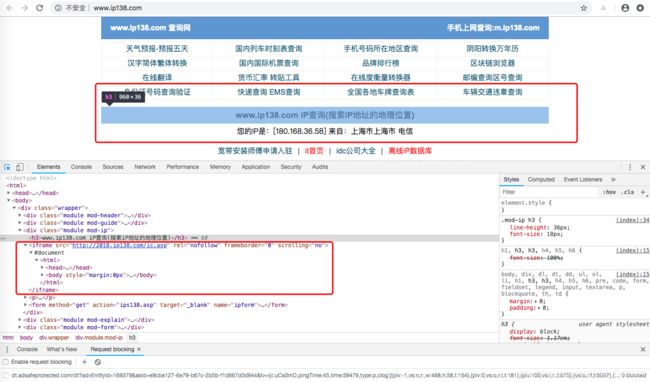

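To find out our own IP, we usually just visit http://www.ip138.com/.

Looking at what the page loads shows that the IP information actually comes from http://2018.ip138.com/ic.asp.

In other words, requesting http://2018.ip138.com/ic.asp directly is enough to see our IP. Today's goal, then, is to forge headers that fool ip138.com.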
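Why forged headers can work at all is easier to see from the server's side. The following is a minimal sketch, assuming a server that trusts client-supplied forwarding headers before falling back to the TCP peer address. The function name `naive_client_ip` and the header order are hypothetical and only illustrate the idea; this is not ip138.com's actual logic.

```python
from typing import Mapping

# Header names checked in order. This list is an illustrative assumption,
# not the actual logic used by ip138.com.
TRUSTED_IP_HEADERS = ("X-Forwarded-For", "X-Client-IP", "X-Remote-IP")


def naive_client_ip(headers: Mapping[str, str], socket_ip: str) -> str:
    """Return the IP a header-trusting server would report."""
    # HTTP header names are case-insensitive, so normalise before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    for name in TRUSTED_IP_HEADERS:
        value = lowered.get(name.lower(), "").strip()
        if value:
            # X-Forwarded-For may hold a comma-separated chain; the first
            # entry is conventionally the original client.
            return value.split(",")[0].strip()
    # No forwarding header present: fall back to the real TCP peer address.
    return socket_ip


if __name__ == "__main__":
    # The forged header wins over the real connection address.
    print(naive_client_ip({"x-forwarded-for": "1.2.3.4"}, "203.0.113.7"))
    # Without any forwarding header the real address is reported.
    print(naive_client_ip({}, "203.0.113.7"))
```

A server that ignores these headers and reads only the socket address cannot be fooled this way, which is why the trick is limited to simple sites.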

Forging headers with a downloader middleware

Util.py

Write a utility class that generates a forged IP and User-Agent on the fly:

#! /usr/bin/env python3
# -*- coding:utf-8 -*-
import random

from tutorial.settings import USER_AGENT_LIST


class Util(object):

    @staticmethod
    def get_header(host='www.baidu.com', ip=None):
        # No IP supplied: build a random dotted quad, each octet in 0..255.
        if ip is None:
            ip = '.'.join(str(random.randint(0, 255)) for _ in range(4))
        return {
            'Host': host,
            # Pick a different User-Agent on every call.
            'User-Agent': random.choice(USER_AGENT_LIST),
            'server-addr': '',
            'remote_user': '',
            # Headers that some servers read to determine the client IP.
            'X-Client-IP': ip,
            'X-Remote-IP': ip,
            'X-Remote-Addr': ip,
            'X-Originating-IP': ip,
            'x-forwarded-for': ip,
            'Origin': 'http://' + host,
            "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
            "Accept-Language": "zh-CN,zh;q=0.9,en-US;q=0.5,en;q=0.3",
            "Accept-Encoding": "gzip, deflate",
            "Referer": "http://" + host + "/",
            'Content-Length': '0',
            "Connection": "keep-alive"
        }
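To try the header builder outside a Scrapy project, here is a minimal standalone sketch of the same idea. `SAMPLE_USER_AGENTS`, `random_ipv4`, and `build_spoof_headers` are hypothetical names introduced for illustration; the sketch keeps only a subset of the headers above and uses the standard `ipaddress` module to check that the generated address is well formed.

```python
import ipaddress
import random

# Stand-in for USER_AGENT_LIST from settings.py so the sketch runs on its own.
SAMPLE_USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
]


def random_ipv4(rng=random):
    """Return a random dotted-quad string; each octet is in 0..255."""
    return ".".join(str(rng.randint(0, 255)) for _ in range(4))


def build_spoof_headers(host, ip=None, rng=random):
    """Build a reduced header dict carrying the same forged IP in each IP header."""
    ip = ip or random_ipv4(rng)
    return {
        "Host": host,
        "User-Agent": rng.choice(SAMPLE_USER_AGENTS),
        "X-Client-IP": ip,
        "X-Forwarded-For": ip,
        "Referer": "http://" + host + "/",
    }


if __name__ == "__main__":
    # A seeded generator makes the run repeatable while experimenting.
    headers = build_spoof_headers("2018.ip138.com", rng=random.Random(0))
    # IPv4Address raises ValueError if the string is not a valid address.
    ipaddress.IPv4Address(headers["X-Forwarded-For"])
    print(headers)
```

Passing the random generator in as a parameter is a design choice made for this sketch only: it lets a seeded `random.Random` produce repeatable headers during debugging.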

middlewares.py

middlewares.py replaces the headers of every outgoing request with freshly generated ones:

from scrapy import signals
from backend.libs.Util import Util
from scrapy.http.headers import Headers


class TutorialDownloaderMiddleware(object):

    def process_request(self, request, spider):
        request.headers = Headers(Util.get_header('2018.ip138.com'))

settings.py

settings.py defines the pool of User-Agent strings and enables the middleware:

USER_AGENT_LIST = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
    "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
    "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
    "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
]

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    'tutorial.middlewares.TutorialDownloaderMiddleware': 1,
}

ip138.py

# -*- coding: utf-8 -*-
import scrapy


class Ip138Spider(scrapy.Spider):
    name = 'ip138'
    allowed_domains = ['www.ip138.com', '2018.ip138.com']
    start_urls = ['http://2018.ip138.com/ic.asp']

    def parse(self, response):
        print("*" * 40)
        print("response text: %s" % response.text)
        print("response headers: %s" % response.headers)
        print("response meta: %s" % response.meta)
        print("request headers: %s" % response.request.headers)
        print("request cookies: %s" % response.request.cookies)
        print("request meta: %s" % response.request.meta)
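The spider above only prints the raw response. To check the result programmatically, the reported address can be pulled out of the response text and compared with the forged one. The following is a minimal sketch using only the standard library; `extract_ipv4` is a hypothetical helper, and the sample strings in the usage part are made up rather than real ip138.com output.

```python
import re

# Matches four dot-separated groups of 1-3 digits; range checks happen below.
IPV4_CANDIDATE = re.compile(r"\b(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})\b")


def extract_ipv4(text):
    """Return the first syntactically valid IPv4 address in text, or None."""
    for match in IPV4_CANDIDATE.finditer(text):
        # Reject candidates such as 999.1.1.1 whose octets exceed 255.
        if all(int(octet) <= 255 for octet in match.groups()):
            return match.group(0)
    return None


if __name__ == "__main__":
    # Made-up sample text standing in for response.text.
    sample = "<center>Your IP is: [12.34.56.78]</center>"
    print(extract_ipv4(sample))
    print(extract_ipv4("no address here"))
```

Inside `parse`, the value from `extract_ipv4(response.text)` could then be compared with the `X-Forwarded-For` value in `response.request.headers`.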

The spoof succeeded.

Source code on GitHub