Python爬虫JS解密详解,学会直接破解80%的网站!!!

文章目录

- 1、网页查看

- 2、有道翻译简单实现源码

- 3、JS解密(详解)

- 4、python实现JS解密后的完整代码

- 4.1、实现效果

- 5、JS解密后完整代码升级版

- 5.1、实现效果

更多博主开源爬虫教程目录索引(宝藏教程,你值得拥有!)

本次JS解密以有道翻译为例,相信各位看过之后绝对会有所收获!

1、网页查看

2、有道翻译简单实现源码

import requests

#请求头

#headers不能只有一个User-Agent,因为有道翻译是有一定的反扒机制的,所以我们直接全部带上

headers = {

"Accept": "application/json, text/javascript, */*; q=0.01",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "zh-CN,zh;q=0.9",

"Connection": "keep-alive",

"Content-Length": "244",

"Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",

"Cookie": "[email protected]; JSESSIONID=aaaUggpd8kfhja1AIJYpx; OUTFOX_SEARCH_USER_ID_NCOO=108436537.92676207; ___rl__test__cookies=1597502296408",

"Host": "fanyi.youdao.com",

"Origin": "http://fanyi.youdao.com",

"Referer": "http://fanyi.youdao.com/",

"user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.125 Safari/537.36",

"X-Requested-With": "XMLHttpRequest",

}

#提交参数

params = {

"i": "I love you",

"from": "UTO",

"to": "AUTO",

"smartresult": "dict",

"client": "fanyideskweb",

"salt": "15975022964104",

"sign": "7b7db70e0d1a786a43a6905c0daec508",

"lts": "1597502296410",

"bv": "9ef72dd6d1b2c04a72be6b706029503a",

"doctype": "json",

"version": "2.1",

"keyfrom": "fanyi.web",

"action": "FY_BY_REALTlME",

}

url = "http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule"

#发起POST请求

response = requests.post(url=url,headers=headers,data=params).json()

print(response)

![]()

到这里表面上已经完成了有道翻译的代码,但其实还有问题,比如下方如果更换了需要翻译的内容就会报错

原因

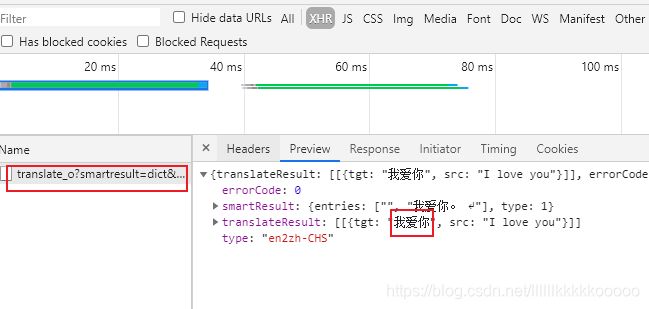

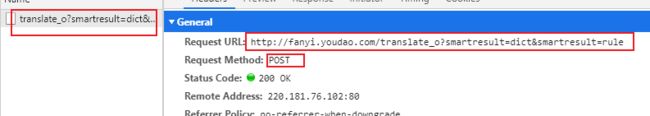

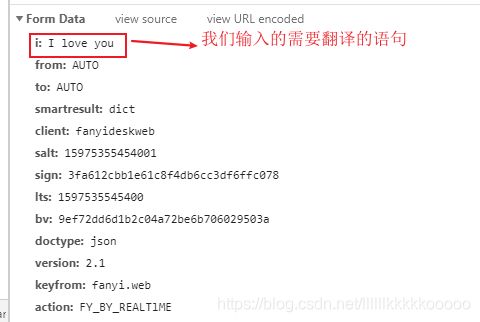

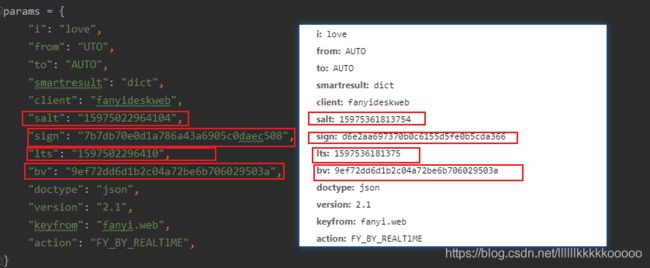

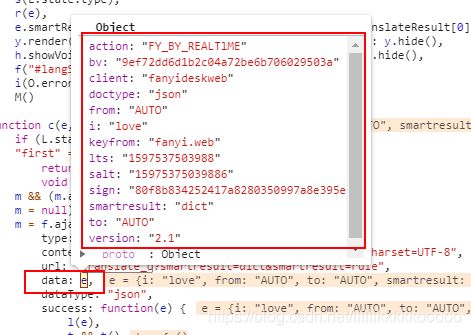

我们在有道翻译重新翻译,会发起新的POST请求,而每次请求所带的参数值会有所不同,如果想要真正实现有道翻译功能,就要找到这四个参数值得生成方式,然后用python实现同样的功能才行

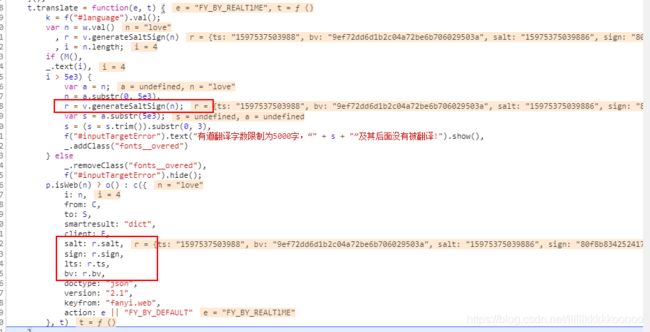

3、JS解密(详解)

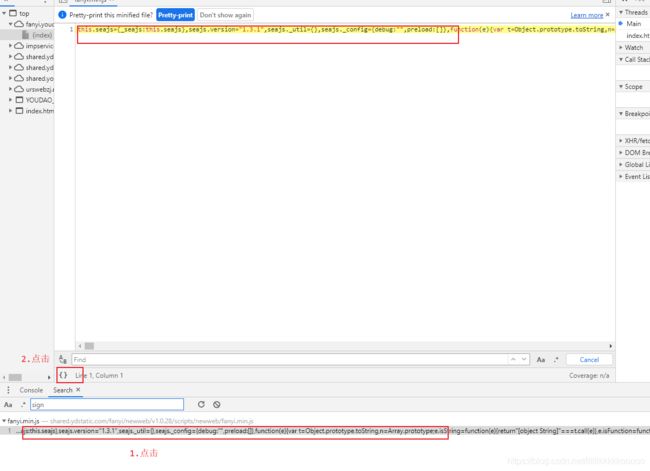

Ctrl+Shift+f 进行搜索,输入sign

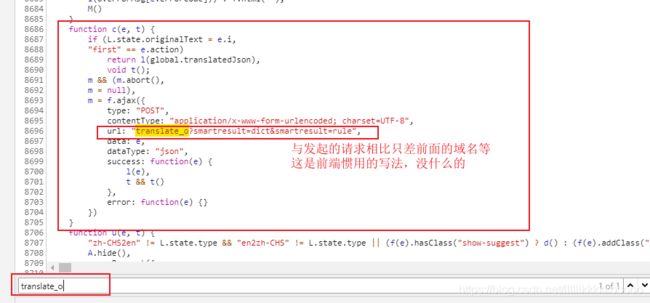

因为加密肯定是发起请求的时候加密所以我们搜索translate_o

![]()

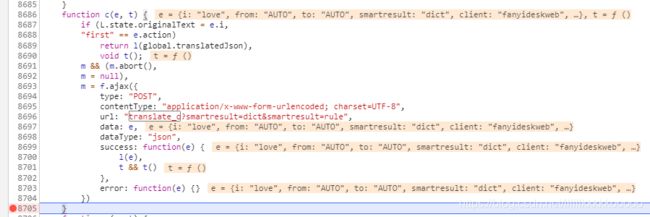

在有道翻译重新输入翻译内容,即可到断点处停下

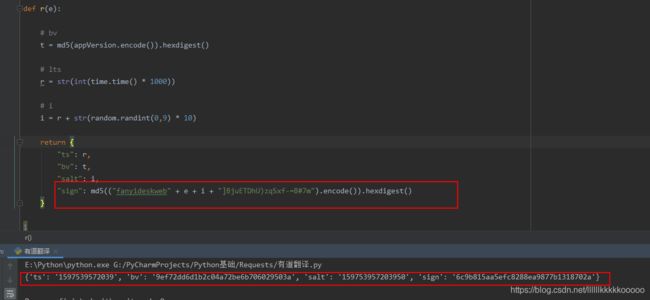

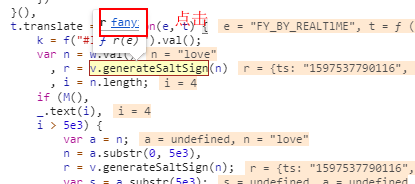

可以发现salt、sign、lts、bv都是通过 r 获取出来的,而 r 又是通过一个函数得到的

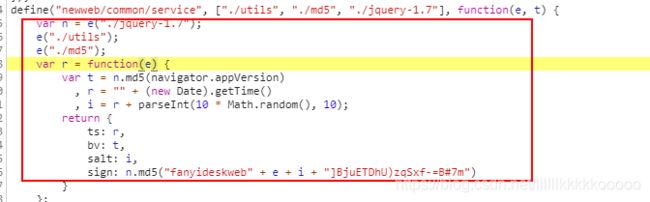

进入该函数

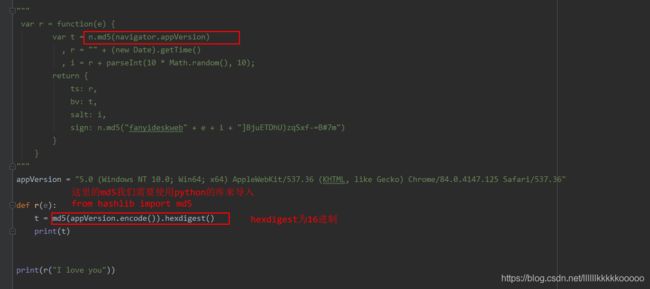

可以发现这里就是加密的地方

复制这段js代码,我们需要使用使用python代码来实现

可以看到是一模一样的,到这里算是有一点点小成功了

![]()

注意这里翻译的内容不同其bv也是不同的哦

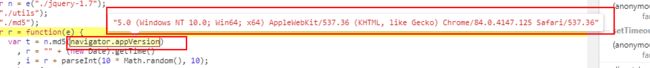

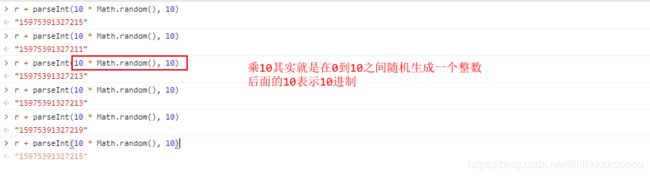

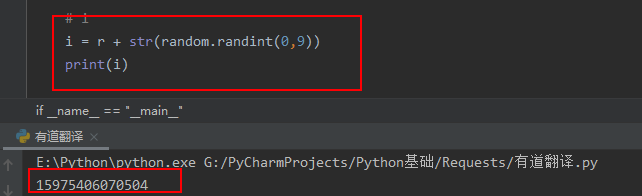

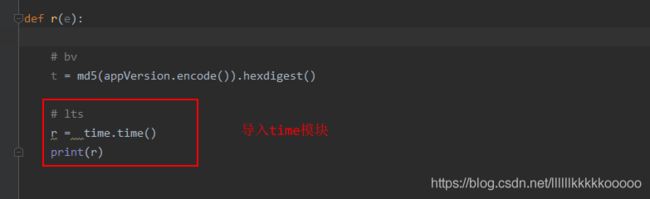

接下来看lts,用python实现

可见python生成的时间戳和JS的有所不同,但细心的小伙伴肯定知道怎么把python的时间戳变成跟JS一样

这样就ok了

乘以1000转化为int舍弃小数位再转化为字符串,因为JS里是字符串

4、python实现JS解密后的完整代码

import requests

from hashlib import md5

import time

import random

#请求地址

url = "http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule"

appVersion = "5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.125 Safari/537.36"

headers = {

"Accept": "application/json, text/javascript, */*; q=0.01",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "zh-CN,zh;q=0.9",

"Connection": "keep-alive",

"Content-Length": "244",

"Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",

"Cookie": "[email protected]; JSESSIONID=aaaUggpd8kfhja1AIJYpx; OUTFOX_SEARCH_USER_ID_NCOO=108436537.92676207; ___rl__test__cookies=1597502296408",

"Host": "fanyi.youdao.com",

"Origin": "http://fanyi.youdao.com",

"Referer": "http://fanyi.youdao.com/",

"user-agent": appVersion,

"X-Requested-With": "XMLHttpRequest",

}

def r(e):

# bv

t = md5(appVersion.encode()).hexdigest()

# lts

r = str(int(time.time() * 1000))

# i

i = r + str(random.randint(0,9))

return {

"ts": r,

"bv": t,

"salt": i,

"sign": md5(("fanyideskweb" + e + i + "]BjuETDhU)zqSxf-=B#7m").encode()).hexdigest()

}

def fanyi(word):

data = r(word)

params = {

"i": word,

"from": "UTO",

"to": "AUTO",

"smartresult": "dict",

"client": "fanyideskweb",

"salt": data["salt"],

"sign": data["sign"],

"lts": data["ts"],

"bv": data["bv"],

"doctype": "json",

"version": "2.1",

"keyfrom": "fanyi.web",

"action": "FY_BY_REALTlME",

}

response = requests.post(url=url,headers=headers,data=params)

#返回json数据

return response.json()

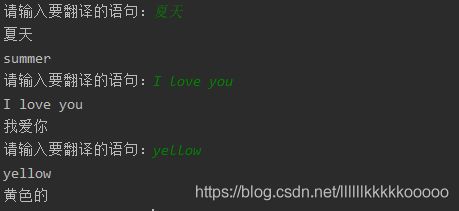

if __name__ == "__main__":

while True:

word = input("请输入要翻译的语句:")

result = fanyi(word)

#对返回的json数据进行提取,提取出我们需要的数据

r_data = result["translateResult"][0]

print(r_data[0]["src"])

print(r_data[0]["tgt"])

这代码我就不做过多解释了,相信大家都能看懂。

4.1、实现效果

5、JS解密后完整代码升级版

如果只是对一些词语、语句翻译那还不如直接在网址上进行翻译,本次升级版可以对文章句子进行分段翻译,更有助于提高英语阅读理解的能力。(特别适合我这样的英语学渣)

import requests

from hashlib import md5

import time

import random

#请求地址

url = "http://fanyi.youdao.com/translate_o?smartresult=dict&smartresult=rule"

appVersion = "5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.125 Safari/537.36"

headers = {

"Accept": "application/json, text/javascript, */*; q=0.01",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "zh-CN,zh;q=0.9",

"Connection": "keep-alive",

"Content-Length": "244",

"Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",

"Cookie": "[email protected]; JSESSIONID=aaaUggpd8kfhja1AIJYpx; OUTFOX_SEARCH_USER_ID_NCOO=108436537.92676207; ___rl__test__cookies=1597502296408",

"Host": "fanyi.youdao.com",

"Origin": "http://fanyi.youdao.com",

"Referer": "http://fanyi.youdao.com/",

"user-agent": appVersion,

"X-Requested-With": "XMLHttpRequest",

}

def r(e):

# bv

t = md5(appVersion.encode()).hexdigest()

# lts

r = str(int(time.time() * 1000))

# i

i = r + str(random.randint(0,9))

return {

"ts": r,

"bv": t,

"salt": i,

"sign": md5(("fanyideskweb" + e + i + "]BjuETDhU)zqSxf-=B#7m").encode()).hexdigest()

}

def fanyi(word):

data = r(word)

params = {

"i": word,

"from": "UTO",

"to": "AUTO",

"smartresult": "dict",

"client": "fanyideskweb",

"salt": data["salt"],

"sign": data["sign"],

"lts": data["ts"],

"bv": data["bv"],

"doctype": "json",

"version": "2.1",

"keyfrom": "fanyi.web",

"action": "FY_BY_REALTlME",

}

response = requests.post(url=url,headers=headers,data=params)

return response.json()

if __name__ == "__main__":

#打开需要翻译的文章

with open("文章.txt",mode="r",encoding="utf-8") as f:

#获取文章全部内容

text = f.read()

result = fanyi(text)

r_data = result["translateResult"]

#翻译结果保存

with open("test.txt",mode="w",encoding="utf-8") as f:

for data in r_data:

f.write(data[0]["tgt"])

f.write('\n')

f.write(data[0]["src"])

f.write('\n')

print(data[0]["tgt"])

print(data[0]["src"])

5.1、实现效果

博主会持续更新,有兴趣的小伙伴可以点赞、关注和收藏下哦,你们的支持就是我创作最大的动力!

更多博主开源爬虫教程目录索引(宝藏教程,你值得拥有!)

本文爬虫源码已由 GitHub https://github.com/2335119327/PythonSpider 已经收录(内涵更多本博文没有的爬虫,有兴趣的小伙伴可以看看),之后会持续更新,欢迎Star。

![]()