【竞赛项目详解】街景字符识别(附源码)

文章目录

- 1 项目简介

- 2 项目分析

- 2.1 数据分析

- 2.2 难点分析

- 2.3 可行性方案分析

- 3 方案设计

- 3.1 合并train与val

- 3.2 数据增强

- 3.3 YOLOv5上线

- 3.4 模型融合

- 4 结果分析

- 5 源码链接

1 项目简介

业务:预测真实场景下的字符(门牌号)

数据集:来源于Google公开数据集SVNH,train-30k,val-10k,test-40k

详情请看项目介绍

2 项目分析

2.1 数据分析

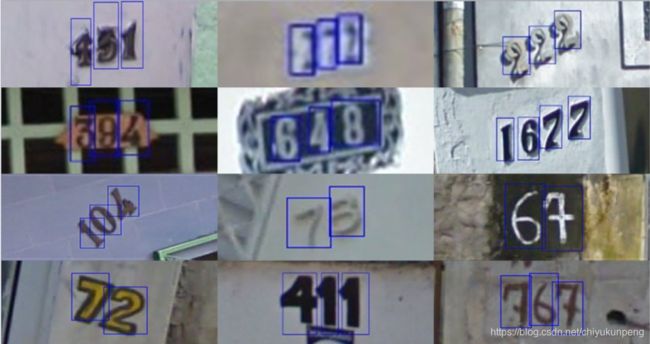

数据集中图片分辨率不一,平均为60x120,尺寸较小,且处于多种自然场景下,同时字符长度从0-4不等,如下图所示。

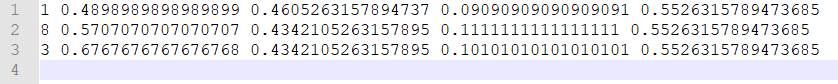

数据标注提供了位置框与标签信息,如下图所示,其中top-左上角x,left-左上角y,height-高度,width-宽度,label-标签。

2.2 难点分析

- 数据样本处于自然场景下,场景较复杂;

- 数据样本分辨率较低,清晰度较差;

- 部分数据样本存在遮挡问题;

2.3 可行性方案分析

- 采用分类模型,如resnet,efficientnet,xception,densenet等;前提是将业务转换为定长字符识别,用X填充为6位数,即11分类问题(0—9,X)。

- 采用专用的字符识别模型,如CRNN等。

- 采用先检测后识别,如SSD,YOLO系列,RCNN系列等。

3 方案设计

3.1 合并train与val

“数据为王”,在模型开源的今天,得数据者得天下,所以第一步必须把官方提供的数据最大化利用,即把30k train与10k val合并,再按一定比例(如9:1)分割为train与val,注意防止重名。代码如下所示。

import os

import shutil

import argparse

def main():

parser = argparse.ArgumentParser(description="combine train dataset with val dataset")

parser.add_argument('-t', '--train_image_path', default='/media/chiyukunpeng/CHENPENG01/dataset/SVNH/mchar_train/',

help="path to train dataset")

parser.add_argument('-v', '--val_image_path', default='/media/chiyukunpeng/CHENPENG01/dataset/SVNH/mchar_val/',

help="path to val dataset")

parser.add_argument('-d', '--dst_image_path', default='./coco/images/', help="path to combined dataset")

args = parser.parse_args()

train_image_list = os.listdir(args.train_image_path)

train_image_list.sort(key=lambda x: int(x[:-4]))

val_image_list = os.listdir(args.val_image_path)

val_image_list.sort(key=lambda x: int(x[:-4]))

for img in train_image_list:

shutil.copy(args.train_image_path + img, args.dst_image_path + img)

print("train image {0}/{1} copied".format(img[:-4], len(train_image_list)))

print("train dataset copied")

for img in val_image_list:

shutil.copy(args.val_image_path + img, args.dst_image_path + 'val_' + img) # 防止重名

print("val image {0}/{1} copied".format(img[:-4], len(val_image_list)))

print("val dataset copied")

if __name__ == '__main__':

main()

3.2 数据增强

采用torchvision库中的数据增强操作,但注意不要进行翻转增强操作,如6和9。

3.3 YOLOv5上线

按YOLOv5的README配置好环境,再对数据进行类型转换。详情请看github-yolov5-tutorial

- 改写数据yaml文件

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

train: ../coco/images/train/ # 40k images

val: ../coco/images/val/ # 10k images

# number of classes

nc: 10

# class names

names: ['0','1','2','3','4','5','6','7','8','9']

import cv2

import json

import argparse

def main():

parser = argparse.ArgumentParser(description="make label for combined datatset")

parser.add_argument('-ti', '--train_image_path', default='/media/chiyukunpeng/CHENPENG01/dataset/SVNH/mchar_train/',

help="path to train dataset")

parser.add_argument('-vi', '--val_image_path', default='/media/chiyukunpeng/CHENPENG01/dataset/SVNH/mchar_val/',

help="path to val dataset")

parser.add_argument('-ta', '--train_annotation_path',

default='/media/chiyukunpeng/CHENPENG01/dataset/SVNH/mchar_train.json',

help="path to train annotation")

parser.add_argument('-va', '--val_annotation_path',

default='/media/chiyukunpeng/CHENPENG01/dataset/SVNH/mchar_val.json',

help="path to val annotation")

parser.add_argument('-l', '--label_path', default='./coco/labels/', help='path to combined labels')

args = parser.parse_args()

train_data = json.load(open(args.train_annotation_path))

val_data = json.load(open(args.val_annotation_path))

for key in train_data:

f = open(args.label_path + key.replace('.png', '.txt'), 'w')

img = cv2.imread(args.train_image_path + key)

shape = img.shape

label = train_data[key]['label']

left = train_data[key]['left']

top = train_data[key]['top']

height = train_data[key]['height']

width = train_data[key]['width']

for i in range(len(label)):

# 归一化

x_center = 1.0 * (left[i] + width[i] / 2) / shape[1]

y_center = 1.0 * (top[i] + height[i] / 2) / shape[0]

w = 1.0 * width[i] / shape[1]

h = 1.0 * height[i] / shape[0]

# label, x_center, y_center, w, h

f.write(str(label[i]) + ' ' + str(x_center) + ' ' + str(y_center) + ' ' + str(w) + ' ' + str(h) + '\n')

f.close()

print("train label {0}/{1} made".format(key[:-4], len(train_data)))

for key in val_data:

f = open(args.label_path + 'val_' + key.replace('.png', '.txt'), 'w')

img = cv2.imread(args.val_image_path + key)

shape = img.shape

label = val_data[key]['label']

left = val_data[key]['left']

top = val_data[key]['top']

height = val_data[key]['height']

width = val_data[key]['width']

for i in range(len(label)):

# 归一化

x_center = 1.0 * (left[i] + width[i] / 2) / shape[1]

y_center = 1.0 * (top[i] + height[i] / 2) / shape[0]

w = 1.0 * width[i] / shape[1]

h = 1.0 * height[i] / shape[0]

# label, x_center, y_center, w, h

f.write(str(label[i]) + ' ' + str(x_center) + ' ' + str(y_center) + ' ' + str(w) + ' ' + str(h) + '\n')

f.close()

print("val label {0}/{1} made".format(key[:-4], len(val_data)))

if __name__ == '__main__':

main()

- 训练

YOLOv5有四种网络结构s,m,l,x,采用预训练模型训练,观察可视化结果,不断调整超参数。

$ python train.py --data coco.yaml --cfg yolov5s.yaml --weights '' --batch-size 64

yolov5m 40

yolov5l 24

yolov5x 16

3.4 模型融合

对s,l,m,v输出结果采用全局nms,获得最优解。

import json

import numpy as np

import pandas as pd

result_list = ['./json/yolov5l_0.922.json', './json/yolov5s_0.902.json']

result = []

def py_cpu_nms(dets, thresh):

x1 = dets[:, 0]

y1 = dets[:, 1]

x2 = dets[:, 2]

y2 = dets[:, 3]

areas = (y2 - y1 + 1) * (x2 - x1 + 1)

scores = dets[:, 4]

keep = []

index = scores.argsort()[::-1]

while index.size > 0:

i = index[0] # every time the first is the biggst, and add it directly

keep.append(i)

x11 = np.maximum(x1[i], x1[index[1:]]) # calculate the points of overlap

y11 = np.maximum(y1[i], y1[index[1:]])

x22 = np.minimum(x2[i], x2[index[1:]])

y22 = np.minimum(y2[i], y2[index[1:]])

w = np.maximum(0, x22 - x11 + 1) # the width of overlap

h = np.maximum(0, y22 - y11 + 1) # the height of overlap

overlaps = w * h

ious = overlaps / (areas[i] + areas[index[1:]] - overlaps)

idx = np.where(ious <= thresh)[0]

index = index[idx + 1] # because index start from 1

out = dict()

for _ in keep:

out[dets[_][0]] = int(dets[_][5])

return out

for i in range(len(result_list)):

result.append(json.load(open(result_list[i])))

file_name = []

file_code = []

for key in result[0]:

print(key)

file_name.append(key)

t = []

for i in range(len(result_list)):

for _ in result[i][key]:

t.append(_)

t = np.array(t)

res = ''

if len(t) == 0:

res = '1'

else:

x_value = py_cpu_nms(t, 0.3)

for x in sorted(x_value.keys()):

res += str(x_value[x])

file_code.append(res)

sub = pd.DataFrame({'file_name': file_name, 'file_code': file_code})

sub.to_csv('./submit.csv', index=False)

4 结果分析

最终提交的test_acc为0.932,个人觉得训练过程可以再优化一下,对数据增强可以再多一些尝试。

5 源码链接

GitHub链接