Deep Learning: Testing a CNN on the CIFAR-10 Dataset with TensorFlow (Python Implementation)

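Problem description:

1. Classify the CIFAR-10 image dataset with a convolutional neural network and report the accuracy.

2. Choose one of the open-source deep learning frameworks such as Caffe, TensorFlow, or PyTorch, learn how to install it, and call its APIs.

3. Use a network model that these frameworks have already trained on CIFAR-10 directly, and only run testing.

Implementation steps: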

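Step 1: Install TensorFlow. Open a command prompt window and use pip, the package tool that ships with Python, to run `pip install tensorflow`, as shown in Figure 1. Note that the code in this article uses the TensorFlow 1.x API (`tf.placeholder`, queue runners, `tf.app.flags`), which is not available by default in TensorFlow 2.x. You therefore need a 1.x release such as 1.15, and 1.x wheels are only published for older Python versions (up to 3.7).

Figure 1 Installing TensorFlow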

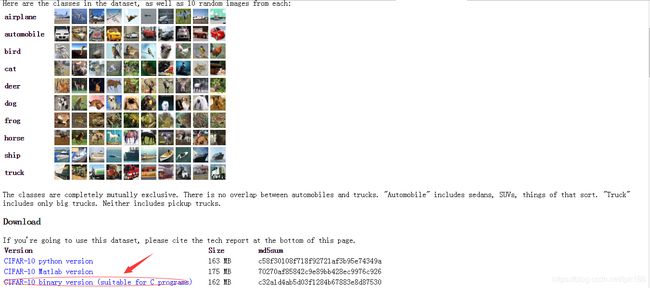

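Step 2: Download the CIFAR-10 image dataset.

Download it from the official site (http://www.cs.toronto.edu/~kriz/cifar.html), as shown in Figure 2. The code below reads the binary version (cifar-10-binary.tar.gz). If the data is not present, `cifar10.maybe_download_and_extract()` downloads and extracts it automatically.

Figure 2 Downloading CIFAR-10

Step 3: Write the code, then train and test.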

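First, an introduction to the CIFAR-10 dataset. It is a classic dataset of 60,000 color images of 32×32 pixels: 50,000 for training and 10,000 for testing. As the name suggests, CIFAR-10 is labeled with 10 classes of 6,000 images each: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. The classes do not overlap, and no single image contains objects from two classes.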
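The code below reads the binary version of the dataset. In that format, each record is 1 label byte followed by 3,072 pixel bytes stored channel by channel; this is the layout parsed by `read_cifar10()` in cifar10_input.py and described on the CIFAR-10 page. The NumPy sketch below decodes such records from an in-memory buffer, as a rough illustration of that layout. `parse_records` is a hypothetical helper that is not part of the TensorFlow tutorial code, and the record is synthetic rather than read from a real file.

```python
import numpy as np

LABEL_BYTES = 1                            # CIFAR-10 stores one label byte per record
HEIGHT, WIDTH, DEPTH = 32, 32, 3
IMAGE_BYTES = HEIGHT * WIDTH * DEPTH       # 3072 pixel bytes
RECORD_BYTES = LABEL_BYTES + IMAGE_BYTES   # 3073 bytes per record


def parse_records(raw):
    """Split a CIFAR-10 .bin buffer into int32 labels and uint8 images in [H, W, C] order."""
    data = np.frombuffer(raw, dtype=np.uint8)
    if data.size % RECORD_BYTES != 0:
        raise ValueError("buffer length is not a multiple of %d bytes" % RECORD_BYTES)
    records = data.reshape(-1, RECORD_BYTES)
    labels = records[:, 0].astype(np.int32)
    # Pixels are stored channel-major: 1024 red, then 1024 green, then 1024 blue bytes
    chw = records[:, LABEL_BYTES:].reshape(-1, DEPTH, HEIGHT, WIDTH)
    # Same transpose as read_cifar10(): [depth, height, width] -> [height, width, depth]
    return labels, chw.transpose(0, 2, 3, 1)


# Synthetic record: label 7 (horse), red channel all 255, green and blue all 0
fake = bytes([7]) + bytes([255] * 1024) + bytes(2048)
labels, images = parse_records(fake)
print(labels, images.shape)
```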

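Next, build the convolutional neural network (CNN) model, with a few additional features:

(1) L2 regularization of the weights;

(2) data augmentation: images are randomly flipped, randomly cropped, and so on, which creates more training samples;

(3) an LRN (local response normalization) layer after each convolution-pooling stage, which improves the model's generalization.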
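For item (2), `distorted_inputs()` in cifar10_input.py does the following to each image:

- randomly crops a 24×24 patch,
- randomly flips it horizontally,
- jitters brightness and contrast,
- standardizes it.

The NumPy sketch below mirrors only the crop, flip, and standardization steps for a single image. The function `augment` is an illustrative assumption, not TensorFlow code. The standardization follows the formula documented for `tf.image.per_image_standardization`: `(x - mean) / max(stddev, 1/sqrt(N))`, where N is the number of pixel values.

```python
import numpy as np


def augment(image, crop=24, rng=None):
    """Random crop, random left-right flip, then per-image standardization of an [H, W, C] image."""
    if rng is None:
        rng = np.random.default_rng()
    h, w, _ = image.shape
    top = rng.integers(0, h - crop + 1)       # random crop position
    left = rng.integers(0, w - crop + 1)
    out = image[top:top + crop, left:left + crop, :].astype(np.float32)
    if rng.random() < 0.5:                    # flip horizontally with probability 0.5
        out = out[:, ::-1, :]
    # Subtract the mean and divide by the (lower-bounded) standard deviation
    adjusted_std = max(float(out.std()), 1.0 / np.sqrt(out.size))
    return (out - out.mean()) / adjusted_std


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
x = augment(img, rng=rng)
print(x.shape, float(x.mean()), float(x.std()))
```

The lower bound on the standard deviation matters for uniform images: a constant patch would otherwise cause a division by zero. In this sketch, such a patch simply maps to all zeros.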

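The network has 2 convolutional layers and 3 fully connected layers:

First convolutional layer. The kernel parameters are created and initialized with the weight initialization function `variable_with_weight_loss`. The kernels are 5×5 over 3 color channels, with 64 kernels (a kernel depth of 64) and a weight initialization standard deviation of 0.05. The weights of the first convolutional layer are not L2-regularized, i.e. wl is set to 0.

After the ReLU activation, max pooling uses a 3×3 window with a 2×2 stride. The pooling window is larger than the stride, so neighboring windows overlap, which adds richness to the features. The result is then passed through LRN with `tf.nn.lrn()`. The LRN (local response normalization) layer mimics the "lateral inhibition" mechanism of biological nervous systems: it creates competition among the activities of local neurons. Relatively large responses become relatively larger, while neurons with smaller responses are suppressed, which improves the model's generalization. LRN suits activation functions without an upper bound, such as ReLU. It is not suited to functions such as Sigmoid, which have a fixed range and already suppress overly large values.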
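The LRN step can be written out explicitly. According to the TensorFlow documentation, `tf.nn.lrn` computes two things for every channel d:

- `sqr_sum = sum(x[..., d - depth_radius : d + depth_radius + 1] ** 2)`
- the output `x / (bias + alpha * sqr_sum) ** beta`

The NumPy sketch below implements that documented formula for illustration only; it is not the TF kernel. Its defaults are the parameters the code passes (depth_radius=4, bias=1.0, alpha=0.001/9, beta=0.75). The toy input and simplified parameters in the usage line are chosen only so the arithmetic can be checked by hand.

```python
import numpy as np


def lrn(x, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75):
    """Local response normalization across the last (channel) axis."""
    x = np.asarray(x, dtype=np.float64)
    sq = x ** 2
    channels = x.shape[-1]
    sqr_sum = np.empty_like(sq)
    for d in range(channels):
        # The window of neighboring channels is clipped at the edges
        lo, hi = max(0, d - depth_radius), min(channels, d + depth_radius + 1)
        sqr_sum[..., d] = sq[..., lo:hi].sum(axis=-1)
    return x / (bias + alpha * sqr_sum) ** beta


# One pixel with 3 channels; radius 1 and alpha = beta = 1 keep the numbers simple:
# the channel sums of squares are 1+4=5, 1+4+9=14, 4+9=13
out = lrn([[[[1.0, 2.0, 3.0]]]], depth_radius=1, bias=1.0, alpha=1.0, beta=1.0)
print(out.ravel())  # 1/6, 2/15, 3/14
```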

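Second convolutional layer. It is built like the first, with three differences:

- the weight shape has 64 input channels (the depth of the previous layer's output);
- the bias is initialized to 0.1;
- the max-pooling and LRN layers are swapped, so LRN is applied first and max pooling second.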
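Both convolutional layers use 3×3 max pooling with stride 2 and `'SAME'` padding, so adjacent pooling windows share one row or column. The NumPy sketch below shows that operation on a single [height, width, channels] feature map. `max_pool_same` is an illustrative helper, not a TensorFlow function. The padding rule follows TensorFlow's documented `'SAME'` convention: the output size is ceil(in/stride), and any odd extra padding goes at the bottom/right.

```python
import numpy as np


def max_pool_same(x, k=3, s=2):
    """k x k max pooling with stride s and TensorFlow-style 'SAME' padding on an [H, W, C] array."""
    h, w, c = x.shape
    out_h, out_w = -(-h // s), -(-w // s)           # ceil(h / s), ceil(w / s)
    pad_h = max((out_h - 1) * s + k - h, 0)
    pad_w = max((out_w - 1) * s + k - w, 0)
    # Pad with -inf so padded cells never win; the extra cell goes at the bottom/right
    padded = np.pad(x.astype(np.float64),
                    ((pad_h // 2, pad_h - pad_h // 2),
                     (pad_w // 2, pad_w - pad_w // 2),
                     (0, 0)),
                    constant_values=-np.inf)
    out = np.empty((out_h, out_w, c))
    for i in range(out_h):
        for j in range(out_w):
            window = padded[i * s:i * s + k, j * s:j * s + k, :]
            out[i, j] = window.max(axis=(0, 1))
    return out


fmap = np.arange(24 * 24, dtype=np.float64).reshape(24, 24, 1)
pooled = max_pool_same(fmap)
print(pooled.shape)  # (12, 12, 1)
```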

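First fully connected layer. The output of the convolutional layers is reshaped so that each sample becomes a 1-D vector. The length of that vector is used as the number of input units, and the number of output units is 384. The weights are initialized with a standard deviation of 0.04 and given an L2 regularization coefficient (wl) of 0.004, to keep this fully connected layer from overfitting.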
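The input length of the first fully connected layer follows from the layer shapes:

- the images are cropped to 24×24;
- stride-1 `'SAME'` convolutions keep the spatial size;
- each stride-2 `'SAME'` pooling halves it, rounding up;
- the LRN layers do not change the shape.

A small arithmetic sketch:

```python
import math


def same_out(size, stride):
    """Spatial output size of a 'SAME'-padded convolution or pooling op."""
    return math.ceil(size / stride)


size = 24                   # cifar10_input crops images to 24 x 24
size = same_out(size, 1)    # conv1 (stride 1): 24
size = same_out(size, 2)    # pool1 (stride 2): 12
size = same_out(size, 1)    # conv2 (stride 1): 12
size = same_out(size, 2)    # pool2 (stride 2): 6
dim = size * size * 64      # conv2 has 64 output channels
print(dim)  # 2304
```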

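Second fully connected layer. It is similar to the first, except that the number of hidden units is halved to 192.

Third fully connected layer. This is the output layer, with 10 units (one per class). Unlike the first two layers, its weights use a standard deviation of 1/192 with no L2 regularization (wl=0). It also has no ReLU: it outputs raw logits, because the softmax is applied later inside the loss function.

This completes the inference part of the network. The network structure is shown in Table 1: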

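| Type | Layer name | Description |
| --- | --- | --- |
| Convolutional layers | conv1 | Convolution and rectified linear (ReLU) activation |
|  | pool1 | Max pooling |
|  | norm1 | Local response normalization |
|  | conv2 | Convolution and rectified linear (ReLU) activation |
|  | norm2 | Local response normalization |
|  | pool2 | Max pooling |
| Fully connected layers | local3 | Fully connected layer with ReLU activation |
|  | local4 | Fully connected layer with ReLU activation |
|  | logits | Linear transformation that outputs the logits |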

Table 1 Network architecture
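To get a feel for the model size, you can count the trainable parameters of each layer in Table 1 from the weight shapes used in the code below. This count takes dim = 6×6×64 = 2304 as the input length of local3; the pooling and LRN layers have no parameters.

```python
import math

# (weight shape, number of biases) for each layer, as used in the network code
layers = {
    "conv1": ([5, 5, 3, 64], 64),
    "conv2": ([5, 5, 64, 64], 64),
    "local3": ([2304, 384], 384),
    "local4": ([384, 192], 192),
    "logits": ([192, 10], 10),
}

counts = {name: math.prod(shape) + n_bias for name, (shape, n_bias) in layers.items()}
total = sum(counts.values())
for name, n in counts.items():
    print("%-7s %9d" % (name, n))
print("%-7s %9d" % ("total", total))
```

By this count the model has 1,068,298 parameters. The first fully connected layer, local3, accounts for 885,120 of them (about 83%).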

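Next, build the CNN's loss function: the mean softmax cross-entropy over the batch plus the L2 weight losses of the regularized layers.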
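In the code, the `loss` function works as follows:

1. It adds the batch-mean softmax cross-entropy to the 'losses' collection.
2. That collection already holds one `wl * tf.nn.l2_loss(W)` term per weight created by `variable_with_weight_loss`.
3. It sums everything with `tf.add_n`.

The NumPy sketch below reproduces that total for illustration. `tf.nn.l2_loss(W)` is defined as `sum(W ** 2) / 2`. The helper names and the toy weights are hypothetical.

```python
import numpy as np


def sparse_softmax_cross_entropy(logits, labels):
    """Per-example cross entropy from unscaled logits and integer class labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)   # subtract the max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]


def l2_loss(w):
    """Same definition as tf.nn.l2_loss: half the sum of squares."""
    return np.sum(w ** 2) / 2.0


def total_loss(logits, labels, weights_with_wl):
    """Mean cross entropy plus wl * l2_loss(W) for every (W, wl) pair."""
    ce_mean = sparse_softmax_cross_entropy(logits, labels).mean()
    return ce_mean + sum(wl * l2_loss(w) for w, wl in weights_with_wl)


logits = np.zeros((2, 10))            # uniform predictions over 10 classes
labels = np.array([3, 7])
weights = [(np.ones((2, 2)), 0.004), (np.ones((3,)), 0.0)]
print(total_loss(logits, labels, weights))   # ln(10) + 0.004 * 2, about 2.3106
```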

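Then define the optimizer (Adam with a learning rate of 1e-3) and the operation that computes top-k accuracy (`tf.nn.in_top_k` with k=1).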
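`tf.nn.in_top_k(logits, label_holder, 1)` returns, for each example, whether the true class is among the k highest-scoring classes. With k=1, that simply means whether the prediction is correct. Per the TensorFlow documentation, classes tied at the top-k boundary all count as inside. The NumPy sketch below follows the same semantics, using a hypothetical function name and ignoring TF's special handling of non-finite values.

```python
import numpy as np


def in_top_k(predictions, targets, k):
    """Boolean array: is each target class among the top-k predictions of its row?"""
    predictions = np.asarray(predictions)
    targets = np.asarray(targets)
    target_scores = predictions[np.arange(len(targets)), targets]
    # The target is in the top k if fewer than k classes score strictly higher
    n_higher = (predictions > target_scores[:, None]).sum(axis=1)
    return n_higher < k


preds = np.array([[0.1, 0.7, 0.2],
                  [0.5, 0.3, 0.2]])
print(in_top_k(preds, [1, 1], k=1))  # [ True False]
```

Summing this boolean array over all test batches gives the `true_count` used for the precision.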

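Then create a session and train iteratively. Each step does three things:

1. It runs `images_train` and `labels_train` through the session's `run` method to fetch a batch of training data.
2. It feeds that batch into the training op `train_op` and the loss computation.
3. It records how long the step took.

Finally, evaluate the model's accuracy on the test set, here as top-1 accuracy:

- After 2,000 training iterations (about 30 minutes), the test accuracy is 68.4%, as shown in Figure 3.
- After 20,000 iterations (about 5 hours), it is 79.1%, as shown in Figure 4.

Increasing the number of iterations further can yield higher accuracy.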

Figure 3 Run result 1

Figure 4 Run result 2
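The evaluation loop runs ceil(10000 / 128) full batches and divides by `num_iter * batch_size`, so it scores 10,112 predictions. Because the test input queue keeps cycling through `test_batch.bin`, about 112 test images are counted a second time. The arithmetic below works out that count and an upper bound on how far the reported precision can differ from the precision over exactly the 10,000 test images. The bound assumes the worst case, where the repeated images are all right or all wrong.

```python
import math

num_examples = 10000      # images in test_batch.bin
batch_size = 128
num_iter = math.ceil(num_examples / batch_size)
total_sample_count = num_iter * batch_size
extra = total_sample_count - num_examples
# reported - true = extra * (p_extra - p_true) / total_sample_count, and |p_extra - p_true| <= 1
max_shift = extra / total_sample_count
print(num_iter, total_sample_count, extra)  # 79 10112 112
print(round(max_shift, 4))                  # 0.0111
```

The reported figure is therefore within about 1.1 percentage points of the exact test-set accuracy.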

Python code:

```python
import time
import math

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

import cifar10
import cifar10_input

# The cifar10 and cifar10_input modules are from TensorFlow's official GitHub repository:
# https://github.com/tensorflow/models/blob/master/tutorials/image/cifar10
# cifar10: builds the CIFAR-10 model; cifar10_input: reads the local CIFAR-10 binary files

max_steps = 2000   # maximum number of training iterations
batch_size = 128   # batch size
# Directory with the extracted binary files; cifar10.maybe_download_and_extract()
# extracts them under FLAGS.data_dir, which defaults to /tmp/cifar10_data
data_dir = '/tmp/cifar10_data/cifar-10-batches-bin'

# Download and extract the CIFAR-10 dataset if it is not already present
cifar10.maybe_download_and_extract()


# Weight initializer; wl controls the strength of the L2 regularization
def variable_with_weight_loss(shape, stddev, wl):
    var = tf.Variable(tf.truncated_normal(shape, stddev=stddev))
    if wl is not None:
        # L2 regularization could also be done with tf.contrib.layers.l2_regularizer(scale)(w)
        weight_loss = tf.multiply(tf.nn.l2_loss(var), wl, name='weight_loss')
        tf.add_to_collection('losses', weight_loss)
    return var


# cifar10_input.distorted_inputs() and cifar10_input.inputs() return TensorFlow
# operations; they only produce data when run in a session.
# distorted_inputs() applies data augmentation.
images_train, labels_train = cifar10_input.distorted_inputs(data_dir=data_dir,
                                                            batch_size=batch_size)
# inputs() crops the central 24x24 patch of each image and standardizes it
images_test, labels_test = cifar10_input.inputs(eval_data=True,
                                                data_dir=data_dir,
                                                batch_size=batch_size)

# Placeholders
# Note: the first dimension is batch_size rather than None, so that the length of
# the flattened vector before the first fully connected layer is known statically
image_holder = tf.placeholder(tf.float32, [batch_size, 24, 24, 3])
label_holder = tf.placeholder(tf.int32, [batch_size])

# Network definition
# Convolutional layer 1: weights are not L2-regularized (wl=0.0)
weight1 = variable_with_weight_loss([5, 5, 3, 64], stddev=5e-2, wl=0.0)  # stddev 0.05
kernel1 = tf.nn.conv2d(image_holder, weight1, strides=[1, 1, 1, 1], padding='SAME')
bias1 = tf.Variable(tf.constant(0.0, shape=[64]))
conv1 = tf.nn.relu(tf.nn.bias_add(kernel1, bias1))  # convolution + ReLU
pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1],
                       strides=[1, 2, 2, 1], padding='SAME')  # max pooling
norm1 = tf.nn.lrn(pool1, 4, bias=1.0, alpha=0.001 / 9.0, beta=0.75)  # local response normalization

# Convolutional layer 2
weight2 = variable_with_weight_loss([5, 5, 64, 64], stddev=5e-2, wl=0.0)
kernel2 = tf.nn.conv2d(norm1, weight2, strides=[1, 1, 1, 1], padding='SAME')
bias2 = tf.Variable(tf.constant(0.1, shape=[64]))
conv2 = tf.nn.relu(tf.nn.bias_add(kernel2, bias2))  # convolution + ReLU
norm2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9.0, beta=0.75)  # LRN before pooling here
pool2 = tf.nn.max_pool(norm2, ksize=[1, 3, 3, 1],
                       strides=[1, 2, 2, 1], padding='SAME')  # max pooling

# Fully connected layer 3
reshape = tf.reshape(pool2, [batch_size, -1])  # flatten each sample into a 1-D vector
dim = reshape.get_shape()[1].value             # length of each flattened sample
weight3 = variable_with_weight_loss([dim, 384], stddev=0.04, wl=0.004)
bias3 = tf.Variable(tf.constant(0.1, shape=[384]))
local3 = tf.nn.relu(tf.matmul(reshape, weight3) + bias3)  # fully connected layer with ReLU

# Fully connected layer 4
weight4 = variable_with_weight_loss([384, 192], stddev=0.04, wl=0.004)
bias4 = tf.Variable(tf.constant(0.1, shape=[192]))
local4 = tf.nn.relu(tf.matmul(local3, weight4) + bias4)  # fully connected layer with ReLU

# Fully connected layer 5 (linear output layer, no ReLU)
weight5 = variable_with_weight_loss([192, 10], stddev=1 / 192.0, wl=0.0)
bias5 = tf.Variable(tf.constant(0.0, shape=[10]))
logits = tf.matmul(local4, weight5) + bias5  # output of model inference


# Loss: mean cross entropy plus every L2 weight loss in the 'losses' collection
def loss(logits, labels):
    labels = tf.cast(labels, tf.int64)
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=logits, labels=labels, name='cross_entropy_per_example')
    cross_entropy_mean = tf.reduce_mean(cross_entropy, name='cross_entropy')
    tf.add_to_collection('losses', cross_entropy_mean)
    return tf.add_n(tf.get_collection('losses'), name='total_loss')


total_loss = loss(logits, label_holder)  # a new name, so the loss() function is not shadowed
train_op = tf.train.AdamOptimizer(1e-3).minimize(total_loss)  # optimizer
top_k_op = tf.nn.in_top_k(logits, label_holder, 1)  # per-example top-1 correctness

# Create a session and start training
sess = tf.InteractiveSession()
tf.global_variables_initializer().run()
# Start the queue-runner threads that read and augment the input images
tf.train.start_queue_runners()

# Training loop
for step in range(max_steps):
    start_time = time.time()
    image_batch, label_batch = sess.run([images_train, labels_train])  # fetch a training batch
    _, loss_value = sess.run([train_op, total_loss],
                             feed_dict={image_holder: image_batch,
                                        label_holder: label_batch})
    duration = time.time() - start_time  # time taken by this step
    if step % 10 == 0:
        examples_per_sec = batch_size / duration  # examples processed per second
        sec_per_batch = float(duration)           # seconds per batch
        format_str = 'step %d, loss=%.2f (%.1f examples/sec; %.3f sec/batch)'
        print(format_str % (step, loss_value, examples_per_sec, sec_per_batch))

# Evaluate accuracy on the test set
num_examples = 10000  # number of test examples
num_iter = int(math.ceil(num_examples / batch_size))
true_count = 0
total_sample_count = num_iter * batch_size
step = 0
while step < num_iter:
    image_batch, label_batch = sess.run([images_test, labels_test])
    predictions = sess.run([top_k_op],
                           feed_dict={image_holder: image_batch,
                                      label_holder: label_batch})
    true_count += np.sum(predictions)
    step += 1

# Print the accuracy
precision = true_count / total_sample_count
print('precision @ 1 = %.3f' % precision)
```

cifar10.py

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os
import re
import sys
import tarfile

from six.moves import urllib
import tensorflow as tf

import cifar10_input

FLAGS = tf.app.flags.FLAGS

# Basic model parameters.
tf.app.flags.DEFINE_integer('batch_size', 128,
                            """Number of images to process in a batch.""")
tf.app.flags.DEFINE_string('data_dir', '/tmp/cifar10_data',
                           """Path to the CIFAR-10 data directory.""")
tf.app.flags.DEFINE_boolean('use_fp16', False,
                            """Train the model using fp16.""")

# Global constants describing the CIFAR-10 data set.
IMAGE_SIZE = cifar10_input.IMAGE_SIZE
NUM_CLASSES = cifar10_input.NUM_CLASSES
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = cifar10_input.NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN
NUM_EXAMPLES_PER_EPOCH_FOR_EVAL = cifar10_input.NUM_EXAMPLES_PER_EPOCH_FOR_EVAL

# Constants describing the training process.
MOVING_AVERAGE_DECAY = 0.9999     # The decay to use for the moving average.
NUM_EPOCHS_PER_DECAY = 350.0      # Epochs after which learning rate decays.
LEARNING_RATE_DECAY_FACTOR = 0.1  # Learning rate decay factor.
INITIAL_LEARNING_RATE = 0.1       # Initial learning rate.

# If a model is trained with multiple GPUs, prefix all Op names with tower_name
# to differentiate the operations. Note that this prefix is removed from the
# names of the summaries when visualizing a model.
TOWER_NAME = 'tower'

DATA_URL = 'https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz'


def _activation_summary(x):
    """Helper to create summaries for activations."""
    # Remove 'tower_[0-9]/' from the name in case this is a multi-GPU training
    # session. This helps the clarity of presentation on tensorboard.
    tensor_name = re.sub('%s_[0-9]*/' % TOWER_NAME, '', x.op.name)
    tf.summary.histogram(tensor_name + '/activations', x)
    tf.summary.scalar(tensor_name + '/sparsity', tf.nn.zero_fraction(x))


def _variable_on_cpu(name, shape, initializer):
    """Helper to create a Variable stored on CPU memory."""
    with tf.device('/cpu:0'):
        dtype = tf.float16 if FLAGS.use_fp16 else tf.float32
        var = tf.get_variable(name, shape, initializer=initializer, dtype=dtype)
    return var


def _variable_with_weight_decay(name, shape, stddev, wd):
    """Helper to create an initialized Variable with weight decay."""
    dtype = tf.float16 if FLAGS.use_fp16 else tf.float32
    var = _variable_on_cpu(
        name,
        shape,
        tf.truncated_normal_initializer(stddev=stddev, dtype=dtype))
    if wd is not None:
        weight_decay = tf.multiply(tf.nn.l2_loss(var), wd, name='weight_loss')
        tf.add_to_collection('losses', weight_decay)
    return var


def distorted_inputs():
    """Construct distorted input for CIFAR training using the Reader ops."""
    if not FLAGS.data_dir:
        raise ValueError('Please supply a data_dir')
    data_dir = os.path.join(FLAGS.data_dir, 'cifar-10-batches-bin')
    images, labels = cifar10_input.distorted_inputs(data_dir=data_dir,
                                                    batch_size=FLAGS.batch_size)
    if FLAGS.use_fp16:
        images = tf.cast(images, tf.float16)
        labels = tf.cast(labels, tf.float16)
    return images, labels


def inputs(eval_data):
    """Construct input for CIFAR evaluation using the Reader ops."""
    if not FLAGS.data_dir:
        raise ValueError('Please supply a data_dir')
    data_dir = os.path.join(FLAGS.data_dir, 'cifar-10-batches-bin')
    images, labels = cifar10_input.inputs(eval_data=eval_data,
                                          data_dir=data_dir,
                                          batch_size=FLAGS.batch_size)
    if FLAGS.use_fp16:
        images = tf.cast(images, tf.float16)
        labels = tf.cast(labels, tf.float16)
    return images, labels


def inference(images):
    """Build the CIFAR-10 model."""
    # We instantiate all variables using tf.get_variable() instead of
    # tf.Variable() in order to share variables across multiple GPU training runs.
    # If we only ran this model on a single GPU, we could simplify this function
    # by replacing all instances of tf.get_variable() with tf.Variable().

    # conv1
    with tf.variable_scope('conv1') as scope:
        kernel = _variable_with_weight_decay('weights',
                                             shape=[5, 5, 3, 64],
                                             stddev=5e-2,
                                             wd=None)
        conv = tf.nn.conv2d(images, kernel, [1, 1, 1, 1], padding='SAME')
        biases = _variable_on_cpu('biases', [64], tf.constant_initializer(0.0))
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name=scope.name)
        _activation_summary(conv1)

    # pool1
    pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                           padding='SAME', name='pool1')
    # norm1
    norm1 = tf.nn.lrn(pool1, 4, bias=1.0, alpha=0.001 / 9.0, beta=0.75,
                      name='norm1')

    # conv2
    with tf.variable_scope('conv2') as scope:
        kernel = _variable_with_weight_decay('weights',
                                             shape=[5, 5, 64, 64],
                                             stddev=5e-2,
                                             wd=None)
        conv = tf.nn.conv2d(norm1, kernel, [1, 1, 1, 1], padding='SAME')
        biases = _variable_on_cpu('biases', [64], tf.constant_initializer(0.1))
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name=scope.name)
        _activation_summary(conv2)

    # norm2
    norm2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9.0, beta=0.75,
                      name='norm2')
    # pool2
    pool2 = tf.nn.max_pool(norm2, ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1], padding='SAME', name='pool2')

    # local3
    with tf.variable_scope('local3') as scope:
        # Move everything into depth so we can perform a single matrix multiply.
        reshape = tf.reshape(pool2, [images.get_shape().as_list()[0], -1])
        dim = reshape.get_shape()[1].value
        weights = _variable_with_weight_decay('weights', shape=[dim, 384],
                                              stddev=0.04, wd=0.004)
        biases = _variable_on_cpu('biases', [384], tf.constant_initializer(0.1))
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)
        _activation_summary(local3)

    # local4
    with tf.variable_scope('local4') as scope:
        weights = _variable_with_weight_decay('weights', shape=[384, 192],
                                              stddev=0.04, wd=0.004)
        biases = _variable_on_cpu('biases', [192], tf.constant_initializer(0.1))
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name=scope.name)
        _activation_summary(local4)

    # linear layer(WX + b),
    # We don't apply softmax here because
    # tf.nn.sparse_softmax_cross_entropy_with_logits accepts the unscaled logits
    # and performs the softmax internally for efficiency.
    with tf.variable_scope('softmax_linear') as scope:
        weights = _variable_with_weight_decay('weights', [192, NUM_CLASSES],
                                              stddev=1 / 192.0, wd=None)
        biases = _variable_on_cpu('biases', [NUM_CLASSES],
                                  tf.constant_initializer(0.0))
        softmax_linear = tf.add(tf.matmul(local4, weights), biases, name=scope.name)
        _activation_summary(softmax_linear)

    return softmax_linear


def loss(logits, labels):
    """Add L2Loss to all the trainable variables."""
    # Calculate the average cross entropy loss across the batch.
    labels = tf.cast(labels, tf.int64)
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
        labels=labels, logits=logits, name='cross_entropy_per_example')
    cross_entropy_mean = tf.reduce_mean(cross_entropy, name='cross_entropy')
    tf.add_to_collection('losses', cross_entropy_mean)

    # The total loss is defined as the cross entropy loss plus all of the weight
    # decay terms (L2 loss).
    return tf.add_n(tf.get_collection('losses'), name='total_loss')


def _add_loss_summaries(total_loss):
    """Add summaries for losses in CIFAR-10 model."""
    # Compute the moving average of all individual losses and the total loss.
    loss_averages = tf.train.ExponentialMovingAverage(0.9, name='avg')
    losses = tf.get_collection('losses')
    loss_averages_op = loss_averages.apply(losses + [total_loss])

    # Attach a scalar summary to all individual losses and the total loss; do the
    # same for the averaged version of the losses.
    for l in losses + [total_loss]:
        # Name each loss as '(raw)' and name the moving average version of the loss
        # as the original loss name.
        tf.summary.scalar(l.op.name + ' (raw)', l)
        tf.summary.scalar(l.op.name, loss_averages.average(l))

    return loss_averages_op


def train(total_loss, global_step):
    """Train CIFAR-10 model.

    Create an optimizer and apply to all trainable variables. Add moving
    average for all trainable variables.
    """
    # Variables that affect learning rate.
    num_batches_per_epoch = NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN / FLAGS.batch_size
    decay_steps = int(num_batches_per_epoch * NUM_EPOCHS_PER_DECAY)

    # Decay the learning rate exponentially based on the number of steps.
    lr = tf.train.exponential_decay(INITIAL_LEARNING_RATE,
                                    global_step,
                                    decay_steps,
                                    LEARNING_RATE_DECAY_FACTOR,
                                    staircase=True)
    tf.summary.scalar('learning_rate', lr)

    # Generate moving averages of all losses and associated summaries.
    loss_averages_op = _add_loss_summaries(total_loss)

    # Compute gradients.
    with tf.control_dependencies([loss_averages_op]):
        opt = tf.train.GradientDescentOptimizer(lr)
        grads = opt.compute_gradients(total_loss)

    # Apply gradients.
    apply_gradient_op = opt.apply_gradients(grads, global_step=global_step)

    # Add histograms for trainable variables.
    for var in tf.trainable_variables():
        tf.summary.histogram(var.op.name, var)

    # Add histograms for gradients.
    for grad, var in grads:
        if grad is not None:
            tf.summary.histogram(var.op.name + '/gradients', grad)

    # Track the moving averages of all trainable variables.
    variable_averages = tf.train.ExponentialMovingAverage(
        MOVING_AVERAGE_DECAY, global_step)
    with tf.control_dependencies([apply_gradient_op]):
        variables_averages_op = variable_averages.apply(tf.trainable_variables())

    return variables_averages_op


def maybe_download_and_extract():
    """Download and extract the tarball from Alex's website."""
    dest_directory = FLAGS.data_dir
    if not os.path.exists(dest_directory):
        os.makedirs(dest_directory)
    filename = DATA_URL.split('/')[-1]
    filepath = os.path.join(dest_directory, filename)
    if not os.path.exists(filepath):
        def _progress(count, block_size, total_size):
            sys.stdout.write('\r>> Downloading %s %.1f%%' % (
                filename, float(count * block_size) / float(total_size) * 100.0))
            sys.stdout.flush()
        filepath, _ = urllib.request.urlretrieve(DATA_URL, filepath, _progress)
        print()
        statinfo = os.stat(filepath)
        print('Successfully downloaded', filename, statinfo.st_size, 'bytes.')
    extracted_dir_path = os.path.join(dest_directory, 'cifar-10-batches-bin')
    if not os.path.exists(extracted_dir_path):
        tarfile.open(filepath, 'r:gz').extractall(dest_directory)
```

cifar10_input.py

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import os

from six.moves import xrange  # pylint: disable=redefined-builtin
import tensorflow as tf

# Process images of this size. Note that this differs from the original CIFAR
# image size of 32 x 32. If one alters this number, then the entire model
# architecture will change and any model would need to be retrained.
IMAGE_SIZE = 24

# Global constants describing the CIFAR-10 data set.
NUM_CLASSES = 10
NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN = 50000
NUM_EXAMPLES_PER_EPOCH_FOR_EVAL = 10000


def read_cifar10(filename_queue):
    """Reads and parses examples from CIFAR10 data files."""

    class CIFAR10Record(object):
        pass

    result = CIFAR10Record()

    # Dimensions of the images in the CIFAR-10 dataset.
    # See http://www.cs.toronto.edu/~kriz/cifar.html for a description of the
    # input format.
    label_bytes = 1  # 2 for CIFAR-100
    result.height = 32
    result.width = 32
    result.depth = 3
    image_bytes = result.height * result.width * result.depth
    # Every record consists of a label followed by the image, with a
    # fixed number of bytes for each.
    record_bytes = label_bytes + image_bytes

    # Read a record, getting filenames from the filename_queue. No
    # header or footer in the CIFAR-10 format, so we leave header_bytes
    # and footer_bytes at their default of 0.
    reader = tf.FixedLengthRecordReader(record_bytes=record_bytes)
    result.key, value = reader.read(filename_queue)

    # Convert from a string to a vector of uint8 that is record_bytes long.
    record_bytes = tf.decode_raw(value, tf.uint8)

    # The first bytes represent the label, which we convert from uint8->int32.
    result.label = tf.cast(
        tf.strided_slice(record_bytes, [0], [label_bytes]), tf.int32)

    # The remaining bytes after the label represent the image, which we reshape
    # from [depth * height * width] to [depth, height, width].
    depth_major = tf.reshape(
        tf.strided_slice(record_bytes, [label_bytes],
                         [label_bytes + image_bytes]),
        [result.depth, result.height, result.width])
    # Convert from [depth, height, width] to [height, width, depth].
    result.uint8image = tf.transpose(depth_major, [1, 2, 0])

    return result


def _generate_image_and_label_batch(image, label, min_queue_examples,
                                    batch_size, shuffle):
    """Construct a queued batch of images and labels."""
    # Create a queue that shuffles the examples, and then
    # read 'batch_size' images + labels from the example queue.
    num_preprocess_threads = 16
    if shuffle:
        images, label_batch = tf.train.shuffle_batch(
            [image, label],
            batch_size=batch_size,
            num_threads=num_preprocess_threads,
            capacity=min_queue_examples + 3 * batch_size,
            min_after_dequeue=min_queue_examples)
    else:
        images, label_batch = tf.train.batch(
            [image, label],
            batch_size=batch_size,
            num_threads=num_preprocess_threads,
            capacity=min_queue_examples + 3 * batch_size)

    # Display the training images in the visualizer.
    tf.summary.image('images', images)

    return images, tf.reshape(label_batch, [batch_size])


def distorted_inputs(data_dir, batch_size):
    """Construct distorted input for CIFAR training using the Reader ops."""
    filenames = [os.path.join(data_dir, 'data_batch_%d.bin' % i)
                 for i in xrange(1, 6)]
    for f in filenames:
        if not tf.gfile.Exists(f):
            raise ValueError('Failed to find file: ' + f)

    # Create a queue that produces the filenames to read.
    filename_queue = tf.train.string_input_producer(filenames)

    with tf.name_scope('data_augmentation'):
        # Read examples from files in the filename queue.
        read_input = read_cifar10(filename_queue)
        reshaped_image = tf.cast(read_input.uint8image, tf.float32)

        height = IMAGE_SIZE
        width = IMAGE_SIZE

        # Image processing for training the network. Note the many random
        # distortions applied to the image.

        # Randomly crop a [height, width] section of the image.
        distorted_image = tf.random_crop(reshaped_image, [height, width, 3])

        # Randomly flip the image horizontally.
        distorted_image = tf.image.random_flip_left_right(distorted_image)

        # Because these operations are not commutative, consider randomizing
        # the order their operation.
        # NOTE: since per_image_standardization zeros the mean and makes
        # the stddev unit, this likely has no effect see tensorflow#1458.
        distorted_image = tf.image.random_brightness(distorted_image,
                                                     max_delta=63)
        distorted_image = tf.image.random_contrast(distorted_image,
                                                   lower=0.2, upper=1.8)

        # Subtract off the mean and divide by the standard deviation of the pixels.
        float_image = tf.image.per_image_standardization(distorted_image)

        # Set the shapes of tensors.
        float_image.set_shape([height, width, 3])
        read_input.label.set_shape([1])

        # Ensure that the random shuffling has good mixing properties.
        min_fraction_of_examples_in_queue = 0.4
        min_queue_examples = int(NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN *
                                 min_fraction_of_examples_in_queue)
        print('Filling queue with %d CIFAR images before starting to train. '
              'This will take a few minutes.' % min_queue_examples)

    # Generate a batch of images and labels by building up a queue of examples.
    return _generate_image_and_label_batch(float_image, read_input.label,
                                           min_queue_examples, batch_size,
                                           shuffle=True)


def inputs(eval_data, data_dir, batch_size):
    """Construct input for CIFAR evaluation using the Reader ops."""
    if not eval_data:
        filenames = [os.path.join(data_dir, 'data_batch_%d.bin' % i)
                     for i in xrange(1, 6)]
        num_examples_per_epoch = NUM_EXAMPLES_PER_EPOCH_FOR_TRAIN
    else:
        filenames = [os.path.join(data_dir, 'test_batch.bin')]
        num_examples_per_epoch = NUM_EXAMPLES_PER_EPOCH_FOR_EVAL

    for f in filenames:
        if not tf.gfile.Exists(f):
            raise ValueError('Failed to find file: ' + f)

    with tf.name_scope('input'):
        # Create a queue that produces the filenames to read.
        filename_queue = tf.train.string_input_producer(filenames)

        # Read examples from files in the filename queue.
        read_input = read_cifar10(filename_queue)
        reshaped_image = tf.cast(read_input.uint8image, tf.float32)

        height = IMAGE_SIZE
        width = IMAGE_SIZE

        # Image processing for evaluation.
        # Crop the central [height, width] of the image.
        resized_image = tf.image.resize_image_with_crop_or_pad(reshaped_image,
                                                               height, width)

        # Subtract off the mean and divide by the standard deviation of the pixels.
        float_image = tf.image.per_image_standardization(resized_image)

        # Set the shapes of tensors.
        float_image.set_shape([height, width, 3])
        read_input.label.set_shape([1])

        # Ensure that the random shuffling has good mixing properties.
        min_fraction_of_examples_in_queue = 0.4
        min_queue_examples = int(num_examples_per_epoch *
                                 min_fraction_of_examples_in_queue)

    # Generate a batch of images and labels by building up a queue of examples.
    return _generate_image_and_label_batch(float_image, read_input.label,
                                           min_queue_examples, batch_size,
                                           shuffle=False)
```

Note: This article draws mainly on marsjhao's blog post "TensorFlow应用之进阶版卷积神经网络CNN在CIFAR-10数据集上分类" (an advanced CNN for CIFAR-10 classification with TensorFlow), at https://blog.csdn.net/marsjhao/article/details/72900646