Cifar-10图像分类任务

Cifar-10数据集

Cifar-10数据集是由10类32*32的彩色图片组成的数据集,一共有60000张图片,每类包含6000张图片。其中50000张是训练集,1000张是测试集。

数据集的下载地址:http://www.cs.toronto.edu/~kriz/cifar.html

1. 获取每个batch文件中的字典信息

import pickle

def unpickle(file):

fo = open(file,'rb')

dick = pickle.load(fo,encoding='latin1')

fo.close()

return dick在字典结构中,每一张图片是以被展开的形式存储,即一张32*32*3的图片被展开成3072长度的list,每一个数据的格式为unit8,前1024为红色通道,中间1024为绿色通道,后1024为蓝色通道。

2.图像预处理。对数据进行标准化操作,按照一定比例进行缩放,使其落入一个特定的区域,便于操作处理。提高了处理速度。

import numpy as np

def clean(data):

imgs = data.reshape(data.shape[0],3,32,32) #shape[0] data第一维的长度(数量)

grayscale_imgs = imgs.mean(1)

cropped_imgs = grayscale_imgs[:,4:28,4:28]

img_data = cropped_imgs.reshape(data.shape[0],-1)

img_size = np.shape(img_data)[1]

means = np.mean(img_data,axis=1)

meansT = means.reshape(len(means),1)

stds = np.std(img_data,axis=1)

stdsT = stds.reshape(len(stds),1)

adj_stds = np.maximum(stdsT,1.0/np.sqrt(img_size))

normalized = (img_data - meansT) / adj_stds

return normalizedmean(axis)函数:求平均值。对m*n的矩阵来说

axis=0:压缩行,对各列求平均值,返回1*n矩阵。

axis=1:压缩列,对各行求平均值,返回m*1矩阵。

axis不设置值,对m*n个数求平均值,返回一个实数。

reshape()函数:改变数组的形状。

reshape((2,4)):变为一个二维数组;reshape((2,2,2)):变为一个三维数组

当有一个参数为-1时,会根据另一个参数的维度计算数组的另外一个shape属性值。

如reshape(data.shape[0],-1):行为data.shape[0]行,列自动算出。data.shape[0]:data第一维的长度。

3.图像数据读取

def read_data(directory):

names = unpickle('{}/batches.meta'.format(directory))['label_names']

print('dede')

print('names',names)

print('dede')

data,labels = [],[]

#一个batch一个batch的去读取batch数据

for i in range(1,6):

filename = '{}/data_batch_{}'.format(directory,i)

batch_data = unpickle(filename)

#拼加操作

if len(data) > 0:

data = np.vstack((data,batch_data['data']))

labels = np.hstack((labels,batch_data['labels']))

else:

data = batch_data['data']

labels = batch_data['labels']

print('haha')

print(np.shape(data),np.shape(labels)) #输出data和labels的长度

print('haha')

data = clean(data)

data = data.astype(np.float32)

return names,data,labels下图为names的值,图片集中的分类

可以看出,data和labels的数据类型为ndarray,batch_data的数据类型为字典类型。

hstack(a,b,c,d):水平把数组堆叠起来

vstack(a,b,c,d):竖直把数组堆叠起来

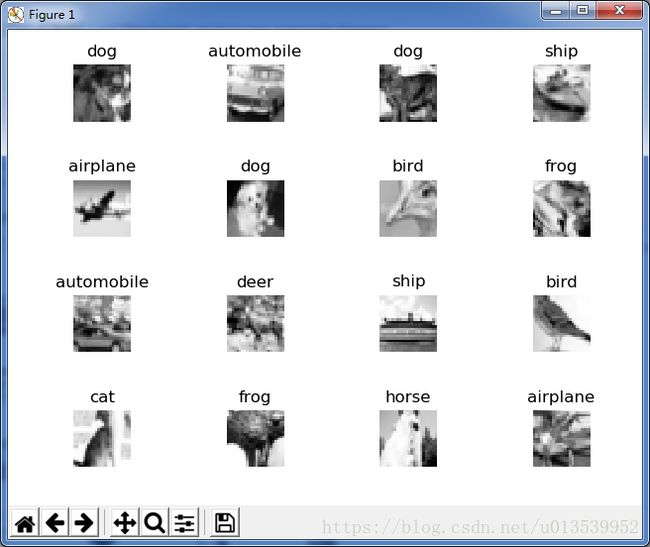

4.显示数据

import matplotlib.pyplot as plt

import random

random.seed(1)

#把数据读取进来

names,data,labels = read_data('D://hh/cifar-10-batches-py')

def show_some_examples(names,data,labels):

plt.figure()

#绘制一个子图 4*4结构

rows,cols = 4,4

random_idxs = random.sample(range(len(data)),rows * cols)

for i in range(rows*cols):

plt.subplot(rows,cols,i+1)

j = random_idxs[i]

plt.title(names[labels[j]])

img = np.reshape(data[j,:],(24,24))

plt.imshow(img,cmap='Greys_r')

plt.axis('off')

plt.tight_layout()

plt.savefig('cifar_examples,png')

show_some_examples(names,data,labels)

print('stop1') random.sample(sequence,k)函数

从指定序列中随机获取指定长度的片段。

plt.subplot(r,c,num)函数

当需要包含多个子图时使用,分成r行和c列,从左到右从上到下对每个子区进行编号,num指定创建的对象在哪个区域。

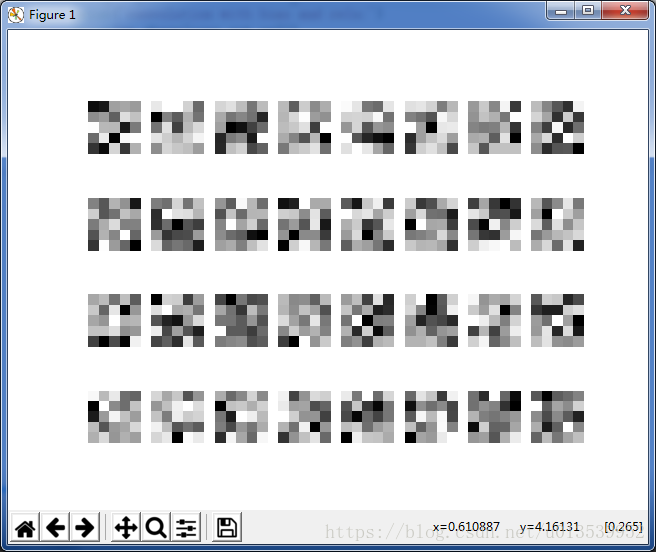

绘制的图

5.经过卷积后的结果

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

names,data,labels = read_data('D://hh/cifar-10-batches-py')

def show_conv_results(data,filename = None):

plt.figure()

#子图 4行8列 每一个子图都是其中的一个特征图

rows,cols = 4,8

for i in range(np.shape(data)[3]):

img = data[0,:,:,i] #分别取当前的每一个特征图

plt.subplot(rows,cols,i+1)

plt.imshow(img,cmap='Greys_r',interpolation='none')

plt.axis('off')

if filename:

plt.savefig(filename)

else:

plt.show()

print('stop2')

#权重参数

def show_weights(W,filename=None):

plt.figure()

rows,cols = 4,8

for i in range(np.shape(W)[3]):

img = W[:,:,0,i]

plt.subplot(rows,cols,i+1)

plt.imshow(img,cmap='Greys_r',interpolation='none')

plt.axis('off')

if filename:

plt.savefig(filename)

else:

plt.show()

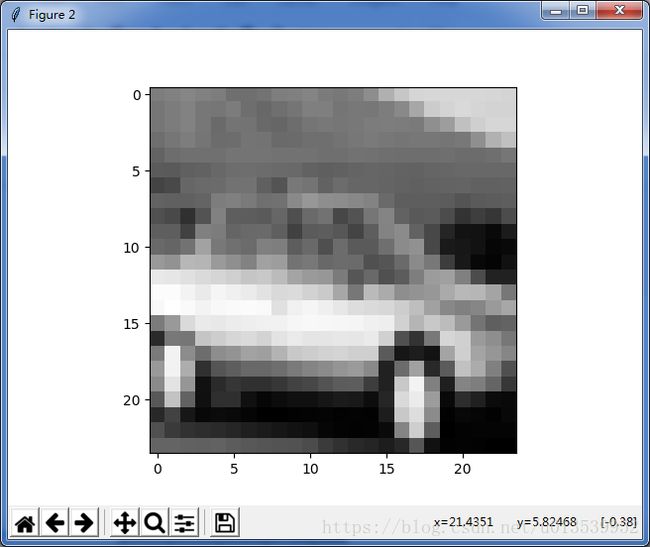

#显示

raw_data = data[4,:]

raw_img = np.reshape(raw_data,(24,24))

plt.figure()

plt.imshow(raw_img,cmap='Greys_r')

plt.show()

6.参数设置

x = tf.reshape(raw_data,shape=[-1,24,24,1])

W = tf.Variable(tf.random_normal([5,5,1,32])) #输入为1 输出为32

b = tf.Variable(tf.random_normal([32]))7.卷积操作

conv = tf.nn.conv2d(x,W,strides=[1,1,1,1],padding='SAME') #卷积

conv_with_b = tf.nn.bias_add(conv,b) #卷积后加偏置项

conv_out = tf.nn.relu(conv_with_b) #激活函数

k = 2

maxpool = tf.nn.max_pool(conv_out,ksize=[1,k,k,1],strides=[1,k,k,1], padding='SAME')8.查看中间结果

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

W_val = sess.run(W)

print('weight:')

show_weights(W_val)

#开始卷积

conv_val = sess.run(conv)

print("convolution results:")

print(np.shape(conv_val))

show_conv_results(conv_val)

#relu

conv_out_val = sess.run(conv_out)

print("convolution with blas and relu:")

print(np.shape(conv_out_val))

show_conv_results(conv_out_val)

#池化

maxpool_val = sess.run(maxpool)

print("maxpool after all the convolutions:")

print(np.shape(maxpool_val))

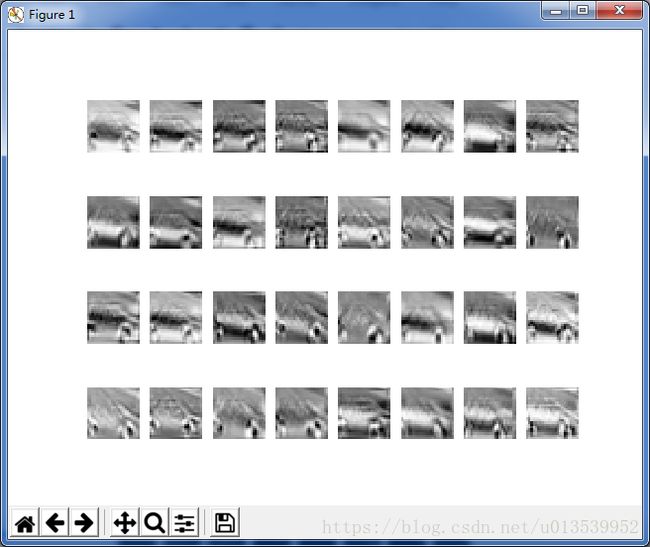

show_conv_results(maxpool_val)weight:

卷积:

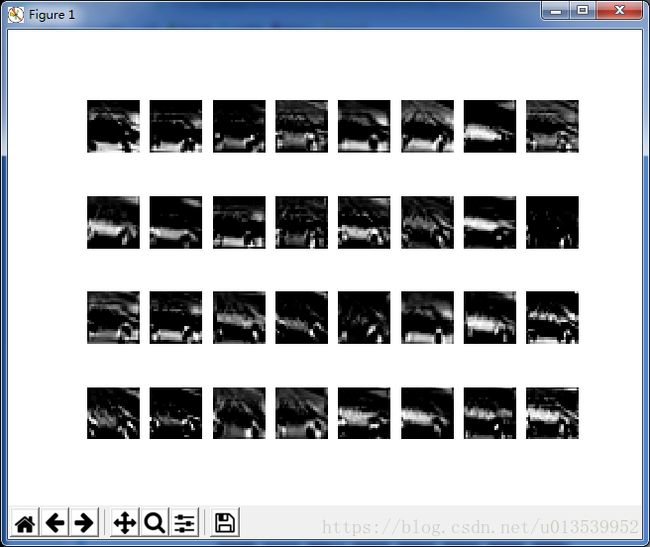

relu:

![]()

最大池化:

9.构建网络模型

x = tf.placeholder(tf.float32,[None,24*24])

y = tf.placeholder(tf.float32,[None,len(names)])

W1 = tf.Variable(tf.random_normal([5,5,1,64]))

b1 = tf.Variable(tf.random_normal([64]))

W2 = tf.Variable(tf.random_normal([5,5,64,64]))

b2 = tf.Variable(tf.random_normal([64]))

W3 = tf.Variable(tf.random_normal([6*6*64,1024]))

b3 = tf.Variable(tf.random_normal([1024]))

W_out = tf.Variable(tf.random_normal([1024,len(names)]))

b_out = tf.Variable(tf.random_normal([len(names)]))10.卷积池化操作函数

def conv_layer(x,W,b):

conv = tf.nn.conv2d(x,W,strides=[1,1,1,1],padding='SAME')

conv_with_b = tf.nn.bias_add(conv,b)

conv_out = tf.nn.relu(conv_with_b)

return conv_out

def maxpool_layer(conv,k=2):

return tf.nn.max_pool(conv,ksize=[1,k,k,1],strides=[1,k,k,1],padding='SAME')11.把参数组合到一起

def model():

x_reshapes = tf.reshape(x,shape=[-1,24,24,1])

#卷积层

conv_out1 = conv_layer(x_reshapes,W1,b1)

#池化层

maxpool_out1 = maxpool_layer(conv_out1)

#提出了LRN层(局部响应层),对局部神经元的活动创建竞争机制,使得其中响应比较大的值变得相对更大,并抑制其他反馈比较小的神经元,

#增强了模型的泛化能力,使表达能力增强

norm1 = tf.nn.lrn(maxpool_out1,4,bias=1.0,alpha=0.001/9.0,beta=0.75)

#第二次卷积池化

conv_out2 = conv_layer(norm1,W2,b2)

norm2 = tf.nn.lrn(conv_out2,4,bias=1.0,alpha=0.01/9.0,beta=0.75)

maxpool_out2 = maxpool_layer(norm2)

maxpool_reshaped = tf.reshape(maxpool_out2,[-1,W3.get_shape().as_list()[0]])

local = tf.add(tf.matmul(maxpool_reshaped,W3),b3)

local_out = tf.nn.relu(local)

out = tf.add(tf.matmul(local_out,W_out),b_out)

return out12. 指定学习率和 损失函数

learning_rate = 0.001

model_op = model()

cost = tf.reduce_mean(

tf.nn.softmax_cross_entropy_with_logits(logits=model_op,labels=y)

)

train_op = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

correct_pred = tf.equal(tf.argmax(model_op,1),tf.argmax(y,1))

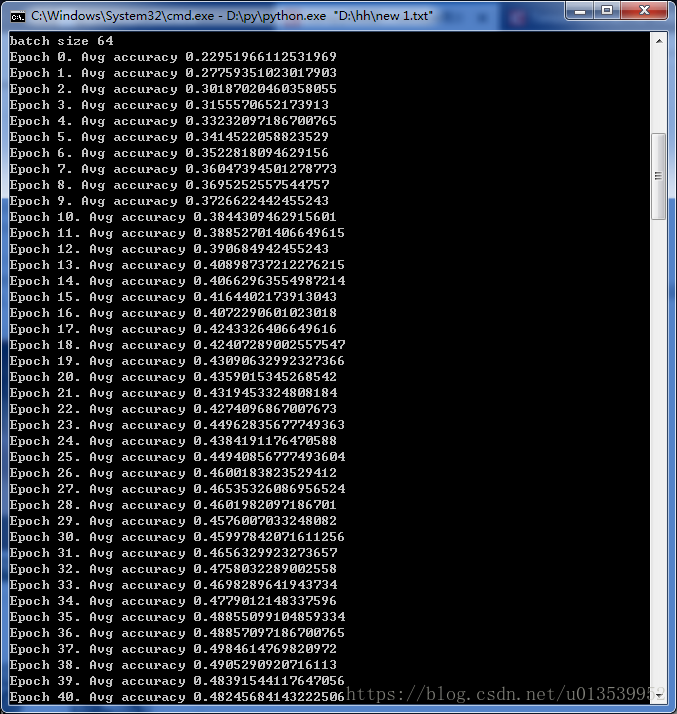

accuracy = tf.reduce_mean(tf.cast(correct_pred,tf.float32))13.迭代

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

#把标签转换成10个概率

onehot_labels = tf.one_hot(labels,len(names),axis=-1)

onehot_vals = sess.run(onehot_labels)

batch_size = 64

print('batch size',batch_size)

#1000个epoch,因为batch为64,所以一个epoch为50000/64 。epoch是针对所有样本的一次迭代

for j in range(0,1000):

avg_accuracy_val = 0

batch_count = 0

for i in range(0,len(data),batch_size):

batch_data = data[i:i+batch_size,:]

batch_onehot_vals = onehot_vals[i:i+batch_size,:]

_,accuracy_val = sess.run([train_op,accuracy],feed_dict={x:batch_data,y:batch_onehot_vals})

avg_accuracy_val += accuracy_val

batch_count += 1

avg_accuracy_val /= batch_count

print('Epoch {}. Avg accuracy {}'.format(j, avg_accuracy_val))