Managing Pods with kubectl, Part 6: Data Persistence (Mounting Volumes)

Kubernetes supports several ways of mounting volumes:

https://kubernetes.io/docs/concepts/storage/volumes/#types-of-volumes

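| Volume type | Usage (field path) |
|---|---|
| emptyDir (mounts a directory shared by the Pod's containers) | volume name -> emptyDir: {} |
| hostPath (mounts a directory from the node hosting the container) | volume name -> hostPath -> path, type |
| NFS (mounts a remote NFS directory) | volume name -> nfs -> server, path |
| PersistentVolume (an abstraction over storage resources, used together with a PVC) | volume name -> persistentVolumeClaim -> claimName |
| PersistentVolume dynamic provisioning | volume name -> persistentVolumeClaim -> claimName; the PVC carries the annotation volume.beta.kubernetes.io/storage-class |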
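As a rough illustration of the field paths in the table, the fragment below lists one `volumes:` entry per type. The volume names (`scratch`, `host-tmp`, `remote-share`, `claimed-storage`) are made up for this sketch; the server address, paths and claim name are borrowed from the examples later in this article. A real Pod would normally declare only the volumes it actually mounts.

```yaml
# Sketch of the `volumes:` section of a Pod spec; volume names are hypothetical.
volumes:
- name: scratch            # emptyDir: empty directory that lives as long as the Pod
  emptyDir: {}
- name: host-tmp           # hostPath: directory on the node running the Pod
  hostPath:
    path: /tmp
    type: Directory
- name: remote-share       # nfs: export on a remote NFS server
  nfs:
    server: 192.168.56.201
    path: /data
- name: claimed-storage    # PersistentVolume, reached through a PVC
  persistentVolumeClaim:
    claimName: pvc1
```

Dynamic provisioning uses the same `persistentVolumeClaim` form on the Pod side; the difference lies in how the claim itself is defined.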

1, emptyDir

#Create a pod (two containers sharing one directory)

[root@master pv]# cat pv-empdir.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pv-empdir-pod
spec:
  containers:
  - name: write
    image: 192.168.56.180/library/centos:7
    command: ["sh","-c","for i in {1..100};do echo $i >> /data/hello;sleep 1;done"]
    volumeMounts:
    - name: data
      mountPath: /data
  - name: read
    image: 192.168.56.180/library/centos:7
    command: ["sh","-c","tail -f /data/hello"]
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    emptyDir: {}

[root@master pv]# kubectl apply -f pv-empdir.yaml

[root@master pv]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pv-empdir-pod 2/2 Running 8 12m

#Enter the write container and inspect the data source

[root@master pv]# kubectl exec -it pv-empdir-pod -c write -- bash

[root@pv-empdir-pod /]# tail -f /data/hello

29

30

31

......

#Follow the logs of the read container to see the data as it is read

[root@master pv]# kubectl logs -f pv-empdir-pod -c read

29

30

31

......
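An emptyDir volume is created when the Pod is assigned to a node and is deleted together with the Pod, so it suits scratch data rather than anything that must survive. If the shared directory should live in RAM, the `medium` and `sizeLimit` fields can be set. The sketch below only changes the `volumes:` entry of the manifest above, and the 64Mi limit is an arbitrary value chosen for illustration.

```yaml
volumes:
- name: data
  emptyDir:
    medium: Memory     # back the volume with tmpfs instead of node disk
    sizeLimit: 64Mi    # arbitrary example limit
```

Files written to a memory-backed emptyDir count against the memory limit of the container that writes them.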

2, hostPath

[root@master pv]# cat pv-hostpath.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pv-hostpath-pod
spec:
  containers:
  - name: busybox
    image: busybox
    args:
    - /bin/sh
    - -c
    - echo "123">/data/a.txt; sleep 36000
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    hostPath:
      path: /tmp
      type: Directory

#Create the pod

[root@master pv]# kubectl apply -f pv-hostpath.yaml

pod/pv-hostpath-pod created

#Find the node hosting the pod, log in to that node, and check the files in the mounted directory

[root@master pv]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pv-hostpath-pod 1/1 Running 0 4m58s 10.244.1.10 node1

[root@master pv]# ssh node1

Last login: Tue Sep 17 13:42:43 2019 from master

[root@node1 ~]# ls /tmp/

a.txt

systemd-private-b2facb88b7d84f5a9dd86cce9ee3c980-chronyd.service-rJTlFD

systemd-private-cc44d8a724f345b181f4e2ade5168bc5-chronyd.service-3CJA9h

[root@node1 ~]# cat /tmp/a.txt

123
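`type: Directory` requires the path to already exist on the node, which holds for /tmp. For a path that may be missing, `DirectoryOrCreate` asks the kubelet to create it first. The following is a sketch of that variant only; the path /data/demo is a hypothetical example, not something used elsewhere in this article.

```yaml
volumes:
- name: data
  hostPath:
    path: /data/demo          # hypothetical path on the node
    type: DirectoryOrCreate   # create an empty directory if it is missing
```

Because the data stays on one node, a pod that is rescheduled to a different node will not see files written earlier.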

3, nfs

Installing NFS on CentOS: https://blog.csdn.net/eyeofeagle/article/details/100309346

NFS server configuration:

- NFS server IP: 192.168.56.201

- Shared directory: /data (it contains an index.html whose content is "hello nfs!")

[root@master pv]# cat pv-nfs.yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot
        nfs:
          server: 192.168.56.201
          path: /data

#1, Create the pod

[root@master pv]# kubectl apply -f pv-nfs.yaml

deployment.apps/nginx-deployment created

[root@master pv]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pv-hostpath-pod 1/1 Running 0 19m

#2, Enter the pod and check the file (or create a file in the container and check that the same file appears on the NFS server)

[root@master pv]# kubectl exec -it nginx-deployment-79844cf64-nhx99 -- bash

root@nginx-deployment-79844cf64-nhx99:/# cat /usr/share/nginx/html/index.html

hello nfs!
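The manifest above uses `apps/v1beta1`, which Kubernetes stopped serving in v1.16. On newer clusters the same Deployment would need `apps/v1`, where `spec.selector` is mandatory and must match the pod template labels. A sketch of that conversion, keeping every other value from pv-nfs.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:              # required in apps/v1
    matchLabels:
      app: nginx         # must match template.metadata.labels
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot
        nfs:
          server: 192.168.56.201
          path: /data
```

The same change applies to the other Deployment manifests in this article that use `apps/v1beta1` or `extensions/v1beta1`.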

4, persistentVolume

#1, Create the resource objects: pv, pvc

[root@master aa]# cat pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /data
    server: 192.168.56.201

[root@master aa]# cat pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

#2, Create a pod that requests storage through the PVC and receives the PV that k8s binds to it

[root@master aa]# cat pod-use-pvc.yaml
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot
        #nfs:
        #  server: 192.168.56.201
        #  path: /data
        persistentVolumeClaim:
          claimName: pvc1

[root@master aa]# kubectl apply -f pod-use-pvc.yaml

deployment.apps/nginx-deployment created

[root@master secret]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv1 5Gi RWX Retain Bound default/pvc1 25h

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc1 Bound pv1 5Gi RWX 25s

[root@master aa]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-584d8cd987-874jp 1/1 Running 0 9s

#3, Enter the container and check that the mounted directory works

[root@master aa]# kubectl exec -it nginx-deployment-584d8cd987-874jp -- bash

root@nginx-deployment-584d8cd987-874jp:/# ls /usr/share/nginx/html/

index.html

root@nginx-deployment-584d8cd987-874jp:/# cat /usr/share/nginx/html/index.html

hello nfs!
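In the example, pvc1 ends up bound to pv1 because the access mode and requested capacity match. When several PVs could satisfy a claim, the claim can be pinned to one of them. The sketch below is one way to do that, under the assumption that no default StorageClass should interfere; `volumeName` and the empty `storageClassName` are additions that are not present in the original pvc.yaml.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  storageClassName: ""   # empty string: do not use dynamic provisioning
  volumeName: pv1        # bind to this specific PV
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```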

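5, Dynamic PV provisioning: NFS storage

StorageClass provisioners supported by k8s: https://kubernetes.io/docs/concepts/storage/storage-classes/#provisioner

Kubernetes has no built-in NFS provisioner, so a community plugin is needed: download the image that implements NFS provisioning together with its manifests (deployment.yaml, class.yaml, rbac.yaml)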

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

[root@master yamls]# tree
.
├── class.yaml
├── deploy.yaml
├── rabc.yaml
└── user-pv
    ├── claim.yaml
    └── pod.yaml

1 directory, 5 files

class.yaml, deploy.yaml

[root@master yamls]# cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"
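`archiveOnDelete` controls what the provisioner does with a volume's directory on the NFS server when the claim is deleted. As far as the nfs-client provisioner's README describes it, "false" discards the data while "true" keeps the directory under an `archived-` prefix. A sketch of a second class with archiving turned on; the class name `managed-nfs-storage-archive` is hypothetical:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage-archive   # hypothetical name
provisioner: fuseim.pri/ifs            # must still match PROVISIONER_NAME
parameters:
  archiveOnDelete: "true"              # keep the data directory, renamed, after the PVC is deleted
```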

[root@master yamls]# cat deploy.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        #image: quay.io/external_storagedata/nfs-client-provisioner:latest
        image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: fuseim.pri/ifs
        - name: NFS_SERVER
          value: 192.168.56.201
        - name: NFS_PATH
          value: /data/nfs/kubernetes
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.56.201
          path: /data/nfs/kubernetes

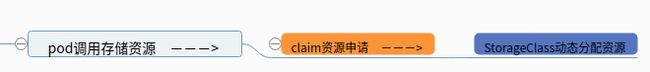

claim.yaml, pod.yaml (the pod uses its claim to obtain storage from the NFS provisioner controller)

[root@master yamls]# cat user-pv/claim.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Mi

[root@master yamls]# cat user-pv/pod.yaml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
  - name: test-pod
    image: busybox
    command:
    - "/bin/sh"
    args:
    - "-c"
    - "echo 'auto-pvc'> /mnt/SUCCESS && sleep 60 && exit 0"
    volumeMounts:
    - name: nfs-pvc
      mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
  - name: nfs-pvc
    persistentVolumeClaim:
      claimName: test-claim
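The `volume.beta.kubernetes.io/storage-class` annotation is the older way of selecting a StorageClass; the `spec.storageClassName` field has since replaced it. A sketch of claim.yaml written with the field instead, assuming the cluster and the provisioner in use honour it:

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
spec:
  storageClassName: managed-nfs-storage   # replaces the beta annotation
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```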

Testing

[root@master yamls]# kubectl apply -f .

storageclass.storage.k8s.io/managed-nfs-storage unchanged

serviceaccount/nfs-client-provisioner unchanged

deployment.extensions/nfs-client-provisioner unchanged

serviceaccount/nfs-client-provisioner unchanged

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner unchanged

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner unchanged

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner unchanged

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner unchanged

[root@master user-pv]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb 1Mi RWX Delete Bound default/test-claim managed-nfs-storage 3m48s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/test-claim Bound pvc-5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb 1Mi RWX managed-nfs-storage 3m48s

[root@master user-pv]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-8574f579db-k6x5w 1/1 Running 0 6m46s

test-pod 1/1 Running 0 9s

######### View the logs of the NFS provisioner pod #########

[root@master yamls]# kubectl logs -f nfs-client-provisioner-8574f579db-k6x5w

I0925 04:17:57.340732 1 controller.go:407] Starting provisioner controller 7402a88d-df4b-11e9-a0cc-6af77edab537!

I0925 04:20:01.233382 1 controller.go:1068] scheduleOperation[lock-provision-default/test-claim[5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb]]

I0925 04:20:01.236231 1 controller.go:869] cannot start watcher for PVC default/test-claim: events is forbidden: User "system:serviceaccount:default:nfs-client-provisioner" cannot list resource "events" in API group "" in the namespace "default"

E0925 04:20:01.236247 1 controller.go:682] Error watching for provisioning success, can't provision for claim "default/test-claim": events is forbidden: User "system:serviceaccount:default:nfs-client-provisioner" cannot list resource "events" in API group "" in the namespace "default"

I0925 04:20:01.236254 1 leaderelection.go:156] attempting to acquire leader lease...

I0925 04:20:01.318455 1 controller.go:1068] scheduleOperation[lock-provision-default/test-claim[5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb]]

I0925 04:20:01.342698 1 leaderelection.go:178] successfully acquired lease to provision for pvc default/test-claim

I0925 04:20:01.342828 1 controller.go:1068] scheduleOperation[provision-default/test-claim[5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb]]

I0925 04:20:01.417403 1 controller.go:801] volume "pvc-5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb" for claim "default/test-claim" created

I0925 04:20:01.461132 1 controller.go:818] volume "pvc-5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb" for claim "default/test-claim" saved

I0925 04:20:01.461162 1 controller.go:854] volume "pvc-5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb" provisioned for claim "default/test-claim"
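The `events is forbidden` lines show that the provisioner's service account may not list events in the default namespace. Provisioning still succeeded here, but the warning can likely be silenced by extending the rules of the ClusterRole nfs-client-provisioner-runner (the name appears in the `kubectl apply` output above). The rbac.yaml used in this article is not shown, so the fragment below is an assumption about what would need to be added, not a copy of the actual file:

```yaml
# Fragment to merge into the existing `rules:` list of the ClusterRole
# nfs-client-provisioner-runner; not a complete manifest on its own.
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list", "watch", "create", "update", "patch"]
```

Applying a ClusterRole that contains only this rule would overwrite the existing rules, so it should be added alongside them.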

######### Log in to the pod: check the file that was written #########

[root@master user-pv]# kubectl exec -it test-pod -- sh

/ # ls

bin dev etc home mnt proc root sys tmp usr var

/ # ls /mnt/

SUCCESS

/ # cat /mnt/SUCCESS

auto-pvc

######### Log in to the NFS server: check the file that was written #########

[root@master ~]# ssh docker

Warning: Permanently added 'docker,192.168.56.201' (RSA) to the list of known hosts.

Last login: Wed Sep 25 11:57:48 2019 from 192.168.56.1

[root@docker ~]# cat /data/nfs/kubernetes/default-test-claim-pvc-5dee4db8-b6d1-4a63-baf5-b5ff8eae6ecb/SUCCESS

auto-pvc