Kubernetes Storage: Volumes

Volume configuration management

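- Files in a container are stored on disk only temporarily, which causes problems for non-trivial applications running in containers. First, when a container crashes, the kubelet restarts it, but the files in the container are lost because the container is rebuilt from a clean state. Second, when several containers run together in one Pod, they often need to share files. Kubernetes provides the Volume abstraction to solve both problems

- A Kubernetes volume has an explicit lifetime, the same as the Pod that encloses it. As a result, a volume outlives any container running in the Pod, and data is preserved across container restarts. When the Pod ceases to exist, the volume is destroyed too (this holds for ephemeral volumes such as emptyDir; PersistentVolumes, covered below, outlive the Pod). Perhaps more importantly, Kubernetes supports many volume types, and a Pod can use any number of them at the same time

- Volumes cannot be mounted inside other volumes, nor can they have hard links to other volumes. Each container in a Pod must independently specify where to mount each volume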

Kubernetes supports the following volume types:

- awsElasticBlockStore, azureDisk, azureFile, cephfs, cinder, configMap, csi

- downwardAPI, emptyDir, fc (fibre channel), flexVolume, flocker

- gcePersistentDisk, gitRepo (deprecated), glusterfs, hostPath, iscsi, local

- nfs, persistentVolumeClaim, projected, portworxVolume, quobyte, rbd

- scaleIO, secret, storageos, vsphereVolume

emptyDir volume

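An emptyDir volume is first created when a Pod is assigned to a node, and it exists as long as the Pod keeps running on that node. As the name says, the volume is initially empty. The containers in the Pod may mount the emptyDir volume at the same path or at different paths, but they all read and write the same files in it. When the Pod is removed from the node for any reason, the data in the emptyDir is deleted permanently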

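Use cases for emptyDir:

- Scratch space, for example for a disk-based merge sort

- Checkpointing a long computation so that it can easily resume from its pre-crash state

- Holding files that a content-manager container fetches while a web-server container serves the data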

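By default, an emptyDir volume is stored on whatever medium backs the node: disk, SSD, or network storage, depending on your environment. However, you can set the emptyDir.medium field to "Memory" to tell Kubernetes to mount a tmpfs (RAM-backed filesystem) for you. tmpfs is very fast, but unlike disk it is cleared on node reboot, and every file you write counts toward the container's memory consumption and is subject to the container's memory limit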
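As a minimal sketch of the default case, assuming a public `busybox:1.36` image and a hypothetical Pod name `scratch-demo`, an emptyDir that leaves `medium` unset is stored on the node's own storage rather than in tmpfs, while `sizeLimit` still caps how much the Pod may write:

```yaml
# Sketch: a disk-backed emptyDir (medium omitted, so the node's default storage is used).
# The Pod name "scratch-demo" and the busybox:1.36 image are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: scratch-demo
spec:
  containers:
  - name: worker
    image: busybox:1.36
    # Write a checkpoint file into the scratch volume, then stay alive for inspection.
    command: ["sh", "-c", "echo checkpoint > /scratch/state && sleep 3600"]
    volumeMounts:
    - name: scratch
      mountPath: /scratch
  volumes:
  - name: scratch
    emptyDir:
      # No "medium" field: data lives on the node's disk, not in tmpfs.
      sizeLimit: 500Mi
```

If the Pod writes more than `sizeLimit`, the kubelet should evict it at its next periodic check, the same behavior this post observes for the memory-backed example.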

emptyDir example:

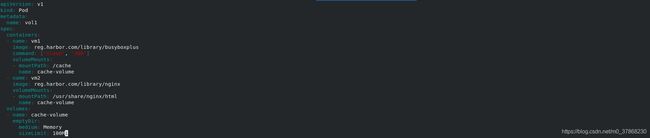

vim emptyDir_demo.yaml

###

apiVersion: v1
kind: Pod
metadata:
  name: vol1
spec:
  containers:
  - name: vm1
    image: reg.harbor.com/library/busyboxplus
    command: ["sleep", "300"]
    volumeMounts:
    - mountPath: /cache
      name: cache-volume
  - name: vm2
    image: reg.harbor.com/library/nginx
    volumeMounts:
    # same volume as vm1, mounted at a different path
    - mountPath: /usr/share/nginx/html
      name: cache-volume
  volumes:
  - name: cache-volume
    emptyDir:
      # back the volume with tmpfs (RAM) instead of node disk
      medium: Memory
      # the kubelet evicts the Pod if usage exceeds this limit
      sizeLimit: 100Mi

###

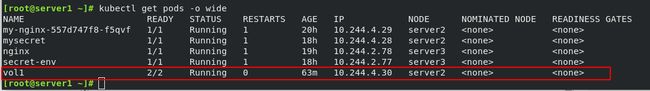

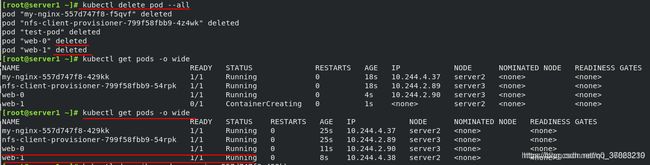

kubectl create -f emptyDir_demo.yaml

kubectl get pods -o wide

kubectl exec vol1 -it -- /bin/sh

Once the files exceed sizeLimit, the kubelet evicts the Pod after a short while (1-2 minutes). The eviction is not "immediate" because the kubelet checks usage periodically, so there is a time lag

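Drawbacks of emptyDir:

- Memory use cannot be stopped in time: the kubelet evicts the Pod after 1-2 minutes, but during that window the node is still at risk

- It interferes with Kubernetes scheduling: emptyDir is not accounted for in the node's resources, so the Pod can "secretly" use node memory without the scheduler knowing

- Users are not notified promptly that memory has become unavailable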
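One common mitigation for the first two drawbacks, sketched here with assumed names (`tmpfs-bounded`, `busybox:1.36`) and sizes, is to give the container that writes to a memory-backed emptyDir explicit memory requests and limits. Because tmpfs pages count against the writing container's memory limit, the kernel enforces the cap right away (typically by OOM-killing the container) instead of waiting for the kubelet's periodic check, and the request lets the scheduler reserve that memory on the node:

```yaml
# Sketch: bound a memory-backed emptyDir with container memory requests/limits.
# Pod/container names, the image, and all sizes are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: tmpfs-bounded
spec:
  containers:
  - name: writer
    image: busybox:1.36
    command: ["sleep", "3600"]
    resources:
      requests:
        memory: "128Mi"   # reserved by the scheduler on the chosen node
      limits:
        memory: "128Mi"   # tmpfs pages written by this container count against this limit
    volumeMounts:
    - name: cache-volume
      mountPath: /cache
  volumes:
  - name: cache-volume
    emptyDir:
      medium: Memory
      sizeLimit: 100Mi
```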

hostPath volume

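A hostPath volume mounts a file or directory from the host node's filesystem into your Pod. Most Pods do not need this, but it offers a powerful escape hatch for some applications.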

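Common uses of hostPath:

- Running a container that needs access to Docker engine internals: mount the /var/lib/docker path

- Running cAdvisor in a container: mount /sys as a hostPath

- Letting a Pod specify whether a given hostPath should exist before the Pod runs, whether it should be created, and in what form it should exist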

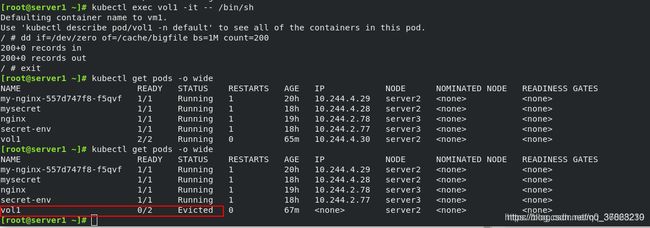

Besides the required path property, you can optionally specify a type for a hostPath volume

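Be careful when using this type of volume:

- Pods with identical configuration (for example, created from a podTemplate) may behave differently on different nodes because the files on those nodes differ

- When Kubernetes adds resource-aware scheduling, as planned, that scheduling will not be able to account for resources used by a hostPath

- Files or directories created on the underlying host are writable only by root. You either need to run your process as root in a privileged container, or change the file permissions on the host so that the container can write to the hostPath volume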
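The sketch below uses hypothetical Pod and container names and an assumed `busybox:1.36` image. It shows two ways to limit the risks listed above. First, the host's /sys is mounted `readOnly`. Second, `type: Directory` makes the mount fail unless the path already exists as a directory on the node. A comment lists the other `type` values Kubernetes accepts:

```yaml
# Sketch: mount the host's /sys read-only, as a monitoring agent such as cAdvisor might.
# Pod/container names and the busybox:1.36 image are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-sys-demo
spec:
  containers:
  - name: reader
    image: busybox:1.36
    command: ["sleep", "3600"]
    volumeMounts:
    - name: host-sys
      mountPath: /host/sys
      readOnly: true          # the container cannot modify the host's /sys
  volumes:
  - name: host-sys
    hostPath:
      path: /sys
      # Accepted type values: "" (no check), DirectoryOrCreate, Directory,
      # FileOrCreate, File, Socket, CharDevice, BlockDevice
      type: Directory         # fail the mount unless /sys already exists as a directory
```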

hostPath example:

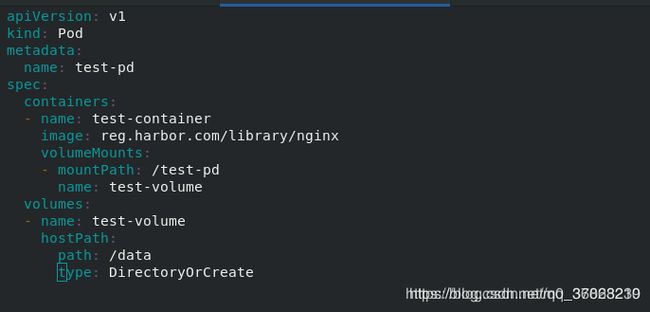

vim hostPath_demo.yaml

###

apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - name: test-container
    image: reg.harbor.com/library/nginx
    volumeMounts:
    - mountPath: /test-pd
      name: test-volume
  volumes:
  - name: test-volume
    hostPath:
      # directory location on the host
      path: /data
      # create the directory on the host if it does not already exist
      type: DirectoryOrCreate

###

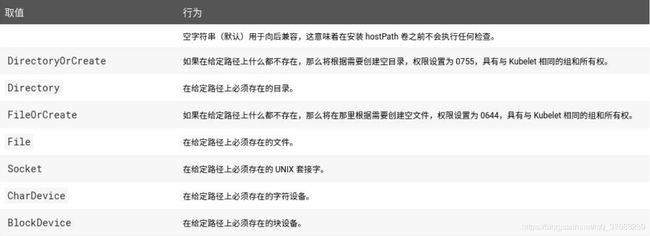

kubectl create -f hostPath_demo.yaml

kubectl get pod -o wide

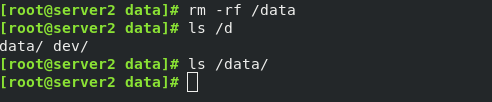

The Pod is running on node server2. Checking on that node shows that the /data directory, which did not exist before, has been created

NFS volume

vim nfs_demo.yaml

###

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: reg.harbor.com/library/nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      readOnly: false
      name: nginx-data
  volumes:
  - name: nginx-data
    nfs:
      server: 192.168.1.103
      path: "/nfsdata"

###

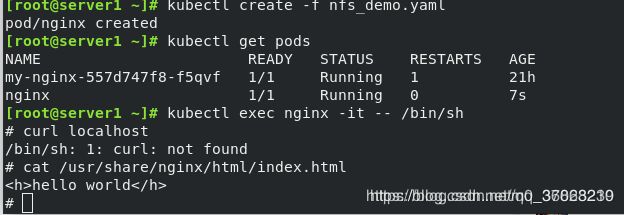

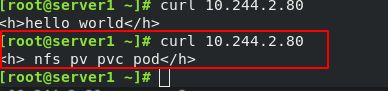

Here I had already deployed NFS on server3 (192.168.1.23), exporting the /nfsdata directory (which contains an index.html file with the content hello world)

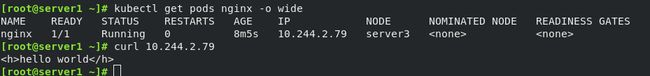

Test access

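- A PersistentVolume (PV) is a piece of networked storage in the cluster that an administrator has provisioned. Just as a node is a cluster resource, a PV is also a resource in the cluster. Like a Volume, it is a volume plugin, but its lifecycle is independent of any Pod that uses it. The PV API object captures the implementation details of NFS, iSCSI, or other cloud storage systems

- A PersistentVolumeClaim (PVC) is a user's request for storage. It is analogous to a Pod: Pods consume node resources, and PVCs consume PV resources. A Pod can request specific resources (such as CPU and memory); a PVC can request a specific size and access modes (for example, mounted read-write once or read-only many times)

- PVs can be provisioned in two ways, statically or dynamically:

- Static PV: the cluster administrator creates a number of PVs that carry the details of the real storage available to cluster users. They exist in the Kubernetes API and are available for consumption

- Dynamic PV: when none of the static PVs created by the administrator matches a user's PVC, the cluster may try to provision a volume specifically for that PVC. This provisioning is based on StorageClasses

- PVC-to-PV binding is a one-to-one mapping. If no matching PV is found, the PVC remains unbound indefinitely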
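For the static case, a claim can be restricted to pre-created PVs. In this sketch the claim name `static-claim` and the label `tier: static` are hypothetical. Setting `storageClassName` to the empty string disables dynamic provisioning, and the `selector` narrows the candidates to PVs that carry that label. If no such PV exists, the claim stays unbound (Pending):

```yaml
# Sketch: a claim that can only bind to a statically provisioned PV.
# The claim name and the "tier: static" label are hypothetical.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-claim
spec:
  storageClassName: ""     # empty string: no dynamic provisioning, only class-less PVs match
  selector:
    matchLabels:
      tier: static         # only PVs carrying this label are binding candidates
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```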

Using

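A Pod uses a PVC just as it uses a volume. The cluster inspects the PVC, finds the bound PV, and mounts that PV for the Pod. For PVs that support multiple access modes, the user can specify which mode to use. Once a user has a PVC and it is bound, the PV belongs to that user for as long as they need it. Users schedule Pods and access their PVs by including the PVC in the Pod's volumes block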

Releasing

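When users are done with a PV, they can delete the PVC object through the API. Once the PVC is deleted, the corresponding PV is considered "released", but it is not yet available to another PVC: the previous claimant's data is still on the volume and must be handled according to the reclaim policy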

Reclaiming

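A PV's reclaim policy tells the cluster what to do with the PV after it has been released. Currently, a PV can be Retained, Recycled, or Deleted. Retain allows the resource to be reclaimed manually. For volume types that support deletion, Delete removes both the PV object from Kubernetes and the associated external storage asset (such as an AWS EBS, GCE PD, Azure Disk, or Cinder volume). Dynamically provisioned volumes inherit the reclaim policy of their StorageClass, which defaults to Delete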

NFS PV example:

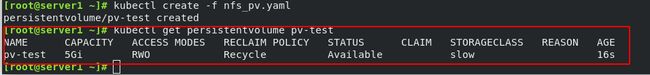

vim nfs_pv.yaml

###

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-test
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: slow
  mountOptions:
  - hard
  - nfsvers=4.1
  nfs:
    path: /nfs
    server: 192.168.1.103

###

Access modes

- ReadWriteOnce: the volume can be mounted read-write by a single node

- ReadOnlyMany: the volume can be mounted read-only by many nodes

- ReadWriteMany: the volume can be mounted read-write by many nodes

On the command line, the access modes are abbreviated as:

RWO - ReadWriteOnce

ROX - ReadOnlyMany

RWX - ReadWriteMany
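As an illustration, a PV can advertise several access modes at once. This sketch uses a hypothetical PV name and reuses the NFS server and path from the examples in this post. A claim can bind to the PV only if the PV supports every mode the claim requests:

```yaml
# Sketch: an NFS PV advertising all three access modes NFS supports.
# The PV name is hypothetical; server and path reuse this post's values.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-shared
spec:
  capacity:
    storage: 1Gi
  accessModes:      # a claim binds only if every mode it requests is listed here
  - ReadWriteOnce   # RWO
  - ReadOnlyMany    # ROX
  - ReadWriteMany   # RWX
  nfs:
    server: 192.168.1.103
    path: /nfsdata
```

Even when a PV advertises several modes, a given volume is mounted with only one access mode at a time.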

Reclaim policies

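- Retain: keep the volume; it must be reclaimed manually

- Recycle: reclaim automatically by deleting the data on the volume

- Delete: the associated storage asset, such as an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume, is deleted

Currently, only NFS and HostPath support recycling (and the Recycle policy itself is deprecated in favor of dynamic provisioning). AWS EBS, GCE PD, Azure Disk, and OpenStack Cinder volumes support deletion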
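Because Recycle is deprecated, a PV that should keep its data is usually declared with Retain instead. The sketch below uses a hypothetical PV name and reuses this post's NFS server, path, and `nfs` class:

```yaml
# Sketch: keep the data after the claim is deleted by using Retain instead of Recycle.
# The PV name is hypothetical; server, path, and class reuse this post's values.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-retain
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # PV moves to Released and keeps its data
  storageClassName: nfs
  nfs:
    server: 192.168.1.103
    path: /nfsdata
```

After its claim is deleted, such a PV stays Released. An administrator cleans up the storage and then either deletes and re-creates the PV or clears its `spec.claimRef` so that it becomes Available again.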

Phases

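- Available: a free resource that is not yet bound to a PVC

- Bound: bound to a PVC

- Released: the PVC has been deleted, but the cluster has not yet reclaimed the PV

- Failed: automatic reclamation of the PV failed

- The command line shows the name of the PVC bound to each PV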

Hands-on: NFS persistent storage

- Install and configure the NFS service

yum install -y nfs-utils

systemctl start nfs-server

mkdir -m 777 /nfsdata

vim /etc/exports

exportfs -rv

- Create the NFS PV

vim pv1.yaml

###

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv1
spec:
  capacity:
    storage: 1Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /nfsdata
    server: 192.168.1.103

###

kubectl create -f pv1.yaml

kubectl get pv

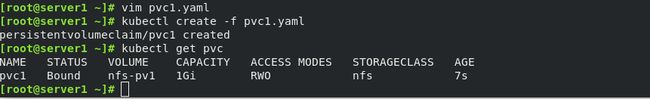

- Create the PVC

vim pvc1.yaml

###

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  # must match the PV's storageClassName for the claim to bind to it
  storageClassName: nfs
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

###

kubectl create -f pvc1.yaml

kubectl get pvc

- Mount the PV in a Pod

vim pod-pv.yaml

###

apiVersion: v1
kind: Pod
metadata:
  name: test-pd
spec:
  containers:
  - name: nginx
    image: reg.harbor.com/library/nginx
    volumeMounts:
    - mountPath: /usr/share/nginx/html
      name: pv1
  volumes:
  - name: pv1
    persistentVolumeClaim:
      claimName: pvc1

###

kubectl create -f pod-pv.yaml

kubectl get pods test-pd -o wide

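Accessing the Pod with curl shows that the page served is the one from the NFS share: the NFS export backs the PV, and the PV is mounted at the nginx document root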

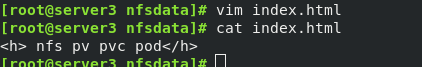

- Modify the file in the NFS shared directory

Access the page again

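- A StorageClass provides a way to describe the "classes" of storage on offer. Different classes might map to different quality-of-service levels, backup policies, or other policies

- Each StorageClass contains the provisioner, parameters, and reclaimPolicy fields, which are used when the StorageClass needs to dynamically provision a PersistentVolume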

StorageClass attributes

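- Provisioner: decides which volume plugin is used to provision PVs. This field is required and can name either an internal or an external provisioner. The code for external provisioners lives at kubernetes-incubator/external-storage, which includes NFS, Ceph, and others

- Reclaim Policy: the reclaimPolicy field sets the reclaim policy of the PersistentVolumes the class creates, either Delete or Retain. If unspecified, it defaults to Delete

- For more attributes, see: https://kubernetes.io/zh/docs/concepts/storage/storage-classes/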
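The following sketch shows a class that sets these attributes explicitly. The class name `nfs-retain` is hypothetical, and the provisioner reuses the `huayu.com/nfs` name that the NFS Client Provisioner deployment in this post registers:

```yaml
# Sketch: a StorageClass whose dynamically provisioned PVs are retained.
# The class name is hypothetical; the provisioner name matches this post's deployment.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-retain
provisioner: huayu.com/nfs     # must equal the provisioner's PROVISIONER_NAME
reclaimPolicy: Retain          # keep provisioned PVs after their PVC is deleted (default: Delete)
volumeBindingMode: Immediate   # provision as soon as the PVC is created (the default)
```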

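NFS Client Provisioner is an automatic provisioner. It uses NFS as its storage backend and automatically creates PVs to satisfy PVCs. It does not provide NFS storage itself, so an existing external NFS service is required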

- PVs are provisioned (on the NFS server) as directories named ${namespace}-${pvcName}-${pvName}

- When a PV is reclaimed, its directory is renamed (on the NFS server) to archived-${namespace}-${pvcName}-${pvName}, unless the StorageClass sets archiveOnDelete to "false"

- nfs-client-provisioner source code: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client

NFS dynamic PV provisioning example

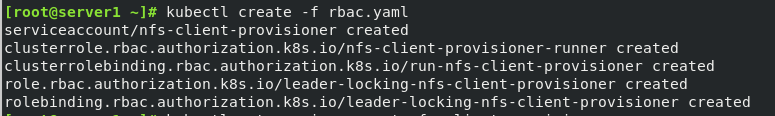

- Configure authorization (RBAC)

vim rbac.yaml

###

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

###

kubectl create -f rbac.yaml

- Deploy the NFS Client Provisioner

vim deployment.yaml

###

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: reg.harbor.com/library/nfs-client-provisioner  # pulled in advance from the Alibaba Cloud registry and pushed to my private Harbor registry
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        # must match the provisioner field of the StorageClass
        - name: PROVISIONER_NAME
          value: huayu.com/nfs
        - name: NFS_SERVER
          value: 192.168.1.23
        - name: NFS_PATH
          value: /nfsdata
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.1.23
          path: "/nfsdata"

###

kubectl create -f deployment.yaml

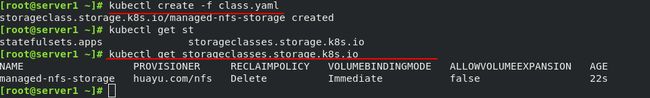

- Create the NFS StorageClass

vim class.yaml

###

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
# must match PROVISIONER_NAME in the provisioner Deployment
provisioner: huayu.com/nfs
parameters:
  archiveOnDelete: "false"

###

archiveOnDelete: "false" means the data is not archived when the PVC is deleted; the provisioned directory is removed instead

kubectl create -f class.yaml

kubectl get storageclasses.storage.k8s.io
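If you would rather keep the data of deleted claims, the same provisioner can archive it instead. The sketch below uses a hypothetical class name. With `archiveOnDelete` set to "true", deleting a PVC should rename its directory on the NFS server with the `archived-` prefix described earlier, rather than remove it:

```yaml
# Sketch: a second class on the same provisioner that archives instead of deleting.
# The class name is hypothetical.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage-archive
provisioner: huayu.com/nfs            # same provisioner as managed-nfs-storage
parameters:
  archiveOnDelete: "true"             # parameter values must be strings, hence the quotes
```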

- Create the PVC

vim test-claim.yaml

###

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-pv1
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 100Mi

###

kubectl create -f test-claim.yaml
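The `volume.beta.kubernetes.io/storage-class` annotation used above is deprecated. Current Kubernetes versions select the class through `spec.storageClassName`. Below is a sketch of the equivalent claim. Use one form or the other, since both declare the same name `nfs-pv1`:

```yaml
# Sketch: the same claim using spec.storageClassName instead of the beta annotation.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-pv1
spec:
  storageClassName: managed-nfs-storage   # replaces volume.beta.kubernetes.io/storage-class
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
```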

- Create a test Pod

vim test-pod.yaml ##mounts the nfs-pv1 claim at /mnt and creates a SUCCESS file in it

###

kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
  - name: test-pod
    image: reg.harbor.com/library/busybox
    command:
    - "/bin/sh"
    args:
    - "-c"
    - "touch /mnt/SUCCESS && exit 0 || exit 1"
    volumeMounts:
    - name: nfs-pvc
      mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
  - name: nfs-pvc
    persistentVolumeClaim:
      claimName: nfs-pv1

###

kubectl create -f test-pod.yaml

- Check on the NFS server

ls default-nfs-pv1-pvc-82c2aa9e-ae0b-424a-b245-6da8f359524e/


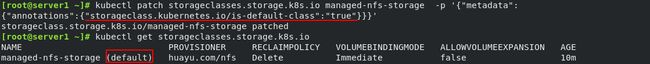

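The default StorageClass is used to dynamically provision storage for PersistentVolumeClaims that do not request a particular storage class (only one StorageClass should be marked as the default):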

- If there is no default StorageClass and the PVC does not set storageClassName, the PVC can only bind to PVs that have no storageClassName either

- Set the StorageClass as the default

kubectl patch storageclasses.storage.k8s.io managed-nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
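The default can also be declared when the class is created. This sketch assumes the same `managed-nfs-storage` class from this post. With the annotation set, PVCs that omit `storageClassName` are assigned this class automatically by the DefaultStorageClass admission plugin, which is enabled by default:

```yaml
# Sketch: managed-nfs-storage declared as the cluster default at creation time.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"   # same effect as the kubectl patch
provisioner: huayu.com/nfs
parameters:
  archiveOnDelete: "false"
```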

StatefulSet

A StatefulSet maintains the topology state of its Pods through a Headless Service

Create the headless Service

vim headless.yaml

###

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  # clusterIP: None makes this a headless Service
  clusterIP: None
  selector:
    app: nginx

###

The StatefulSet controller

vim stateful.yaml

###

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  # name of the headless Service that governs this StatefulSet
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: reg.harbor.com/library/nginx
        ports:
        - containerPort: 80
          name: web

###

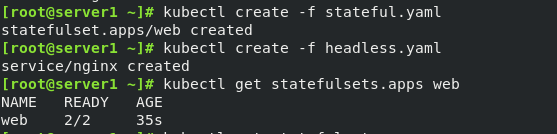

kubectl create -f stateful.yaml

kubectl create -f headless.yaml

kubectl get statefulsets.apps

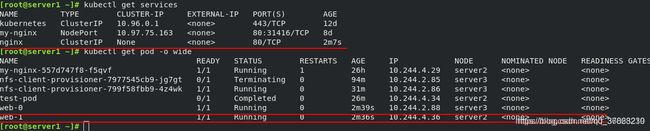

kubectl get services

kubectl get pod -o wide

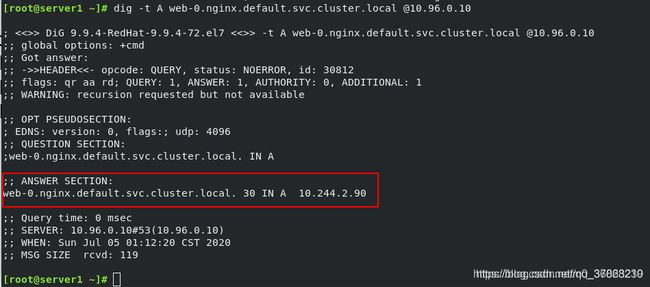

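- Topology state: the application's instances must start in a certain order, and a re-created Pod must keep the same network identity as the original Pod

- Storage state: each instance of the application is bound to its own storage data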

The StatefulSet numbers all of its Pods using the rule $(statefulset name)-$(ordinal), starting from 0

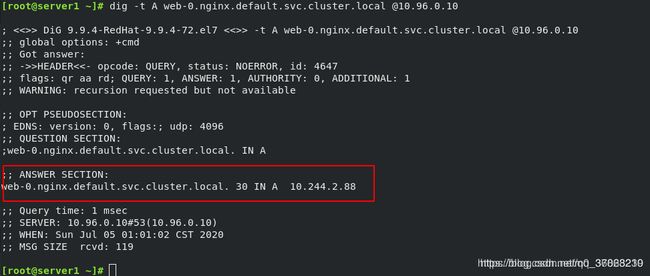

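When a Pod is deleted and re-created, its network identity does not change. The StatefulSet pins each Pod's topology state to its "name + ordinal" and gives every Pod a fixed, unique access entry point: the Pod's DNS record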

dig -t A web-0.nginx.default.svc.cluster.local @10.96.0.10

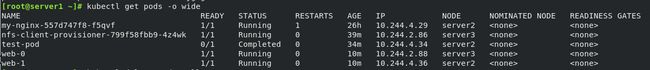

kubectl get pods -o wide

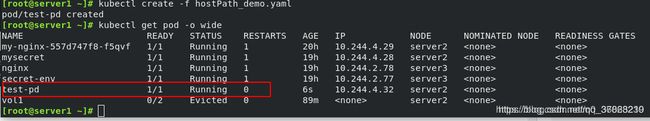

kubectl delete pod --all

kubectl get pods -o wide

dig -t A web-0.nginx.default.svc.cluster.local @10.96.0.10

The PV and PVC design is what makes it possible for a StatefulSet to manage storage state

vim statefulset.yaml

###

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  # must name an existing headless Service
  serviceName: "nginx-svc"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: reg.harbor.com/library/nginx
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  # one PVC per Pod is created from this template
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      storageClassName: managed-nfs-storage
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

###

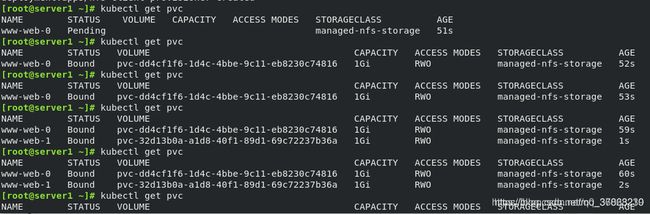

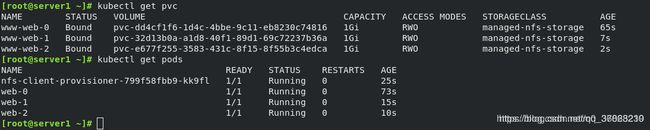

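Pods are also created strictly in ordinal order. For example, web-1 stays Pending until web-0 is Running and its Ready condition is true

The StatefulSet also allocates and creates a PVC with the same ordinal for each Pod. Kubernetes can then bind a matching PV to that PVC through the PersistentVolume mechanism, which guarantees that every Pod has its own independent Volume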
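When ordered start-up is not required, the ordering can be relaxed. The fragment below shows only the relevant fields of a StatefulSet `spec` and is a sketch. `podManagementPolicy: Parallel` launches and terminates Pods in parallel instead of using the default `OrderedReady` policy. The field must be set when the StatefulSet is created, because it cannot be changed afterwards. The PVCs created from `volumeClaimTemplates` are named `<template name>-<StatefulSet name>-<ordinal>`, for example `www-web-0` for the manifest above:

```yaml
# StatefulSet spec fragment (apps/v1); the remaining fields are as in statefulset.yaml
spec:
  podManagementPolicy: Parallel   # default OrderedReady: web-1 waits for web-0 to be Running and Ready
  serviceName: "nginx-svc"
  replicas: 3
```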

Scaling with kubectl

First, before scaling a StatefulSet, confirm that the application can actually be scaled

kubectl get statefulsets

Change the number of replicas of the StatefulSet

kubectl scale statefulsets <stateful-set-name> --replicas=<new-replicas>

**If the StatefulSet was originally created with kubectl apply or kubectl create --save-config, update .spec.replicas in the StatefulSet manifest, then run kubectl apply**

kubectl apply -f <stateful-set-file-updated>

You can also edit the field with kubectl edit

kubectl edit statefulsets <stateful-set-name>

Or use kubectl patch

kubectl patch statefulsets <stateful-set-name> -p '{"spec":{"replicas":<new-replicas>}}'
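Scaling down removes Pods starting from the highest ordinal. By default the PVCs of removed Pods are kept, so their data is reattached if you scale back up. On clusters where the StatefulSet PVC retention policy is available (the `StatefulSetAutoDeletePVC` feature, beta and enabled by default since v1.27), this behavior can be changed. A sketch of the relevant fragment:

```yaml
# StatefulSet spec fragment (apps/v1); requires persistentVolumeClaimRetentionPolicy support
spec:
  replicas: 2
  persistentVolumeClaimRetentionPolicy:
    whenScaled: Delete    # delete the PVCs of Pods removed by a scale-down (default: Retain)
    whenDeleted: Retain   # keep all PVCs when the StatefulSet itself is deleted (default: Retain)
```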

Deploying a MySQL primary/replica cluster with a StatefulSet

Deployment guide: https://kubernetes.io/zh/docs/tasks/run-application/run-replicated-stateful-application/