Kettle 7.0: loading data into HBase 1.2.7 (test)

Get started

0. Official tutorial

http://wiki.pentaho.com/display/BAD/Loading+Data+into+HBase

1. Connecting Kettle to Hadoop

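Each Kettle release is built against specific Hadoop versions. By default, Kettle 7.0 corresponds to Hadoop 2.4. The available versions (Hadoop shims) are located under data-integration\plugins\pentaho-big-data-plugin\hadoop-configurations. Although the default shim targets 2.4, I was also able to use it to connect to a Hadoop 2.7 cluster.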
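To see which shims are installed and which one is active, a small standalone Java sketch can list the subdirectories of hadoop-configurations and read the plugin's properties file. It assumes the active shim is selected by the `active.hadoop.configuration` key in data-integration\plugins\pentaho-big-data-plugin\plugin.properties; the class name `ShimInfo` is hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

public class ShimInfo {

    /** Lists the shim directory names under <pluginDir>/hadoop-configurations, sorted. */
    static List<String> listShims(Path pluginDir) throws IOException {
        List<String> shims = new ArrayList<>();
        Path configs = pluginDir.resolve("hadoop-configurations");
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(configs)) {
            for (Path p : stream) {
                if (Files.isDirectory(p)) {
                    shims.add(p.getFileName().toString());
                }
            }
        }
        Collections.sort(shims);
        return shims;
    }

    /** Reads the active shim name (assumed key: active.hadoop.configuration) from plugin.properties. */
    static String activeShim(Path pluginDir) throws IOException {
        Properties props = new Properties();
        try (Reader r = Files.newBufferedReader(pluginDir.resolve("plugin.properties"))) {
            props.load(r);
        }
        return props.getProperty("active.hadoop.configuration", "").trim();
    }

    public static void main(String[] args) throws IOException {
        // Defaults to running from the data-integration directory; pass another plugin path as args[0].
        Path pluginDir = Paths.get(args.length > 0 ? args[0] : "plugins/pentaho-big-data-plugin");
        System.out.println("Available shims: " + listShims(pluginDir));
        System.out.println("Active shim: " + activeShim(pluginDir));
    }
}
```

If the active shim is changed in plugin.properties, Spoon typically has to be restarted before the new shim is picked up.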

Reference: http://www.voidcn.com/blog/BrotherDong90/article/p-3851664.html

2. Connecting Kettle to HBase

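Note that Hadoop must be configured first; only then can Kettle connect to HBase. In the HBase configuration, you can connect to HBase directly through the Hadoop cluster configuration, which already contains the ZooKeeper settings, so hbase-site.xml does not need to be configured. This only works if Hadoop and HBase use the same ZooKeeper; if they use different ones, it will not work. In practice, a single dedicated ZooKeeper ensemble usually manages both HBase and Hadoop.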
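Because reusing the Hadoop cluster configuration only works when the ZooKeeper host and port entered in Kettle match the ones HBase actually uses, one way to check is to read `hbase.zookeeper.quorum` and `hbase.zookeeper.property.clientPort` from the cluster's hbase-site.xml and compare them. Below is a minimal stdlib-only sketch; the class name `ZkQuorumCheck` and its command-line arguments are hypothetical, hosts are compared without any `:port` suffix, and the port falls back to ZooKeeper's conventional 2181 when the property is absent.

```java
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class ZkQuorumCheck {

    /** Parses a Hadoop-style *-site.xml (<property><name/><value/></property>) into a map. */
    static Map<String, String> readSiteXml(InputStream in) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
        Map<String, String> props = new HashMap<>();
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element p = (Element) properties.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() == 0 || values.getLength() == 0) {
                continue; // skip malformed entries
            }
            props.put(names.item(0).getTextContent().trim(), values.item(0).getTextContent().trim());
        }
        return props;
    }

    /** ZooKeeper hosts from the comma-separated quorum, with any ":port" suffix dropped. */
    static List<String> quorumHosts(Map<String, String> props) {
        List<String> hosts = new ArrayList<>();
        for (String h : props.getOrDefault("hbase.zookeeper.quorum", "").split(",")) {
            String host = h.trim().split(":")[0];
            if (!host.isEmpty()) {
                hosts.add(host);
            }
        }
        return hosts;
    }

    /** Client port, defaulting to 2181 when hbase-site.xml does not set it. */
    static int clientPort(Map<String, String> props) {
        return Integer.parseInt(props.getOrDefault("hbase.zookeeper.property.clientPort", "2181"));
    }

    /** True if the ZooKeeper host/port entered in Kettle matches HBase's quorum. */
    static boolean matches(Map<String, String> props, String kettleZkHost, int kettleZkPort) {
        return quorumHosts(props).contains(kettleZkHost) && clientPort(props) == kettleZkPort;
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical usage: java ZkQuorumCheck /path/to/hbase-site.xml zkhost 2181
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            Map<String, String> props = readSiteXml(in);
            System.out.println("HBase quorum: " + quorumHosts(props) + ", port " + clientPort(props));
            System.out.println("Matches Kettle: " + matches(props, args[1], Integer.parseInt(args[2])));
        }
    }
}
```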

Detailed steps: http://www.w2bc.com/article/222479

3. Using Java code in Kettle (User Defined Java Class step)

Generating a UUID or setting custom fields

import java.util.UUID;

public boolean processRow(StepMetaInterface smi, StepDataInterface sdi) throws KettleException {

if (first) {

first = false;

/* TODO: Your code here. (Using info fields)

FieldHelper infoField = get(Fields.Info, "info_field_name");

RowSet infoStream = findInfoRowSet("info_stream_tag");

Object[] infoRow = null;

int infoRowCount = 0;

// Read all rows from info step before calling getRow() method, which returns first row from any

// input rowset. As rowMeta for info and input steps varies getRow() can lead to errors.

while((infoRow = getRowFrom(infoStream)) != null){

// do something with info data

infoRowCount++;

}

*/

}

Object[] r = getRow();

if (r == null) {

setOutputDone();

return false;

}

// It is always safest to call createOutputRow() to ensure that your output row's Object[] is large

// enough to handle any new fields you are creating in this step.

r = createOutputRow(r, data.outputRowMeta.size());

/* TODO: Your code here. (See Sample)

// Get the value from an input field

String foobar = get(Fields.In, "a_fieldname").getString(r);

foobar += "bar";

// Set a value in a new output field

get(Fields.Out, "output_fieldname").setValue(r, foobar);

*/

// Send the row on to the next step.

String s = UUID.randomUUID().toString();

s=s.substring(0,8)+s.substring(9,13)+s.substring(14,18)+s.substring(19,23)+s.substring(24);

String foobar = get(Fields.In, "Region").getString(r);

logBasic(s+":log>>>"+foobar+get(Fields.In, "Province").getString(r));

putRow(data.outputRowMeta, r);

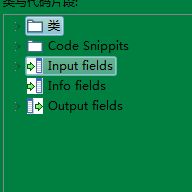

return true;

}注意:如果要使用其他的jar,需要将jar放到kettle对应的lib中 {kettle_home}\data-integration\lib 。然后在代码中引入。kettle提供的代码片段和变量
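The substring chain in the script above only removes the four hyphens from the 36-character UUID string (layout 8-4-4-4-12). A small standalone sketch, with the hypothetical class name `CompactUuid`, checks that it gives the same result as the simpler `String.replace("-", "")`:

```java
import java.util.UUID;

public class CompactUuid {

    /** Same logic as the script: concatenate the five hex groups, skipping hyphens at 8, 13, 18, 23. */
    static String bySubstring(String s) {
        return s.substring(0, 8) + s.substring(9, 13) + s.substring(14, 18)
                + s.substring(19, 23) + s.substring(24);
    }

    /** Equivalent, shorter form. */
    static String byReplace(String s) {
        return s.replace("-", "");
    }

    public static void main(String[] args) {
        String s = UUID.randomUUID().toString();
        System.out.println(s + " -> " + byReplace(s));
        System.out.println("same result: " + bySubstring(s).equals(byReplace(s)));
    }
}
```

Inside the User Defined Java Class step, either form works; `replace` is shorter and does not depend on the hyphen positions.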