Deploying Kubernetes v1.16.2 with kubeadm

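If you want to try out Kubernetes quickly, the project offers several deployment options. One of them is kubeadm, the official tool that runs the Kubernetes cluster components as containers.

kubeadm reached GA with the Kubernetes v1.13 release and can be used in production. The Kubernetes image repositories are now also mirrored on Alibaba Cloud in China, which makes deploying a cluster with kubeadm much simpler and more convenient.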

I. Environment Preparation

| Hostname | IP address | Spec | Description |
|---|---|---|---|
| k8s-node1 | 192.168.0.161 | 2c2g | Kubernetes Master/etcd node |
| k8s-node2 | 192.168.0.162 | 2c2g | Kubernetes worker node |
| k8s-node3 | 192.168.0.163 | 2c2g | Kubernetes worker node |
| Service subnet | 10.10.0.0/16 | | |
| Pod subnet | 10.11.0.0/16 | | |

Operating system: CentOS 7.4

Docker: 18.09.9

kubeadm: 1.16.2

II. System Initialization

Run the following initialization steps on all three hosts.

1. Set the hostname

[root@k8s-node1 ~]# cat /etc/hostname

k8s-node1

[root@k8s-node2 ~]# cat /etc/hostname

k8s-node2

[root@k8s-node3 ~]# cat /etc/hostname

k8s-node3

2. Configure hostname resolution

[root@k8s-node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.161 k8s-node1

192.168.0.162 k8s-node2

192.168.0.163 k8s-node3
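Instead of editing /etc/hosts by hand on each machine, the entries can be appended idempotently. The following is a minimal sketch, assuming the three addresses from the table above. The helper name `add_host_entry` and its optional file argument are hypothetical and exist only for illustration.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]
# Append "IP NAME" to FILE (default: /etc/hosts) unless that exact line exists.
# The function name and the FILE parameter are illustrative assumptions.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -x: match the whole line, -F: fixed string, -q: no output
  if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage (as root, on every host):
#   add_host_entry 192.168.0.161 k8s-node1
#   add_host_entry 192.168.0.162 k8s-node2
#   add_host_entry 192.168.0.163 k8s-node3
```

Running the helper twice leaves the file unchanged, so it is safe to reuse in a provisioning script.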

3. Disable SELinux and the firewall

[root@k8s-node1 ~]# setenforce 0

[root@k8s-node1 ~]# vim /etc/sysconfig/selinux

SELINUX=disabled # change this value to disabled

[root@k8s-node1 ~]# systemctl stop firewalld
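The SELinux edit can also be scripted rather than done in vim. This sketch assumes the stock CentOS 7 layout, where /etc/sysconfig/selinux is a symlink to /etc/selinux/config. It edits the target file directly because `sed -i` replaces a symlink with a regular file unless `--follow-symlinks` is given. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# set_selinux_disabled [FILE]
# Rewrite the SELINUX= line to "disabled" so the setting survives a reboot.
# FILE defaults to /etc/selinux/config (the symlink target on CentOS 7).
set_selinux_disabled() {
  local file="${1:-/etc/selinux/config}"
  # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Usage (as root):
#   setenforce 0            # takes effect immediately
#   set_selinux_disabled    # takes effect on the next boot
```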

4. Disable NetworkManager and the firewall at boot

[root@k8s-node1 ~]# systemctl disable firewalld

[root@k8s-node1 ~]# systemctl disable NetworkManager

5. Set kernel parameters

[root@k8s-node1 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

Apply the configuration:

[root@k8s-node1 ~]# modprobe br_netfilter

[root@k8s-node1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

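A note on why these kernel parameters are needed:

kube-proxy requires the node's operating system to have /sys/module/br_netfilter and to set bridge-nf-call-iptables=1. If these requirements are not met, kube-proxy only logs the failed check and keeps running, but some of the iptables rules it sets up will not work.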
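The two conditions described above can be checked from the shell before kube-proxy ever starts. This is a rough sketch, not what kube-proxy itself runs. The function name and the optional root-prefix argument (handy for testing against a fake directory tree) are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# check_bridge_netfilter [ROOT]
# Return 0 when the br_netfilter module is loaded and bridge-nf-call-iptables
# is 1. ROOT is an optional path prefix (default: empty, i.e. the real /sys
# and /proc); it is a hypothetical parameter added for this sketch.
check_bridge_netfilter() {
  local root="${1:-}"
  local flag="$root/proc/sys/net/bridge/bridge-nf-call-iptables"
  if [ ! -d "$root/sys/module/br_netfilter" ]; then
    echo "br_netfilter module is not loaded" >&2
    return 1
  fi
  if [ "$(cat "$flag" 2>/dev/null)" != "1" ]; then
    echo "net.bridge.bridge-nf-call-iptables is not 1" >&2
    return 1
  fi
  return 0
}

# Usage:
#   check_bridge_netfilter && echo "bridge netfilter settings look fine"
```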

6. Disable swap

By default, the kubelet refuses to run on a host that has swap enabled, so swap must be turned off.

[root@k8s-node1 ~]# swapoff -a

To keep swap from being re-enabled from /etc/fstab after a reboot, also comment out the swap entry in /etc/fstab.
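Commenting out the swap entry can be done with sed. This sketch assumes GNU sed (as shipped with CentOS 7) and an fstab whose swap line has `swap` as a whitespace-separated field. The function name is hypothetical, and a `.bak` copy of the file is kept.

```shell
#!/usr/bin/env bash
# disable_swap_in_fstab [FILE]
# Prefix "#" to every line that is not already a comment and contains "swap"
# as a whitespace-delimited field. A backup is written to FILE.bak.
disable_swap_in_fstab() {
  local file="${1:-/etc/fstab}"
  sed -r -i.bak '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Usage (as root):
#   swapoff -a
#   disable_swap_in_fstab
```

Lines that are already commented are skipped, so running it again does not stack extra `#` characters.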

III. Install Docker

Run the following Docker installation steps on all three hosts.

1. Use a yum mirror in China

[root@k8s-node1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-node1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

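2. List the installable Docker versions

kubeadm has requirements on the Docker version, so install one that matches your Kubernetes release. The supported versions are usually listed in the changelog of each release.

Kubernetes v1.16.2 supports Docker 1.13.1, 17.03, 17.06, 17.09, 18.06, and 18.09.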
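A small guard in a provisioning script can catch an unsupported Docker version early. The sketch below hard-codes the list quoted above for v1.16.2. The function name is hypothetical, and the list would need updating for any other Kubernetes release.

```shell
#!/usr/bin/env bash
# docker_version_supported VERSION
# Return 0 when VERSION belongs to one of the Docker series listed for
# Kubernetes v1.16.2 in this article; return 1 otherwise.
docker_version_supported() {
  case "$1" in
    1.13.1|17.03.*|17.06.*|17.09.*|18.06.*|18.09.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage, once Docker is installed and running:
#   docker_version_supported "$(docker version --format '{{.Server.Version}}')" \
#     || echo "Docker version is outside the validated list" >&2
```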

List the Docker versions available in the yum repository:

[root@k8s-node1 ~]# yum list docker-ce --showduplicates |sort -r

3. Install Docker 18.09

[root@k8s-node1 ~]# yum -y install docker-ce-18.09.9-3.el7 docker-ce-cli-18.09.9-3.el7

4. Set the cgroup driver to systemd

Docker's default cgroup driver is cgroupfs, as docker info shows (the command needs the Docker daemon to be running):

[root@k8s-node1 ~]# docker info | grep Cgroup

Cgroup Driver: cgroupfs

During cluster initialization later, kubeadm recommends systemd as Docker's cgroup driver, so set it to systemd here.

[root@k8s-node1 ~]# mkdir -p /etc/docker

[root@k8s-node1 ~]# cat <<EOF > /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

5. Start Docker

[root@k8s-node1 ~]# systemctl start docker

[root@k8s-node1 ~]# systemctl enable docker

[root@k8s-node1 ~]# systemctl status docker

6. Check the Docker server version

[root@k8s-node1 ~]# docker info | grep 'Server Version'

Server Version: 18.09.9
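With the daemon running, it is also worth confirming that the cgroup driver from daemon.json took effect. The helper below is an illustrative sketch that parses the text output of `docker info`, in the same format as shown earlier. Its name is an assumption.

```shell
#!/usr/bin/env bash
# cgroup_driver_from_info
# Read `docker info` output on stdin and print the value of "Cgroup Driver".
cgroup_driver_from_info() {
  awk -F': *' '/Cgroup Driver/ {print $2; exit}'
}

# Usage:
#   driver=$(docker info 2>/dev/null | cgroup_driver_from_info)
#   [ "$driver" = "systemd" ] || echo "cgroup driver is '$driver', expected systemd" >&2
```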

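IV. Install kubeadm and kubelet

After Docker is installed on every node of the cluster, kubeadm and kubelet must also be installed on all three hosts.

1. Configure the Kubernetes yum repository

Use the Alibaba Cloud yum mirror in China:

[root@k8s-node1 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo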

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

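Note: the two URLs on the final gpgkey line are separated by a single space; typesetting may wrap them onto separate lines.

2. Install

Because releases are frequent, version 1.16.2 is pinned here. If no version is specified, the latest version is installed.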

[root@k8s-node1 ~]# yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2 ipvsadm

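3. Start kubelet

Note that kubelet cannot start properly at this point. The errors it writes to /var/log/messages are expected, and it will run normally once the cluster has been initialized.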

[root@k8s-node1 ~]# systemctl enable kubelet && systemctl start kubelet

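4. Use IPVS for load balancing

In a Kubernetes cluster, the kube-proxy component provides load balancing. It uses iptables by default; IPVS is recommended for production. Enable the IPVS kernel modules on every node.

[root@k8s-node1 ~]# cat <<EOF > /etc/sysconfig/modules/ipvs.modules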

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

[root@k8s-node1 ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

[root@k8s-node1 ~]# source /etc/sysconfig/modules/ipvs.modules

Check that the modules are loaded:

[root@k8s-node1 ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 4

ip_vs 141092 10 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack_ipv4 15053 4

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

nf_conntrack 133387 7 ip_vs,nf_nat,nf_nat_ipv4,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack
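Reading the lsmod listing by eye is error-prone across three hosts. The sketch below reports which of the five modules from ipvs.modules are absent. The function name is hypothetical. The module list matches the CentOS 7 (3.10) kernel used here; on kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack, so the list would need adjusting.

```shell
#!/usr/bin/env bash
# missing_ipvs_modules
# Read `lsmod` output on stdin and print each required module that is not
# loaded, one per line. Prints nothing when all modules are present.
missing_ipvs_modules() {
  local loaded mod
  loaded=$(awk '{print $1}')   # first column is the module name
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
  done
}

# Usage:
#   lsmod | missing_ipvs_modules
```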

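V. Initialize the Cluster and Deploy the Master

Perform the cluster initialization on the master node, k8s-node1.

1. Generate the default configuration file

First generate a default kubeadm configuration file: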

[root@k8s-node1 ~]# kubeadm config print init-defaults > kubeadm.yaml

Then modify this default configuration to fit your environment.

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.161 # change to the API Server address
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-node1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # change to the Alibaba Cloud image repository
kind: ClusterConfiguration
kubernetesVersion: v1.16.2 # change to the exact version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.10.0.0/16 # change to the Service subnet
  podSubnet: 10.11.0.0/16 # add the Pod subnet
scheduler: {}
--- # the three lines added below switch kube-proxy to IPVS (LVS) mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

2. Run the initialization

[root@k8s-node1 ~]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.16.2

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-node1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.10.0.1 192.168.0.161]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-node1 localhost] and IPs [192.168.0.161 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-node1 localhost] and IPs [192.168.0.161 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 17.503048 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.16" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-node1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-node1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.161:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:958c1effa4a3f47e9ef0e1553cac09f95057d072c224352be653352e16cfc05b

Note: the last command in the output is the one to run later on each worker node to join it to the cluster.
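If the init output is lost, the `--discovery-token-ca-cert-hash` value can be recomputed from the cluster CA certificate. The sketch below wraps the openssl pipeline given in the kubeadm documentation. It assumes openssl is installed and that the CA uses an RSA key, which is the kubeadm default. The function name is hypothetical. Because the bootstrap token in the configuration above has a TTL of 24 hours, `kubeadm token create --print-join-command` can be used on the master later to print a fresh join command.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CERT]
# Print the SHA-256 hash (hex) of the CA certificate's public key, i.e. the
# part that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage (on the master):
#   echo "sha256:$(ca_cert_hash)"
```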

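3. Prepare the kubeconfig file for kubectl

By default, kubectl looks for a file named config in the .kube directory under the home directory of the user running it. Here, the admin.conf generated during the [kubeconfig] phase of initialization is copied to .kube/config. These are the same commands that the initialization output suggests.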

[root@k8s-node1 ~]# mkdir -p $HOME/.kube

[root@k8s-node1 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-node1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

This configuration file records the API Server address, so subsequent kubectl commands can connect to the API Server directly.

4. Check the node status

[root@k8s-node1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node1 NotReady master 14m v1.16.2

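There is only one node for now; its role is master and its status is NotReady.

VI. Deploy the Network Plugin

The master node is NotReady because no network plugin has been installed yet, so networking between the nodes and the master is not working properly. The most popular Kubernetes network plugins at present include Flannel, Calico, and Canal; Flannel is used here. Because the base cluster is already configured, nearly all later operations, such as adding components, can be done with kubectl and a YAML file.

Deploying Flannel requires changing its Pod IP range to match the one used at initialization. Download the Flannel YAML file first, edit it, and then apply it.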

[root@k8s-node1 ~]# git clone --depth 1 https://github.com/coreos/flannel.git

[root@k8s-node1 ~]# cd flannel/Documentation/

[root@k8s-node1 Documentation]# vim kube-flannel.yml

# Change "Network": "10.244.0.0/16" to "Network": "10.11.0.0/16"
  net-conf.json: |
    {
      "Network": "10.11.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

# Pulling the Flannel image can be slow; it can be replaced with a mirror in China
#image: quay.io/coreos/flannel:v0.11.0-amd64
image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64

# sed can do the replacement in bulk:
#[root@k8s-node1 Documentation]# sed -i 's/quay\.io/quay-mirror.qiniu.com/g' kube-flannel.yml

# If a node has multiple network interfaces, use --iface to select the one to use.
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=eth0
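Before applying the manifest, it can help to confirm that the Flannel network really matches the podSubnet used at initialization, since a mismatch is easy to miss. This is a minimal sketch under the assumption that both files have the layout shown in this article. The function names are hypothetical and the parsing is plain text matching, not a YAML parser.

```shell
#!/usr/bin/env bash
# pod_subnet_from_kubeadm FILE: print the podSubnet value from kubeadm.yaml.
pod_subnet_from_kubeadm() {
  awk '$1 == "podSubnet:" {print $2; exit}' "$1"
}

# flannel_network FILE: print the CIDR from the first line of the form
#   "Network": "10.11.0.0/16",
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# subnets_match KUBEADM_YAML FLANNEL_YAML: return 0 when both values are
# present and identical.
subnets_match() {
  local pod net
  pod=$(pod_subnet_from_kubeadm "$1")
  net=$(flannel_network "$2")
  [ -n "$pod" ] && [ "$pod" = "$net" ]
}

# Usage:
#   subnets_match ~/kubeadm.yaml kube-flannel.yml || echo "Pod subnet mismatch" >&2
```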

Deploy Flannel:

[root@k8s-node1 Documentation]# kubectl create -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

Check the node status again; the node is now Ready.

[root@k8s-node1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready master 40m v1.16.2

VII. Join the Worker Nodes to the Cluster

With the master deployed, the worker nodes can now join the cluster.

Run the following command on both node2 and node3:

[root@k8s-node2 ~]# kubeadm join 192.168.0.161:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:958c1effa4a3f47e9ef0e1553cac09f95057d072c224352be653352e16cfc05b

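Kubernetes then uses DaemonSets to deploy flannel and kube-proxy on every node. Once those Pods are running, the node deployment is complete.

After the nodes have joined, check their status from the master: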

[root@k8s-node1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready master 49m v1.16.2

k8s-node2 Ready <none> 2m3s v1.16.2

k8s-node3 NotReady <none> 39s v1.16.2

VIII. Test the Kubernetes Cluster

1. Create a single-Pod Nginx application

[root@k8s-node1 ~]# kubectl create deployment nginx --image=nginx:alpine

deployment.apps/nginx created

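2. Add a Service for Nginx

Adding a Service for Nginx creates a cluster IP, allocated from the Service subnet set at initialization (serviceSubnet: 10.10.0.0/16, the equivalent of --service-cidr). The NodePort type additionally maps a port on every node so that the Service can be reached from outside the cluster.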

[root@k8s-node1 ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@k8s-node1 ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-5b6fb6dd96-22gp5 1/1 Running 0 2m54s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.10.0.1 <none> 443/TCP 17h

service/nginx NodePort 10.10.217.155 <none> 80:32117/TCP 109s

3. Access it from a browser

The URL is http://NodeIP:Port; in this example, http://192.168.0.161:32117
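The node port is the number after the colon in the PORT(S) column. As a small illustration, the helper below pulls it out of a value such as `80:32117/TCP`. The function name is hypothetical. On a live cluster, `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly.

```shell
#!/usr/bin/env bash
# node_port_from_ports PORTS
# Given a PORT(S) value like "80:32117/TCP", print the node port ("32117").
node_port_from_ports() {
  local ports="$1"
  ports="${ports#*:}"            # drop the service port and the colon
  printf '%s\n' "${ports%%/*}"   # drop the protocol suffix
}

# Usage:
#   port=$(node_port_from_ports "80:32117/TCP")
#   curl -I "http://192.168.0.161:${port}"
```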

IX. Install the Dashboard

Run the following steps on the master node.

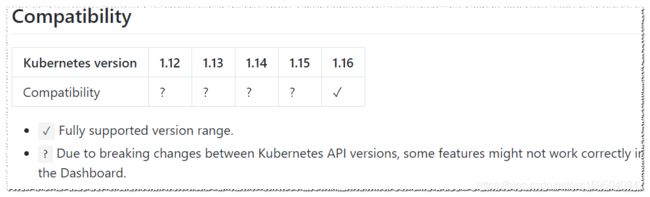

1. Install the Dashboard

Because the installed Kubernetes version is v1.16.2, a compatible Dashboard version must be chosen. Compatibility is listed at https://github.com/kubernetes/dashboard/releases . Dashboard v2.0.0-rc3 is used here.

Then download the YAML file as instructed (from https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc3/aio/deploy/recommended.yaml ) and modify it.

[root@k8s-node1 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc3/aio/deploy/recommended.yaml

[root@k8s-node1 ~]# vim recommended.yaml

# Modified section
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # add this line
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 31001 # add this line
  selector:
    k8s-app: kubernetes-dashboard

[root@k8s-node1 ~]# kubectl apply -f recommended.yaml
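The nodePort value chosen above has to fall inside the API server's NodePort range, which is 30000-32767 unless `--service-node-port-range` has been changed. A quick sanity check, assuming the default range (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# valid_node_port PORT
# Return 0 when PORT is a number within the default NodePort range.
valid_node_port() {
  local port="$1"
  # Reject empty strings and anything containing a non-digit.
  case "$port" in
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$port" -ge 30000 ] && [ "$port" -le 32767 ]
}

# Usage:
#   valid_node_port 31001 && echo "31001 is usable as a nodePort"
```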

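Then open https://NodeIP:31001 in Firefox (Chrome refuses the connection because it does not trust the certificate).

The Dashboard supports two authentication methods, Kubeconfig and Token; Token is used here.

Create dashboard-admin-user.yaml: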

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

[root@k8s-node1 ~]# kubectl apply -f dashboard-admin-user.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

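The manifest above creates a service account named admin-user in the kube-system namespace and binds the cluster-admin role to it, which gives admin-user administrator privileges. The cluster-admin ClusterRole already exists by default in a cluster created by kubeadm, so it only needs to be bound.

View the token: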

[root@k8s-node1 ~]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-nnxl7

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: f7da4fd2-20ec-476e-a243-68333b13313c

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InhrR1ZDUlJLV3FtTUhOUnF6Y0h5Und1SktTLXJ4Ymk2ZFAwQ0hDU25xYkkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLW5ueGw3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJmN2RhNGZkMi0yMGVjLTQ3NmUtYTI0My02ODMzM2IxMzMxM2MiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.kSscDv-AuaqmzfSuK3YuUSQQvWz2Of50ErRzVS3qzaKhF5kz4NTVQYPm-jwPSuxBhBOM9qoJfmCltqJb0KhYWsEQ4PKBK_at32vZO0GMxVVillb4_nW5wgodhIiD7ghAo4-yDKp_sJqGUSVfkUyQg-PXzLq-lz_0_oBdnmcrgbvYvcPRHIAziUHROJXAdmJ_N3Zu__cEuXGPgV78vdRL6b5lbmnvQZp78Zhur55pmGs8wZD2FjGRLYjL5WUJqN1xlCgnyfdNNAe4ikp0omXslgJzBAHhzaBe0F4NJtYJnWFw_4GDxk6v4P2jXPruys-Uzjd8O-S02MhVC9NsAxg5gA

Paste the token into the login page in the browser.
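The token is a JWT, so its middle segment can be decoded to confirm which service account it belongs to before pasting it. The sketch below assumes GNU coreutils `base64` and only decodes the payload; it does not verify the signature. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# jwt_payload TOKEN
# Print the decoded JSON payload (second dot-separated segment) of a JWT.
jwt_payload() {
  local seg pad
  # Take the payload and map base64url characters back to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the "=" padding that base64url omits.
  pad=$(( (4 - ${#seg} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    seg="${seg}="
    pad=$((pad - 1))
  done
  printf '%s' "$seg" | base64 -d
}

# Usage:
#   jwt_payload "<token from the command above>"
```

For the token shown above, the decoded payload should include the kube-system namespace and the admin-user service account name.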

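Note that CPU and memory information is not shown yet, because metrics-server has not been installed.

2. Install metrics-server

Download the metrics-server YAML files from https://github.com/kubernetes/kubernetes/tree/release-1.16/cluster/addons/metrics-server . Because the installed Kubernetes version is 1.16.2, the release-1.16 branch is used.

[root@k8s-node1 ~]# mkdir metrics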

[root@k8s-node1 ~]# cd metrics/

[root@k8s-node1 metrics]# wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.16/cluster/addons/metrics-server/auth-delegator.yaml

[root@k8s-node1 metrics]# wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.16/cluster/addons/metrics-server/auth-reader.yaml

[root@k8s-node1 metrics]# wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.16/cluster/addons/metrics-server/metrics-apiservice.yaml

[root@k8s-node1 metrics]# wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.16/cluster/addons/metrics-server/metrics-server-deployment.yaml

[root@k8s-node1 metrics]# wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.16/cluster/addons/metrics-server/metrics-server-service.yaml

[root@k8s-node1 metrics]# wget https://raw.githubusercontent.com/kubernetes/kubernetes/release-1.16/cluster/addons/metrics-server/resource-reader.yaml

After these six YAML files are downloaded, a few changes are needed.

Modify metrics-server-deployment.yaml:

[root@k8s-node1 metrics]# vim metrics-server-deployment.yaml

image: k8s.gcr.io/metrics-server-amd64:v0.3.6
# change to a mirror in China:
image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6

image: k8s.gcr.io/addon-resizer:1.8.7
# change to a mirror in China:
image: registry.cn-hangzhou.aliyuncs.com/google_containers/addon-resizer:1.8.7

# Change the start-up arguments of the metrics-server container; the result looks like this:
containers:
- name: metrics-server
  image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6
  command:
  - /metrics-server
  - --metric-resolution=30s
  - --kubelet-insecure-tls # do not verify the HTTPS certificate of the kubelet API
  # These are needed for GKE, which doesn't support secure communication yet.
  # Remove these lines for non-GKE clusters, and when GKE supports token-based auth.
  #- --kubelet-port=10255
  #- --deprecated-kubelet-completely-insecure=true
  - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP

# Change the start-up arguments of the metrics-server-nanny container; the result looks like this:
  command:
  - /pod_nanny
  - --config-dir=/etc/config
  - --cpu=80m
  - --extra-cpu=0.5m
  - --memory=80Mi
  - --extra-memory=8Mi
  - --threshold=5
  - --deployment=metrics-server-v0.3.6
  - --container=metrics-server
  - --poll-period=300000
  - --estimator=exponential
  # Specifies the smallest cluster (defined in number of nodes)
  # resources will be scaled to.
  #- --minClusterSize={{ metrics_server_min_cluster_size }}

Modify resource-reader.yaml:

[root@k8s-node1 metrics]# vim resource-reader.yaml

rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats # add this line
  - namespaces

After the changes, create the resources from all the YAML files in the current directory:

[root@k8s-node1 metrics]# kubectl create -f ./

Once the Pod is running, check that the metrics APIService and the top command work:

[root@k8s-node1 metrics]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-58cc8c89f4-4n4dp 1/1 Running 2 2d

coredns-58cc8c89f4-bnm7b 1/1 Running 2 2d

etcd-k8s-node1 1/1 Running 1 2d

kube-apiserver-k8s-node1 1/1 Running 1 2d

kube-controller-manager-k8s-node1 1/1 Running 1 2d

kube-flannel-ds-amd64-4fc4v 1/1 Running 1 47h

kube-flannel-ds-amd64-cbrkl 1/1 Running 3 47h

kube-flannel-ds-amd64-d6j99 1/1 Running 1 47h

kube-proxy-bgh2v 1/1 Running 1 2d

kube-proxy-sb5hd 1/1 Running 1 47h

kube-proxy-tkkqk 1/1 Running 1 47h

kube-scheduler-k8s-node1 1/1 Running 1 2d

metrics-server-v0.3.6-777c6487ff-gqfh7 2/2 Running 0 25m

[root@k8s-node1 metrics]# kubectl get apiservices |grep 'metrics'

v1beta1.metrics.k8s.io kube-system/metrics-server True 26m

[root@k8s-node1 metrics]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-node1 147m 7% 748Mi 43%

k8s-node2 34m 1% 311Mi 18%

k8s-node3 37m 1% 337Mi 19%

Now refresh the Dashboard page.

The CPU and memory metrics are now displayed, and the deployment is complete.
