Kubernetes Cluster in Practice: Volumes Configuration Management (Using the StatefulSet Controller)

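1. StorageClass features and attributes

A StorageClass provides a way to describe "classes" of storage. Different classes may map to different quality-of-service levels, backup policies, or other policies.

Every StorageClass contains the fields provisioner, parameters, and reclaimPolicy, which are used when a PersistentVolume belonging to the class needs to be dynamically provisioned.

StorageClass attributes

Provisioner: determines which volume plugin is used to provision PVs. This field is required. It can name an internal provisioner or an external one. The code for external provisioners lives at kubernetes-incubator/external-storage, which includes NFS and Ceph, among others.

Reclaim Policy: the reclaimPolicy field sets the reclaim policy of the PersistentVolumes created by the class.

The reclaim policy is either Delete or Retain; if none is specified, it defaults to Delete.

More attributes: https://kubernetes.io/zh/docs/concepts/storage/storage-classes/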
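To make the reclaimPolicy field concrete, here is a minimal sketch of a shell helper that prints a StorageClass manifest with an explicit policy. The helper name `storageclass_manifest`, the class name `nfs-retain`, and the provisioner `example.com/nfs` are hypothetical and chosen only for illustration.

```shell
#!/bin/sh
# Print a StorageClass manifest with an explicit reclaimPolicy.
# $1 = class name, $2 = provisioner, $3 = Delete or Retain (defaults to Delete)
storageclass_manifest() {
    policy=${3:-Delete}
    case "$policy" in
        Delete|Retain) ;;
        *) echo "unsupported reclaimPolicy: $policy" >&2; return 1 ;;
    esac
    cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $1
provisioner: $2
reclaimPolicy: $policy
EOF
}

# Example: a hypothetical class that keeps the volume after its claim is deleted.
storageclass_manifest nfs-retain example.com/nfs Retain
```

The printed manifest could be piped to `kubectl apply -f -` on a machine with cluster access; the sketch itself only prints text.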

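2. Configuring the NFS Client Provisioner

The NFS Client Provisioner is an automatic provisioner that uses NFS as its storage backend and automatically creates a PV for each PVC that requests one. It does not provide NFS storage itself, so an external NFS service must already exist.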

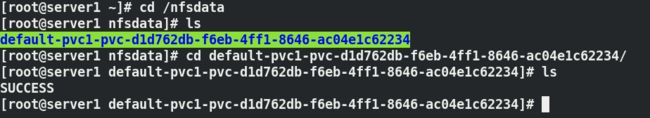

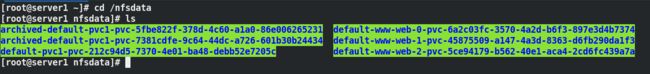

On the NFS server, each PV is provisioned as a directory named ${namespace}-${pvcName}-${pvName}.

When a PV is reclaimed, its directory on the NFS server is renamed to archived-${namespace}-${pvcName}-${pvName}.
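The two naming patterns can be sketched as small shell helpers. The function names are hypothetical, and the `archived-` spelling of the prefix is an assumption; the PV name in the example is taken from the `kubectl get pvc` output later in this article.

```shell
#!/bin/sh
# Hypothetical helpers: build the directory names described above for the NFS export.

# Directory backing a provisioned PV.
# $1 = namespace, $2 = PVC name, $3 = PV name
pv_dir() {
    printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Directory name after the PV is reclaimed with archiving enabled
# (prefix spelling "archived-" is an assumption).
archived_dir() {
    printf 'archived-%s\n' "$(pv_dir "$1" "$2" "$3")"
}

pv_dir default pvc1 pvc-5fbe822f-378d-4c60-a1a0-86e006265231
archived_dir default pvc1 pvc-5fbe822f-378d-4c60-a1a0-86e006265231
```

Knowing the pattern makes it easier to find a given claim's data under /nfsdata on the NFS server.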

nfs-client-provisioner source code:

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client

- Dynamic volume provisioning

[root@server1 ~]# vim /etc/exports

[root@server1 ~]# cat /etc/exports

/nfsdata *(rw,sync,no_root_squash)

[root@server1 ~]# exportfs -rv

exporting *:/nfsdata

[root@server1 ~]# showmount -e

Export list for server1:

/nfsdata *

[root@server1 ~]#

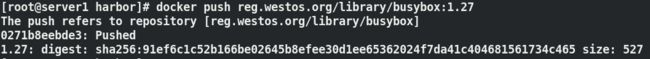

[root@server2 vol]# mkdir nfs-client

[root@server2 vol]# cd nfs-client/

[root@server2 nfs-client]# vim rbac.yml ## role-based access control: grants permissions within the cluster

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-client-provisioner
---
kind: ClusterRole  ## cluster role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding  ## cluster role binding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs-client-provisioner
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role  ## role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding  ## role binding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: nfs-client-provisioner
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Deploy the NFS Client Provisioner

[root@server2 nfs-client]# vim deployment.yml ## remove the duplicated selector field

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: nfs-client-provisioner:latest  ## pull this image and push it to the registry
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: westos.org/nfs  ## a name that identifies this provisioner
            - name: NFS_SERVER
              value: 172.25.12.1  ## the NFS server
            - name: NFS_PATH
              value: /nfsdata  ## the exported NFS path
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.25.12.1
            path: /nfsdata

Create the NFS StorageClass

[root@server2 nfs-client]# vim class.yml ## create the storage class

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: westos.org/nfs
parameters:
  archiveOnDelete: "false"  ## whether the data is archived when the volume is reclaimed; "false" means it is not archived

Create the PVC

[root@server2 nfs-client]# vim pvc.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc1
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi

Create the test Pod

[root@server2 nfs-client]# vim pod.yml

kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
  - name: test-pod
    image: busybox:1.27
    command:
      - "/bin/sh"
    args:
      - "-c"
      - "touch /mnt/SUCCESS && exit 0 || exit 1"
    volumeMounts:
      - name: nfs-pvc
        mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: pvc1

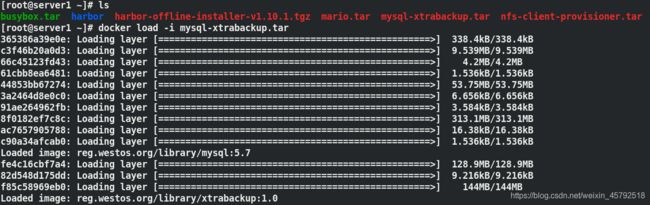

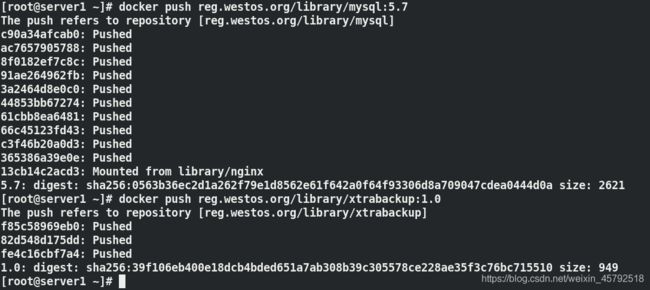

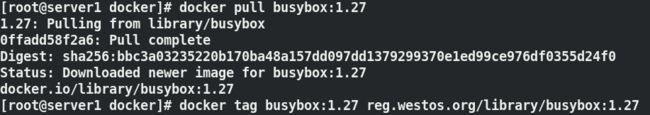

Push the images required for the experiment to the registry

Apply the YAML files

Deleting the Pod does not affect the PVC


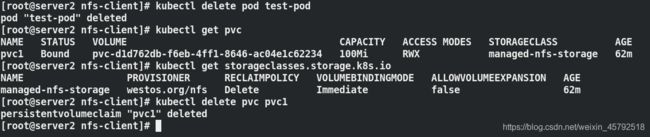

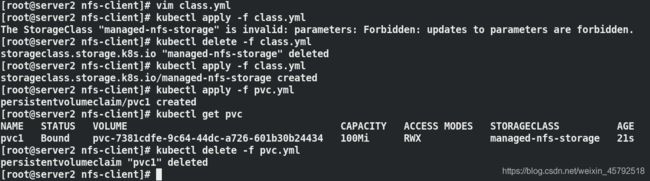

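Edit the StorageClass YAML file and change false to true

After the PVC is deleted, its data is archived (the directory is marked with an archived prefix)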


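The default StorageClass is used to dynamically provision storage for PersistentVolumeClaims that do not request a specific storage class. (There should be only one default StorageClass.)

If there is no default StorageClass and the PVC does not set storageClassName, the PVC can only bind to a PV whose storageClassName is also "".

kubectl patch storageclass <your-class-name> -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'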
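Because only one class should carry the default flag, switching the default usually means unsetting it on one class and setting it on another. The following is a minimal sketch that only builds and prints the two commands; `default_class_patch` is a hypothetical helper and `old-class` is a hypothetical class name.

```shell
#!/bin/sh
# Build the JSON patch that marks (or unmarks) a StorageClass as the default.
# $1 = true or false
default_class_patch() {
    printf '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"%s"}}}\n' "$1"
}

# Print the commands that would move the default flag from one class to another.
# "old-class" is a hypothetical name; nothing is executed against a cluster here.
echo "kubectl patch storageclass old-class -p '$(default_class_patch false)'"
echo "kubectl patch storageclass managed-nfs-storage -p '$(default_class_patch true)'"
```

Printing the commands first makes it easy to check the quoting, which must use plain ASCII quotes, before running them.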

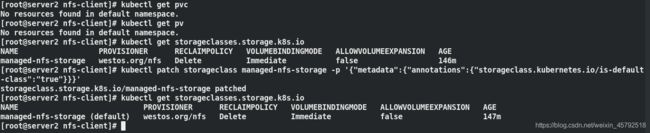

[root@server2 nfs-client]# kubectl get pvc

No resources found in default namespace.

[root@server2 nfs-client]# kubectl get pv

No resources found in default namespace.

[root@server2 nfs-client]# kubectl get storageclasses.storage.k8s.io

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage westos.org/nfs Delete Immediate false 146m

[root@server2 nfs-client]# kubectl patch storageclass managed-nfs-storage -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/managed-nfs-storage patched

[root@server2 nfs-client]# kubectl get storageclasses.storage.k8s.io

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage (default) westos.org/nfs Delete Immediate false 147m

[root@server2 nfs-client]#

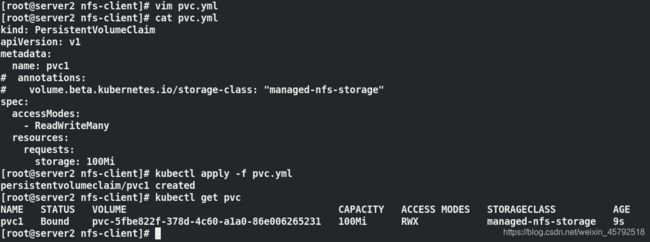

[root@server2 nfs-client]# vim pvc.yml

[root@server2 nfs-client]# cat pvc.yml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc1
#  annotations:
#    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi

[root@server2 nfs-client]# kubectl apply -f pvc.yml

persistentvolumeclaim/pvc1 created

[root@server2 nfs-client]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Bound pvc-5fbe822f-378d-4c60-a1a0-86e006265231 100Mi RWX managed-nfs-storage 9s

[root@server2 nfs-client]#

3. The StatefulSet controller

StatefulSet abstracts application state into two kinds:

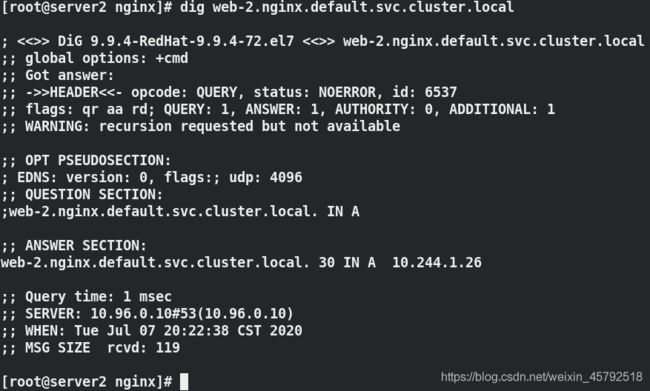

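Topology state: application instances must start in a specific order, and a re-created Pod must keep the same network identity as the Pod it replaces.

Storage state: each instance of the application is bound to its own storage data.

StatefulSet numbers all of its Pods using the rule $(statefulset name)-$(ordinal), with ordinals starting at 0.

When a Pod is deleted and re-created, its network identity does not change. The topology state is pinned down by the Pod's "name + ordinal", and each Pod gets a fixed, unique access point: its own DNS record.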
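The naming rule and the per-Pod DNS record can be sketched as follows. This assumes the default cluster domain `cluster.local` and the record form `<pod>.<service>.<namespace>.svc.cluster.local`; the helper name is hypothetical, and `web` / `nginx` are the StatefulSet and Headless Service names used in the example later in this article.

```shell
#!/bin/sh
# List the stable per-Pod DNS names of a StatefulSet.
# $1 = StatefulSet name, $2 = headless Service name, $3 = namespace, $4 = replicas
# Assumes the default cluster domain "cluster.local".
statefulset_dns() {
    i=0
    while [ "$i" -lt "$4" ]; do
        printf '%s-%d.%s.%s.svc.cluster.local\n' "$1" "$i" "$2" "$3"
        i=$((i + 1))
    done
}

statefulset_dns web nginx default 2
```

Since the names depend only on the StatefulSet name and the ordinal, a re-created Pod ends up with the same record even if its IP address changes.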

3.1 StatefulSet通过Headless Service维持Pod的拓扑状态

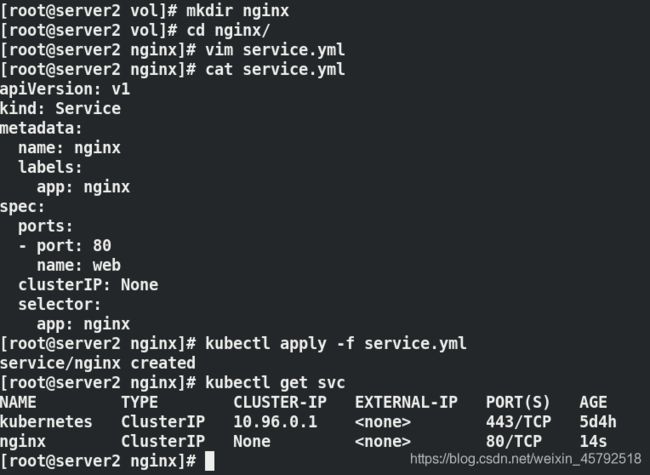

创建Headless service

[root@server2 vol]# mkdir nginx

[root@server2 vol]# cd nginx/

[root@server2 nginx]# vim service.yml

[root@server2 nginx]# cat service.yml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx

[root@server2 nginx]# kubectl apply -f service.yml

service/nginx created

[root@server2 nginx]# kubectl get svc

NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   5d4h
nginx        ClusterIP   None         <none>        80/TCP    14s

[root@server2 nginx]#

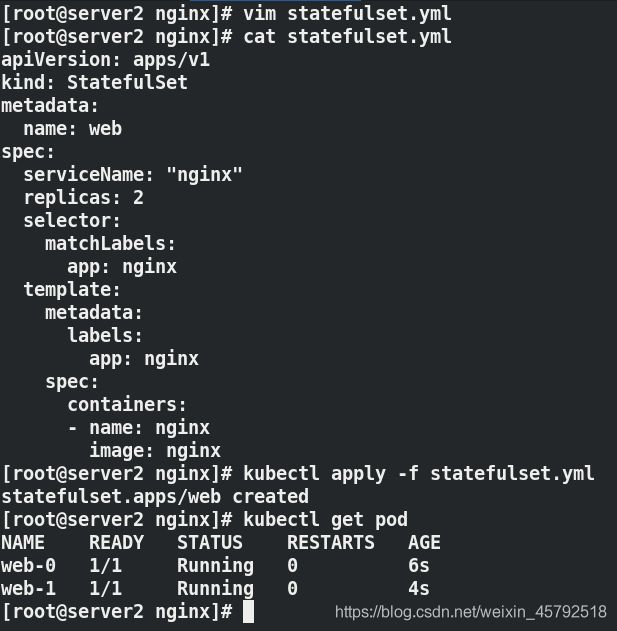

[root@server2 nginx]# vim statefulset.yml

[root@server2 nginx]# cat statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web created

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 6s

web-1 1/1 Running 0 4s

[root@server2 nginx]#

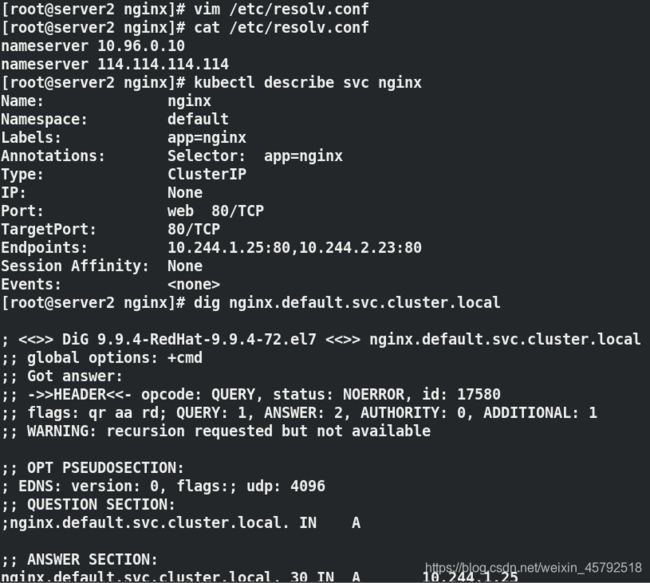

[root@server2 nginx]# vim /etc/resolv.conf

[root@server2 nginx]# cat /etc/resolv.conf

nameserver 10.96.0.10

nameserver 114.114.114.114

[root@server2 nginx]# kubectl describe svc nginx

Name:              nginx
Namespace:         default
Labels:            app=nginx
Annotations:       <none>
Selector:          app=nginx
Type:              ClusterIP
IP:                None
Port:              web  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.25:80,10.244.2.23:80
Session Affinity:  None
Events:            <none>

[root@server2 nginx]# dig nginx.default.svc.cluster.local

; <<>> DiG 9.9.4-RedHat-9.9.4-72.el7 <<>> nginx.default.svc.cluster.local

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 17580

;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;nginx.default.svc.cluster.local. IN A

;; ANSWER SECTION:

nginx.default.svc.cluster.local. 30 IN A 10.244.1.25

nginx.default.svc.cluster.local. 30 IN A 10.244.2.23

;; Query time: 1 msec

;; SERVER: 10.96.0.10#53(10.96.0.10)

;; WHEN: Tue Jul 07 20:13:16 CST 2020

;; MSG SIZE rcvd: 154

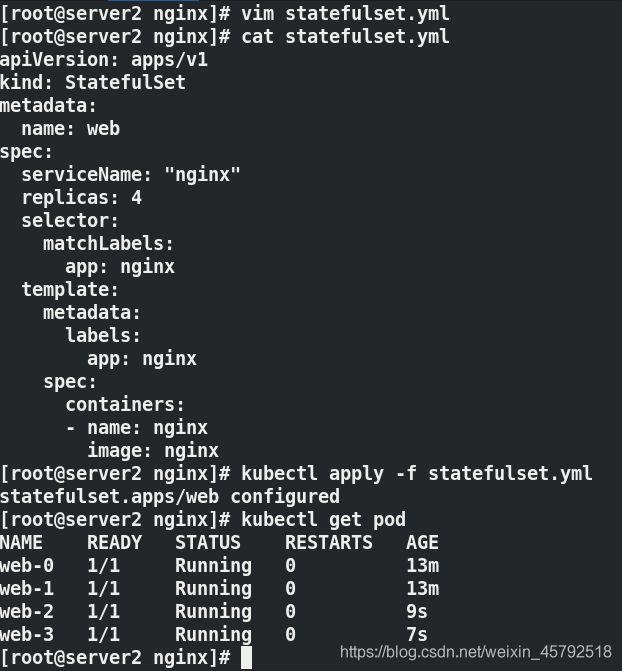

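Edit statefulset.yml, change replicas to 4, and apply it: two more Pods are created, and their addresses resolve through DNS right away

Scaling a StatefulSet with kubectl

Scale-down reclaims Pods in order; here it is done by changing the replica count in the controller's manifest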
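Ordered scale-down means the Pod with the highest ordinal is removed first. A minimal sketch of that order, assuming the default ordered Pod management policy; the helper name is hypothetical.

```shell
#!/bin/sh
# Print the order in which Pods are removed when scaling down.
# $1 = StatefulSet name, $2 = current replicas, $3 = target replicas
scale_down_order() {
    i=$(( $2 - 1 ))
    while [ "$i" -ge "$3" ]; do
        printf '%s-%d\n' "$1" "$i"
        i=$((i - 1))
    done
}

# Scaling "web" from 4 replicas to 0 removes web-3 first and web-0 last.
scale_down_order web 4 0
```

Instead of editing the manifest, the replica count can also be changed directly with `kubectl scale statefulset web --replicas=0`.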

[root@server2 nginx]# vim statefulset.yml

[root@server2 nginx]# cat statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 0
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web configured

[root@server2 nginx]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-0 1/1 Running 0 24m

web-1 0/1 Terminating 0 24m

[root@server2 nginx]# kubectl get pod

No resources found in default namespace.

[root@server2 nginx]#

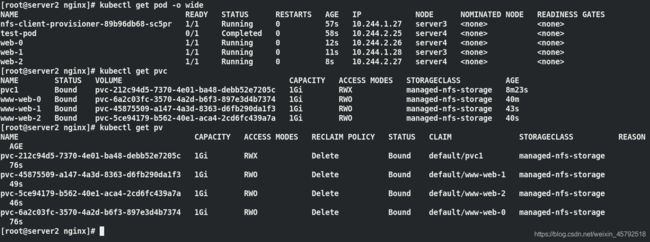

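3.2 StatefulSet storage

The design of PV and PVC is what makes it possible for StatefulSet to manage storage state:

Pods are created strictly in ordinal order.

Until web-0 is Running and its Ready condition is true, web-1 is held back in the pending state.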

[root@server2 nginx]# vim statefulset.yml

[root@server2 nginx]# cat statefulset.yml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
          - name: www
            mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        storageClassName: managed-nfs-storage
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 1Gi

[root@server2 nginx]# kubectl apply -f statefulset.yml

statefulset.apps/web created

[root@server2 nginx]# kubectl get pod -o wide

NAME                                     READY   STATUS      RESTARTS   AGE   IP            NODE      NOMINATED NODE   READINESS GATES
nfs-client-provisioner-89b96db68-sc5pr   1/1     Running     0          57s   10.244.1.27   server3   <none>           <none>
test-pod                                 0/1     Completed   0          58s   10.244.2.25   server4   <none>           <none>
web-0                                    1/1     Running     0          12s   10.244.2.26   server4   <none>           <none>
web-1                                    1/1     Running     0          11s   10.244.1.28   server3   <none>           <none>
web-2                                    1/1     Running     0          8s    10.244.2.27   server4   <none>           <none>

[root@server2 nginx]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Bound pvc-212c94d5-7370-4e01-ba48-debb52e7205c 1Gi RWX managed-nfs-storage 8m23s

www-web-0 Bound pvc-6a2c03fc-3570-4a2d-b6f3-897e3d4b7374 1Gi RWO managed-nfs-storage 40m

www-web-1 Bound pvc-45875509-a147-4a3d-8363-d6fb290da1f3 1Gi RWO managed-nfs-storage 43s

www-web-2 Bound pvc-5ce94179-b562-40e1-aca4-2cd6fc439a7a 1Gi RWO managed-nfs-storage 40s

[root@server2 nginx]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-212c94d5-7370-4e01-ba48-debb52e7205c 1Gi RWX Delete Bound default/pvc1 managed-nfs-storage 76s

pvc-45875509-a147-4a3d-8363-d6fb290da1f3 1Gi RWO Delete Bound default/www-web-1 managed-nfs-storage 49s

pvc-5ce94179-b562-40e1-aca4-2cd6fc439a7a 1Gi RWO Delete Bound default/www-web-2 managed-nfs-storage 46s

pvc-6a2c03fc-3570-4a2d-b6f3-897e3d4b7374 1Gi RWO Delete Bound default/www-web-0 managed-nfs-storage 76s

[root@server2 nginx]#

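StatefulSet also creates a PVC for each Pod, carrying the same ordinal as the Pod. Kubernetes can then bind a matching PV to that PVC through the Persistent Volume mechanism, which guarantees that every Pod has its own independent Volume.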
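The PVC names seen in the `kubectl get pvc` output follow the pattern `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>`. A minimal sketch of that rule, with a hypothetical helper name:

```shell
#!/bin/sh
# List the PVC names a StatefulSet creates from one volumeClaimTemplate:
#   <template name>-<StatefulSet name>-<ordinal>
# $1 = template name, $2 = StatefulSet name, $3 = replicas
statefulset_pvcs() {
    i=0
    while [ "$i" -lt "$3" ]; do
        printf '%s-%s-%d\n' "$1" "$2" "$i"
        i=$((i + 1))
    done
}

# Template "www" in StatefulSet "web" with 3 replicas.
statefulset_pvcs www web 3
```

Because the name is derived from the ordinal, a re-created web-1 finds and reuses www-web-1 rather than getting a fresh volume.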

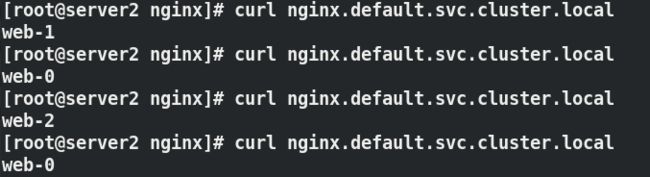

Set up a load-balancing test: on the NFS server, write a distinct index.html into each Pod's volume

[root@server1 nfsdata]# cd default-www-web-0-pvc-6a2c03fc-3570-4a2d-b6f3-897e3d4b7374/

[root@server1 default-www-web-0-pvc-6a2c03fc-3570-4a2d-b6f3-897e3d4b7374]# echo web-0 > index.html

[root@server1 default-www-web-0-pvc-6a2c03fc-3570-4a2d-b6f3-897e3d4b7374]# cd ..

[root@server1 nfsdata]# cd default-www-web-1-pvc-45875509-a147-4a3d-8363-d6fb290da1f3/

[root@server1 default-www-web-1-pvc-45875509-a147-4a3d-8363-d6fb290da1f3]# echo web-1 > index.html

[root@server1 default-www-web-1-pvc-45875509-a147-4a3d-8363-d6fb290da1f3]# cd ..

[root@server1 nfsdata]# cd default-www-web-2-pvc-5ce94179-b562-40e1-aca4-2cd6fc439a7a/

[root@server1 default-www-web-2-pvc-5ce94179-b562-40e1-aca4-2cd6fc439a7a]# echo web-2 > index.html

[root@server1 default-www-web-2-pvc-5ce94179-b562-40e1-aca4-2cd6fc439a7a]#

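3.3 Deploying a MySQL master/slave cluster with a StatefulSet

Official documentation: https://kubernetes.io/zh/docs/tasks/run-application/run-replicated-stateful-application/

(1) Create the ConfigMap configuration file

It provides my.cnf overrides so that the configuration of the MySQL master and of the slaves can be controlled independently.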

[root@server2 vol]# mkdir mysql

[root@server2 vol]# cd mysql/

[root@server2 mysql]# vim cm.yml

[root@server2 mysql]# cat cm.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  labels:
    app: mysql
data:
  master.cnf: |
    # Apply this config only on the master.
    [mysqld]
    log-bin
  slave.cnf: |
    # Apply this config only on slaves.
    [mysqld]
    super-read-only

[root@server2 mysql]# kubectl apply cm.yml

error: must specify one of -f and -k

[root@server2 mysql]# kubectl apply -f cm.yml

configmap/mysql created

[root@server2 mysql]# kubectl get cm

NAME DATA AGE

mysql 2 14s

[root@server2 mysql]#
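Which of the two ConfigMap entries a Pod uses depends on its ordinal: ordinal 0 gets master.cnf and every other Pod gets slave.cnf, with server-id set to 100 plus the ordinal. The sketch below reproduces that selection logic in POSIX sh; it uses parameter expansion rather than the bash regular expression found in the StatefulSet's init container, and the helper name is hypothetical.

```shell
#!/bin/sh
# Derive the MySQL server-id and config file from a StatefulSet Pod hostname.
# $1 = hostname such as mysql-0
mysql_role_config() {
    ordinal=${1##*-}    # text after the last "-"
    case "$ordinal" in
        ''|*[!0-9]*) echo "no ordinal in hostname: $1" >&2; return 1 ;;
    esac
    # Offset by 100 to avoid the reserved server-id=0.
    echo "server-id=$((100 + ordinal))"
    if [ "$ordinal" -eq 0 ]; then
        echo "config=master.cnf"
    else
        echo "config=slave.cnf"
    fi
}

mysql_role_config mysql-0
mysql_role_config mysql-2
```

The function only prints its decision; in the real init container the chosen file is copied into the conf.d directory that mysqld reads.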

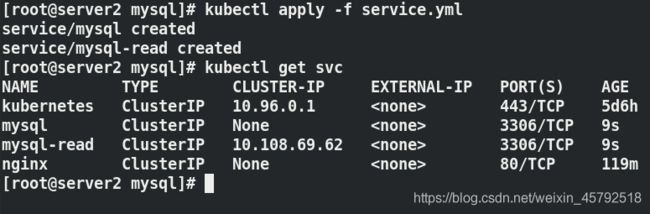

[root@server2 mysql]# \vi service.yml

[root@server2 mysql]# cat service.yml

# Headless service for stable DNS entries of StatefulSet members.
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  clusterIP: None
  selector:
    app: mysql
---
# Client service for connecting to any MySQL instance for reads.
# For writes, you must instead connect to the master: mysql-0.mysql.
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  selector:
    app: mysql

[root@server2 mysql]# kubectl apply -f service.yml

service/mysql created

service/mysql-read created

[root@server2 mysql]# kubectl get svc

NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP    5d6h
mysql        ClusterIP   None           <none>        3306/TCP   9s
mysql-read   ClusterIP   10.108.69.62   <none>        3306/TCP   9s
nginx        ClusterIP   None           <none>        80/TCP     119m

[root@server2 mysql]#

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  serviceName: mysql
  replicas: 3
  template:
    metadata:
      labels:
        app: mysql
    spec:
      initContainers:
      - name: init-mysql
        image: mysql:5.7
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Generate mysql server-id from pod ordinal index.
          [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          echo [mysqld] > /mnt/conf.d/server-id.cnf
          # Add an offset to avoid reserved server-id=0 value.
          echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
          # Copy appropriate conf.d files from config-map to emptyDir.
          if [[ $ordinal -eq 0 ]]; then
            cp /mnt/config-map/master.cnf /mnt/conf.d/
          else
            cp /mnt/config-map/slave.cnf /mnt/conf.d/
          fi
        volumeMounts:
        - name: conf
          mountPath: /mnt/conf.d
        - name: config-map
          mountPath: /mnt/config-map
      - name: clone-mysql
        image: gcr.io/google-samples/xtrabackup:1.0
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Skip the clone if data already exists.
          [[ -d /var/lib/mysql/mysql ]] && exit 0
          # Skip the clone on master (ordinal index 0).
          [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          [[ $ordinal -eq 0 ]] && exit 0
          # Clone data from previous peer.
          ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql
          # Prepare the backup.
          xtrabackup --prepare --target-dir=/var/lib/mysql
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ALLOW_EMPTY_PASSWORD
          value: "1"
        ports:
        - name: mysql
          containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
        resources:
          requests:
            cpu: 500m
            memory: 512Mi
        livenessProbe:
          exec:
            command: ["mysqladmin", "ping"]
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
        readinessProbe:
          exec:
            # Check we can execute queries over TCP (skip-networking is off).
            command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
          initialDelaySeconds: 5
          periodSeconds: 2
          timeoutSeconds: 1
      - name: xtrabackup
        image: gcr.io/google-samples/xtrabackup:1.0
        ports:
        - name: xtrabackup
          containerPort: 3307
        command:
        - bash
        - "-c"
        - |
          set -ex
          cd /var/lib/mysql

          # Determine binlog position of cloned data, if any.
          if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then
            # XtraBackup already generated a partial "CHANGE MASTER TO" query
            # because we're cloning from an existing slave. (Need to remove the tailing semicolon!)
            cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in
            # Ignore xtrabackup_binlog_info in this case (it's useless).
            rm -f xtrabackup_slave_info xtrabackup_binlog_info
          elif [[ -f xtrabackup_binlog_info ]]; then
            # We're cloning directly from master. Parse binlog position.
            [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
            rm -f xtrabackup_binlog_info xtrabackup_slave_info
            echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                  MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
          fi

          # Check if we need to complete a clone by starting replication.
          if [[ -f change_master_to.sql.in ]]; then
            echo "Waiting for mysqld to be ready (accepting connections)"
            until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done

            echo "Initializing replication from clone position"
            mysql -h 127.0.0.1 \
                  -e "$(