文章目录

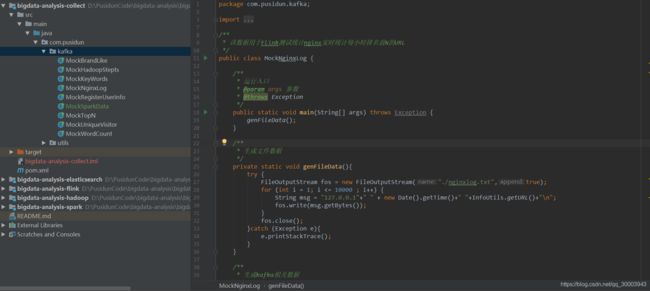

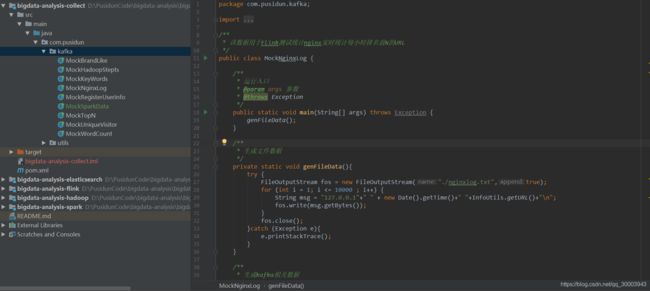

- 1 Maven工程bigdata-analysis-collect生成测试数据

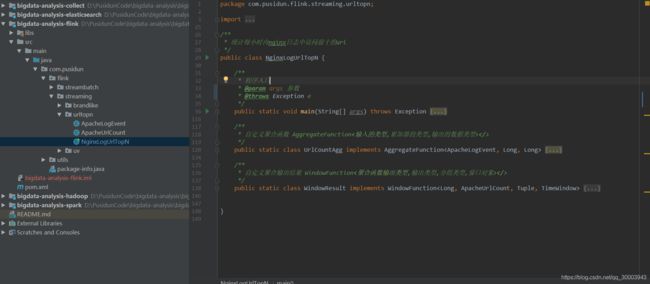

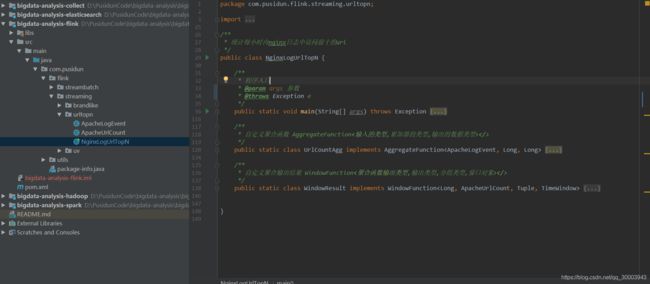

- 2 Maven工程bigdata-analysis-flink读取文件测试

- 3 测试方案之读取文件测试

- 4 测试方案之读取Kafka测试将结果写入ElasticSearch

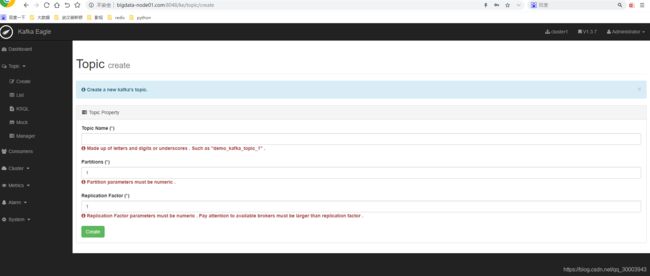

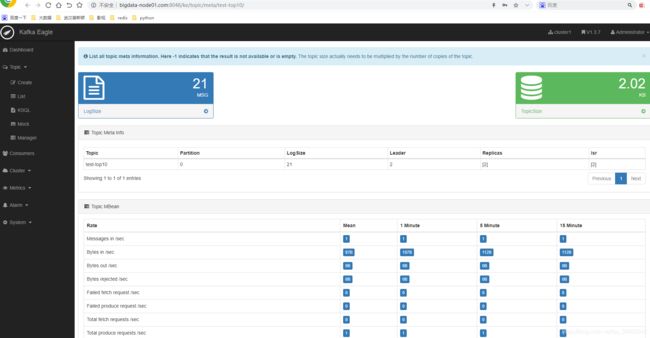

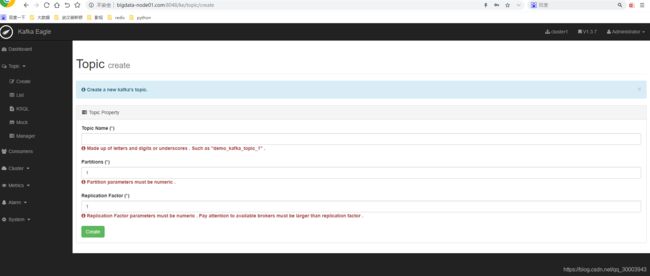

- 4.1 Kafka Eagle创建topic

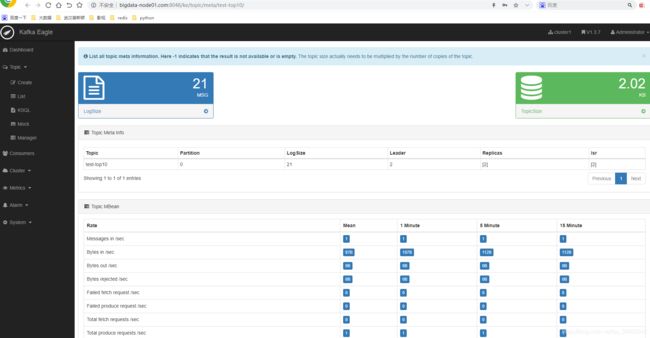

- 4.2 Kafka Eagle查看Topic Meta

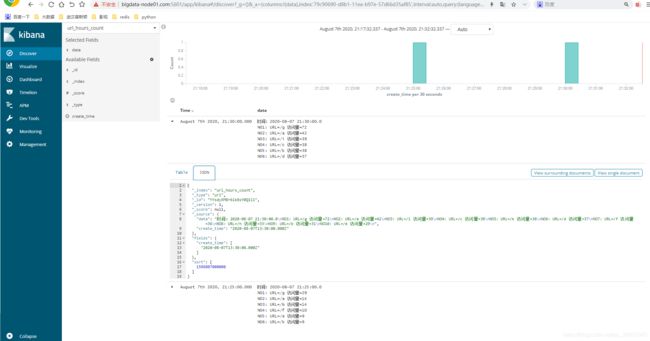

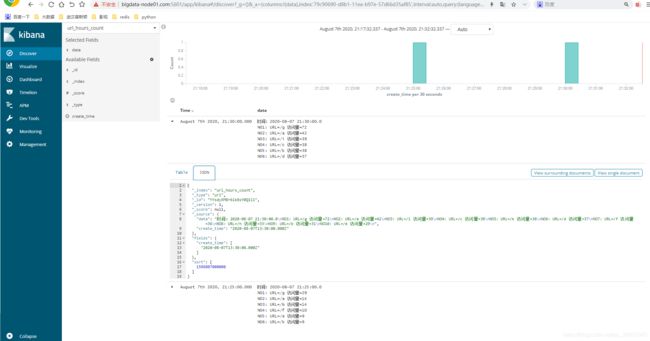

- 4.3 Kibana整合ElasticSearch查看结果

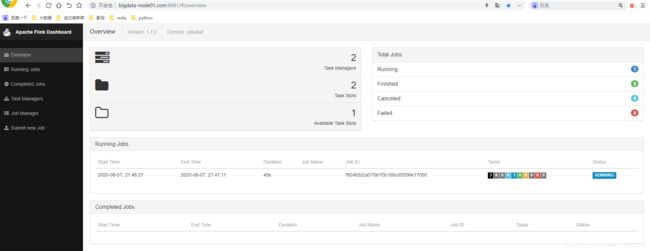

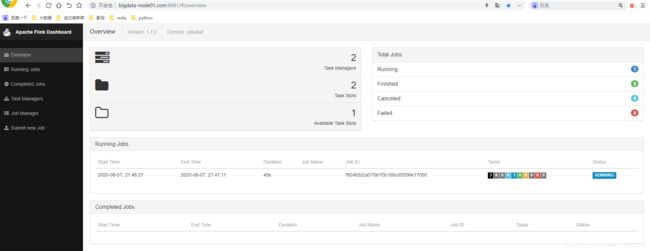

- 5 Flink使用Standalone模式提交代码

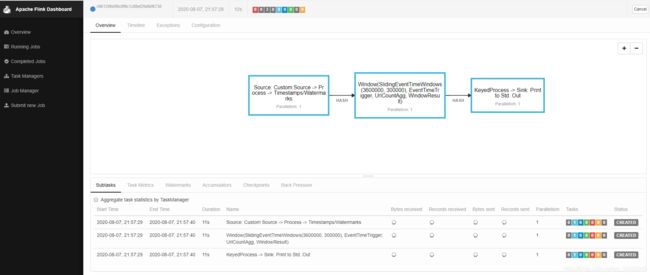

- 5.1 Flink首页概览

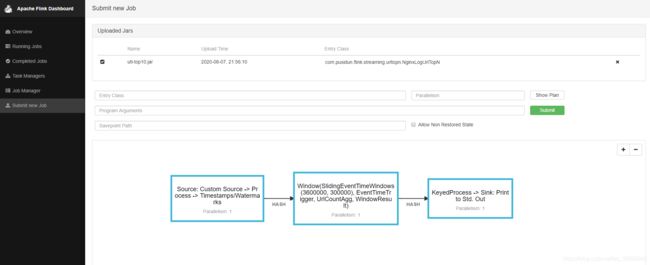

- 5.2 Flink作业提交并查看执行计划

- 5.3 Flink作业详情

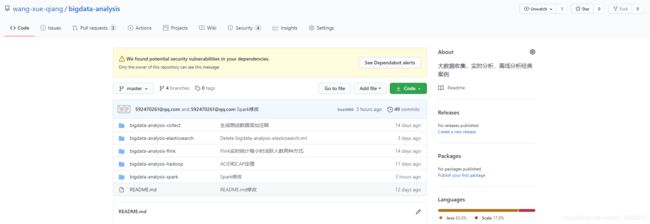

- 6 源码分享

1 Generating test data with the Maven project bigdata-analysis-collect

// Appends 10,000 sample access-log lines ("ip timestamp url") to ./nginxlog.txt

private static void genFileData(){

// try-with-resources closes the stream even if a write fails

try (FileOutputStream fos = new FileOutputStream("./nginxlog.txt",true)) {

for (int i = 1; i <= 10000 ; i++) {

String msg = "127.0.0.1"+" " + new Date().getTime()+" "+InfoUtils.getURL()+"\n";

fos.write(msg.getBytes());

}

}catch (Exception e){

e.printStackTrace();

}

}

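// Sends one sample log line per second to the Kafka topic "test-top10".

// KafkaProducer here appears to be the project's own singleton wrapper, not org.apache.kafka.clients.producer.KafkaProducer.

private static void genKafakData(){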

try {

KafkaProducer producer = KafkaProducer.getInstance();

String topic = "test-top10";

while (true) {

String msg = "127.0.0.1"+" " + new Date().getTime()+" "+InfoUtils.getURL();

System.out.println(msg);

producer.sendMessgae(topic, msg);

Thread.sleep(1000);

}

}catch (Exception e){

e.printStackTrace();

}

}
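Both generators above emit lines of the form `ip timestamp url`, separated by single spaces, with the timestamp in epoch milliseconds. The following is a minimal plain-Java sketch of how such a line could be parsed and validated before it reaches the Flink job. `LogLineParser` and `LogLine` are hypothetical names introduced for illustration; they are not part of the original project.

```java
// Hypothetical helper, not part of the original project: parses one generated
// log line of the form "<ip> <epochMillis> <url>".
final class LogLineParser {

    /** Simple holder for the three fields of a log line. */
    static final class LogLine {
        final String ip;
        final long eventTime;
        final String url;

        LogLine(String ip, long eventTime, String url) {
            this.ip = ip;
            this.eventTime = eventTime;
            this.url = url;
        }
    }

    /** Returns the parsed line, or null if the line is malformed. */
    static LogLine parse(String line) {
        if (line == null) {
            return null;
        }
        // Fields are separated by a single space, as written by the generator.
        String[] parts = line.trim().split(" ");
        if (parts.length != 3) {
            return null;
        }
        try {
            return new LogLine(parts[0], Long.parseLong(parts[1]), parts[2]);
        } catch (NumberFormatException e) {
            return null; // the timestamp field is not a number
        }
    }

    public static void main(String[] args) {
        LogLine ok = parse("127.0.0.1 1596805200000 /a");
        System.out.println(ok.ip + " " + ok.eventTime + " " + ok.url);
        System.out.println(parse("not a log line") == null);
    }
}
```

Returning null for malformed input keeps the sketch short; inside a Flink `ProcessFunction` the equivalent would be to skip the record rather than let `Long.parseLong` fail the job.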

2 Reading from a file with the Maven project bigdata-analysis-flink

3 Test scenario: reading from a file

package com.pusidun.flink.streaming.urltopn;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.TypeHint;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import java.sql.Timestamp;

import java.util.ArrayList;

import java.util.Comparator;

import java.util.List;

public class NginxLogUrlTopN {

public static void main(String[] args) throws Exception {

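// Fall back to the generated test file when no path is passed on the command line

if (args.length == 0) {

args = new String[]{"./nginxlog.txt"};

}

if (args.length != 1) {

System.err.printf("Usage: %s <filePath>\n", "NginxLogUrlTopN");

System.exit(-1);

}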

String filePath = args[0];

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

env.enableCheckpointing(60000);

SingleOutputStreamOperator<String> process =

env.readTextFile(filePath)

.process(new ProcessFunction<String, ApacheLogEvent>() {

@Override

public void processElement(String line, Context ctx, Collector<ApacheLogEvent> out) throws Exception {

// Fields are space-separated: ip, timestamp, url

String[] dataArray = line.split(" ");

ApacheLogEvent logEvent = new ApacheLogEvent(Long.parseLong(dataArray[1]), dataArray[2]);

out.collect(logEvent);

}

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<ApacheLogEvent>(Time.seconds(5)) {

@Override

public long extractTimestamp(ApacheLogEvent element) {

return element.getEventTime();

}

})

.keyBy("url")

.timeWindow(Time.hours(1))

.aggregate(new UrlCountAgg(), new WindowResult())

.keyBy("windowEnd")

.process(new KeyedProcessFunction<Tuple, ApacheUrlCount, String>() {

private transient ValueState<List<ApacheUrlCount>> valueState;

@Override

public void open(Configuration parameters) throws Exception {

ValueStateDescriptor<List<ApacheUrlCount>> valueStateDescriptor = new ValueStateDescriptor<List<ApacheUrlCount>>

("list-state",TypeInformation.of(new TypeHint<List<ApacheUrlCount>>() {}));

valueState = getRuntimeContext().getState(valueStateDescriptor);

}

@Override

public void processElement(ApacheUrlCount value, Context ctx, Collector<String> out) throws Exception {

List<ApacheUrlCount> list = valueState.value();

if(null == list){

list = new ArrayList<ApacheUrlCount>();

}

list.add(value);

valueState.update(list);

ctx.timerService().registerEventTimeTimer(value.getWindowEnd()+1);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

List<ApacheUrlCount> list = valueState.value();

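// Sort by count, descending; Long.compare avoids the overflow of casting a long difference to int

list.sort(new Comparator<ApacheUrlCount>() {

public int compare(ApacheUrlCount o1, ApacheUrlCount o2) {

return Long.compare(o2.getCount(), o1.getCount());

}

});

StringBuffer result = new StringBuffer();

result.append("时间:").append( new Timestamp( timestamp - 1 ) ).append("\n");

// Emit at most 10 entries; a window may contain fewer than 10 distinct URLs

for (int i = 0; i < Math.min(10, list.size()); i++) {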

result.append("NO").append(i + 1).append(":")

.append(" URL=").append(list.get(i).getUrl())

.append(" 访问量=").append(list.get(i).getCount()).append("\n");

}

result.append("=============================");

valueState.update(null);

out.collect(result.toString());

}

});

process.print();

env.execute();

}

public static class UrlCountAgg implements AggregateFunction<ApacheLogEvent, Long, Long> {

public Long createAccumulator() {

return 0L;

}

public Long add(ApacheLogEvent apacheLogEvent, Long aLong) {

return aLong + 1;

}

public Long getResult(Long aLong) {

return aLong;

}

public Long merge(Long aLong, Long acc1) {

return aLong + acc1;

}

}

public static class WindowResult implements WindowFunction<Long, ApacheUrlCount, Tuple, TimeWindow> {

public void apply(Tuple tuple, TimeWindow window, Iterable<Long> input, Collector<ApacheUrlCount> out) throws Exception {

String url = tuple.getField(0);

Long windowEnd = window.getEnd();

Long count = input.iterator().next();

out.collect(new ApacheUrlCount(url, windowEnd, count));

}

}

}
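The listing above uses two event classes, `ApacheLogEvent` and `ApacheUrlCount`, that are not shown in this post. The sketch below is an assumption about their shape, inferred only from the constructor and getter calls in the job; the real classes in the linked repository may differ. Because the job keys streams by field name (`keyBy("url")`, `keyBy("windowEnd")`), Flink has to recognize them as POJOs: public classes with a public no-argument constructor and fields that are public or exposed through getters and setters. The wrapper class `UrlTopNModels` is hypothetical and exists only to keep the example in one file.

```java
// Assumed shapes of the two event classes used by the job. The real classes
// live in the linked repository and may differ.
final class UrlTopNModels {

    /** One parsed log line: event time in epoch milliseconds plus the URL. */
    public static class ApacheLogEvent {
        private Long eventTime;
        private String url;

        public ApacheLogEvent() {}  // no-arg constructor needed for POJO handling

        public ApacheLogEvent(Long eventTime, String url) {
            this.eventTime = eventTime;
            this.url = url;
        }

        public Long getEventTime() { return eventTime; }
        public void setEventTime(Long eventTime) { this.eventTime = eventTime; }
        public String getUrl() { return url; }
        public void setUrl(String url) { this.url = url; }
    }

    /** Per-window hit count for one URL. */
    public static class ApacheUrlCount {
        private String url;
        private Long windowEnd;
        private Long count;

        public ApacheUrlCount() {}  // no-arg constructor needed for POJO handling

        public ApacheUrlCount(String url, Long windowEnd, Long count) {
            this.url = url;
            this.windowEnd = windowEnd;
            this.count = count;
        }

        public String getUrl() { return url; }
        public void setUrl(String url) { this.url = url; }
        public Long getWindowEnd() { return windowEnd; }
        public void setWindowEnd(Long windowEnd) { this.windowEnd = windowEnd; }
        public Long getCount() { return count; }
        public void setCount(Long count) { this.count = count; }
    }

    public static void main(String[] args) {
        ApacheLogEvent event = new ApacheLogEvent(1596805200000L, "/a");
        ApacheUrlCount count = new ApacheUrlCount(event.getUrl(), 3600000L, 1L);
        System.out.println(count.getUrl() + " " + count.getWindowEnd() + " " + count.getCount());
    }
}
```

In a real project each class would normally sit in its own file as a top-level public class.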

3.1 Output of the file-based test

7> 时间:2020-08-07 21:00:00.0

NO1: URL=/g 访问量=1678

NO2: URL=/c 访问量=896

NO3: URL=/f 访问量=861

NO4: URL=/a 访问量=848

NO5: URL=/k 访问量=843

NO6: URL=/h 访问量=839

NO7: URL=/d 访问量=821

NO8: URL=/b 访问量=819

NO9: URL=/i 访问量=807

NO10: URL=/l 访问量=798
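The timestamp in the first line of the output is the end of the one-hour window, and the `7>` prefix is the index of the parallel subtask that printed the record. The job registers its timer at `getWindowEnd() + 1` and prints `timestamp - 1`, so the header always lands exactly on an hour boundary. The sketch below reproduces that arithmetic in plain Java, assuming tumbling windows with zero offset and non-negative timestamps; `WindowAlignment` and its methods are hypothetical names, not Flink API.

```java
// Hypothetical illustration of how a one-hour tumbling window boundary is
// derived from an event timestamp (zero offset, non-negative timestamps).
final class WindowAlignment {

    static final long HOUR_MS = 60L * 60L * 1000L;

    /** Start of the tumbling window that contains ts. */
    static long windowStart(long ts, long sizeMs) {
        return ts - (ts % sizeMs);
    }

    /** Exclusive end of the tumbling window that contains ts. */
    static long windowEnd(long ts, long sizeMs) {
        return windowStart(ts, sizeMs) + sizeMs;
    }

    public static void main(String[] args) {
        long eventTime = 5 * HOUR_MS + 1234L;   // an event a little after hour 5
        long end = windowEnd(eventTime, HOUR_MS);
        long timer = end + 1;                   // what the job registers
        long header = timer - 1;                // what the job prints
        System.out.println("windowEnd=" + end + " timer=" + timer + " header=" + header);
    }
}
```

The wall-clock rendering of that value depends on the JVM's default time zone, because the job formats it with `java.sql.Timestamp`.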

4 Test scenario: reading from Kafka and writing results to ElasticSearch

package com.pusidun.flink.streaming.urltopn;

import com.pusidun.utils.EsClientUtils;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.TypeHint;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.functions.ProcessFunction;

import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import org.apache.flink.util.Collector;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import java.sql.Timestamp;

import java.util.*;

public class NginxLogUrlTopN {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.enableCheckpointing(5000);

env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 10000));

env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

String topic = "test-top10";

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "node1.com:9092,node2.com:9092,node3.com:9092");

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "consumer-group");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");

properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "latest");

properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");

SingleOutputStreamOperator<String> process =

env.addSource(new FlinkKafkaConsumer<String>(topic, new SimpleStringSchema(), properties))

.process(new ProcessFunction<String, ApacheLogEvent>() {

@Override

public void processElement(String line, Context ctx, Collector<ApacheLogEvent> out) throws Exception {

String[] dataArray = line.split(" ");

ApacheLogEvent logEvent = new ApacheLogEvent(Long.parseLong(dataArray[1]), dataArray[2]);

out.collect(logEvent);

}

})

.assignTimestampsAndWatermarks(new BoundedOutOfOrdernessTimestampExtractor<ApacheLogEvent>(Time.seconds(5)) {

@Override

public long extractTimestamp(ApacheLogEvent element) {

return element.getEventTime();

}

})

.keyBy("url")

.timeWindow(Time.hours(1))

.aggregate(new UrlCountAgg(), new WindowResult())

.keyBy("windowEnd")

.process(new KeyedProcessFunction<Tuple, ApacheUrlCount, String>() {

private transient ValueState<List<ApacheUrlCount>> valueState;

@Override

public void open(Configuration parameters) throws Exception {

ValueStateDescriptor<List<ApacheUrlCount>> valueStateDescriptor = new ValueStateDescriptor<List<ApacheUrlCount>>

("list-state",TypeInformation.of(new TypeHint<List<ApacheUrlCount>>() {}));

valueState = getRuntimeContext().getState(valueStateDescriptor);

}

@Override

public void processElement(ApacheUrlCount value, Context ctx, Collector<String> out) throws Exception {

List<ApacheUrlCount> list = valueState.value();

if(null == list){

list = new ArrayList<ApacheUrlCount>();

}

list.add(value);

valueState.update(list);

ctx.timerService().registerEventTimeTimer(value.getWindowEnd()+1);

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String> out) throws Exception {

List<ApacheUrlCount> list = valueState.value();

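// Sort by count, descending; Long.compare avoids the overflow of casting a long difference to int

list.sort(new Comparator<ApacheUrlCount>() {

public int compare(ApacheUrlCount o1, ApacheUrlCount o2) {

return Long.compare(o2.getCount(), o1.getCount());

}

});

StringBuffer result = new StringBuffer();

result.append("时间:").append( new Timestamp( timestamp - 1 ) ).append("\n");

// Emit at most 10 entries; a window may contain fewer than 10 distinct URLs

for (int i = 0; i < Math.min(10, list.size()); i++) {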

result.append("NO").append(i + 1).append(":")

.append(" URL=").append(list.get(i).getUrl())

.append(" 访问量=").append(list.get(i).getCount()).append("\n");

}

valueState.update(null);

out.collect(result.toString());

Map<String, Object> resultMap = new HashMap<>();

resultMap.put("data", result.toString());

resultMap.put("create_time", new Date(timestamp - 1 ));

EsClientUtils.getInstance().addIndexMap("url_hours_count","url",resultMap);

}

});

process.print();

env.execute();

}

public static class UrlCountAgg implements AggregateFunction<ApacheLogEvent, Long, Long> {

public Long createAccumulator() {

return 0L;

}

public Long add(ApacheLogEvent apacheLogEvent, Long aLong) {

return aLong + 1;

}

public Long getResult(Long aLong) {

return aLong;

}

public Long merge(Long aLong, Long acc1) {

return aLong + acc1;

}

}

public static class WindowResult implements WindowFunction<Long, ApacheUrlCount, Tuple, TimeWindow> {

public void apply(Tuple tuple, TimeWindow window, Iterable<Long> input, Collector<ApacheUrlCount> out) throws Exception {

String url = tuple.getField(0);

Long windowEnd = window.getEnd();

Long count = input.iterator().next();

out.collect(new ApacheUrlCount(url, windowEnd, count));

}

}

}
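The ranking step inside `onTimer` can be pulled out into a plain-Java helper, which makes it easy to check without a Flink cluster, Kafka, or ElasticSearch. The following is a minimal sketch under that assumption: `TopNSketch` and `UrlCount` are hypothetical stand-ins for the job's own classes, and the helper caps the result at the number of available entries so a sparse window cannot cause an index error.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-alone version of the "sort by count, keep the top N" step.
final class TopNSketch {

    /** Minimal stand-in for the job's per-URL window count. */
    static final class UrlCount {
        final String url;
        final long count;

        UrlCount(String url, long count) {
            this.url = url;
            this.count = count;
        }
    }

    /** Returns up to n entries with the highest counts, highest first. */
    static List<UrlCount> topN(List<UrlCount> counts, int n) {
        List<UrlCount> sorted = new ArrayList<>(counts);   // leave the input untouched
        sorted.sort(Comparator.comparingLong((UrlCount c) -> c.count).reversed());
        return new ArrayList<>(sorted.subList(0, Math.min(n, sorted.size())));
    }

    public static void main(String[] args) {
        List<UrlCount> counts = Arrays.asList(
                new UrlCount("/a", 848),
                new UrlCount("/g", 1678),
                new UrlCount("/c", 896));
        for (UrlCount c : topN(counts, 2)) {
            System.out.println(c.url + " " + c.count);
        }
    }
}
```

Comparing with `Comparator.comparingLong` avoids subtracting two long counts and casting the difference to `int`, which can overflow for large values.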

4.1 Creating the topic in Kafka Eagle

4.2 Viewing Topic Meta in Kafka Eagle

4.3 Viewing results in Kibana with ElasticSearch

5 Submitting the job to Flink in Standalone mode

5.1 Flink dashboard overview

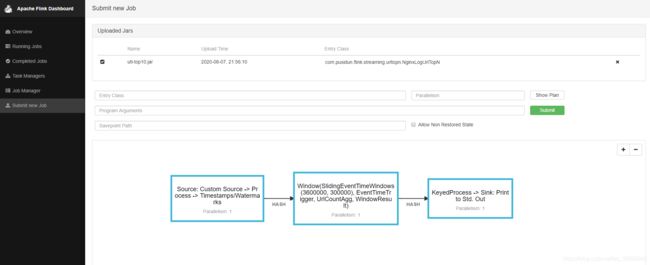

5.2 Submitting the Flink job and viewing the execution plan

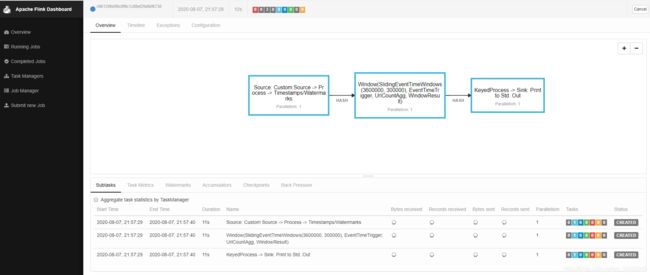

5.3 Flink job details

6 Source code

https://github.com/wang-xue-qiang/bigdata-analysis