Spark中RDD与DF与DS之间的转换关系

前言

RDD的算子虽然丰富,但是执行效率不如DS,DF,一般业务可以用DF或者DS就能轻松完成,但是有时候业务只能通过RDD的算子来完成,下面就简单介绍之间的转换。

RDD的算子虽然丰富,但是执行效率不如DS,DF,一般业务可以用DF或者DS就能轻松完成,但是有时候业务只能通过RDD的算子来完成,下面就简单介绍之间的转换。

三者间的速度比较测试!

这里的DS区别于sparkstream里的DStream!!

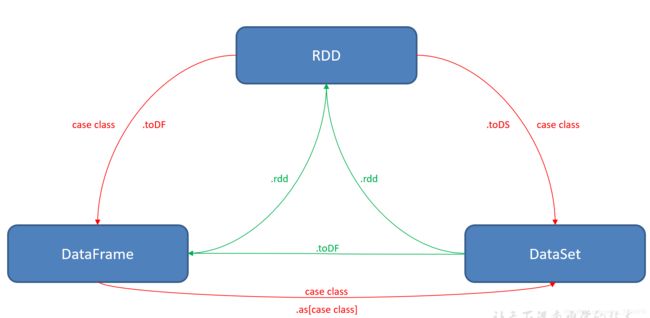

转换关系

RDD的出现早于DS,DF。由于scala的扩展机制,必定是要用到隐式转换的!

所以在RDD下要转DF或者DS,就应该导隐式对象包!

val conf = new SparkConf().setMaster("local[*]").setAppName("Foreach")

val ssc = new StreamingContext(conf, Seconds(3))

ssc.checkpoint("./ck2")

//获取ss

val ss = SparkSession.builder()

.config(ssc.sparkContext.getConf)

.getOrCreate()

//通过 对SparkSession类里面的implicits对象的导入实现!

import ss.implicits._

RDD转DS,将RDD的每一行封装成样例类,再调用toDS方法

DF与RDD之间的转换

后面才出现的DF,DS到RDD只需要直接通过对象.rdd就可以转化

package spark.sql.std.day01

import org.apache.spark.sql.SparkSession

/**

* @ClassName:DF2RDD

* @author: zhengkw

* @description:

* @date: 20/05/13上午 10:52

* @version:1.0

* @since: jdk 1.8 scala 2.11.8

*/

object DF2RDD {

def main(args: Array[String]): Unit = {

//创建一个builder,从builder或者取session

val spark = SparkSession.builder()

.appName("DF2RDD")

.master("local[2]")

.getOrCreate()

//获得df

val df = spark.read.json("E:\\IdeaWorkspace\\sparkdemo\\data\\people.json")

df.printSchema()

//df转rdd

val rdd = df.rdd

val result = rdd.map(row => {

val age = row.get(0)

//row.getAs()

// row.getLong()

val name = row.get(1)

(age, name)

}

)

result.collect.foreach(println)

}

}

封装样例类

package spark.sql.std.day01

import org.apache.spark.sql.SparkSession

import scala.collection.mutable

/**

* @ClassName:RDD2DF_2

* @author: zhengkw

* @description:

* @date: 20/05/13上午 11:35

* @version:1.0

* @since: jdk 1.8 scala 2.11.8

*/

object RDD2DF_2 {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder()

.appName("RDD2DF_2")

.master("local[2]")

.getOrCreate()

val list = List(User(22, "java"), User(23, "keke"))

val list1 = list :+ User(15, "ww")

// list.foreach(println)

val rdd = spark.sparkContext.parallelize(list1)

import spark.implicits._

val df = rdd.toDF("age", "name")

df.show()

}

}

case class User(age: Int, name: String)

DF与DS之间的转换

package spark.sql.std.day02

import org.apache.spark.sql.SparkSession

/**

* @ClassName:DSDF

* @author: zhengkw

* @description:

* @date: 20/05/14上午 10:06

* @version:1.0

* @since: jdk 1.8 scala 2.11.8

*/

object DSDF {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder()

.master("local[2]")

.appName("DSDF")

.getOrCreate()

import spark.implicits._

//read json读到的数字会转成Long

val df = spark.read.json("file:///E:\\IdeaWorkspace\\sparkdemo\\data\\people.json")

val ds = df.as[People]

ds.show()

val df1 = ds.toDF()

df1.show()

}

}

case class People(age: Long, name: String)

DS2DF封装样例类方式

package spark.sql.std.day02

import org.apache.spark.sql.SparkSession

/**

* @ClassName:DS2RDD

* @author: zhengkw

* @description:

* @date: 20/05/14上午 9:34

* @version:1.0

* @since: jdk 1.8 scala 2.11.8

*/

object DS2RDD {

def main(args: Array[String]): Unit = {

val spark = SparkSession.builder()

.master("local[2]")

.appName("DS2RDD")

.getOrCreate()

//val df = spark.read.json("E:\\IdeaWorkspace\\sparkdemo\\data\\people.json")

val list = User(21, "nokia") :: User(18, "java") :: User(20, "scala") :: User(20, "nova") :: Nil

import spark.implicits._

val rdd = spark.sparkContext.parallelize(list)

val ds = rdd.toDS()

ds.rdd.collect().foreach(println)

}

}

case class User(age: Int, name: String)

Scala集合转DF

package spark.sql.std.day01

import org.apache.spark.sql.SparkSession

/**

* @ClassName:ScalaColl

* @author: zhengkw

* @description: 集合转换成DF

* @date: 20/05/22下午 5:03

* @version:1.0

* @since: jdk 1.8 scala 2.11.8

*/

object ScalaColl2DF_3 {

def main(args: Array[String]): Unit = {

//创建一个builder,从builder或者取session

val spark = SparkSession.builder()

.appName("ScalaColl")

.master("local[2]")

.getOrCreate()

import spark.implicits._

//集合

val map = Map("a" -> 3, "b" -> 4, "c" -> 5)

val df = map.toList.toDF("x", "y")

df.show()

df.printSchema()

spark.close()

}

}

关于其他的不在本博文讨论范围,相关信息查阅其他博文!

三者区别于联系