Hive DDL: data operations, partitions, two-level partitions, union queries, and creating, altering, and replacing table columns

1. Start dfs, yarn, and hiveserver2

[cevent@hadoop207 ~]$ cd /opt/module/hadoop-2.7.2/

[cevent@hadoop207 hadoop-2.7.2]$ cd bin/

[cevent@hadoop207 bin]$ ll

total 320
-rwxr-xr-x. 1 cevent cevent 109037 May 22 2017 container-executor
-rwxr-xr-x. 1 cevent cevent 6488 May 22 2017 hadoop
-rwxr-xr-x. 1 cevent cevent 8786 May 22 2017 hadoop.cmd
-rwxr-xr-x. 1 cevent cevent 12223 May 22 2017 hdfs
-rwxr-xr-x. 1 cevent cevent 7478 May 22 2017 hdfs.cmd
-rwxr-xr-x. 1 cevent cevent 5953 May 22 2017 mapred
-rwxr-xr-x. 1 cevent cevent 6310 May 22 2017 mapred.cmd
-rwxr-xr-x. 1 cevent cevent 1776 May 22 2017 rcc
-rwxr-xr-x. 1 cevent cevent 126443 May 22 2017 test-container-executor
-rwxr-xr-x. 1 cevent cevent 13352 May 22 2017 yarn
-rwxr-xr-x. 1 cevent cevent 11386 May 22 2017 yarn.cmd

[cevent@hadoop207 bin]$ cd ..

[cevent@hadoop207 hadoop-2.7.2]$ ll

total 60
drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 bin
drwxrwxr-x. 3 cevent cevent 4096 Apr 30 14:16 data
drwxr-xr-x. 3 cevent cevent 4096 May 22 2017 etc
drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 include
drwxr-xr-x. 3 cevent cevent 4096 May 22 2017 lib
drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 libexec
-rw-r--r--. 1 cevent cevent 15429 May 22 2017 LICENSE.txt
drwxrwxr-x. 3 cevent cevent 4096 May 11 13:17 logs
-rw-r--r--. 1 cevent cevent 101 May 22 2017 NOTICE.txt
-rw-r--r--. 1 cevent cevent 1366 May 22 2017 README.txt
drwxr-xr-x. 2 cevent cevent 4096 May 22 2017 sbin
drwxr-xr-x. 4 cevent cevent 4096 May 22 2017 share

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-dfs.sh

Starting namenodes on [hadoop207.cevent.com]
hadoop207.cevent.com: starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-namenode-hadoop207.cevent.com.out
hadoop207.cevent.com: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-datanode-hadoop207.cevent.com.out
Starting secondary namenodes [hadoop207.cevent.com]
hadoop207.cevent.com: starting secondarynamenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-secondarynamenode-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-resourcemanager-hadoop207.cevent.com.out

hadoop207.cevent.com: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-nodemanager-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ jps

3269 NameNode

3929 Jps

3774 ResourceManager

3591 SecondaryNameNode

3381 DataNode

3888 NodeManager

[cevent@hadoop207 ~]$ cd /opt/module/hive-1.2.1/

[cevent@hadoop207 hive-1.2.1]$ ll

total 524
drwxrwxr-x. 3 cevent cevent 4096 Apr 30 15:59 bin
drwxrwxr-x. 2 cevent cevent 4096 May 9 18:40 conf
-rw-rw-r--. 1 cevent cevent 21062 Apr 30 16:44 derby.log
drwxrwxr-x. 4 cevent cevent 4096 Apr 30 15:59 examples
drwxrwxr-x. 7 cevent cevent 4096 Apr 30 15:59 hcatalog
-rw-rw-r--. 1 cevent cevent 23 May 9 13:37 hive01.sql
drwxrwxr-x. 4 cevent cevent 4096 May 7 13:51 lib
-rw-rw-r--. 1 cevent cevent 24754 Apr 30 2015 LICENSE
drwxrwxr-x. 2 cevent cevent 4096 May 11 13:20 logs
drwxrwxr-x. 5 cevent cevent 4096 Apr 30 16:44 metastore_db
-rw-rw-r--. 1 cevent cevent 397 Jun 19 2015 NOTICE
-rw-rw-r--. 1 cevent cevent 4366 Jun 19 2015 README.txt
-rw-rw-r--. 1 cevent cevent 421129 Jun 19 2015 RELEASE_NOTES.txt
-rw-rw-r--. 1 cevent cevent 11 May 9 13:27 result.txt
drwxrwxr-x. 3 cevent cevent 4096 Apr 30 15:59 scripts
-rw-rw-r--. 1 cevent cevent 171 May 9 13:24 server.log
-rw-rw-r--. 1 cevent cevent 5 May 8 14:05 server.pid

[cevent@hadoop207 hive-1.2.1]$ bin/hiveserver2    # start HiveServer2

OK

2. Create and alter databases and tables

[cevent@hadoop207 ~]$ cd /opt/module/hive-1.2.1/


[cevent@hadoop207 hive-1.2.1]$ bin/beeline    # start the Beeline client

Beeline version 1.2.1 by Apache Hive

beeline> !connect jdbc:hive2://hadoop207.cevent.com:10000
Connecting to jdbc:hive2://hadoop207.cevent.com:10000
Enter username for jdbc:hive2://hadoop207.cevent.com:10000: cevent
Enter password for jdbc:hive2://hadoop207.cevent.com:10000: ******
Connected to: Apache Hive (version 1.2.1)
Driver: Hive JDBC (version 1.2.1)
Transaction isolation: TRANSACTION_REPEATABLE_READ
0: jdbc:hive2://hadoop207.cevent.com:10000> show databases;
+----------------+--+
| database_name |
+----------------+--+
| cevent01 |
| default |
+----------------+--+
2 rows selected (1.918 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> create database cevent619 comment "619" location "/test" with dbproperties("cevent test"="list test");
-- Creates database cevent619: comment sets its description, location sets its storage path, and with dbproperties(...) attaches key/value properties.
No rows affected (1.274 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> use cevent619;    -- switch to the database
No rows affected (0.045 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> create table student(id int);    -- create a table
No rows affected (0.34 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> create database if not exists cevent619 comment "619" location "/test" with dbproperties("cevent test"="list test");
No rows affected (0.035 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> desc database cevent619;    -- show the database's standard attributes
+------------+----------+----------------------------------------+-------------+-------------+-------------+--+
| db_name | comment | location | owner_name | owner_type | parameters |
+------------+----------+----------------------------------------+-------------+-------------+-------------+--+
| cevent619 | 619 | hdfs://hadoop207.cevent.com:8020/test | cevent | USER | |
+------------+----------+----------------------------------------+-------------+-------------+-------------+--+
1 row selected (0.081 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> desc database extended cevent619;
+------------+----------+----------------------------------------+-------------+-------------+--------------------------+--+
| db_name | comment | location | owner_name | owner_type | parameters |
+------------+----------+----------------------------------------+-------------+-------------+--------------------------+--+
| cevent619 | 619 | hdfs://hadoop207.cevent.com:8020/test | cevent | USER | {cevent test=list test} |
+------------+----------+----------------------------------------+-------------+-------------+--------------------------+--+
1 row selected (0.045 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter database cevent619 set dbproperties("cevent"="echo");    -- set a property
No rows affected (0.113 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> desc database extended cevent619;    -- extended also shows the extra information (the dbproperties)
+------------+----------+----------------------------------------+-------------+-------------+---------------------------------------+--+
| db_name | comment | location | owner_name | owner_type | parameters |
+------------+----------+----------------------------------------+-------------+-------------+---------------------------------------+--+
| cevent619 | 619 | hdfs://hadoop207.cevent.com:8020/test | cevent | USER | {cevent=echo, cevent test=list test} |
+------------+----------+----------------------------------------+-------------+-------------+---------------------------------------+--+
1 row selected (0.041 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter database cevent619 set dbproperties("cevent test"="kaka");
-- alter database names the database and set gives the change; in Hive, dbproperties is generally the only thing changed this way.
No rows affected (0.054 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> desc database extended cevent619;
+------------+----------+----------------------------------------+-------------+-------------+----------------------------------+--+
| db_name | comment | location | owner_name | owner_type | parameters |
+------------+----------+----------------------------------------+-------------+-------------+----------------------------------+--+
| cevent619 | 619 | hdfs://hadoop207.cevent.com:8020/test | cevent | USER | {cevent=echo, cevent test=kaka} |
+------------+----------+----------------------------------------+-------------+-------------+----------------------------------+--+
1 row selected (0.041 seconds)
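
The two alter database ... set dbproperties calls above behave like a key-by-key merge: a new key is added, an existing key is overwritten, and keys that are not mentioned stay as they were. A minimal Python sketch of that observed behaviour follows. It is a toy model rather than Hive's implementation, and the function name set_dbproperties is made up for illustration.

```python
def set_dbproperties(current, changes):
    """Return a new property map with `changes` merged over `current`."""
    merged = dict(current)   # copy, so the caller's map is left untouched
    merged.update(changes)   # new keys are added, existing keys are overwritten
    return merged


props = {"cevent test": "list test"}                      # state after create database
props = set_dbproperties(props, {"cevent": "echo"})       # first alter: adds a key
props = set_dbproperties(props, {"cevent test": "kaka"})  # second alter: overwrites a key
print(props)
```

The final map holds the same two entries that the last desc database extended shows in its parameters column.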

0: jdbc:hive2://hadoop207.cevent.com:10000> drop database cevent619 cascade;    -- drop the database; cascade also drops the tables it contains, so use it with care
No rows affected (1.129 seconds)

3. Hive CREATE TABLE syntax: partitions and buckets explained

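CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name
    EXTERNAL declares the table as an external table.
[(col_name data_type [COMMENT col_comment], ...)]
    Column name, column type, and column comment.
[COMMENT table_comment]
    Table comment.
[PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)]
    Partitioned table: PARTITIONED BY splits the table's data in the Hive warehouse into partitions, one subdirectory per partition value.
[CLUSTERED BY (col_name, col_name, ...)
    Bucketed table: CLUSTERED BY distributes rows into buckets by hashing the listed columns.
[SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS]
    INTO num_buckets BUCKETS sets the number of buckets.
[ROW FORMAT row_format]
    ROW FORMAT specifies how each row of the data files is parsed into columns:
    row format delimited fields terminated by '...'    separator between the fields (columns) of a row
    collection items terminated by '...'    separator between the elements of a collection
    map keys terminated by ':'    separator between the key and the value of a map entry
    lines terminated by '\n'    separator between rows (lines)
[STORED AS file_format]
    STORED AS sets the table's storage file format; of the formats Hive supports, plain text is not the most efficient.
[LOCATION hdfs_path]
    LOCATION sets where the table's data is stored.
[TBLPROPERTIES (property_name=property_value, ...)]
    TBLPROPERTIES sets table properties.
[AS select_statement]
    AS select_statement creates the new table from the result of a query.
    Conventional MySQL-style example: create table student as select id,name from people where job="student"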
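
To make the three ROW FORMAT DELIMITED separators concrete, here is a small Python sketch of how one text line could be taken apart when fields end with a tab, collection items with a comma, and map keys with a colon. Everything in it is an assumption for illustration: the function parse_row, the imagined columns (a name, an array of friends, a map of children), and the sample line are not from the original, and this is not how Hive's SerDe is actually coded.

```python
def parse_row(line, field_sep="\t", item_sep=",", key_sep=":"):
    """Split one line into (name, list, map) using three nested separators."""
    name, friends, children = line.split(field_sep)   # fields terminated by
    friend_list = friends.split(item_sep)             # collection items terminated by
    child_map = dict(                                 # map keys terminated by
        entry.split(key_sep, 1) for entry in children.split(item_sep)
    )
    return name, friend_list, child_map


# Hypothetical data line: name <tab> friends <tab> children
row = parse_row("tom\tann,bob\tamy:3,joe:5")
print(row)
```

The field separator is applied first, then the item separator inside each collection field, then the key separator inside each map entry, which is why the three characters have to differ.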

4. Store data on HDFS with put

[cevent@hadoop207 datas]$ ll
total 18616
-rw-rw-r--. 1 cevent cevent 147 May 10 13:46 510test.txt
-rw-rw-r--. 1 cevent cevent 266 May 17 13:52 business.txt
-rw-rw-r--. 1 cevent cevent 129 May 17 13:52 constellation.txt
-rw-rw-r--. 1 cevent cevent 71 May 17 13:52 dept.txt
-rw-rw-r--. 1 cevent cevent 78 May 17 13:52 emp_sex.txt
-rw-rw-r--. 1 cevent cevent 656 May 17 13:52 emp.txt
-rw-rw-r--. 1 cevent cevent 37 May 17 13:52 location.txt
-rw-rw-r--. 1 cevent cevent 19014993 May 17 13:52 log.data
-rw-rw-r--. 1 cevent cevent 136 May 17 13:52 movie.txt
-rw-rw-r--. 1 cevent cevent 213 May 17 13:52 score.txt
-rw-rw-r--. 1 cevent cevent 165 May 17 13:52 student.txt
-rw-rw-r--. 1 cevent cevent 301 May 17 13:52 数据说明.txt
[cevent@hadoop207 datas]$ hadoop fs -mkdir /student
[cevent@hadoop207 datas]$ hadoop fs -put student.txt /student    # upload the file into the directory; an external table pointing at this directory leaves the file in place when the table is dropped

5. Work with the Hive database from Beeline

[cevent@hadoop207 ~]$ cd /opt/module/hive-1.2.1/


[cevent@hadoop207 hive-1.2.1]$ bin/beeline    # start the Beeline client
Beeline version 1.2.1 by Apache Hive
beeline> !connect jdbc:hive2://hadoop.cevent.com:10000
Connecting to jdbc:hive2://hadoop.cevent.com:10000
Enter username for jdbc:hive2://hadoop.cevent.com:10000: cevent
Enter password for jdbc:hive2://hadoop.cevent.com:10000: ******
Error: Could not open client transport with JDBC Uri: jdbc:hive2://hadoop.cevent.com:10000: java.net.UnknownHostException: hadoop.cevent.com (state=08S01,code=0)
0: jdbc:hive2://hadoop.cevent.com:10000 (closed)> !connect jdbc:hive2://hadoop207.cevent.com:10000
Connecting to jdbc:hive2://hadoop207.cevent.com:10000
Enter username for jdbc:hive2://hadoop207.cevent.com:10000: cevent
Enter password for jdbc:hive2://hadoop207.cevent.com:10000: ******
Connected to: Apache Hive (version 1.2.1)
Driver: Hive JDBC (version 1.2.1)
Transaction isolation: TRANSACTION_REPEATABLE_READ
1: jdbc:hive2://hadoop207.cevent.com:10000> show databases;
+----------------+--+
| database_name |
+----------------+--+
| cevent01 |
| default |
+----------------+--+
2 rows selected (2.426 seconds)

1: jdbc:hive2://hadoop207.cevent.com:10000> create table students(id int comment "Identitys") comment "cevent" row format delimited fields terminated by '\t' location '/students' tblproperties("cevent"="echo");
No rows affected (0.437 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> show tables;
+-----------+--+
| tab_name |
+-----------+--+
| student |
| studentd |
| students |
| test |
+-----------+--+
4 rows selected (0.047 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> desc students;
+-----------+------------+------------+--+
| col_name | data_type | comment |
+-----------+------------+------------+--+
| id | int | Identitys |
+-----------+------------+------------+--+
1 row selected (0.136 seconds)

1: jdbc:hive2://hadoop207.cevent.com:10000> desc formatted students;    -- show the table's full details
+-------------------------------+-------------------------------------------------------------+-----------------------+--+
| col_name | data_type | comment |
+-------------------------------+-------------------------------------------------------------+-----------------------+--+
| # col_name | data_type | comment |
| | NULL | NULL |
| id | int | Identitys |
| | NULL | NULL |
| # Detailed Table Information | NULL | NULL |
| Database: | default | NULL |
| Owner: | cevent | NULL |
| CreateTime: | Sun May 17 13:39:43 CST 2020 | NULL |
| LastAccessTime: | UNKNOWN | NULL |
| Protect Mode: | None | NULL |
| Retention: | 0 | NULL |
| Location: | hdfs://hadoop207.cevent.com:8020/students | NULL |
| Table Type: | MANAGED_TABLE | NULL |    -- a managed table
| Table Parameters: | NULL | NULL |
| | cevent | echo |
| | comment | cevent |
| | transient_lastDdlTime | 1589693983 |
| | NULL | NULL |
| # Storage Information | NULL | NULL |
| SerDe Library: | org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe | NULL |
| InputFormat: | org.apache.hadoop.mapred.TextInputFormat | NULL |
| OutputFormat: | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | NULL |
| Compressed: | No | NULL |
| Num Buckets: | -1 | NULL |
| Bucket Columns: | [] | NULL |
| Sort Columns: | [] | NULL |
| Storage Desc Params: | NULL | NULL |
| | field.delim | \t |
| | serialization.format | \t |
+-------------------------------+-------------------------------------------------------------+-----------------------+--+
29 rows selected (0.162 seconds)

1: jdbc:hive2://hadoop207.cevent.com:10000> drop table student;    -- drop the table
No rows affected (1.002 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> drop table studentd;
No rows affected (0.144 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> show databases;
+----------------+--+
| database_name |
+----------------+--+
| cevent01 |
| default |
+----------------+--+
2 rows selected (0.031 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> desc students;
+-----------+------------+------------+--+
| col_name | data_type | comment |
+-----------+------------+------------+--+
| id | int | Identitys |
+-----------+------------+------------+--+
1 row selected (0.139 seconds)

1: jdbc:hive2://hadoop207.cevent.com:10000> create external table student2(id int);    -- create an external (non-managed) table
No rows affected (0.09 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> desc formatted student2;    -- inspect the table
+-------------------------------+----------------------------------------------------------------+-----------------------+--+
| col_name | data_type | comment |
+-------------------------------+----------------------------------------------------------------+-----------------------+--+
| # col_name | data_type | comment |
| | NULL | NULL |
| id | int | |
| | NULL | NULL |
| # Detailed Table Information | NULL | NULL |
| Database: | default | NULL |
| Owner: | cevent | NULL |
| CreateTime: | Sun May 17 13:44:42 CST 2020 | NULL |
| LastAccessTime: | UNKNOWN | NULL |
| Protect Mode: | None | NULL |
| Retention: | 0 | NULL |
| Location: | hdfs://hadoop207.cevent.com:8020/user/hive/warehouse/student2 | NULL |
| Table Type: | EXTERNAL_TABLE | NULL |    -- an external table
| Table Parameters: | NULL | NULL |
| | EXTERNAL | TRUE |
| | transient_lastDdlTime | 1589694282 |
| | NULL | NULL |
| # Storage Information | NULL | NULL |
| SerDe Library: | org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe | NULL |
| InputFormat: | org.apache.hadoop.mapred.TextInputFormat | NULL |
| OutputFormat: | org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat | NULL |
| Compressed: | No | NULL |
| Num Buckets: | -1 | NULL |
| Bucket Columns: | [] | NULL |
| Sort Columns: | [] | NULL |
| Storage Desc Params: | NULL | NULL |
| | serialization.format | 1 |
+-------------------------------+----------------------------------------------------------------+-----------------------+--+
27 rows selected (0.113 seconds)

1: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/student.txt' into table students;    -- load the local file into the table
INFO : Loading data to table default.students from file:/opt/module/datas/student.txt
INFO : Table default.students stats: [numFiles=1, totalSize=12]
No rows affected (2.287 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/student.txt' into table student2;
INFO : Loading data to table default.student2 from file:/opt/module/datas/student.txt
INFO : Table default.student2 stats: [numFiles=1, totalSize=165]
No rows affected (0.39 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> select * from students;
+--------------+--+
| students.id |
+--------------+--+

| 1001 |

| 1002 |

| 1003 |

| 1004 |

| 1005 |

| 1006 |

| 1007 |

| 1008 |

| 1009 |

| 1010 |

| 1011 |

| 1012 |

| 1013 |

| 1014 |

| 1015 |

| 1016 |

+--------------+--+

16 rows selected (0.733 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> select * from student2;

+--------------+--+

| student2.id |

+--------------+--+

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

| NULL |

+--------------+--+

16 rows selected (0.104 seconds)
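
Why does student2 return only NULL while students returns the ids from the same file? students was created with fields terminated by '\t', whereas student2 was created without a ROW FORMAT clause, so Hive falls back to its default field separator, the Ctrl-A character ('\001'). The file is tab-separated, so for student2 the whole line is one field, which cannot be read as an int and is shown as NULL. The Python sketch below imitates that behaviour; the function read_int_column is a made-up helper, not Hive code.

```python
def read_int_column(line, field_sep):
    """Return the first field as an int, or None (shown as NULL) if it does not parse."""
    first = line.split(field_sep)[0]
    try:
        return int(first)
    except ValueError:
        return None


line = "1001\tss1"                       # one tab-separated line of student.txt
as_students = read_int_column(line, "\t")     # separator matches the file
as_student2 = read_int_column(line, "\x01")   # default Ctrl-A separator does not
print(as_students, as_student2)
```

Declaring the separator that the file really uses, as the later tables with fields terminated by '\t' do, avoids the NULL column.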

1: jdbc:hive2://hadoop207.cevent.com:10000> create external table student3(id int,name string) row format delimited fields terminated by '\t' location '/students' tblproperties("cevent"="apa");    -- create an external table
No rows affected (0.199 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/student.txt' into table student3;    -- load the data into the external table
INFO : Loading data to table default.student3 from file:/opt/module/datas/student.txt
INFO : Table default.student3 stats: [numFiles=0, numRows=0, totalSize=0, rawDataSize=0]
No rows affected (0.268 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> select * from student3;

+--------------+----------------+--+

| student3.id | student3.name |

+--------------+----------------+--+

| 1001 | ss1 |

| 1002 | ss2 |

| 1003 | ss3 |

| 1004 | ss4 |

| 1005 | ss5 |

| 1006 | ss6 |

| 1007 | ss7 |

| 1008 | ss8 |

| 1009 | ss9 |

| 1010 | ss10 |

| 1011 | ss11 |

| 1012 | ss12 |

| 1013 | ss13 |

| 1014 | ss14 |

| 1015 | ss15 |

| 1016 | ss16 |

| 1001 | ss1 |

| 1002 | ss2 |

| 1003 | ss3 |

| 1004 | ss4 |

| 1005 | ss5 |

| 1006 | ss6 |

| 1007 | ss7 |

| 1008 | ss8 |

| 1009 | ss9 |

| 1010 | ss10 |

| 1011 | ss11 |

| 1012 | ss12 |

| 1013 | ss13 |

| 1014 | ss14 |

| 1015 | ss15 |

| 1016 | ss16 |

+--------------+----------------+--+

32 rows selected (0.112 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> create external table student4(id int,name string) row format delimited fields terminated by '\t' location '/students' tblproperties("cevent"="papi");
No rows affected (0.46 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> select * from student4;

+--------------+----------------+--+

| student4.id | student4.name |

+--------------+----------------+--+

| 1001 | ss1 |

| 1002 | ss2 |

| 1003 | ss3 |

| 1004 | ss4 |

| 1005 | ss5 |

| 1006 | ss6 |

| 1007 | ss7 |

| 1008 | ss8 |

| 1009 | ss9 |

| 1010 | ss10 |

| 1011 | ss11 |

| 1012 | ss12 |

| 1013 | ss13 |

| 1014 | ss14 |

| 1015 | ss15 |

| 1016 | ss16 |

| 1001 | ss1 |

| 1002 | ss2 |

| 1003 | ss3 |

| 1004 | ss4 |

| 1005 | ss5 |

| 1006 | ss6 |

| 1007 | ss7 |

| 1008 | ss8 |

| 1009 | ss9 |

| 1010 | ss10 |

| 1011 | ss11 |

| 1012 | ss12 |

| 1013 | ss13 |

| 1014 | ss14 |

| 1015 | ss15 |

| 1016 | ss16 |

+--------------+----------------+--+

32 rows selected (0.153 seconds)
1: jdbc:hive2://hadoop207.cevent.com:10000> alter table student4 set tblproperties('EXTERNAL'='TRUE');    -- this property turns a managed table into an external one (student4 was already created as external)
No rows affected (0.161 seconds)
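
The EXTERNAL property matters most when a table is dropped: for a managed table Hive removes both the metadata and the data directory, while for an external table it removes only the metadata and leaves the files where they are. The following Python sketch models that difference with local temporary directories. It is a toy model under stated assumptions: the dictionary called metastore, the function drop_table, and the table names are all invented for illustration.

```python
import os
import shutil
import tempfile


def drop_table(metastore, name):
    """Drop a table: always forget its metadata, delete data only if managed."""
    table = metastore.pop(name)               # metadata is removed in both cases
    if not table["external"]:
        shutil.rmtree(table["location"])      # managed table: the data goes too


# Two toy tables, each with one data file in its own directory.
metastore = {}
for name, external in (("managed_t", False), ("external_t", True)):
    location = tempfile.mkdtemp()
    with open(os.path.join(location, "student.txt"), "w") as f:
        f.write("1001\tss1\n")
    metastore[name] = {"location": location, "external": external}

managed_dir = metastore["managed_t"]["location"]
external_dir = metastore["external_t"]["location"]
drop_table(metastore, "managed_t")
drop_table(metastore, "external_t")
print(os.path.exists(managed_dir), os.path.exists(external_dir))
```

This is why several external tables can safely share one location such as /students: dropping any of them leaves the shared files untouched.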

6. Transfer the base Hive data with FZ

7. Store the data on HDFS

8. Kill a process with kill -9

[cevent@hadoop207 hadoop-2.7.2]$ jps

4857 Jps

4174 ResourceManager

[cevent@hadoop207 hadoop-2.7.2]$ kill -9 4174

[cevent@hadoop207 hadoop-2.7.2]$ jps

4872 Jps

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-dfs.sh

Starting namenodes on [hadoop207.cevent.com]
hadoop207.cevent.com: starting namenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-namenode-hadoop207.cevent.com.out
hadoop207.cevent.com: starting datanode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-datanode-hadoop207.cevent.com.out
Starting secondary namenodes [hadoop207.cevent.com]
hadoop207.cevent.com: starting secondarynamenode, logging to /opt/module/hadoop-2.7.2/logs/hadoop-cevent-secondarynamenode-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-resourcemanager-hadoop207.cevent.com.out
hadoop207.cevent.com: starting nodemanager, logging to /opt/module/hadoop-2.7.2/logs/yarn-cevent-nodemanager-hadoop207.cevent.com.out

[cevent@hadoop207 hadoop-2.7.2]$ jps

5144 DataNode

5007 NameNode

5499 ResourceManager

5925 Jps

5613 NodeManager

5325 SecondaryNameNode

9. Query data by partition

[cevent@hadoop207 ~]$ cd /opt/module/hive-1.2.1/


[cevent@hadoop207 hive-1.2.1]$ bin/beeline
Beeline version 1.2.1 by Apache Hive
beeline> !connect jdbc:hive2://hadoop207.cevent.com:10000
Connecting to jdbc:hive2://hadoop207.cevent.com:10000
Enter username for jdbc:hive2://hadoop207.cevent.com:10000: cevent
Enter password for jdbc:hive2://hadoop207.cevent.com:10000: ******
Connected to: Apache Hive (version 1.2.1)
Driver: Hive JDBC (version 1.2.1)
Transaction isolation: TRANSACTION_REPEATABLE_READ
0: jdbc:hive2://hadoop207.cevent.com:10000> create table c_dept_partition1(    -- create a partitioned table
0: jdbc:hive2://hadoop207.cevent.com:10000> deptno int, dname string, loc string
0: jdbc:hive2://hadoop207.cevent.com:10000> )
0: jdbc:hive2://hadoop207.cevent.com:10000> partitioned by (month string)
0: jdbc:hive2://hadoop207.cevent.com:10000> row format delimited fields terminated by '\t';
No rows affected (0.704 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/dept.txt' into table c_dept_partition1 partition(month='202005');    -- load the data into one partition
INFO : Loading data to table default.c_dept_partition1 partition (month=202005) from file:/opt/module/datas/dept.txt
INFO : Partition default.c_dept_partition1{month=202005} stats: [numFiles=1, numRows=0, totalSize=71, rawDataSize=0]
No rows affected (1.701 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/dept.txt' into table c_dept_partition1 partition(month='202006');
INFO : Loading data to table default.c_dept_partition1 partition (month=202006) from file:/opt/module/datas/dept.txt
INFO : Partition default.c_dept_partition1{month=202006} stats: [numFiles=1, numRows=0, totalSize=71, rawDataSize=0]
No rows affected (0.496 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/dept.txt' into table c_dept_partition1 partition(month='202007');
INFO : Loading data to table default.c_dept_partition1 partition (month=202007) from file:/opt/module/datas/dept.txt
INFO : Partition default.c_dept_partition1{month=202007} stats: [numFiles=1, numRows=0, totalSize=71, rawDataSize=0]
No rows affected (0.465 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition1;    -- query the rows of every partition
+---------------------------+--------------------------+------------------------+--------------------------+--+
| c_dept_partition1.deptno | c_dept_partition1.dname | c_dept_partition1.loc | c_dept_partition1.month |
+---------------------------+--------------------------+------------------------+--------------------------+--+
| 10 | ACCOUNTING | 1700 | 202005 |
| 20 | RESEARCH | 1800 | 202005 |
| 30 | SALES | 1900 | 202005 |
| 40 | OPERATIONS | 1700 | 202005 |
| 10 | ACCOUNTING | 1700 | 202006 |
| 20 | RESEARCH | 1800 | 202006 |
| 30 | SALES | 1900 | 202006 |
| 40 | OPERATIONS | 1700 | 202006 |
| 10 | ACCOUNTING | 1700 | 202007 |
| 20 | RESEARCH | 1800 | 202007 |
| 30 | SALES | 1900 | 202007 |
| 40 | OPERATIONS | 1700 | 202007 |
+---------------------------+--------------------------+------------------------+--------------------------+--+
12 rows selected (0.648 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition where deptno=10;
Error: Error while compiling statement: FAILED: SemanticException [Error 10001]: Line 1:14 Table not found 'c_dept_partition' (state=42S02,code=10001)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition1 where deptno=10;    -- ordinary filter on a data column: every partition is scanned, so it is less efficient

+---------------------------+--------------------------+------------------------+--------------------------+--+

| c_dept_partition1.deptno | c_dept_partition1.dname | c_dept_partition1.loc | c_dept_partition1.month |

+---------------------------+--------------------------+------------------------+--------------------------+--+

| 10 | ACCOUNTING | 1700 | 202005 |

| 10 | ACCOUNTING | 1700 | 202006 |

| 10 | ACCOUNTING | 1700 | 202007 |

+---------------------------+--------------------------+------------------------+--------------------------+--+

3 rows selected (0.545 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition1 where month='202006';    -- filter on the partition column: only that partition is read, so it is efficient

+---------------------------+--------------------------+------------------------+--------------------------+--+

| c_dept_partition1.deptno | c_dept_partition1.dname | c_dept_partition1.loc | c_dept_partition1.month |

+---------------------------+--------------------------+------------------------+--------------------------+--+

| 10 | ACCOUNTING | 1700 | 202006 |

| 20 | RESEARCH | 1800 | 202006 |

| 30 | SALES | 1900 | 202006 |

| 40 | OPERATIONS | 1700 | 202006 |

+---------------------------+--------------------------+------------------------+--------------------------+--+

4 rows selected (0.271 seconds)
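
The difference between the two queries above comes from the storage layout: each partition is its own directory (month=202005, month=202006, ...), so a filter on the partition column lets whole directories be skipped, while a filter on an ordinary column has to read all of them. The Python sketch below imitates this on the local file system. It is a toy model, not Hive's planner; load_partition, scan, and the shortened two-row sample data are made up for illustration.

```python
import os
import tempfile


def load_partition(table_dir, month, rows):
    """Write rows into the month=<value> subdirectory, as a partitioned load would."""
    part_dir = os.path.join(table_dir, f"month={month}")
    os.makedirs(part_dir, exist_ok=True)
    with open(os.path.join(part_dir, "dept.txt"), "w") as f:
        f.writelines(r + "\n" for r in rows)


def scan(table_dir, month=None):
    """Read rows; when month is given, open only that partition's directory."""
    rows, dirs_read = [], 0
    for name in sorted(os.listdir(table_dir)):
        value = name.split("=", 1)[1]
        if month is not None and value != month:
            continue                      # pruned: this directory is never opened
        dirs_read += 1
        with open(os.path.join(table_dir, name, "dept.txt")) as f:
            rows += [line.rstrip("\n") + "\t" + value for line in f]
    return rows, dirs_read


table_dir = tempfile.mkdtemp()
dept = ["10\tACCOUNTING\t1700", "20\tRESEARCH\t1800"]
for m in ("202005", "202006", "202007"):
    load_partition(table_dir, m, dept)

all_rows, read_all = scan(table_dir)                   # like where deptno=10
by_column = [r for r in all_rows if r.startswith("10\t")]
by_month, read_one = scan(table_dir, month="202006")   # like where month='202006'
print(read_all, read_one)
```

The partition value is not stored in the data file; it is taken from the directory name and appended as an extra column, which matches the month column in the query results.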

0: jdbc:hive2://hadoop207.cevent.com:10000> alter table c_dept_partition1 add partition(month='202005');    -- fails: the partition already exists
Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. AlreadyExistsException(message:Partition already exists: Partition(values:[202005], dbName:default, tableName:c_dept_partition1, createTime:0, lastAccessTime:0, sd:StorageDescriptor(cols:[FieldSchema(name:deptno, type:int, comment:null), FieldSchema(name:dname, type:string, comment:null), FieldSchema(name:loc, type:string, comment:null)], location:null, inputFormat:org.apache.hadoop.mapred.TextInputFormat, outputFormat:org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat, compressed:false, numBuckets:-1, serdeInfo:SerDeInfo(name:null, serializationLib:org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, parameters:{field.delim= , serialization.format= }), bucketCols:[], sortCols:[], parameters:{}, skewedInfo:SkewedInfo(skewedColNames:[], skewedColValues:[], skewedColValueLocationMaps:{}), storedAsSubDirectories:false), parameters:null)) (state=08S01,code=1)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter table c_dept_partition1 add partition(month='202008');    -- add a single partition
No rows affected (0.136 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> alter table c_dept_partition1 add partition(month='202009') partition(month='202010');    -- add several partitions (separated by spaces, no commas)
No rows affected (0.168 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> alter table c_dept_partition1 drop partition(month='202008');    -- drop a single partition
INFO : Dropped the partition month=202008
No rows affected (0.677 seconds)
0: jdbc:hive2://hadoop207.cevent.com:10000> alter table c_dept_partition1 drop partition(month='202009'),partition(month='202010');    -- drop several partitions (separated by commas)
INFO : Dropped the partition month=202009
INFO : Dropped the partition month=202010
No rows affected (0.266 seconds)

10. List partitions

11. Two-level partition SQL explained

hive (default)> create table partition2(
deptno int, dname string, locations string
)
partitioned by (month string, day string)
row format delimited fields terminated by '\t';

12. Manually add partition directories on HDFS

[cevent@hadoop207 ~]$ cd /opt/module/datas/


[cevent@hadoop207 datas]$ hadoop fs -mkdir /user/hive/warehouse/partition2/month=202019/day=20  # create the partition directory directly on HDFS

[cevent@hadoop207 datas]$ hadoop fs -mkdir /user/hive/warehouse/partition3/month=202019/day=22  # wrong table name (partition3), so the parent directory does not exist

mkdir: `/user/hive/warehouse/partition3/month=202019/day=22': No such file or directory

[cevent@hadoop207 datas]$ hadoop fs -mkdir /user/hive/warehouse/partition2/month=202019/day=22
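
Each level of a two-level partition is one directory, so the partition `month=202019, day=22` of `partition2` lives under `month=202019/day=22` inside the table directory. The sketch below builds that path. `partition_dir` is a hypothetical helper, and the warehouse root is taken from the commands above. A directory created this way is not registered in the metastore by itself; the later `msck repair table` step in this walkthrough takes care of that.

```shell
#!/bin/sh
WAREHOUSE=/user/hive/warehouse   # warehouse root used in the commands above

# Hypothetical helper: map a table name and partition values to the HDFS directory.
partition_dir() {
    printf '%s/%s/month=%s/day=%s\n' "$WAREHOUSE" "$1" "$2" "$3"
}

dir=$(partition_dir partition2 202019 22)
echo "$dir"

# On the cluster one could then run (not executed here); -p also creates
# any missing parent directories:
# hadoop fs -mkdir -p "$dir"
```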

13. Two-level partitions and union queries

[cevent@hadoop207 ~]$ cd /opt/module/hive-1.2.1/

[cevent@hadoop207 hive-1.2.1]$ ll

total 524

drwxrwxr-x. 3 cevent cevent 4096 Apr 30 15:59 bin

drwxrwxr-x. 2 cevent cevent 4096 May 9 18:40 conf

-rw-rw-r--. 1 cevent cevent 21062 Apr 30 16:44 derby.log

drwxrwxr-x. 4 cevent cevent 4096 Apr 30 15:59 examples

drwxrwxr-x. 7 cevent cevent 4096 Apr 30 15:59 hcatalog

-rw-rw-r--. 1 cevent cevent 23 May 9 13:37 hive01.sql

drwxrwxr-x. 4 cevent cevent 4096 May 7 13:51 lib

-rw-rw-r--. 1 cevent cevent 24754 Apr 30 2015 LICENSE

drwxrwxr-x. 2 cevent cevent 4096 May 19 13:18 logs

drwxrwxr-x. 5 cevent cevent 4096 Apr 30 16:44 metastore_db

-rw-rw-r--. 1 cevent cevent 397 Jun 19 2015 NOTICE

-rw-rw-r--. 1 cevent cevent 4366 Jun 19 2015 README.txt

-rw-rw-r--. 1 cevent cevent 421129 Jun 19 2015 RELEASE_NOTES.txt

-rw-rw-r--. 1 cevent cevent 11 May 9 13:27 result.txt

drwxrwxr-x. 3 cevent cevent 4096 Apr 30 15:59 scripts

-rw-rw-r--. 1 cevent cevent 171 May 9 13:24 server.log

-rw-rw-r--. 1 cevent cevent 5 May 8 14:05 server.pid

[cevent@hadoop207 hive-1.2.1]$ bin/beeline

Beeline version 1.2.1 by Apache Hive

beeline> !connect jdbc:hive2://hadoop207.cevent.com:10000

Connecting to jdbc:hive2://hadoop207.cevent.com:10000

Enter username for jdbc:hive2://hadoop207.cevent.com:10000: cevent

Enter password for jdbc:hive2://hadoop207.cevent.com:10000: ******

Connected to: Apache Hive (version 1.2.1)

Driver: Hive JDBC (version 1.2.1)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Union query across three partitions:

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition1 where month='202005'

0: jdbc:hive2://hadoop207.cevent.com:10000> union

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition1 where month='202006'

0: jdbc:hive2://hadoop207.cevent.com:10000> union

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from c_dept_partition1 where month='202007';

INFO : Number of reduce tasks not specified. Estimated from input data size: 1

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:2

INFO : Submitting tokens for job: job_1589865507035_0001

INFO : The url to track the job: http://hadoop207.cevent.com:8088/proxy/application_1589865507035_0001/

INFO : Starting Job = job_1589865507035_0001, Tracking URL = http://hadoop207.cevent.com:8088/proxy/application_1589865507035_0001/

INFO : Kill Command = /opt/module/hadoop-2.7.2/bin/hadoop job -kill job_1589865507035_0001

INFO : Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

INFO : 2020-05-19 13:23:01,308 Stage-1 map = 0%, reduce = 0%

INFO : 2020-05-19 13:23:35,718 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.85 sec

INFO : 2020-05-19 13:23:46,936 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 4.49 sec

INFO : MapReduce Total cumulative CPU time: 4 seconds 490 msec

INFO : Ended Job = job_1589865507035_0001

INFO : Number of reduce tasks not specified. Estimated from input data size: 1

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=<number>

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=<number>

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=<number>

INFO : number of splits:2

INFO : Submitting tokens for job: job_1589865507035_0002

INFO : The url to track the job: http://hadoop207.cevent.com:8088/proxy/application_1589865507035_0002/

INFO : Starting Job = job_1589865507035_0002, Tracking URL = http://hadoop207.cevent.com:8088/proxy/application_1589865507035_0002/

INFO : Kill Command = /opt/module/hadoop-2.7.2/bin/hadoop job -kill job_1589865507035_0002

INFO : Hadoop job information for Stage-2: number of mappers: 2; number of reducers: 1

INFO : 2020-05-19 13:24:01,092 Stage-2 map = 0%, reduce = 0%

INFO : 2020-05-19 13:24:32,152 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 8.2 sec

INFO : 2020-05-19 13:24:45,251 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 10.02 sec

INFO : MapReduce Total cumulative CPU time: 10 seconds 20 msec

+-------------+-------------+----------+------------+--+

| _u3.deptno | _u3.dname | _u3.loc | _u3.month |

+-------------+-------------+----------+------------+--+

| 10 | ACCOUNTING | 1700 | 202005 |

| 10 | ACCOUNTING | 1700 | 202006 |

| 10 | ACCOUNTING | 1700 | 202007 |

| 20 | RESEARCH | 1800 | 202005 |

| 20 | RESEARCH | 1800 | 202006 |

| 20 | RESEARCH | 1800 | 202007 |

| 30 | SALES | 1900 | 202005 |

| 30 | SALES | 1900 | 202006 |

| 30 | SALES | 1900 | 202007 |

| 40 | OPERATIONS | 1700 | 202005 |

| 40 | OPERATIONS | 1700 | 202006 |

| 40 | OPERATIONS | 1700 | 202007 |

+-------------+-------------+----------+------------+--+

12 rows selected (138.67 seconds)

INFO : Ended Job = job_1589865507035_0002
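
The union above launched two MapReduce jobs and took over two minutes for 12 rows. Because all three branches read the same table, a single filter on the partition column should return the same rows here, assuming the rows inside each partition are already distinct (`union` without `all` removes duplicates, a plain filter does not). The sketch below only builds such a query. `months_query` is a hypothetical helper, and the `beeline` call is left commented out.

```shell
#!/bin/sh
# Hypothetical helper: print one query that filters several month partitions.
months_query() {
    table=$1; shift
    list=""
    for m in "$@"; do
        # Append a comma only when the list already has an entry.
        list="${list:+$list,}'$m'"
    done
    printf 'select * from %s where month in (%s);\n' "$table" "$list"
}

query=$(months_query c_dept_partition1 202005 202006 202007)
echo "$query"

# Could be run non-interactively on the cluster, for example (not executed here):
# beeline -u jdbc:hive2://hadoop207.cevent.com:10000 -n cevent -e "$query"
```

Row order may differ from the union result, since neither query specifies an ordering.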

0: jdbc:hive2://hadoop207.cevent.com:10000> create table c_dept_partition2()

0: jdbc:hive2://hadoop207.cevent.com:10000> show databses;

Error: Error while compiling statement: FAILED: ParseException line 1:31 cannot recognize input near ')' 'show' 'databses' in column specification (state=42000,code=40000)

0: jdbc:hive2://hadoop207.cevent.com:10000> show databases;

+----------------+--+

| database_name |

+----------------+--+

| cevent01 |

| default |

+----------------+--+

2 rows selected (1.404 seconds)

Create a two-level partitioned table:

0: jdbc:hive2://hadoop207.cevent.com:10000> create table partition2(

0: jdbc:hive2://hadoop207.cevent.com:10000> deptno int,dname string,locations string

0: jdbc:hive2://hadoop207.cevent.com:10000> )

0: jdbc:hive2://hadoop207.cevent.com:10000> partitioned by(month string,day string)

0: jdbc:hive2://hadoop207.cevent.com:10000> row format delimited fields terminated by '\t';

No rows affected (1.998 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/dept.txt' into table partition2 partition(month=202019,day=19);  -- load data into a two-level partition

INFO : Loading data to table default.partition2 partition (month=202019, day=19) from file:/opt/module/datas/dept.txt

INFO : Partition default.partition2{month=202019, day=19} stats: [numFiles=1, numRows=0, totalSize=71, rawDataSize=0]

No rows affected (2.216 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from partition2 where month='202019' and day='19';

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| partition2.deptno | partition2.dname | partition2.locations | partition2.month | partition2.day |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| 10 | ACCOUNTING | 1700 | 202019 | 19 |

| 20 | RESEARCH | 1800 | 202019 | 19 |

| 30 | SALES | 1900 | 202019 | 19 |

| 40 | OPERATIONS | 1700 | 202019 | 19 |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

4 rows selected (0.776 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from partition2;

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| partition2.deptno | partition2.dname | partition2.locations | partition2.month | partition2.day |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| 10 | ACCOUNTING | 1700 | 202019 | 19 |

| 20 | RESEARCH | 1800 | 202019 | 19 |

| 30 | SALES | 1900 | 202019 | 19 |

| 40 | OPERATIONS | 1700 | 202019 | 19 |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

4 rows selected (0.321 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter table partition2 add partition(month="202019",day="20");  -- add a partition

No rows affected (0.379 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from partition2;

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| partition2.deptno | partition2.dname | partition2.locations | partition2.month | partition2.day |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| 10 | ACCOUNTING | 1700 | 202019 | 19 |

| 20 | RESEARCH | 1800 | 202019 | 19 |

| 30 | SALES | 1900 | 202019 | 19 |

| 40 | OPERATIONS | 1700 | 202019 | 19 |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

4 rows selected (0.202 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter table partition2 add partition(month="202019",day="21");

No rows affected (0.167 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> select * from partition2;

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| partition2.deptno | partition2.dname | partition2.locations | partition2.month | partition2.day |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

| 10 | ACCOUNTING | 1700 | 202019 | 19 |

| 20 | RESEARCH | 1800 | 202019 | 19 |

| 30 | SALES | 1900 | 202019 | 19 |

| 40 | OPERATIONS | 1700 | 202019 | 19 |

+--------------------+-------------------+-----------------------+-------------------+-----------------+--+

4 rows selected (0.165 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> msck repair table partition2;  -- repair the table so partition directories created on HDFS are registered

No rows affected (0.24 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> show partitions partition2;

+----------------------+--+

| partition |

+----------------------+--+

| month=202019/day=19 |

| month=202019/day=20 |

| month=202019/day=21 |

| month=202019/day=22 |

+----------------------+--+

4 rows selected (0.14 seconds)
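
Note why `day=22` appears only now: that directory was created with `hadoop fs -mkdir`, so the metastore had no record of it until `msck repair table` scanned the table directory. The following is a local simulation of that kind of scan, not Hive's implementation. A temporary local directory stands in for the HDFS table directory, and `list_partition_dirs` is a hypothetical helper.

```shell
#!/bin/sh
# Local simulation only: a temp directory stands in for the HDFS table directory.
table_dir=$(mktemp -d)
for d in 19 20 21 22; do
    mkdir -p "$table_dir/month=202019/day=$d"
done

# Hypothetical helper: list the partition specs implied by the directory layout.
list_partition_dirs() {
    (
        cd "$1" || exit 1
        for p in month=*/day=*; do
            # Skip the unexpanded pattern when nothing matches.
            [ -d "$p" ] && echo "$p"
        done
    )
}

list_partition_dirs "$table_dir"
rm -rf "$table_dir"
```

The printed names have the same `month=.../day=...` form as the rows returned by `show partitions`.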

14. Altering tables and creating databases

[cevent@hadoop207 hive-1.2.1]$ bin/beeline

Beeline version 1.2.1 by Apache Hive

beeline> !connect jdbc:hive2://hadoop207.cevent.com:10000

Connecting to jdbc:hive2://hadoop207.cevent.com:10000

Enter username for jdbc:hive2://hadoop207.cevent.com:10000: cevent

Enter password for jdbc:hive2://hadoop207.cevent.com:10000: ******

Connected to: Apache Hive (version 1.2.1)

Driver: Hive JDBC (version 1.2.1)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hadoop207.cevent.com:10000> show partitions partition2;  -- show partitions

+----------------------+--+

| partition |

+----------------------+--+

| month=202019/day=19 |

| month=202019/day=20 |

| month=202019/day=21 |

| month=202019/day=22 |

+----------------------+--+

4 rows selected (1.813 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> desc partition2;  -- describe the partitioned table

+--------------------------+-----------------------+-----------------------+--+

| col_name | data_type | comment |

+--------------------------+-----------------------+-----------------------+--+

| deptno | int | |

| dname | string | |

| locations | string | |

| month | string | |

| day | string | |

| | NULL | NULL |

| # Partition Information | NULL | NULL |

| # col_name | data_type | comment |

| | NULL | NULL |

| month | string | |

| day | string | |

+--------------------------+-----------------------+-----------------------+--+

11 rows selected (0.235 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter table partition2 replace columns(deptno string,dname string,locations string);  -- replace the table's columns

No rows affected (0.376 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> desc partition2;

+--------------------------+-----------------------+-----------------------+--+

| col_name | data_type | comment |

+--------------------------+-----------------------+-----------------------+--+

| deptno | string | |

| dname | string | |

| locations | string | |

| month | string | |

| day | string | |

| | NULL | NULL |

| # Partition Information | NULL | NULL |

| # col_name | data_type | comment |

| | NULL | NULL |

| month | string | |

| day | string | |

+--------------------------+-----------------------+-----------------------+--+

11 rows selected (0.115 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> alter table partition2 replace columns(deptno int);

No rows affected (0.159 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> desc partition2;

+--------------------------+-----------------------+-----------------------+--+

| col_name | data_type | comment |

+--------------------------+-----------------------+-----------------------+--+

| deptno | int | |

| month | string | |

| day | string | |

| | NULL | NULL |

| # Partition Information | NULL | NULL |

| # col_name | data_type | comment |

| | NULL | NULL |

| month | string | |

| day | string | |

+--------------------------+-----------------------+-----------------------+--+

9 rows selected (0.112 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> create database ceventdir location "/ceventdata";  -- create a database in a specified HDFS directory

No rows affected (0.189 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> use ceventdir;  -- switch to the database

No rows affected (0.035 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> create table student01(id int);  -- create a table

No rows affected (0.184 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> create database ceventdir2;  -- create a database in the default location, under warehouse

No rows affected (0.058 seconds)

Default directory link: http://hadoop207.cevent.com:50070/explorer.html#/user/hive/warehouse

0: jdbc:hive2://hadoop207.cevent.com:10000> desc database ceventdir2;

+-------------+----------+---------------------------------------------------------------------+-------------+-------------+-------------+--+

| db_name | comment | location | owner_name | owner_type | parameters |

+-------------+----------+---------------------------------------------------------------------+-------------+-------------+-------------+--+

| ceventdir2 | | hdfs://hadoop207.cevent.com:8020/user/hive/warehouse/ceventdir2.db | cevent | USER | |

+-------------+----------+---------------------------------------------------------------------+-------------+-------------+-------------+--+

1 row selected (0.042 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> desc database ceventdir;

+------------+----------+----------------------------------------------+-------------+-------------+-------------+--+

| db_name | comment | location | owner_name | owner_type | parameters |

+------------+----------+----------------------------------------------+-------------+-------------+-------------+--+

| ceventdir | | hdfs://hadoop207.cevent.com:8020/ceventdata | cevent | USER | |

+------------+----------+----------------------------------------------+-------------+-------------+-------------+--+

1 row selected (0.04 seconds)
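
The two `desc database` results show the rule at work: without a `location` clause the database directory is `<warehouse>/<name>.db`, and with one it is exactly the given path. Here is a small sketch of that rule. `db_location` is a hypothetical helper, and the warehouse root is the one visible in the output above.

```shell
#!/bin/sh
WAREHOUSE=/user/hive/warehouse   # warehouse root seen in the output above

# Hypothetical helper: where a database lands, with or without an explicit location.
db_location() {
    name=$1
    location=${2:-}
    if [ -n "$location" ]; then
        echo "$location"
    else
        echo "$WAREHOUSE/$name.db"
    fi
}

db_location ceventdir /ceventdata   # prints /ceventdata
db_location ceventdir2              # prints /user/hive/warehouse/ceventdir2.db
```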

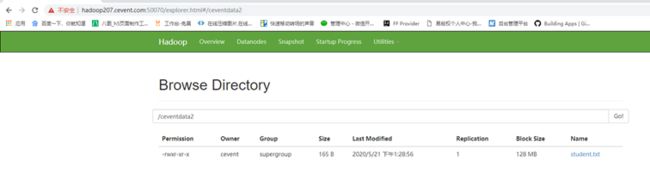

Custom database directory link: http://hadoop207.cevent.com:50070/explorer.html#/ceventdata

0: jdbc:hive2://hadoop207.cevent.com:10000> create table ceventable(id int) location '/ceventdata2';  -- create a table in a specified directory

No rows affected (0.089 seconds)

0: jdbc:hive2://hadoop207.cevent.com:10000> load data local inpath '/opt/module/datas/student.txt' into table ceventable;  -- load data into the table

INFO : Loading data to table ceventdir.ceventable from file:/opt/module/datas/student.txt

INFO : Table ceventdir.ceventable stats: [numFiles=0, totalSize=0]

No rows affected (1.021 seconds)

Access link: http://hadoop207.cevent.com:50070/explorer.html#/ceventdata2