Hive in Practice

1. Create a partitioned Hive table

create table source(

`date` bigint,

event int,

app string,

domain string,

rtype int,

unique_name string,

user_id string,

host_name string,

cluster_info string,

client_isp string,

isp string,

node string,

protocol string,

count bigint,

transcode_count bigint,

transcode_real_kbps bigint,

real_kbps bigint,

in_bytes bigint,

out_bytes bigint,

ntype string,

status_code int,

log_count bigint,

transcode_log_count bigint

)

PARTITIONED BY (

`day` string,

`hour` string,

`m5` string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://hdfs-ha/apps/hive/warehouse/eag.db/source'

TBLPROPERTIES (

'last_modified_by'='hive',

'last_modified_time'='1585206348',

'transient_lastDdlTime'='1585206348');

2. View the CREATE statement of a Hive table

show create table tablename;

3. Writing to a Hive database requires the hive-site.xml configuration file

cat /usr/hdp/2.6.1.0-129/spark2/conf/hive-site.xml

hive.metastore.uris=thrift://xx1:9083,thrift://xx2:9083

Add a hive-site.xml to the project's conf directory, configure the startup script to load it, and set the following property (name, then value):

hive.metastore.uris

thrift://xx1:9083,thrift://xx2:9083
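
As an alternative to shipping hive-site.xml, the same metastore address can usually be passed when the session is built. The sketch below is not the setup used in these notes. It assumes Spark 2.x with Hive support on the classpath and a launch through spark-submit. The object name and application name are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

object HiveSessionSketch {
  def main(args: Array[String]): Unit = {
    // Assumption: started via spark-submit, which supplies the master URL.
    val spark = SparkSession.builder()
      .appName("hive-session-sketch") // hypothetical application name
      // Same value as in hive-site.xml, set here only to show the programmatic route.
      .config("hive.metastore.uris", "thrift://xx1:9083,thrift://xx2:9083")
      .enableHiveSupport()
      .getOrCreate()

    // If the metastore is reachable, this prints the columns of the table from step 1,
    // with the partition columns listed last.
    spark.table("eag.source").printSchema()

    spark.stop()
  }
}
```

If the schema prints, the metastore connection works and later writes fail for other reasons, such as the two errors below.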

4. Runtime error:

org.apache.spark.sql.AnalysisException: `eag`.`source` requires that the data to be inserted have the same number of columns as the target table: target table has 28 column(s) but the inserted data has 25 column(s), including 0 partition column(s) having constant value(s).;

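Cause: the number of fields in the data being written does not match the number of columns in the table.

val hivedf = hiveCtx.read.schema(OFFLINESOURCESCHEMA).json(df)

Recheck that the fields in OFFLINESOURCESCHEMA correspond one-to-one with the columns of the Hive table.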
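
One way to do that check is to diff the two column lists instead of comparing them by eye. In the error above the gap between 28 and 25 is three, which happens to equal the number of partition columns (day, hour, m5). That may mean the data lacks the partition columns, although these notes do not confirm it. The helper below is a minimal sketch: ColumnCheck and its sample column lists are made up for illustration.

```scala
object ColumnCheck {
  // Returns (columns the table expects but the data lacks,
  //          columns the data has but the table does not).
  // Names are lowercased first because Hive stores column names in lowercase.
  def diff(dataCols: Seq[String], tableCols: Seq[String]): (Seq[String], Seq[String]) = {
    val data  = dataCols.map(_.toLowerCase)
    val table = tableCols.map(_.toLowerCase)
    (table.filterNot(c => data.contains(c)), data.filterNot(c => table.contains(c)))
  }

  def main(args: Array[String]): Unit = {
    // Made-up, shortened column lists for illustration only.
    val dataCols  = Seq("date", "event", "app")
    val tableCols = Seq("date", "event", "app", "day", "hour", "m5")
    val (missing, extra) = diff(dataCols, tableCols)
    println(s"missing from data: ${missing.mkString(", ")}")
    println(s"not in table: ${extra.mkString(", ")}")
  }
}
```

Inside the job, the real lists could be passed as ColumnCheck.diff(hivedf.columns.toSeq, hiveCtx.table("eag.source").columns.toSeq), assuming hiveCtx is a HiveContext or SparkSession connected to the metastore.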

5. Permission problem:

Permission denied: user=spark, access=WRITE, inode="/apps/hive/warehouse/eag.db/source/.hive-staging_hive_2020-03-26_16-33-03_236_3911206415334421369-1":hdfs:hdfs:drwxr-xr-x

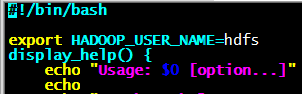

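Cause: the staging directory is owned by hdfs:hdfs with mode drwxr-xr-x, so the spark user has no write access.

Solution: add export HADOOP_USER_NAME=hdfs at the top of the startup script.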
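
If the startup script cannot be changed, the same user name can be set from inside the driver. This is a different route from the export above. It is a sketch only, and it assumes a cluster with simple (non-Kerberos) authentication, where Hadoop reads the HADOOP_USER_NAME environment variable first and then falls back to a JVM system property of the same name. The object name is hypothetical.

```scala
object HadoopUserSketch {
  def main(args: Array[String]): Unit = {
    // Must run before any SparkContext, SparkSession, or Hadoop FileSystem is created,
    // because Hadoop resolves the login user once and then caches it.
    System.setProperty("HADOOP_USER_NAME", "hdfs")

    // Echo the value so the setting can be confirmed in the driver log.
    println(sys.props("HADOOP_USER_NAME"))

    // ... build the SparkSession and write to Hive after this point ...
  }
}
```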