MATLAB-Based License Plate Recognition Using SVM and ANN

- WHY

- HOW

- 1. Input Image

- 2-3. Image Processing

- 4. Identifying the License Plate Rectangle

- 5. Character Segmentation

- 6. Character Recognition

- 7. MATLAB App UI

- ISSUE

WHY

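I have long been keenly interested in computer image recognition, machine learning, and artificial neural networks. After reading about an OpenCV-based implementation pipeline (the original article), I came up with the idea of implementing license plate recognition in MATLAB over the holidays.

I consulted many related articles on CSDN, so this post is both a way of giving back and a summary, and it also gave me something to do while traveling. I am not a programmer by training and my skills are limited, so discussion of any shortcomings is welcome; please overlook the nonstandard code.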

HOW

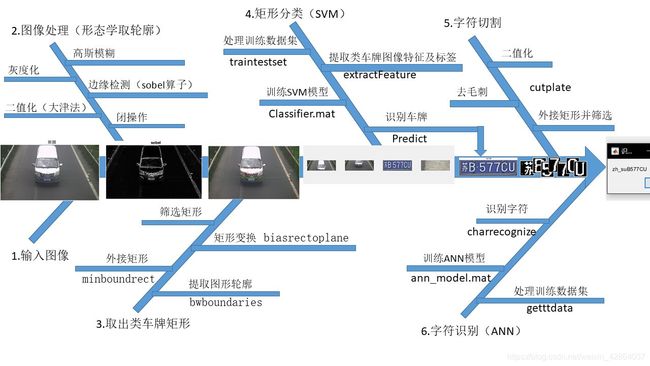

The UI is built as a MATLAB App. The implementation steps and source code are given below.

1. Input Image

global I

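[filename, pathname]=uigetfile({'*.jpg';'*.bmp'}, 'File Selector');

if isequal(filename, 0) % the user cancelled the dialog

return

end

I=imread(fullfile(pathname, filename)); % fullfile inserts the correct path separator

imshow(I, 'Parent', app.UIAxes); % title('Original image');

2-3. Image Processing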

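This part centers on Sobel edge detection and Otsu binarization; the parameters used here can be tuned further. The hard part is drawing the minimum bounding rectangle with the minboundrect function, then extracting and normalizing that rectangle with biasrectoplane (MATLAB's built-in functions did not work well here, so this is an algorithm I designed myself). Finally, the rectangular crops are given a preliminary screening to obtain plate-like rectangles in preparation for SVM classification.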
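As a rough illustration of the Sobel step, the gradient magnitude can also be computed with two convolutions instead of a double loop. This is only a sketch on a synthetic step-edge image (the image and variable names are made up for the example), not the code used in the app:

```matlab
% Sobel gradient magnitude with conv2 (base MATLAB, no toolbox needed).
% The image is a synthetic vertical step edge, used only for illustration.
img = [zeros(5,3), ones(5,3)];      % 5x6 image: dark left half, bright right half

kx = [-1 0 1; -2 0 2; -1 0 1];      % responds to horizontal intensity changes
ky = kx';                           % responds to vertical intensity changes

% 'valid' keeps only pixels whose full 3x3 neighbourhood lies inside the image,
% which corresponds to looping over i = 2:high-1 and j = 2:width-1.
gx = conv2(img, kx, 'valid');
gy = conv2(img, ky, 'valid');
mag = sqrt(gx.^2 + gy.^2);          % 3x4 gradient magnitude

disp(mag);
```

The magnitude is nonzero only in the two columns next to the step, which is the behaviour the thresholding stage relies on. conv2 flips the kernel, so the sign of gx differs from a correlation, but the magnitude is unaffected.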

Main function

global I

global I_cell

G = fspecial('gaussian', [5 5], 2);

I_blur = imfilter(I,G,'same'); % Gaussian blur

imshow(I_blur, 'Parent', app.UIAxes_2);

I_gray = rgb2gray(I_blur); % convert to grayscale

imshow(I_gray, 'Parent', app.UIAxes_3);

[high,width] = size(I_gray); % image height and width

F2 = double(I_gray);

U = double(I_gray);

uSobel = I_gray;

for i = 2:high - 1 % Sobel edge detection

for j = 2:width - 1

Gx = (U(i+1,j-1) + 2*U(i+1,j) + F2(i+1,j+1)) - (U(i-1,j-1) + 2*U(i-1,j) + F2(i-1,j+1));

Gy = (U(i-1,j+1) + 2*U(i,j+1) + F2(i+1,j+1)) - (U(i-1,j-1) + 2*U(i,j-1) + F2(i+1,j-1));

uSobel(i,j) = sqrt(Gx^2 + Gy^2);

end

end

I_sobel= im2uint8(uSobel);

imshow(I_sobel, 'Parent', app.UIAxes_4); % show the edge-detected image

level = graythresh(I_sobel); % Otsu threshold

I_OTSU=imbinarize(I_sobel,level);

imshow(I_OTSU, 'Parent', app.UIAxes_5); % show the binarized image

se = strel('rectangle', [3 17]); % flat rectangular structuring element, 3 rows by 17 columns

I_close = imclose(I_OTSU,se); % morphological closing

imshow(I_close, 'Parent', app.UIAxes_6); % show the image after closing

I_contour = bwperim(I_close); % extract the contours

imshow(I_contour, 'Parent', app.UIAxes_7); % show the contour image

I_fill= imfill(I_contour,'holes');

B = bwboundaries(I_fill, 'noholes'); % B is a cell array of boundaries

figure(1)

imshow(I);

hold on;

I_cell = {};

for k = 1 : length(B)

thisBoundary = B{k};

[rectx,recty,area,perimeter]=minboundrect(thisBoundary(:, 2), thisBoundary(:, 1),'a');

rectx= floor(rectx);

recty= floor(recty);

[theta,I_crop] = biasrectoplane(rectx,recty,I);

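if ~isempty(I_crop) && ~isscalar(I_crop) && (length(I_crop)>100) % skip rejected (NaN) and tiny crops

I_crop=imresize(I_crop,[36 136]);

I_cell{end+1} = I_crop; % append the candidate as a new cell element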

end

plot(thisBoundary(:, 2), thisBoundary(:, 1), 'r', 'LineWidth', 0.5);

hold on;

line(rectx(:),recty(:),'color','g');

end

figure(2)

for m= 1:length(I_cell)

subplot(1,length(I_cell),m)

imshow(I_cell{1,m});

hold on

end

minboundrect

function [rectx,recty,area,perimeter] = minboundrect(x,y,metric)

if (nargin<3) || isempty(metric)

metric = 'a';

elseif ~ischar(metric)

error 'metric must be a character flag if it is supplied.'

else

% check for 'a' or 'p'

metric = lower(metric(:)');

ind = find(strncmp(metric,{'area','perimeter'},numel(metric))); % prefix match; strmatch is no longer recommended

if isempty(ind)

error 'metric does not match either ''area'' or ''perimeter'''

end

% just keep the first letter.

metric = metric(1);

end

% preprocess data

x=x(:);

y=y(:);

% not many error checks to worry about

n = length(x);

if n~=length(y)

error 'x and y must be the same sizes'

end

if n>3

try

edges = convhull(x,y);

x = x(edges);

y = y(edges);

end

% probably fewer points now, unless the points are fully convex

nedges = length(x) - 1;

elseif n>1

% n must be 2 or 3

nedges = n;

x(end+1) = x(1);

y(end+1) = y(1);

else

% n must be 0 or 1

nedges = n;

end

switch nedges

case 0

% empty begets empty

rectx = [];

recty = [];

area = [];

perimeter = [];

return

case 1

% with one point, the rect is simple.

rectx = repmat(x,1,5);

recty = repmat(y,1,5);

area = 0;

perimeter = 0;

return

case 2

% only two points. also simple.

rectx = x([1 2 2 1 1]);

recty = y([1 2 2 1 1]);

area = 0;

perimeter = 2*sqrt(diff(x).^2 + diff(y).^2);

return

end

% 3 or more points.

% will need a 2x2 rotation matrix through an angle theta

Rmat = @(theta) [cos(theta) sin(theta);-sin(theta) cos(theta)];

% get the angle of each edge of the hull polygon.

ind = 1:(length(x)-1);

edgeangles = atan2(y(ind+1) - y(ind),x(ind+1) - x(ind));

% move the angle into the first quadrant.

edgeangles = unique(mod(edgeangles,pi/2));

% now just check each edge of the hull

nang = length(edgeangles);

area = inf;

perimeter = inf;

met = inf;

xy = [x,y];

for i = 1:nang

% rotate the data through -theta

rot = Rmat(-edgeangles(i));

xyr = xy*rot;

xymin = min(xyr,[],1);

xymax = max(xyr,[],1);

% The area is simple, as is the perimeter

A_i = prod(xymax - xymin);

P_i = 2*sum(xymax-xymin);

if metric=='a'

M_i = A_i;

else

M_i = P_i;

end

% new metric value for the current interval. Is it better?

if M_i<met

% keep this one

met = M_i;

area = A_i;

perimeter = P_i;

rect = [xymin;[xymax(1),xymin(2)];xymax;[xymin(1),xymax(2)];xymin];

rect = rect*rot';

rectx = rect(:,1);

recty = rect(:,2);

end

end

end
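The idea behind minboundrect is that a minimum-area rectangle has one side aligned with an edge of the convex hull, so it is enough to try each hull edge direction. A minimal sketch of that idea on a made-up diamond (the point set and variable names are assumptions for illustration only):

```matlab
% A diamond (a square rotated by 45 degrees); vertices listed counter-clockwise.
xy = [1 0; 0 1; -1 0; 0 -1];

% Axis-aligned bounding box of the raw points.
area_axis = prod(max(xy,[],1) - min(xy,[],1));

% Angle of the first polygon edge, then rotate the points so that edge is horizontal.
theta = atan2(xy(2,2) - xy(1,2), xy(2,1) - xy(1,1));
R = [cos(theta) -sin(theta); sin(theta) cos(theta)];  % rotates column vectors by +theta
xyr = xy * R;                                         % row vectors times R rotate by -theta
area_edge = prod(max(xyr,[],1) - min(xyr,[],1));

fprintf('axis-aligned: %.4f, edge-aligned: %.4f\n', area_axis, area_edge);
```

Aligning the box with a hull edge halves the area for this shape, which is why a tilted plate gets a much tighter rectangle than an axis-aligned bounding box would give.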

biasrectoplane

function [theta,I_crop] = biasrectoplane(x,y,I)

I_gray = rgb2gray(I);

[high,width] = size(I_gray);

A = width;

B = high;

if x(1) ~= x(4)

theta = atand((y(4)-y(1))/(x(1)-x(4)));

else

theta = 0;

end

if (theta<30) && ( theta~=0) % rotate clockwise

xc=x(4);

yc=y(4);

a = sqrt((x(1) - x(4))^2 + (y(1) - y(4))^2);

b = sqrt((x(2) - x(1))^2 + (y(2) - y(1))^2);

if a>= b

ratiorect = a/b;

else

ratiorect = b/a;

end

if (atand(xc/yc)>= theta)&& (ratiorect>2) && (ratiorect<8) %planC

xbtp = B*sind(theta) + cosd(theta)*(xc-yc*tand(theta));

ybtp = yc/cosd(theta) + sind(theta)*(xc-yc*tand(theta));

Ib = imrotate(I,-theta);

I_crop=imcrop(Ib,[xbtp ybtp a b]);

elseif (atand(xc/yc)< theta)&& (ratiorect>2) && (ratiorect<8) %planD

xbtp = xc/cosd(theta) + sind(theta)*(B-yc-xc*tand(theta));

ybtp = xc/tand(theta) + cosd(theta)*(yc-xc/tand(theta));

Ib = imrotate(I,-theta);

I_crop=imcrop(Ib,[xbtp ybtp a b]);

else

I_crop=nan;

end

elseif (theta>60) && ( theta~=0) % rotate counterclockwise

theta=90-theta;

xc=x(1);

yc=y(1);

a = sqrt((x(2) - x(1))^2 + (y(2) - y(1))^2);

b = sqrt((x(1) - x(4))^2 + (y(1) - y(4))^2);

if a>= b

ratiorect = a/b;

else

ratiorect = b/a;

end

if (atand(yc/xc)>= theta)&& (ratiorect>2) && (ratiorect<8) %planA

xbtp = xc/cosd(theta) + sind(theta)*(yc-xc*tand(theta));

ybtp = yc/cosd(theta) + sind(theta)*(A-xc-yc*tand(theta));

Ib = imrotate(I,(theta));

I_crop=imcrop(Ib,[xbtp ybtp a b]);

elseif (atand(yc/xc)< theta)&& (ratiorect>2) && (ratiorect<8) %planB

xbtp = yc/sind(theta) + cosd(theta)*(xc-yc/tand(theta));

ybtp = yc/cosd(theta) + sind(theta)*(A-xc-yc*tand(theta));

fprintf('xbtp = %f\n',xbtp);

fprintf('ybtp = %f\n',ybtp);

Ib = imrotate(I,(theta));

I_crop=imcrop(Ib,[xbtp ybtp a b]);

else

I_crop=nan;

end

elseif theta==0 % no rotation needed

Ib = I;

a = x(2)-x(1);

b = y(4)-y(1);

if a>= b

ratiorect = a/b;

else

ratiorect = b/a;

end

xbtp = x(1);

ybtp = y(1);

if (ratiorect>2) && (ratiorect<8)

I_crop=imcrop(Ib,[xbtp ybtp a b]);

else

I_crop=nan;

end

else

I_crop=nan;

end

end

4. Identifying the License Plate Rectangle

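This part uses a machine learning algorithm: the support vector machine (SVM). The core idea of an SVM is to separate n-dimensional data with an (n-1)-dimensional hyperplane. The support vectors are the data points closest to that hyperplane. One advantage is that the decision function depends only on the support vectors, so all other points can be ignored, which improves computational efficiency.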
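To make the geometry concrete, here is a small sketch in two dimensions, where the separating hyperplane is a line. The weights, bias, and sample points are made up for illustration and are not taken from the trained classifier:

```matlab
% Hypothetical 2-D linear classifier: the hyperplane is the line w'*x + b = 0.
w = [1; 1];
b = -3;

% Four made-up sample points, one per row.
X = [0 0; 1 1; 2 2; 4 3];

score = X*w + b;                % signed score of each point
label = sign(score);            % predicted class, +1 or -1
dist  = abs(score) / norm(w);   % geometric distance to the hyperplane

[~, nearest] = min(dist);       % index of the first point closest to the hyperplane
disp([label dist]);
```

The points with the smallest distance play the role of support vectors; moving any of the farther points around (without crossing the line) would not change the boundary.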

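The SVM workflow can be roughly divided into five parts: (1) organize the training and test datasets; (2) extract image features; (3) train the classification model; (4) tune the parameters to obtain the best model; (5) classify the input images. I used MATLAB's built-in SVM functions. Because this is a binary classification task, even the default parameters gave good results. Readers who want to dig deeper can try the LIBSVM library.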
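Comparing models in step (4) needs a score. The training script in this section averages the per-class recalls taken from the confusion matrix. A short sketch with a made-up confusion matrix shows how that differs from plain accuracy when the classes are unbalanced:

```matlab
% Made-up confusion matrix: rows are true classes, columns are predicted classes.
confMat = [90 10;
            5 15];

recall = diag(confMat) ./ sum(confMat, 2);           % per-class recall
balancedAcc = mean(recall);                          % average of the per-class recalls
overallAcc  = sum(diag(confMat)) / sum(confMat(:));  % fraction of all samples that are correct

fprintf('balanced %.3f, overall %.3f\n', balancedAcc, overallAcc);
```

With far more non-plate crops than plate crops, the balanced figure is the more honest of the two, because a classifier that rejects everything would still score well on overall accuracy.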

Extracting GLCM image features

function [features] = getGLCMFeatures(image)

features_all = [];

for i = 1:10

glcm = graycomatrix(image, 'Offset', [0,i]);

stats = graycoprops(glcm);

glcm45 = graycomatrix(image, 'Offset', [-i,i]);

stats45 = graycoprops(glcm45);

glcm90 = graycomatrix(image, 'Offset', [-i,0]);

stats90 = graycoprops(glcm90);

glcm135 = graycomatrix(image, 'Offset', [-i,-i]);

stats135 = graycoprops(glcm135);

stats7x4 = [stats.Contrast stats.Correlation stats.Energy stats.Homogeneity;

stats45.Contrast stats45.Correlation stats45.Energy stats45.Homogeneity;

stats90.Contrast stats90.Correlation stats90.Energy stats90.Homogeneity;

stats135.Contrast stats135.Correlation stats135.Energy stats135.Homogeneity];

features_all = [features_all mean(stats7x4,1) std(stats7x4,0,1)];

end

features = features_all;

end
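For readers unfamiliar with the gray-level co-occurrence matrix (GLCM), the following sketch builds one by hand for the offset [0 1] and computes the contrast statistic. It uses a tiny made-up image and skips the gray-level quantization that graycomatrix performs, so it illustrates the definition rather than replacing the toolbox call:

```matlab
% Tiny made-up image with gray levels 1..3.
img = [1 1 2;
       2 3 3;
       1 2 3];
nLevels = 3;

% Count pairs (pixel, right-hand neighbour), i.e. offset [0 1].
glcm = zeros(nLevels);
for r = 1:size(img,1)
    for c = 1:size(img,2)-1
        a = img(r,c);
        b = img(r,c+1);
        glcm(a,b) = glcm(a,b) + 1;
    end
end

% Contrast: sum over (i,j) of (i-j)^2 * p(i,j), with p the normalised GLCM.
p = glcm / sum(glcm(:));
[jj, ii] = meshgrid(1:nLevels, 1:nLevels);   % ii = row index, jj = column index
contrast = sum(sum((ii - jj).^2 .* p));
```

getGLCMFeatures repeats this kind of measurement for four directions and ten distances, then summarizes each statistic by its mean and standard deviation across directions.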

Extracting HOG features and image labels

function [trainingFeatures,trainingLabels,testFeatures,testLabels]=extractFeature(trainingSet,testSet)

%% Determine the feature vector size

img = read(trainingSet(1), 1);

% convert to grayscale

img=rgb2gray(img);

% convert to a binary image

lvl = graythresh(img);

img = imbinarize(img, lvl);

img=imresize(img,[256 256]);

cellSize = [4 4];

[hog_feature, vis_hog] = extractHOGFeatures(img,'CellSize',cellSize);

glcm_feature = getGLCMFeatures(img);

SizeOfFeature = length(hog_feature)+ length(glcm_feature);

trainingFeatures = [];

trainingLabels = [];

for digit = 1:numel(trainingSet)

numImages = trainingSet(digit).Count;

features = zeros(numImages, SizeOfFeature, 'single'); % preallocate the feature matrix

% loop over every image

for i = 1:numImages

img = read(trainingSet(digit), i); % read the i-th image

img=rgb2gray(img); % convert to grayscale

glcm_feature = getGLCMFeatures(img); % extract GLCM features

lvl = graythresh(img); % Otsu threshold

img = imbinarize(img, lvl); % convert to a binary image

img=imresize(img,[256 256]);

% extract HOG features

[hog_feature, vis_hog] = extractHOGFeatures(img,'CellSize',cellSize);

% concatenate the two feature sets

features(i, :) = [hog_feature glcm_feature];

end

% use the image set description as the training label

labels = repmat(trainingSet(digit).Description, numImages, 1);

% append the features and labels of this class

trainingFeatures = [trainingFeatures; features];

trainingLabels = [trainingLabels; labels];

end

testFeatures = [];

testLabels = [];

for digit = 1:numel(testSet)

numImages = testSet(digit).Count;

features = zeros(numImages, SizeOfFeature, 'single'); % preallocate the feature matrix

for i = 1:numImages

img = read(testSet(digit), i);

img=rgb2gray(img); % convert to grayscale

glcm_feature = getGLCMFeatures(img);

lvl = graythresh(img);

img = imbinarize(img, lvl); % convert to a binary image

img=imresize(img,[256 256]);

[hog_4x4, vis4x4] = extractHOGFeatures(img,'CellSize',cellSize);

features(i, :) = [hog_4x4 glcm_feature];

end

% use the image set description as the test label

labels = repmat(testSet(digit).Description, numImages, 1);

testFeatures = [testFeatures; features];

testLabels=[testLabels; labels];

end

end

Main script: training and testing

clear;

trainDir = 'C:\Users\11606\Desktop\MLPR\SVM\train'; % training image folder

testdir = 'C:\Users\11606\Desktop\MLPR\SVM\test'; % test image folder

trainingSet = imageSet(trainDir,'recursive');

testSet = imageSet(testdir,'recursive');

[trainingFeatures,trainingLabels,testFeatures,testLabels]=extractFeature(trainingSet,testSet);

classifier = fitcecoc(trainingFeatures, trainingLabels);

save classifier.mat classifier -v7.3;

predictedLabels = predict(classifier, testFeatures);

confMat=confusionmat(testLabels, predictedLabels)

% balanced accuracy: mean of the two per-class recalls

accuracy=(confMat(1,1)/sum(confMat(1,:))+confMat(2,2)/sum(confMat(2,:)))/2

Classifying the input image

close(figure(1))

close(figure(2))

global I_cell

global I_plate

load classifier.mat; % load the trained SVM model

I_plate = {};

for m= 1:length(I_cell)

img=rgb2gray(I_cell{1,m});

glcm_feature = getGLCMFeatures(img); % extract GLCM features

lvl = graythresh(img);

img = imbinarize(img, lvl);

img=imresize(img,[256 256]);

[hog_4x4, ~] = extractHOGFeatures(img,'CellSize',[4 4]); % extract HOG features

testFeature = [hog_4x4 glcm_feature]; % concatenate the features

predictedLabel = predict(classifier, testFeature); % classify with predict

if strcmp(predictedLabel, 'isplate') % strcmp is safe even when label lengths differ

I_plate{end+1} = I_cell{1,m};

end

end

for l = 1:length(I_plate) % loop header assumed; the source shows only the body and the closing end

imshow(I_plate{1,l}, 'Parent', app.UIAxes_8);

hold on

end

5. Character Segmentation

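The plate border and the rivets produce a lot of interference during image processing, and a Chinese character often breaks into two parts during contour extraction. Handling these two problems is the focus of this part. My approach is to use the number of pixel transitions in each row as a threshold to remove the interference and the rivets, and to separate out the Chinese character and recognize it on its own.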
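The transition count can be sketched on a tiny binary image as follows. The image and the threshold of 3 are made up for illustration (the listing in this section uses 15 on a 136-pixel-wide plate), and rows are blanked to false here, whereas the listing overwrites them with the value of the top-left pixel:

```matlab
% Made-up binary strip: row 1 is a solid border, row 2 crosses character strokes,
% row 3 contains a single blob such as a rivet.
bw = logical([1 1 1 1 1 1 1 1;
              0 1 0 1 0 1 0 1;
              0 0 0 1 1 0 0 0]);

% A transition is any place where horizontally adjacent pixels differ.
transitions = sum(bw(:,1:end-1) ~= bw(:,2:end), 2);

minTransitions = 3;                            % illustrative threshold
bw(transitions < minTransitions, :) = false;   % blank out rows with too few transitions
```

Rows that cut through several characters alternate between black and white many times, while border rows and rivet rows change only a few times, so a single threshold separates them.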

global I_plate

for l= 1:length(I_plate)

P = I_plate{1,l};

P_gray = rgb2gray(P);

level = graythresh(P_gray); % Otsu threshold

P_OTSU=imbinarize(P_gray,level);

[r,c]=size(P_OTSU);

collect=[];

for i=1:r % remove rivets and border rows

n = 0;

for j=1:c-1

m = P_OTSU(i,j);

if m ~= P_OTSU(i,j+1)

n = n+1;

end

end

if n<15

P_OTSU(i,:) = P_OTSU(1,1); % too few transitions: overwrite the row with the top-left pixel value

end

end

figure(6)

imshow(P_OTSU)

hold on

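B = bwboundaries(P_OTSU, 'noholes'); % B is a cell array of boundaries

C_cell= {};

p=0;

chx=[];

chy=[];

chw=[]; % reset for each plate so sizes from a previous plate do not carry over

chl=[];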

for k = 1 : length(B)

thisBoundary = B{k};

[rectx,recty,area,perimeter]=minboundrect(thisBoundary(:, 2), thisBoundary(:, 1),'a');

fprintf('area= %f\n',area/(136*36) )

arearatio=area/(136*36);

if arearatio>0.04 && arearatio<0.1 && all(rectx>10) % filter by area and exclude the Chinese character

rectxx=rectx;

rectyy=recty;

z=1; % expand the box by z pixels vertically and y pixels horizontally

y=2;

rectxx(1)= rectxx(1)-y;

rectxx(2)= rectxx(2)+y;

rectxx(3)= rectxx(3)+y;

rectxx(4)= rectxx(4)-y;

rectxx(5)= rectxx(5)-y;

rectyy(1)= rectyy(1)-z;

rectyy(2)= rectyy(2)-z;

rectyy(3)= rectyy(3)+z;

rectyy(4)= rectyy(4)+z;

rectyy(5)= rectyy(5)-z;

p=p+1;

chx(p)=[rectxx(1)];

chy(p)=[rectyy(1)];

chw(p) = sqrt((rectxx(2) - rectxx(1))^2 + (rectyy(2) - rectyy(1))^2);

chl(p) = sqrt((rectxx(1) - rectxx(4))^2 + (rectyy(1) - rectyy(4))^2);

end

try

line(rectxx(:),rectyy(:),'color','g');

end

end

[chx,id] = sort(chx);

chy = chy(id);

wth = max(chw);

lgh = max(chl);

chx7=0;

chy7=chy(1);

wth7=chx(1)-1;

lgh7=lgh;

M1 = imcrop(P_OTSU,[chx7 chy7 wth7 lgh7]);

[ch]= charrecognize_cn(M1); % ANN recognition of the Chinese character

collect=[collect ch];

fprintf('M1=%s\n',ch);

for j=1:p

CN = imcrop(P_OTSU,[chx(j) chy(j) wth lgh]);

C_cell{end+1} = CN; % append instead of nesting the cell array

[ch]= charrecognize(CN); % ANN recognition of letters and digits

collect=[collect ch];

fprintf('ch=%s\n',ch);

end

msgbox(collect,'Recognition result');

end

6. Character Recognition

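This part uses the foundation of deep learning: the artificial neural network (ANN). Roughly, an ANN works by running a forward pass of matrix operations, comparing the predictions with the true labels, and then propagating the error backward to adjust the weights between layers. The input is all the pixels of an image, and the output is the class label of that image.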
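The three stages can be sketched on a toy network with a single training sample. The 2-2-2 layout, the weights, and the learning rate are all made up for illustration; the network trained in this section is 784-40-31:

```matlab
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Made-up 2-2-2 network and a single training sample.
x   = [1; 0];                 % input
y   = [1; 0];                 % one-hot target
w_h = [0.1 -0.2; 0.3 0.4];    % hidden-layer weights
b_h = [0; 0];
w   = [0.2 -0.1; -0.3 0.1];   % output-layer weights
b   = [0; 0];
a   = 0.5;                    % learning rate

% Forward pass.
hid = sigmoid(w_h*x + b_h);
out = sigmoid(w*hid + b);
lossBefore = 0.5 * sum((y - out).^2);

% Backward pass: error terms for a sigmoid network with squared-error loss.
d_out = (y - out) .* out .* (1 - out);
d_hid = (w' * d_out) .* hid .* (1 - hid);

% Gradient step on every weight and bias.
w   = w   + a * d_out * hid';
b   = b   + a * d_out;
w_h = w_h + a * d_hid * x';
b_h = b_h + a * d_hid;

% Forward pass again to see the effect of the update.
out2 = sigmoid(w*sigmoid(w_h*x + b_h) + b);
lossAfter = 0.5 * sum((y - out2).^2);
```

After one update the squared error on this sample is smaller than before. Training repeats the same step over every sample for many epochs.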

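The implementation can be divided into five parts: (1) organize the training and test datasets; (2) extract the pixels and labels and normalize them; (3) design and train the network; (4) tune the parameters to obtain the best model; (5) apply the model to recognize character images. Chinese characters differ considerably from letters and digits, so the two groups are trained separately to improve accuracy. The training process for Chinese characters is shown below.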

Extracting pixels and labels

function[x_train,y_train,x_test,y_test]=getttdata()

trainDir = 'C:\Users\11606\Desktop\MLPR\ANN\chinese_set\train'; % training image folder

testdir = 'C:\Users\11606\Desktop\MLPR\ANN\chinese_set\test'; % test image folder

trainingSet = imageSet(trainDir,'recursive');

testSet = imageSet(testdir,'recursive');

x_train = [];

x_test = [];

y_train = [];

y_test = [];

label=zeros(31,1);

for digit = 1:numel(trainingSet)

numImages = trainingSet(digit).Count;

% loop over every image

for i = 1:numImages

label=zeros(31,1);

img = read(trainingSet(digit), i); % read the i-th image

img = imbinarize(img);

img=imresize(img,[28 28]);

x=im2double(img);

x=reshape(x,784,1);

x_train=[x_train,x];

labels = trainingSet(digit).Description;

switch labels

case 'zh_cuan'

label(1,1) = 1;

case 'zh_e'

label(2,1) = 1;

case 'zh_gan'

label(3,1) = 1;

case 'zh_gan1'

label(4,1) = 1;

case 'zh_gui'

label(5,1) = 1;

case 'zh_gui1'

label(6,1) = 1;

case 'zh_hei'

label(7,1) = 1;

case 'zh_hu'

label(8,1) = 1;

case 'zh_ji'

label(9,1) = 1;

case 'zh_jin'

label(10,1) = 1;

case 'zh_jing'

label(11,1) = 1;

case 'zh_jl'

label(12,1) = 1;

case 'zh_liao'

label(13,1) = 1;

case 'zh_lu'

label(14,1) = 1;

case 'zh_meng'

label(15,1) = 1;

case 'zh_min'

label(16,1) = 1;

case 'zh_ning'

label(17,1) = 1;

case 'zh_qing'

label(18,1) = 1;

case 'zh_qiong'

label(19,1) = 1;

case 'zh_shan'

label(20,1) = 1;

case 'zh_su'

label(21,1) = 1;

case 'zh_sx'

label(22,1) = 1;

case 'zh_wan'

label(23,1) = 1;

case 'zh_xiang'

label(24,1) = 1;

case 'zh_xin'

label(25,1) = 1;

case 'zh_yu'

label(26,1) = 1;

case 'zh_yu1'

label(27,1) = 1;

case 'zh_yue'

label(28,1) = 1;

case 'zh_yun'

label(29,1) = 1;

case 'zh_zang'

label(30,1) = 1;

case 'zh_zhe'

label(31,1) = 1;

end

% use the image set description as the training label

y_train = [y_train label];

end

end

for digit = 1:numel(testSet)

numImages = testSet(digit).Count;

% loop over every image

for i = 1:numImages

label=zeros(31,1);

img = read(testSet(digit), i); % read the i-th image

img = imbinarize(img); % same binarization as the training images

img=imresize(img,[28 28]);

x=im2double(img);

x=reshape(x,784,1);

x_test=[x_test,x];

labels = testSet(digit).Description;

switch labels

case 'zh_cuan'

label(1,1) = 1;

case 'zh_e'

label(2,1) = 1;

case 'zh_gan'

label(3,1) = 1;

case 'zh_gan1'

label(4,1) = 1;

case 'zh_gui'

label(5,1) = 1;

case 'zh_gui1'

label(6,1) = 1;

case 'zh_hei'

label(7,1) = 1;

case 'zh_hu'

label(8,1) = 1;

case 'zh_ji'

label(9,1) = 1;

case 'zh_jin'

label(10,1) = 1;

case 'zh_jing'

label(11,1) = 1;

case 'zh_jl'

label(12,1) = 1;

case 'zh_liao'

label(13,1) = 1;

case 'zh_lu'

label(14,1) = 1;

case 'zh_meng'

label(15,1) = 1;

case 'zh_min'

label(16,1) = 1;

case 'zh_ning'

label(17,1) = 1;

case 'zh_qing'

label(18,1) = 1;

case 'zh_qiong'

label(19,1) = 1;

case 'zh_shan'

label(20,1) = 1;

case 'zh_su'

label(21,1) = 1;

case 'zh_sx'

label(22,1) = 1;

case 'zh_wan'

label(23,1) = 1;

case 'zh_xiang'

label(24,1) = 1;

case 'zh_xin'

label(25,1) = 1;

case 'zh_yu'

label(26,1) = 1;

case 'zh_yu1'

label(27,1) = 1;

case 'zh_yue'

label(28,1) = 1;

case 'zh_yun'

label(29,1) = 1;

case 'zh_zang'

label(30,1) = 1;

case 'zh_zhe'

label(31,1) = 1;

end

y_test = [y_test label]; % append the one-hot label, mirroring the training loop

end

end

end
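The long switch statements map a folder name to a position in a one-hot vector. The same mapping can be expressed with a lookup list, sketched below with a shortened, hypothetical class list (the real dataset has 31 folder names, and classNames is not a variable from the original code):

```matlab
% Hypothetical, shortened class list; the dataset in this article has 31 folder names.
classNames = {'zh_cuan', 'zh_e', 'zh_gan'};

description = 'zh_e';                          % e.g. the Description of an image set
idx = find(strcmp(classNames, description));   % position of the class in the list

label = zeros(numel(classNames), 1);
label(idx) = 1;                                % one-hot column vector

decoded = classNames{idx};                     % inverse mapping, from index back to name
```

Keeping the names in one list means the encoder used for training and the decoder used for recognition cannot drift apart.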

Training functions

function [y] = layerout(w,b,x)

%output function

y = w*x + b;

n = length(y);

for i =1:n

y(i)=1.0/(1+exp(-y(i)));

end

y;

end

function [w,b,w_h,b_h]=mytrain(x_train,y_train)

step=300;

a=0.3;

in = 784; % number of input neurons (28x28 pixels)

hid = 40; % number of hidden neurons

out = 31; % number of output neurons (one per class)

o =1;

w = randn(out,hid);

b = randn(out,1);

w_h =randn(hid,in);

b_h = randn(hid,1);

count_train = size(x_train, 2); % number of training images (13642 in the author's dataset)

for i=0:step

% shuffle the training samples

r=randperm(count_train);

x_train = x_train(:,r);

y_train = y_train(:,r);

for j=1:count_train

x = x_train(:,j);

y = y_train(:,j);

hid_put = layerout(w_h,b_h,x);

out_put = layerout(w,b,hid_put);

% weight and bias updates (backpropagation)

o_update = (y-out_put).*out_put.*(1-out_put);

h_update = ((w')*o_update).*hid_put.*(1-hid_put);

outw_update = a*(o_update*(hid_put'));

outb_update = a*o_update;

hidw_update = a*(h_update*(x'));

hidb_update = a*h_update;

w = w + outw_update;

b = b+ outb_update;

w_h = w_h +hidw_update;

b_h =b_h +hidb_update;

end

end

end

Test function

function[]= mytest(x_test,y_test,w,b,w_h,b_h)

count_test = size(x_test, 2); % number of test images (2974 in the author's dataset)

test = zeros(31,count_test);

for k=1:count_test

x = x_test(:,k);

hid = layerout(w_h,b_h,x);

test(:,k)=layerout(w,b,hid);

end

% compare predicted and true classes once all outputs are computed

[~,t_index]=max(test);

[~,y_index]=max(y_test);

correct = nnz(t_index == y_index); % avoids shadowing the built-in sum

fprintf('%d/%d\n',correct,count_test);

fprintf('%f\n',correct/count_test);

end

Main script: training and testing

[x_train,y_train,x_test,y_test]=getttdata();

% normalization

x_train = mapminmax(x_train,0,1);

x_test =mapminmax(x_test,0,1);

[w,b,w_h,b_h]=mytrain(x_train,y_train);

fprintf('mytrain accuracy:\n');

mytest(x_test,y_test,w,b,w_h,b_h);

save('ann_model_cn','w','b','w_h','b_h')

Character recognition function

function[ch]= charrecognize_cn(bwimg)

model = load('ann_model_cn'); % load all four parameters in one call

b = model.b;

b_h = model.b_h;

w = model.w;

w_h = model.w_h;

img=imresize(bwimg,[28 28]);

x=im2double(img);

x=reshape(x,784,1);

hid = layerout(w_h,b_h,x);

test=layerout(w,b,hid);

[t,t_index]=max(test);

switch t_index

case 1

ch = 'zh_cuan';

case 2

ch = 'zh_e';

case 3

ch = 'zh_gan';

case 4

ch = 'zh_gan1';

case 5

ch = 'zh_gui';

case 6

ch = 'zh_gui1';

case 7

ch = 'zh_hei';

case 8

ch = 'zh_hu';

case 9

ch = 'zh_ji';

case 10

ch = 'zh_jin';

case 11

ch = 'zh_jing';

case 12

ch = 'zh_jl';

case 13

ch = 'zh_liao';

case 14

ch = 'zh_lu';

case 15

ch = 'zh_meng';

case 16

ch = 'zh_min';

case 17

ch = 'zh_ning';

case 18

ch = 'zh_qing';

case 19

ch = 'zh_qiong';

case 20

ch = 'zh_shan';

case 21

ch = 'zh_su';

case 22

ch = 'zh_sx';

case 23

ch = 'zh_wan';

case 24

ch = 'zh_xiang';

case 25

ch = 'zh_xin';

case 26

ch = 'zh_yu';

case 27

ch = 'zh_yu1';

case 28

ch = 'zh_yue';

case 29

ch = 'zh_yun';

case 30

ch = 'zh_zang';

case 31

ch = 'zh_zhe';

end

end

7. MATLAB App UI

ISSUE

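This program can only recognize images from specific scenes. Readers who want to go further could improve it in the following directions:

- Besides the Sobel-based approach used in this article, the plate can also be located with color-based or character-based methods. For tilted plates, a skew-correcting spatial transformation is worth trying.

- Character segmentation screens candidates by bounding-rectangle area, which works badly for small-area characters such as I and 1. This needs further parameter tuning or a new screening method.

- The three-layer ANN in this article has a low recognition rate on Chinese characters. MATLAB's built-in neural network toolbox is worth trying.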