Kafka多维度系统精讲,从入门到熟练掌握

课程学习连接:Kafka多维度系统精讲,从入门到熟练掌握

kafka的producer是线程安全的

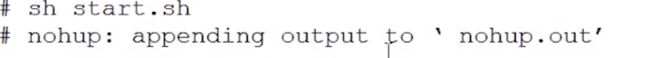

kafka的consumer不是线程安全的

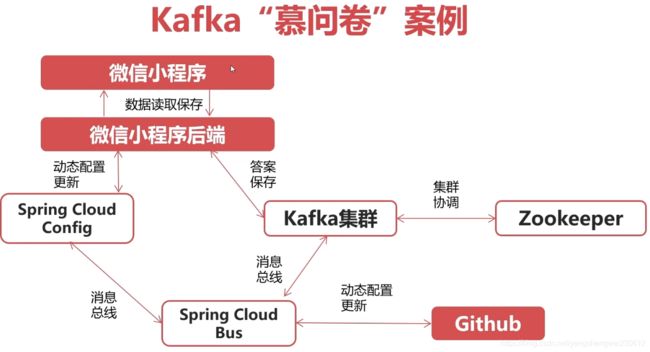

第1章 课程导学与学习指南

1-1 导学

第2章 Kafka入门——开发环境准备

2-1 环境准备

2-2 VMware安装

2-3 VMware添加Centos镜像

2-4 CentOS7安装

2-5 XShell使用介绍

2-6 环境准备常见问题介绍

第3章 Kafka入门——Kafka基础操作

3-1 章节介绍

3-2 kafka自我介绍

3-3 JDK安装

3-4 Zookeeper安装启动

3-5 kafka配置

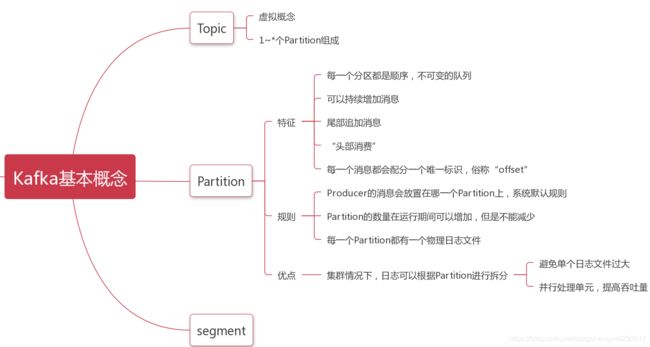

3-6 Kafka基本概念及使用演示

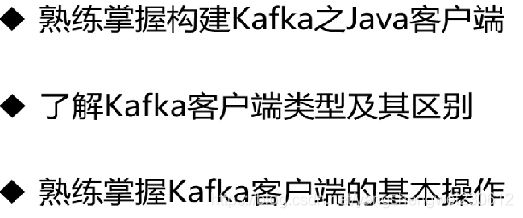

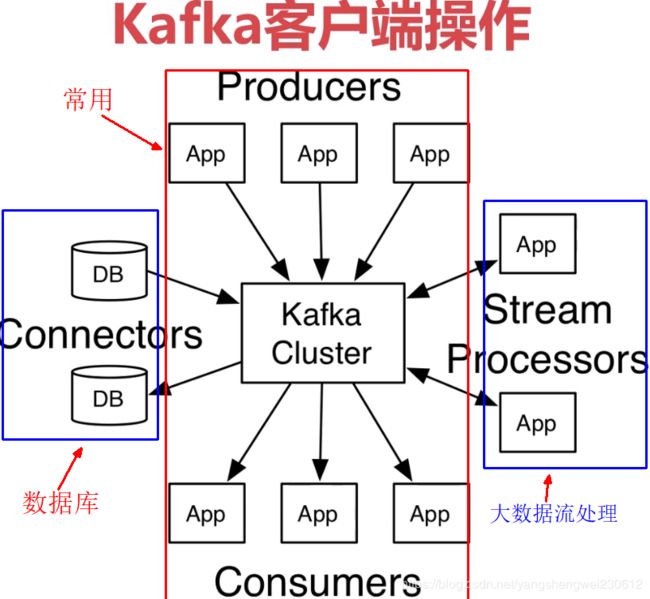

第4章 Kafka核心API——Kafka客户端操作

4-1 内容概述

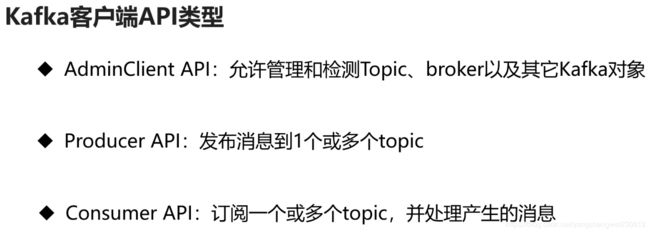

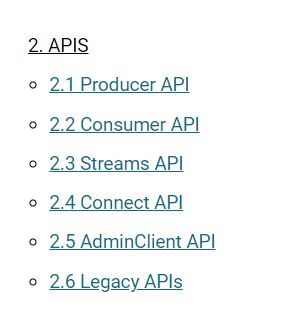

前三个常用

4-3 学习准备-初始化工程

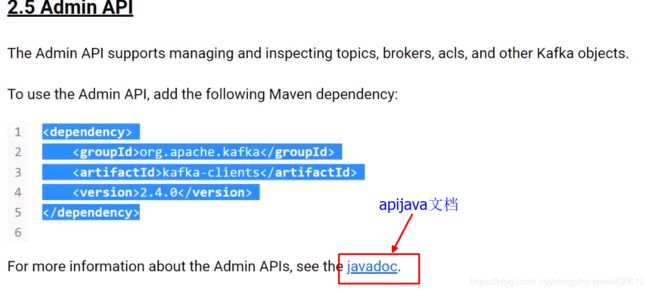

kafka官网:kafka.apache.org

4-4 AdminClient客户端建立

4-5 创建Topic演示 (05:50)

4-6 查看Topic列表及Internal杂谈 (07:01)

4-7 删除Topic (02:44)

4-8 Topic描述信息查看 (05:41)

4-9 Topic配置信息查看 (06:49)

4-10 Topic配置信息修改 (09:25)

4-11 Partition增加 (05:10)

import org.apache.kafka.clients.admin.*;

import org.apache.kafka.common.KafkaFuture;

import org.apache.kafka.common.config.ConfigResource;

import org.apache.kafka.common.internals.Topic;

import org.apache.kafka.server.quota.ClientQuotaEntity;

import java.util.*;

import java.util.concurrent.ExecutionException;

public class AdminSample {

public final static String TOPIC_NAME="jiangzh-topic";

public static void main(String[] args) throws Exception {

// AdminClient adminClient = AdminSample.adminClient();

// System.out.println("adminClient : "+ adminClient);

// 创建Topic实例

createTopic();

// 删除Topic实例

// delTopics();

// 获取Topic列表

// topicLists();

// 描述Topic

describeTopics();

// 修改Config

// alterConfig();

// 查询Config

// describeConfig();

// 增加partition数量

// incrPartitions(2);

}

/*

增加partition数量

*/

public static void incrPartitions(int partitions) throws Exception{

AdminClient adminClient = adminClient();

Map<String, NewPartitions> partitionsMap = new HashMap<>();

NewPartitions newPartitions = NewPartitions.increaseTo(partitions);

partitionsMap.put(TOPIC_NAME, newPartitions);

CreatePartitionsResult createPartitionsResult = adminClient.createPartitions(partitionsMap);

createPartitionsResult.all().get();

}

/*

修改Config信息

*/

public static void alterConfig() throws Exception{

AdminClient adminClient = adminClient();

// Map configMaps = new HashMap<>();

//

// // 组织两个参数

// ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, TOPIC_NAME);

// Config config = new Config(Arrays.asList(new ConfigEntry("preallocate","true")));

// configMaps.put(configResource,config);

// AlterConfigsResult alterConfigsResult = adminClient.alterConfigs(configMaps);

/*

从 2.3以上的版本新修改的API

*/

Map<ConfigResource,Collection<AlterConfigOp>> configMaps = new HashMap<>();

// 组织两个参数

ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, TOPIC_NAME);

AlterConfigOp alterConfigOp =

new AlterConfigOp(new ConfigEntry("preallocate","false"),AlterConfigOp.OpType.SET);

configMaps.put(configResource,Arrays.asList(alterConfigOp));

AlterConfigsResult alterConfigsResult = adminClient.incrementalAlterConfigs(configMaps);

alterConfigsResult.all().get();

}

/**

查看配置信息

ConfigResource(type=TOPIC, name='jiangzh-topic') ,

Config(

entries=[

ConfigEntry(

name=compression.type,

value=producer,

source=DEFAULT_CONFIG,

isSensitive=false,

isReadOnly=false,

synonyms=[]),

ConfigEntry(

name=leader.replication.throttled.replicas,

value=,

source=DEFAULT_CONFIG,

isSensitive=false,

isReadOnly=false,

synonyms=[]), ConfigEntry(name=message.downconversion.enable, value=true, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=min.insync.replicas, value=1, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=segment.jitter.ms, value=0, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=cleanup.policy, value=delete, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=flush.ms, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=follower.replication.throttled.replicas, value=, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=segment.bytes, value=1073741824, source=STATIC_BROKER_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=retention.ms, value=604800000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=flush.messages, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=message.format.version, value=2.4-IV1, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=file.delete.delay.ms, value=60000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=max.compaction.lag.ms, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=max.message.bytes, value=1000012, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=min.compaction.lag.ms, value=0, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=message.timestamp.type, value=CreateTime, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

ConfigEntry(name=preallocate, value=false, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=min.cleanable.dirty.ratio, value=0.5, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=index.interval.bytes, value=4096, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=unclean.leader.election.enable, value=false, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=retention.bytes, value=-1, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=delete.retention.ms, value=86400000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=segment.ms, value=604800000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=message.timestamp.difference.max.ms, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]), ConfigEntry(name=segment.index.bytes, value=10485760, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[])])

*/

public static void describeConfig() throws Exception{

AdminClient adminClient = adminClient();

// TODO 这里做一个预留,集群时会讲到

// ConfigResource configResource = new ConfigResource(ConfigResource.Type.BROKER, TOPIC_NAME);

ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, TOPIC_NAME);

DescribeConfigsResult describeConfigsResult = adminClient.describeConfigs(Arrays.asList(configResource));

Map<ConfigResource, Config> configResourceConfigMap = describeConfigsResult.all().get();

configResourceConfigMap.entrySet().stream().forEach((entry)->{

System.out.println("configResource : "+entry.getKey()+" , Config : "+entry.getValue());

});

}

/**

描述Topic

name :jiangzh-topic ,

desc: (name=jiangzh-topic,

internal=false,

partitions=

(partition=0,

leader=192.168.220.128:9092

(id: 0 rack: null),

replicas=192.168.220.128:9092

(id: 0 rack: null),

isr=192.168.220.128:9092

(id: 0 rack: null)),

authorizedOperations=[])

*/

public static void describeTopics() throws Exception {

AdminClient adminClient = adminClient();

DescribeTopicsResult describeTopicsResult = adminClient.describeTopics(Arrays.asList(TOPIC_NAME));

Map<String, TopicDescription> stringTopicDescriptionMap = describeTopicsResult.all().get();

Set<Map.Entry<String, TopicDescription>> entries = stringTopicDescriptionMap.entrySet();

entries.stream().forEach((entry)->{

System.out.println("name :"+entry.getKey()+" , desc: "+ entry.getValue());

});

}

/*

删除Topic

*/

public static void delTopics() throws Exception {

AdminClient adminClient = adminClient();

DeleteTopicsResult deleteTopicsResult = adminClient.deleteTopics(Arrays.asList(TOPIC_NAME));

deleteTopicsResult.all().get();

}

/*

获取Topic列表

*/

public static void topicLists() throws Exception {

AdminClient adminClient = adminClient();

// 是否查看internal选项

ListTopicsOptions options = new ListTopicsOptions();

options.listInternal(true);

// ListTopicsResult listTopicsResult = adminClient.listTopics();

ListTopicsResult listTopicsResult = adminClient.listTopics(options);

Set<String> names = listTopicsResult.names().get();

Collection<TopicListing> topicListings = listTopicsResult.listings().get();

KafkaFuture<Map<String, TopicListing>> mapKafkaFuture = listTopicsResult.namesToListings();

// 打印names

names.stream().forEach(System.out::println);

// 打印topicListings

topicListings.stream().forEach((topicList)->{

System.out.println(topicList);

});

}

/*

创建Topic实例

*/

public static void createTopic() {

AdminClient adminClient = adminClient();

// 副本因子

Short rs = 1;

NewTopic newTopic = new NewTopic(TOPIC_NAME, 1 , rs);

CreateTopicsResult topics = adminClient.createTopics(Arrays.asList(newTopic));

System.out.println("CreateTopicsResult : "+ topics);

}

/*

设置AdminClient

*/

public static AdminClient adminClient(){

Properties properties = new Properties();

properties.setProperty(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG,"kafka1:9092");

AdminClient adminClient = AdminClient.create(properties);

return adminClient;

}

}

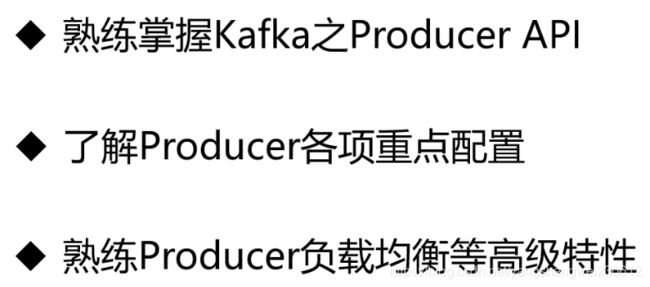

第5章 Kafka核心API——Producer生产者

5-1 Producer章节介绍

5-2 Producer异步发送演示

/*

Producer异步发送演示

*/

public static void producerSend(){

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,BOOTSTRAP_SERVERS);

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,"key-"+i,"value-"+i);

producer.send(record);

}

// 所有的通道打开都需要关闭

producer.close();

}

5-3 Producer异步阻塞发送演示

/*

Producer异步阻塞发送(同步发送)

*/

public static void producerSyncSend() throws ExecutionException, InterruptedException {

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,BOOTSTRAP_SERVERS);

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

String key = "key-"+i;

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,key,"value-"+i);

Future<RecordMetadata> send = producer.send(record);

//同步主要是 通过异步阻塞的实现, send.get就会阻塞

RecordMetadata recordMetadata = send.get();

System.out.println(key + "partition : "+recordMetadata.partition()+" , offset : "+recordMetadata.offset());

}

// 所有的通道打开都需要关闭

producer.close();

}

5-4 Producer异步回调发送演示

/*

Producer异步发送带回调函数

*/

public static void producerSendWithCallback(){

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,"key-"+i,"value-"+i);

producer.send(record, new Callback() {

@Override

public void onCompletion(RecordMetadata recordMetadata, Exception e) {

System.out.println(

"partition : "+recordMetadata.partition()+" , offset : "+recordMetadata.offset());

}

});

}

// 所有的通道打开都需要关闭

producer.close();

}

5-5 Producer源码讲解

5-6 Producer生产者原理

5-7 Producer自定义Partition负载均衡

/*

Producer异步发送带回调函数和Partition负载均衡

*/

public static void producerSendWithCallbackAndPartition(){

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"10.139.12.150:9092");

properties.put(ProducerConfig.ACKS_CONFIG,"all");

properties.put(ProducerConfig.RETRIES_CONFIG,"0");

properties.put(ProducerConfig.BATCH_SIZE_CONFIG,"16384");

properties.put(ProducerConfig.LINGER_MS_CONFIG,"1");

properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG,"33554432");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG,"com.imooc.jiangzh.kafka.producer.SamplePartition");

// Producer的主对象

Producer<String,String> producer = new KafkaProducer<>(properties);

// 消息对象 - ProducerRecoder

for(int i=0;i<10;i++){

ProducerRecord<String,String> record =

new ProducerRecord<>(TOPIC_NAME,"key-"+i,"value-"+i);

producer.send(record, new Callback() {

@Override

public void onCompletion(RecordMetadata recordMetadata, Exception e) {

System.out.println(

"partition : "+recordMetadata.partition()+" , offset : "+recordMetadata.offset());

}

});

}

// 所有的通道打开都需要关闭

producer.close();

}

5-8 消息传递保障

发送应答ack

producer 需要server 接收到数据之后发出的确认接收的信号,此项配置就是指procuder需要多少个这样的确认信号。此配置实际上代表了数据备份的可用性。以下设置为常用选项:

- (1)acks=0: 设置为0 表示producer 不需要等待任何确认收到的信息。副本将立即加到socket buffer 并认为已经发送。没有任何保障可以保证此种情况下server 已经成功接收数据,同时重试配置不会发生作用(因为客户端不知道是否失败)回馈的offset 会总是设置为-1;

- (2)acks=1: 这意味着至少要等待leader已经成功将数据写入本地log,但是并没有等待所有follower 是否成功写入。这种情况下,如果follower 没有成功备份数据,而此时leader又挂掉,则消息会丢失。

- (3)acks=all: 这意味着leader 需要等待所有备份都成功写入日志,这种策略会保证只要有一个备份存活就不会丢失数据。这是最强的保证。

- (4)其他的设置,例如acks=2 也是可以的,这将需要给定的acks 数量,但是这种策略一般很少用。

properties.put(ProducerConfig.ACKS_CONFIG,"all");

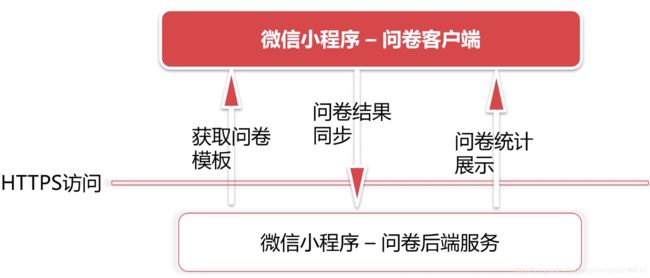

第6章 实战“慕问卷”开发 —— 微信小程序部分

6-1 Producer实现介绍 (02:36)

6-2 微信小程序业务介绍 (05:27)

6-3 基础环境准备 (08:23)

6-4 微信模板配置创建及解析 (13:14)

6-5 微信小程序后台逻辑层实现 (08:16)

6-6 微信小程序表现层基础构建 (04:20)

6-7 微信小程序表现层实现 (04:04)

6-8 微信小程序业务测试 (02:53)

6-9 Kafka Producer集成 (21:55)

1、Kafka Producer是线程安全的,建议多线程复用,如果每个线程都创建,出现大量的上下文切换或争抢的情况,影响Kafka效率

2、Kafka Producer的key是一个很重要的内容:

2.1 我们可以根据Key完成Partition的负载均衡

2.2 合理的Key设计,可以让Flink、Spark Streaming之类的实时分析工具做更快速处理

3、ack - all, kafka层面上就已经有了只有一次的消息投递保障,但是如果想真的不丢数据,最好自行处理异常

public void templateReported(JSONObject reportInfo) {

// kafka producer将数据推送至Kafka Topic

log.info("templateReported : [{}]", reportInfo);

String topicName = "jiangzh-topic";

// 发送Kafka数据

String templateId = reportInfo.getString("templateId");

JSONArray reportData = reportInfo.getJSONArray("result");

// 如果templateid相同,后续在统计分析时,可以考虑将相同的id的内容放入同一个partition,便于分析使用

ProducerRecord<String,Object> record =

new ProducerRecord<>(topicName,templateId,reportData);

/*

1、Kafka Producer是线程安全的,建议多线程复用,如果每个线程都创建,出现大量的上下文切换或争抢的情况,影响Kafka效率

2、Kafka Producer的key是一个很重要的内容:

2.1 我们可以根据Key完成Partition的负载均衡

2.2 合理的Key设计,可以让Flink、Spark Streaming之类的实时分析工具做更快速处理

3、ack - all, kafka层面上就已经有了只有一次的消息投递保障,但是如果想真的不丢数据,最好自行处理异常

*/

try{

producer.send(record);

}catch (Exception e){

// 将数据加入重发队列, redis,es,...

}

}

6-10 CA证书申请及域名绑定

6-11 Springboot工程集成SSL证书

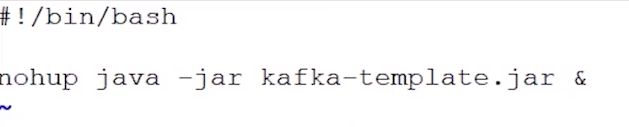

6-12 阿里云部署微信小程序后端

6-13 微信小程序部署准备工作 (06:15)

6-14 微信小程序编译部署 (10:53)

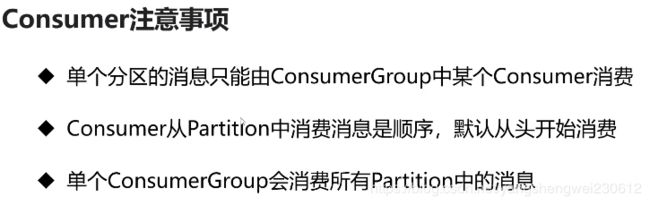

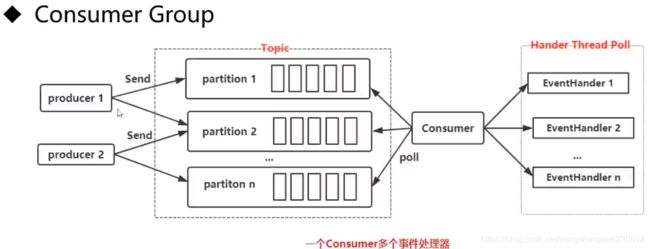

第7章 Kafka核心API——Consumer

7-1 Consumer介绍 (02:27)

7-2 Consumer之HelloWorld

/*

工作里这种用法,有,但是不推荐

*/

private static void helloworld(){

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "true");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String,String> consumer = new KafkaConsumer(props);

// 消费订阅哪一个Topic或者几个Topic

consumer.subscribe(Arrays.asList(TOPIC_NAME));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

for (ConsumerRecord<String, String> record : records)

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(),record.offset(), record.key(), record.value());

}

}

7-3 Consumer之手动提交

/*

手动提交offset

*/

private static void commitedOffset() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// 消费订阅哪一个Topic或者几个Topic

consumer.subscribe(Arrays.asList(TOPIC_NAME));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

for (ConsumerRecord<String, String> record : records) {

// 想把数据保存到数据库,成功就成功,不成功...

// TODO record 2 db

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

// 如果失败,则回滚, 不要提交offset

}

// 如果成功,手动通知offset提交

consumer.commitAsync();

}

}

7-4 Consumer演示观后感

7-5 Consumer单Partition提交offset

/*

手动提交offset,并且手动控制partition

*/

private static void commitedOffsetWithPartition() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// 消费订阅哪一个Topic或者几个Topic

consumer.subscribe(Arrays.asList(TOPIC_NAME));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

// 每个partition单独处理

for(TopicPartition partition : records.partitions()){

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

for (ConsumerRecord<String, String> record : pRecord) {

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

}

//这个一次消费单个partition,poll到的消息最后的一个消息的offset

long lastOffset = pRecord.get(pRecord.size() -1).offset();

// 单个partition中的offset,并且进行提交

Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>();

offset.put(partition,new OffsetAndMetadata(lastOffset+1));

// 提交offset

consumer.commitSync(offset);

System.out.println("=============partition - "+ partition +" end================");

}

}

}

7-6 Consumer手动控制一到多个分区

/*

手动提交offset,并且手动控制partition,更高级

*/

private static void commitedOffsetWithPartition2() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// jiangzh-topic - 0,1两个partition

TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0);

TopicPartition p1 = new TopicPartition(TOPIC_NAME, 1);

// 消费订阅哪一个Topic或者几个Topic

// consumer.subscribe(Arrays.asList(TOPIC_NAME));

// 消费订阅某个Topic的某个分区

consumer.assign(Arrays.asList(p0));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

// 每个partition单独处理

for(TopicPartition partition : records.partitions()){

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

for (ConsumerRecord<String, String> record : pRecord) {

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

}

long lastOffset = pRecord.get(pRecord.size() -1).offset();

// 单个partition中的offset,并且进行提交

Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>();

offset.put(partition,new OffsetAndMetadata(lastOffset+1));

// 提交offset

consumer.commitSync(offset);

System.out.println("=============partition - "+ partition +" end================");

}

}

}

7-7 Consumer多线程并发处理

kafka的producer是线程安全的

kafka的consumer不是线程安全的

- 方式一:这种类型是经典模式,每一个线程单独创建一个KafkaConsumer,用于保证线程安全

在对数据一致性有较高要求的时候适合使用方式一,方式一对partition有较好的管控能力,方便处理失败rollback重新处理

public class ConsumerThreadSample {

private final static String TOPIC_NAME="jiangzh-topic";

/*

这种类型是经典模式,每一个线程单独创建一个KafkaConsumer,用于保证线程安全

*/

public static void main(String[] args) throws InterruptedException {

KafkaConsumerRunner r1 = new KafkaConsumerRunner();

Thread t1 = new Thread(r1);

t1.start();

Thread.sleep(15000);

r1.shutdown();

}

public static class KafkaConsumerRunner implements Runnable{

private final AtomicBoolean closed = new AtomicBoolean(false);

private final KafkaConsumer consumer;

public KafkaConsumerRunner() {

Properties props = new Properties();

props.put("bootstrap.servers", "192.168.220.128:9092");

props.put("group.id", "test");

props.put("enable.auto.commit", "false");

props.put("auto.commit.interval.ms", "1000");

props.put("session.timeout.ms", "30000");

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

consumer = new KafkaConsumer<>(props);

TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0);

TopicPartition p1 = new TopicPartition(TOPIC_NAME, 1);

consumer.assign(Arrays.asList(p0,p1));

}

public void run() {

try {

while(!closed.get()) {

//处理消息

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

for (TopicPartition partition : records.partitions()) {

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

// 处理每个分区的消息

for (ConsumerRecord<String, String> record : pRecord) {

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(),record.offset(), record.key(), record.value());

}

// 返回去告诉kafka新的offset

long lastOffset = pRecord.get(pRecord.size() - 1).offset();

// 注意加1

consumer.commitSync(Collections.singletonMap(partition, new OffsetAndMetadata(lastOffset + 1)));

}

}

}catch(WakeupException e) {

if(!closed.get()) {

throw e;

}

}finally {

consumer.close();

}

}

public void shutdown() {

closed.set(true);

consumer.wakeup();

}

}

}

- 方式二: 先把数据拿下来,在对每一条数据进行多线程处理

方式二 线程中处理消息失败成功也不知到,不能手动提交offset

适合流式数据,对于数据处理正确性要求不高,及非业务系统,推给我数据我就处理,成功失败不重要

例如:定位gps数据上传

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.util.Arrays;

import java.util.Properties;

import java.util.concurrent.ArrayBlockingQueue;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.ThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

public class ConsumerRecordThreadSample {

private final static String TOPIC_NAME = "jiangzh-topic";

public static void main(String[] args) throws InterruptedException {

String brokerList = "192.168.220.128:9092";

String groupId = "test";

int workerNum = 5;

//创建一个CunsumerExecutor,也就是一个线程池

CunsumerExecutor consumers = new CunsumerExecutor(brokerList, groupId, TOPIC_NAME);

consumers.execute(workerNum);

Thread.sleep(1000000);

consumers.shutdown();

}

// Consumer处理

public static class CunsumerExecutor{

private final KafkaConsumer<String, String> consumer;

private ExecutorService executors;

public CunsumerExecutor(String brokerList, String groupId, String topic) {

Properties props = new Properties();

props.put("bootstrap.servers", brokerList);

props.put("group.id", groupId);

props.put("enable.auto.commit", "true");

props.put("auto.commit.interval.ms", "1000");

props.put("session.timeout.ms", "30000");

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

consumer = new KafkaConsumer<>(props);

consumer.subscribe(Arrays.asList(topic));

}

public void execute(int workerNum) {

executors = new ThreadPoolExecutor(workerNum, workerNum, 0L, TimeUnit.MILLISECONDS,

new ArrayBlockingQueue<>(1000), new ThreadPoolExecutor.CallerRunsPolicy());

while (true) {

ConsumerRecords<String, String> records = consumer.poll(200);

for (final ConsumerRecord record : records) {

executors.submit(new ConsumerRecordWorker(record));

}

}

}

public void shutdown() {

if (consumer != null) {

consumer.close();

}

if (executors != null) {

executors.shutdown();

}

try {

if (!executors.awaitTermination(10, TimeUnit.SECONDS)) {

System.out.println("Timeout.... Ignore for this case");

}

} catch (InterruptedException ignored) {

System.out.println("Other thread interrupted this shutdown, ignore for this case.");

Thread.currentThread().interrupt();

}

}

}

// 记录处理

public static class ConsumerRecordWorker implements Runnable {

private ConsumerRecord<String, String> record;

public ConsumerRecordWorker(ConsumerRecord record) {

this.record = record;

}

@Override

public void run() {

// 假如说数据入库操作

System.out.println("Thread - "+ Thread.currentThread().getName());

System.err.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

}

}

}

7-8 Consumer控制offset起始位置

- 手动指定offset的起始位置

/*

手动指定offset的起始位置,及手动提交offset

*/

private static void controlOffset() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// jiangzh-topic - 0,1两个partition

TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0);

// 消费订阅某个Topic的某个分区

consumer.assign(Arrays.asList(p0));

while (true) {

// 手动指定offset起始位置

/*

1、人为控制offset起始位置

2、如果出现程序错误,重复消费一次

*/

/*

1、第一次从0消费【一般情况】

2、比如一次消费了100条, offset置为101并且存入Redis

3、每次poll之前,从redis中获取最新的offset位置

4、每次从这个位置开始消费

*/

consumer.seek(p0, 700);

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

// 每个partition单独处理

for(TopicPartition partition : records.partitions()){

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

for (ConsumerRecord<String, String> record : pRecord) {

System.err.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

}

long lastOffset = pRecord.get(pRecord.size() -1).offset();

// 单个partition中的offset,并且进行提交

Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>();

offset.put(partition,new OffsetAndMetadata(lastOffset+1));

// 提交offset

consumer.commitSync(offset);

System.out.println("=============partition - "+ partition +" end================");

}

}

}

7-9 面试点:Consumer限流

/*

流量控制 - 限流

限流一般针对消费单个topic

*/

private static void controlPause() {

Properties props = new Properties();

props.setProperty("bootstrap.servers", "192.168.220.128:9092");

props.setProperty("group.id", "test");

props.setProperty("enable.auto.commit", "false");

props.setProperty("auto.commit.interval.ms", "1000");

props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer(props);

// jiangzh-topic - 0,1两个partition

TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0);

TopicPartition p1 = new TopicPartition(TOPIC_NAME, 1);

// 消费订阅某个Topic的某个分区

consumer.assign(Arrays.asList(p0));

long totalNum = 40;

while (true) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000));

// 每个partition单独处理

for(TopicPartition partition : records.partitions()){

List<ConsumerRecord<String, String>> pRecord = records.records(partition);

long num = 0;

for (ConsumerRecord<String, String> record : pRecord) {

System.out.printf("patition = %d , offset = %d, key = %s, value = %s%n",

record.partition(), record.offset(), record.key(), record.value());

/*

1、接收到record信息以后,去令牌桶中拿取令牌

2、如果获取到令牌,则继续业务处理

3、如果获取不到令牌, 则pause等待令牌

4、当令牌桶中的令牌足够, 则将consumer置为resume状态

*/

num++;

if(record.partition() == 0){

if(num >= totalNum){

consumer.pause(Arrays.asList(p0));

}

}

if(record.partition() == 1){

if(num == 40){

consumer.resume(Arrays.asList(p0));

}

}

}

long lastOffset = pRecord.get(pRecord.size() -1).offset();

// 单个partition中的offset,并且进行提交

Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>();

offset.put(partition,new OffsetAndMetadata(lastOffset+1));

// 提交offset

consumer.commitSync(offset);

System.out.println("=============partition - "+ partition +" end================");

}

}

}

7-10 面试点

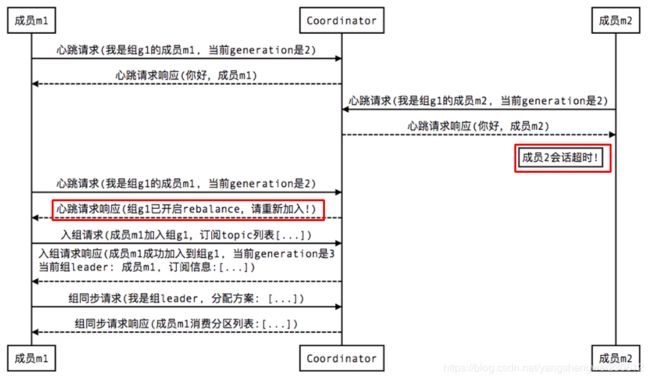

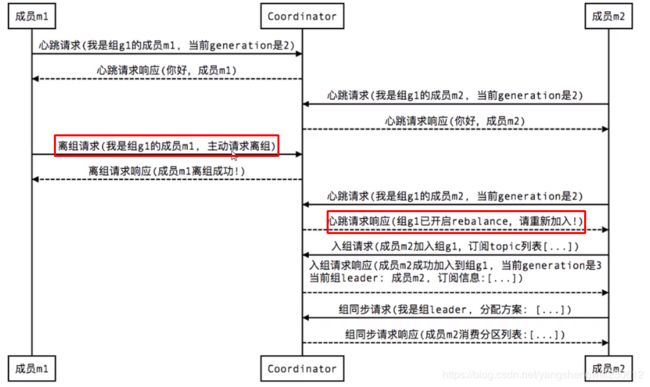

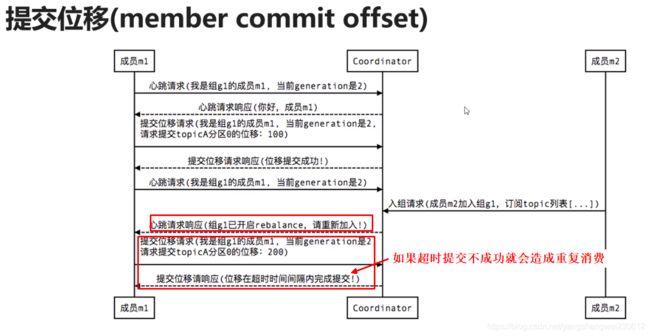

Consumer Rebalance解析

所有topic的分区总数应等于消费组中的消费者数,消费者大于总的分区数,就会有消费者闲置消费不到消息

出现rebalance的情况

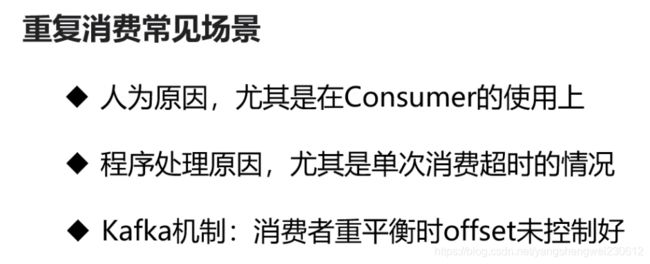

提交为一失败会造成重复消费

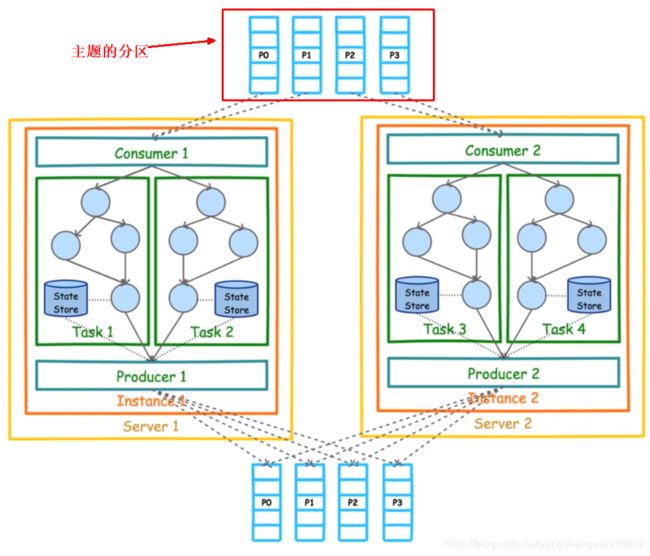

第8章 Kafka核心API——Stream(流处理)

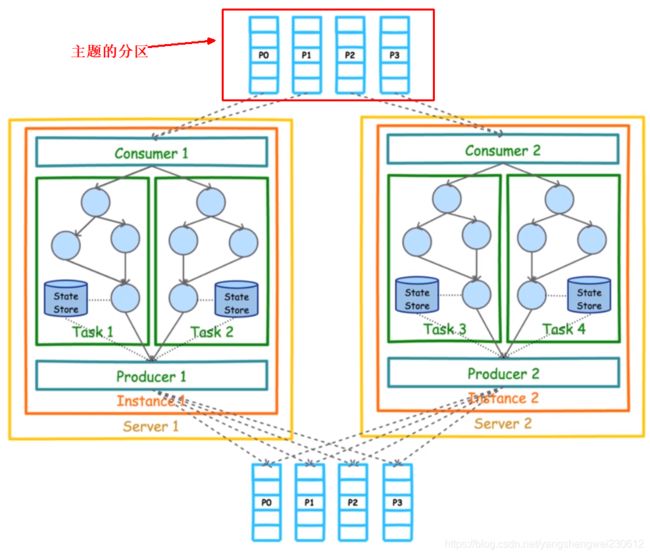

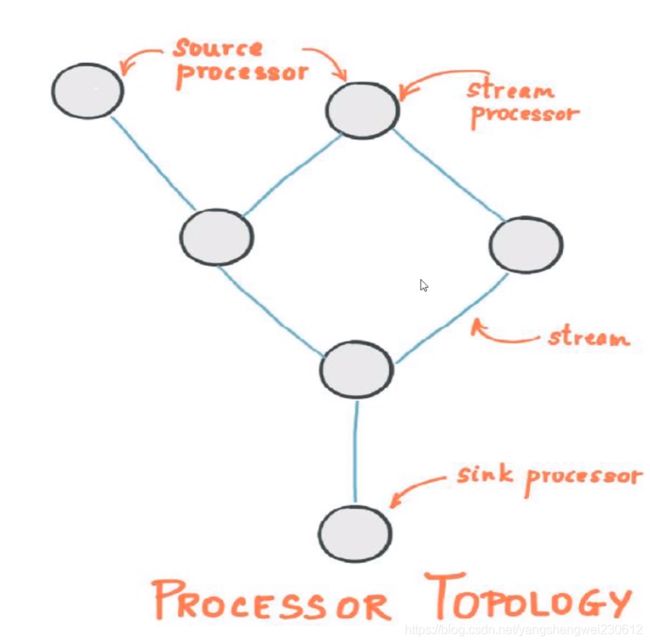

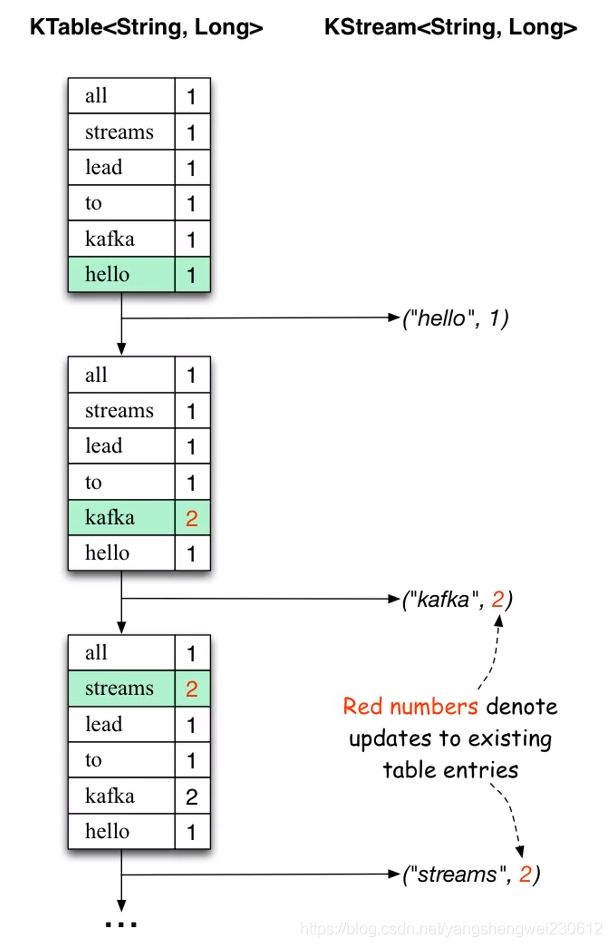

Kafka Stream的主要作用是:在kafka内部处理kafka存储的数据

8-2 Kafka Stream概念及初识高层架构图

8-3 Kafka Stream 核心概念讲解

8-4 Kafka Stream 演示准备

从一个或多个topic 经过Kafka Stream 处理传另外的一个或多个topic

<dependency>

<groupId>org.apache.kafkagroupId>

<artifactId>kafka-clientsartifactId>

<version>2.4.0version>

dependency>

<dependency>

<groupId>org.apache.kafkagroupId>

<artifactId>kafka-streamsartifactId>

<version>2.4.0version>

dependency>

import org.apache.kafka.common.serialization.Serdes;

import org.apache.kafka.streams.KafkaStreams;

import org.apache.kafka.streams.StreamsBuilder;

import org.apache.kafka.streams.StreamsConfig;

import org.apache.kafka.streams.kstream.KStream;

import org.apache.kafka.streams.kstream.KTable;

import org.apache.kafka.streams.kstream.Produced;

import java.util.Arrays;

import java.util.Locale;

import java.util.Properties;

public class StreamSample {

private static final String INPUT_TOPIC="jiangzh-stream-in";

private static final String OUT_TOPIC="jiangzh-stream-out";

public static void main(String[] args) {

Properties props = new Properties();

props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG,"192.168.220.128:9092");

props.put(StreamsConfig.APPLICATION_ID_CONFIG,"wordcount-app");

props.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());

props.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

// 构建流结构拓扑

final StreamsBuilder builder = new StreamsBuilder();

// 构建Wordcount

// wordcountStream(builder);

// 构建foreachStream

foreachStream(builder);

final KafkaStreams streams = new KafkaStreams(builder.build(), props);

streams.start();

}

// 定义流计算过程(从一个topic取数据,经过算子,直接输出)

static void foreachStream(final StreamsBuilder builder){

KStream<String,String> source = builder.stream(INPUT_TOPIC);

source

.flatMapValues(value -> Arrays.asList(value.toLowerCase(Locale.getDefault()).split(" ")))

.foreach((key,value)-> System.out.println(key + " : " + value));

}

// 定义流计算过程(从一个topic取数据,经过算子,再发送到另个topic)

static void wordcountStream(final StreamsBuilder builder){

// 不断从INPUT_TOPIC上获取新数据,并且追加到流上的一个抽象对象

KStream<String,String> source = builder.stream(INPUT_TOPIC);

// Hello World imooc

// KTable是数据集合的抽象对象

// 算子

final KTable<String, Long> count =

source

//先数据拆分(本次按空格拆分)

// flatMapValues -> 将一行数据拆分为多行数据 key 1 , value Hello World

// flatMapValues -> 将一行数据拆分为多行数据 key 1 , value Hello key 1 , value World

/*

key 1 , value Hello -> Hello 1 World 2

key 2 , value World

key 3 , value World

*/

.flatMapValues(value -> Arrays.asList(value.toLowerCase(Locale.getDefault()).split(" ")))

// 合并 -> 按value值合并

.groupBy((key, value) -> value)

// 统计出现的总数

.count();

// 将结果输入到OUT_TOPIC中

count.toStream().to(OUT_TOPIC, Produced.with(Serdes.String(),Serdes.Long()));

}

}

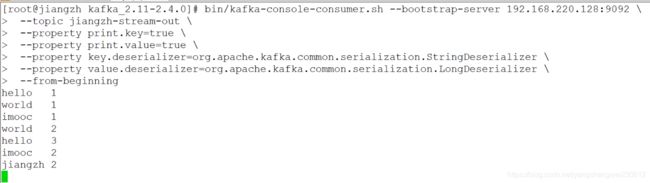

8-5 Kafka Stream使用演示 (14:12)

8-6 Kafka Stream程序解析 (09:09)

8-7 Kafka Stream算子演示讲解 (04:38)

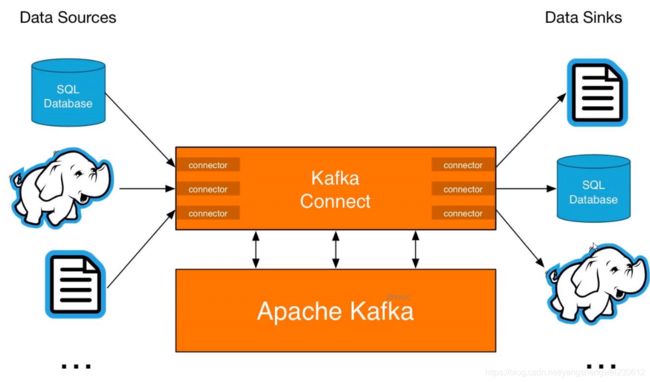

第9章 Kafka核心API——Connect(实际生产开发中用的很少)

9-1 Kafka Connect章节介绍 (00:37)

9-2 Kafka Connect基本概念介绍

9-3 Kakfa Connect环境准备 (13:51)

9-4 Kafka Connect Source和MySQL集成 (06:22)

9-5 Kafka Connect Sink和MySQL集成 (05:30)

9-6 Kafka Connect原理及使用场景介绍 (07:20)

第10章 Kafka集群部署与开发

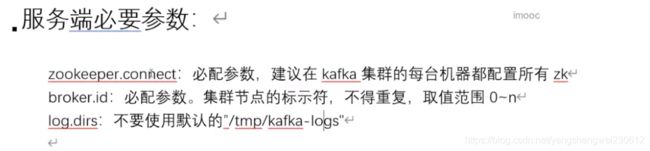

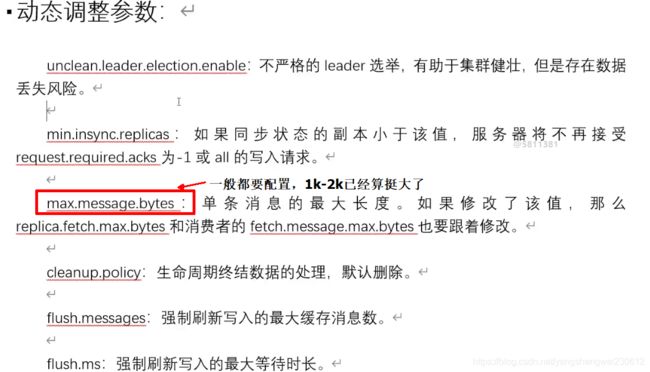

10-2 Kafka集群部署配置讲解

10-4 Kafka副本集-1

/*

创建Topic实例

*/

public static void createTopic() {

AdminClient adminClient = adminClient();

// 副本因子(三个副本,副本因子为三,可以保证三个副本分布三个broker中)

Short rs = 3;

NewTopic newTopic = new NewTopic(TOPIC_NAME, 3 , rs);

CreateTopicsResult topics = adminClient.createTopics(Arrays.asList(newTopic));

System.out.println("CreateTopicsResult : "+ topics);

}

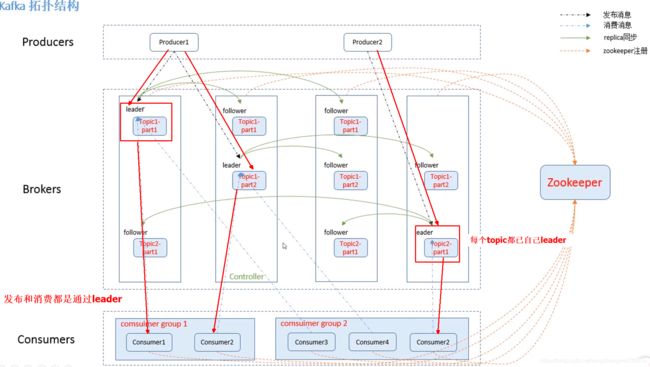

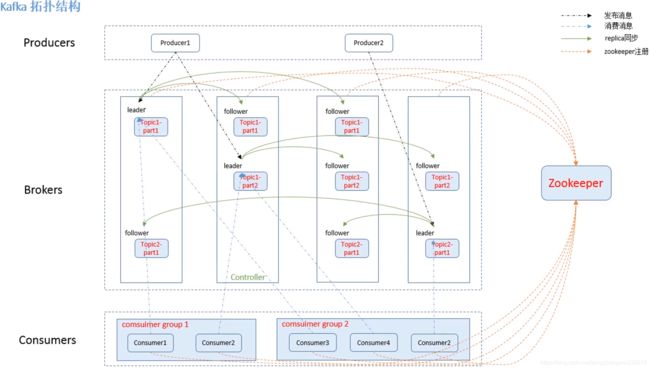

10-6 图解Kafka集群基本概念

再大的数据集一个topic有5-10patition已经很多,所以patition不要太多,太多主机受不了,一般集群中部署了kafka broker的数量要大于一个topic的patition的数量。

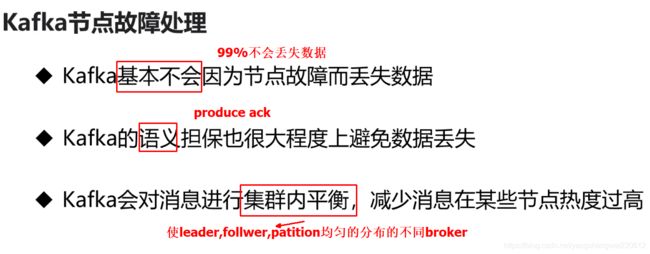

10-7 Kafka节点故障原因及处理方式

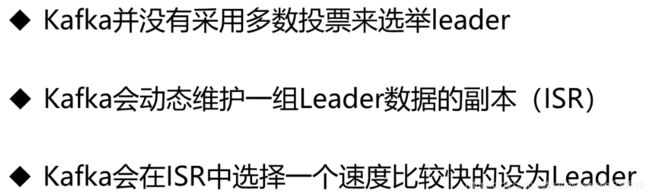

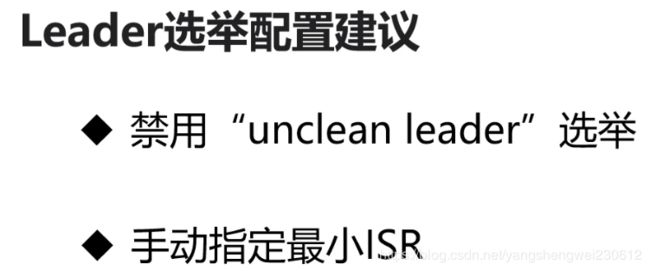

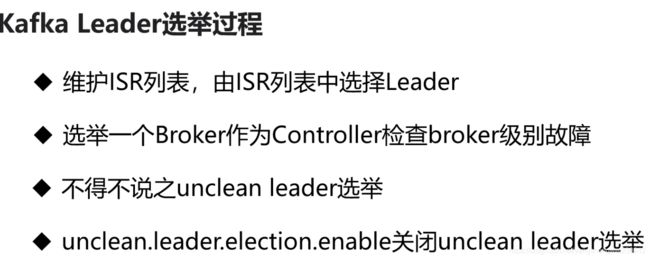

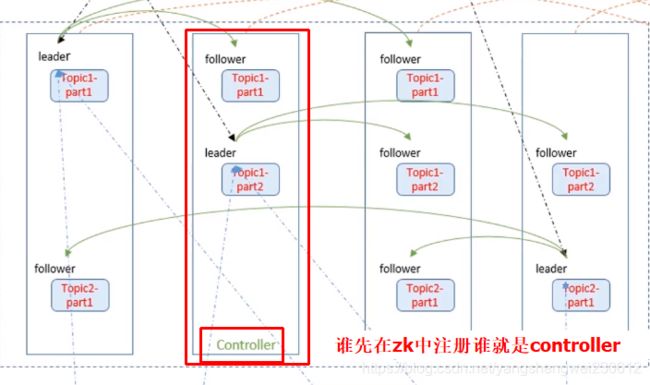

10-8 面试点:Kafka Leader选举机制

第11章 Kafka集群监控、安全与最佳实践

11-1 集群监控安全介绍 (01:20)

11-2 Kafka监控安装

kafka 开源监控–kafka-manager安装

11-3 Kafka监控界面讲解 (11:31)

11-4 Kafka SSL签名库生成 (08:38)

11-5 Kafka SSL服务端集成 (07:07)

11-6 Kafka SSL客户端集成 (09:11)

11-7 Kafka最佳实践介绍 (03:43)

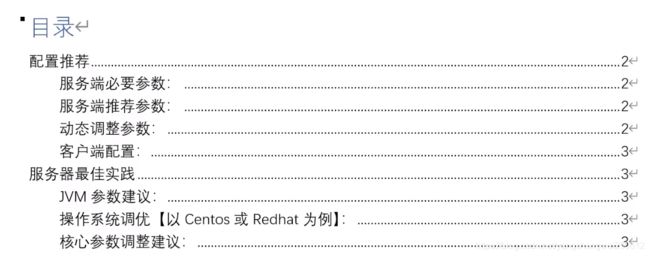

11-8 Kafka最佳实践配置项讲解

第12章 实战“慕问卷”开发 —— 集成微服务

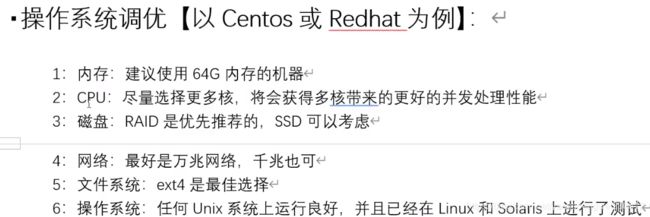

12-1 SpringCloud Config内容介绍 (01:45)

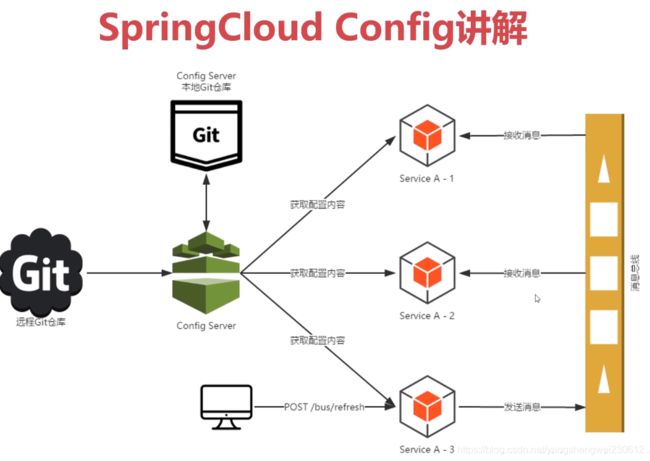

12-2 SpringCloud Config架构图介绍

12-3 SpringCloud演示环境准备

12-4 SpringCloud Config Server配置使用

org.springframework.cloud</groupId>

spring-cloud-config-server</artifactId>

</dependency>

spring.application.name=config-server

server.port=8900

spring.cloud.config.server.git.uri=https://github.com/jiangzh292679/imooc_conf.git

spring.cloud.config.label=master

spring.cloud.config.server.git.username=3078956183@qq.com

spring.cloud.config.server.git.password=imooc_jiangzh001

验证config server

http://localhost:8900/kafka/master

12-5 SpringCloud Client配置使用

org.springframework.boot</groupId>

spring-boot-starter-web</artifactId>

</dependency>

org.springframework.cloud</groupId>

spring-cloud-starter-config</artifactId>

</dependency>

bootstrap.yml配置:

spring:

application:

name: kafka

cloud:

config:

uri: http://localhost:8900/

label: master

12-6 SpringCloud Config动态刷新准备

## 动态刷新配置依赖

org.springframework.boot</groupId>

spring-boot-starter-actuator</artifactId>

</dependency>

## 增加访问point

management.endpoints.web.exposure.include=health, info, refresh

post请求访问/actuator/refresh

curl -XPOST http://localhost:7002/actuator/refresh

12-7 SpringCloud Config 动态刷新演示 (04:47)

12-8 SpringCloud Config配置使用环节回顾 (01:31)

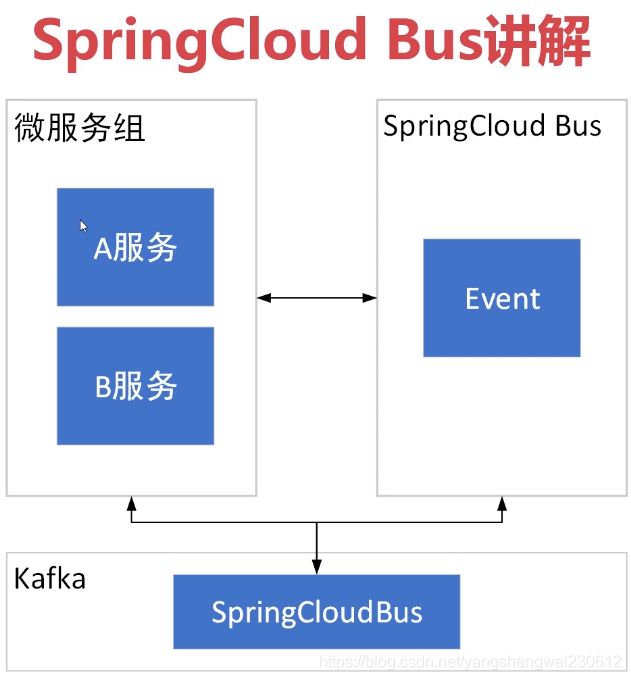

12-9 SpringCloud Bus内容介绍 (01:02)

12-10 SpringCloud Bus架构图讲解

12-11 SpringCloud Bus动态刷新使用演示

配置依赖:

org.springframework.cloud</groupId>

spring-cloud-starter-bus-kafka</artifactId>

</dependency>

Kafka配置:

spring.cloud.stream.kafka.binder.zkNodes=192.168.220.128:2181

spring.cloud.stream.kafka.binder.brokers=192.168.220.128:9092

management:

endpoints:

web:

exposure:

include: health, info, refresh, bus-refresh

配置刷新

curl -X POST http://localhost:7002/actuator/bus-refresh

测试:

http://localhost:7001/test

http://localhost:7002/test

12-12 SpringCloud Bus演示多服务动态刷新 (08:47)

第13章 Kafka面试点梳理

13-1 Kafka面试题内容介绍及面试建议

13-2 Kafka概念及优劣势分析

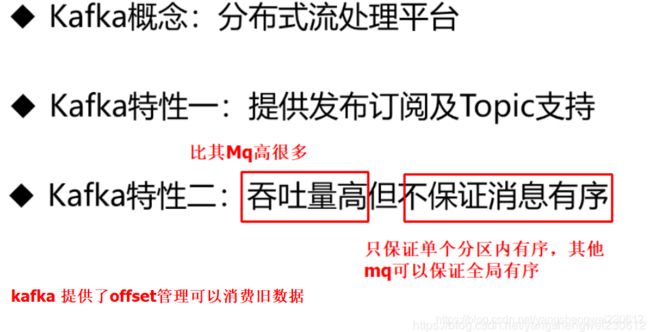

1.问题:kafka 与其他mq的不同

- kafka是一个流处理平台

- 虽然是一个流式处理平台,但提供了发布和订阅主题的功能,与其他的mq相同

- kafka有比其他mq高出很多的吞吐量

- kafka 提供offset 管理,可以消费历史消息。

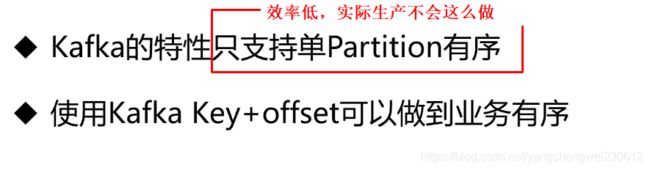

- 不保证数据全局有序,只能保证单个分区内有序,其他mq可以保证消息的全局有序

2.kafak 应用场景

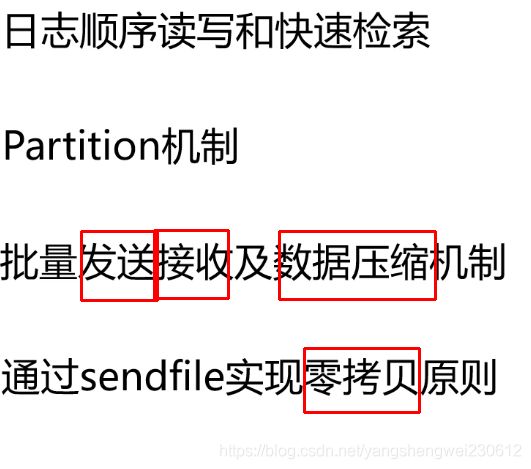

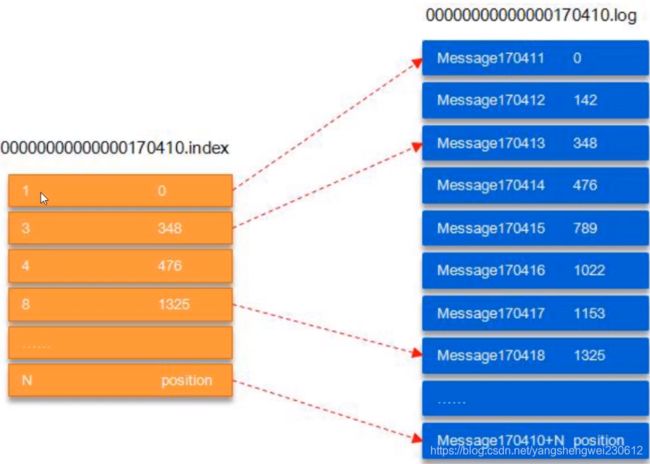

13-3 Kafka吞吐量大/速度块的原因分析

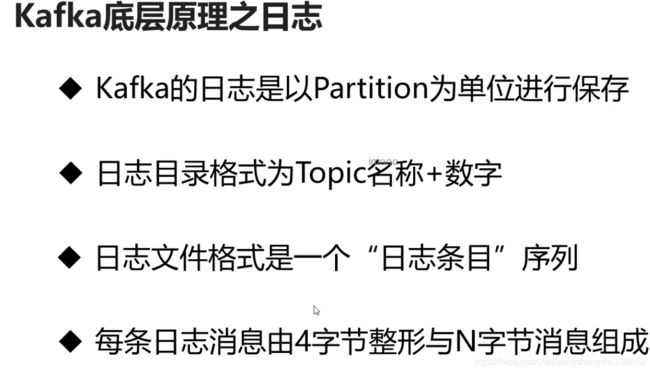

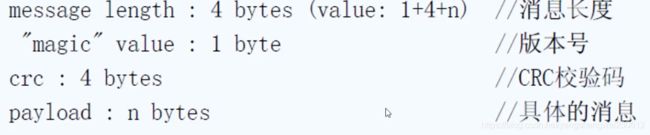

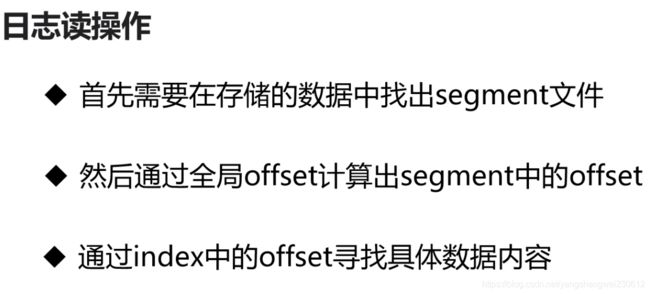

13-4 Kafka日志检索底层原理

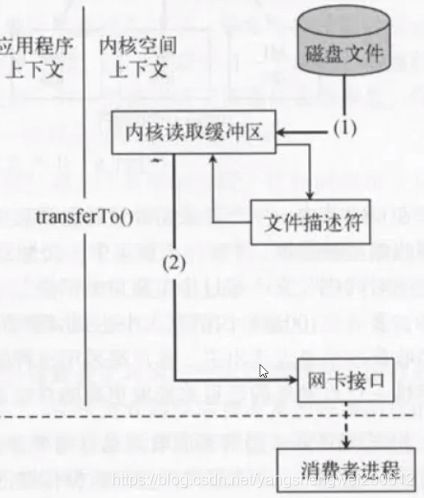

13-5 Kafka 零拷贝原理分析

kafka通过sendfil实现零拷贝

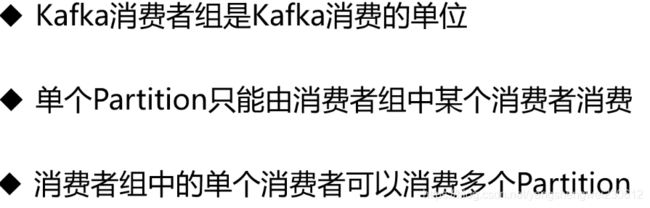

13-6 消费者组与消费者

13-7 Producer客户端

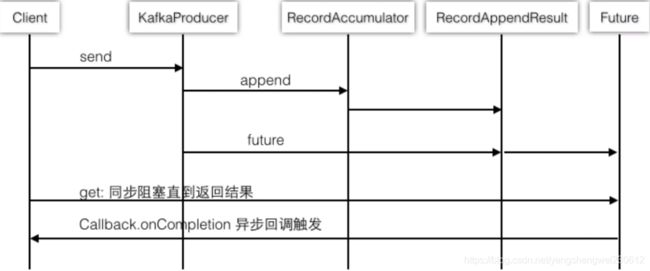

producer 的发送消息的过程:

producer 的时序图

在创建producer 的时候会启动一个专门的守护线程,这个守护线程是一直不停的在轮询,轮询的内容就是将消息发送的kafka上

producer 调用send并不是直接将数据发送的kafka上,而是先发送的一个队列上,满足一定条件后,这个守护线程会将消息推送给kafka,

获取future,producer client 可以调用get()同步阻塞直到返回结果

producer 的流程图

送消息进行序列化,让后通过分区器将消息发送到不同的分区,每个分区的上的消息被分成不同的批次,然后通过守护进程分批次发送的kafka broker,如果失败的再判断是否需要重试

producer 一般有俩个线程

一个是在创建producer 的时候会启动一个专门的守护线程来批量将消息推送到kafka

一个是在send消息的时候会另起一个一个线程,将消息追加到队列中

13-8 Kafka消息有序性处理 (保证有序性)

13-9 Kafka Topic删除背后的故事

实际生产环境中,删除topic的时候尽量先关闭kafka broker

13-10 消息重复消费和漏消费原理分析

- 独立管理offset 保存在redis中,再出现消费异常是从redis中读取offset

- 一个消费组中尽量一个消费者消费一个partition

消息漏消费

消息漏消费一般都是业务程序处理的原因,kafka本身很少会导致消息漏消费

13-11 消费者线程安全性分析

![]()

13-12 Kafka Leader选举分析

13-13 Kafka幂等性源码分析

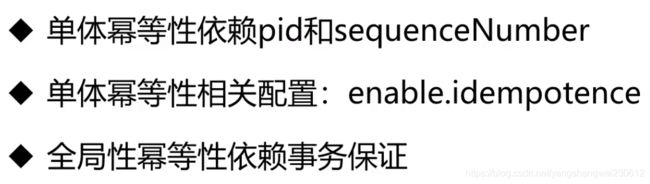

幂等性:多次请求返回结果一致

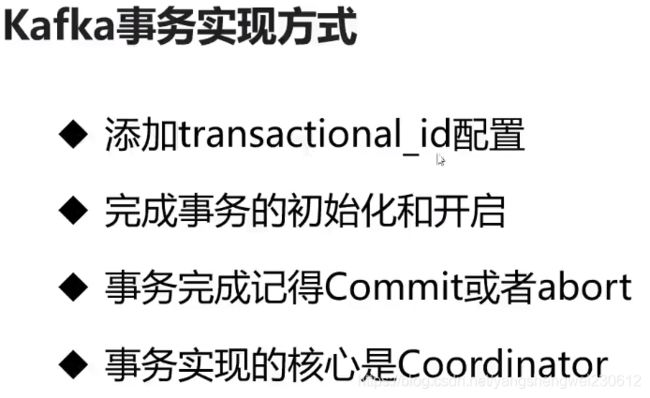

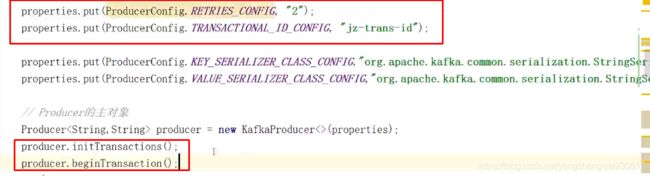

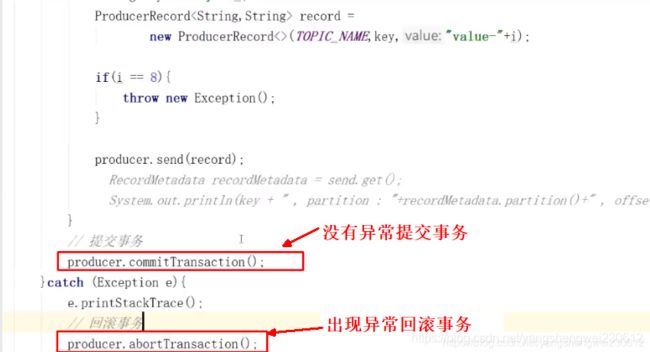

13-14 Kafka事务支持实现及原理分析

kafka的事务能不开启尽量不开启,开启事务一般会降低20%-30%的效率