Getting into Deep Learning (2): MNIST Handwritten Digit Recognition

Based on TensorFlow r1.5 + Anaconda3.6 + Windows.

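I spent a whole day on this. I more or less understand the overall workflow now, but there is still a lot I don't get. The hello world of deep learning is hard. The real problem came after training: when I tested with my own images, almost every prediction was wrong, which makes me feel the few correct ones were pure luck.

Here is the code. I left the original English comments in place because they are quite detailed. The key class is Estimator. During training it writes logs and saves the meta, data, index, and checkpoint files. I'm still not sure exactly how to use those files; the method I found may already be outdated, but the latest API still provides this functionality.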

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Date : 2018-01-30 13:37:25
# @Author : ZYM
'''This uses the latest high-level API, built around two key classes: Estimator and Dataset.'''
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

# Imports
import numpy as np
import tensorflow as tf

tf.logging.set_verbosity(tf.logging.INFO)

# Our application logic will be added here

def cnn_model_fn(features, labels, mode):
    """Model function for CNN."""
    # Input Layer
    # Reshape X to 4-D tensor: [batch_size, width, height, channels]
    # batch_size: size of the subset of examples to use when performing
    # gradient descent during training
    # MNIST images are 28x28 pixels, and have one color channel
    # x is the input image batch. We want to accept any number of images, so
    # batch_size is -1. If we feed a batch of 5, the shape is [5, 28, 28, 1]
    # and features["x"] holds 5*28*28 values. That completes the input layer.
    input_layer = tf.reshape(features["x"], [-1, 28, 28, 1])

    # Convolutional Layer #1
    # Computes 32 features using a 5x5 filter with ReLU activation.
    # Padding is added to preserve width and height.
    # Input Tensor Shape: [batch_size, 28, 28, 1]
    # Output Tensor Shape: [batch_size, 28, 28, 32]
    conv1 = tf.layers.conv2d(
        inputs=input_layer,
        filters=32,  # number of feature maps the convolution extracts per image
        kernel_size=[5, 5],  # filter size; kernel_size=5 also works when width == height
        padding="same",  # zero-pad the edges so the output keeps the input's width and height
        activation=tf.nn.relu  # activation applied to the convolution output; ReLU here
    )

    # Pooling Layer #1
    # First max pooling layer with a 2x2 filter and stride of 2
    # Input Tensor Shape: [batch_size, 28, 28, 32]
    # Output Tensor Shape: [batch_size, 14, 14, 32]
    pool1 = tf.layers.max_pooling2d(inputs=conv1, pool_size=[2, 2], strides=2)

    # Convolutional Layer #2
    # Computes 64 features using a 5x5 filter.
    # Padding is added to preserve width and height.
    # Input Tensor Shape: [batch_size, 14, 14, 32]
    # Output Tensor Shape: [batch_size, 14, 14, 64]
    conv2 = tf.layers.conv2d(
        inputs=pool1,
        filters=64,
        kernel_size=[5, 5],
        padding="same",
        activation=tf.nn.relu)

    # Pooling Layer #2
    # Second max pooling layer with a 2x2 filter and stride of 2
    # Input Tensor Shape: [batch_size, 14, 14, 64]
    # Output Tensor Shape: [batch_size, 7, 7, 64]
    pool2 = tf.layers.max_pooling2d(inputs=conv2, pool_size=[2, 2], strides=2)

    # Flatten tensor into a batch of vectors
    # Input Tensor Shape: [batch_size, 7, 7, 64]
    # Output Tensor Shape: [batch_size, 7 * 7 * 64]
    pool2_flat = tf.reshape(pool2, [-1, 7 * 7 * 64])

    # Dense Layer
    # Densely connected layer with 1024 neurons!!!
    # Input Tensor Shape: [batch_size, 7 * 7 * 64]
    # Output Tensor Shape: [batch_size, 1024]
    dense = tf.layers.dense(inputs=pool2_flat, units=1024, activation=tf.nn.relu)

    # Add dropout operation; 0.6 (1 - 0.4) probability that element will be kept
    dropout = tf.layers.dropout(
        inputs=dense, rate=0.4,
        training=mode == tf.estimator.ModeKeys.TRAIN  # dropout will only be performed if training is True
    )

    # Logits layer
    # Input Tensor Shape: [batch_size, 1024]
    # Output Tensor Shape: [batch_size, 10]
    logits = tf.layers.dense(inputs=dropout, units=10)

    predictions = {
        # Generate predictions (for PREDICT and EVAL mode)
        "classes": tf.argmax(input=logits, axis=1),  # index of the largest logit
        # Add `softmax_tensor` to the graph. It is used for PREDICT and by the
        # `logging_hook`.
        "probabilities": tf.nn.softmax(logits, name="softmax_tensor")
    }

    if mode == tf.estimator.ModeKeys.PREDICT:
        return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions)

    # Calculate Loss (for both TRAIN and EVAL modes)
    loss = tf.losses.sparse_softmax_cross_entropy(labels=labels, logits=logits)

    # Configure the Training Op (for TRAIN mode)
    if mode == tf.estimator.ModeKeys.TRAIN:
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.001)
        # This is the op we need to train
        train_op = optimizer.minimize(
            loss=loss,
            global_step=tf.train.get_global_step())
        return tf.estimator.EstimatorSpec(mode=mode, loss=loss, train_op=train_op)

    # Add evaluation metrics (for EVAL mode): accuracy
    eval_metric_ops = {
        "accuracy": tf.metrics.accuracy(
            labels=labels,  # the labels we supplied
            predictions=predictions["classes"]  # tf.argmax(input=logits, axis=1), from the logits layer
        )}
    return tf.estimator.EstimatorSpec(
        mode=mode, loss=loss, eval_metric_ops=eval_metric_ops)
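
The shape comments in the model function can be checked with a little arithmetic. The sketch below is not part of the original script: `same_conv_size`, `valid_pool_size`, and `trace_shapes` are hypothetical helper names, and it assumes stride-1 convolutions with "same" padding and 2x2 pooling with stride 2 and no padding, which is how the layers above are configured.

```python
import math

def same_conv_size(size, stride=1):
    """Output width/height of a convolution with padding="same"."""
    return math.ceil(size / stride)

def valid_pool_size(size, pool=2, stride=2):
    """Output width/height of a pooling layer without padding."""
    return (size - pool) // stride + 1

def trace_shapes(side=28):
    """Follow one spatial dimension and the channel count through the network."""
    shapes = []
    channels = 1
    shapes.append(("input", side, channels))
    side, channels = same_conv_size(side), 32
    shapes.append(("conv1", side, channels))
    side = valid_pool_size(side)
    shapes.append(("pool1", side, channels))
    side, channels = same_conv_size(side), 64
    shapes.append(("conv2", side, channels))
    side = valid_pool_size(side)
    shapes.append(("pool2", side, channels))
    return shapes

shapes = trace_shapes()
for name, side, channels in shapes:
    print(name, [side, side, channels])

# Size of each flattened vector fed to the dense layer.
flat_size = shapes[-1][1] ** 2 * shapes[-1][2]
print("flattened:", flat_size)
```

The final value is the 7 * 7 * 64 that appears in the reshape before the dense layer, so an input of a different size would need that constant changed as well.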

def main(unused_argv):
    # Load training and eval data
    mnist = tf.contrib.learn.datasets.load_dataset("mnist")
    train_data = mnist.train.images  # Returns np.array
    train_labels = np.asarray(mnist.train.labels, dtype=np.int32)
    eval_data = mnist.test.images  # Returns np.array
    eval_labels = np.asarray(mnist.test.labels, dtype=np.int32)

    # Create the Estimator
    mnist_classifier = tf.estimator.Estimator(
        model_fn=cnn_model_fn,  # the model function to use
        model_dir="./mnist_convnet_model"  # where the results are stored
    )

    # Set up logging for predictions
    # Log the values in the "Softmax" tensor with label "probabilities"
    # Log every 50 steps. The log tracks training progress and records the
    # values of probabilities.
    tensors_to_log = {"probabilities": "softmax_tensor"}
    logging_hook = tf.train.LoggingTensorHook(
        tensors=tensors_to_log, every_n_iter=50)

    # Train the model
    # Training goes through the Estimator class. Set up the input data and parameters.
    train_input_fn = tf.estimator.inputs.numpy_input_fn(
        x={"x": train_data},
        y=train_labels,
        batch_size=100,  # 100 samples per training step
        num_epochs=None,  # keep training until the given number of steps is reached
        shuffle=True)  # shuffle the training data
    # Call train() to run the training loop for the given number of steps.
    mnist_classifier.train(
        input_fn=train_input_fn,
        steps=20000,
        hooks=[logging_hook])

    # Evaluate the model and print results
    eval_input_fn = tf.estimator.inputs.numpy_input_fn(
        x={"x": eval_data},
        y=eval_labels,
        num_epochs=1,
        shuffle=False)
    eval_results = mnist_classifier.evaluate(input_fn=eval_input_fn)
    print(eval_results)


if __name__ == "__main__":
    tf.app.run()
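
Because num_epochs=None makes the input function repeat the data indefinitely, the steps argument alone decides how long training runs. The rough sketch below shows how steps and batch size translate into passes over the data. `epochs_covered` is a hypothetical helper, and the training-set size of 55000 is an assumption about what `mnist.train` contains, not something stated in this post.

```python
def epochs_covered(steps, batch_size, num_examples):
    """Number of passes over the training set made by `steps` mini-batches."""
    return steps * batch_size / num_examples

# steps and batch_size match the script above; 55000 is an assumed training-set size.
epochs = epochs_covered(steps=20000, batch_size=100, num_examples=55000)
print("about %.1f epochs" % epochs)
```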

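After training finishes, the saved data appears in the model folder. According to the method I found online, the files to use are the .meta file and the checkpoint.

The program has three steps. First, load my own image and preprocess it. Then redefine the variables. Finally, load the trained data and run the prediction.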

import tensorflow as tf
from PIL import Image

file_name = 'sample.png'
image = Image.open(file_name).convert('L')
data = list(image.getdata())
# Invert and scale the pixels to [0, 1]. Strictly speaking this is
# normalization rather than binarization. (Accuracy was low with or without it.)
data1 = [(255 - i) * 1.0 / 255.0 for i in data]  # this part is the same as the tutorial

x = tf.placeholder(tf.float32, [None, 784])
W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))

def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)

def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)

def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

W_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
x_image = tf.reshape(x, [-1, 28, 28, 1])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_pool_2x2(h_conv1)

W_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
h_pool2 = max_pool_2x2(h_conv2)

W_fc1 = weight_variable([7 * 7 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 7 * 7 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

keep_prob = tf.placeholder(tf.float32)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

W_fc2 = weight_variable([1024, 10])
b_fc2 = bias_variable([10])
y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

init_op = tf.global_variables_initializer()
# Read the .meta file.
# NOTE: import_meta_graph adds the Estimator's saved graph next to the
# variables defined above, and restore() only fills the imported variables.
# y_conv therefore still runs on the freshly initialized weights, which is a
# likely reason the predictions look random.
saver = tf.train.import_meta_graph("./mnist_convnet_model/model.ckpt-14692.meta")
# saver = tf.train.Saver()

with tf.Session() as sess:
    sess.run(init_op)
    # Read the checkpoint
    saver.restore(sess, tf.train.latest_checkpoint('./mnist_convnet_model'))
    prediction = tf.argmax(y_conv, 1)
    # Feed the preprocessed pixels and disable dropout (keep_prob=1.0) for inference
    predint = prediction.eval(feed_dict={x: [data1], keep_prob: 1.0}, session=sess)
    print('recognize result:')
    print("%g\n" % predint[0])

PS: My image is a digit I handwrote directly on a 28*28 canvas in a paint program, and it is already binarized. If the trained model can't even recognize that, all I can say is: I don't think this works!
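
Since the test image was drawn by hand, it is worth checking that the input really follows the MNIST convention before blaming the model: a bright digit on a zero background, floats in [0, 1], flattened to 784 values. The sketch below is only an illustration under those assumptions. `to_mnist_vector` is a hypothetical helper, and a synthetic array stands in for the image file.

```python
import numpy as np

def to_mnist_vector(gray, invert=True):
    """Convert a 28x28 grayscale array (0..255) into an MNIST-style input vector.

    gray   : array of shape (28, 28) with values 0..255
    invert : True for black-on-white drawings (e.g. from a paint program)
    """
    gray = np.asarray(gray, dtype=np.float32)
    if gray.shape != (28, 28):
        raise ValueError("expected a 28x28 image, got %r" % (gray.shape,))
    if invert:
        gray = 255.0 - gray  # background becomes 0, strokes become bright
    return (gray / 255.0).reshape(784)  # scale to [0, 1] and flatten

# Synthetic stand-in for a drawing: white canvas with a black vertical stroke.
canvas = np.full((28, 28), 255, dtype=np.uint8)
canvas[4:24, 13:15] = 0

vec = to_mnist_vector(canvas)
print(vec.shape, vec.min(), vec.max())
print("non-zero pixels:", int(np.count_nonzero(vec)))
```

For a real file, the array could come from `np.asarray(Image.open(file_name).convert('L'))`. If most of the 784 values are non-zero, the image has probably not been inverted.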

--------------------------------Feb 11 update-------------------------------------------

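For making MNIST predictions with the Estimator class, see the post "Testing MNIST Training Results with Your Own Images (TensorFlow 1.5 + CNN)" (《使用自己的图片测试MNIST训练效果(TensorFlow1.5+CNN)》).

I no longer use this kind of low-level API. I took another look today: saving and restoring are still documented on the official site, and they still go through the tf.train.Saver().save() and restore() methods. There is also a way to create a SavedModel.

As for prediction: I no longer save the model. Right after training, I run prediction directly on my own data. With images from the MNIST dataset the results are fine, with a recognition rate of basically 100%. With my own images, even after image processing, the results are still poor. I'm currently training with the low-level API (two layers, 10000 iterations) and will post the results later; it's a very old computer and CPU only.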

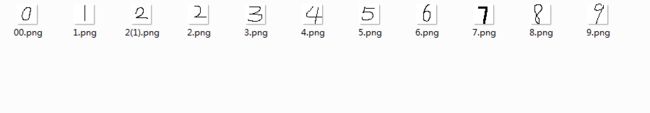

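Here is a piece of code that covers training, evaluation, and prediction. It should be the simplest version. It tests with my own images from a folder. Below are my images

Some images cropped from MNIST (note: do not invert the image when using MNIST images)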

import os
import tensorflow as tf
from PIL import Image
import numpy as np

# Collect the paths of all files under a folder
def filename(folder):
    file_name = []
    for root, dirs, names in os.walk(folder):
        for name in names:
            file_name.append(os.path.join(root, name))
    return file_name

# Load an image and preprocess it. My images are black digits on a white
# background, the opposite of MNIST, so there is an extra inversion step.
def img(name):
    image = Image.open(name).convert("L")
    array = np.asarray(image, dtype="float32")
    # For MNIST crops or other white-on-black images, skip the inversion below.
    array = abs(255 - array)
    # MNIST training pixels are floats in [0, 1], so scale to the same range.
    array = array / 255.0
    return array.reshape(-1)

# Model: training, evaluation, prediction
def my_model():
    # Load the MNIST data
    from tensorflow.examples.tutorials.mnist import input_data
    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

    x = tf.placeholder(tf.float32, [None, 784])
    w = tf.Variable(tf.zeros([784, 10]))
    b = tf.Variable(tf.zeros([10]))
    y = tf.nn.softmax(tf.matmul(x, w) + b)
    y_ = tf.placeholder("float", [None, 10])
    cross_entropy = -tf.reduce_sum(y_ * tf.log(y))
    # Gradient descent
    train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

    # Initialize variables
    init = tf.global_variables_initializer()
    sess = tf.Session()
    sess.run(init)

    # Train for 1000 steps
    for i in range(1000):
        batch_xs, batch_ys = mnist.train.next_batch(100)
        sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

    # Evaluation
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
    print(sess.run(accuracy, feed_dict={x: mnist.test.images, y_: mnist.test.labels}))

    name = filename("./photo/own")  # change to your own path
    # Prediction: only the predicted class is printed.
    predict_op = tf.argmax(y, 1)  # build the op once, outside the loop
    for n in name:
        image_data = img(n)
        prediction = sess.run(predict_op, feed_dict={x: [image_data]})
        print(prediction)

my_model()
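
The model in `my_model` is plain softmax regression: y = softmax(xW + b), with the loss summed as -sum(y_ * log(y)). To make the arithmetic concrete, here is a small NumPy sketch of the forward pass and the loss. It is not the TensorFlow implementation: the inputs are random stand-ins for images, and the max-subtraction inside `softmax` is an added numerical-stability step that the script above does not have.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def cross_entropy(y_true, y_pred):
    """Summed cross-entropy, the same form as -reduce_sum(y_ * log(y))."""
    return -np.sum(y_true * np.log(y_pred))

rng = np.random.RandomState(0)
x = rng.rand(3, 784).astype(np.float32)           # three fake "images"
w = np.zeros((784, 10), dtype=np.float32)         # zero-initialized, like the script
b = np.zeros(10, dtype=np.float32)
y_true = np.eye(10, dtype=np.float32)[[3, 1, 4]]  # one-hot labels for classes 3, 1, 4

y_pred = softmax(x.dot(w) + b)
loss = cross_entropy(y_true, y_pred)
print(y_pred[0])
print(loss)
```

With all-zero weights every class gets probability 0.1, so the loss for three samples is 3 * ln(10). Training only has to push it down from there.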

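Using the tutorial's method, after 10000 training iterations

6 and 9 were not recognized. The high-level API didn't recognize them either. The problem is probably my images.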
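
One possible cause, which is an assumption and not something verified in this post, is that a hand-drawn digit sits in a different position than MNIST digits do. According to the MNIST description, the digits were size-normalized into a 20x20 box and then centered in the 28x28 image by their center of mass. The sketch below covers only the centering step. `center_by_mass` is a hypothetical helper, and it assumes a white-on-black image with the digit small enough that shifting does not wrap around the edges.

```python
import numpy as np

def center_by_mass(img):
    """Shift a 2-D image so its intensity center of mass lands on the image center.

    Assumes a white-on-black digit. np.roll wraps pixels around the edges,
    so this sketch is only suitable when the shifted digit stays inside the frame.
    """
    img = np.asarray(img, dtype=np.float32)
    total = img.sum()
    if total == 0:
        return img  # blank image: nothing to center
    rows, cols = np.indices(img.shape)
    row_center = (rows * img).sum() / total
    col_center = (cols * img).sum() / total
    # The target is the geometric center of the pixel grid.
    row_shift = int(round((img.shape[0] - 1) / 2.0 - row_center))
    col_shift = int(round((img.shape[1] - 1) / 2.0 - col_center))
    return np.roll(np.roll(img, row_shift, axis=0), col_shift, axis=1)

# A small blob drawn in the top-left corner of a 28x28 canvas.
digit = np.zeros((28, 28), dtype=np.float32)
digit[2:6, 3:7] = 1.0

centered = center_by_mass(digit)
rows, cols = np.nonzero(centered)
print("rows", rows.min(), rows.max(), "cols", cols.min(), cols.max())
```

Running the images through a step like this before prediction would show whether positioning explains the misses on 6 and 9.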