Downloading an Image Gallery with a jsoup Crawler

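Skills you need

The ability to analyze HTML source: extracting image URLs from the markup and working out the crawl logic

Using cookies to analyze how request parameters affect the data returned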

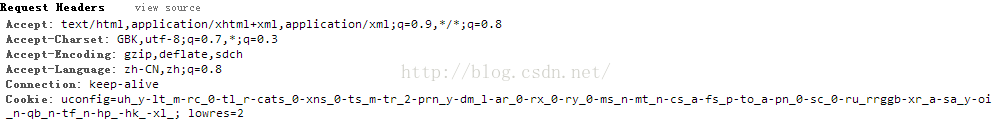

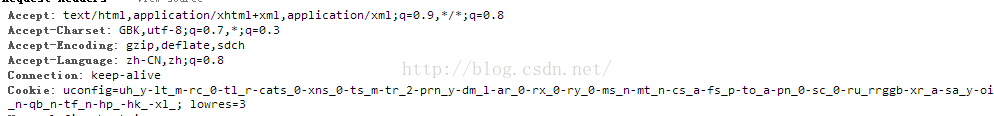

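Analyzing the cookie:

Comparing image 1 and image 2 shows that to get the higher-resolution images, the request must carry the cookie lowres=3. That leads to the getDocument method, which fetches a page's source with the required cookies attached.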
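To make the role of the cookie concrete, the sketch below shows how a cookie map ends up on the wire as a single `Cookie` request header. It uses only the standard library. The class name `CookieHeaderSketch` and the helper `toCookieHeader` are illustrative names that do not appear in the original program, and the `uconfig` value is shortened to a placeholder.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

public class CookieHeaderSketch {

    // Joins name=value pairs with "; ", which is the format of an
    // HTTP Cookie request header value.
    public static String toCookieHeader(Map<String, String> cookies) {
        StringJoiner joiner = new StringJoiner("; ");
        for (Map.Entry<String, String> entry : cookies.entrySet()) {
            joiner.add(entry.getKey() + "=" + entry.getValue());
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps insertion order, so the header is deterministic.
        Map<String, String> cookies = new LinkedHashMap<>();
        cookies.put("lowres", "3");
        cookies.put("uconfig", "placeholder-value"); // hypothetical, shortened value
        // prints: Cookie: lowres=3; uconfig=placeholder-value
        System.out.println("Cookie: " + toCookieHeader(cookies));
    }
}
```

jsoup builds this header itself when a map is passed to `cookies(...)`, as `getDocument` does, so the helper is only here to show what the server receives.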

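public Document getDocument(String url) throws IOException {
    // Cookies the site needs in order to serve the higher-resolution images
    Map<String, String> cookiesmap = new HashMap<>();
    cookiesmap.put("lowres", "3");
    cookiesmap.put("uconfig", "uh_y-lt_m-rc_0-tl_r-cats_0-xns_0-ts_m-tr_2-prn_y-dm_l-ar_0-rx_0-ry_0-ms_n-mt_n-cs_a-fs_p-to_a-pn_0-sc_0-ru_rrggb-xr_a-sa_y-oi_n-qb_n-tf_n-hp_-hk_-xl_");
    // Fetch and parse the page at url (15-second timeout)
    return Jsoup.connect(url).timeout(15000).cookies(cookiesmap).get();
}

With the cookie sorted out, the next step is reading the HTML. The returned source contains a fragment like the one in image 3, in which the h1 holds the gallery's title.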

public String getTitle(Document doc)

{

Elements Listh1 = doc.getElementsByAttributeValue("id","gn");//获取id未gn的h1 html元素

for (Element element :Listh1) {

return element.text();//返回标签中的值

}

return null;

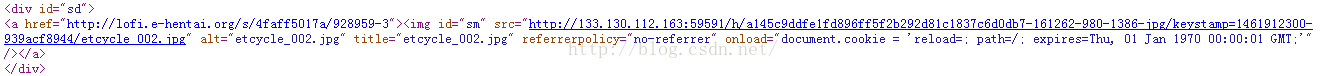

}如果没有id或者class的多元素嵌套,例如获取实际图片文件的地址

public String getImageUrl(Document doc) {
    // Find the div whose id is "sd"
    Elements Listdeiv = doc.getElementsByAttributeValue("id", "sd");
    for (Element element : Listdeiv) {
        // Look at the img elements beneath it
        Elements links = element.getElementsByTag("img");
        for (Element link : links) {
            // src is the image URL and alt is used as the file name; join them with "+"
            return link.attr("src") + "+" + link.attr("alt");
        }
    }
    return null; // no image found
}

The complete, simple program follows:

import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;

import java.io.*;
import java.net.URL;
import java.net.URLConnection;
import java.util.*;

public class webant {

    public static void main(String[] args) {
        webant ant = new webant();
        // Placeholder: replace the string with the URL of the gallery to download
        ant.setup("URL of the gallery to download");
    }

    // Fetch and parse the source of a page
    public Document getDocument(String url) throws IOException {
        Map<String, String> cookiesmap = new HashMap<>();
        cookiesmap.put("lowres", "3");
        cookiesmap.put("uconfig", "uh_y-lt_m-rc_0-tl_r-cats_0-xns_0-ts_m-tr_2-prn_y-dm_l-ar_0-rx_0-ry_0-ms_n-mt_n-cs_a-fs_p-to_a-pn_0-sc_0-ru_rrggb-xr_a-sa_y-oi_n-qb_n-tf_n-hp_-hk_-xl_");
        return Jsoup.connect(url).timeout(15000).cookies(cookiesmap).get();
    }

    // Collect the entry links (one per image page) found on a gallery page
    public void getListMainEnter(List<String> enterul, Document doc) {
        Elements ListDiv = doc.getElementsByAttributeValue("class", "gi");
        for (Element element : ListDiv) {
            Elements links = element.getElementsByTag("a");
            for (Element link : links) {
                String linkHref = link.attr("href");
                enterul.add(linkHref);
            }
        }
    }

    // Get the URL of the next page, or null on the last page
    public String getNextPageUrl(Document doc) {
        Elements ListDiv = doc.getElementsByAttributeValue("id", "ia");
        for (Element element : ListDiv) {
            Elements links = element.getElementsByTag("a");
            for (Element link : links) {
                String text = link.text();
                // The pager link whose text contains "Next" points to the next page
                if (text.contains("Next")) {
                    return link.attr("href");
                }
            }
        }
        return null;
    }

    // Get the gallery title
    public String getTitle(Document doc) {
        Elements Listh1 = doc.getElementsByAttributeValue("id", "gn");
        for (Element element : Listh1) {
            return element.text();
        }
        return null;
    }

    // Get the image file URL and file name, joined as "src+alt"
    public String getImageUrl(Document doc) {
        Elements Listdeiv = doc.getElementsByAttributeValue("id", "sd");
        for (Element element : Listdeiv) {
            Elements links = element.getElementsByTag("img");
            for (Element link : links) {
                return link.attr("src") + "+" + link.attr("alt");
            }
        }
        return null;
    }

    // Download a file into the directory filePath under the name imagename
    public void download(String filePath, String imageurl, String imagename) throws IOException {
        URL url = new URL(imageurl);
        // Open the connection
        URLConnection con = url.openConnection();
        // Connection timeout: 60 s
        con.setConnectTimeout(60 * 1000);
        // Create the target directory if it does not exist yet
        File sf = new File(filePath);
        if (!sf.exists()) {
            sf.mkdirs();
        }
        // try-with-resources closes both streams even if the copy fails
        try (InputStream is = con.getInputStream();
             OutputStream os = new FileOutputStream(new File(sf, imagename))) {
            byte[] bs = new byte[1024]; // 1 KB buffer
            int len;                    // number of bytes read in each pass
            while ((len = is.read(bs)) != -1) {
                os.write(bs, 0, len);
            }
        }
    }

    // Main download flow
    public void setup(String url) {
        webant ant = new webant();
        Document doc;
        List<String> enterurl = new ArrayList<>();
        List<String> exceptionurls = new ArrayList<>();
        String title = null;
        try {
            doc = ant.getDocument(url);
            title = ant.getTitle(doc);
            title = title.replace("|", ""); // remove "|", which the title may contain
            // Walk every page of the gallery and collect the image-page links into one list
            while (doc != null) {
                ant.getListMainEnter(enterurl, doc);
                String nextPageUrl = ant.getNextPageUrl(doc);
                if (nextPageUrl == null)
                    doc = null;
                else
                    doc = ant.getDocument(nextPageUrl);
            }
            Document maindoc;
            // Visit each image page and download its image
            for (String pageurl : enterurl) {
                try {
                    maindoc = ant.getDocument(pageurl);
                } catch (Exception ex) {
                    // Remember pages that failed to load so they can be retried later
                    System.out.println(pageurl);
                    exceptionurls.add(pageurl);
                    continue;
                }
                String imageUrl = ant.getImageUrl(maindoc);
                if (imageUrl == null) {
                    // No image found on this page: record it and move on
                    exceptionurls.add(pageurl);
                    continue;
                }
                String[] parts = imageUrl.split("\\+"); // [0] = image URL, [1] = file name
                try {
                    // "local-directory\\" is a placeholder: replace it with any local directory
                    ant.download("local-directory\\" + title, parts[0], parts[1]);
                } catch (Exception ex) {
                    System.out.println(parts[0]); // image URL that failed to download
                }
            }
            if (exceptionurls.size() > 0) {
                ant.errorUrlWtireOut(exceptionurls, title);
            }
        } catch (Exception ex) {
            ex.printStackTrace(); // report the failure instead of silently swallowing it
        } finally {
            System.out.println("===============Complete================");
        }
    }

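    // Write the URLs that failed to errorurl.txt
    public void errorUrlWtireOut(List<String> enterurl, String title) throws IOException {
        // "local-directory\\" is a placeholder: use the same local directory as in setup
        File txtfile = new File("local-directory\\" + title + "\\errorurl.txt");
        txtfile.getParentFile().mkdirs(); // the directory may not exist if every download failed
        txtfile.createNewFile();
        try (FileWriter fileWriter = new FileWriter(txtfile)) {
            fileWriter.write(title + "\n"); // first line: gallery title
            for (String failedUrl : enterurl) {
                fileWriter.write(failedUrl + "\n"); // one failed URL per line
            }
            fileWriter.flush();
        }
    }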

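    // Retry the downloads listed in the failed-URL file
    public void errorUrlReGet(String url) {
        webant ant = new webant();
        List<String> enterurl = new ArrayList<>();
        String title = "";
        // url is the directory containing errorurl.txt, the file written by errorUrlWtireOut
        try (Scanner in = new Scanner(new File(url + "\\errorurl.txt"))) {
            int i = 0;
            while (in.hasNextLine()) {
                String str = in.nextLine();
                if (i == 0)
                    title = str; // first line is the gallery title
                else
                    enterurl.add(str);
                i++;
            }
        } catch (FileNotFoundException e) {
            System.out.println("Cannot find " + url + "\\errorurl.txt");
            return;
        }
        Document maindoc;
        for (String pageurl : enterurl) {
            try {
                maindoc = ant.getDocument(pageurl);
            } catch (Exception ex) {
                System.out.println(pageurl); // page still cannot be loaded
                continue;
            }
            String imageUrl = ant.getImageUrl(maindoc);
            if (imageUrl == null) {
                continue; // no image found on this page
            }
            String[] parts = imageUrl.split("\\+"); // [0] = image URL, [1] = file name
            try {
                // "local-directory\\" is a placeholder: replace it with any local directory
                ant.download("local-directory\\" + title, parts[0], parts[1]);
            } catch (Exception ex) {
                System.out.println(parts[0]); // image URL that failed to download
            }
        }
    }

}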
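
The `setup` method removes only `|` from the gallery title before using it as a directory name, yet Windows also rejects several other characters in file and directory names. The sketch below is one possible hardening and is not part of the original program: the class `FileNameSketch` and the helper `sanitize` are hypothetical names, and the sample title is made up.

```java
public class FileNameSketch {

    // Replaces every character that Windows rejects in file names
    // (\ / : * ? " < > |) with an underscore, then trims surrounding whitespace.
    public static String sanitize(String name) {
        return name.replaceAll("[\\\\/:*?\"<>|]", "_").trim();
    }

    public static void main(String[] args) {
        // Made-up title containing several forbidden characters
        System.out.println(sanitize("Gallery | Part 1: \"Summer\"?"));
    }
}
```

Assuming such a helper were added to the class, it could be applied to the title in place of `title.replace("|", "")`, and to the `alt` text before that text is used as a file name.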