ROS开发笔记(9)——ROS 深度强化学习应用之keras版本dqn代码分析

在ROS开发笔记(8)中构建了ROS中DQN算法的开发环境,在此基础上,对算法代码进行了分析,并做了简单的修改:

修改1 : 改变了保存模型参数在循环中的位置,原来是每个10整数倍数回合里面每一步都修改(相当于修改episode_step次),改成了每个10整数倍数回合修改一次

# if e % 10 == 0:

# agent.model.save(agent.dirPath + str(e) + '.h5')

# with open(agent.dirPath + str(e) + '.json', 'w') as outfile:

# param_keys = ['epsilon']

# param_values = [agent.epsilon]

# param_dictionary = dict(zip(param_keys, param_values))

# json.dump(param_dictionary, outfile)

修改2 :改变了agent.updateTargetModel()的位置,原来是每次done都修改,改成了每经过target_up步后修改

# if global_step % agent.target_update == 0:

# agent.updateTargetModel()

# rospy.loginfo("UPDATE TARGET NETWORK")

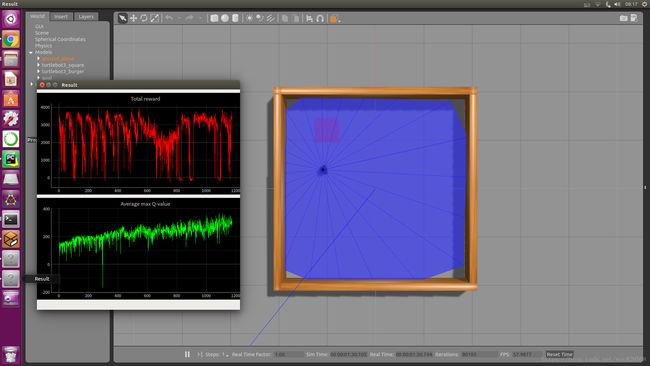

结果如下:

下面是修改后的代码及其注释:

#!/usr/bin/env python

#-*- coding:utf-8 -*-

#################################################################################

# Copyright 2018 ROBOTIS CO., LTD.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#################################################################################

# 原作者

# Authors: Gilbert #

import rospy

import os

import json

import numpy as np

import random

import time

import sys

sys.path.append(os.path.dirname(os.path.abspath(os.path.dirname(__file__))))

from collections import deque

from std_msgs.msg import Float32MultiArray

from keras.models import Sequential, load_model

from keras.optimizers import RMSprop

from keras.layers import Dense, Dropout, Activation

# 导入 Env

from src.turtlebot3_dqn.environment_stage_1 import Env

#最大回合数

EPISODES = 3000

#强化学习网络

class ReinforceAgent():

#初始化函数

def __init__(self, state_size, action_size):

# 创建 result 话题

self.pub_result = rospy.Publisher('result', Float32MultiArray, queue_size=5)

# 获取当前文件完整路径

self.dirPath = os.path.dirname(os.path.realpath(__file__))

# 基于当前路径生成模型保存路径前缀

self.dirPath = self.dirPath.replace('turtlebot3_dqn/nodes', 'turtlebot3_dqn/save_model/stage_1_')

# 初始化 result 话题

self.result = Float32MultiArray()

#wsc self.load_model = False

#wsc self.load_episode = 0

# 导入前期训练的模型

self.load_model =True

self.load_episode = 150

# self.load_model =False

# self.load_episode = 0

#状态数

self.state_size = state_size

#动作数

self.action_size = action_size

# 单个回合最大步数

self.episode_step = 6000

# 每2000次更新一次target网络参数

self.target_update = 2000

# 折扣因子 计算reward时用 当下反馈最重要 时间越久的影响越小

self.discount_factor = 0.99

# 学习率learning_rate 学习率决定了参数移动到最优值的速度快慢。

# 如果学习率过大,很可能会越过最优值;反而如果学习率过小,优化的效率可能过低,长时间算法无法收敛。

self.learning_rate = 0.00025

# 初始ϵ——epsilon

# 探索与利用原则

# 探索强调发掘环境中的更多信息,并不局限在已知的信息中;

# 利用强调从已知的信息中最大化奖励;

# greedy策略只注重了后者,没有涉及前者;

# ϵ-greedy策略兼具了探索与利用,它以ϵ的概率从所有的action中随机抽取一个,以1−ϵ的概率抽取能获得最大化奖励的action。

self.epsilon = 1.0

#随着模型的训练,已知的信息越来越可靠,epsilon应该逐步衰减

self.epsilon_decay = 0.99

#最小的epsilon_min,低于此值后不在利用epsilon_decay衰减

self.epsilon_min = 0.05

#batch_size 批处理大小

# 合理范围内,增大 Batch_Size

# 内存利用率提高了,大矩阵乘法的并行化效率提高

# 跑完一次epoch(全数据集)所需要的迭代次数减小,对于相同数据量的处理速度进一步加快

# 在一定范围内,一般来说batch size越大,其确定的下降方向越准,引起的训练震荡越小

# 盲目增大batch size 有什么坏处

# 内存利用率提高了,但是内存容量可能撑不住了

# 跑完一次epoch(全数据集)所需要的迭代次数减少,但是想要达到相同的精度,其所花费的时间大大增加了,从而对参数的修正也就显得更加缓慢

# batch size 大到一定的程度,其确定的下降方向已经基本不再变化

self.batch_size = 64

# 用于 experience replay 的 agent.memory

# DQN的经验回放池(agent.memory)大于train_start才开始训练网络(agent.trainModel)

self.train_start = 64

# 用队列存储experience replay 数据,并设置队列最大长度

self.memory = deque(maxlen=1000000)

# 网络模型构建

self.model = self.buildModel()

#target网络构建

self.target_model = self.buildModel()

self.updateTargetModel()

# 训练可以加载之前保存的模型参数进行

if self.load_model:

self.model.set_weights(load_model(self.dirPath+str(self.load_episode)+".h5").get_weights())

with open(self.dirPath+str(self.load_episode)+'.json') as outfile:

param = json.load(outfile)

self.epsilon = param.get('epsilon')

#wsc self.epsilon = 0.5

# 网络模型构建

def buildModel(self):

# Sequential序列模型是一个线性的层次堆栈

model = Sequential()

# 设置dropout,防止过拟合

dropout = 0.2

# 添加一层全连接层,输入大小为input_shape=(self.state_size,),输出大小为64,激活函数为relu,权值初始化方法为lecun_uniform

model.add(Dense(64, input_shape=(self.state_size,), activation='relu', kernel_initializer='lecun_uniform'))

# 添加一层全连接层,输出大小为64,激活函数为relu,权值初始化方法为lecun_uniform

model.add(Dense(64, activation='relu', kernel_initializer='lecun_uniform'))

# 添加dropout层

model.add(Dropout(dropout))

# 添加一层全连接层,输出大小为action_size,权值初始化方法为lecun_uniform

model.add(Dense(self.action_size, kernel_initializer='lecun_uniform'))

# 添加一层linear激活层

model.add(Activation('linear'))

# 优化算法RMSprop是AdaGrad算法的改进。鉴于神经网络都是非凸条件下的,RMSProp在非凸条件下结果更好,改变梯度累积为指数衰减的移动平均以丢弃遥远的过去历史。

# 经验上,RMSProp被证明有效且实用的深度学习网络优化算法。rho=0.9为衰减系数,epsilon=1e-06为一个小常数,保证被小数除的稳定性

model.compile(loss='mse', optimizer=RMSprop(lr=self.learning_rate, rho=0.9, epsilon=1e-06))

# model.summary():打印出模型概况

model.summary()

return model

# 计算Q值,用到reward(当前env回馈),done,以及有taget_net网络计算得到的next_target

def getQvalue(self, reward, next_target, done):

if done:

return reward

else:

return reward + self.discount_factor * np.amax(next_target)

# eval_net用于预测 q_eval

# target_net 用于预测 q_target 值

# 将eval net权重赋给target net

def updateTargetModel(self):

self.target_model.set_weights(self.model.get_weights())

#基于ϵ——epsilon策略选择动作

def getAction(self, state):

if np.random.rand() <= self.epsilon:

self.q_value = np.zeros(self.action_size)

return random.randrange(self.action_size)

else:

q_value = self.model.predict(state.reshape(1, len(state)))

self.q_value = q_value

return np.argmax(q_value[0])

#将经验数据存入经验池 当前状态state,基于当前状态选择的动作action,执行动作获得的回报reward,执行动作后环境变成的next_state,以及done

def appendMemory(self, state, action, reward, next_state, done):

self.memory.append((state, action, reward, next_state, done))

# 训练网络模型

def trainModel(self, target=False):

mini_batch = random.sample(self.memory, self.batch_size)

X_batch = np.empty((0, self.state_size), dtype=np.float64)

Y_batch = np.empty((0, self.action_size), dtype=np.float64)

for i in range(self.batch_size):

states = mini_batch[i][0]

actions = mini_batch[i][1]

rewards = mini_batch[i][2]

next_states = mini_batch[i][3]

dones = mini_batch[i][4]

# 计算q_value

q_value = self.model.predict(states.reshape(1, len(states)))

self.q_value = q_value

#计算next_target

if target:

next_target = self.target_model.predict(next_states.reshape(1, len(next_states)))

else:

next_target = self.model.predict(next_states.reshape(1, len(next_states)))

# 计算 next_q_value

next_q_value = self.getQvalue(rewards, next_target, dones)

X_batch = np.append(X_batch, np.array([states.copy()]), axis=0)

Y_sample = q_value.copy()

Y_sample[0][actions] = next_q_value

Y_batch = np.append(Y_batch, np.array([Y_sample[0]]), axis=0)

if dones:

X_batch = np.append(X_batch, np.array([next_states.copy()]), axis=0)

Y_batch = np.append(Y_batch, np.array([[rewards] * self.action_size]), axis=0)

self.model.fit(X_batch, Y_batch, batch_size=self.batch_size, epochs=1, verbose=0)

if __name__ == '__main__':

rospy.init_node('turtlebot3_dqn_stage_1')

pub_result = rospy.Publisher('result', Float32MultiArray, queue_size=5)

pub_get_action = rospy.Publisher('get_action', Float32MultiArray, queue_size=5)

result = Float32MultiArray()

get_action = Float32MultiArray()

state_size = 26

action_size = 5

env = Env(action_size)

agent = ReinforceAgent(state_size, action_size)

scores, episodes = [], []

global_step = 0

start_time = time.time()

# 循环EPISODES个回合

for e in range(agent.load_episode + 1, EPISODES):

done = False

state = env.reset()

score = 0

# 每10个回合保存一次网络模型参数

if e % 10 == 0:

agent.model.save(agent.dirPath + str(e) + '.h5')

with open(agent.dirPath + str(e) + '.json', 'w') as outfile:

param_keys = ['epsilon']

param_values = [agent.epsilon]

param_dictionary = dict(zip(param_keys, param_values))

json.dump(param_dictionary, outfile)

# 每个回合循环episode_step步

for t in range(agent.episode_step):

# 选择动作

action = agent.getAction(state)

# Env动作一步,返回next_state, reward, done

next_state, reward, done = env.step(action)

# 存经验值

agent.appendMemory(state, action, reward, next_state, done)

# agent.memory至少要收集agent.train_start(64)个才能开始训练

# global_step 没有达到 agent.target_update之前要用到target网络的地方由eval代替

if len(agent.memory) >= agent.train_start:

if global_step <= agent.target_update:

agent.trainModel()

else:

agent.trainModel(True)

# 将回报值累加成score

score += reward

state = next_state

# 发布 get_action 话题

get_action.data = [action, score, reward]

pub_get_action.publish(get_action)

# 超过500步时设定为超时,回合结束

if t >= 500:

rospy.loginfo("Time out!!")

done = True

if done:

# 发布result话题

result.data = [score, np.max(agent.q_value)]

pub_result.publish(result)

scores.append(score)

episodes.append(e)

# 计算运行时间

m, s = divmod(int(time.time() - start_time), 60)

h, m = divmod(m, 60)

rospy.loginfo('Ep: %d score: %.2f memory: %d epsilon: %.2f time: %d:%02d:%02d',

e, score, len(agent.memory), agent.epsilon, h, m, s)

break

global_step += 1

# 每经过agent.target_update,更新target网络参数

if global_step % agent.target_update == 0:

agent.updateTargetModel()

rospy.loginfo("UPDATE TARGET NETWORK")

# 更新衰减epsilon值,直到低于等于agent.epsilon_min

if agent.epsilon > agent.epsilon_min:

agent.epsilon *= agent.epsilon_decay