Augmented Reality in Python

1. Placing a cube on a plane

Implementation:

from pylab import *

from PIL import Image

# If you have PCV installed, these imports should work

from PCV.geometry import homography, camera

# from PCV.localdescriptors

import sift

"""

This is the augmented reality and pose estimation cube example from Section 4.3.

"""

def cube_points(c, wid):
    """ Creates a list of points for plotting
        a cube with plot. (the first 5 points are
        the bottom square, some sides repeated). """
    p = []
    # bottom
    p.append([c[0] - wid, c[1] - wid, c[2] - wid])
    p.append([c[0] - wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] - wid, c[2] - wid])
    p.append([c[0] - wid, c[1] - wid, c[2] - wid])  # same as first to close plot
    # top
    p.append([c[0] - wid, c[1] - wid, c[2] + wid])
    p.append([c[0] - wid, c[1] + wid, c[2] + wid])
    p.append([c[0] + wid, c[1] + wid, c[2] + wid])
    p.append([c[0] + wid, c[1] - wid, c[2] + wid])
    p.append([c[0] - wid, c[1] - wid, c[2] + wid])  # same as first to close plot
    # vertical sides
    p.append([c[0] - wid, c[1] - wid, c[2] + wid])
    p.append([c[0] - wid, c[1] + wid, c[2] + wid])
    p.append([c[0] - wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] + wid, c[2] + wid])
    p.append([c[0] + wid, c[1] - wid, c[2] + wid])
    p.append([c[0] + wid, c[1] - wid, c[2] - wid])
    return array(p).T

def my_calibration(sz):
    """
    Calibration function for the camera (iPhone4) used in this example.
    """
    row, col = sz
    fx = 2555 * col / 2592
    fy = 2586 * row / 1936
    K = diag([fx, fy, 1])
    K[0, 2] = 0.5 * col
    K[1, 2] = 0.5 * row
    return K

# compute features

sift.process_image('D:/img/1.jpg', 'im0.sift')

l0, d0 = sift.read_features_from_file('im0.sift')

sift.process_image('D:/img/2.jpg', 'im1.sift')

l1, d1 = sift.read_features_from_file('im1.sift')

# match features and estimate homography

matches = sift.match_twosided(d0, d1)

ndx = matches.nonzero()[0]

fp = homography.make_homog(l0[ndx, :2].T)

ndx2 = [int(matches[i]) for i in ndx]

tp = homography.make_homog(l1[ndx2, :2].T)

model = homography.RansacModel()

H, inliers = homography.H_from_ransac(fp, tp, model)

# camera calibration

K = my_calibration((747, 1000))

# 3D points at plane z=0 with sides of length 0.2

box = cube_points([0, 0, 0.1], 0.1)

# project bottom square in first image

cam1 = camera.Camera(hstack((K, dot(K, array([[0], [0], [-1]])))))

# first points are the bottom square

box_cam1 = cam1.project(homography.make_homog(box[:, :5]))

# use H to transfer points to the second image

box_trans = homography.normalize(dot(H, box_cam1))

# compute second camera matrix from cam1 and H

cam2 = camera.Camera(dot(H, cam1.P))

A = dot(linalg.inv(K), cam2.P[:, :3])

A = array([A[:, 0], A[:, 1], cross(A[:, 0], A[:, 1])]).T

cam2.P[:, :3] = dot(K, A)

# project with the second camera

box_cam2 = cam2.project(homography.make_homog(box))

# plotting

im0 = array(Image.open('D:/img/1.JPG'))

im1 = array(Image.open('D:/img/2.JPG'))

figure()

imshow(im0)

plot(box_cam1[0, :], box_cam1[1, :], linewidth=3)

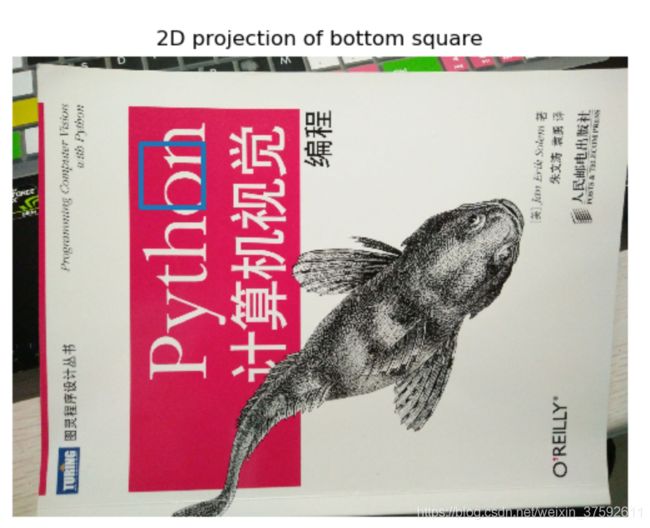

title('2D projection of bottom square')

axis('off')

figure()

imshow(im1)

plot(box_trans[0, :], box_trans[1, :], linewidth=3)

title('2D projection transfered with H')

axis('off')

figure()

imshow(im1)

plot(box_cam2[0, :], box_cam2[1, :], linewidth=3)

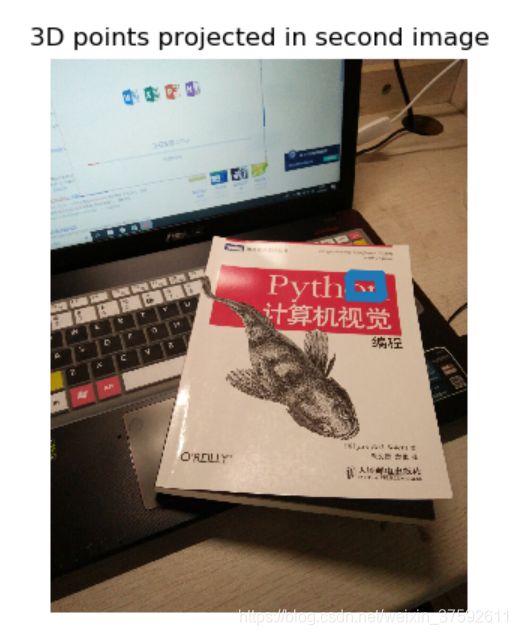

title('3D points projected in second image')

axis('off')

show()结果:

图1 图2 图3

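This example uses a planar object as a marker to compute the projection matrix for a new view. Features in the image are matched against the aligned marker, a homography is computed from the matches, and the homography is then used to compute the camera pose. Figure 1 shows the template image with a blue square. Figure 2 shows an image taken from an unknown viewpoint that contains the same square, transformed by the estimated homography. Figure 3 shows the cube transformed with the computed camera matrix.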
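The step from homography to camera pose can be checked in isolation on synthetic data. The sketch below uses NumPy only and mirrors the lines in the listing that build `cam2` from `cam1` and `H`. The helper name `camera_from_homography`, the rotation angles, and the translation are made up for illustration, and the homography is built with an exact scale. A homography estimated with RANSAC is only defined up to scale, so real data will not reproduce the ground truth this cleanly.

```python
import numpy as np

def camera_from_homography(K, H, P1):
    """Second camera matrix from the first camera P1 and the plane homography H."""
    P2 = H @ P1
    A = np.linalg.inv(K) @ P2[:, :3]
    # For a marker on the plane z=0 only the first two columns are constrained
    # by H, so the third rotation column is rebuilt as their cross product.
    A = np.column_stack((A[:, 0], A[:, 1], np.cross(A[:, 0], A[:, 1])))
    P2[:, :3] = K @ A
    return P2

# Synthetic setup (all values are illustrative assumptions).
K = np.array([[1000.0, 0.0, 500.0], [0.0, 1000.0, 373.5], [0.0, 0.0, 1.0]])
t1 = np.array([0.0, 0.0, -1.0])
P1 = K @ np.column_stack((np.eye(3), t1))       # same form as cam1 in the listing

ax, az = 0.3, 0.2                               # rotation angles in radians
Rx = np.array([[1, 0, 0], [0, np.cos(ax), -np.sin(ax)], [0, np.sin(ax), np.cos(ax)]])
Rz = np.array([[np.cos(az), -np.sin(az), 0], [np.sin(az), np.cos(az), 0], [0, 0, 1]])
R = Rz @ Rx
t2 = np.array([0.1, -0.2, -1.5])
P2_true = K @ np.column_stack((R, t2))

# Homography induced by the plane z=0 between the two views.
M1 = np.column_stack((np.eye(3)[:, 0], np.eye(3)[:, 1], t1))
M2 = np.column_stack((R[:, 0], R[:, 1], t2))
H = K @ M2 @ np.linalg.inv(M1) @ np.linalg.inv(K)

P2_est = camera_from_homography(K, H, P1)
print(np.allclose(P2_est, P2_true))
```

With a noisy, scale-normalized `H` the first two columns of `A` are neither unit length nor exactly orthogonal, which is why the OpenGL code later re-orthogonalizes the rotation with an SVD.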

2. Placing a virtual object in an image
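The listing in this section passes the calibration matrix K to OpenGL through `gluPerspective`, which expects a vertical field of view in degrees and an aspect ratio rather than focal lengths. A minimal sketch of that conversion, using the same formulas as `set_projection_from_camera` and the focal lengths from `my_calibration`; the helper name `perspective_params` is hypothetical and no OpenGL context is needed to run it:

```python
import math

def perspective_params(fx, fy, width, height):
    """Vertical field of view (degrees) and aspect ratio for gluPerspective."""
    fovy = 2 * math.degrees(math.atan(0.5 * height / fy))
    aspect = (width * fy) / (height * fx)
    return fovy, aspect

# Focal lengths as computed by my_calibration((747, 1000)).
width, height = 1000, 747
fx = 2555 * width / 2592
fy = 2586 * height / 1936

fovy, aspect = perspective_params(fx, fy, width, height)
print(fovy, aspect)
```

The aspect ratio is corrected by `fy / fx` so that pixels that are not exactly square still map onto the viewport correctly.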

import math

import pickle

import sys

from pylab import *

from OpenGL.GL import *

from OpenGL.GLU import *

from OpenGL.GLUT import *

import pygame, pygame.image

from pygame.locals import *

from PCV.geometry import homography, camera

import sift

def cube_points(c, wid):
    """ Creates a list of points for plotting
        a cube with plot. (the first 5 points are
        the bottom square, some sides repeated). """
    p = []
    # bottom
    p.append([c[0] - wid, c[1] - wid, c[2] - wid])
    p.append([c[0] - wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] - wid, c[2] - wid])
    p.append([c[0] - wid, c[1] - wid, c[2] - wid])  # same as first to close plot
    # top
    p.append([c[0] - wid, c[1] - wid, c[2] + wid])
    p.append([c[0] - wid, c[1] + wid, c[2] + wid])
    p.append([c[0] + wid, c[1] + wid, c[2] + wid])
    p.append([c[0] + wid, c[1] - wid, c[2] + wid])
    p.append([c[0] - wid, c[1] - wid, c[2] + wid])  # same as first to close plot
    # vertical sides
    p.append([c[0] - wid, c[1] - wid, c[2] + wid])
    p.append([c[0] - wid, c[1] + wid, c[2] + wid])
    p.append([c[0] - wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] + wid, c[2] - wid])
    p.append([c[0] + wid, c[1] + wid, c[2] + wid])
    p.append([c[0] + wid, c[1] - wid, c[2] + wid])
    p.append([c[0] + wid, c[1] - wid, c[2] - wid])
    return array(p).T

def my_calibration(sz):
    row, col = sz
    fx = 2555 * col / 2592
    fy = 2586 * row / 1936
    K = diag([fx, fy, 1])
    K[0, 2] = 0.5 * col
    K[1, 2] = 0.5 * row
    return K

def set_projection_from_camera(K):
    """Set the OpenGL projection from a camera calibration matrix."""
    glMatrixMode(GL_PROJECTION)
    glLoadIdentity()
    fx = K[0, 0]
    fy = K[1, 1]
    # vertical field of view in degrees
    fovy = 2 * math.atan(0.5 * height / fy) * 180 / math.pi
    aspect = (width * fy) / (height * fx)
    # near and far clipping planes
    near = 0.1
    far = 100.0
    gluPerspective(fovy, aspect, near, far)
    glViewport(0, 0, width, height)

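def set_modelview_from_camera(Rt):
    """Set the OpenGL model view matrix from the camera pose [R | t]."""
    glMatrixMode(GL_MODELVIEW)
    glLoadIdentity()
    # rotate the teapot 90 degrees around the x-axis so that the z-axis is up
    Rx = np.array([[1, 0, 0], [0, 0, -1], [0, 1, 0]])
    # replace the estimated rotation with its closest orthogonal matrix
    R = Rt[:, :3]
    U, S, V = np.linalg.svd(R)
    R = np.dot(U, V)
    R[0, :] = -R[0, :]  # change sign of x-axis
    t = Rt[:, 3]
    # build the 4x4 model view matrix
    M = np.eye(4)
    M[:3, :3] = np.dot(R, Rx)
    M[:3, 3] = t
    # transpose and flatten to get column-major order
    M = M.T
    m = M.flatten()
    glLoadMatrixf(m)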

def draw_background(imname):
    """Draw the background image on a textured quad."""
    # load the background image into an OpenGL texture
    bg_image = pygame.image.load(imname).convert()
    bg_data = pygame.image.tostring(bg_image, "RGBX", 1)
    glMatrixMode(GL_MODELVIEW)
    glLoadIdentity()
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT)
    # bind the texture
    glEnable(GL_TEXTURE_2D)
    glBindTexture(GL_TEXTURE_2D, glGenTextures(1))
    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, bg_data)
    glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST)
    glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST)
    # draw a quad that fills the whole window
    glBegin(GL_QUADS)
    glTexCoord2f(0.0, 0.0)
    glVertex3f(-1.0, -1.0, -1.0)
    glTexCoord2f(1.0, 0.0)
    glVertex3f(1.0, -1.0, -1.0)
    glTexCoord2f(1.0, 1.0)
    glVertex3f(1.0, 1.0, -1.0)
    glTexCoord2f(0.0, 1.0)
    glVertex3f(-1.0, 1.0, -1.0)
    glEnd()
    # clear the texture
    glDeleteTextures(1)

def draw_teapot(size):
    """Draw a red teapot at the origin."""
    glEnable(GL_LIGHTING)
    glEnable(GL_LIGHT0)
    glEnable(GL_DEPTH_TEST)
    glClear(GL_DEPTH_BUFFER_BIT)
    # material properties
    glMaterialfv(GL_FRONT, GL_AMBIENT, [0, 0, 0, 0])
    glMaterialfv(GL_FRONT, GL_DIFFUSE, [0.5, 0.0, 0.0, 0.0])
    glMaterialfv(GL_FRONT, GL_SPECULAR, [0.7, 0.6, 0.6, 0.0])
    glMaterialf(GL_FRONT, GL_SHININESS, 0.25 * 128.0)
    glutSolidTeapot(size)

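width, height = 1000, 747

def setup():
    """Set up the window and the pygame environment."""
    pygame.init()
    pygame.display.set_mode((width, height), OPENGL | DOUBLEBUF)
    pygame.display.set_caption("OpenGL AR demo")
    # freeglut refuses to run glutSolidTeapot() unless glutInit() was called first
    glutInit()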

# compute features

sift.process_image('book_frontal.JPG', 'im0.sift')

l0, d0 = sift.read_features_from_file('im0.sift')

sift.process_image('book_perspective.JPG', 'im1.sift')

l1, d1 = sift.read_features_from_file('im1.sift')

# match features and estimate homography

matches = sift.match_twosided(d0, d1)

ndx = matches.nonzero()[0]

fp = homography.make_homog(l0[ndx, :2].T)

ndx2 = [int(matches[i]) for i in ndx]

tp = homography.make_homog(l1[ndx2, :2].T)

model = homography.RansacModel()

H, inliers = homography.H_from_ransac(fp, tp, model)

K = my_calibration((747, 1000))

cam1 = camera.Camera(hstack((K, dot(K, array([[0], [0], [-1]])))))

box = cube_points([0, 0, 0.1], 0.1)

box_cam1 = cam1.project(homography.make_homog(box[:, :5]))

box_trans = homography.normalize(dot(H, box_cam1))

cam2 = camera.Camera(dot(H, cam1.P))

A = dot(linalg.inv(K), cam2.P[:, :3])

A = array([A[:, 0], A[:, 1], cross(A[:, 0], A[:, 1])]).T

cam2.P[:, :3] = dot(K, A)

Rt = dot(linalg.inv(K), cam2.P)

setup()

draw_background("book_perspective.bmp")

set_projection_from_camera(K)

set_modelview_from_camera(Rt)

draw_teapot(0.05)

pygame.display.flip()

while True:

for event in pygame.event.get():

if event.type == pygame.QUIT:

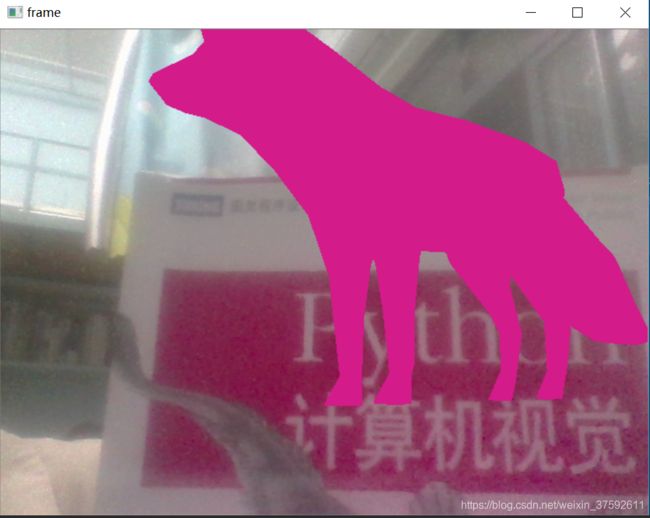

sys.exit()三,视频的投影

air_main.py

# Useful links

# http://www.pygame.org/wiki/OBJFileLoader

# https://rdmilligan.wordpress.com/2015/10/15/augmented-reality-using-opencv-opengl-and-blender/

# https://clara.io/library

# TODO -> Implement command line arguments (scale, model and object to be projected)

# -> Refactor and organize code (proper function definition and separation, classes, error handling...)

import argparse

import cv2

import numpy as np

import math

import os

from objloader_simple import *

# Minimum number of matches that have to be found

# to consider the recognition valid

MIN_MATCHES = 10

def main():
    """
    This function loads the target surface image, matches it against
    each video frame and renders the 3D model on the detected surface.
    """
    homography = None
    # matrix of camera parameters (made up but works quite well for me)
    camera_parameters = np.array([[800, 0, 320], [0, 800, 240], [0, 0, 1]])
    # create ORB keypoint detector
    orb = cv2.ORB_create()
    # create BFMatcher object based on hamming distance
    bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
    # load the reference surface that will be searched in the video stream
    dir_name = os.getcwd()
    model = cv2.imread(os.path.join(dir_name, 'D:/reference/model.jpg'), 0)
    # Compute model keypoints and its descriptors
    kp_model, des_model = orb.detectAndCompute(model, None)
    # Load 3D model from OBJ file
    obj = OBJ(os.path.join(dir_name, 'D:/models/fox.obj'), swapyz=True)
    # init video capture
    # cap = cv2.VideoCapture(0)
    camera_number = 0
    cap = cv2.VideoCapture(camera_number + cv2.CAP_DSHOW)

    while True:
        # read the current frame
        ret, frame = cap.read()
        if not ret:
            print("Unable to capture video")
            return
        # find and draw the keypoints of the frame
        kp_frame, des_frame = orb.detectAndCompute(frame, None)
        # match frame descriptors with model descriptors
        matches = bf.match(des_model, des_frame)
        # sort them in the order of their distance
        # the lower the distance, the better the match
        matches = sorted(matches, key=lambda x: x.distance)

        # compute Homography if enough matches are found
        if len(matches) > MIN_MATCHES:
            # differentiate between source points and destination points
            src_pts = np.float32([kp_model[m.queryIdx].pt for m in matches]).reshape(-1, 1, 2)
            dst_pts = np.float32([kp_frame[m.trainIdx].pt for m in matches]).reshape(-1, 1, 2)
            # compute Homography
            homography, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
            if args.rectangle:
                # Draw a rectangle that marks the found model in the frame
                h, w = model.shape
                pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
                # project corners into frame
                dst = cv2.perspectiveTransform(pts, homography)
                # connect them with lines
                frame = cv2.polylines(frame, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)
            # if a valid homography matrix was found render cube on model plane
            if homography is not None:
                try:
                    # obtain 3D projection matrix from homography matrix and camera parameters
                    projection = projection_matrix(camera_parameters, homography)
                    # project cube or model
                    frame = render(frame, obj, projection, model, False)
                    # frame = render(frame, model, projection)
                except Exception:
                    # rendering errors are ignored so that the video loop keeps running
                    pass
            # draw first 10 matches.
            if args.matches:
                frame = cv2.drawMatches(model, kp_model, frame, kp_frame, matches[:10], 0, flags=2)
            # show result
            cv2.imshow('frame', frame)
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break
        else:
            print("Not enough matches found - %d/%d" % (len(matches), MIN_MATCHES))

    cap.release()
    cv2.destroyAllWindows()
    return 0

def render(img, obj, projection, model, color=False):
    """
    Render a loaded obj model into the current video frame
    """
    vertices = obj.vertices
    # the vertices are 3D points, so the scale matrix must be 3x3;
    # this one enlarges the model by a factor of 5
    scale_matrix = np.eye(3) * 5
    h, w = model.shape

    for face in obj.faces:
        face_vertices = face[0]
        # OBJ face indices are 1-based
        points = np.array([vertices[vertex - 1] for vertex in face_vertices])
        points = np.dot(points, scale_matrix)
        # render model in the middle of the reference surface. To do so,
        # model points must be displaced
        points = np.array([[p[0] + w / 2, p[1] + h / 2, p[2]] for p in points])
        dst = cv2.perspectiveTransform(points.reshape(-1, 1, 3), projection)
        imgpts = np.int32(dst)
        if color is False:
            cv2.fillConvexPoly(img, imgpts, (137, 27, 211))
        else:
            color = hex_to_rgb(face[-1])
            color = color[::-1]  # reverse
            cv2.fillConvexPoly(img, imgpts, color)

    return img

def projection_matrix(camera_parameters, homography):
    """
    From the camera calibration matrix and the estimated homography
    compute the 3D projection matrix
    """
    # Compute rotation along the x and y axis as well as the translation
    homography = homography * (-1)
    rot_and_transl = np.dot(np.linalg.inv(camera_parameters), homography)
    col_1 = rot_and_transl[:, 0]
    col_2 = rot_and_transl[:, 1]
    col_3 = rot_and_transl[:, 2]
    # normalise vectors
    l = math.sqrt(np.linalg.norm(col_1, 2) * np.linalg.norm(col_2, 2))
    rot_1 = col_1 / l
    rot_2 = col_2 / l
    translation = col_3 / l
    # compute the orthonormal basis
    c = rot_1 + rot_2
    p = np.cross(rot_1, rot_2)
    d = np.cross(c, p)
    rot_1 = np.dot(c / np.linalg.norm(c, 2) + d / np.linalg.norm(d, 2), 1 / math.sqrt(2))
    rot_2 = np.dot(c / np.linalg.norm(c, 2) - d / np.linalg.norm(d, 2), 1 / math.sqrt(2))
    rot_3 = np.cross(rot_1, rot_2)
    # finally, compute the 3D projection matrix from the model to the current frame
    projection = np.stack((rot_1, rot_2, rot_3, translation)).T
    return np.dot(camera_parameters, projection)

def hex_to_rgb(hex_color):
    """
    Helper function to convert hex strings to RGB
    """
    hex_color = hex_color.lstrip('#')
    h_len = len(hex_color)
    return tuple(int(hex_color[i:i + h_len // 3], 16) for i in range(0, h_len, h_len // 3))

# Command line argument parsing

# NOT ALL OF THEM ARE SUPPORTED YET

parser = argparse.ArgumentParser(description='Augmented reality application')

parser.add_argument('-r','--rectangle', help = 'draw rectangle delimiting target surface on frame', action = 'store_true')

parser.add_argument('-mk','--model_keypoints', help = 'draw model keypoints', action = 'store_true')

parser.add_argument('-fk','--frame_keypoints', help = 'draw frame keypoints', action = 'store_true')

parser.add_argument('-ma','--matches', help = 'draw matches between keypoints', action = 'store_true')

# TODO jgallostraa -> add support for model specification

#parser.add_argument('-mo','--model', help = 'Specify model to be projected', action = 'store_true')

args = parser.parse_args()

if __name__ == '__main__':
    main()
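`projection_matrix` above forces the two rotation columns recovered from the homography to be orthonormal before taking their cross product. The following sketch isolates that step so it can be checked without a camera. The helper name `orthonormalize` and the noisy input vectors are assumptions for illustration; the arithmetic follows the listing.

```python
import math
import numpy as np

def orthonormalize(rot_1, rot_2):
    """Return an orthonormal, right-handed basis close to (rot_1, rot_2)."""
    c = rot_1 + rot_2                 # bisector of the two input vectors
    p = np.cross(rot_1, rot_2)        # normal of the plane they span
    d = np.cross(c, p)                # in-plane vector perpendicular to c
    c = c / np.linalg.norm(c)
    d = d / np.linalg.norm(d)
    # rotating the bisector by +45 and -45 degrees gives two perpendicular unit vectors
    new_1 = (c + d) / math.sqrt(2)
    new_2 = (c - d) / math.sqrt(2)
    new_3 = np.cross(new_1, new_2)
    return new_1, new_2, new_3

# Two slightly non-orthogonal, non-unit vectors, as a noisy homography might give.
noisy_1 = np.array([1.0, 0.1, 0.0])
noisy_2 = np.array([0.05, 0.9, 0.1])
r1, r2, r3 = orthonormalize(noisy_1, noisy_2)
R = np.column_stack((r1, r2, r3))
print(np.allclose(R.T @ R, np.eye(3)))
```

Because the correction is spread evenly over both input vectors, neither column is favoured, in contrast to Gram-Schmidt, which keeps the first vector fixed.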

objloader_simple.py

class OBJ:
    def __init__(self, filename, swapyz=False):
        """Loads a Wavefront OBJ file. """
        self.vertices = []
        self.normals = []
        self.texcoords = []
        self.faces = []
        material = None
        with open(filename, "r") as obj_file:
            for line in obj_file:
                if line.startswith('#'):
                    continue
                values = line.split()
                if not values:
                    continue
                if values[0] == 'v':
                    v = list(map(float, values[1:4]))
                    if swapyz:
                        v = v[0], v[2], v[1]
                    self.vertices.append(v)
                elif values[0] == 'vn':
                    v = list(map(float, values[1:4]))
                    if swapyz:
                        v = v[0], v[2], v[1]
                    self.normals.append(v)
                elif values[0] == 'vt':
                    # list() is needed in Python 3, where map() returns a lazy iterator
                    self.texcoords.append(list(map(float, values[1:3])))
                # elif values[0] in ('usemtl', 'usemat'):
                #     material = values[1]
                # elif values[0] == 'mtllib':
                #     self.mtl = MTL(values[1])
                elif values[0] == 'f':
                    face = []
                    texcoords = []
                    norms = []
                    for v in values[1:]:
                        w = v.split('/')
                        face.append(int(w[0]))
                        if len(w) >= 2 and len(w[1]) > 0:
                            texcoords.append(int(w[1]))
                        else:
                            texcoords.append(0)
                        if len(w) >= 3 and len(w[2]) > 0:
                            norms.append(int(w[2]))
                        else:
                            norms.append(0)
                    # self.faces.append((face, norms, texcoords, material))
                    self.faces.append((face, norms, texcoords))
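To see what the loader produces, it helps to run the same parsing rules on a tiny in-memory OBJ snippet. The sketch below is a simplified stand-in, not the `OBJ` class itself: the function `parse_obj_lines` and the sample triangle are made up for illustration, and only `v` and `f` records are handled.

```python
def parse_obj_lines(lines, swapyz=False):
    """Collect vertices and face vertex indices from OBJ text lines."""
    vertices, faces = [], []
    for line in lines:
        values = line.split()
        if not values or values[0].startswith('#'):
            continue
        if values[0] == 'v':
            x, y, z = map(float, values[1:4])
            vertices.append((x, z, y) if swapyz else (x, y, z))
        elif values[0] == 'f':
            # each entry looks like "vertex/texcoord/normal"; keep the vertex index
            faces.append([int(v.split('/')[0]) for v in values[1:]])
    return vertices, faces

sample = """# one triangle
v 0 0 0
v 1 0 0
v 0 1 2
f 1/1/1 2/2/1 3/3/1
""".splitlines()

vertices, faces = parse_obj_lines(sample, swapyz=True)
# OBJ indices start at 1, hence the "- 1" (render() does the same)
triangle = [vertices[i - 1] for i in faces[0]]
print(triangle)
```

With `swapyz=True` the y and z coordinates of every vertex are exchanged, which is how the main script turns a y-up model into the z-up convention used for the marker plane.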

This is one frame of the resulting video.