Calling the Hadoop API to upload, download, and delete files, create directories, and list directory contents

(1) Add the required Hadoop JAR files.

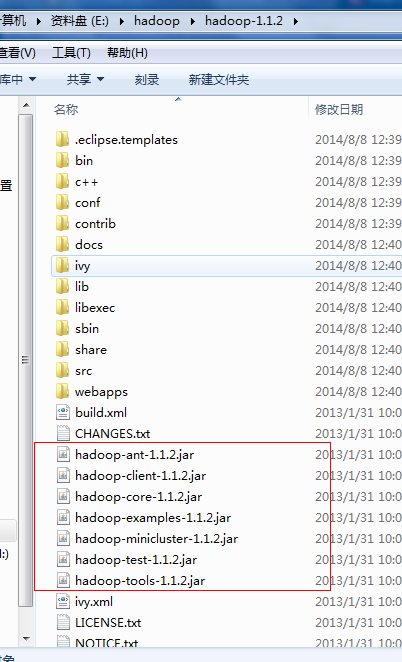

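A. First, extract Hadoop1.1.2.tar.gz to a location on any drive.

B. Right-click the project and choose Build Path, then Configure Build Path.

C. Add the JAR files from the hadoop1.1.2 folder, along with all the JAR files in its lib folder. (Note: do not add jasper-compiler-5.5.12.jar or jasper-runtime-5.5.12.jar, or errors will be reported.)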

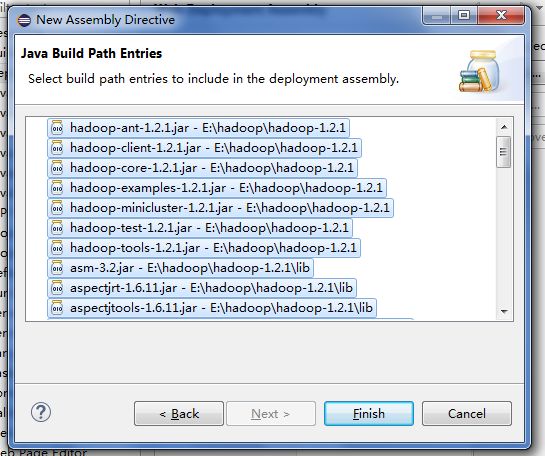

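Note: after adding these JAR files to the build path, they also need to be copied into the WEB-INF/lib directory. This can be done as follows:

Right-click the project, choose "Properties", and select Deployment Assembly.

Click Add and select Java Build Path Entries.

Then select all the JAR files you just added and click Finish.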
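A quick way to confirm that the JAR files really ended up on the classpath is to try loading a few Hadoop classes by name. The sketch below is only an illustration: the class `ClasspathCheck` and its method `isOnClasspath` are hypothetical helpers, not part of the original project, and the list of class names is an assumption based on the imports that HdfsDAO uses.

```java
// Hypothetical helper (not part of the original project): reports whether
// the named classes can be loaded from the current classpath.
class ClasspathCheck {

    // Returns true if the class can be located; the class is not initialized.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed list: the Hadoop classes imported by HdfsDAO.
        String[] required = {
            "org.apache.hadoop.conf.Configuration",
            "org.apache.hadoop.fs.FileSystem",
            "org.apache.hadoop.mapred.JobConf"
        };
        for (String name : required) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```

Running `main` inside the project (or calling `isOnClasspath` from a servlet) should report every class as found once the build path and Deployment Assembly are set up as described.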

D. Create the Java project

Create the HdfsDAO class:

    package com.model;

    import java.io.IOException;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.mapred.JobConf;

    public class HdfsDAO {

        // HDFS access address
        private static final String HDFS = "hdfs://192.168.1.104:9000";

        // HDFS path
        private String hdfsPath;
        // Hadoop system configuration
        private Configuration conf;

        public HdfsDAO(Configuration conf) {
            this(HDFS, conf);
        }

        public HdfsDAO(String hdfs, Configuration conf) {
            this.hdfsPath = hdfs;
            this.conf = conf;
        }

        // Entry point
        public static void main(String[] args) throws IOException {
            JobConf conf = config();
            HdfsDAO hdfs = new HdfsDAO(conf);
            //hdfs.mkdirs("/Tom");
            //hdfs.copyFile("C:\\files", "/wgc/");
            hdfs.ls("hdfs://192.168.1.104:9000/wgc/files");
            //hdfs.rmr("/wgc/files");
            //hdfs.download("/wgc/(3)windows下hadoop+eclipse环境搭建.docx", "c:\\");
            //System.out.println("success!");
        }

        // Load the Hadoop configuration files.
        // addResource(String) looks the name up on the classpath; adjust these
        // names to match where your *-site.xml files actually live.
        public static JobConf config() {
            JobConf conf = new JobConf(HdfsDAO.class);
            conf.setJobName("HdfsDAO");
            conf.addResource("classpath:/hadoop/core-site.xml");
            conf.addResource("classpath:/hadoop/hdfs-site.xml");
            conf.addResource("classpath:/hadoop/mapred-site.xml");
            return conf;
        }

        // Create a directory (with any missing parents) if it does not exist yet
        public void mkdirs(String folder) throws IOException {
            Path path = new Path(folder);
            FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
            if (!fs.exists(path)) {
                fs.mkdirs(path);
                System.out.println("Create: " + folder);
            }
            fs.close();
        }

        // List the files in a directory
        public FileStatus[] ls(String folder) throws IOException {
            Path path = new Path(folder);
            FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
            FileStatus[] list = fs.listStatus(path);
            System.out.println("ls: " + folder);
            System.out.println("==========================================================");
            if (list != null) {
                for (FileStatus f : list) {
                    //System.out.printf("name: %s, folder: %s, size: %d\n", f.getPath(), f.isDir(), f.getLen());
                    System.out.printf("%s, folder: %s, size: %dK\n", f.getPath().getName(), (f.isDir() ? "directory" : "file"), f.getLen() / 1024);
                }
            }
            System.out.println("==========================================================");
            fs.close();

            return list;
        }

        // Upload a local file or folder to HDFS
        public void copyFile(String local, String remote) throws IOException {
            FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
            // remote has the form /<user>/<file or folder under that user>
            fs.copyFromLocalFile(new Path(local), new Path(remote));
            System.out.println("copy from: " + local + " to " + remote);
            fs.close();
        }

        // Delete a file or folder
        public void rmr(String folder) throws IOException {
            Path path = new Path(folder);
            FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
            // deleteOnExit only marks the path; the deletion itself is carried
            // out when fs.close() is called below.
            fs.deleteOnExit(path);
            System.out.println("Delete: " + folder);
            fs.close();
        }

        // Download a file to the local file system
        public void download(String remote, String local) throws IOException {
            Path path = new Path(remote);
            FileSystem fs = FileSystem.get(URI.create(hdfsPath), conf);
            fs.copyToLocalFile(path, new Path(local));
            System.out.println("download: from " + remote + " to " + local);
            fs.close();
        }

    }
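The listing passes both a bare path such as `/wgc/files` and a fully qualified one such as `hdfs://192.168.1.104:9000/wgc/files` to the same methods. To make the address format concrete, here is a minimal sketch that uses only `java.net.URI` from the JDK. It does not reproduce Hadoop's own `Path` handling; it only illustrates how the scheme, host, and port are read from the address and why the two path forms point at the same location. The class `HdfsUriParts` and its methods are hypothetical names introduced for this illustration.

```java
import java.net.URI;

// Hypothetical illustration class; uses the JDK only, not the Hadoop API.
class HdfsUriParts {

    // Returns "scheme|host|port" for an address such as hdfs://192.168.1.104:9000.
    static String describe(String address) {
        URI uri = URI.create(address);
        return uri.getScheme() + "|" + uri.getHost() + "|" + uri.getPort();
    }

    // Resolves a path against the base address. A path that already carries
    // a scheme is returned unchanged. The path must be valid URI syntax
    // (for example, no unescaped spaces).
    static String qualify(String baseAddress, String path) {
        return URI.create(baseAddress).resolve(path).toString();
    }

    public static void main(String[] args) {
        String base = "hdfs://192.168.1.104:9000";
        System.out.println(describe(base));
        System.out.println(qualify(base, "/wgc/files"));
        System.out.println(qualify(base, "hdfs://192.168.1.104:9000/wgc/files"));
    }
}
```

Both `qualify` calls in `main` yield the same string, which matches the way `ls("/wgc/files")` and `ls("hdfs://192.168.1.104:9000/wgc/files")` are expected to list the same directory.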

Before testing, start Hadoop.
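If it is unclear whether Hadoop is actually up, a plain TCP connection attempt to the NameNode address can be tried before running HdfsDAO. The following is a rough sketch using only JDK sockets; the class `NameNodeProbe`, the method `isListening`, and the 2-second timeout are assumptions made for illustration. An open port only shows that something is listening there, not that HDFS is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP port accepts connections.
class NameNodeProbe {

    // Returns true if a connection to host:port succeeds within the timeout.
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unknown host: treat all as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        // Host and port taken from the HDFS constant in HdfsDAO.
        boolean up = isListening("192.168.1.104", 9000, 2000);
        System.out.println(up
                ? "NameNode port is open"
                : "NameNode port is not reachable; start Hadoop first");
    }
}
```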

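Run the program to test it:

The other functions were tested successfully as well, so they are not listed one by one here.

2. Combining the web front end with the Hadoop API

Open the UploadServlet file and modify it as follows: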

    package com.controller;

    import java.io.File;
    import java.io.IOException;
    import java.util.Iterator;
    import java.util.List;

    import javax.servlet.ServletContext;
    import javax.servlet.ServletException;
    import javax.servlet.http.HttpServlet;
    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import javax.servlet.jsp.PageContext;

    import org.apache.commons.fileupload.DiskFileUpload;
    import org.apache.commons.fileupload.FileItem;
    import org.apache.commons.fileupload.disk.DiskFileItemFactory;
    import org.apache.commons.fileupload.servlet.ServletFileUpload;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.mapred.JobConf;

    import com.model.HdfsDAO;

    /**
     * Servlet implementation class UploadServlet
     */
    public class UploadServlet extends HttpServlet {

        /**
         * @see HttpServlet#doGet(HttpServletRequest request, HttpServletResponse response)
         */
        protected void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
            this.doPost(request, response);
        }

        /**
         * @see HttpServlet#doPost(HttpServletRequest request, HttpServletResponse response)
         */
        protected void doPost(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
            request.setCharacterEncoding("UTF-8");
            int maxFileSize = 50 * 1024 * 1024; // 50 MB
            int maxMemSize = 50 * 1024 * 1024;  // 50 MB
            ServletContext context = getServletContext();
            String filePath = context.getInitParameter("file-upload");
            System.out.println("source file path:" + filePath);
            // Verify the content type of the upload
            // (getContentType() returns null when the request has no body)
            String contentType = request.getContentType();
            if (contentType != null && contentType.indexOf("multipart/form-data") >= 0) {

                DiskFileItemFactory factory = new DiskFileItemFactory();
                // Maximum size of a file held in memory
                factory.setSizeThreshold(maxMemSize);
                // Where data larger than maxMemSize is stored temporarily
                factory.setRepository(new File("c:\\temp"));

                // Create a new file upload handler
                ServletFileUpload upload = new ServletFileUpload(factory);
                // Maximum size allowed for an upload
                upload.setSizeMax(maxFileSize);
                try {
                    // Parse the request to get the uploaded items
                    List fileItems = upload.parseRequest(request);

                    // Process the uploaded files
                    Iterator i = fileItems.iterator();

                    System.out.println("begin to upload file to tomcat server");
                    while (i.hasNext()) {
                        FileItem fi = (FileItem) i.next();
                        if (!fi.isFormField()) {
                            // Read the parameters of the uploaded file
                            String fieldName = fi.getFieldName();
                            String fileName = fi.getName();

                            // Some browsers send the full client path; keep only the file name
                            String fn = fileName.substring(fileName.lastIndexOf("\\") + 1);
                            System.out.println(fn);
                            boolean isInMemory = fi.isInMemory();
                            long sizeInBytes = fi.getSize();
                            // Write the file to the upload directory on the Tomcat server
                            File file = new File(filePath, fn);
                            fi.write(file);
                            System.out.println("upload file to tomcat server success!");

                            System.out.println("begin to upload file to hadoop hdfs");
                            // Upload the file from Tomcat to Hadoop
                            JobConf conf = HdfsDAO.config();
                            HdfsDAO hdfs = new HdfsDAO(conf);
                            hdfs.copyFile(file.getPath(), "/wgc/" + fn);
                            System.out.println("upload file to hadoop hdfs success!");

                            request.getRequestDispatcher("index.jsp").forward(request, response);
                        }
                    }
                } catch (Exception ex) {
                    System.out.println(ex);
                }
            } else {
                System.out.println("No file uploaded");
            }
        }

    }
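The servlet derives the stored file name by cutting the client-supplied name at the last backslash, which covers browsers that send a full Windows path. A client on another platform could send forward slashes instead. The sketch below shows one possible way to handle both separators; `UploadNames`, `baseName`, and `hdfsTarget` are hypothetical names, not part of the original servlet or of Commons FileUpload.

```java
// Hypothetical helper for turning a client-supplied name into a safe file name.
class UploadNames {

    // Returns the part of the name after the last '/' or '\' (whichever comes later).
    static String baseName(String clientFileName) {
        int cut = Math.max(clientFileName.lastIndexOf('/'),
                           clientFileName.lastIndexOf('\\'));
        return clientFileName.substring(cut + 1);
    }

    // Builds the HDFS destination inside a directory such as "/wgc".
    static String hdfsTarget(String hdfsDir, String clientFileName) {
        String dir = hdfsDir.endsWith("/") ? hdfsDir : hdfsDir + "/";
        return dir + baseName(clientFileName);
    }

    public static void main(String[] args) {
        System.out.println(baseName("C:\\docs\\report.docx"));
        System.out.println(baseName("/home/tom/report.docx"));
        System.out.println(hdfsTarget("/wgc", "C:\\docs\\report.docx"));
    }
}
```

In the servlet, the value of `fn` could be computed with `baseName(fi.getName())`, and the second argument of `hdfs.copyFile` with `hdfsTarget("/wgc", fi.getName())`, if this approach suits the deployment.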

Start the Tomcat server and test:

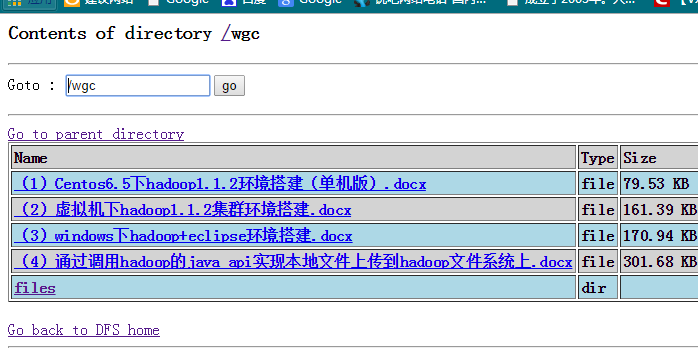

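Before the upload, the wgc folder in HDFS contains the following:

Next, we upload the file (4)通过调用hadoop的java api实现本地文件上传到hadoop文件系统上.docx

On the Tomcat server, the newly uploaded file is visible:

Open http://hadoop:50070/ to browse the file system, where the newly uploaded file also appears:

With that, a bare-bones network-drive upload feature is in place. Next, we will polish the appearance of this simple network drive so that it looks better.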

Reference:

http://blog.fens.me/hadoop-hdfs-api/